A Tree-based Algorithm for Ranking Web Services

Fatma Ezzahra Gmati (1), Nadia Yacoubi-Ayadi (1), Afef Bahri (2), Salem Chakhar (3,4) and Alessio Ishizaka (3,4)

(1) RIADI Research Laboratory, National School of Computer Sciences, University of Manouba, Manouba, Tunisia

(2) MIRACL Laboratory, High School of Computing and Multimedia, University of Sfax, Sfax, Tunisia

(3) Portsmouth Business School, University of Portsmouth, Portsmouth, U.K.

(4) Centre for Operational Research and Logistics, University of Portsmouth, Portsmouth, U.K.

Keywords:

Web Service, Service Scoring, Service Ranking, Tree Structure, Algorithm.

Abstract:

The aim of this paper is to propose a new algorithm for Web services ranking. The proposed algorithm relies on a tree data structure that is constructed based on the scores of Web services. Two types of scores are considered, which are computed by respectively selecting the edge with the minimum or the edge with the maximum weight in the matching graph. The construction of the tree requires the successive use of both scores, leading to two different versions of the tree. The final ranking is obtained by applying a pre-order traversal on the tree and picking out all leaf nodes ordered from the left to the right. The performance evaluation shows that the proposed algorithm most often outperforms similar ones.

1 INTRODUCTION

Although semantic matchmaking (Paolucci et al., 2002; Doshi et al., 2004; Bellur and Kulkarni, 2007; Fu et al., 2009; Chakhar, 2013; Chakhar et al., 2014; Chakhar et al., 2015) avoids the problems of simple syntactic and strict capability-based matchmaking, it is not well suited to efficient Web service selection. This is because it is difficult to distinguish between the members of a pool of similar Web services (Rong et al., 2009).

A possible solution to this issue is to use appropriate techniques and additional information to rank the Web services delivered by the semantic matching algorithm and then provide the user with a manageable set of 'best' Web services from which s/he can select one Web service to deploy. Several approaches have been proposed to implement this idea (Manikrao and Prabhakar, 2005; Maamar et al., 2005; Kuck and Gnasa, 2007; Gmati et al., 2014; Gmati et al., 2015).

The objective of this paper is to introduce a new Web services ranking algorithm. The proposed algorithm relies on a tree data structure that is constructed based on the scores of Web services. Two types of scores are considered, which are computed by respectively selecting the edge with the minimum or the edge with the maximum weight in the matching graph. The construction of the tree requires the successive use of both scores, leading to two different versions of the tree. The final ranking is obtained by applying a pre-order traversal on the tree and picking out all the leaf nodes ordered from the left to the right.

The rest of the paper is organized as follows. Section 2 provides a brief review of Web services matching. Section 3 shows how the similarity degrees are computed. Section 4 presents the Web services scoring technique. Section 5 details the tree-based ranking algorithm. Section 6 evaluates the performance of the proposed algorithm. Section 7 discusses some related work. Section 8 concludes the paper.

2 MATCHING WEB SERVICES

The main input for the ranking algorithm is a set of Web services satisfying the user requirements. In this section, we briefly review three matching algorithms that can be used to identify this set of Web services. These algorithms support different levels of customization. This classification of matching algorithms according to the levels of customization that they support enriches and generalizes our previous work in (Chakhar, 2013; Chakhar et al., 2014; Gmati et al., 2014).

In the rest of this paper, a Web service S is defined

as a collection of attributes that describe the service.


Ezzahra Gmati F., Yacoubi-Ayadi N., Bahri A., Chakhar S. and Ishizaka A.

A Tree-based Algorithm for Ranking Web Services.

DOI: 10.5220/0005498901700178

In Proceedings of the 11th International Conference on Web Information Systems and Technologies (WEBIST-2015), pages 170-178

ISBN: 978-989-758-106-9

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

Let S.A denote the set of attributes of service S and $S.A_i$ denote each member of this set. Let S.N denote the cardinality of this set.

2.1 Trivial Matching

The trivial matching assumes that the user can specify only the functional specifications of the desired service. Let $S^R$ be the Web service that is requested, and $S^A$ be the Web service that is advertised. A sufficient trivial match exists between $S^R$ and $S^A$ if for every attribute in $S^R.A$ there exists an identical attribute of $S^A.A$ and the similarity between the values of the attributes does not fail. Formally,

\[
\forall_i\, \exists_j\; (S^R.A_i = S^A.A_j) \wedge \mu(S^R.A_i, S^A.A_j) \succ \mathrm{Fail} \Rightarrow \mathrm{SuffTrivialMatch}(S^R, S^A), \quad 1 \le i \le S^R.N. \tag{1}
\]

where $\mu(S^A_i.A_j, S^R.A_j)$ ($j = 1, \cdots, N$) is the similarity degree between the requested Web service and the advertised Web service on the jth attribute $A_j$. According to this definition, all the attributes of the requested service $S^R$ should be considered during the matching process. This is the default case with no support of customization.

The trivial matching is formalized in Algorithm 1.

This algorithm follows directly from Sentence (1).

Algorithm 1: Trivial Matching.

Input : S^R, // Requested service.
        S^A, // Advertised service.
Output: Boolean, // fail/success.

i ← 1;
while i ≤ S^R.N do
    Append S^R.A_i to rAttrSet;
    k ← 1;
    while k ≤ S^A.N do
        if S^A.A_k = S^R.A_i then
            Append S^A.A_k to aAttrSet;
        k ← k + 1;
    i ← i + 1;
t ← 1;
while t ≤ S^R.N do
    if (µ(rAttrSet[t], aAttrSet[t]) = Fail) then
        return fail;
    t ← t + 1;
return success;
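As an illustration, the trivial matching can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: services are represented as dictionaries from attribute names to values, the `Degree` enumeration encodes the preferential order of Section 3.1, and `toy_mu` is a hypothetical stand-in for the semantic similarity function µ. Unlike the pseudocode, the sketch fails explicitly when a requested attribute has no counterpart in the advertised service.

```python
from enum import IntEnum


class Degree(IntEnum):
    """Similarity degrees; a larger value is preferred (see Section 3.1)."""
    FAIL = 0
    PART_OF = 1
    CONTAINER = 2
    SUBSUMPTION = 3
    PLUG_IN = 4
    EXACT = 5


def trivial_match(requested, advertised, mu):
    """Return True if every requested attribute has a non-failing counterpart.

    `requested` and `advertised` map attribute names to values (an assumed
    representation); `mu` is a caller-supplied similarity function.
    """
    for name, r_value in requested.items():
        if name not in advertised:
            return False  # no identical attribute in the advertised service
        if mu(r_value, advertised[name]) == Degree.FAIL:
            return False
    return True


def toy_mu(r_value, a_value):
    """Hypothetical similarity: equal values are Exact, anything else fails."""
    return Degree.EXACT if r_value == a_value else Degree.FAIL


s_r = {"input": "City", "output": "Weather"}
s_a = {"input": "City", "output": "Weather", "qos": "High"}
print(trivial_match(s_r, s_a, toy_mu))
```

Extra attributes of the advertised service (here `qos`) are ignored, since only the attributes of the requested service drive the match.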

The definition of the semantic degrees used in

Sentence (1) and Algorithm 1 (and also in the two

other matching algorithms presented later) is given in

Section 3.

2.2 Partially Parameterized Matching

In this case, the user can specify the list of attributes to consider during the matching process. In order to allow this, we use the concept of Attributes List that serves as a parameter to the matching process. An Attributes List, L, is a relation consisting of one attribute, L.A, that describes the service attributes to be compared. Let $L.A_i$ denote the service attribute value in the ith tuple of the relation. L.N denotes the total number of tuples in L. We assume that the order of attributes in L is randomly specified.

Let $S^R$ be the service that is requested, $S^A$ be the service that is advertised, and L be an Attributes List. A sufficient partially parameterized match exists between $S^R$ and $S^A$ if for every attribute in L.A there exists an identical attribute of $S^R$ and $S^A$ and the similarity between the values of the attributes does not fail. Formally,

\[
\forall_i\, \exists_{j,k}\; (L.A_i = S^R.A_j = S^A.A_k) \wedge \mu(S^R.A_j, S^A.A_k) \succ \mathrm{Fail} \Rightarrow \mathrm{SuffPartiallyParamMatch}(S^R, S^A), \quad 1 \le i \le L.N. \tag{2}
\]

According to this definition, only the attributes specified by the user in the Attributes List are considered during the matching process.

The partially parameterized matching is formalized in Algorithm 2 that follows directly from Sentence (2).

Algorithm 2: Partially Parameterized Matching.

Input : S^R, // Requested service.
        S^A, // Advertised service.
        L, // Attributes List.
Output: Boolean, // fail/success.

i ← 1;
while (i ≤ L.N) do
    j ← 1;
    while j ≤ S^R.N do
        if S^R.A_j = L.A_i then
            Append S^R.A_j to rAttrSet;
        j ← j + 1;
    k ← 1;
    while k ≤ S^A.N do
        if S^A.A_k = L.A_i then
            Append S^A.A_k to aAttrSet;
        k ← k + 1;
    i ← i + 1;
t ← 1;
while (t ≤ L.N) do
    if (µ(rAttrSet[t], aAttrSet[t]) = Fail) then
        return fail;
    t ← t + 1;
return success;

2.3 Fully Parameterized Matching

The fully parameterized matching supports three customizations by allowing the user to specify: (i) the list of attributes to be considered; (ii) the order in which these attributes are considered; and (iii) a desired similarity degree for each attribute. In order to support all the above-cited customizations, we used the concept of Criteria Table, introduced by (Doshi et al., 2004). A Criteria Table, C, is a relation consisting of two attributes, C.A and C.M. C.A describes the service attribute to be compared, and C.M gives the least preferred similarity degree for that attribute. Let $C.A_i$ and $C.M_i$ denote the service attribute value and the desired degree in the ith tuple of the relation. C.N denotes the total number of tuples in C.

Let $S^R$ be the service that is requested, $S^A$ be the service that is advertised, and C be a Criteria Table. A sufficient fully parameterized match exists between $S^R$ and $S^A$ if for every attribute in C.A there exists an identical attribute of $S^R$ and $S^A$ and the values of the attributes satisfy the desired similarity degree as specified in C.M. Formally,

\[
\forall_i\, \exists_{j,k}\; (C.A_i = S^R.A_j = S^A.A_k) \wedge \mu(S^R.A_j, S^A.A_k) \succeq C.M_i \Rightarrow \mathrm{SuffFullyParamMatch}(S^R, S^A), \quad 1 \le i \le C.N. \tag{3}
\]

According to this definition, only the attributes specified by the user in the Criteria Table C are considered during the matching process. The fully parameterized matching is formalized in Algorithm 3 that follows directly from Sentence (3).

Algorithm 3: Fully Parameterized Matching.

Input : S^R, // Requested service.
        S^A, // Advertised service.
        C, // Criteria Table.
Output: Boolean, // fail/success.

i ← 1;
while (i ≤ C.N) do
    j ← 1;
    while j ≤ S^R.N do
        if S^R.A_j = C.A_i then
            Append S^R.A_j to rAttrSet;
        j ← j + 1;
    k ← 1;
    while k ≤ S^A.N do
        if S^A.A_k = C.A_i then
            Append S^A.A_k to aAttrSet;
        k ← k + 1;
    i ← i + 1;
t ← 1;
while (t ≤ C.N) do
    if (µ(rAttrSet[t], aAttrSet[t]) ≺ C.M_t) then
        return fail;
    t ← t + 1;
return success;
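The role of the Criteria Table can be illustrated with a short Python sketch. The representation is an assumption made for illustration only: the Criteria Table is a list of (attribute name, minimum degree) pairs checked in the user's order, services are dictionaries, and `toy_mu` is a hypothetical similarity function in which a string prefix stands in for subsumption.

```python
from enum import IntEnum


class Degree(IntEnum):
    """Similarity degrees; a larger value is preferred (see Section 3.1)."""
    FAIL = 0
    PART_OF = 1
    CONTAINER = 2
    SUBSUMPTION = 3
    PLUG_IN = 4
    EXACT = 5


def fully_param_match(requested, advertised, criteria, mu):
    """Check the Criteria Table rows in the order given by the user.

    `criteria` is a list of (attribute name, minimum degree) pairs.
    """
    for name, min_degree in criteria:
        if name not in requested or name not in advertised:
            return False  # attribute missing from one of the services
        if mu(requested[name], advertised[name]) < min_degree:
            return False  # degree is below the desired threshold
    return True


def toy_mu(r_value, a_value):
    """Hypothetical similarity: equality is Exact, a prefix is Subsumption."""
    if r_value == a_value:
        return Degree.EXACT
    if a_value.startswith(r_value):
        return Degree.SUBSUMPTION
    return Degree.FAIL


s_r = {"input": "City", "output": "Weather"}
s_a = {"input": "City", "output": "WeatherReport"}
loose = [("input", Degree.EXACT), ("output", Degree.SUBSUMPTION)]
strict = [("output", Degree.EXACT)]
print(fully_param_match(s_r, s_a, loose, toy_mu))
print(fully_param_match(s_r, s_a, strict, toy_mu))
```

The same pair of services matches under the loose table and fails under the strict one, which is the customization that the desired similarity degree provides.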

The complexity of the matching algorithms is detailed in (Gmati, 2015).

3 SIMILARITY DEGREE COMPUTING APPROACH

In this section, we first define the similarity degree

used in the matching algorithms and then discuss how

it is computed.

3.1 Similarity Degree Definition

A semantic match between two entities frequently involves a similarity degree that quantifies the semantic distance between the two entities participating in the match. The similarity degree, µ(·,·), of two service attributes is a mapping that measures the semantic distance between the conceptual annotations associated with the service attributes. Mathematically,

\[
\mu : A \times A \rightarrow \{\text{Exact}, \text{Plug-in}, \text{Subsumption}, \text{Container}, \text{Part-of}, \text{Fail}\}
\]

where A is the set of all possible attributes. The

definitions of the mapping between two conceptual

annotations are given in (Doshi et al., 2004; Chakhar,

2013; Chakhar et al., 2014).

A preferential total order is established on the

above mentioned similarity maps: Exact ≻ Plug-in

≻ Subsumption ≻ Container ≻ Part-of ≻ Fail; where

a ≻ b means that a is preferred over b.

3.2 Similarity Degree Computing

To compute the similarity degrees, we generalized and implemented an idea proposed by (Bellur and Kulkarni, 2007). This idea starts by constructing a bipartite graph where the vertices on the left side correspond to the concepts associated with advertised services, while those on the right side correspond to the concepts associated with the requested service. The edges correspond to the semantic relationships between concepts. The authors in (Bellur and Kulkarni, 2007) assign a weight to each edge and then apply the Hungarian algorithm (Kuhn, 1955) to identify the complete matching that minimizes the maximum weight in the graph. The final returned degree is the one corresponding to the maximum weight in the graph.

We generalize this idea as follows. Let us first assume that the output of the matching algorithm is a list mServices of matching Web services. The generic structure of a row in mServices is as follows:

\[
(S^A_i, \mu(S^A_i.A_1, S^R.A_1), \cdots, \mu(S^A_i.A_N, S^R.A_N)),
\]

where $S^A_i$ is an advertised Web service, $S^R$ is the requested Web service, N is the total number of attributes and $\mu(S^A_i.A_j, S^R.A_j)$ ($j = 1, \cdots, N$) is the similarity degree between the requested Web service and the advertised Web service on the jth attribute $A_j$.

Two versions can be distinguished for the definition of the list mServices, along with the way the similarity degrees are computed. The first version of mServices is as follows:

\[
(S^A_i, \mu_{\max}(S^A_i.A_1, S^R.A_1), \cdots, \mu_{\max}(S^A_i.A_N, S^R.A_N)),
\]

where $S^A_i$, $S^R$ and N are as defined above; and $\mu_{\max}(S^A_i.A_j, S^R.A_j)$ ($j = 1, \cdots, N$) is the similarity degree between the requested Web service and the advertised Web service on the jth attribute $A_j$ computed by selecting the edge with the maximum weight in the matching graph.

The second version of mServices is as follows:

\[
(S^A_i, \mu_{\min}(S^A_i.A_1, S^R.A_1), \cdots, \mu_{\min}(S^A_i.A_N, S^R.A_N)),
\]

where $S^A_i$, $S^R$ and N are as defined above; and $\mu_{\min}(S^A_i.A_j, S^R.A_j)$ ($j = 1, \cdots, N$) is the similarity degree between the requested Web service and the advertised Web service on the jth attribute $A_j$ computed by selecting the edge with the minimum weight in the matching graph.

4 SCORING WEB SERVICES

In this section, we propose a technique to compute the

scores of the Web services based on the input data.

4.1 Score Definition

First, we need to assign a numerical weight to every similarity degree as follows: Fail: $w_1$, Part-of: $w_2$, Container: $w_3$, Subsumption: $w_4$, Plug-in: $w_5$ and Exact: $w_6$. These degrees correspond to the possible values of $\mu_\diamond(S^A_i.A_j, S^R.A_j)$ with ($j = 1, \cdots, N$) and where $S^A_i$, $S^R$ and N are as defined above; and $\diamond \in \{\min, \max\}$. In this paper, we assume that the weights are computed as follows:

\[
w_1 \ge 0, \tag{4}
\]

\[
w_i = (w_{i-1} \cdot N) + 1, \quad i = 2, \cdots, 6; \tag{5}
\]

where N is the number of attributes. This way of computing the weights ensures that a single higher similarity degree will be greater than a set of N similarity degrees of lower weights taken together. Indeed, the weight values verify the following condition:

\[
w_i > w_j \cdot N, \quad \forall i > j. \tag{6}
\]
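The recurrence and the dominance condition can be checked numerically with a few lines of Python. This is a small sketch that assumes six weights, one per similarity degree, and takes $w_1 = 0$ as an example starting value; the function name `degree_weights` is introduced here for illustration only.

```python
def degree_weights(n_attributes, w1=0, n_degrees=6):
    """Weights built from w_i = w_{i-1} * N + 1, starting at w_1 >= 0."""
    weights = [w1]
    for _ in range(n_degrees - 1):
        weights.append(weights[-1] * n_attributes + 1)
    return weights


n = 3  # number of attributes N
weights = degree_weights(n_attributes=n)
print(weights)  # [0, 1, 4, 13, 40, 121]

# Dominance condition: one higher degree outweighs N lower degrees together.
dominates = all(weights[i] > weights[j] * n
                for i in range(len(weights)) for j in range(i))
print(dominates)  # True
```

With N = 3, for instance, a single Exact degree (weight 121) outweighs three Plug-in degrees (3 × 40 = 120).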

Then, the initial score of an advertised Web service $S^A$ is computed as follows:

\[
\rho_\diamond(S^A) = \sum_{i=1}^{N} w_i. \tag{7}
\]

The scores as computed by Equation (7) are not in the range 0-1. Hence, we need to normalize these scores by using the following procedure:

\[
\rho'_\diamond(S^A) = \frac{\rho_\diamond(S^A) - \min_K \rho_\diamond(S^K)}{\max_K \rho_\diamond(S^K) - \min_K \rho_\diamond(S^K)}. \tag{8}
\]

This normalization procedure assigns to each advertised Web service $S^A$ the percentage of the extent of the similarity degrees scale (i.e., $\max_K \rho_\diamond(S^K) - \min_K \rho_\diamond(S^K)$). It ensures that the scores cover the whole range [0,1]. In other words, the lowest score will be equal to 0 and the highest score will be equal to 1. We note that other normalization procedures can be used (see (Gmati, 2015) for more details on these procedures).

4.2 Score Computing Algorithm

The computation of the normalized scores is operationalized by Algorithm 4. This algorithm takes as input a list mServices of Web services, each described by a set of N similarity degrees, where N is the number of attributes. The data structure mServices used as input is assumed to be defined as:

\[
(S^A_i, \mu_\diamond(S^A_i.A_1, S^R.A_1), \cdots, \mu_\diamond(S^A_i.A_N, S^R.A_N)),
\]

where $S^A_i$ is an advertised Web service, $S^R$ is the requested Web service, N is the total number of attributes; $\diamond \in \{\min, \max\}$, and $\mu_\diamond(S^A_i.A_j, S^R.A_j)$ ($j = 1, \cdots, N$) is the similarity degree between the requested Web service and the advertised Web service on the jth attribute $A_j$ computed using one of the two versions given in Section 3.2.

Algorithm 4: ComputeNormScores.

Input : mServices, // List of Web services.
        N, // Number of attributes.
Output: mServices, // List of Web services with normalized scores.

r ← length(mServices);
t ← 1;
while (t ≤ r) do
    simDegrees ← the tth row in mServices;
    s ← ComputeInitialScore(simDegrees, N, w);
    mServices[t, N + 2] ← s;
    t ← t + 1;
a ← mServices[1, N + 2];
b ← mServices[1, N + 2];
t ← 1;
while (t < r) do
    if (a > mServices[t + 1, N + 2]) then
        a ← mServices[t + 1, N + 2];
    if (b < mServices[t + 1, N + 2]) then
        b ← mServices[t + 1, N + 2];
    t ← t + 1;
t ← 1;
while (t ≤ r) do
    ns ← (mServices[t, N + 2] − a)/(b − a);
    mServices[t, N + 2] ← ns;
    t ← t + 1;
return mServices;
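The scoring and normalization steps can be sketched in Python as follows. The sketch makes two assumptions that the text does not state explicitly: the sum in Equation (7) is read as adding, for each of the N attributes, the weight of the similarity degree obtained on that attribute, and when all services have the same initial score (so that the denominator of Equation (8) is zero) every service receives 0.0. The names `initial_score` and `normalized_scores` are illustrative.

```python
def initial_score(degrees, weight_of):
    """Sum the weights of the similarity degrees of one service."""
    return sum(weight_of[d] for d in degrees)


def normalized_scores(services, weight_of):
    """Min-max normalize the initial scores of all services.

    `services` maps a service name to its list of N similarity degrees.
    """
    raw = {name: initial_score(degs, weight_of)
           for name, degs in services.items()}
    low, high = min(raw.values()), max(raw.values())
    if high == low:
        # Assumption: all services tie, so the ratio is undefined.
        return {name: 0.0 for name in raw}
    return {name: (score - low) / (high - low) for name, score in raw.items()}


# Weights for N = 2 attributes, from w_1 = 0 and w_i = w_{i-1} * N + 1.
weight_of = {"Fail": 0, "Part-of": 1, "Container": 3,
             "Subsumption": 7, "Plug-in": 15, "Exact": 31}
services = {
    "S1": ["Exact", "Exact"],            # initial score 62
    "S2": ["Exact", "Plug-in"],          # initial score 46
    "S3": ["Subsumption", "Part-of"],    # initial score 8
}
scores = normalized_scores(services, weight_of)
print(scores)
```

Here S1 obtains 1.0, S3 obtains 0.0, and S2 obtains (46 − 8)/(62 − 8), which is about 0.70.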

At the output, Algorithm 4 provides an updated version of mServices by adding to it the normalized scores of the Web services:

\[
(S^A_i, \mu_\diamond(S^A_i.A_1, S^R.A_1), \cdots, \mu_\diamond(S^A_i.A_N, S^R.A_N), \rho'_\diamond(S^A_i)),
\]

where $\rho'_\diamond(S^A_i)$ is the normalized score of Web service $S^A_i$.

The function ComputeInitialScore in Algorithm 4 computes the initial scores of Web services using Equation (7). It takes a row simDegrees of similarity degrees for a given Web service and computes the initial score of this Web service. The list simDegrees is assumed to be defined as follows:

\[
(S^A_i, \mu_\diamond(S^A_i.A_1, S^R.A_1), \cdots, \mu_\diamond(S^A_i.A_N, S^R.A_N)),
\]

where $S^A_i$, $S^R$, N and $\mu_\diamond(S^A_i.A_j, S^R.A_j)$ ($j = 1, \cdots, N$) are as defined previously. It is easy to see that the list simDegrees is a row from the data structure mServices introduced earlier.

The complexity of the score computing algorithm

is detailed in (Gmati, 2015).

5 TREE-BASED RANKING OF WEB SERVICES

In this section, we propose a new algorithm for Web

services ranking.

5.1 Principle

The basic idea of the proposed ranking algorithm is to use the two types of scores introduced in Section 3.2 to first construct a tree T and then apply a pre-order tree traversal on T to identify the final and best ranking. The construction of the tree thus requires using the score computing algorithm twice, once using the minimum weight value and once using the maximum weight value (see Section 3.2). In what follows, we assume that the input of the ranking algorithm is a list of matching Web services mServScores defined as follows:

\[
(S^A_i, \rho'_{\min}(S^A_i), \rho'_{\max}(S^A_i)),
\]

where $S^A_i$ is an advertised Web service; and $\rho'_{\min}(S^A_i)$ and $\rho'_{\max}(S^A_i)$ are the normalized scores of $S^A_i$ that are computed based on the minimum weight value and the maximum weight value, respectively.

The tree-based ranking algorithm given later in Section 5.3 is composed of two main phases. The objective of the first phase is to construct the tree T. The objective of the second phase is to identify the final ranking using a pre-order tree traversal on T.

5.2 Tree Construction

The tree construction process starts by defining a root node containing the initial list of Web services and then uses different node splitting functions to progressively split the nodes of each level into a collection of new nodes. The tree construction process is designed such that each of the leaf nodes will contain a single Web service. Let us first introduce the node splitting functions.

5.2.1 Node Splitting Functions

The first node splitting function is formalized in Algorithm 5. This function receives as input one node with a list of Web services and generates a ranked list of nodes, each with one or more Web services. The functions SortScoresMax and SortScoresMin in Algorithm 5 sort the Web services based on the maximum edge value or the minimum edge value, respectively. Function Split splits the Web services in $L_0$ into a set of sublists, each with a subset of services having the same score. The instructions in lines 8-11 in Algorithm 5 create a node for each sublist in SubLists. The algorithm outputs a list L of nodes ordered, according to the scores of Web services in each node, from the left to the right.

Algorithm 5: Node splitting.

Input : Node, // A node.
        NodeSplittingType, // Node splitting type.
Output: L, // List of ranked nodes.

1  L ← ∅;
2  L_0 ← Node.mServScores;
3  if (NodeSplittingType = 'Max') then
4      L_0 ← SortScoresMax(L_0);
5  else
6      L_0 ← SortScoresMin(L_0);
7  SubLists ← Split(L_0);
8  for each elem in SubLists do
9      create node n;
10     n.add(elem);
11     L.add(n);
12 return L;
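The sort-then-group behaviour of the node splitting function can be sketched in Python with `sorted` and `itertools.groupby`. The sketch assumes that each service is a (name, rho_min, rho_max) tuple, that a node is simply a list of such tuples, and that nodes are ordered from the highest score to the lowest; the source only says that nodes are ordered by score from left to right, so the direction is an assumption. Grouping relies on exact equality of the scores.

```python
from itertools import groupby


def split_node(services, splitting_type):
    """Sort services by one score and group those with equal scores.

    `services` is a list of (name, rho_min, rho_max) tuples and
    `splitting_type` is 'Max' or 'Min'. Returns a list of nodes, each
    node being a list of services that share the same score.
    """
    index = 2 if splitting_type == "Max" else 1
    ordered = sorted(services, key=lambda s: s[index], reverse=True)
    return [list(group)
            for _, group in groupby(ordered, key=lambda s: s[index])]


services = [("S1", 0.5, 1.0), ("S2", 1.0, 1.0), ("S3", 0.0, 0.4)]
by_max = split_node(services, "Max")
by_min = split_node(services, "Min")
print([[s[0] for s in node] for node in by_max])  # [['S1', 'S2'], ['S3']]
print([[s[0] for s in node] for node in by_min])  # [['S2'], ['S1'], ['S3']]
```

In the example, S1 and S2 tie on the Max score and therefore end up in the same node, which a later split on the Min score can separate.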

The second node splitting function is formalized in Algorithm 6. This function randomly splits a node into a set of nodes, each with one Web service.

5.2.2 Tree Construction Algorithm

The tree construction process contains four steps:

1. Construction of Level 0. In this initialization step, we create a root node r containing the list of Web services to rank. At this level, the tree T contains only the root node.

2. Construction of Level 1. Here, we split the root node into a set of nodes using the node splitting function (Algorithm 5) with the desired sorting type (i.e. based either on the maximum edge value or the minimum edge value). At the end of this step, a new level is added to the tree T. The nodes of this level are ordered from left to right. Each node of this level will contain one or several Web services. The Web services of a given node will have the same score.

3. Construction of Level 2. Here, we split the nodes of the previous level that have more than one Web service using the node splitting function (Algorithm 5) with a different sorting type than in the previous step. At the end of this step, a new level is added to the tree T. The nodes of this level are ordered from left to right. Each node of this level will contain one or several Web services. The Web services of a given node will have the same score.

4. Construction of Level 3. Here, we apply the random splitting function given in Algorithm 6 to randomly split the nodes of the previous level that have more than one Web service. At the end of this step, a new level is added to the tree T. All the nodes of this level contain only one Web service.

Algorithm 6: Random Node Splitting.

Input : Node, // A node.
Output: L, // List of ranked nodes.

L ← ∅;
L_0 ← Node.mServScores;
i ← |L_0|;
Z ← L_0;
while i > 0 do
    select randomly a service s from Z;
    create node n;
    n.add(s);
    L.add(n);
    i ← i − 1;
    Z ← Z \ {s};
return L;

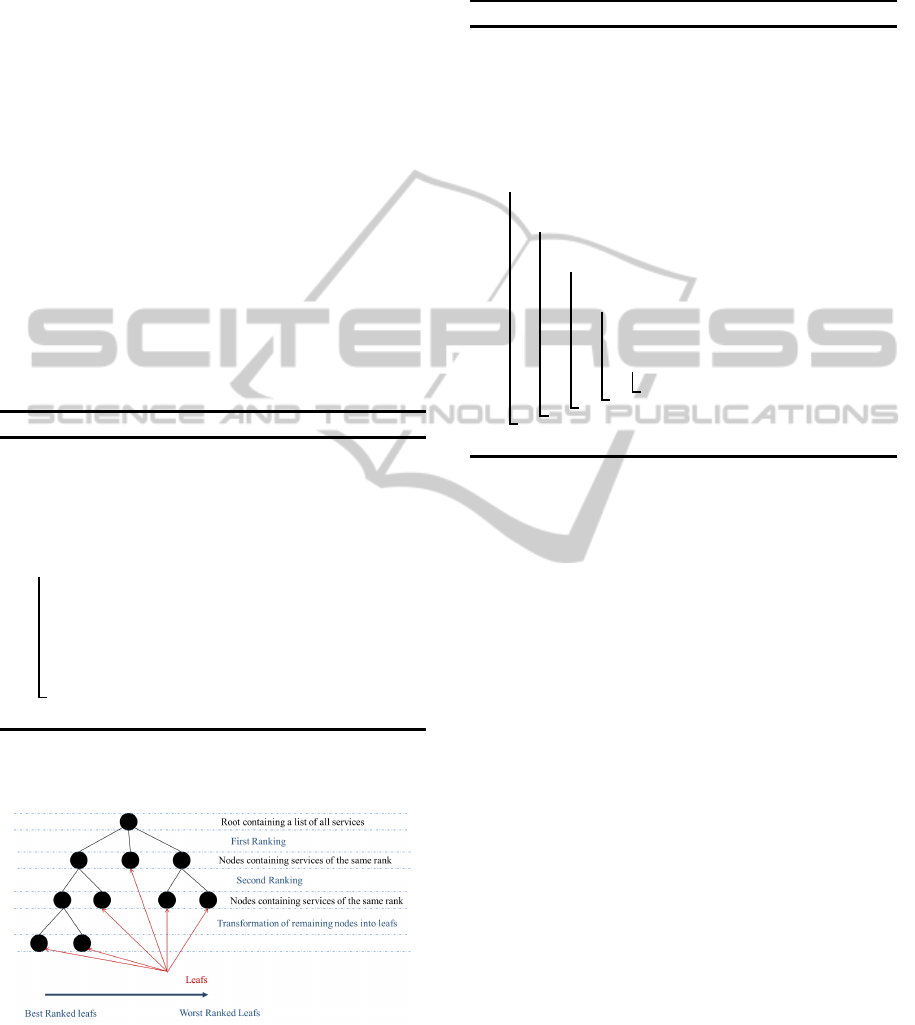

Figure 1 provides an example of a tree constructed

based on this idea.

Figure 1: Tree structure.

We may distinguish two versions of the tree construction process. The first version, which is shown in Algorithm 7, uses the node splitting based on the Max to generate the nodes of the first level and then the node splitting based on the Min to generate the nodes of the second level. The second version, which is not given in this paper, uses the opposite order.

Algorithm 7: Tree Construction (Max-Min).

Input : mServScores, // Initial list of services with their scores.
Output: T, // Tree.

create node Node;
Node.add(mServScores);
add Node as root of the tree T;
FirstLevelNodes ← NodeSplitting(Node, 'Max');
for each node n_1 in FirstLevelNodes do
    add n_1 as a child of the root;
    if n_1 is not a leaf then
        SecondLevelNodes ← NodeSplitting(n_1, 'Min');
        for each node n_2 in SecondLevelNodes do
            add n_2 as a child of n_1;
            if n_2 is not a leaf then
                ThirdLevelNodes ← RandomNodeSplitting(n_2);
                for each node n_3 in ThirdLevelNodes do
                    add n_3 as a child of n_2;
return T;
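A compact Python sketch of the Max-Min construction is given here for illustration. It is written under several assumptions that are not part of the original algorithm: services are (name, rho_min, rho_max) tuples, the `Node` class and the helper `split` are hypothetical, higher scores are placed to the left, and a node is treated as a leaf when it holds a single service. The random tie-break uses `shuffle`, with an optional random generator so that runs can be reproduced.

```python
import random
from itertools import groupby


class Node:
    """A tree node holding a list of (name, rho_min, rho_max) services."""

    def __init__(self, services):
        self.services = services
        self.children = []


def split(services, index):
    """Group services with equal scores, highest score first."""
    ordered = sorted(services, key=lambda s: s[index], reverse=True)
    return [list(g) for _, g in groupby(ordered, key=lambda s: s[index])]


def build_tree_max_min(services, rng=random):
    """Build the tree by splitting on rho_max, then rho_min, then randomly."""
    root = Node(list(services))
    for group1 in split(root.services, 2):       # level 1: split on rho_max
        n1 = Node(group1)
        root.children.append(n1)
        if len(group1) == 1:
            continue                             # single service: leaf
        for group2 in split(group1, 1):          # level 2: split on rho_min
            n2 = Node(group2)
            n1.children.append(n2)
            if len(group2) == 1:
                continue
            shuffled = list(group2)
            rng.shuffle(shuffled)                # level 3: random tie-break
            n2.children = [Node([s]) for s in shuffled]
    return root


services = [("S1", 0.5, 1.0), ("S2", 1.0, 1.0),
            ("S3", 0.0, 0.4), ("S4", 0.5, 1.0)]
root = build_tree_max_min(services, random.Random(0))
print([[s[0] for s in child.services] for child in root.children])
```

In this example the first level contains the nodes {S1, S2, S4} and {S3}; the first node is then split into {S2} and {S1, S4}, and only the last pair requires the random split.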

5.3 Tree-based Ranking Algorithm

We propose here a new algorithm for implementing the solution proposed in Section 5.1. The idea of the algorithm is to first construct a tree T using one of the algorithms discussed in the previous section and then scan through this tree in order to identify the final ranking. The identification of the best and final ranking requires applying a tree traversal (also known as tree search) on the tree T. The tree traversal refers to the process of visiting each node in a tree data structure, exactly once, in a systematic way. Different types of traversals are possible: pre-order, in-order and post-order. They differ by the order in which the nodes are visited. In this paper, we will use the pre-order type.

The idea discussed in the previous paragraph is implemented by Algorithm 8. The main input for this algorithm is the initial list mServScores of Web services with their scores. This list is assumed to have the same structure as indicated in Section 5.1. The output of Algorithm 8 is a list FinalRanking of ranked Web services.

The functions ComputeNormScoresMax and ComputeNormScoresMin are not given in this paper. They are similar to function ComputeNormScores introduced previously in Algorithm 4. The scores in function ComputeNormScoresMax are computed by selecting the edge with the maximum weight in the matching graph while the scores in function ComputeNormScoresMin are computed by selecting the edge with the minimum weight in the matching graph.

Algorithm 8: Tree-Based Ranking.

Input : mServScores, // List of matching services with their scores.
        N, // Number of attributes.
        SplittingOrder, // Nodes splitting order.
Output: FinalRanking, // Ranked list of Web services.

1 T ← ∅;
2 mServScores ← ComputeNormScoresMax(mServScores, N);
3 mServScores ← ComputeNormScoresMin(mServScores, N);
4 if (SplittingOrder = 'MaxMin') then
5     T ← ConstructTreeMaxMin(mServScores);
6 else
7     T ← ConstructTreeMinMax(mServScores);
8 FinalRanking ← TreeTraversal(T);
9 return FinalRanking;

Algorithm 8 can be organized into two phases. The first phase concerns the construction of the tree T. This phase is implemented by the instructions in lines 1-7. According to the type of nodes splitting order (Max-Min or Min-Max), Algorithm 8 uses either function ConstructTreeMaxMin (for Max-Min order) or function ConstructTreeMinMax (for Min-Max order) to construct the tree.

The second phase of Algorithm 8 concerns the identification of the best and final ranking by applying a pre-order tree traversal on the tree T. The pre-order tree traversal contains three main steps: (1) examine the root element (or current element); (2) traverse the left subtree by recursively calling the pre-order function; and (3) traverse the right subtree by recursively calling the pre-order function. The pre-order tree traversal is implemented by Algorithm 9.

Algorithm 9: Tree Traversal.

Input : T, // Tree.
Output: L, // Final ranking.

L ← ∅;
CurrNode ← T.R;
if CurrNode contains a single Web service then
    Let currService be the single Web service in CurrNode;
    L.Append(currService);
for each child f of CurrNode do
    append to L the result of TreeTraversal on the subtree rooted at f;
return L;
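The traversal can be sketched in Python as a short recursive function. The `Node` class below is a hypothetical representation (a list of service names plus an ordered list of children), and the example tree is hand-built for illustration; it is not taken from the experiments.

```python
class Node:
    """A tree node with its services and its children, ordered left to right."""

    def __init__(self, services, children=()):
        self.services = list(services)
        self.children = list(children)


def leaves_in_preorder(node):
    """Return the services held by leaf nodes, visited left to right."""
    if not node.children:
        return list(node.services)
    ranking = []
    for child in node.children:
        ranking.extend(leaves_in_preorder(child))
    return ranking


tree = Node(["S1", "S2", "S3", "S4"], [
    Node(["S1", "S2", "S4"], [
        Node(["S2"]),
        Node(["S1", "S4"], [Node(["S4"]), Node(["S1"])]),
    ]),
    Node(["S3"]),
])
print(leaves_in_preorder(tree))  # ['S2', 'S4', 'S1', 'S3']
```

Inner nodes only group services that are still tied, so only the leaves contribute to the ranking, in the order in which the traversal reaches them.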

Algorithm 9 takes as input a tree T and generates

the final ranking list L. Algorithm 9 scans the tree T

and picks out all leaf nodes of T ordered from the left

to the right.

The complexity of the tree-based ranking algorithms is detailed in (Gmati, 2015).

6 PERFORMANCE EVALUATION

In what follows, we first compare the tree-based algorithm presented in this paper to the score-based (Gmati et al., 2014) and rule-based (Gmati et al., 2015) ranking algorithms and then discuss the effect of the edge weight order on the final results.

SME2 (Klusch et al., 2010; Küster and König-Ries, 2010), which is an open source tool for testing different semantic matchmakers in a consistent way, is used for this comparative study. SME2 uses OWLS-TC collections to provide the matchmakers with Web service descriptions, and to compare their answers to the relevance sets of the various queries.

The experiments have been conducted on a Dell Inspiron 15 3735 laptop with an Intel Core i5 processor (1.6 GHz) and 2 GB of memory. The test collection used is OWLS-TC4, which consists of 1083 Web service offers described in OWL-S 1.1 and 42 queries.

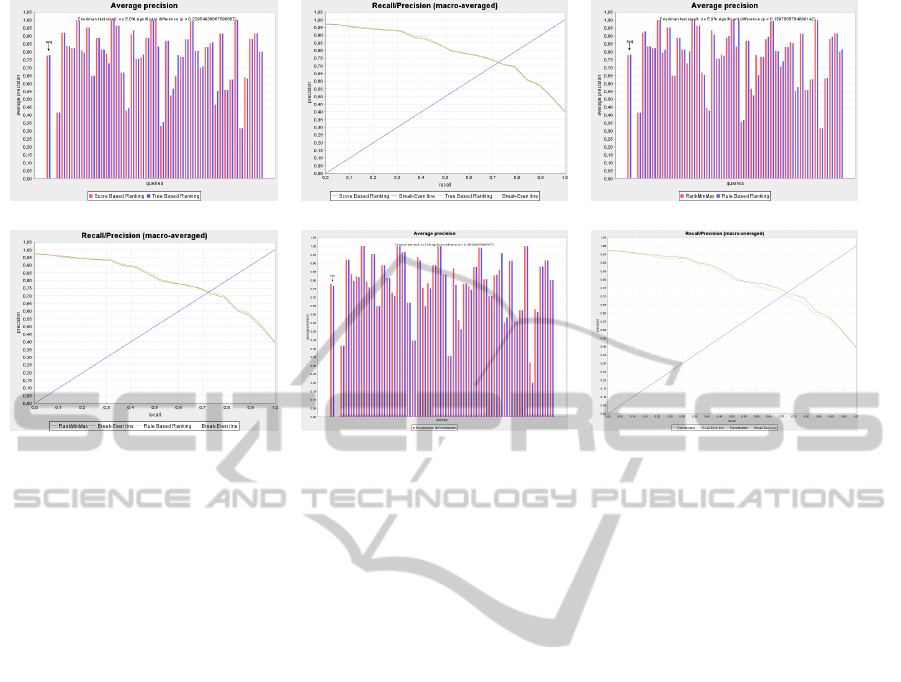

Figures 2.a and 2.b show that the tree-based ranking algorithm has better average precision and recall/precision than the score-based ranking algorithm. Figure 2.c shows that the tree-based ranking algorithm has a slightly better average precision than the rule-based ranking algorithm. Figure 2.d shows that the rule-based ranking algorithm is slightly faster than the tree-based ranking algorithm.

The tree-based ranking algorithm is designed to work with either the Min-Max or Max-Min versions of the tree construction algorithm. As discussed in Section 5.2.2, the main difference between these versions is the order in which the edge weight values are used. To study the effect of tree construction versions on the final results, we conducted a series of experiments using the OWLS-TC test collection. We evaluated the two versions with respect to the Average Precision and the Recall/Precision metrics.

The result of the comparison is shown in Figures 2.e and 2.f. According to these figures, we conclude that the Min-Max version outperforms the Max-Min version. However, this observation should not be taken as a rule since it might depend on the considered test collection.

7 RELATED WORK

We may distinguish three basic Web services ranking approaches, according to the nature of the information used for the ranking process: (i) ranking approaches based only on the Web service description information; (ii) ranking approaches based on external information; and (iii) ranking approaches based on the user preferences.

Figure 2: Performance analysis, panels (a) to (f).

The first ranking approach relies only on the information available in the Web service description, which generally concerns the service capability (IOPE attributes), the service quality (QoS attributes) and/or the service property (additional information). This type of ranking is the most widely used, since all the needed data is directly available in the Web service description. Among the methods based on this approach, we cite (Manoharan et al., 2011), where the authors combine QoS and fuzzy logic and propose a ranking matrix. However, this approach is centered only on the QoS and discards the other Web service attributes. We also mention the work of (Skoutas et al., 2010), where the authors propose a ranking method that computes a dominance score between services. The calculation of these scores requires a pairwise comparison that increases the time complexity of the ranking algorithm.
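To illustrate why pairwise comparison is costly, the sketch below counts, for each service, how many other services it dominates. It uses a generic Pareto-style dominance test as an assumption; it is not the dominance score defined by Skoutas et al., and the function names and score vectors are hypothetical. The nested loop performs n(n-1) comparisons for n services, which is the quadratic cost referred to above.

```python
from typing import Dict, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is at least as good as b on every criterion and strictly
    better on at least one (higher values assumed to be better)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def dominance_counts(services: Dict[str, Sequence[float]]) -> Dict[str, int]:
    """Count how many other services each service dominates.

    Every ordered pair of distinct services is compared once,
    so the running time grows quadratically with the number of services.
    """
    counts = {name: 0 for name in services}
    for name_a, scores_a in services.items():
        for name_b, scores_b in services.items():
            if name_a != name_b and dominates(scores_a, scores_b):
                counts[name_a] += 1
    return counts


# Hypothetical per-criterion matching scores for three services.
example = {"s1": (0.9, 0.8), "s2": (0.5, 0.5), "s3": (0.9, 0.4)}
counts = dominance_counts(example)
```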

The second ranking approach uses both the Web service description and other external information (see, e.g., Kuck and Gnasa, 2007; Kokash et al., 2007; Maamar et al., 2005; Manikrao and Prabhakar, 2005; Segev and Toch, 2011). For instance, the authors in (Kuck and Gnasa, 2007) take into account additional information concerning time, place and location in order to rank Web services. In (Kokash et al., 2007), the authors rank Web services on the basis of the user's past behavior. The authors in (Maamar et al., 2005) base their ranking on the customer's and providers' situations. However, these additional constraints make the system more complex. The authors in (Segev and Toch, 2011) extract the context of Web services and employ it as additional information during the ranking. The general problem with this type of approach is that external information can only be used in situations where the data is available, which is not always the case in practice.

The third ranking approach is based on the user preferences. In (Beck and Freitag, 2006), for instance, the authors use some constraints specified by the user. A priority is then assigned to each constraint or group of constraints. The algorithm proposed by (Beck and Freitag, 2006) then uses a tree structure to perform the matching and ranking procedure.

8 CONCLUSION

In this paper, we proposed a new algorithm for Web services ranking. This algorithm relies on a tree data structure that is constructed based on two types of Web services scores. The final ranking is obtained by applying a pre-order traversal on the tree and picking out all leaf nodes ordered from left to right. The tree-based algorithm proposed in this paper is compared with two other ones: the score-based algorithm and the rule-based algorithm. The performance evaluation of the three algorithms shows that the tree-based algorithm outranks the score-based algorithm in all cases and is most often better than the rule-based algorithm.
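The ranking principle summarized above can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact construction procedure of the paper: services are first grouped by one score, ties inside a group are ordered by the other score, and higher scores are assumed to be better. The `Node` class, the function names and the example scores are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import groupby
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    label: str
    service: Optional[str] = None  # set only on leaf nodes
    children: List["Node"] = field(default_factory=list)


def build_tree(scores: Dict[str, Tuple[float, float]]) -> Node:
    """Build a two-level tree from (first_score, second_score) pairs.

    Assumption: for a Min-Max style tree, first_score would be the score
    from the minimum-weight edge and second_score the one from the
    maximum-weight edge; swapping them gives the other version.
    """
    root = Node("root")
    by_first = sorted(scores, key=lambda s: scores[s][0], reverse=True)
    for first, group in groupby(by_first, key=lambda s: scores[s][0]):
        branch = Node(f"first={first}")
        # Services tied on the first score are ordered by the second score.
        for name in sorted(group, key=lambda s: scores[s][1], reverse=True):
            branch.children.append(Node(f"second={scores[name][1]}", service=name))
        root.children.append(branch)
    return root


def rank(node: Node) -> List[str]:
    """Pre-order traversal that keeps only the leaves, from left to right."""
    if not node.children:
        return [node.service] if node.service is not None else []
    ranking: List[str] = []
    for child in node.children:
        ranking.extend(rank(child))
    return ranking


# Hypothetical scores: service -> (first_score, second_score).
example = {"a": (0.5, 0.9), "b": (0.8, 0.6), "c": (0.5, 1.0), "d": (0.8, 0.7)}
final_ranking = rank(build_tree(example))
```

In this sketch the second score only matters for services that tie on the first one, which is why the order in which the two scores are applied can change the final ranking.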


REFERENCES

Beck, M. and Freitag, B. (2006). Semantic matchmaking

using ranked instance retrieval. In Proceedings of the

1st International Workshop on Semantic Matchmak-

ing and Resource Retrieval, Seoul, South Korea.

Bellur, U. and Kulkarni, R. (2007). Improved matchmaking

algorithm for semantic Web services based on bipar-

tite graph matching. In IEEE International Confer-

ence on Web Services, pages 86–93, Salt Lake City,

Utah, USA.

Chakhar, S. (2013). Parameterized attribute and service

levels semantic matchmaking framework for service

composition. In Fifth International Conference on Ad-

vances in Databases, Knowledge, and Data Applica-

tions (DBKDA 2013), pages 159–165, Seville, Spain.

Chakhar, S., Ishizaka, A., and Labib, A. (2014). QoS-aware parameterized semantic matchmaking framework for Web service composition. In Monfort, V. and Krempels, K.-H., editors, WEBIST 2014 - Proceedings of the 10th International Conference on Web Information Systems and Technologies, Volume 1, Barcelona, Spain, 3-5 April, 2014, pages 50–61. SciTePress.

Chakhar, S., Ishizaka, A., and Labib, A. (2015). Semantic matching-based selection and QoS-aware classification of Web services. In Selected Papers from The 10th International Conference on Web Information Systems and Technologies (WEBIST 2014), Lecture Notes in Business Information Processing. Springer-Verlag, Berlin, Heidelberg, Germany. Forthcoming.

Doshi, P., Goodwin, R., Akkiraju, R., and Roeder,

S. (2004). Parameterized semantic matchmaking

for workflow composition. IBM Research Report

RC23133, IBM Research Division.

Fu, P., Liu, S., Yang, H., and Gu, L. (2009). Matching algo-

rithm of Web services based on semantic distance. In

International Workshop on Information Security and

Application (IWISA 2009), pages 465–468, Qingdao,

China.

Gmati, F. E. (2015). Parameterized semantic matchmaking

and ranking framework for web service composition.

Master thesis, Higher Business School of Tunis (Uni-

versity of Manouba) and Institute of Advanced Busi-

ness Studies of Carthage (University of Carthage),

Tunisia.

Gmati, F. E., Yacoubi-Ayadi, N., Bahri, A., Chakhar, S., and

Ishizaka, A. (2015). PMRF: Parameterized matching-

ranking framework. Studies in Computational Intelli-

gence. Springer. forthcoming.

Gmati, F. E., Yacoubi-Ayadi, N., and Chakhar, S. (2014).

Parameterized algorithms for matching and ranking

Web services. In Proceedings of the On the Move to

Meaningful Internet Systems: OTM 2014 Conferences

2014, volume 8841 of Lecture Notes in Computer Sci-

ence, pages 784–791. Springer.

Klusch, M., Dudev, M., Misutka, J., Kapahnke, P., and Vasileski, M. (2010). SME2 Version 2.2. User Manual. The German Research Center for Artificial Intelligence (DFKI), Germany.

Kokash, N., Birukou, A., and D'Andrea, V. (2007). Web service discovery based on past user experience. In Proceedings of the 10th International Conference on Business Information Systems, pages 95–107.

Kuck, J. and Gnasa, M. (2007). Context-sensitive service

discovery meets information retrieval. In Fifth Annual

IEEE International Conference on Pervasive Com-

puting and Communications Workshops (PerCom’07),

pages 601–605.

Kuhn, H. (1955). The Hungarian method for the assignment

problem. Naval Research Logistics Quarterly, 2:83–

97.

Küster, U. and König-Ries, B. (2010). Measures for benchmarking semantic Web service matchmaking correctness. In Proceedings of the 7th International Conference on The Semantic Web: Research and Applications - Volume Part II, ESWC’10, pages 45–59, Berlin, Heidelberg. Springer-Verlag.

Maamar, Z., Mostefaoui, S., and Mahmoud, Q. (2005). Context for personalized Web services. In Proceedings of the 38th Annual Hawaii International Conference on System Sciences (HICSS’05), pages 166b–166b.

Manikrao, U. and Prabhakar, T. (2005). Dynamic selec-

tion of Web services with recommendation system. In

Proceedings of the International Conference on Next

Generation Web Services Practices (NWeSP 2005),

pages 117–121.

Manoharan, R., Archana, A., and Cowla, S. (2011). Hybrid

Web services ranking algorithm. International Jour-

nal of Computer Science Issues, 8(2):83–97.

Paolucci, M., Kawamura, T., Payne, T., and Sycara, K. (2002). Semantic matching of web services capabilities. In Proceedings of the First International Semantic Web Conference on The Semantic Web, ISWC ’02, pages 333–347, London, UK. Springer-Verlag.

Rong, W., Liu, K., and Liang, L. (2009). Personalized Web service ranking via user group combining association rule. In IEEE International Conference on Web Services (ICWS 2009), pages 445–452.

Segev, A. and Toch, E. (2011). Context based matching

and ranking of Web services for composition. IEEE

Transactions on Services Computing, 3(2):210–222.

Skoutas, D., Sacharidis, D., Simitsis, A., and Sellis, T.

(2010). Ranking and clustering Web services using

multicriteria dominance relationships. IEEE Transac-

tions on Services Computing, 3(3):163–177.
