Studying the User Experience with a Multimodal Pedestrian Navigation Assistant

Gustavo Rovelo¹,², Francisco Abad³, M. C.-Juan³ and Emilio Camahort³

¹ Expertise Centre for Digital Media, Hasselt University, Wetenschapspark 2, Diepenbeek, Belgium

² Dpto. de Sistemas Informáticos y Computación, Universitat Politècnica de València, Camino de Vera S/N, Valencia, Spain

³ Instituto Universitario de Automática e Informática Industrial, Universitat Politècnica de València, Camino de Vera S/N, Valencia, Spain

Keywords: Multimodal Interface, User Evaluation, Mobile Augmented Reality.

Abstract: The widespread usage of mobile devices, together with their computational capabilities, enables the implementation of novel interaction techniques to improve user performance in traditional mobile applications. Navigation assistance is an important area in the mobile domain, and Google Maps is probably the most popular example. This type of application places high demands on the user's attention, especially in the visual channel. Tactile and auditory feedback have been studied as alternatives to visual feedback for navigation assistance to reduce this dependency. However, there is still room for improvement, and more research is needed to understand, for example, how the three feedback modalities complement each other, especially with the appearance of new technology such as smart watches and new displays such as Google Glass. The goal of our work is to study how users perceive multimodal feedback when their route is augmented with directional cues. Our results show that tactile guidance cues produced the worst user performance, both objectively and subjectively. Participants reported that vibration patterns were hard to decode. However, tactile feedback was an unobtrusive technique to inform participants when to look at the mobile screen or listen to the spoken directions. The results show that combining feedback modalities produces good user performance.

1 INTRODUCTION

Smartphones combine, among other features, a high-resolution display with multitouch capabilities, different tracking sensors, a permanent Internet connection and good processing power. The widespread usage of these devices has created new opportunities for Human-Computer Interaction (HCI) researchers to develop novel types of applications and interaction techniques. One of the main challenges in this area is that smartphones are used, most of the time, in environments that are highly demanding of users' attention, especially when users are on the move (Jameson, 2002; Oulasvirta et al., 2005). For that reason, developing mobile applications that implement efficient user interaction is very important. In particular, pedestrian navigation assistants have to (1) provide robust guiding cues and (2) avoid distracting users from keeping an eye on the road or socially interacting with friends.

Different studies in this particular application domain have investigated how to use auditory, tactile and visual cues to improve how pedestrians receive navigation assistance (Liljedahl et al., 2012; Pielot and Boll, 2010). Multimodal feedback offers many benefits in this regard: eyes-free operation, language independence, faster decision-making, and reduced cognitive load (Jacob et al., 2011b). Multimodal feedback is also appreciated by users (Pielot et al., 2012b): complementary tactile feedback was used in one third of the logged routes where users had the option to receive only visual feedback on a digital map similar to Google Maps. However, as also mentioned in many of those works, there is still room for analysing the properties of multimodal feedback and thus for improving mobile interfaces.

The main contribution of our work is to study the scenario where visual directional cues are presented using the smartphone's screen as a video see-through display, where the user can observe both the surroundings and the visual feedback. We build our multimodal guidance system upon the findings of previous research, and evaluate how using the augmented view of the path to provide visual directional cues affects the experience and performance of the user in our mobile application.

Rovelo G., Abad F., Juan M. and Camahort E. Studying the User Experience with a Multimodal Pedestrian Navigation Assistant. DOI: 10.5220/0005297504380445. In Proceedings of the 10th International Conference on Computer Graphics Theory and Applications (GRAPP-2015), pages 438-445. ISBN: 978-989-758-087-1. Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

Visual direction cues were presented as virtual arrows or paths superimposed on the rear camera feed, showing an augmented view of the path as depicted in Figure 1. Auditory and tactile direction cues were presented as spoken directions and vibration patterns, respectively. Based on the results of (Vainio, 2009), we designed our system to provide continuous rhythmic feedback to guide the user in the navigation task.

Figure 1: Visual directional cues augment the video feed of the rear camera on the smartphone's display, letting the user perceive both the environment and the guidance at the same time.

We invited a group of volunteers to complete a predefined route in our university, a semi-controlled environment where they could safely perform the task while still being immersed in a realistic scenario. The results of the statistical analysis show that vibration patterns produced the worst results when used alone: participants needed more time to complete the route and, in general, to decode directions. However, when this feedback was combined with auditory or visual modalities it produced better results. Participants mentioned that vibrations told them when to pay more attention to the auditory directions or when to look at the smartphone's screen. On the other hand, auditory and visual feedback produced good results both alone and combined. These modalities were more intuitive. However, participants reported that sun glare made it difficult to read on-screen messages and that the noise level in the environment made the auditory directional cues harder to hear.

2 RELATED RESEARCH

Previous research has presented different navigation prototypes that use vibrotactile output devices to provide directional information to pedestrians. For example, a belt with 6 tactors (tactile actuators) has been used to provide navigation assistance (Pielot et al., 2009). It proved more efficient than map-based navigation for following a route from point A to point B.

In NaviRadar (Rümelin et al., 2011) the system provided different vibration patterns using an external device connected via Bluetooth to a mobile phone. The user received feedback when an automatic radar sweeping mechanism crossed the area containing the direction to follow. This approach produced effective results, as participants could follow the directional cues easily.

Continuous tactile feedback produced good results guiding pedestrian navigation in the Pocket Navigator system (Pielot et al., 2010). The user received vibrotactile feedback when pointing the mobile phone in the right direction, which helped participants to complete the required route successfully.

Using tactile feedback to indicate the direction to follow without forcing the user to complete a predefined route to reach a specific destination is another approach studied in the past (Robinson et al., 2010). Although the authors did not find statistically significant differences with respect to the baseline condition, the results of the tactile feedback were slightly better.

Tactile feedback has also been combined with visual feedback (Jacob et al., 2011a; Jacob et al., 2011b), using a colour code (green, orange and red) to indicate the required navigation task (continue with the route, scan for new direction, and error in route) and vibrations to indicate the direction to follow. Our experiment proposes a less abstract representation of visual directional cues, augmenting the view of the route with virtual graphical directions. We expect the AR view to improve on the code-based directions, as it provides a live view of the surroundings of the user. As shown in (Chittaro and Burigat, 2005), arrows displayed on top of pictures of the route provide efficient guidance.

Tactile navigation has also been combined with auditory feedback for navigation tasks such as sightseeing (Magnusson et al., 2010; Szymczak et al., 2012). In this case, vibrotactile feedback was efficiently used to guide tourists, while auditory feedback provided information about the points of interest close to the user.

On the other hand, different variations of auditory feedback have been proposed as navigation aids. One example uses spatial sound as guiding feedback: the user is asked to scan the environment to find the new direction, while the system moves the audio source from one earplug to the other and increases the intensity when the user points the device in the right direction (Liljedahl and Papworth, 2012). However, this approach requires the user to wear headphones, which can isolate users from their surroundings, for example by interfering with a conversation with another person. A similar approach is used in "gpsTunes" (Strachan et al., 2005). That system proposes to use changes in the volume of music, increasing it when the user comes closer to the desired place. Their results show that these auditory cues can help users complete navigation tasks successfully.

All the different approaches have proved to some extent the benefits of tactile and auditory feedback to complement or replace visual navigation aids. However, there are open questions regarding how the three feedback modalities can be used together, especially with the increasing processing power of mobile computing platforms and wearable devices that can present visual feedback without distracting the user's attention.

3 EXPERIMENT DESCRIPTION

We evaluated the effect of multimodal feedback for pedestrian navigation assistance guided by the following research questions:

• How useful is visual feedback (the augmented view) to provide directional cues? This type of feedback typically requires the user to divert visual attention from the path to the screen, and can also be affected by visibility problems caused, for example, by sun glare.

• How useful is auditory feedback? In a real-world situation, this type of feedback can be missed because of noise. However, it also represents an intuitive way to give direction cues, and can be used when vision is not available.

• How useful and intuitive is tactile feedback to provide direction cues?

• Does the combination of feedback modalities improve user performance?

During the experiment, we gathered subjective information from participants about their experience using the application through questionnaires and interviews. The questionnaires included yes/no, open, multiple choice and Likert scale questions (from 1 to 5, the lowest and the highest score for each question respectively).

The questionnaire included a general section for every participant in the experiment, but it also included specific questions regarding visual, auditory and tactile feedback for those participants that completed the path receiving each of them. We also quantified participants' performance according to:

• Time to complete the route (T): Time required to complete the route, from the moment the participant chooses the destination, to the confirmation of the end-of-route signal.

• Time to confirm the reception of the end-of-route signal (T_e): Time a participant takes to acknowledge the end-of-route signal.

• Average number of missed checkpoints (N_mc): Number of times a participant missed a checkpoint where she was supposed to turn. We define a checkpoint as a place on the path where the participant has to make a decision: turn left or right, keep walking straight ahead, or confirm the arrival at the destination. A checkpoint is considered missed when the participant reaches the checkpoint but does not turn as indicated and continues walking in the wrong direction. We set the threshold distance to 5 meters from the geographical coordinate of the checkpoint.
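The 5-meter threshold around a checkpoint can be illustrated with a short distance check. The following is only a sketch, not the application's actual code: the haversine formula, the Earth radius constant, and the function names `haversine_m` and `reached_checkpoint` are assumptions introduced here for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (assumption of this sketch)
CHECKPOINT_RADIUS_M = 5.0     # threshold distance stated in the text


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def reached_checkpoint(position, checkpoint, radius_m=CHECKPOINT_RADIUS_M):
    """True when the GPS fix lies within radius_m of the checkpoint coordinate."""
    return haversine_m(*position, *checkpoint) <= radius_m
```

With this definition, a checkpoint would count as missed if `reached_checkpoint` became true and the participant then kept walking in the wrong direction; that second condition depends on route geometry and is not modelled here.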

3.1 Methodology

Every participant in the experiment started the trial by reading the written instructions about how to interpret the information given by the application. They also filled out a questionnaire with their demographics. After that, and before starting to follow the path, a member of our team confirmed that the instructions were understood, and gave some final explanation when it was required. Participants were also instructed about the purpose of the experiment: follow the directions provided by the system as accurately as possible. They were kindly asked not to stop during the trial for any reason that was not related to the experiment, such as talking to a friend.

Participants were also explicitly instructed to keep the phone pointing along their way to avoid noise in the above measurements. We did not observe any mispointing problems and we did not have to discard any sample.

When they were ready to start, participants had to select the destination from a menu option. In this case, there was only one available destination to choose from. Then, participants had to start the route, followed by one member of our team from an approximate distance of 5 meters. No interventions were required during the whole experiment.

When participants reached the destination point at the main entrance of one of the university buildings, they had to touch the screen of the smartphone two times (two taps) in order to confirm the reception of the end-of-route message. After completing the path, participants answered a written questionnaire regarding their experience.

Our application is able to show visual elements in either portrait or landscape mode. We performed a pilot experiment with members of our research institute to evaluate both layouts. The 10 participants of the pilot experiment preferred holding the smartphone horizontally, in landscape mode. One of the comments was that "the text and the rest of the content was better viewed this way". For this reason, we modified the application to show the content only in landscape mode and to ignore the smartphone's orientation changes.

We performed the experiment with first-year students during their first month at the university. We did not mention the destination building to avoid biasing the results. We also performed the experiments during class hours, to avoid revealing the route in advance to participants before they performed the experiment.

Participants were randomly assigned to one of the

following groups, determining what combination of

feedback they received during the experiment:

Group 1 (A) Auditory feedback only.

Group 2 (T) Tactile feedback only.

Group 3 (V) Visual feedback only.

Group 4 (AT) Auditory and tactile feedback.

Group 5 (AV) Auditory and visual feedback.

Group 6 (TV) Tactile and visual feedback.

Group 7 (ATV) Auditory, tactile and visual feedback.
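The random assignment to the seven groups can be sketched as a shuffle followed by a round-robin deal. The paper does not describe the randomisation procedure, so this is an assumption; the balanced outcome (11 participants per group for 77 participants) is inferred from the 44 participants reported for each modality in Section 4.1. The function name `assign_groups` and the `seed` parameter are hypothetical.

```python
import random

GROUPS = ["A", "T", "V", "AT", "AV", "TV", "ATV"]


def assign_groups(participant_ids, groups=GROUPS, seed=None):
    """Shuffle participants, then deal them round-robin so group sizes differ by at most one."""
    rng = random.Random(seed)  # seeded generator keeps the assignment reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: groups[i % len(groups)] for i, pid in enumerate(shuffled)}
```

For example, `assign_groups(range(77), seed=1)` returns a dictionary that maps each of the 77 participant indices to one of the seven group labels, with 11 participants per label.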

3.2 Participants

There were 77 participants in our experiment, 54 men and 23 women. All of them were first-year university students. They were between 17 and 24 years old (19.03 ± 1.71 on average). Only 11 participants (14.29%) had used an AR application before; 31 participants (40.26%) had used a GPS before, and 23 participants (29.87%) owned a smartphone.

3.3 Apparatus

After evaluating the available options for developing AR mobile applications, we decided to develop our pedestrian navigation assistant for a smartphone running Android OS. The application has the same basic purpose as a commercial GPS system: providing direction cues to reach a desired destination, but with subtle differences that are explained in this section.

We used the digital compass, the GPS and the built-in camera of a smartphone with a microprocessor running at 1 GHz. It has a 3.7" display (480 × 800 pixels resolution) and a 5 MP rear camera that is able to record video at 24 fps at a maximum resolution of 640 × 480 pixels.

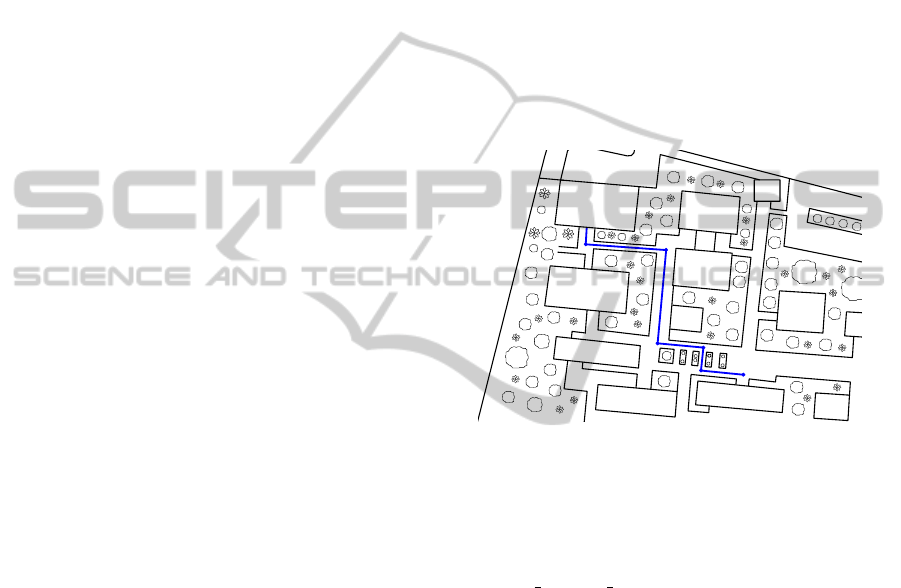

We constructed a virtual map of one portion of the university and designed a path to follow during our experiment. It is 155 m long, and it has 6 checkpoints where the system provides direction cues: 3 left turns, 2 right turns, and the destination point. We carefully chose the path to avoid zones which may cause tracking issues because of buildings or trees blocking the GPS signal. Figure 2 shows the map of the route. The path can lead to 7 possible buildings, and there are different alternative routes to follow from start to end.

Figure 2: The route every participant followed (the map marks the Start and End points).

Battery consumption was not an issue for our purposes. Hence, the screen was turned on during the entire experiment, we collected GPS data every 200 ms, and we requested orientation data with the SENSOR_DELAY_FASTEST constant, which, as described by the official Android SDK documentation, makes the application get sensor data as fast as possible.

The standard interface of the application includes four basic elements: the video stream from the mobile phone's camera, the application menu (on demand), the destination label and the distance-to-destination label. These elements are always visible, regardless of the feedback combination under evaluation.

During the execution, the application can be in one of four states: (i) the participant points the mobile in the right direction, (ii) the participant has to turn, (iii) the participant has missed a checkpoint and has to go back, and (iv) the participant has reached the final destination.

For example, consider the following scenario: when the participant approaches a point where she has to turn left, the application passes from state (i) to state (ii); if she misses the turn and keeps walking, there is a transition to state (iii). When the participant walks back pointing the mobile phone in the direction of the checkpoint, there is a transition to state (i), and when the missed checkpoint is reached, state (ii) is activated again to point the participant in the right direction. Finally, when the participant reaches the destination, the system presents a message and stops providing feedback. The following sections describe the different types of feedback provided during the experiment.
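The four application states and the transitions in the scenario above can be summarised as a small state machine. This is a minimal sketch rather than the application's implementation: the event names (`approach_checkpoint`, `pointing_new_direction`, `walked_past`, `pointing_back`, `destination_reached`) are hypothetical labels, and the assumption that the destination is reached from the on-track state is ours.

```python
from enum import Enum


class State(Enum):
    ON_TRACK = 1  # (i) pointing the phone in the right direction
    TURN = 2      # (ii) the participant has to turn
    MISSED = 3    # (iii) missed a checkpoint, has to go back
    ARRIVED = 4   # (iv) reached the final destination


# (current state, event) -> next state; event names are illustrative only.
TRANSITIONS = {
    (State.ON_TRACK, "approach_checkpoint"): State.TURN,
    (State.TURN, "pointing_new_direction"): State.ON_TRACK,
    (State.TURN, "walked_past"): State.MISSED,
    (State.MISSED, "pointing_back"): State.ON_TRACK,
    (State.ON_TRACK, "destination_reached"): State.ARRIVED,
}


def step(state, event):
    """Return the next state; unknown (state, event) pairs leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)
```

Replaying the scenario from the text (approach a turn, walk past it, point back, reach the checkpoint again, turn, arrive) visits TURN, MISSED, ON_TRACK, TURN, ON_TRACK and ARRIVED in that order. ARRIVED has no outgoing transitions, which mirrors the system no longer providing feedback.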

3.3.1 Visual Feedback

To enhance the system described in (Chittaro and Burigat, 2005), when visual feedback is active, the participant is presented with these elements: a virtual path drawn on the camera's view when the participant points the mobile phone in the right direction towards the next checkpoint, an arrow pointing to the correct direction when the participant has to turn or has deviated from the right path, and a radar image that codes direction and distance to target. Figure 1 depicts the visual elements of the interface when the user is pointing the mobile phone in the right direction.

When the subject completes the path and reaches

the desired destination, a visual signal is shown on the

screen to indicate that the task has finished.

We employed OpenGL ES to draw the virtual elements of our application on top of the camera's video feed.
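The choice between drawing the virtual path and drawing a turn arrow depends on whether the phone points towards the next checkpoint. One way to sketch that decision is to compare the compass heading with the bearing to the checkpoint. The bearing formula is standard, but the 20-degree tolerance, the function names and the returned labels are assumptions of this sketch; the paper does not report how the application makes this decision.

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Compass bearing (0 = north, clockwise) from point 1 to point 2, in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    return math.degrees(math.atan2(y, x)) % 360.0


def signed_offset_deg(heading_deg, bearing_deg):
    """Smallest signed angle from heading to bearing, in [-180, 180); positive means turn right."""
    return (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0


def visual_cue(heading_deg, bearing_deg, tolerance_deg=20.0):
    """Pick the cue to draw: the path when roughly aligned, otherwise a left or right arrow."""
    offset = signed_offset_deg(heading_deg, bearing_deg)
    if abs(offset) <= tolerance_deg:  # tolerance is a made-up value for illustration
        return "path"
    return "arrow_right" if offset > 0 else "arrow_left"
```

The modulo arithmetic in `signed_offset_deg` handles the wrap-around at north, so a heading of 350° and a bearing of 10° differ by 20° rather than 340°.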

3.3.2 Auditory Feedback

Auditory feedback presents navigation cues as any commercial GPS device does. In the first state, a synthetic voice informs the participant to continue in that direction: "Walk straight ahead". When the participant has to turn left or right, the synthetic voice indicates so: "Turn left" or "Turn right". When the participant has missed a checkpoint, the synthetic voice indicates so: "You missed a checkpoint and you need to go back". Finally, when the participant reaches the final point in the path, the voice utters the message: "You have reached your destination".

Each voice direction is spoken once, when a state change occurs. However, participants could ask for a repetition of the last message by selecting an option from the menu. We used the Text-To-Speech features of the Android library to generate the voice instructions.
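The rule that a message is uttered when the state changes, with repetition available on demand, can be sketched as follows. The message texts are taken from the description above; the class name `VoicePrompter`, the dictionary keys, and the `speak` callable (a stand-in for whatever text-to-speech call is used) are assumptions of this sketch.

```python
MESSAGES = {
    "on_track": "Walk straight ahead",
    "turn_left": "Turn left",
    "turn_right": "Turn right",
    "missed": "You missed a checkpoint and you need to go back",
    "arrived": "You have reached your destination",
}


class VoicePrompter:
    """Speaks a message once per state change and can repeat the last one on request."""

    def __init__(self, speak):
        self._speak = speak    # callable that receives the text to utter
        self._last_key = None  # key of the most recently spoken message

    def on_state(self, key):
        """Speak only if the state differs from the previously announced one."""
        if key != self._last_key:
            self._last_key = key
            self._speak(MESSAGES[key])

    def repeat_last(self):
        """Menu option: utter the last message again, if there is one."""
        if self._last_key is not None:
            self._speak(MESSAGES[self._last_key])
```

Passing `print` (or a list's `append` method) as `speak` is enough to try the behaviour without a speech engine.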

3.3.3 Tactile Feedback

Tactile feedback is provided by means of the vibrator of the mobile phone using the magic wand metaphor (Fröhlich et al., 2011), which requires the participant to point the mobile phone in the right direction to receive the directional information. This metaphor has been used before in wayfinding tasks with good results (Pielot et al., 2012a; Raisamo et al., 2012).

We follow a similar approach to the one proposed by (Raisamo et al., 2012), changing the vibration pattern at a checkpoint to attract the participant's attention. Our system also required participants to turn the phone, scanning the space around them to find the new direction. Our system uses the following vibration patterns: when the participant is on the right path, a repetition of 0.5 s vibration pulses separated by 1 s periods with no vibration; an approaching change in direction is represented as a sequence of 0.5 s vibration, 0.3 s with no vibration, 0.5 s vibration and 1 s with no vibration; when the destination is reached, three 0.5 s vibration pulses, separated by 0.3 s with no vibration, as a confirmation, and then the application stops producing feedback.
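The three vibration patterns can be written down as lists of (vibrate, pause) durations. The sketch below also flattens them into an alternating wait/vibrate array, the layout used by Android's vibration pattern API; whether the application built its patterns this way is an assumption, and the names `PATTERNS_MS` and `to_wait_vibrate_array` are hypothetical.

```python
# (vibrate_ms, pause_ms) pairs taken from the description in the text.
PATTERNS_MS = {
    "on_track": [(500, 1000)],                      # repeated while on the right path
    "approaching_turn": [(500, 300), (500, 1000)],  # double pulse near a checkpoint
    "arrived": [(500, 300), (500, 300), (500, 0)],  # three pulses, then feedback stops
}


def to_wait_vibrate_array(pulses, initial_wait_ms=0):
    """Flatten (vibrate, pause) pairs into [wait, vibrate, wait, vibrate, ...]."""
    flat = [initial_wait_ms]
    for on_ms, off_ms in pulses:
        flat.extend([on_ms, off_ms])
    if flat[-1] == 0:  # drop a trailing zero-length pause
        flat.pop()
    return flat


def cycle_duration_ms(pulses):
    """Total length of one pass through the pattern, in milliseconds."""
    return sum(on_ms + off_ms for on_ms, off_ms in pulses)
```

One pass of the on-track pattern lasts 1.5 s and one pass of the approaching-turn pattern lasts 2.3 s, so the two differ in rhythm as well as in pulse count.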

4 STATISTICAL ANALYSIS

To analyse the results of the questionnaires, we used the Kruskal-Wallis Rank Sum test to compare the effect of feedback modality on the response of the participants to Likert scale questions and the Wilcoxon rank sum test with continuity correction to compare the effect of gender and previous experience using a GPS on the response of the participants to Likert scale questions.

The Shapiro-Wilk test of normality and the Bartlett test of homogeneity of variances showed that our data did not fulfil ANOVA prerequisites. Therefore, we used data transformation techniques to be able to use parametric tests in the analysis of the performance measures. In this section we use the * mark to highlight statistically significant differences with p < 0.05 and the ** mark for statistically significant differences with p < 0.01.
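As an illustration of the rank-based test used for the Likert responses, the following sketch computes the tie-corrected Kruskal-Wallis H statistic in plain Python. It is not the analysis script used in the study, and the function names are ours. The p-value, which would come from a chi-square distribution with k − 1 degrees of freedom for k groups, is left out.

```python
from collections import Counter


def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H; undefined (division by zero) if all values are equal."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3.0 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1.0 - ties / (n ** 3 - n))
```

Likert data contain many ties, which is why the tie correction in the last two lines matters: without it, H is underestimated.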

4.1 Questionnaires and Interviews

When asked how much participants liked the application, 59 out of the 77 (76.62%) chose level 5 in the Likert scale; 15 (19.48%) chose level 4, and 3 (3.90%) participants chose level 3. Neither the Kruskal-Wallis Rank Sum test for Feedback modality nor the Wilcoxon rank sum test with continuity correction for Gender and Previous Experience using a GPS showed any statistically significant effect on the degree to which participants liked or disliked our application.

Regarding how easy to use the application was, 52 participants (67.53%) rated it with a 5 in the Likert scale; 9 participants (11.69%) with a 4, 11 participants (14.29%) with a 3, 4 participants (5.19%) with a 2 and only one participant (1.30%) with a 1. In this case, only the Gender factor had a statistically significant effect on the easiness level (Wilcoxon rank sum test with continuity correction W = 452.5, p = 0.02). Women rated the easiness level with a median of 4 (IQR = 2) in the Likert scale, while men rated the easiness of using the application with a median of 5 (IQR = 0).

Sun glare caused problems when reading the messages on the screen for 41 participants (53.25%). Note that even participants in groups without visual feedback had the distance-to-target and destination labels on the screen. None of the factors we analysed had a statistically significant effect on the number of participants that reported problems with sun glare. On the other hand, all the participants believed that using more than one feedback modality would improve the effectiveness of the system.

None of the participants that completed the path receiving auditory feedback (A, AT, AV or ATV groups) considered it annoying. They also reported having all the information they needed to complete the route. The frequency of auditory feedback was appropriate for 37 of the 44 participants (84.09%). However, none of the factors under analysis showed statistically significant differences. Auditory feedback usefulness was rated with the highest score by 30 of the 44 participants (68.18%); 7 participants (15.91%) chose level 4 in the Likert scale; 5 participants (11.36%) chose level 3 and only 2 participants (4.55%) rated it with a 2. The volume of auditory feedback was a problem for 6 participants, who mentioned that the voice was too low (even though the smartphone speaker was set at its maximum level). Another comment made by a participant was that listening to the auditory feedback was boring, because it was too repetitive.

Participants that completed the route receiving tactile feedback (T, AT, TV and ATV groups) mentioned that it was not annoying. However, tactile feedback was not enough to receive guiding information for 39 out of the 44 participants (88.63%). Only 5 participants (from the ATV group) mentioned that it was enough. Regarding the frequency of the tactile feedback, 39 out of the 44 participants (88.63%) considered it appropriate. The other 5 participants belonged to one of the multimodal groups (AT, TV and ATV). The perceived usefulness was lower for tactile feedback: 20 participants out of 44 (45.46%) rated it with a 1 in the Likert scale; 11 participants (25%) with a 2, 7 participants (15.91%) with a 3, 5 participants (11.36%) with a 4 and only one participant (2.27%) with the highest score, 5 in the Likert scale. This last participant belonged to the ATV group.

Comments about tactile feedback were more varied: 3 participants mentioned that the vibration frequency was too slow. On the other hand, 2 participants gave positive comments about tactile feedback, mentioning that it keeps you alert on your route. They remarked that tactile alerts combined with auditory and visual feedback made the application very useful.

Finally, 43 out of 44 participants that completed the path with visual feedback mentioned it was not annoying. The only participant that thought otherwise belonged to the TV group. Only 4 participants (9.09%) mentioned they felt they needed more information for completing the route. On the other hand, 37 participants (84.09%) reported that the frequency of the feedback was appropriate. Regarding the usefulness of visual feedback to provide the guiding information, 28 participants (63.64%) rated it with the highest score in the Likert scale; 8 participants (18.18%) rated it with a 4; 6 participants (13.64%) with a 3; one participant rated it with a 2, and one participant rated visual feedback with the lowest value of the Likert scale. Sun glare caused 37 participants (84.09%) to report trouble and discomfort reading the on-screen messages. Combining visual with auditory and tactile feedback was mentioned as a good alternative for these situations.

The easiness of following the instructions to complete the route was rated with a 5 in the Likert scale by 57 participants (74.03%). It was rated with a 4 by 10 participants (12.99%), with a 3 by 4 participants (5.19%), with a 2 by 5 participants (6.49%), and with the lowest rate by one participant only (1.30%). Participants that considered it somewhat difficult belonged to the A, V, TV and ATV groups. The participant that considered it very difficult belonged to the AV group.

4.2 Performance Results

The Multifactor ANOVA reveals that only the Feedback modality factor has a statistically significant effect on Time to complete the route (T) with a large effect size (F(6, 70) = 13.52, p = 1.0 × 10⁻³ **, partial η² = 0.609). There is no statistically significant interaction among any of the factors under analysis with respect to T.

The Tukey HSD post-hoc analysis for the Feedback modality factor and T revealed statistically significant differences between tactile feedback and the rest of the groups. Figure 3 shows the average and standard deviation of T for every feedback group.

Figure 3: Time to complete the route box plot (T). Horizontal axis: feedback combination (A, T, V, AT, AV, TV, ATV); vertical axis: time (s).

The Multifactor ANOVA did not show any statistically significant differences regarding the Time to confirm the reception of the end-of-route message. Finally, no participant missed a checkpoint, and thus, there was no data to compare the Average number of missed checkpoints.

5 DISCUSSION

Visual and auditory feedback were the most intuitive ways to receive navigation assistance for participants in our experiment. Spoken directions and the visual elements mixed with the video feed of the smartphone's camera are both promising ways to provide navigational cues to the user, especially considering that wearable devices such as Google Glass provide the necessary hardware to reduce the visibility and hearing problems reported in our experiment.

Our results align with what has been reported by previous studies (Jacob et al., 2011b; Magnusson et al., 2010; Szymczak et al., 2012): multimodal feedback improves user performance. Participants in our experiment mentioned that receiving a different vibration pattern at a checkpoint increased their awareness of the decision point, efficiently attracting their attention to the smartphone screen or the spoken directional cues. Participants mentioned that tactile and auditory feedback helped them to keep focus on the route.

On the other hand, because our design accounted for the precision of commercial GPS systems by providing feedback within a radius of 5 m around each checkpoint, none of the participants missed a checkpoint during the experiment. This highlights the importance of timing in pedestrian navigation assistance: even though participants receiving only tactile feedback required more time to complete the route, all the participants were able to complete the itinerary.

Participants mentioned that sun glare made it difficult to observe and read the on-screen messages, and that the volume of auditory feedback was not high enough (even though the volume of the smartphone was set at its maximum level). This problem highlights the importance of a careful design considering the context in which feedback is going to be used. For example, auditory feedback would not be appropriate in contexts such as a library or a museum.

6 CONCLUSIONS AND FUTURE WORK

Visual and auditory feedback produced better results, both in the subjective appreciation of the participants and in the performance measures. In our approach, the augmented view provided information similar to that of a commercial GPS, but with the advantage of showing the real environment instead of just a map, while auditory feedback provided spoken instructions about the direction to follow. Tactile feedback is the least effective way to provide directional cues, which is not surprising, as previous research found similar results when tactile feedback was compared to the feedback of traditional GPS systems (Pielot and Boll, 2010). However, according to the user experience, tactile feedback is helpful to alert the user about an approaching decision point in the route, and also as an alternative when visual or auditory feedback is not available.

We plan to take our application one step further and repeat the experiment using wearable devices, such as Google Glass and the Samsung smart watch, to evaluate the user experience and to study how multimodal feedback can be used to improve pedestrian navigation assistants.

ACKNOWLEDGEMENTS

This research has been funded by the National Council of Science and Technology of México as part of the Special Program of Science and Technology.

REFERENCES

Chittaro, L. and Burigat, S. (2005). Augmenting Audio Messages with Visual Directions in Mobile Guides: An Evaluation of Three Approaches. In Proc. of the 7th Int. Conf. on Human Computer Interaction with Mobile Devices and Services, MobileHCI, pages 107–114.

Fröhlich, P., Oulasvirta, A., Baldauf, M., and Nurminen, A. (2011). On the Move, Wirelessly Connected to the World. Com. of the ACM, 54(1):132–138.

Jacob, R., Mooney, P., Corcoran, P., and Winstanley, A. C. (2011a). Guided by Touch: Tactile Pedestrian Navigation. In Proc. of the GIS Research UK 19th Annual Conf., GISRUK, pages 205–215.

Jacob, R., Mooney, P., and Winstanley, A. C. (2011b). Guided by Touch: Tactile Pedestrian Navigation. In Proc. of the 1st Int. Workshop on Mobile Location-based Service, MLBS, pages 11–20.

Jameson, A. (2002). Usability Issues and Methods for Mobile Multimodal Systems. In Proc. of the ISCA Tutorial and Research Workshop on Multi-Modal Dialogue in Mobile Environments.

Liljedahl, M., Lindberg, S., Delsing, K., Polojärvi, M., Saloranta, T., and Alakärppä, I. (2012). Testing Two Tools for Multimodal Navigation. Adv. in Human-Computer Interaction, 2012.

Liljedahl, M. and Papworth, N. (2012). Using Sound to Enhance Users’ Experiences of Mobile Applications. In Proc. of the 7th Audio Mostly Conf.: A Conference on Interaction with Sound, AM, pages 24–31.

Magnusson, C., Molina, M., Rassmus-Gröhn, K., and Szymczak, D. (2010). Pointing for Non-visual Orientation and Navigation. In Proc. of the 6th Nordic Conf. on Human-Computer Interaction: Extending Boundaries, NordiCHI, pages 735–738.

Oulasvirta, A., Tamminen, S., Roto, V., and Kuorelahti, J. (2005). Interaction in 4-second Bursts: The Fragmented Nature of Attentional Resources in Mobile HCI. In Proc. of the SIGCHI Conf. on Human Factors in Computing Systems, CHI, pages 919–928.

Pielot, M. and Boll, S. (2010). Tactile Wayfinder: Comparison of Tactile Waypoint Navigation with Commercial Pedestrian Navigation Systems. In Proc. of the Int. Conf. on Pervasive Computing, Pervasive, pages 76–93.

Pielot, M., Henze, N., and Boll, S. (2009). Supporting Map-based Wayfinding with Tactile Cues. In Proc. of the 11th Int. Conf. on Human-Computer Interaction with Mobile Devices and Services, MobileHCI, pages 23:1–23:10.

Pielot, M., Heuten, W., Zerhusen, S., and Boll, S. (2012a). Dude, Where’s My Car?: In-situ Evaluation of a Tactile Car Finder. In Proc. of the 7th Nordic Conf. on Human-Computer Interaction: Making Sense Through Design, NordiCHI, pages 166–169.

Pielot, M., Poppinga, B., and Boll, S. (2010). PocketNavigator: Vibro-tactile Waypoint Navigation for Everyday Mobile Devices. In Proc. of the Conf. on Human-Computer Interaction with Mobile Devices and Services, MobileHCI, pages 423–426.

Pielot, M., Poppinga, B., Heuten, W., and Boll, S. (2012b). PocketNavigator: Studying Tactile Navigation Systems In-situ. In Proc. of the SIGCHI Conf. on Human Factors in Computing Systems, CHI, pages 3131–3140.

Raisamo, R., Nukarinen, T., Pystynen, J., Mäkinen, E., and Kildal, J. (2012). Orientation Inquiry: A New Haptic Interaction Technique for Non-visual Pedestrian Navigation. In Proc. of the 2012 Int. Conf. on Haptics: Perception, Devices, Mobility, and Communication - Volume Part II, EuroHaptics, pages 139–144.

Robinson, S., Jones, M., Eslambolchilar, P., Murray-Smith, R., and Lindborg, M. (2010). “I Did It My Way”: Moving Away from the Tyranny of Turn-by-turn Pedestrian Navigation. In Proc. of the 12th Int. Conf. on Human Computer Interaction with Mobile Devices and Services, MobileHCI, pages 341–344.

Rümelin, S., Rukzio, E., and Hardy, R. (2011). NaviRadar: A Novel Tactile Information Display for Pedestrian Navigation. In Proc. of the 24th Annual ACM Symposium on User Interface Software and Technology, UIST, pages 293–302.

Strachan, S., Eslambolchilar, P., Murray-Smith, R., Hughes, S., and O’Modhrain, S. (2005). GpsTunes: Controlling Navigation via Audio Feedback. In Proc. of the 7th Int. Conf. on Human Computer Interaction with Mobile Devices and Services, MobileHCI, pages 275–278.

Szymczak, D., Magnusson, C., and Rassmus-Gröhn, K. (2012). Guiding Tourists Through Haptic Interaction: Vibration Feedback in the Lund Time Machine. In Proc. of the 2012 Int. Conf. on Haptics: Perception, Devices, Mobility, and Communication - Volume Part II, EuroHaptics, pages 157–162.

Vainio, T. (2009). Exploring Multimodal Navigation Aids for Mobile Users. In Proc. of the 12th IFIP TC 13 Int. Conf. on Human-Computer Interaction: Part I, INTERACT, pages 853–865.
