An eXtended Center-Symmetric Local Binary Pattern for Background Modeling and Subtraction in Videos

Caroline Silva, Thierry Bouwmans and Carl Frélicot

Lab. Mathématiques, Images et Applications, Université de La Rochelle, 17000 La Rochelle, France

Keywords:

Local Binary Patterns, Background Modeling, Background Subtraction.

Abstract:

In this paper, we propose an eXtended Center-Symmetric Local Binary Pattern (XCS-LBP) descriptor for background modeling and subtraction in videos. By combining the strengths of the original LBP and of the similar Center-Symmetric (CS) variants, it appears to be robust to illumination changes and noise, while producing short histograms. The experiments conducted on both synthetic and real videos (from the Background Models Challenge) of outdoor urban scenes under various conditions show that the proposed XCS-LBP outperforms its direct competitors for the background subtraction task.

1 INTRODUCTION

Background subtraction (BS) is one of the main steps in many computer vision applications, such as object tracking, behavior understanding and activity recognition (Pietikäinen et al., 2011). The BS process basically consists of: a) background model initialization, b) background model maintenance and c) foreground detection. Many BS methods have been developed during the last few years (Bouwmans, 2014; Sobral and Vacavant, 2014; Shah et al., 2013), and the main resources can be found at the Background Subtraction Web Site¹.

BS has to cope with several challenging situations such as illumination changes, dynamic backgrounds, bad weather, camera jitter, noise and shadows. Several feature extraction methods have been developed to deal with these situations. Color features are the most widely used, but they present several limitations in the presence of illumination changes, shadows and camouflage. Among the local texture descriptors that have recently attracted great attention for background modeling, the Local Binary Pattern (LBP) stands out because it is simple and fast to compute. Figure 1 (top) shows how a (center) pixel is encoded by a series of bits, according to the relative gray levels of its circular neighboring pixels. LBP shows great invariance to monotonic illumination changes, does not require many parameters to be set, and has a high discriminative power. However, the

¹ https://sites.google.com/site/backgroundsubtraction/Home

Figure 1: Examples of LBP encoding. Top: 3×3 neighborhood with rows (24, 72, 68), (52, 56, 51), (57, 60, 58) and center 56; the map of positive differences gives the code 11100110. Bottom: the same neighborhood with center 59 gives the code 01000110.

original LBP descriptor in (Ojala et al., 2002) is not well suited to background modeling because of its sensitivity to noise: as Figure 1 (bottom) shows, a small change in the central value greatly affects the resulting code.
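To make the encoding of Figure 1 concrete, the following minimal Python sketch implements Eqs. (1) and (2) for P = 8 and R = 1 on a 3×3 patch. Two details are assumptions rather than facts stated in the text: the eight neighbors are read directly from the 3×3 grid (no interpolation on the circle), and they are visited clockwise starting at the bottom-right pixel, an order chosen because it reproduces the bit strings printed in Figure 1. The names `OFFSETS`, `lbp_bits` and `lbp_code` are hypothetical.

```python
# Neighbor offsets (row, col) for P = 8, R = 1, visited clockwise from the
# bottom-right pixel. This order is an assumption: it reproduces the bit
# strings printed in Figure 1, but the text does not specify it.
OFFSETS = [(1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1), (0, 1)]


def lbp_bits(patch):
    """Bits s(g_i - g_c) for i = 0..7, for the center of a 3x3 patch (Eq. 2)."""
    g_c = patch[1][1]
    return [1 if patch[1 + dr][1 + dc] - g_c >= 0 else 0 for dr, dc in OFFSETS]


def lbp_code(patch):
    """Integer LBP code of Eq. (1): neighbor i contributes s(g_i - g_c) * 2**i."""
    return sum(bit << i for i, bit in enumerate(lbp_bits(patch)))


# Figure 1 neighborhood: only the center value differs (56 vs. 59).
top = [[24, 72, 68], [52, 56, 51], [57, 60, 58]]
bottom = [[24, 72, 68], [52, 59, 51], [57, 60, 58]]

for name, patch in (("top", top), ("bottom", bottom)):
    bits = "".join(str(b) for b in lbp_bits(patch))
    print(name, bits, lbp_code(patch))  # top 11100110 103 / bottom 01000110 98
```

The printed strings list the bits from i = 0 to i = 7, so they read least-significant bit first with respect to the integer of Eq. (1). Raising the center from 56 to 59 flips two of the eight bits (bottom-right and bottom-left neighbors) and changes the integer code from 103 to 98.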

The LBP feature of an image consists of a histogram built from the codes of all the pixels within the image. LBP only exploits first-order gradient information between the center pixel and its neighbors (Xue et al., 2011), and the produced histogram can be rather long (2^P bins for P neighbors). A large number of LBP-based local texture descriptors (Richards and Jia, 2014) have been proposed so far for background modeling. Although designed to be more robust to noise or illumination changes, most of them are unfortunately either very time-consuming or produce long feature histograms.
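A minimal Python sketch of this histogram construction follows, assuming P = 8 and R = 1 with neighbors read from the 3×3 grid. The clockwise neighbor order starting at the bottom-right pixel, the skipping of border pixels, and the function names `lbp_code_at` and `lbp_histogram` are illustrative assumptions, not details from the text.

```python
# Neighbor offsets (row, col) for P = 8, R = 1 on the 3x3 grid, visited clockwise
# from the bottom-right pixel (assumed order; any fixed order yields a valid histogram).
OFFSETS = [(1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1), (0, 1)]


def lbp_code_at(image, r, c):
    """LBP code of Eq. (1) for interior pixel (r, c) of a 2-D list of gray levels."""
    g_c = image[r][c]
    return sum((1 if image[r + dr][c + dc] - g_c >= 0 else 0) << i
               for i, (dr, dc) in enumerate(OFFSETS))


def lbp_histogram(image):
    """Histogram with 2**P bins of the LBP codes of all interior pixels.

    Border pixels lack a full 3x3 neighborhood and are skipped here; this is an
    assumption, and padding the image would be another option.
    """
    hist = [0] * (2 ** len(OFFSETS))
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code_at(image, r, c)] += 1
    return hist


# A constant 4x4 image has 4 interior pixels; every difference is 0 and s(0) = 1,
# so all of them receive the code 255.
flat = [[7] * 4 for _ in range(4)]
hist = lbp_histogram(flat)
print(len(hist), hist[255])  # 256 4
```

With P = 8 the histogram always has 256 bins, whatever the image size, which is the length the text refers to.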

In this paper, we propose to extend the CS-LBP variant by Heikkilä et al. (2009) by introducing a new neighboring-pixel comparison strategy that makes the descriptor less sensitive to noisy pixels and lets it produce a short histogram, while preserving robustness to illumination changes and slightly reducing computation time compared with its direct competitors.

Silva C., Bouwmans T. and Frélicot C.

An eXtended Center-Symmetric Local Binary Pattern for Background Modeling and Subtraction in Videos.

DOI: 10.5220/0005266303950402

In Proceedings of the 10th International Conference on Computer Vision Theory and Applications (VISAPP-2015), pages 395-402

ISBN: 978-989-758-089-5

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

The rest of this paper is organized as follows. Section 2 provides a fairly exhaustive overview of LBP-based descriptors. The new descriptor that we propose is described in Section 3. Comparative results obtained on both synthetic and real videos are given in Section 4. Finally, concluding remarks and some perspectives are drawn in Section 5.

2 RELATED WORK

One of the first LBP-based descriptors for background modeling can be found in (Heikkilä and Pietikäinen, 2006). It improves the original LBP in image areas where the gray values of the neighboring pixels are very close to that of the center pixel, e.g. sky, grass, etc.

Shimada and Taniguchi (2009) propose a Spatial-Temporal Local Binary Pattern (STLBP), which is robust to short-term illumination changes thanks to temporal information. Two variants of LBP, called εLBP and Adaptive εLBP, are developed in (Wang and Pan, 2010; Wang et al., 2010). They are fast to compute and less sensitive to illumination variations and to color similarity between foreground and background. Heikkilä et al. (2009) propose the Center-Symmetric Local Binary Pattern (CS-LBP) descriptor, which generates more compact binary patterns by working only with the center-symmetric pairs of pixels. In (Xue et al., 2010), a Spatial extended Center-Symmetric LBP (SCS-LBP) is presented. It improves CS-LBP by better capturing gradient information, which makes it more discriminative. The authors explain that SCS-LBP produces a relatively short feature histogram with low computational complexity. Liao et al. (2010) propose the Scale Invariant Local Ternary Pattern (SILTP), which is better suited to noisy images. The Center-Symmetric Local Derivative Pattern (CS-LDP) descriptor is described in (Xue et al., 2011). It extracts more detailed local information while keeping the same feature length as CS-LBP, but with a slightly lower precision than the original LBP. Zhou et al. (2011) develop a Spatial-Color Binary Pattern (SCBP) that fuses color and texture information; SCBP outperforms LBP and SCS-LBP for background subtraction tasks. In (Lee et al., 2011), the authors propose an Opponent Color Local Binary Pattern (OCLBP) that uses color and texture information. OCLBP extracts several pieces of information per pixel, but the length of its histogram makes it impractical for some applications. A uniform LBP pattern with a new thresholding method can be found in (Yuan et al., 2012); it appears to be tolerant to interference from sampling noise. Yin et al. (2013) propose a Stereo LBP on Appearance and Motion (SLBP-AM), which uses information from a set of frames in three different planes. This texture descriptor is not only robust to slight noise, but also adapts quickly to large-scale and sudden light changes. A Local Binary Similarity Patterns (LBSP) descriptor is developed in (Bilodeau et al., 2013). Based on absolute differences, it is applied to small areas and computed both inside one image and between two images, which allows LBSP to capture both texture and intensity changes. Noh and Jeon (2012) propose to improve SILTP (Liao et al., 2010) with a codebook method; the derived descriptor gains robustness when segmenting moving objects from dynamic and complex backgrounds. Wu et al. (2014) extend SILTP by introducing a novel Center-Symmetric Scale Invariant Local Ternary Pattern (CS-SILTP) descriptor, which explores spatial and temporal relationships within the neighborhood. LBP descriptors have a significant drawback: they ignore intensity information. As a result, two pixels whose intensity values differ drastically may still have identical LBP values, which leads to wrong comparison results. To overcome this drawback, Vishnyakov et al. (2014) propose an intensity LBP (iLBP) to build a fast background model; it is defined as a collection of LBP descriptor values and intensity values of the image. The main characteristics of all the LBP variants reviewed above, including those we will compare our new descriptor to, are summarized in Table 1.

3 THE XCS-LBP DESCRIPTOR

The original LBP descriptor introduced by Ojala et al.

(2002) has proved to be a powerful local image de-

scriptor. It labels the pixels of an image block by

thresholding the neighborhood of each pixel with the

center value and considering the result as a binary

number. The LBP encodes local primitives such as

curved edges, spots, flat areas, etc. In the context of

BS, both the current image and the image represent-

ing the background model are encoded such that they

become a texture-based representation of the scene.

Consider a pixel at a given location as the center pixel $c = (x_c, y_c)$ of a local neighborhood composed of P equally spaced pixels on a circle of radius R. The LBP operator applied to c can be expressed as:

$$\mathrm{LBP}_{P,R}(c) = \sum_{i=0}^{P-1} s(g_i - g_c)\, 2^i \tag{1}$$


Table 1: Comparison of LBP and variants. Columns: Descriptor; Robust to noise; Robust to illumination changes; Uses color information; Uses temporal information; Histogram size with 8 neighbors.

Original LBP (Ojala et al., 2002) • 256

Modified LBP (Heikkilä and Pietikäinen, 2006) • • 256

CS-LBP (Heikkilä et al., 2009) • 16

STLBP (Shimada and Taniguchi, 2009) • • 256

εLBP (Wang and Pan, 2010) • 256

Adaptive εLBP (Wang et al., 2010) • 256

SCS-LBP (Xue et al., 2010) • • 16

SILTP (Liao et al., 2010) • 256

CS-LDP (Xue et al., 2011) • 16

SCBP (Zhou et al., 2011) • 64

OCLBP (Lee et al., 2011) • 1536

Uniform LBP (Yuan et al., 2012) • 59

SALBP (Noh and Jeon, 2012) • 128

SLBP-AM (Yin et al., 2013) • • 256

LBSP (Bilodeau et al., 2013) • • 256

iLBP (Vishnyakov et al., 2014) • 256

CS-SILTP (Wu et al., 2014) • • 16

XCS-LBP (in this paper) • • 16

where $g_c$ is the gray value of the center pixel c, $g_i$ is the gray value of the i-th neighboring pixel, and s is a thresholding function defined as:

$$s(x) = \begin{cases} 1 & \text{if } x \ge 0 \\ 0 & \text{otherwise.} \end{cases} \tag{2}$$

From (1), the largest possible code is $\sum_{i=0}^{P-1} 2^i = 2^P - 1$, so that the length of the resulting histogram (including bin 0) is $2^P$. The underlying idea of CS-LBP (Heikkilä et al., 2009) is to compare the gray levels of pairs of pixels in center-symmetric directions instead of comparing the central pixel with its neighbors.

Assuming an even number P of neighboring pixels, the CS-LBP operator is given by:

$$\mathrm{CS\text{-}LBP}_{P,R}(c) = \sum_{i=0}^{(P/2)-1} s(g_i - g_{i+(P/2)})\, 2^i \tag{3}$$

where $g_i$ and $g_{i+(P/2)}$ are the gray values of center-symmetric pairs of pixels, and s is the thresholding function defined as:

$$s(x) = \begin{cases} 1 & \text{if } x > T \\ 0 & \text{otherwise} \end{cases} \tag{4}$$

where T is a user-defined threshold. Since the gray levels are normalized to [0, 1], the authors recommend using a small value; we set it to 0.01 in the experiments presented in Section 4. By construction, the length of the histogram resulting from the CS-LBP descriptor drops to $1 + \sum_{i=0}^{(P/2)-1} 2^i = 2^{P/2}$. For BS, CS-LBP encodes the two images to be compared as texture-based images with a coarser quantization, which slightly favors robustness.
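A minimal Python sketch of Eqs. (3) and (4) follows, applied to the Figure 1 neighborhood with T = 0.01 on gray levels normalized to [0, 1], as stated above. The 3×3 sampling without interpolation, the clockwise neighbor order starting at the bottom-right pixel (which makes neighbor i + P/2 the center-symmetric partner of neighbor i), and the helper names `cs_lbp_code` and `normalize` are assumptions made for illustration.

```python
# Neighbor offsets (row, col) for P = 8, R = 1, visited clockwise from the
# bottom-right pixel (assumed order). With this order, neighbor i + P/2 is the
# center-symmetric partner of neighbor i.
OFFSETS = [(1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1), (0, 1)]


def cs_lbp_code(patch, T=0.01):
    """CS-LBP code of Eqs. (3)-(4) for the center of a 3x3 patch in [0, 1].

    The center value patch[1][1] is never read.
    """
    g = [patch[1 + dr][1 + dc] for dr, dc in OFFSETS]
    half = len(g) // 2  # P/2 center-symmetric pairs, hence 2**(P/2) possible codes
    return sum((1 if g[i] - g[i + half] > T else 0) << i for i in range(half))


def normalize(patch):
    """Map 8-bit gray levels to [0, 1]."""
    return [[v / 255 for v in row] for row in patch]


# Figure 1 neighborhood with centers 56 and 59.
top = normalize([[24, 72, 68], [52, 56, 51], [57, 60, 58]])
bottom = normalize([[24, 72, 68], [52, 59, 51], [57, 60, 58]])
print(cs_lbp_code(top), cs_lbp_code(bottom))  # 1 1
print(2 ** (len(OFFSETS) // 2))  # 16 histogram bins for P = 8
```

Both centers yield the same code because Eq. (3) never uses $g_c$; the threshold T also discards the one-gray-level difference between the left (52) and right (51) neighbors.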

We propose to extend the CS-LBP operator by comparing the gray values of pairs of center-symmetric pixels, so that the produced histogram is short as well, while also taking the central pixel into account. This combination makes the resulting descriptor less sensitive to noise in the BS application. The new LBP variant, called XCS-LBP (eXtended CS-LBP), is expressed as:

$$\mathrm{XCS\text{-}LBP}_{P,R}(c) = \sum_{i=0}^{(P/2)-1} s\big(g_1(i,c) + g_2(i,c)\big)\, 2^i \tag{5}$$

where the threshold function s, which is used to determine the types of local pattern transition, is defined as a characteristic function:

$$s(x_1 + x_2) = \begin{cases} 1 & \text{if } (x_1 + x_2) \ge 0 \\ 0 & \text{otherwise,} \end{cases} \tag{6}$$

and where $g_1(i,c)$ and $g_2(i,c)$ are defined by:

$$\begin{aligned} g_1(i,c) &= (g_i - g_{i+(P/2)}) + g_c \\ g_2(i,c) &= (g_i - g_c)\,(g_{i+(P/2)} - g_c) \end{aligned} \tag{7}$$

with the same notation conventions as in Equations (1) and (3). It is worth noting that, contrary to CS-LBP, the threshold function does not need a user-defined threshold value.
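The following minimal Python sketch evaluates Eqs. (5) to (7) on the Figure 1 neighborhood. It assumes 3×3 sampling without interpolation, a clockwise neighbor order starting at the bottom-right pixel (so that neighbor i + P/2 is opposite neighbor i), and raw 8-bit gray values. The last point is a labeled assumption: the text does not state the gray-level scale used for XCS-LBP, and since $g_c$ enters $g_1$ additively and $g_2$ multiplicatively, the scale can affect the resulting bits. The name `xcs_lbp_code` is hypothetical.

```python
# Neighbor offsets (row, col) for P = 8, R = 1, visited clockwise from the
# bottom-right pixel (assumed order; neighbor i + P/2 is opposite neighbor i).
OFFSETS = [(1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1), (0, 1)]


def xcs_lbp_code(patch):
    """XCS-LBP code of Eqs. (5)-(7) for the center of a 3x3 patch of gray levels."""
    g_c = patch[1][1]
    g = [patch[1 + dr][1 + dc] for dr, dc in OFFSETS]
    half = len(g) // 2
    code = 0
    for i in range(half):
        g_i, g_j = g[i], g[i + half]  # center-symmetric pair (i, i + P/2)
        g1 = (g_i - g_j) + g_c  # Eq. (7), first term
        g2 = (g_i - g_c) * (g_j - g_c)  # Eq. (7), second term
        if g1 + g2 >= 0:  # Eq. (6): no user-defined threshold
            code |= 1 << i
    return code


# Figure 1 neighborhood (raw 8-bit values) with centers 56 and 59.
top = [[24, 72, 68], [52, 56, 51], [57, 60, 58]]
bottom = [[24, 72, 68], [52, 59, 51], [57, 60, 58]]
print(xcs_lbp_code(top), xcs_lbp_code(bottom))  # 15 15
```

For this particular patch both center values give the code 15, whereas the LBP code changes; a single example only illustrates the mechanism and is not evidence of robustness in general. A bit can be 0, e.g. for a dark center whose first neighbor is darker than its partner: the patch `[[10, 0, 0], [0, 0, 0], [0, 0, 0]]` gives 14.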

The computation of the original LBP for a neigh-

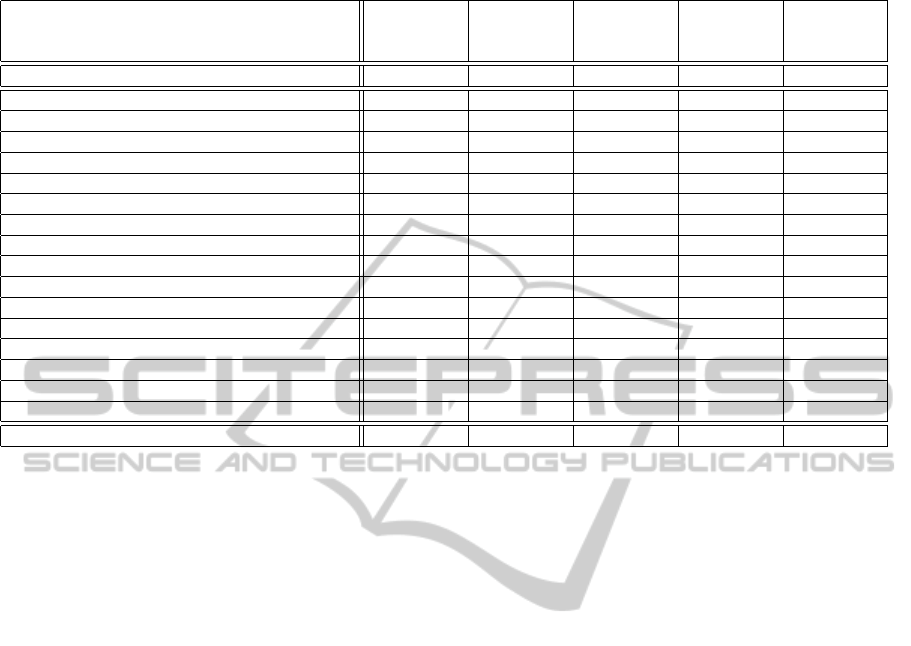

borhood of size P = 8 is illustrated in Figure 2 and

the computation of the proposed XCS-LBP is shown

AneXtendedCenter-SymmetricLocalBinaryPatternforBackgroundModelingandSubtractioninVideos

397

Figure 3: The XCS-LBP descriptor.

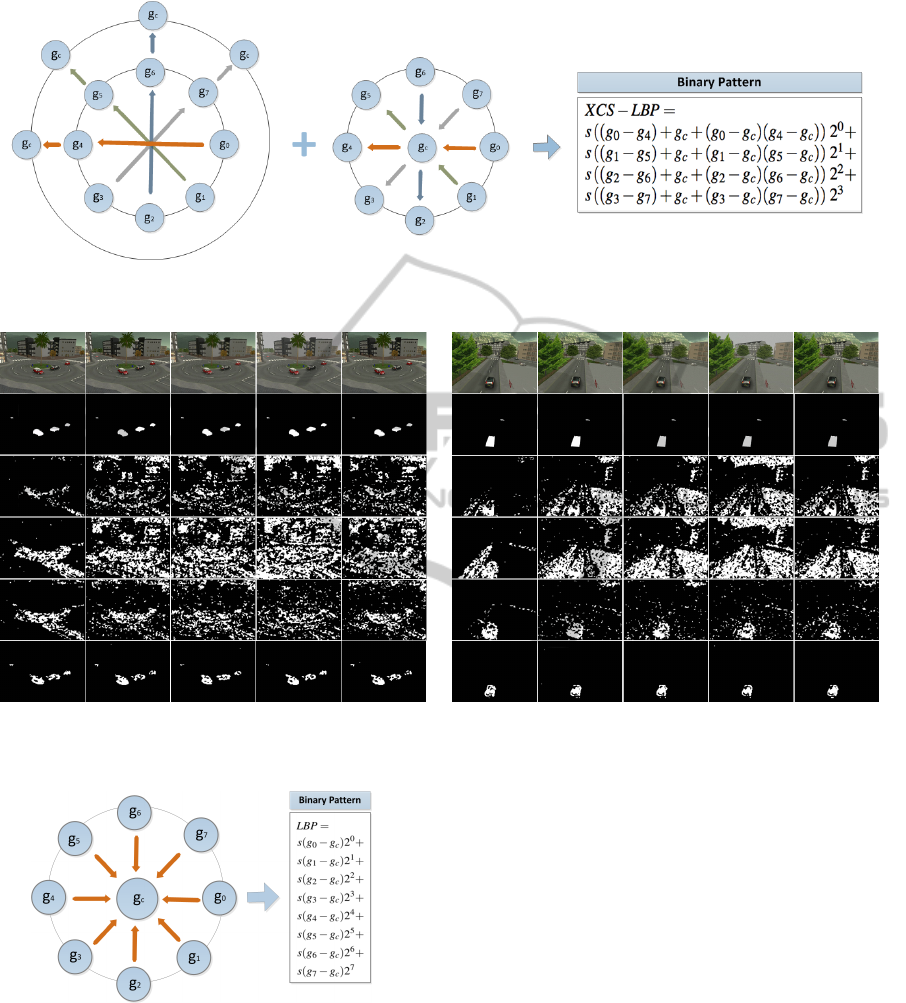

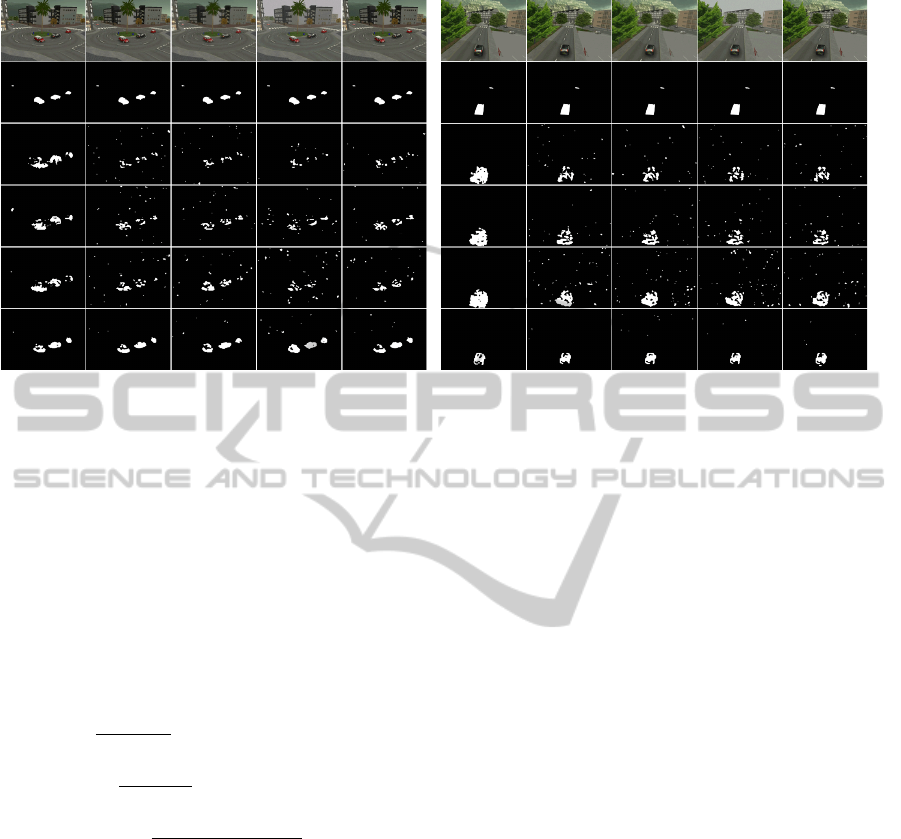

Rotary (frame #1140) – scenes 122, 222, 322, 422 and 522 Street (frame #301) – scenes 112, 212, 312, 412 and 512

(a)

(b)

(c)

(d)

(e)

(f)

Figure 4: Background subtraction results using the ABL method on synthetic scenes – (a) original frame, (b) ground truth, (c)

LBP, (d) CS-LBP, (e) CS-LDP and (f) proposed XCS-LBP.

Figure 2: The LBP descriptor.

in Figure 3 in order to make the comparison more un-

derstandable for the reader. Note the respective code

lengths of 8 and 4 that lead to respective image com-

pressions.

The proposed XCS-LBP produces a shorter histogram than LBP, as short as that of CS-LBP, but it extracts more image details than CS-LBP because (i) it takes into account the gray value of the central pixel, and (ii) it relies on a new strategy for comparing neighboring pixels. Since it is also more robust to noisy images than both LBP and CS-LBP, the proposed descriptor appears to be more efficient for background modeling and subtraction.

4 EXPERIMENTAL RESULTS

Several experiments were conducted to illustrate both

the qualitative and quantitative performances of the

proposed descriptor XCS-LBP. We use datasets from

the BMC (Background Models Challenge) which

comprises synthetic and real videos of outdoor situ-

ations (urban scenes) acquired with a static camera,

under different weather variations such as: wind, sun

or rain (Vacavant et al., 2012). We compare XCS-

LBP with three other texture descriptors among the

reviewed ones, namely:


Table 2: Performance of the different descriptors on synthetic videos of the BMC using the ABL method.

| Scene | Descriptor | Recall | Precision | F-score |
|---|---|---|---|---|
| Rotary 122 | LBP | 0.682 | 0.564 | 0.618 |
| | CS-LBP | 0.832 | 0.520 | 0.640 |
| | CS-LDP | 0.809 | 0.523 | 0.635 |
| | XCS-LBP | 0.850 | 0.784 | 0.816 |
| Rotary 222 | LBP | 0.611 | 0.505 | 0.553 |
| | CS-LBP | 0.673 | 0.504 | 0.577 |
| | CS-LDP | 0.753 | 0.510 | 0.608 |
| | XCS-LBP | 0.852 | 0.782 | 0.815 |
| Rotary 322 | LBP | 0.603 | 0.505 | 0.550 |
| | CS-LBP | 0.647 | 0.504 | 0.566 |
| | CS-LDP | 0.733 | 0.507 | 0.600 |
| | XCS-LBP | 0.829 | 0.793 | 0.810 |
| Rotary 422 | LBP | 0.573 | 0.502 | 0.535 |
| | CS-LBP | 0.609 | 0.503 | 0.550 |
| | CS-LDP | 0.733 | 0.508 | 0.600 |
| | XCS-LBP | 0.751 | 0.780 | 0.765 |
| Rotary 522 | LBP | 0.610 | 0.505 | 0.553 |
| | CS-LBP | 0.663 | 0.504 | 0.573 |
| | CS-LDP | 0.745 | 0.509 | 0.605 |
| | XCS-LBP | 0.852 | 0.732 | 0.787 |
| Street 112 | LBP | 0.702 | 0.530 | 0.604 |
| | CS-LBP | 0.839 | 0.512 | 0.636 |
| | CS-LDP | 0.826 | 0.525 | 0.642 |
| | XCS-LBP | 0.803 | 0.793 | 0.798 |
| Street 212 | LBP | 0.636 | 0.504 | 0.562 |
| | CS-LBP | 0.716 | 0.503 | 0.591 |
| | CS-LDP | 0.798 | 0.513 | 0.624 |
| | XCS-LBP | 0.808 | 0.790 | 0.799 |
| Street 312 | LBP | 0.627 | 0.504 | 0.558 |
| | CS-LBP | 0.699 | 0.503 | 0.585 |
| | CS-LDP | 0.801 | 0.511 | 0.624 |
| | XCS-LBP | 0.800 | 0.796 | 0.798 |
| Street 412 | LBP | 0.580 | 0.501 | 0.558 |
| | CS-LBP | 0.599 | 0.501 | 0.546 |
| | CS-LDP | 0.754 | 0.507 | 0.607 |
| | XCS-LBP | 0.748 | 0.781 | 0.764 |
| Street 512 | LBP | 0.628 | 0.503 | 0.559 |
| | CS-LBP | 0.677 | 0.503 | 0.577 |
| | CS-LDP | 0.771 | 0.508 | 0.612 |
| | XCS-LBP | 0.800 | 0.575 | 0.669 |
| Average scores | LBP | 0.625 | 0.512 | 0.565 |
| | CS-LBP | 0.695 | 0.506 | 0.584 |
| | CS-LDP | 0.772 | 0.512 | 0.616 |
| | XCS-LBP | 0.809 | 0.761 | 0.782 |

• original LBP (Ojala et al., 2002),

• CS-LBP (Heikkilä et al., 2009) and

• CS-LDP (Xue et al., 2011).

We chose the last two descriptors for the sake of a fair comparison. Indeed, among the descriptors relying on the same construction principle, i.e. Center-Symmetric (CS), they are the only ones that use neither color nor temporal information (see Table 1). For all descriptors, the neighborhood size is empirically set to P = 8 and R = 1, and we evaluate the performance

Table 3: Performance of the different descriptors on synthetic videos of the BMC using the GMM method.

| Scene | Descriptor | Recall | Precision | F-score |
|---|---|---|---|---|
| Rotary 122 | LBP | 0.817 | 0.701 | 0.755 |
| | CS-LBP | 0.830 | 0.705 | 0.763 |
| | CS-LDP | 0.819 | 0.677 | 0.741 |
| | XCS-LBP | 0.831 | 0.800 | 0.815 |
| Rotary 222 | LBP | 0.636 | 0.653 | 0.644 |
| | CS-LBP | 0.741 | 0.687 | 0.713 |
| | CS-LDP | 0.651 | 0.616 | 0.633 |
| | XCS-LBP | 0.825 | 0.794 | 0.809 |
| Rotary 322 | LBP | 0.661 | 0.646 | 0.653 |
| | CS-LBP | 0.741 | 0.656 | 0.696 |
| | CS-LDP | 0.674 | 0.613 | 0.642 |
| | XCS-LBP | 0.821 | 0.767 | 0.793 |
| Rotary 422 | LBP | 0.611 | 0.585 | 0.598 |
| | CS-LBP | 0.673 | 0.575 | 0.620 |
| | CS-LDP | 0.611 | 0.548 | 0.578 |
| | XCS-LBP | 0.748 | 0.702 | 0.724 |
| Rotary 522 | LBP | 0.636 | 0.627 | 0.631 |
| | CS-LBP | 0.743 | 0.672 | 0.706 |
| | CS-LDP | 0.605 | 0.650 | 0.627 |
| | XCS-LBP | 0.825 | 0.760 | 0.791 |
| Street 112 | LBP | 0.940 | 0.674 | 0.785 |
| | CS-LBP | 0.924 | 0.675 | 0.780 |
| | CS-LDP | 0.938 | 0.656 | 0.772 |
| | XCS-LBP | 0.844 | 0.755 | 0.808 |
| Street 212 | LBP | 0.676 | 0.642 | 0.659 |
| | CS-LBP | 0.752 | 0.658 | 0.702 |
| | CS-LDP | 0.694 | 0.577 | 0.630 |
| | XCS-LBP | 0.833 | 0.760 | 0.795 |
| Street 312 | LBP | 0.684 | 0.633 | 0.657 |
| | CS-LBP | 0.742 | 0.627 | 0.680 |
| | CS-LDP | 0.729 | 0.581 | 0.647 |
| | XCS-LBP | 0.821 | 0.713 | 0.763 |
| Street 412 | LBP | 0.619 | 0.566 | 0.591 |
| | CS-LBP | 0.705 | 0.567 | 0.628 |
| | CS-LDP | 0.659 | 0.539 | 0.593 |
| | XCS-LBP | 0.751 | 0.619 | 0.679 |
| Street 512 | LBP | 0.662 | 0.566 | 0.610 |
| | CS-LBP | 0.727 | 0.568 | 0.638 |
| | CS-LDP | 0.689 | 0.551 | 0.612 |
| | XCS-LBP | 0.828 | 0.629 | 0.715 |
| Average scores | LBP | 0.694 | 0.629 | 0.658 |
| | CS-LBP | 0.758 | 0.639 | 0.693 |
| | CS-LDP | 0.707 | 0.601 | 0.648 |
| | XCS-LBP | 0.813 | 0.730 | 0.769 |

with two popular background subtraction methods,

see (Bouwmans, 2014):

• Adaptive Background Learning (ABL) and

• Gaussian Mixture Models (GMM).

First, we present results of background subtraction

on individual frames of five different scenes from two

video sequences: Rotary (frame #1140) and Street

(frame #301). Figures 4 and 5 show the foreground

detection results using the ABL and the GMM meth-

ods, respectively. Our descriptor clearly appears to be

less sensitive to the background subtraction method,


Rotary (frame #1140): scenes 122, 222, 322, 422 and 522. Street (frame #301): scenes 112, 212, 312, 412 and 512.

Figure 5: Background subtraction results using the GMM method on synthetic scenes: (a) original frame, (b) ground truth, (c) LBP, (d) CS-LBP, (e) CS-LDP and (f) proposed XCS-LBP.

whereas the three others are nearly useless for detecting the moving objects with the ABL method unless a strong post-processing procedure is applied.

Next, we give quantitative results on the same data. We use three classical measures based on the numbers of true positive (TP) pixels (correctly detected foreground pixels), false positive (FP) pixels (background pixels detected as foreground), false negative (FN) pixels (foreground pixels detected as background), and true negative (TN) pixels (correctly detected background pixels):

• $\text{Recall} = \dfrac{TP}{TP + FN}$,

• $\text{Precision} = \dfrac{TP}{TP + FP}$, and

• $\text{F-score} = 2 \times \dfrac{\text{Recall} \times \text{Precision}}{\text{Recall} + \text{Precision}}$.
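The three measures can be computed as in the following minimal Python sketch. It assumes that a foreground mask and its ground truth are given as flat sequences of 0/1 labels (1 = foreground); the function names are illustrative, and zero denominators are not handled.

```python
def pixel_counts(mask, truth):
    """TP, FP and FN counts of a binary foreground mask against the ground truth.

    Both arguments are equal-length flat sequences of 0/1 labels (1 = foreground).
    """
    pairs = list(zip(mask, truth))
    tp = sum(1 for m, t in pairs if m and t)
    fp = sum(1 for m, t in pairs if m and not t)
    fn = sum(1 for m, t in pairs if not m and t)
    return tp, fp, fn


def f_score(recall, precision):
    """Harmonic mean of Recall and Precision."""
    return 2 * recall * precision / (recall + precision)


def scores(tp, fp, fn):
    """Recall, Precision and F-score from pixel counts (undefined if a denominator is 0)."""
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    return recall, precision, f_score(recall, precision)


truth = [1, 1, 1, 1, 0, 0, 0, 0]
mask = [1, 1, 1, 0, 1, 0, 0, 0]
print(scores(*pixel_counts(mask, truth)))  # (0.75, 0.75, 0.75)

# Consistency check with Table 2 (Rotary 122, XCS-LBP): Recall 0.850, Precision 0.784.
print(round(f_score(0.850, 0.784), 3))  # 0.816
```

True negatives do not enter any of the three measures, which is why they are not counted here.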

Tables 2 and 3 show the scores of the different descriptors obtained on the entire Rotary and Street scenes when using the ABL and the GMM methods, respectively. Best scores are in bold. The proposed XCS-LBP gives the highest value for each score on almost all scenes, except for scenes Street-112, Street-312 and Street-412, for which CS-LBP and CS-LDP achieve the best Recall using ABL, and scene Street-112, for which LBP gives the best Recall using GMM. Note that both CS-LBP and CS-LDP give lower scores (Precision and F-score) than LBP for some scenes, whereas XCS-LBP always outperforms the others on average, as shown by the average scores reported at the bottom of each table.

Finally, we evaluate the proposed descriptor on nine long-duration (about one hour) real outdoor video scenes from the BMC. Each video sequence shows different challenging real-world situations: moving trees, cast shadows, a continuous flow of cars near the surveillance zone, general climatic conditions (sunny, rainy and snowy), fast light changes and the presence of big objects. The scores obtained using the ABL and the GMM methods are given in Tables 4 and 5, respectively. With the simple ABL method, which severely degrades the other descriptors, our descriptor once again achieves the best F-score on almost all scenes (eight of the nine videos). With GMM, the gaps are narrower and XCS-LBP achieves the best F-score on four of the nine videos. Nevertheless, the average scores reported at the bottom of each table show that XCS-LBP outperforms the original LBP and both similarly constructed descriptors, CS-LBP and CS-LDP, the latter performing worse than LBP with the GMM method. We use Matlab R2013a on a MacBook Pro (OS X 10.9.4) equipped with a 2.2 GHz Intel Core i7 and 8 GB of 1333 MHz DDR3 memory.

We collected the elapsed CPU times needed to segment the foregrounds using the ABL and the GMM methods, averaged over the nine real videos of the BMC. Taking the (fastest) LBP descriptor as the reference, each time is divided by the corresponding LBP time. Table 6 reports the resulting ratios for the compared CS descriptors. XCS-LBP shows slightly better time performance than both CS-LBP and CS-LDP.

5 CONCLUSION

In this paper, a new texture descriptor for background

modeling is proposed. It combines the strengths


Table 4: Performance of the different descriptors on real-world videos of the BMC using the ABL method.

| Video | Descriptor | Recall | Precision | F-score |
|---|---|---|---|---|
| Boring parking, active bkbg | LBP | 0.555 | 0.512 | 0.533 |
| | CS-LBP | 0.663 | 0.539 | 0.595 |
| | CS-LDP | 0.712 | 0.556 | 0.624 |
| | XCS-LBP | 0.673 | 0.628 | 0.650 |
| Big trucks | LBP | 0.456 | 0.490 | 0.473 |
| | CS-LBP | 0.664 | 0.583 | 0.621 |
| | CS-LDP | 0.675 | 0.673 | 0.674 |
| | XCS-LBP | 0.623 | 0.788 | 0.696 |
| Wandering students | LBP | 0.500 | 0.500 | 0.500 |
| | CS-LBP | 0.632 | 0.525 | 0.573 |
| | CS-LDP | 0.691 | 0.566 | 0.622 |
| | XCS-LBP | 0.854 | 0.714 | 0.778 |
| Rabbit in the night | LBP | 0.562 | 0.515 | 0.537 |
| | CS-LBP | 0.657 | 0.515 | 0.577 |
| | CS-LDP | 0.742 | 0.561 | 0.639 |
| | XCS-LBP | 0.818 | 0.706 | 0.758 |
| Snowy Christmas | LBP | 0.568 | 0.516 | 0.541 |
| | CS-LBP | 0.640 | 0.508 | 0.567 |
| | CS-LDP | 0.684 | 0.513 | 0.586 |
| | XCS-LBP | 0.719 | 0.557 | 0.628 |
| Beware of the trains | LBP | 0.542 | 0.511 | 0.526 |
| | CS-LBP | 0.608 | 0.556 | 0.581 |
| | CS-LDP | 0.711 | 0.618 | 0.662 |
| | XCS-LBP | 0.780 | 0.674 | 0.723 |
| Train in the tunnel | LBP | 0.524 | 0.505 | 0.514 |
| | CS-LBP | 0.636 | 0.640 | 0.638 |
| | CS-LDP | 0.668 | 0.659 | 0.663 |
| | XCS-LBP | 0.655 | 0.688 | 0.672 |
| Traffic during windy day | LBP | 0.491 | 0.497 | 0.494 |
| | CS-LBP | 0.597 | 0.528 | 0.560 |
| | CS-LDP | 0.589 | 0.515 | 0.550 |
| | XCS-LBP | 0.572 | 0.529 | 0.550 |
| One rainy hour | LBP | 0.536 | 0.508 | 0.521 |
| | CS-LBP | 0.563 | 0.504 | 0.532 |
| | CS-LDP | 0.658 | 0.520 | 0.581 |
| | XCS-LBP | 0.694 | 0.649 | 0.671 |
| Average scores | LBP | 0.526 | 0.506 | 0.515 |
| | CS-LBP | 0.629 | 0.544 | 0.583 |
| | CS-LDP | 0.681 | 0.576 | 0.558 |
| | XCS-LBP | 0.710 | 0.659 | 0.681 |

of the original Local Binary Pattern (LBP) and the Center-Symmetric (CS) LBPs. Thanks to its CS construction, the new variant XCS-LBP (eXtended CS-LBP) produces a shorter histogram than LBP. Like LBP and CS-LBP, and unlike CS-LDP, it is tolerant to illumination changes; like CS-LDP, and unlike LBP and CS-LBP, it is robust to noise. We compared XCS-LBP to the original LBP and to its two direct competitors on both synthetic and real videos of the Background Models Challenge (BMC) using two popular background subtraction methods. The experimental results show that the proposed descriptor qualitatively and quantitatively outperforms the mentioned descriptors, making it a serious candidate for the background subtraction task in computer vision applications.

Table 5: Performance of the different descriptors on real-world videos of the BMC using the GMM method.

| Video | Descriptor | Recall | Precision | F-score |
|---|---|---|---|---|
| Boring parking, active bkbg | LBP | 0.684 | 0.587 | 0.632 |
| | CS-LBP | 0.716 | 0.593 | 0.649 |
| | CS-LDP | 0.674 | 0.579 | 0.623 |
| | XCS-LBP | 0.680 | 0.607 | 0.641 |
| Big trucks | LBP | 0.695 | 0.778 | 0.734 |
| | CS-LBP | 0.698 | 0.773 | 0.733 |
| | CS-LDP | 0.649 | 0.758 | 0.699 |
| | XCS-LBP | 0.630 | 0.792 | 0.702 |
| Wandering students | LBP | 0.704 | 0.667 | 0.685 |
| | CS-LBP | 0.700 | 0.640 | 0.668 |
| | CS-LDP | 0.654 | 0.634 | 0.643 |
| | XCS-LBP | 0.826 | 0.742 | 0.782 |
| Rabbit in the night | LBP | 0.767 | 0.659 | 0.709 |
| | CS-LBP | 0.826 | 0.626 | 0.712 |
| | CS-LDP | 0.706 | 0.619 | 0.659 |
| | XCS-LBP | 0.805 | 0.684 | 0.740 |
| Snowy Christmas | LBP | 0.750 | 0.519 | 0.614 |
| | CS-LBP | 0.734 | 0.516 | 0.606 |
| | CS-LDP | 0.625 | 0.510 | 0.562 |
| | XCS-LBP | 0.726 | 0.538 | 0.618 |
| Beware of the trains | LBP | 0.657 | 0.685 | 0.671 |
| | CS-LBP | 0.699 | 0.664 | 0.681 |
| | CS-LDP | 0.641 | 0.642 | 0.642 |
| | XCS-LBP | 0.759 | 0.731 | 0.744 |
| Train in the tunnel | LBP | 0.724 | 0.711 | 0.717 |
| | CS-LBP | 0.710 | 0.675 | 0.692 |
| | CS-LDP | 0.679 | 0.697 | 0.688 |
| | XCS-LBP | 0.695 | 0.680 | 0.687 |
| Traffic during windy day | LBP | 0.523 | 0.509 | 0.516 |
| | CS-LBP | 0.553 | 0.520 | 0.536 |
| | CS-LDP | 0.527 | 0.510 | 0.518 |
| | XCS-LBP | 0.532 | 0.518 | 0.525 |
| One rainy hour | LBP | 0.867 | 0.574 | 0.691 |
| | CS-LBP | 0.774 | 0.589 | 0.669 |
| | CS-LDP | 0.797 | 0.556 | 0.655 |
| | XCS-LBP | 0.761 | 0.628 | 0.688 |
| Average scores | LBP | 0.708 | 0.632 | 0.663 |
| | CS-LBP | 0.712 | 0.622 | 0.661 |
| | CS-LDP | 0.661 | 0.612 | 0.632 |
| | XCS-LBP | 0.713 | 0.658 | 0.681 |

Table 6: Elapsed CPU times (averaged over the nine real-world videos of the BMC) relative to LBP times.

| Method | CS-LBP | CS-LDP | XCS-LBP |
|---|---|---|---|
| ABL | 1.10 | 1.12 | 1.09 |
| GMM | 1.06 | 1.07 | 1.05 |

Future work will explore how to extend the proposed descriptor to include temporal relationships between neighboring pixels for dynamic texture classification or human action recognition.

REFERENCES

Bilodeau, G.-A., Jodoin, J.-P., and Saunier, N. (2013).

Change detection in feature space using local binary


similarity patterns. In Int. Conf. on Computer and

Robot Vision, pages 106–112.

Bouwmans, T. (2014). Traditional and recent approaches

in background modeling for foreground detection: An

overview. In Computer Science Review, pages 31–66.

Heikkilä, M. and Pietikäinen, M. (2006). A texture-based method for modeling the background and detecting moving objects. IEEE Trans. on Pattern Analysis and Machine Intelligence, 28:657–662.

Heikkilä, M., Pietikäinen, M., and Schmid, C. (2009). Description of interest regions with local binary patterns. Pattern Recognition, 42:425–436.

Lee, Y., Jung, J., and Kweon, I.-S. (2011). Hierarchical on-

line boosting based background subtraction. In Korea-

Japan Joint Workshop on Frontiers of Computer Vi-

sion (FCV), pages 1–5.

Liao, S., Zhao, G., Kellokumpu, V., Pietikäinen, M., and Li,

S. (2010). Modeling pixel process with scale invariant

local patterns for background subtraction in complex

scenes. In IEEE Int. Conf. on Computer Vision and

Pattern Recognition, pages 1301–1306.

Noh, S. and Jeon, M. (2012). A new framework for back-

ground subtraction using multiple cues. In Asian Conf.

on Computer Vision, LNCS 7726, pages 493–506.

Springer.

Ojala, T., Pietikäinen, M., and Mäenpää, T. (2002). Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. on Pattern Analysis and Machine Intelligence, pages 971–987.

Pietikäinen, M., Hadid, A., Zhao, G., and Ahonen, T. (2011). Computer vision using local binary patterns, volume 40 of Computational Imaging and Vision. Springer-Verlag.

Richards, J. and Jia, X. (2014). Local binary patterns: New

variants and applications, volume 506 of Studies in

Computational Intelligence. Springer-Verlag.

Shah, M., Deng, J., and Woodford, B. (2013). Video back-

ground modeling: Recent approaches, issues and our

solutions. In Machine Vision and Applications, pages

1–15.

Shimada, A. and Taniguchi, R.-I. (2009). Hybrid back-

ground model using spatial-temporal lbp. In IEEE Int.

Conf. on Advanced Video and Signal Based Surveil-

lance, pages 19–24.

Sobral, A. and Vacavant, A. (2014). A comprehensive re-

view of background subtraction algorithms evaluated

with synthetic and real videos. Computer Vision and

Image Understanding, 122:4–21.

Vacavant, A., Chateau, T., Wilhelm, A., and Lequievre,

L. (2012). A benchmark dataset for outdoor fore-

ground/background extraction. In Asian Conf. on

Computer Vision, pages 291–300.

Vishnyakov, B., Gorbatsevich, V., Sidyakin, S., Vizilter, Y.,

Malin, I., and Egorov, A. (2014). Fast moving objects

detection using ilbp background model. International

Archives of the Photogrammetry, Remote Sensing and

Spatial Information Sciences, XL-3:347–350.

Wang, L. and Pan, C. (2010). Fast and effective back-

ground subtraction based on εLBP. In IEEE Int. Conf.

on Acoustics, Speech, and Signal Processing, pages

1394–1397.

Wang, L., Wu, H.-Y., and Pan, C. (2010). Adaptive εLBP

for background subtraction. In Kimmel, R., Klette, R.,

and Sugimoto, A., editors, Asian Conf. on Computer

Vision, LNCS 6494, pages 560–571. Springer.

Wu, H., Liu, N., Luo, X., Su, J., and Chen, L. (2014). Real-

time background subtraction-based video surveillance

of people by integrating local texture patterns. Signal,

Image and Video Processing, 8(4):665–676.

Xue, G., Song, L., Sun, J., and Wu, M. (2011). Hy-

brid center-symmetric local pattern for dynamic back-

ground subtraction. In IEEE Int. Conf. on Multimedia

and Expo, pages 1–6.

Xue, G., Sun, J., and Song, L. (2010). Dynamic back-

ground subtraction based on spatial extended center-

symmetric local binary pattern. In IEEE Int. Conf. on

Multimedia and Expo, pages 1050–1054.

Yin, H., Yang, H., Su, H., and Zhang, C. (2013). Dynamic

background subtraction based on appearance and mo-

tion pattern. In IEEE Int. Conf. on Multimedia and

Expo Workshop, pages 1–6.

Yuan, G.-W., Gao, Y., Xu, D., and Jiang, M.-R. (2012). A

new background subtraction method using texture and

color information. In Advanced Intelligent Computing

Theories and Applications, LNAI 6839, pages 541–

548. Springer.

Zhou, W., Liu, Y., Zhang, W., Zhuang, L., and Yu,

N. (2011). Dynamic background subtraction using

spatial-color binary patterns. In Int. Conf. on Graphic

and Image Processing, pages 314–319. IEEE Com-

puter Society.
