Evaluating the Usability of an Automated Transport and Retrieval System

Paul Whittington, Huseyin Dogan and Keith Phalp

Faculty of Science and Technology, Bournemouth University, Fern Barrow, Poole, U.K.

Keywords: Assistive Technology, Automated Transport and Retrieval System, Controlled Usability Evaluation, NASA Task Load Index, People with Disability, Pervasive Computing, System Usability Scale, User Interface.

Abstract: The Automated Transport and Retrieval System (ATRS) is a technically advanced system that enables a powered wheelchair (powerchair) to autonomously dock onto a platform lift in a vehicle, working together with an automated tailgate and a motorised driver’s seat. The proposed prototype, SmartATRS, is an example of pervasive computing that considerably improves the usability of ATRS. Two contributions have been made to ATRS: an improved System Architecture incorporating a relay board with an embedded web server that interfaces between the smartphone and ATRS, and an evaluation of the usability of SmartATRS using the System Usability Scale (SUS) and the NASA Task Load Index (NASA TLX). These contributions address weaknesses in the usability of ATRS, where small wireless keyfobs are used to control the lift, tailgate and seat. The proposed SmartATRS provides large informative buttons, increased safety features, a choice of interaction methods and easy configuration. This research is the first stage towards a “SmartPowerchair”, where pervasive computing technologies would be integrated into the powerchair to further improve the lifestyle of disabled users.

1 INTRODUCTION

Smart technology has proliferated over recent years (Suarez-Tangil et al., 2013) due to the popularity of smartphones and other smart devices (e.g. SmartTVs, tablets and wearable devices) that have the potential to improve quality of life, particularly for people with disability.

The Automated Transport and Retrieval System (ATRS) is a technically advanced system developed by Freedom Sciences LLC in the United States of America (USA) and featured in New Scientist magazine (Kleiner, 2008). The system uses robotics and Light Detection and Ranging (LiDAR) technology to autonomously dock a powered wheelchair (powerchair) onto a platform lift fitted in the rear of a standard Multi-Purpose Vehicle (MPV) while a disabled driver is seated in the driver’s seat. The overall objective of developing ATRS was to create a reliable, robust means for a wheelchair user to autonomously dock a powerchair onto a platform lift without the need for an assistant (Gao et al., 2008).

The rationale behind creating the smartphone system, SmartATRS, was to improve the usability of the ATRS keyfobs shown in Figure 1 (similar to those used to operate automated gates). One of the authors is a user of ATRS and identified the need to improve the small keyfobs. This need was also emphasised at the 2011 Mobility Roadshow in Peterborough (Elap Mobility, 2011). Increased ATRS usability has the potential to attract new users who were originally deterred by the keyfobs.

Figure 1: ATRS Keyfobs.

SmartATRS was implemented as two sub-systems: Vehicle (ATRS) and Home Control, each with a separate Graphical User Interface (GUI). Home Control can operate any device containing a relay, such as automated doors or gates, but is outside the scope of this paper.

SmartATRS is a first step towards a SmartPowerchair, where pervasive computing technologies would be integrated into a standard powerchair.

Whittington P., Dogan H. and Phalp K.

Evaluating the Usability of an Automated Transport and Retrieval System.

DOI: 10.5220/0005205000590066

In Proceedings of the 5th International Conference on Pervasive and Embedded Computing and Communication Systems (PECCS-2015), pages 59-66

ISBN: 978-989-758-084-0

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

2 STATE OF THE ART

There is an ever-increasing market for assistive technologies (Gallagher and Petrie, 2013), as approximately 500 million people worldwide have a disability that affects their interaction with society and the environment (Cofré et al., 2012). It is therefore important to encourage independent living and improve quality of life for people with disability.

2.1 Automated Transport and Retrieval System (ATRS)

ATRS uses a laser guidance system comprising a compact LiDAR device coupled with a robotics unit, which is fitted to the powerchair to locate the exact position of the lift and drive the powerchair onto it. Using a joystick attached to the driver’s seat, the user manoeuvres the powerchair to the rear of the vehicle until the LiDAR unit can detect two highly reflective fiducials fitted to the lift. From then on, docking of the powerchair is completed autonomously. The autonomous control area has a diameter of approximately one metre (see Figure 2).

Figure 2: ATRS Operating Zones.

If the powerchair drives outside this area, it stops instantly, and manual control via the joystick is required to return the chair to the autonomous control area.

ATRS requires three components to be installed in the vehicle:

1. A Freedom Seat that rotates and exits the vehicle through the driver’s door to enable easy transfer between the powerchair and the driver’s seat.

2. A pneumatic ram fitted to the tailgate.

3. A Tracker Lift fitted in the rear boot space.

Although ATRS has an autonomous aspect, it is an interactive system that requires user interaction to operate the seat, tailgate and lift. The user group for ATRS consists of people who use powerchairs. SmartATRS was developed to remove the dependence on the small keyfobs, which can easily be dropped and fall out of reach.

2.2 User Interaction

A key aspect of user interaction is usability, which is defined as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use” (International Organization for Standardization, 1998). Usability is of even greater importance when the users have disabilities (Adebesin et al., 2010). The success of any system depends mainly on its usability from the “user-perspective”, which can only be achieved by adopting a user-centred design approach early in the design process (Newell and Gregor, 2002). Such an approach was taken when designing SmartATRS, as the requirements of potential users with disabilities were elicited at the 2011 Mobility Roadshow in Peterborough (Elap Mobility, 2011).

SmartATRS has two interaction methods: touch and joystick. Each method has limitations, as highlighted by Song et al. (2007), who analysed a variety of interaction methods for a robot through a user evaluation with five students. They found that a button interface operated by touch had the slowest mean completion times for moving the robot and setting its speed, but was more efficient at controlling rotation. Joystick interaction was identified as the most efficient overall, as it provided the most consistent performance.

A similar user evaluation was completed for SmartATRS using Controlled Usability Testing (Adebesin et al., 2010). Users performed pre-defined tasks with SmartATRS in a controlled environment to reveal any specific usability problems that could impact their ability to operate ATRS safely and efficiently. Usability testing was combined with questionnaires (Adebesin et al., 2010) that measured the extent to which SmartATRS met the users’ expectations.

3 THE SMARTATRS PROTOTYPE

Each requirement for the SmartATRS prototype was defined using a shortened version of the Volere Requirements shell (Robertson and Robertson, 2009). The requirements were categorized using the Volere types, including Safety (SFR), Functionality (FR) and Reliability (RR), and each fit criterion was validated through the usability evaluation presented in Section 4. The key requirements were:

(SFR1) SmartATRS shall not prevent the existing handheld pendants or keyfobs being used as a backup method of controlling ATRS.

(FR1) SmartATRS shall be able to control the following ATRS functions: the Freedom Seat, Tracker Lift and Automated Tailgate.

(SFR2) SmartATRS shall ensure safe operation of all ATRS functions by not creating a risk to the user.

(RR1) SmartATRS shall be reliable, as a user would depend on the system for their independence.

3.1 System Architecture

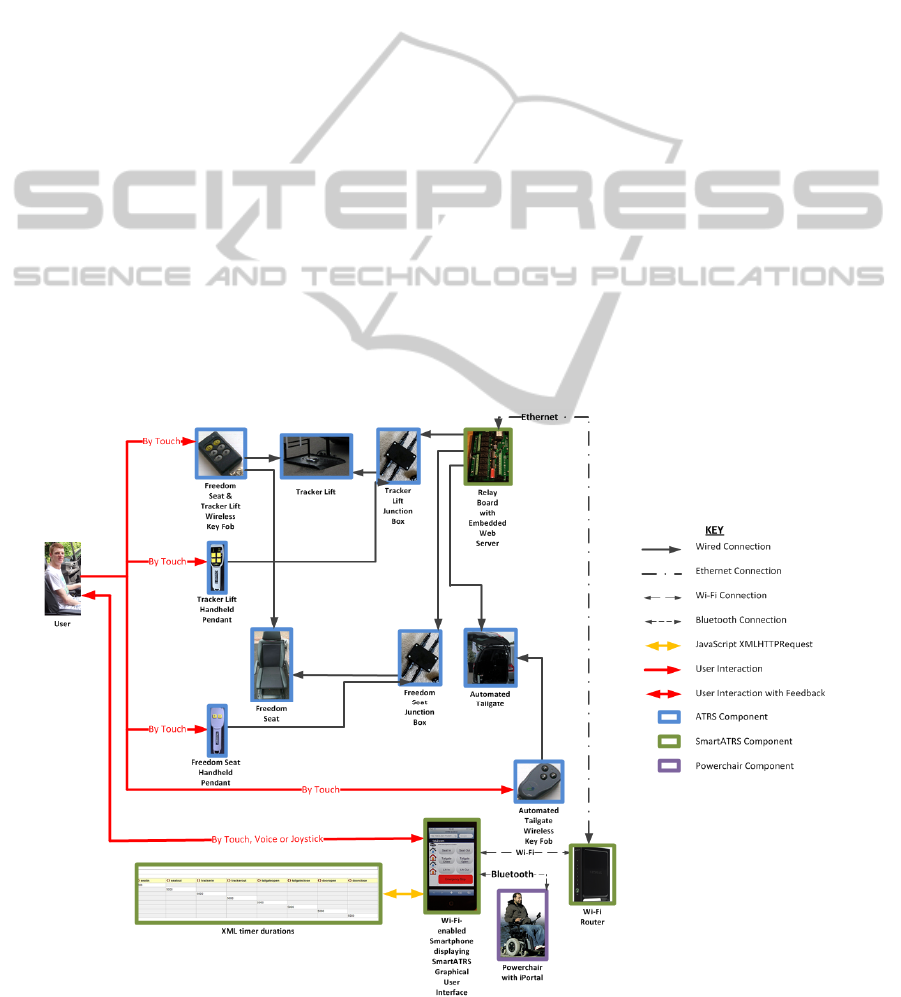

Figure 3 shows a System Architecture diagram for the SmartATRS prototype. The interactions between the components are shown by the black and yellow lines, and the user interactions are shown in red. The diagram contains all of the existing ATRS components together with the additional hardware for SmartATRS. Wireless keyfobs and handheld pendants were the only methods of interaction in the standard ATRS, which limited its Human Computer Interaction (HCI). SmartATRS, however, allows users to interact by touch or joystick, providing a substantial improvement in the usability of the system. Junction boxes were manufactured so that the existing handheld pendants remained operational, satisfying Requirement SFR1.

To integrate the System Architecture with the standard ATRS, the wiring diagrams were analysed, which showed that each component contains a relay. A relay board was then used to interface between the ATRS components and the JavaScript GUI. Six relays were utilized for the functions of ATRS: Seat In, Seat Out, Lift In, Lift Out, Tailgate Open and Tailgate Close. The relay board contained an embedded web server storing the HyperText Markup Language (HTML) and JavaScript GUIs as webpages. JavaScript XMLHttpRequests were used to access an eXtensible Markup Language (XML) file on the web server that contained the timer durations for each ATRS component. These durations were integers representing the number of milliseconds for which each function had to be switched on, and they depended on the vehicle used (e.g. longer Lift Out durations are required for vehicles with a greater distance to the ground) and on the preferences of the user (e.g. a longer Seat Out duration may be required to ensure safe transfers to the powerchair).

Figure 3: System Architecture Diagram for SmartATRS.

An XML editor allowed an installer to view and change the durations easily via a matrix. The process of editing the XML file was not visible to the end users, thereby ensuring the safety of ATRS. Ethernet was used to connect the web server to a Wi-Fi router located in the rear of the vehicle, because a wired link is more reliable than Wi-Fi, which was essential for the safe operation of SmartATRS. As the relay board is used in an outdoor environment, a Wi-Fi link to it could have been exposed to interference from other Wi-Fi networks or devices, potentially causing unsafe operation of ATRS. The wired Ethernet link is not subject to such radio interference, and therefore Requirement SFR2 was satisfied.
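The timer configuration described above can be illustrated with a short sketch. It is written in Python rather than the browser JavaScript that SmartATRS used, and the XML layout it expects (a `durations` root containing `function` elements with hypothetical `name` and `ms` attributes) is an assumption, since the paper does not give the file's schema. The sketch reads the integer millisecond durations for the six relay-driven functions and rejects a file that omits one or contains a non-positive value, so that a misconfiguration is caught before any relay is switched.

```python
import xml.etree.ElementTree as ET

# The six relay-driven ATRS functions listed in Section 3.1.
ATRS_FUNCTIONS = (
    "Seat In", "Seat Out", "Lift In", "Lift Out",
    "Tailgate Open", "Tailgate Close",
)


def load_durations(xml_text: str) -> dict[str, int]:
    """Parse per-function relay on-times (milliseconds) from XML.

    Assumes a hypothetical layout such as
    <durations><function name="Lift Out" ms="..."/>...</durations>;
    the actual SmartATRS file format is not described in the paper.
    """
    root = ET.fromstring(xml_text)
    durations: dict[str, int] = {}
    for elem in root.iter("function"):
        name = elem.get("name")
        ms = int(elem.get("ms", ""))  # int("") raises ValueError if missing
        if name not in ATRS_FUNCTIONS:
            raise ValueError(f"unknown ATRS function: {name!r}")
        if ms <= 0:
            raise ValueError(f"duration for {name!r} must be positive")
        durations[name] = ms
    # Every function must have a duration before any relay may be driven.
    missing = [f for f in ATRS_FUNCTIONS if f not in durations]
    if missing:
        raise ValueError(f"no duration configured for: {missing}")
    return durations
```

In SmartATRS the equivalent step happened in the browser after an XMLHttpRequest fetched the file; validating the whole file on load is a design choice of this sketch, not a documented feature of the system.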

Figure 4: Mounted smartphone and SmartATRS GUI.

A smartphone communicated with the Wi-Fi router over a secure Wi-Fi Protected Access II (WPA2) network, and the GUI could be loaded by entering the Uniform Resource Locator (URL) of the webpage or by opening a bookmark created on the smartphone. Joystick control of SmartATRS was achieved using iPortal, developed by Dynamic Controls (Dynamic Controls, 2014), which communicated with the smartphone via Bluetooth and also enabled the device to be securely mounted onto the arm of the powerchair (Figure 4), making the system easier to use.

3.2 User Interface

The rationale for the Graphical User Interface (GUI), shown in Figure 4, was based upon views expressed at the Mobility Roadshow (Elap Mobility, 2011). User feedback and safety features that are not present in the keyfobs were then incorporated into SmartATRS. Seven command buttons are used to activate the ATRS functions. The red Emergency Stop button is twice the width of the other buttons, so that it can be selected quickly in an emergency.

Five icons allow navigation between the ATRS Control GUI and up to four Home Control GUIs. All icons are stored on the web server and can be customized to suit the user’s preferences by editing the XML file. An image of a vehicle is used for the ATRS Control icon, whereas the images for the Home Control icons represent the function to be controlled, e.g. a gate or door. The large command buttons and clearly defined icons reduce the risk of incorrect selection and help to maintain visibility in adverse weather conditions.

When a command button is selected, its background colour changes to light blue and only reverts to the original colour when the function completes. The exceptions are the Tailgate and Lift Out buttons, which change to orange and are disabled when necessary to maintain safe operation of ATRS (Requirement SFR2).
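The button behaviour described above can be modelled as a small state machine: a function's relay is energised for its configured duration, its button stays light blue until the timer expires, and the Emergency Stop switches every relay off at once. The Python sketch below is a hypothetical, deterministic simulation (`RelayBoardSim` and its methods are illustrative names, not part of SmartATRS); time is passed in explicitly as milliseconds rather than read from a clock, and the orange/disabled state of the Tailgate and Lift Out buttons is not modelled because the paper does not specify when it applies.

```python
from dataclasses import dataclass, field


@dataclass
class RelayBoardSim:
    """Hypothetical simulation of timed relay activation with an emergency stop."""

    durations_ms: dict[str, int]
    # Maps each currently energised function to the time (ms) it switches off.
    active_until: dict[str, int] = field(default_factory=dict)

    def press(self, function: str, now_ms: int) -> None:
        # Energise the relay for the configured number of milliseconds.
        self.active_until[function] = now_ms + self.durations_ms[function]

    def tick(self, now_ms: int) -> None:
        # De-energise every relay whose timer has expired.
        for function, end in list(self.active_until.items()):
            if now_ms >= end:
                del self.active_until[function]

    def emergency_stop(self) -> None:
        # A single command switches every relay off at once.
        self.active_until.clear()

    def button_colour(self, function: str) -> str:
        # Light blue while the function is running, default colour otherwise.
        return "light blue" if function in self.active_until else "default"
```

Pressing Seat In and Lift In at the same time and then calling `emergency_stop()` corresponds to Task 4 in Section 4.1; after the call, both buttons report their default colour.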

Joystick control was developed as an alternative to touch. In this method, navigation through the GUI is achieved by moving the powerchair joystick left or right, and buttons are selected by moving the joystick forwards.
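The joystick scheme can be sketched as a simple focus model. This minimal Python sketch assumes that the buttons form a single ordered sequence, that focus wraps around at either end, and that joystick deflections arrive as already-debounced `"left"`, `"right"` and `"forward"` events; none of these details are specified for SmartATRS or iPortal, and `JoystickNavigator` is a hypothetical name.

```python
from typing import Optional


class JoystickNavigator:
    """Hypothetical focus model: left/right moves focus, forward selects."""

    def __init__(self, buttons: list[str]) -> None:
        if not buttons:
            raise ValueError("at least one button is required")
        self.buttons = list(buttons)
        self.focus = 0  # index of the currently highlighted button

    def handle(self, event: str) -> Optional[str]:
        """Apply one joystick event and return the selected button, if any."""
        if event == "left":
            self.focus = (self.focus - 1) % len(self.buttons)
        elif event == "right":
            self.focus = (self.focus + 1) % len(self.buttons)
        elif event == "forward":
            return self.buttons[self.focus]
        else:
            raise ValueError(f"unsupported joystick event: {event!r}")
        return None
```

Wrapping focus means a user never reaches a dead end at the edge of the GUI, at the cost of possibly overshooting; a non-wrapping variant would clamp the index instead.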

4 EVALUATION

A Controlled Usability Evaluation was conducted on both ATRS and SmartATRS to assess the usability of three interaction methods: keyfobs, touch and joystick. The evaluation provided a means of verifying that the GUI design was “fit for purpose” for users of ATRS.

4.1 Method

The participants in the evaluation performed six predefined tasks with ATRS:

1. Driving the seat out of the vehicle.

2. Opening the tailgate.

3. Driving the lift out of the vehicle.

4. Performing an emergency stop whilst the seat and lift were simultaneously driving into the vehicle.

5. Closing the tailgate.

6. Driving the seat in and out of the vehicle.

Tasks 1, 2, 3, 5 and 6 were specifically chosen because they must be performed whilst using SmartATRS. Task 4 was included to evaluate safety.


4.2 Participants and Procedure

The evaluation was simulated with a user group of 12 participants who used powerchairs and could drive a car. Each participant completed an evaluation pack comprising two questionnaires. The first questionnaire contained ten statements adapted from the System Usability Scale (SUS) (Brooke, 1996), which participants rated on a 5-point scale from ‘Strongly Disagree’ to ‘Strongly Agree’ for keyfobs, SmartATRS by touch and SmartATRS by joystick. Example statements included: “I thought using the keyfobs was easy” and “I thought that the emergency stop feature of SmartATRS by touch was safe”. SUS was selected as the usability measure because it produces a single score from each participant’s responses (Bangor et al., 2008), enabling SUS scores to be calculated for all three interaction methods.
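Standard SUS scoring (Brooke, 1996) converts the ten ratings on the 1 to 5 scale into a score from 0 to 100: odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum is multiplied by 2.5. The sketch below implements that standard rule. It assumes that the adapted statements kept the standard alternating polarity, and that a per-method figure is the mean over participants; the paper states neither explicitly.

```python
def sus_score(ratings: list[int]) -> float:
    """Return the 0-100 SUS score for one participant's ten ratings (1-5)."""
    if len(ratings) != 10:
        raise ValueError("SUS requires exactly ten ratings")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on the 1-5 scale")
    total = 0
    for item, rating in enumerate(ratings, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5


def mean_sus(all_ratings: list[list[int]]) -> float:
    """Average SUS score across participants for one interaction method."""
    scores = [sus_score(r) for r in all_ratings]
    return sum(scores) / len(scores)
```

A participant who answers "3" (neutral) to every statement scores exactly 50, which is why neutral responses sit at the midpoint of the scale rather than at 60.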

The second questionnaire concerned the workload experienced during the tasks and was based on the NASA Task Load Index (NASA TLX) (National Aeronautics and Space Administration, 1996), which measures Physical, Mental and Temporal Demand, Performance, Effort and Frustration. NASA TLX is a well-established, quick and easy method of estimating a user’s workload that can be administered with minimal training (Stanton et al., 2005).
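The paper does not state whether the weighted or the unweighted ("raw") form of NASA TLX was used, so the sketch below shows both as an illustration. The raw score is the mean of the six subscale ratings on a 0 to 100 scale; the weighted score multiplies each rating by the number of times that subscale was chosen in the 15 pairwise comparisons and divides by 15. Function names are hypothetical.

```python
SUBSCALES = ("Mental", "Physical", "Temporal", "Performance", "Effort", "Frustration")


def _check_ratings(ratings: dict[str, float]) -> None:
    # Every subscale must be present and rated on the 0-100 scale.
    for s in SUBSCALES:
        if not 0 <= ratings[s] <= 100:
            raise ValueError(f"{s} rating must be between 0 and 100")


def raw_tlx(ratings: dict[str, float]) -> float:
    """Unweighted TLX: mean of the six subscale ratings."""
    _check_ratings(ratings)
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings: dict[str, float], weights: dict[str, int]) -> float:
    """Weighted TLX: weights are tallies from the 15 pairwise comparisons."""
    _check_ratings(ratings)
    if sum(weights.get(s, 0) for s in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights.get(s, 0) for s in SUBSCALES) / 15
```

The box plots in Section 4.4 compare individual subscales (e.g. Mental and Physical Demand) rather than an overall score, so either aggregate is only needed if a single workload figure per method is wanted.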

4.3 System Usability Scale (SUS) Results

The Adjective Rating Scale (Bangor et al., 2009) was used to interpret the SUS scores: keyfobs achieved a score of 51.7 (OK Usability), touch achieved 90.4 (Excellent Usability, bordering on Best Imaginable) and joystick achieved 73.3 (Good Usability). This clearly shows that touch is the most usable method, while joystick is still a substantial improvement over keyfobs.
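One way to attach an adjective to a SUS score is to compare it with the mean SUS score that Bangor et al. (2009) report for each adjective (Worst Imaginable 12.5, Awful 20.3, Poor 35.7, OK 50.9, Good 71.4, Excellent 85.5, Best Imaginable 90.9) and take the highest adjective whose mean the score reaches. That "floor" rule is an assumption of this sketch rather than a procedure given by Bangor et al., and the function name is hypothetical.

```python
import bisect

# Mean SUS score per adjective, as reported by Bangor et al. (2009).
ADJECTIVE_MEANS = [
    (12.5, "Worst Imaginable"),
    (20.3, "Awful"),
    (35.7, "Poor"),
    (50.9, "OK"),
    (71.4, "Good"),
    (85.5, "Excellent"),
    (90.9, "Best Imaginable"),
]


def sus_adjective(score: float) -> str:
    """Highest adjective whose reported mean the score reaches (assumed rule)."""
    if not 0 <= score <= 100:
        raise ValueError("SUS scores lie between 0 and 100")
    means = [m for m, _ in ADJECTIVE_MEANS]
    i = bisect.bisect_right(means, score) - 1
    # Scores below the lowest mean still fall in the lowest category.
    return ADJECTIVE_MEANS[max(i, 0)][1]
```

Under this rule the scores above map to OK (51.7), Excellent (90.4) and Good (73.3); because 90.4 lies just below the Best Imaginable mean of 90.9, the rule agrees with the description "Excellent, bordering on Best Imaginable".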

A second important result concerned the safety of the emergency stop function. A stopwatch measured the time between the command “Stop Lift!” being exclaimed and the lift actually stopping. The mean stopping time was 8.4 seconds for the keyfobs and 2.2 seconds for SmartATRS, with standard deviations of 6.8 seconds and 1.2 seconds respectively. These reductions arose because, with the keyfobs, participants first had to decide which button to press, whereas with SmartATRS a single emergency stop button immediately stopped all functions.
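The stopping-time figures combine a mean and a standard deviation over the participants' stopwatch readings. A minimal Python sketch of that summary follows. The paper does not say whether the sample or the population standard deviation was reported, so the sample form (`statistics.stdev`) is an assumption, and no study data is reproduced here.

```python
import statistics


def summarise_stop_times(seconds: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation) of emergency-stop times."""
    if len(seconds) < 2:
        raise ValueError("need at least two timings for a standard deviation")
    return statistics.mean(seconds), statistics.stdev(seconds)
```

Applied separately to the keyfob and SmartATRS timings, the mean captures how quickly the lift stopped on average, while the smaller standard deviation for SmartATRS indicates that its stopping times were also far more consistent between participants.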

4.4 NASA TLX Results

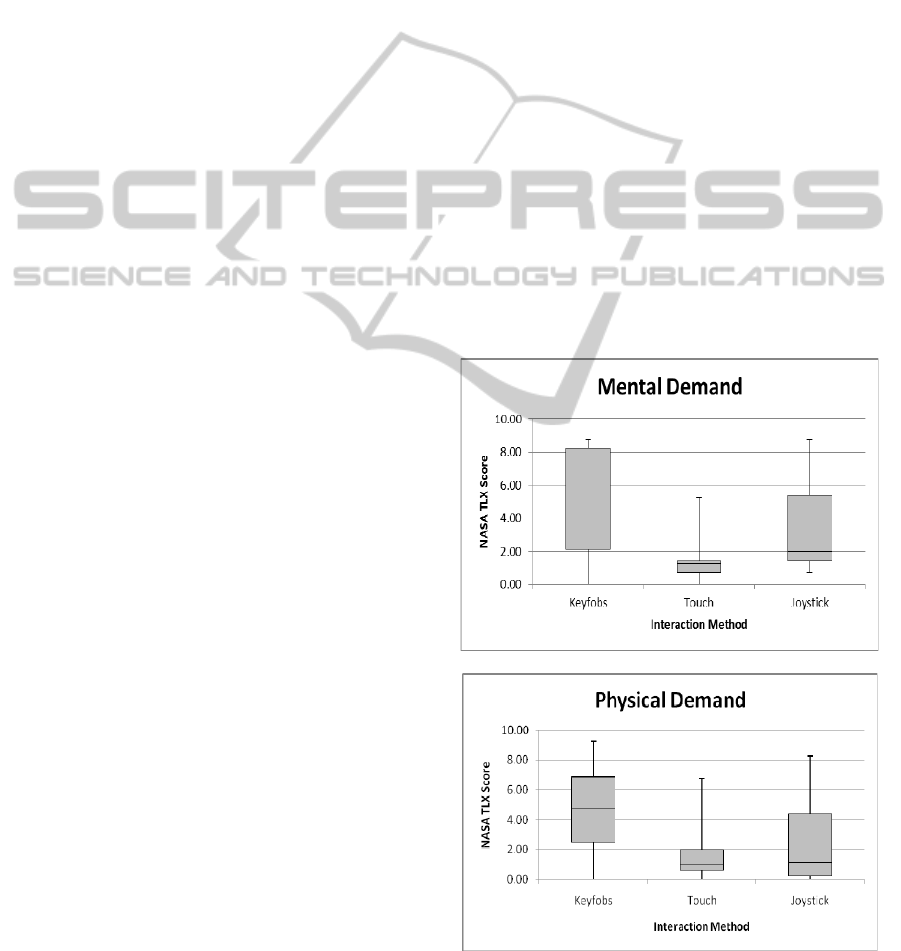

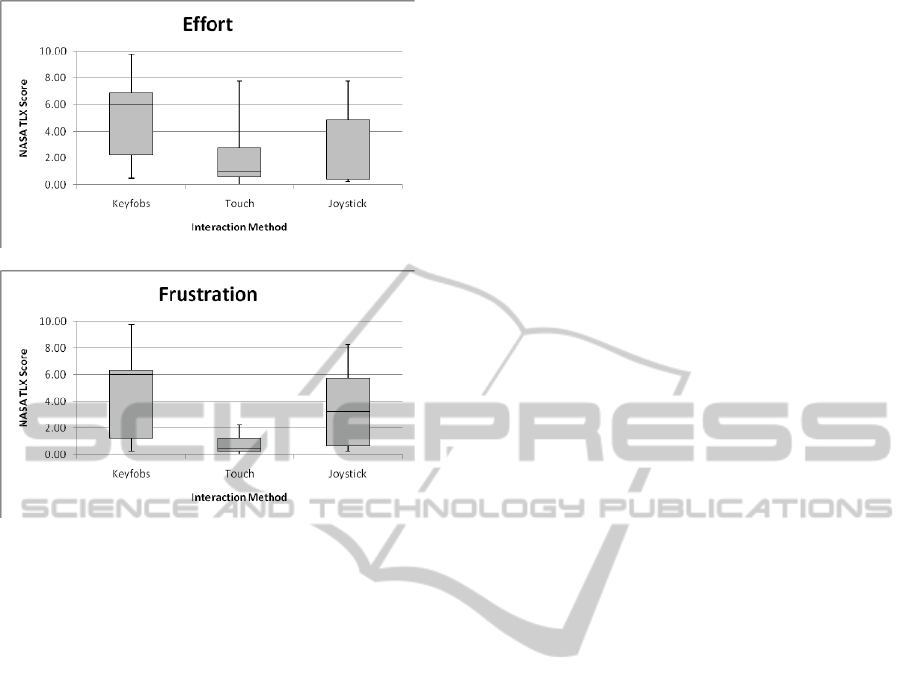

The box plot comparisons in Figure 5 and Figure 6 illustrate the differences in the workload experienced when using keyfobs, touch and joystick. The minimum, lower quartile, median, upper quartile and maximum values show that ‘touch’ produced lower mental and physical demands than the keyfobs. This indicates that keyfobs are more mentally and physically demanding to use than ‘touch’, and less efficient.
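The five values behind each box form the five-number summary of the participants' ratings. A minimal sketch using Python's `statistics.quantiles` follows; quartile conventions differ between tools, and the `"inclusive"` method used here is an assumption, since the paper does not state how its box plots were computed.

```python
import statistics


def five_number_summary(values: list[float]) -> tuple[float, float, float, float, float]:
    """Return (minimum, lower quartile, median, upper quartile, maximum)."""
    if len(values) < 2:
        raise ValueError("need at least two values to compute quartiles")
    # quantiles() sorts internally; n=4 yields the three quartile cut points.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, median, q3, max(values)
```

With `method="exclusive"` (the default) the quartiles can differ noticeably for small samples such as the 12 participants here, which is worth bearing in mind when comparing box plots produced by different software.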

A second important observation was that the joystick produced higher effort and frustration levels than touch, as it was less intuitive. Another finding was that, for ‘touch’, the maximum values lay further from the majority of the data than for the other methods. This discrepancy was caused by a participant who was not familiar with using a smartphone and therefore found ‘touch’ more demanding.

A minority of users experienced low workload levels when using the keyfobs, but overall the box plots strongly indicate that ‘touch’ is the most efficient and least demanding interaction method.

Figure 5: Comparing Mental and Physical Demand experienced.


Figure 6: Comparing Effort and Frustration experienced.

5 DISCUSSION

SmartATRS is a new pervasive technology that has been successfully developed to provide an alternative means of interacting with ATRS. The key to this was the novel use of a relay board with an embedded web server to interface with the ATRS functions. This created a smartphone-independent solution, as the GUI could be accessed with any Wi-Fi enabled smartphone. Using XML to configure SmartATRS was effective because the configuration was not visible to the users, so there was no risk of them tampering with or accidentally altering the timer durations.

Controlled Usability Testing was an effective method of demonstrating that SmartATRS by touch was more usable than the existing keyfobs. The questionnaires provided informative statistics from which conclusions could be drawn. It was evident that the user feedback provided by SmartATRS through button colours and clear text was a considerable advantage over the keyfobs, which provide no feedback. This was particularly noticeable with the lift, whose state was difficult to observe from the driver’s position when using the keyfobs; SmartATRS indicates the current state by changing the button colours. User feedback is particularly important for SmartATRS, as it can be viewed as an assistive environment. Metsis et al. (2008) comment that assistive environments should not be obtrusive. SmartATRS is less obtrusive than standard ATRS, as users are not required to carry small keyfobs in addition to a smartphone.

A key finding from the user evaluation was the increased safety of the emergency stop with SmartATRS, where, unlike with the keyfobs, all functions are stopped instantly by a single button press. The large size of the emergency stop button and its distinctive red colour contribute to safety. This improved safety was reflected in the substantial difference in emergency stop times (a mean of 8.4 seconds for keyfobs and 2.2 seconds for SmartATRS, with standard deviations of 6.8 and 1.2 seconds respectively) and in the fact that 100 per cent of participants agreed that the emergency stop with SmartATRS was safe. Metsis et al. (2008) acknowledge the importance of robust assistive technologies, recommending that such technologies support unusual situations in order to cater for user errors. The NASA TLX results also showed noticeably higher mental and physical demands when using keyfobs compared to SmartATRS.

Trewin et al. (2013) conclude that mobile devices have great potential for increasing the independence of people with disability in their daily lives, and this is reflected in SmartATRS. The addition of smartphone control to ATRS may increase the independence of potential users who were initially deterred by the poor usability of the keyfobs.

6 FUTURE WORK

Alternative interaction methods will be assessed and evaluated to determine whether the usability of SmartATRS can be improved further. Controlling the powerchair-vehicle interaction using electroencephalography (EEG), eye and head tracking, and voice will be researched. It will also be necessary to contact powerchair manufacturers to investigate whether there is interest in our initiative of integrating pervasive technologies into a powerchair to develop a SmartPowerchair.

7 CONCLUSIONS

The evaluation of ATRS and SmartATRS has shown that a novel use of pervasive technology improves usability compared to the small keyfobs. SmartATRS thereby meets a functionality metric defined by Metsis et al. (2008), which states that “an assistive technology must perform correctly in order to serve its purpose”.

The user feedback obtained at the Mobility Roadshow highlighted the need to improve the usability of the keyfobs. A SmartATRS prototype was developed that provided a smartphone-independent solution, integrating a relay board and embedded web server into the standard ATRS system architecture. The SUS results showed that SmartATRS by touch had ‘Excellent’ usability, bordering on ‘Best Imaginable’, compared to the keyfobs, which achieved ‘OK’ usability. The NASA TLX results showed that SmartATRS by touch was less mentally and physically demanding than the keyfobs. Although joystick control was more demanding than touch, it was also concluded to be an improvement over keyfobs. The safety of ATRS was enhanced through an emergency stop function that allows all functions to be immobilised with a single command button, producing considerably shorter emergency stop times than the keyfobs.

The development of SmartATRS has been an initial step towards creating a SmartPowerchair. To achieve this, future user evaluations will be conducted to identify the most suitable pervasive computing technologies to apply. Through the successful integration of such technologies, a SmartPowerchair is anticipated to further enhance the quality of life and independence of people with disability.

ACKNOWLEDGMENTS

The authors would like to thank the experimentation participants, as well as Keith Whittington and Jane Merrington for their support with the experimentation.

REFERENCES

Adebesin, F., Kotzé, P. and Gelderblom, H., 2010. The Complementary Role of Two Evaluation Methods in the Usability and Accessibility Evaluation of a Non-Standard System. In: The 2010 Annual Research Conference of the South African Institute of Computer Scientists and Information Technologists: proceedings of the 2010 Annual Research Conference of the South African Institute of Computer Scientists and Information Technologists, Bela-Bela, South Africa, October 11-13, 2010. New York, NY: ACM Press. 1-11.

Bangor, A., Kortum, P.T. and Miller, J.T., 2008. An Empirical Evaluation of the System Usability Scale. International Journal of Human–Computer Interaction, 24 (6), 574-594.

Bangor, A., Kortum, P.T. and Miller, J.T., 2009. Determining What Individual SUS Scores Mean: Adding an Adjective Rating Scale. Journal of Usability Studies, 4 (3), 114-123.

Brooke, J., 1996. SUS: a “quick and dirty” usability scale. In: Jordan, P.W., Thomas, B., Weerdmeester, B.A. and McClelland, I.L., eds. 1996. Usability Evaluation In Industry. London: Taylor and Francis, 189-194.

Cofré, J.P., Rusu, C., Mercado, I., Inostroza, R. and Jiménez, C., 2012. Developing a Touchscreen-based Domotic Tool for Users with Motor Disabilities. In: 2012 Ninth International Conference on Information Technology: New Generations: proceedings of the 2012 Ninth International Conference on Information Technology: New Generations, Las Vegas, NV, April 16-18, 2012. Piscataway, NJ: IEEE.

Dynamic Controls, 2014. iPortal™ Dashboard [online]. Christchurch, New Zealand: Dynamic Controls. Available from: http://www.dynamiccontrols.com/iportal/ [Accessed 10 December 2014].

Elap Mobility, 2011. A Successful Mobility Roadshow 2011 [online]. Accrington: Elap Mobility. Available from: http://www.elap.co.uk/news/a-successful-mobility-roadshow-2011/ [Accessed 10 December 2014].

Gallagher, B. and Petrie, H., 2013. Initial Results from a Critical Review of Research for Older and Disabled People. In: The 15th ACM SIGACCESS International Conference on Computers and Accessibility: proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility, Bellevue, WA, USA, October 21-23, 2013. New York, NY: ACM Press. 53.

Gao, A., Miller, T., Spletzer, J.R., Hoffman, I. and Panzarella, T., 2008. Autonomous docking of a smart wheelchair for the Automated Transport and Retrieval System (ATRS). Journal of Field Robotics, 25 (4-5), 203-222.

International Organization for Standardization, 1998. ISO 9241-11: Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs) - Part 11: Guidance on Usability. Geneva: International Organization for Standardization.

Kleiner, K., 2008. Robotic wheelchair docks like a spaceship. New Scientist [online], 30 April 2008. Available from: http://www.newscientist.com/article/dn13805-robotic-wheelchair-docks-like-a-spaceship.html [Accessed 10 December 2014].

Metsis, V., Zhengyi, L., Lei, Y. and Makedon, F., 2008. Towards an Evaluation Framework for Assistive Technology Environments. In: The 1st International Conference on PErvasive Technologies Related to Assistive Environments: proceedings of the 1st international conference on PErvasive Technologies Related to Assistive Environments, Athens, July 15-19, 2008. New York, NY: ACM Press. 12.

National Aeronautics and Space Administration, 1996. NASA Task Load Index (TLX) v. 1.0 Manual. Washington, DC: National Aeronautics and Space Administration.

Newell, A.F. and Gregor, P., 2002. Design for older and disabled people – where do we go from here? Universal Access in the Information Society, 2 (1), 3-7.

Robertson, J. and Robertson, S., 2009. Atomic Requirements: where the rubber hits the road [online]. Available from: http://www.volere.co.uk/pdf%20files/06%20Atomic%20Requirements.pdf [Accessed 10 December 2014].

Song, T.H., Park, J.H., Chung, S.M., Hong, S.H., Kwon, K.H., Lee, S. and Jeon, J.W., 2007. A Study on Usability of Human-Robot Interaction Using a Mobile Computer and a Human Interface Device. In: The 9th International Conference on Human Computer Interaction with Mobile Devices and Services: proceedings of the 9th international conference on Human computer interaction with mobile devices and services, Singapore, September 11-14, 2007. New York, NY: ACM Press. 462-466.

Stanton, N.A., Salmon, P.M., Walker, G.H., Baber, C. and Jenkins, D.P., 2005. The National Aeronautics and Space Administration Task Load Index (NASA TLX). In: Stanton, N.A., Salmon, P.M., Walker, G.H., Baber, C. and Jenkins, D.P., eds. 2013. Human Factors Methods: A Practical Guide for Engineering and Design. Farnham: Ashgate Publishing.

Suarez-Tangil, G., Tapiador, J., Peris-Lopez, P. and Ribagorda, A., 2013. Evolution, Detection and Analysis of Malware for Smart Devices. IEEE Communications Surveys & Tutorials, 99, 1-27.

Trewin, S., Swart, C. and Pettick, D., 2013. Physical Accessibility of Touchscreen Smartphones. In: The 15th ACM SIGACCESS International Conference on Computers and Accessibility: proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility, Bellevue, WA, USA, October 21-23, 2013. New York, NY: ACM Press. 19.
