Reflections on the Use of a Personal Response System (PRS) for Summative Assessment in an Undergraduate Taught Module

Alan Hilliard

School of Health and Social Work, University of Hertfordshire, College Lane, Hatfield, U.K.

Keywords: Computer-aided Assessment, Assessment Software Tools, Technology Enhanced Learning.

Abstract: This paper outlines the author’s experience of using a Personal Response System (PRS) for summative

assessment in a 3rd year undergraduate taught module, over a 2 year period. The rationale for

implementation of this method of assessment was a relatively high failure rate in the previous written

examination (37%), and to reduce the marking burden for the teaching team. Key challenges identified with

the implementation of the assessment process were reliability of the hardware/software, and student and

staff confidence with the PRS and assessment process. Following the introduction of the new assessment

method, the assessment failure rate was reduced to 9%. The PRS was seen as a good tool for summative

assessment and received very positive student feedback comments. The PRS proved to be reliable, and with

support and guidance, both students and staff felt confident with the process.

1 INTRODUCTION

Comparative Imaging is a 3rd year undergraduate

taught module (30 credits at level 6), which explores

the applications of different imaging modalities for

three anatomical areas: head and neck, thorax and

heart, and abdomen and pelvis. Previously the

assessment of the module was by an individual

written assignment, and a two hour unseen

examination at the end of the module. In 2011, the

examination was replaced by three, one hour long,

summative multiple choice question (MCQ) tests,

which were undertaken using the TurningPoint™

personal response system (PRS). The tests were

staged throughout the module, each of the

anatomical regions being assessed individually on

completion of the taught content. Part of the

rationale for the change, particularly with respect to

staging of the assessment, was as a result of previous

student feedback which highlighted that students felt

overwhelmed with the volume of information

learned, and a relatively high failure rate (37%) in

the previous year’s examination. The change in

assessment method was also introduced to reduce

the marking burden for the teaching team. This was

significant, as there is a relatively large student

cohort (125 students were registered on the module).

The student cohort was also diverse with respect to

age, with approximately 50% of the cohort being

mature entry students, and also diverse with regard

to ethnic and cultural backgrounds.

Previous research by the author investigating

the use of the PRS for formative feedback with

students had been very positive. 98.5% of students

reported that the system was easy to use and 92.5%

perceived that the use of the system helped their

learning (Lorimer and Hilliard, 2007). Other studies

have reported similar findings (Jefferson and

Spiegel, 2009; Chen and Lan, 2013). Despite many

studies exploring the use of PRS for formative

feedback, there is little information on the use of

PRS for summative assessment.

2 USING THE PRS FOR

SUMMATIVE ASSESSMENT

PRS typically comprise four elements: a tool for

presenting lecture content and questions (e.g. a

computer, PowerPoint™, and a digital projector),

electronic handsets that enable students to respond to

questions, a receiver that captures students’

responses, and PRS software that collates and presents

students’ responses (Kennedy and Cutts, 2005).

In order to use the PRS for summative

assessment, a number of steps needed to be

undertaken. Firstly, a “participant” list needed to be

created, which included the students’ individual

identification numbers along with a designated

keypad number for each student.

Hilliard, A.

Reflections on the Use of a Personal Response System (PRS) for Summative Assessment in an Undergraduate Taught Module.

DOI: 10.5220/0004946002880291

In Proceedings of the 6th International Conference on Computer Supported Education (CSEDU-2014), pages 288-291

ISBN: 978-989-758-021-5

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

The student cohort undertaking the assessment did not have possession

of their own individual handsets, so an assessment

signing in sheet was created. When students signed

in at the assessment room, they showed their student

identification to the invigilator who could then

locate and give them their individual handsets. At

the end of the assessment, the handsets were

collected from the students prior to them leaving the

assessment room.
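
The participant list and signing in sheet described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the student identifiers, the function names and the CSV layout are hypothetical, and the sketch does not reproduce the TurningPoint™ participant list file format.

```python
import csv
import io


def build_participant_list(student_ids):
    """Assign keypad numbers 1..N to student IDs (hypothetical scheme: sorted by ID)."""
    if len(set(student_ids)) != len(student_ids):
        raise ValueError("duplicate student identification number")
    return {sid: keypad for keypad, sid in enumerate(sorted(student_ids), start=1)}


def sign_in_sheet(participants):
    """Render a signing in sheet as CSV text, ordered by keypad number."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["student_id", "keypad", "signature"])
    for sid, keypad in sorted(participants.items(), key=lambda item: item[1]):
        writer.writerow([sid, keypad, ""])  # signature column left blank for the student
    return buffer.getvalue()


# Illustrative identifiers only; they are not taken from the module cohort.
participants = build_participant_list(["S1003", "S1001", "S1002"])
print(sign_in_sheet(participants))
```

With a sheet of this kind, the invigilator can look up a student's identification number at the door and hand over the handset carrying the matching keypad number.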

Each test consisted of 20 MCQs, and each

question was allocated a time limit of 2 minutes.

Traditionally, when used for instant formative

feedback, it is common to display a graph showing

the percentage responses for each option answer,

and to display the correct answer for each question.

As the system was going to be used for summative

assessment, the author felt that displaying this data

would be a distraction for the students, and could

impact negatively on their confidence if they saw

that they were getting a number of questions wrong.

Therefore, after each question was polled for 2

minutes, signalled by a countdown indicator, no data

was displayed in the assessment room and the

lecturer would move on to the next question.

Following the completion of each MCQ test, the

PRS software was used to generate reports of

individual student marks, and also generic feedback

for each question with pie charts showing the spread

of responses and identifying the correct answers. For

each of the tests, the students’ individual marks and

generic feedback were made available through the

module website on the University’s managed

learning environment (MLE) within a 24 hour

period.
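
The two kinds of report described above, individual marks and the spread of responses per question, amount to a simple tally. The sketch below is a hedged illustration and not the PRS software's own implementation: the option labels, the use of `None` for an unanswered question and the function names are all assumptions.

```python
from collections import Counter


def score_test(answer_key, responses):
    """Return {keypad: mark}, counting one mark per response that matches the key."""
    return {
        keypad: sum(1 for given, correct in zip(answers, answer_key) if given == correct)
        for keypad, answers in responses.items()
    }


def response_spread(responses, question_index):
    """Return the percentage of submitted responses per option for one question."""
    counts = Counter(
        answers[question_index]
        for answers in responses.values()
        if answers[question_index] is not None  # None marks an unanswered question
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {option: 100 * n / total for option, n in sorted(counts.items())}


# Illustrative two-question test with three handsets (hypothetical data).
answer_key = ["A", "C"]
responses = {1: ["A", "C"], 2: ["A", "B"], 3: [None, "C"]}
marks = score_test(answer_key, responses)
spread_q2 = response_spread(responses, 1)
```

The per-question percentages are the figures that a pie chart in the generic feedback would display, alongside the correct option taken from the answer key.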

3 CHALLENGES

One of the primary considerations in

adopting the PRS as an assessment method in the

module was the overall contribution of the

assessment towards the students’ final module mark.

The three PRS assessments were equally weighted at

20% each so, collectively, the PRS assessments

contributed 60% towards the students’ final module

mark. The other 40% contribution towards the

students’ final module mark was by submission of

an individually written assignment. As the PRS

assessments contributed the greater portion of the

students’ final module mark, it was important to

ensure that the assessment method and process were

robust and reliable. Prior to the implementation of

the PRS for summative assessment, a number of key

challenges were identified:

i. Reliability of hardware/software.

ii. Student trust in the PRS and assessment

process.

iii. Lecturer confidence in the PRS and the

assessment process.

Similar challenges have been identified by other

researchers (Roe and Robinson, 2010).
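
The weighting described at the start of this section (three PRS assessments at 20% each and a written assignment at 40%) can be expressed as a short calculation. The sketch below assumes that every component is recorded as a percentage; the function name and the example marks are hypothetical, and no pass mark is assumed because none is stated here.

```python
PRS_WEIGHT_PERCENT = 20         # each of the three PRS assessments
ASSIGNMENT_WEIGHT_PERCENT = 40  # the individually written assignment


def module_mark(prs_percentages, assignment_percentage):
    """Combine three PRS test percentages and the assignment percentage."""
    if len(prs_percentages) != 3:
        raise ValueError("expected exactly three PRS assessment marks")
    weighted_total = (
        PRS_WEIGHT_PERCENT * sum(prs_percentages)
        + ASSIGNMENT_WEIGHT_PERCENT * assignment_percentage
    )
    return weighted_total / 100


# Illustrative marks only: (20*50 + 20*60 + 20*70 + 40*55) / 100
example = module_mark([50, 60, 70], 55)
print(example)  # 58.0
```

Because the PRS components together carry 60% of the mark, a fault that invalidated even one test would affect a fifth of a student's module result, which is why the reliability checks mattered.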

The PRS had been used extensively since 2005

for formative feedback and, during that time, had

shown itself to be reliable. In order to be assured of

its reliability for summative assessment, a

number of checks were put in place. Once the MCQ

test had been created, it was given a trial run using a

small number of handsets (approximately 6). This

allowed a visual check of the questions for visibility

and clarity of format. It also allowed the countdown

indicator on each question slide, which showed the

open polling window and automatically closed the

question poll once the allotted time had elapsed, to be

checked for visibility and consistency. Finally, a report was generated to

demonstrate that the question data had been captured

and could be displayed as both individual marks and

generic feedback.

A key challenge to the assessment method would

be the need to gain the students’ trust and confidence

in the process, particularly as this would be the first

time that they had encountered this method of

summative assessment. This was approached in a

number of ways. Firstly, the assessment process was

described in detail during the module induction

session, which helped to demonstrate to the students

that the structure and process of the assessment had

been fully considered prior to its introduction.

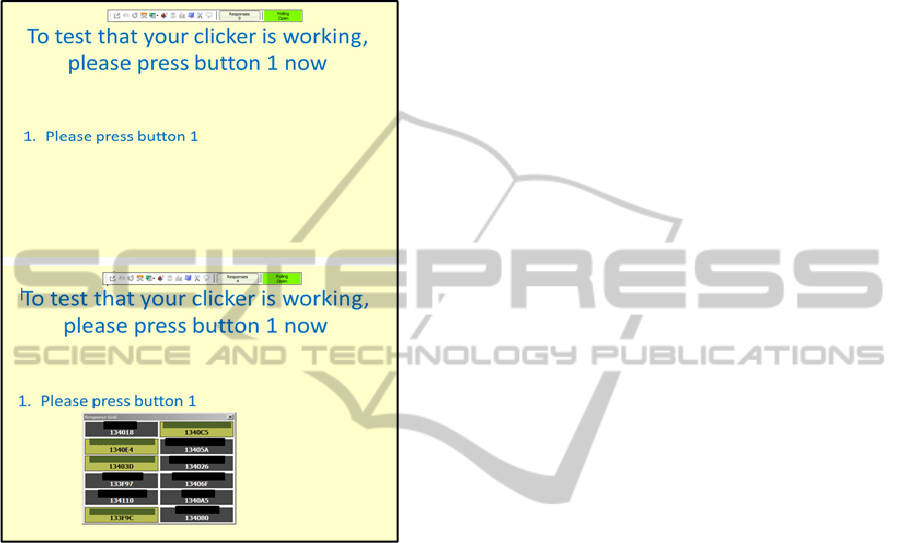

Secondly, early in the module following the first

topic, there was a formative session arranged with a

“mock” of an actual summative assessment. At this

point handsets had been assigned to individual

names, and were distributed to the students. The first

slide asked students to “press button 1” to check that

the handsets were working. During the polling

timeframe, the “show response grid” button was

selected on the TurningPoint™ toolbar. This

projected a grid of all the handsets, and students

could see their name change colour as they pressed

their handset buttons (see figure 1 below – the

student names have been removed to ensure

confidentiality). This helped to increase the students’

confidence and trust in the reliability of the

technology.

Following the “mock” PRS assessment, the

process was discussed and any questions or concerns

were addressed. One concern that the students raised

was that their handset would not work on

the day. It was agreed that a paper “receipt slip”

would be used during the assessment and handed in

with the handsets at the end of the assessments. The

paper “receipt slip” listed the question numbers and

students could write their answers in the boxes

provided.

Figure 1: Showing the student participant response grid.

In adopting this system, three points were made

clear. Firstly, the paper “receipt slips” would not be

marked. Secondly, the paper “receipt slips” would

only be considered where there was clear evidence

of a non-functioning handset, and thirdly, because

students could change their answers to questions

within the polling window timeframe, there would

be no discussion where students selected one answer

using their PRS handset and wrote a different

answer on their “receipt slip”. The electronic

response would be taken as the official answer.
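
The three rules above reduce to a single decision per student. The following sketch is one possible reading of those rules, with hypothetical function and parameter names: the paper slip is consulted only where a handset fault has been evidenced, and otherwise the electronic responses stand even when they differ from the slip.

```python
def official_answers(electronic, receipt_slip, handset_fault_evidenced):
    """Return the list of answers to be marked for one student.

    electronic: answers captured by the PRS (None where nothing was received)
    receipt_slip: answers the student wrote on the paper "receipt slip"
    handset_fault_evidenced: True only with clear evidence of a non-functioning handset
    """
    if handset_fault_evidenced:
        # The slip is considered only in this case.
        return list(receipt_slip)
    # Otherwise the electronic response is official, with no discussion of differences.
    return list(electronic)


# The student changed an answer on the handset but not on the slip: electronic stands.
print(official_answers(["A", "B"], ["A", "C"], handset_fault_evidenced=False))
# A handset shown to be faulty: the slip is used instead.
print(official_answers([None, None], ["A", "C"], handset_fault_evidenced=True))
```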

Finally, the issue of lecturer confidence in the

PRS and assessment process was not without its

challenges. I had a number of years of experience of

using the PRS for formative assessment, but had not

previously used it for summative assessment. I was

very conscious of the need for the process to work

without problems to establish the students’ trust

from the outset. I would be the main administrator

for the assessment, and a colleague would

simultaneously undertake the assessment in a

separate room with students who had specific study

needs agreements. An experienced teaching team

member was selected, and a step-by-step guide for

the assessment process was produced and

discussed prior to each assessment. Also, before each

assessment, every handset was checked to confirm that it was

working and was set to the correct radio frequency

(RF) channel for the RF receiver so that data

responses would be received. This was checked by

again using the “show response grid”. Next, the

assessment question slides were run using a small

number of PRS handsets, and a report was generated

to ensure that the software was working correctly.

4 STUDENT RESULTS AND

FEEDBACK

Combining the marks for the individual PRS

assessments resulted in an overall fail rate of 9%, as

compared with the previous failure rate of 37%.

Student feedback on the module was very positive:

“This was a very well organised module, and the

modular assessment was helpful. This has been an

enjoyable module to study”.

“I really liked the structure of the in class tests...

Got feedback of exam results the next day! All the

teachers are really good and helpful”.

“The current set-up of the module is great. I

wouldn't change anything”.

“This has been an excellent module…I like the

way the module has been split into 3 sections which

are tested throughout the semester. The PRS system

of testing appears to work well and gives the student

prompt feedback”.

One feedback point that was mentioned by a number

of students was about the linearity of the assessment,

i.e. each question slide was answered in turn,

without the opportunity for students to go back and

review their answers to previous questions, which

they would have liked. This point was taken on

board for the second year that the assessment

method was used. In order to enable this, students

were given a conventional paper-based MCQ paper. At the end of

the allotted time, the PRS was used to collect the

data. The answers to each of the questions were

collected in turn, with students entering the answer

they had previously written down. At the end of the

assessment, the students’ handsets were collected in

along with their paper-based MCQs. Here the

students’ MCQ papers replaced the previous idea of

the “receipt slip”, and it was again made clear that

the students’ electronic responses would be taken as

their official answers.

5 PERSONAL REFLECTION ON

THE ASSESSMENT PROCESS

Having used this method of assessment

over a 2 year period, and having reflected on the process, a

number of advantages and challenges have been

identified.

Some of the clear advantages of this method are

the reduction in staff marking time, along with the

ability to provide rapid student feedback and rapid

release of marks. In each of the student assessments,

individual marks and general feedback were provided

within a 24 hour timeframe. An unexpected finding

was that the assessment method and process

appeared to be well suited to the ethnically and

culturally diverse student cohort. Some students

who had not performed well with traditional written

examinations appeared to perform better with the

PRS assessments. This finding is not specifically

supported within current literature.

The process was not without its challenges,

however. There was a considerable time input

required to construct MCQs at the appropriate level,

and which would challenge the students to

demonstrate their level of knowledge and

understanding. Additionally, time was required to

create participant lists, check handsets and

TurningPoint™ question slides prior to each

assessment.

Due to the cohort size and the centralised

timetable booking system at my institution, it

became necessary to run the summative assessment

in more than one room at the same time. This led to

the need to train other teaching team members in the

use of the PRS and how to conduct the summative

assessment process. There was also a greater staff

input required to invigilate the assessment in

multiple rooms.

Any concerns regarding the reliability of the

hardware and software proved to be unfounded.

Each of the assessment sessions ran smoothly and

without technical error.

Student trust in the system and assessment

process was established early in the module, through

careful explanation, dialogue and discussion. It was

supported by running a formative “mock”

assessment, which enabled students to experience

the process and become familiar with using the PRS

handsets. Student feedback on the module provided

further evidence regarding the success of this

method of assessment.

Lecturer confidence in the system and process

could be a challenge if staff felt inexperienced in the

use of the PRS or lacked understanding of the

structure and process of the assessment. As

lead lecturer, I had considerable experience in the use

of the PRS and took responsibility for the design and

structure of the assessment. Where other teaching

team members were required to administer the

assessment, support, training and guidance were

given. The teaching team managed to successfully

run the assessments, and reported high levels of

confidence as a result.

6 CONCLUSIONS

The PRS has shown itself to be a successful tool for

summative assessment, which can save on staff

marking time, although there is a need to be mindful

of the time requirement to set up and check the PRS

and handsets prior to use. The assessment method

may be well suited to ethnically and culturally

diverse student cohorts, although this has not been

established. It is important to support students, so

that they have trust and confidence in the assessment

process and the use of the PRS. Similarly, it is

important to recognise the needs of the staff teaching

team, and to provide training, support and guidance

as required.

REFERENCES

Chen, T., Lan, Y. 2013. Using a personal response system

as an in-class assessment tool in the teaching of basic

college chemistry. Australasian Journal of

Educational Technology, 29(1), 32-40.

Jefferson, W., Spiegel, D. 2009. Implementation of a

university standard for personal response systems.

Association for the Advancement of Computing in

Education Journal, 17(1), 1-9.

Kennedy, G. E., Cutts, Q. I. 2005. The association

between students’ use of an electronic voting system

and their learning outcomes. Journal of Computer

Assisted Learning, Vol. 21, 260-268.

Lorimer, J., Hilliard, A., 2007. Net gen or not gen?

Student and Staff Evaluations of the use of

Podcasts/Audio Files and an Electronic Voting System

(EVS) in a Blended Learning Module. Conference

Proceedings: 6th European Conference on e-Learning,

407-414.

Roe, H. M., Robinson, D. P., 2010. The use of Personal

Response Systems (PRS) in multiple-choice

assessment: benefits and pitfalls over traditional,

paper-based approaches. Planet, Issue 23, 54-62.
