Using Word Sense as a Latent Variable in LDA Can Improve Topic

Modeling

Yunqing Xia

1

, Guoyu Tang

1

, Huan Zhao

1

, Erik Cambria

2

and Thomas Fang Zheng

1

1

Department of Computer Science and Technology, Tsinghua University, Beijing, China

2

Temasek Laboratories, National University of Singapore, Singapore, Singapore

Keywords:

Topic Modeling, LDA, Latent Variable, Word Sense.

Abstract:

Since proposed, LDA have been successfully used in modeling text documents. So far, words are the common

features to induce latent topic, which are later used in document representation. Observation on documents

indicates that the polysemous words can make the latent topics less discriminative, resulting in less accurate

document representation. We thus argue that the semantically deterministic word senses can improve quality

of the latent topics. In this work, we proposes a series of word sense aware LDA models which use word sense

as an extra latent variable in topic induction. Preliminary experiments on document clustering on benchmark

datasets show that word sense can indeed improve topic modeling.

1 INTRODUCTION

In the past decade, Latent Dirichlet Allocation (LDA)

(Blei et al., 2003) has been proved an effective topic

model for information retrieval (Dietz et al., 2007;

Wang et al., 2007). So far, words are the common

features to induce latent topic, which are later used in

document representation. Observation on documents

indicates that the polysemous words can make the la-

tent topics less discriminative, resulting in less accu-

rate document representation. For example, we all

know that apple refers a kind of fruit in some cases

but a computer company in other contexts. In the la-

tent topics induced by LDA, the word feature ”apple”

is assigned to different topics with different probabil-

ity. It is unknown which word sense plays a key role

in these assignment: the fruit or the company? Our

intuition is that topic models with ambiguous words

can be less precise than that with word senses.

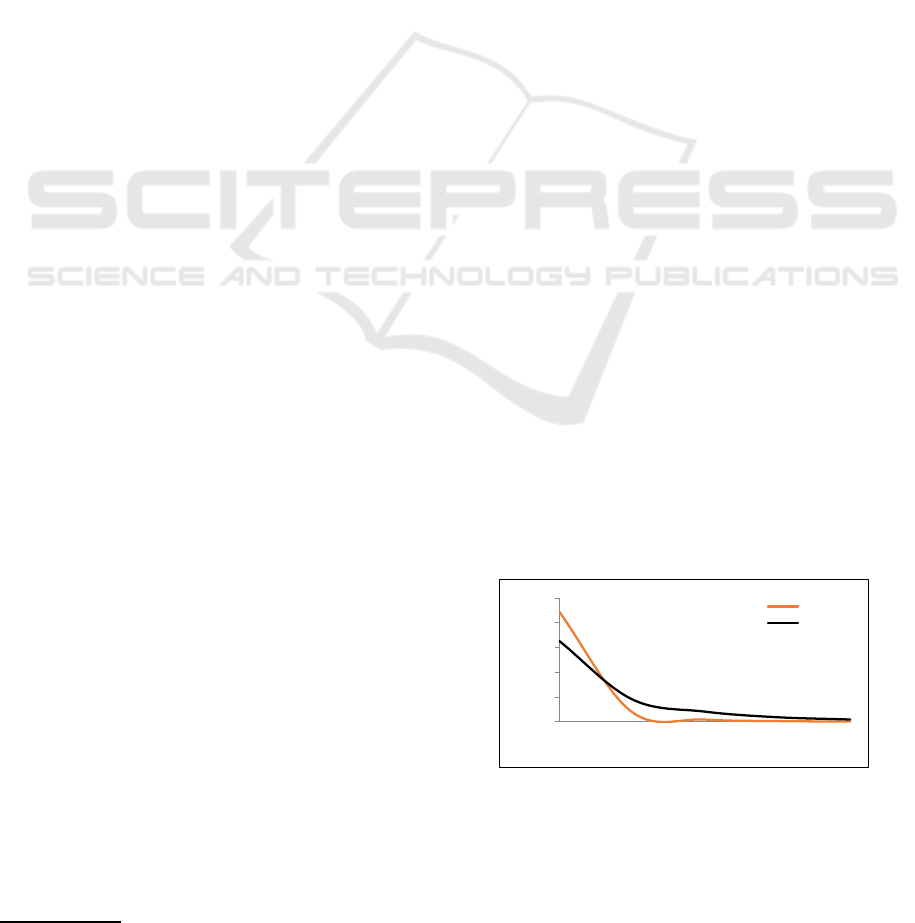

An empirical study has been conducted to con-

firm this intuition. With the word-topic probability

matrix produced by LDA, we calculate average prob-

ability within the top N topics (avgpr@N) as follows.

For each word, the topics that a word w is associ-

ated are ranked according to the probability p(z|w)

1

.

1

p(z|w) can be calculated with p(z|w) ∝

p(w|z)Σp(z|d)p(d) where p(w|z) and p(z|d) are pa-

rameters of the model thus can be estimated while we

estimate p(d) to be the proportion of ds document length to

the length of the entire document collection .

argpr@N is calculated by averaging the probabilities

p(z|w) of all words on the top N topics. For each

word sense, we calculate argpr@N based on p(z|s) .

We use the senses in the last iteration of SLDA mod-

els(e.g. 2200) and the topics inferred by these word

senses. We run the classic word based LDA and a

word sense aware LDA (SLDA) proposed in this work

on the same dataset (i.e., Reuters) and calculated the

avgpr@N values with different N. The curve are pre-

sented in Figure 1.

Experimental results show that the proposed

models outperform the baselines significantly in

document clustering. This implies that the

statistical word sense induced from corpora can

provide the LDA topic models with additional

llocation (LDA) (Blei

topic model for

Wang et al.,

tremendous

identifying semantic relations

presented in Figure 1.

Figure 1. Word-topic distribution in LDA and sense-topic

0

0.2

0.4

0.6

0.8

1

1 2 3 4

5

avgpr@N

top N topics

SLDA

LDA

Figure 1: Word-topic distribution in LDA vs. sense-topic

d

istribution in SLDA on Reuters dataset.

From Figure 1, we find the curve for sense-topic

distribution is sharper than that for word-topic distri-

bution. This indicates that word senses are more dis-

criminative than words. This confirms with us that

word sense can improve the LDA topic model. We

argue that the semantically deterministic word senses

can improve quality of the latent topics.

In this work, we proposes two word sense aware

532

Xia Y., Tang G., Zhao H., Cambria E. and Fang Zheng T..

Using Word Sense as a Latent Variable in LDA Can Improve Topic Modeling.

DOI: 10.5220/0004889705320537

In Proceedings of the 6th International Conference on Agents and Artificial Intelligence (ICAART-2014), pages 532-537

ISBN: 978-989-758-015-4

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

LDA models which use word sense as an extra latent

variable in topic induction. The first SLDA model is

standalone SLDA (SA-SLDA), in which word senses

are first induced from a development dataset and then

replace words in LDA. The second SLDA model is

collaborative SLDA (CO-SLDA), in which the topic

assigned to a word has a positive feedback on word

sense induction. Preliminary experiments on doc-

ument clustering on benchmark datasets show that

word sense can indeed improve topic modeling.

The reminder of this paper is organized as follows.

In Section 2, we elaborate the word sense aware LDA

models. In Section 3, we present the experiments and

discussions. We summarize related work in Section 4,

and conclude this paper in Section 5.

2 WORD SENSE AWARE LDA

MODELS

The classic LDA assigns each word in the document

a topic and consider the surface words as the basic

granularity for a document. Alternatively, our model

emits a sense for each surface word and assigns each

sense a topic. Therefore, the basic granularity for our

model is the word sense. To address this motivation,

we introduce a latent variable of word sense and in-

duce it from the observed surface words. We design

two approaches to implement this purpose as follows:

• Standalone SLDA (SA-SLDA): We isolate the

Word Sense Induction (WSI) process as a stan-

dalone step. With the induced word sense in hand,

we perform the word sense based LDA for docu-

ment clustering.

• Collaborative SLDA (PCo-SLDA): We identify

the generativestory as two iterativelyinterchange-

able steps. Given an observed topic, we generate

the word sense from the topic. Given an observed

word sense, we generate the topic for each word

sense, where the word sense is a point estimate

from the mode of the distribution.

We describe all our models in two perspectives.

First, we performword sense induction (WSI) on each

word. Second, documents are represented (DR) as a

collection of word senses, which are then used to infer

topics. For presentation convenience, we first brief

the classic LDA model (Blei et al., 2003).

2.1 The LDA Model

Given D documents and W word types, the generative

story for LDA with Z topics is as follows:

1. For each topic z:

(a) choose φ

z

∼ Dir(β).

2. For each document d

i

:

(a) choose θ

d

i

∼ Dir(α).

(b) for each word w

ij

in document d

i

:

i. choose topic z

ij

∼ Mult(θ

d

i

).

ii. choose word w

ij

∼ Mult(φ

z

ij

).

where d

i

refers to i-th document in the corpus; w

ij

refers to j-th word in document d

i

; z

ij

refers to the

topic that word w

ij

is assigned; α and β are hyper-

parameters of the model; φ

z

ij

and θ

d

i

are per topic

word distributions and per document topic distribu-

tions respectivelywhich are drawn from Dirichlet dis-

tributions.

For inference, Collapsed Gibb Sampling (Griffiths

and Steyvers, 2004) is widely used to estimate the

posterior distribution for latent variables in LDA. In

this procedure, the distribution of a topic for the word

w

ij

= w based on values of other data is computed as

follows.

P(z

ij

= z|z

−ij

, w

z

−ij

, w

z

−ij

, w) ∝

n

d

i

−ij,z

+ α

n

d

i

−ij

+ Zα

×

n

w

−ij,z

+ β

n

−ij,z

+Wβ

(1)

In Equation 1 n

d

i

−ij,z

is the number of words that

are assigned topic z in document d

i

; n

w

−ij,z

is the num-

ber of words (= w ) that are assigned topic z; n

d

i

−ij

is

the total number of words in document d

i

and n

−ij,z

is

the total number of words assigned topic z. −ij in all

the above variables refers to excluding the count for

word w

ij

.

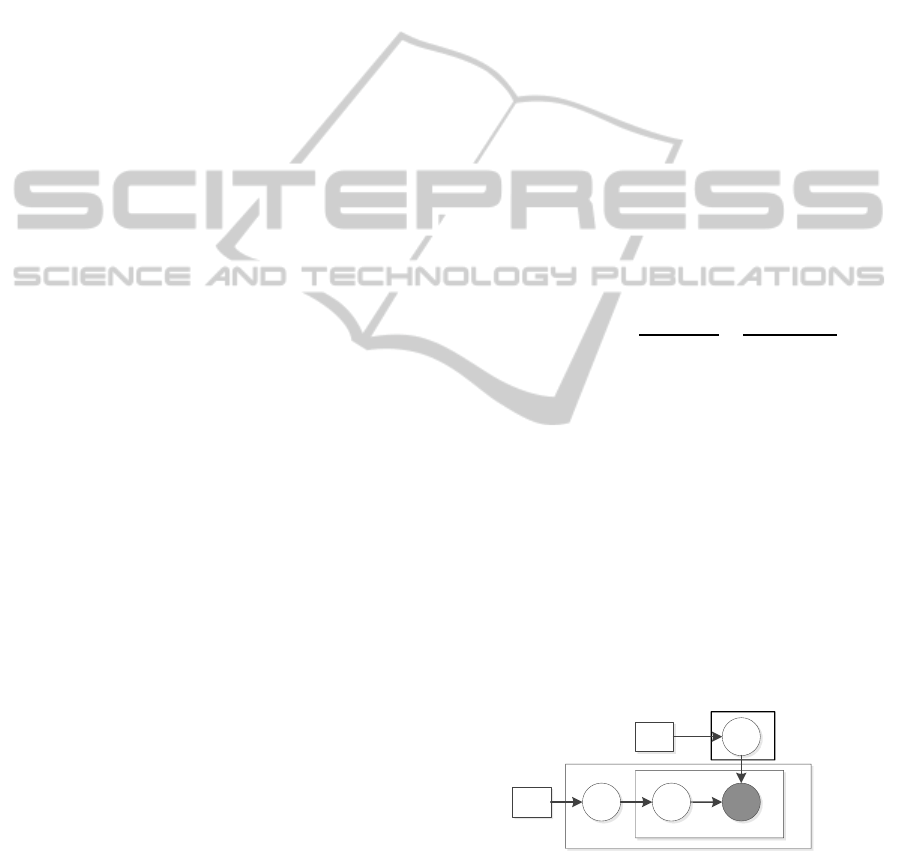

2.2 SA-SLDA

In the SA-SLDA model, WSI and DR are considered

as standalone modules, where DR takes the output

(i.e., word senses) of WSI as input (see Figure 2).

s

ij

α

θ

di

z

ij

Z

Z

Z

¶

z

β

N

di

D

Figure 2: Illustration of the SA-SLDA model.

2.2.1 WSI with HDP

In SA-SLDA, we follow (Yao and Van Durme, 2011)

to employ Hierarchical Dirichlet Processes (HDP)

(Teh et al., 2004) for word sense induction. HDP

UsingWordSenseasaLatentVariableinLDACanImproveTopicModeling

533

known as a nonparametric Bayesian method is often

considered to be advantageous over the parametric

methods like LDA, because LDA requires an exter-

nal input to specify the number of topics while HDP

does not. In our case, numbers of word senses differ

amongst different words. Therefore, we favor to em-

ploy HDP for WSI so that we can equip each word

with different number of senses according to their

contexts.

We perform HDP on each word. In this paper,

we define a word on which the WSI algorithm is per-

formed as a target word and words in the context of a

target word as context words of the target word. For

each context v

ij

of the target word w, the sense s

ij

for each word c

ij

in v

ij

has a nonparametric prior G

ij

which is sampled from a base distribution G

w

. H is

a Dirichlet distribution with hyper-parameter ε . The

context word distribution η

s

given a sense s is gener-

ated from H:η

s

∼ H.

Then we simply take mode sense in the sense dis-

tribution as the sense of the target word.

2.2.2 DR with Word Senses

As shown in Figure 2, we replace word with its word

sense in the gray plate. Then the formal procedure

of document representation in SA-SLDA is given as

follows:

1. For each topic z:

(a) choose φ

z

∼ Dir(β).

2. For each document d

i

:

(a) choose θ

d

i

∼ Dir(α).

(b) for each word w

ij

in document d

i

:

i. choose topic z

ij

∼ Mult(θ

d

i

).

ii. choose sense s

ij

∼ Mult(φ

z

ij

).

Similar to LDA, we also use Collapse Gibbs Sam-

pling (Griffiths and Steyvers, 2004) to do inference

for SA-SLDA. In SA-SLDA, we replace the surface

words with the induced word senses. Therefore, the

topic inference is similar to the classic LDA, where

the condition probability P(z

ij

= z|z

−ij

, s

z

−ij

, s

z

−ij

, s) is evaluated

by

P(z

ij

= z|z

−ij

, s

z

−ij

, s

z

−ij

, s) ∝

n

d

i

−ij,z

+ α

n

d

i

−ij

+ Zα

×

n

s

−ij,z

+ β

n

−ij,z

+ Sβ

(2)

In Equation.2, n

s

−ij,z

is the number of senses with

sense s that are assigned topic z , excluding the sense

of the j-th word; S is the number of senses for the data

set.

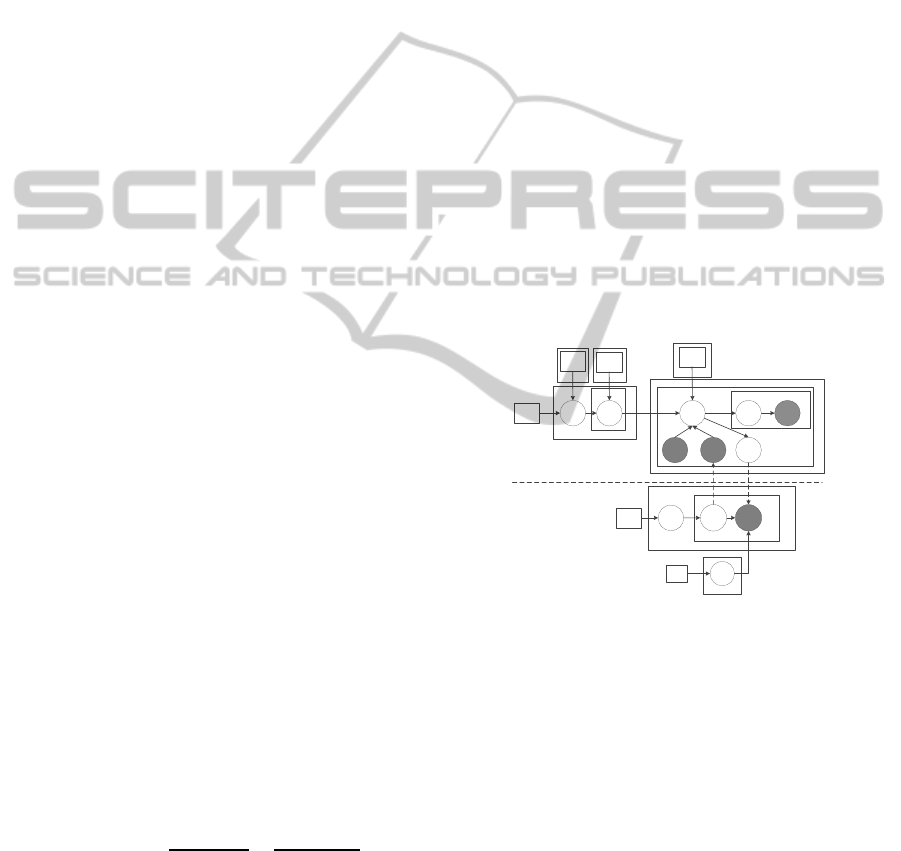

2.3 CO-SLDA

Alternatively, word senses can be incorporated into

LDA in a collaborative manner. We are interested in

whether the topic assigned to a word has a positive

feedback on WSI, which then can be used to refine

the topic distribution. Inspired by this motivation, we

propose a Collaborative SLDA model which takes the

topics of senses from SLDA as the pseudo feedback

for WSI and iteratively infers both topics and word

senses. Specifically, we achieve a point estimate for

the target word in WSI and feed this estimated sense

to DR.

In this model, a three-level HDP algorithm is used

to capture the relationship between word senses and

topics of a target word w (see Figure 3. In the three-

level HDP, for each word type w, we choose for each

topic a probability measure G

wz

which is drawn from

Dirichlet Process DP(ρ

w

, G

w

). For each word w

ij

in document d

i

, given topic z

ij

= z, we use G

wz

as

the base probability measure for the context of w

ij

and draws its own G

ij

from Dirichlet process G

ij

∼

DP(κ

wz

, G

wz

). This means that word w may have dif-

ferent sense distributions in different topics.

c

ijk

κ

w

G

ij

s'

ijk

C

ij

N

di

G

w

H

γ

w

Z

G

wz

ρ

w

s

ij

α θ

di

z

ij

Z

¶

z

β

D

W

W

W

W

z

ij

s

ij

D

N

di

w

ij

Figure 3: Illustration of the SA-SLDA model.

We show the graphical presentation for CO-SLDA

in Figure 3. C

ij

refers to the number of words in

the context window v

j

for word w

ij

in document d

i

.

The above dotted line shows the WSI process while

the below shows the DR process. Given observed

topics {z

ij

}, word senses {s

ij

} are inferred in WSI.

Given observed senses {s

ij

}, topics {z

ij

} are inferred

in DR. The two processes are interchangeably per-

formed. We provide the dashed arrows in Figure 4 to

connect {s

ij

} and {z

ij

} that will change from hidden

to observable during the alternation of two processes.

The word sense induction process is as follows:

1. For each word type w:

(a) choose G

w

∼ DP(γ

w

, H).

2. For each topic z:

ICAART2014-InternationalConferenceonAgentsandArtificialIntelligence

534

(a) choose G

wz

∼ DP(ρ

w

, G

w

).

3. For each document d

i

:

(a) each context v

j

of word w

ij

:

i. choose G

ij

∼ DP(κ

wz

, G

wz

).

(b) For each context word c

k

of target word w

ij

:

i. choose s

′

ijk

∼ G

ij

.

ii. choose c

ijk

∼ Mult(η

s

ijk

).

iii. set s

ij

= argmax

s

P(s

ij

|G

ij

).

The document representation process is the same

as SA-SLDA. Intuitively, the CO-SLDA model is ad-

vantageous over the SA-SLDA model, because one

word may carry different senses in different topics

while in the same topic occurrences of one word often

refer to the same sense.

For inference, we interchangeably infer two

groups of hidden variables in CO-SLDA:

1. Given that the topic for each word sense z

ij

is ob-

served, we infer the sense distribution G

ij

in the

context window around a target word. This is

achieved through the same scheme as (Teh et al.,

2004). Then we estimate s

ij

for the target word as

sense with the highest probability in G

ij

.

2. Given that the word sense z

ij

is observed, we in-

fer the topic z

ij

for each word sense. This can be

achieved using the same inference scheme as SA-

SLDA.

3 EVALUATION

We use document clustering task to evaluate the accu-

racy of topics in our model.

3.1 Setup

Data Set

Three data sets used in our experiments are extracted

from the following two corpora.

1. TDT4: Following (Kong and Graff, 2005), we

use the English documents from TDT2002 and

TDT2003, i.e., TDT41 and TDT42.

2. Reuters: Documents are extracted from Reuters-

21578 (Lewis, 1997) with the most frequent 20

categories, i.e., Reuters20.

System Parameters

All hyper-parameters are tuned in the TDT42 dataset

and the same ones are applied on the other two

datasets as well. In all experiments, we let the Gibbs

sampler burn in for 2000 iterations and subsequently

take samples 20 iterations apart for another 200 itera-

tions.

As we isolate the WSI process from the document

representation process in SA-SLDA, we present the

parameters accordingly. (1) In the WSI step, we set

the HDP hyper-parameters γ

w

, ρ

w

, ε for every word

type to be γ

w

∼ Gamma (1, 0.001), ρ

w

∼ Gamma

(0.01, 0.028), ε=0.1; (2) In the Document represen-

tation step, we set α=1.5 and β=0.1. The topic num-

ber is set as cluster number in each dataset. In sys-

tem CO-SLDA, (1) in the WSI step we set the hyper-

parameters γ

w

, ρ

w

, ε for every word type to be γ

w

∼

Gamma (8,0.1), ρ

w

∼ Gamma (5,1), κ

w

∼ Gamma

(0.1,0.028), ε=0.1; (2) in the DR step, we set α=1.5

and β=0.1. In LDA, We set α=1.5, β=0.1. The topic

number is set to be equal to the cluster number in each

data set.

Evaluation Metrics

In the experiments, we intend to evaluatethe proposed

topic models in document clustering task. Each topic

in the test dataset is considered as a cluster and each

document is clustered into the topic with the highest

probability. We adopt the evaluation criteria proposed

by (Steinbach et al., 2000). The calculation starts

from maximum F-measure of each cluster. The gen-

eral F-measure of a system is the micro-average of all

the F-measures of the system-generated clusters.

3.2 Results and Discussions

Experimental results are presented in Table 1.

Table 1: F-measure values of the proposed models and the

baseline LDA model.

Medel TDT41 TDT42 Reuters20

LDA 0.744 0.867 0.496

SA-SLDA 0.792 0.870 0.512

CO-SLDA 0.825 0.874 0.597

According to the experimental results, we make

two important observations:

Firstly, SA-SLDA outperforms the LDA baseline

in all cases. This indicates that using word senses

rather than surface words improves the document

clustering results. The improvement ascribes to that

words in LDA are viewed as independent and iso-

lated strings, while in SA-SLDA they are facilitated

with more information of word sense according to the

context.

Secondly, CO-SLDA outperforms SA-SLDA in

all data sets. This indicates that the joint inference

process for topics of words and word senses makes a

positive impact for each other. Two reasons are wor-

thy of noting: (1) In common sense, instances of the

UsingWordSenseasaLatentVariableinLDACanImproveTopicModeling

535

same word type in different topics often have differ-

ent senses while instances in the same topic often re-

fer to the same thing. Since CO-SLDA can jointly

infer topics and word senses, instances of the same

word in the same topic are more likely to be assigned

the same sense while instances in different topics are

likely to be assigned differently. As a result, word

senses will be better identified. (2) Using topics as a

pseudo feedback will facilitate the target words with

topic-specific senses. For example, the word elec-

tion only has one sense in general cases. However,

in the TDT42 data set, topics are labeled in a more

fine-grained perspective. For example, the following

two sentences are labeled to be from two differenttop-

ics as the countries of elections are different: Ilyescu

Wins Romanian Elections, Ghana Gets New Demo-

cratically Elected President. With the joint inference

of topic and sense, we can induce the word election

with two senses, i.e., election#1 and election#2, re-

lated to the electing process in Romania and Ghana

respectively. By incorporating these topic-specific

senses, election with context word Romania is identi-

fied as election#1 and more likely to be assigned topic

z

1

while election with context word Ghana is identi-

fied as election#2 and more likely to be assigned z

2

.

4 RELATED WORK

In Vector Space Model (VSM), it is assumed that

terms are independent of each other and the seman-

tic relations between terms are ignored.

Recently, models are proposed to represent docu-

ments in a semantic concept space using lexical on-

tologies, i.e. WordNet or Wikipedia (Hotho et al.,

2003; Gabrilovich and Markovitch, 2007; Huang and

Kuo, 2010). However, the lexical ontologies are diffi-

cult to be constructed and their coverage can be lim-

ited. In contrast, topic models are used as an alterna-

tive for discovering latent semantic space in corpora

based on the per topic word distribution. LDA (Blei

et al., 2003) as a classic topic model identifies top-

ics of documents by evaluating word co-occurrences.

Various topic models based on the LDA framework

have been developed (Wang et al., 2007). However,

those models all employ the surface word as the ba-

sic unit for document, which is lack of the word sense

interpretation for topics. Some work attempt to inte-

grate word semantics from lexical resources into topic

models (Boyd-Graber et al., 2007; Chemudugunta

et al., 2008; Guo and Diab, 2011). Alternatively, our

models are fully unsupervised and do not rely on any

external semantic resources, which will be extremely

applicable for resource poor languages and domains.

5 CONCLUSIONS

In this paper, we propose to represent topics with dis-

tributions over word senses. In order to achieve this

purpose in a fully unsupervised manner without re-

lying on any external resources, we model the word

sense as a latent variable and induced it from corpora

via WSI. We design several models for this purpose.

Empirical results verify that the word senses induced

from corpora can facilitate the LDA model in doc-

ument clustering. Specifically, we find the joint in-

ference model (i.e., CO-SLDA) outperforms the stan-

dalone model (SA-SLDA) as they the estimation of

sense and topic can be collaboratively improved.

In future, we will extend the proposed topic mod-

els for the cross-lingual information retrieval tasks.

We believe that word senses induced from multilin-

gual documents will be helpful in cross-lingual topic

modeling.

ACKNOWLEDGEMENTS

This work is supported by NSFC (61272233). We

thank the reviewers for the valuable comments.

REFERENCES

Blei, D. M., Ng, A. Y., and Jordan, M. I. (2003). La-

tent dirichlet allocation. J. Mach. Learn. Res., 3:993–

1022.

Boyd-Graber, J. L., Blei, D. M., and Zhu, X. (2007). A topic

model for word sense disambiguation. In EMNLP-

CoNLL, pages 1024–1033. ACL.

Chemudugunta, C., Smyth, P., and Steyvers, M. (2008).

Combining concept hierarchies and statistical topic

models. In Proceedings of the 17th ACM conference

on Information and knowledge management, CIKM

’08, pages 1469–1470, New York, NY, USA. ACM.

Dietz, L., Bickel, S., and Scheffer, T. (2007). Unsupervised

prediction of citation influences. In In Proceedings of

the 24th International Conference on Machine Learn-

ing, pages 233–240.

Gabrilovich, E. and Markovitch, S. (2007). Computing se-

mantic relatedness using wikipedia-based explicit se-

mantic analysis. In Proceedings of the 20th inter-

national joint conference on Artifical intelligence, IJ-

CAI’07, pages 1606–1611, San Francisco, CA, USA.

Morgan Kaufmann Publishers Inc.

Griffiths, T. L. and Steyvers, M. (2004). Finding scientific

topics. PNAS, 101(suppl. 1):5228–5235.

Guo, W. and Diab, M. (2011). Semantic topic models:

combining word distributional statistics and dictio-

nary definitions. In Proceedings of the Conference on

Empirical Methods in Natural Language Processing,

ICAART2014-InternationalConferenceonAgentsandArtificialIntelligence

536

EMNLP ’11, pages 552–561, Stroudsburg, PA, USA.

Association for Computational Linguistics.

Hotho, A., Staab, S., and Stumme, G. (2003). Wordnet im-

proves text document clustering. In In Proc. of the

SIGIR 2003 Semantic Web Workshop, pages 541–544.

Huang, H.-H. and Kuo, Y.-H. (2010). Cross-lingual docu-

ment representation and semantic similarity measure:

a fuzzy set and rough set based approach. Trans. Fuz

Sys., 18(6):1098–1111.

Kong, J. and Graff, D. (2005). Tdt4 multilingual broad-

cast news speech corpus. Linguistic Data Consortium,

http://www. ldc. upenn. edu/Catalog/CatalogEntry.

jsp.

Lewis, D. D. (1997). Reuters-21578 text categorization test

collection, distribution 1.0. http://www. research. att.

com/˜ lewis/reuters21578. html.

Steinbach, M., Karypis, G., and Kumar, V. (2000). A com-

parison of document clustering techniques. In In KDD

Workshop on Text Mining.

Teh, Y. W., Jordan, M. I., Beal, M. J., and Blei, D. M.

(2004). Hierarchical dirichlet processes. Journal of

the American Statistical Association, 101.

Wang, X., McCallum, A., and Wei, X. (2007). Topical n-

grams: Phrase and topic discovery, with an applica-

tion to information retrieval. In Proceedings of the

2007 Seventh IEEE International Conference on Data

Mining, ICDM ’07, pages 697–702, Washington, DC,

USA. IEEE Computer Society.

Yao, X. and Van Durme, B. (2011). Nonparametric

bayesian word sense induction. In Proceedings of

TextGraphs-6: Graph-based Methods for Natural

Language Processing, pages 10–14. Association for

Computational Linguistics.

UsingWordSenseasaLatentVariableinLDACanImproveTopicModeling

537