Development Process and Evaluation Methods for Adaptive Hypermedia

Martin Balík and Ivan Jelínek

Department of Computer Science and Engineering, Faculty of Electrical Engineering, Czech Technical University

Karlovo náměstí 13, 121 35 Prague, Czech Republic

Keywords:

Adaptive Hypermedia, Personalization, Development Process, Software Framework, Evaluation.

Abstract:

Adaptive Hypermedia address the fact that each individual user has different preferences and expectations.

Hypermedia need adaptive features to provide an improved user experience. This requirement results

in an increased complexity of the development process and evaluation methodology. In this article,

we first discuss development methodologies used for hypermedia development in general and especially

for user-adaptive hypermedia development. Second, we discuss evaluation methodologies that constitute

a very important part of the development process. Finally, we propose a customized development process

supported by ASF, a special framework designed to build Adaptive Hypermedia Systems.

1 INTRODUCTION

Software development is a complex process in which modeling and specification on various levels have become a necessity and a standard approach. Web-based hypermedia systems require special attention, which has led to the emergence of a new line of research – Web Engineering (Deshpande et al., 2002). A number of development methodologies have been created to offer new techniques, models, and notations. Additional challenges came with a new category of intelligent, user-adaptive applications.

User-adaptive systems monitor users’ behavior

and keep track of each individual user’s character-

istics, preferences, knowledge, aims, etc. Some

of the systems focus on providing the user with

relevant items based on the browsing history. Other

systems focus mainly on improving the human-

computer interactions. The collection of personal

data used in the adaptation process is associated

with a specific user. It is called the User Model.

While modeling the adaptive system, it is necessary

to separate the non-adaptive and user-specific aspects

of the application.
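The separation of non-adaptive and user-specific aspects can be illustrated with a minimal sketch. All class, field, and function names below are hypothetical and are not taken from any system discussed in this paper; the recommendation heuristic (unvisited pages, least-known concept first) is only an assumption chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Page:
    """Non-adaptive part: identical for every user."""
    page_id: str
    title: str
    concept: str


@dataclass
class UserModel:
    """User-specific part: data collected about one individual user."""
    user_id: str
    preferences: Dict[str, str] = field(default_factory=dict)
    knowledge: Dict[str, float] = field(default_factory=dict)  # concept -> level in [0, 1]
    visited: List[str] = field(default_factory=list)           # browsing history

    def record_visit(self, page_id: str) -> None:
        self.visited.append(page_id)


def recommend(pages: List[Page], user: UserModel) -> List[Page]:
    """Return unvisited pages, ordered by the user's knowledge of their concept (lowest first)."""
    unvisited = [p for p in pages if p.page_id not in user.visited]
    return sorted(unvisited, key=lambda p: user.knowledge.get(p.concept, 0.0))
```

The domain objects (`Page`) carry no user data, so the same page set can be served with or without the user model.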

In our work, we focus on Adaptive Hypermedia

Systems (AHS). Typical adaptation techniques used

in AHS are categorized as content adaptation, adap-

tive presentation, and adaptive navigation (Knutov

et al., 2009). The categories overlap, as some

of the techniques do not change information

or the possible navigation, but only offer suggestions

to the user by changing the presentation. The de-

sign of adaptation techniques needs to be considered

within the development process.

User-adaptive systems bring additional complex-

ity into the development process and lay higher

demands on system evaluation. This needs to be

considered through all development phases. In order

to guarantee the required behavior, we have to ensure

that the system works correctly during and after adap-

tations (Zhang and Cheng, 2006).

Evaluation of adaptive systems is an important

part of their development process and should not be

underestimated. Currently, there is not much con-

sistency in the evaluation of AHS (Mulwa et al.,

2011). It is important to use an appropriate method

for evaluation (Gena and Weibelzahl, 2007). Evalua-

tion should ensure savings in terms of time and cost,

completeness of system functionality, minimizing re-

quired repair efforts, and improving user satisfaction

(Nielsen, 1993). AHSs are interactive, hypermedia-based systems, so methods similar to those used in the human-computer interaction (HCI) field are usually applied. However, user-adaptive systems introduce new challenges.

The remainder of this paper is structured as follows. Section 2 reviews the current state of the art of development and evaluation methodologies. Section 3 proposes an AHS development process and associates it with the use of the Adaptive System Framework. Finally, Section 4 concludes the paper by summarizing the results of the research and indicating directions for future work.


Balík M. and Jelínek I.

Development Process and Evaluation Methods for Adaptive Hypermedia.

DOI: 10.5220/0004858401070114

In Proceedings of the 10th International Conference on Web Information Systems and Technologies (WEBIST-2014), pages 107-114

ISBN: 978-989-758-024-6

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

2 RELATED WORK

In this section, we review existing approaches used in AHS development. First, we discuss development methodologies, concentrating on design and system architecture. Second, we review evaluation methodologies and problems related specifically to the evaluation of user-adaptive systems.

2.1 AHS Development Methodologies

Similar to development of other software prod-

ucts, adaptive-system development needs to be based

on standardized methods. For the design of hy-

permedia applications, several methods have been

developed. In the early period of hypermedia

systems, hypermedia-specific design methodologies

were proposed, for example, Hypermedia Design

Method (HDM) (Garzotto et al., 1993), Relation-

ship Management Methodology (RMM) (Isakowitz

et al., 1995), Enhanced Object-Relationship Model

(EORM) (Lange, 1994) and Web Site Design Method

(WSDM) (De Troyer and Leune, 1998). An overview of additional and more recent development methodologies for software and Web engineering can be found in (Aragón et al., 2013; Thakare, 2012). However, the methodologies developed for hypermedia systems in general do not take adaptivity and user modeling into account. Therefore, an extended adaptation-aware methodology is needed to improve the AHS development process.

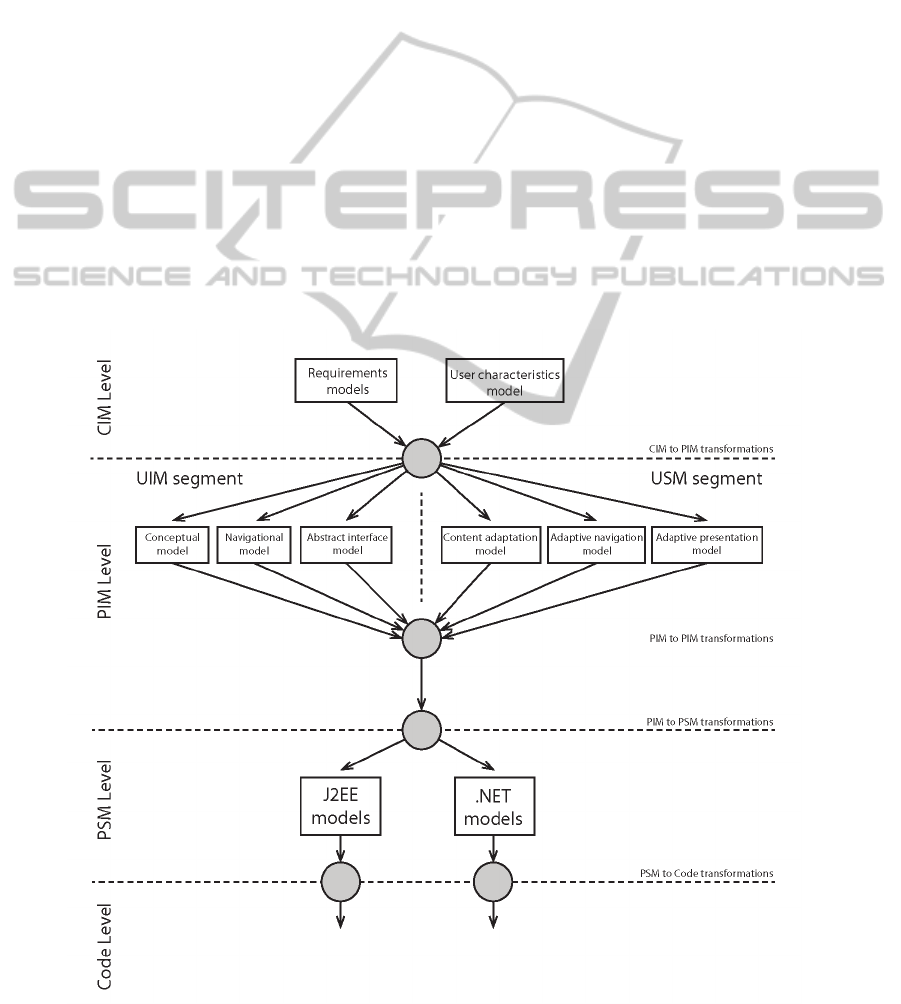

Fig. 1 shows the typical phases of a software-

development process. To abstract complex problems

of the system design, models are used. The models

help to create and validate the software architecture.

Figure 1: Typical phases of a software devel. process.

Model-Driven Architecture (MDA) (Miller and

Mukerji, 2003) was proposed by the Object Manage-

ment Group (OMG) in 2001. This architecture de-

fines four model levels. Computation-Independent

Model (CIM) describes behavior of the system in a

language appropriate for users and business analysts.

This level includes models of requirements and busi-

ness models. Platform-Independent Model (PIM) is

still independent of a specific computer technology,

yet, unlike the CIM, it includes information essential for solving the assignment using information technologies. The PIM is usually created by a computer analyst. The benefits of this level are its reusability across various implementations and its platform independence.

Platform-Specific Model (PSM) combines the PIM

with a particular technology-dependent solution. This

model can include objects tightly related to a specific programming language environment, e.g., constructors, attribute accessors, or references to classes included in the development platform packages. The model is an abstraction of the source code structure and is used as a base for implementation. Code is the final and most concrete level of MDA and includes the implementation of the system.

Adaptive systems usually access a large information base of domain objects, and their behavior is based on information stored in the user model. Such systems are quite complex; therefore, a development methodology oriented toward adaptive hypermedia is needed.

An object-oriented approach to designing adaptive hypermedia systems seems to be the most appropriate. Object-oriented design is best suited for systems undergoing complex transitions over time (Papasalouros and Retalis, 2002). For modeling object-oriented software systems, there is a standard, widely adopted, formally defined language – UML (Booch et al., 1999). To be able to express a variety of system models, UML provides extension mechanisms for the definition of model elements, the description of the notation, and the expression of model semantics. These extensions are stereotypes, tagged values, and constraints. UML stereotypes are the most important extension mechanism.

There are some projects that utilize UML mod-

eling in the area of adaptive systems. The Munich

Reference Model (Koch and Wirsing, 2001) is an ex-

tension of the Dexter model. It was proposed in the

same period as the well-known Adaptive Hyperme-

dia Application Model (AHAM) (De Bra et al., 1999)

and in a similar way adds a user model and an adapta-

tion model. The main difference between The Munich

Reference Model and AHAM is that AHAM speci-

fies an adaptation rule language, while The Munich

Reference Model uses object-oriented specification.

It is described with the Unified Modeling Language

(UML) which provides the notation and the object-

oriented modeling techniques.

Object-Oriented Hypermedia Design Method

(OOHDM) (Rossi and Schwabe, 2008) is based

on both HDM and the object-oriented paradigm.

It allows the designer to specify a Web application

by using several specialized meta-models. OOHDM

proposed dividing hypermedia design into three

models – a conceptual model, a navigational model

and an abstract interface model. When used to de-

sign a user-adaptive application, most of the personal-

ization aspects are captured in the conceptual model.


As an example, we can mention a class model of the

user and user group models (Barna et al., 2004).

Another method to specify design of complex

Web sites is WebML (Ceri et al., 2000). For the phase

of conceptual modeling, WebML does not define its

own notation and proposes the use of standard mod-

eling techniques based on UML. In the next phase,

the hypertext model is defined. This model defines

the Web site by means of two sub-models – compo-

sition model and navigation model. Development of

the presentation model defining the appearance of the

Web site is the next step. Part of the data model is the personalization sub-schema. The content management model specifies how the information is updated dynamically based on the user’s actions. Finally, the presentation model specifies how the system has to be adapted to each user’s role (Aragón et al., 2013).

For the purpose of interoperability, storage models can be represented by a domain ontology. Therefore, there is a need to represent ontology-based models in a standardized way. Researchers have already identified this issue and proposed a UML profile for OWL together with feasible mappings that support the transformation between OWL ontologies and UML models in both directions (Brockmans et al., 2006). This is achieved by means of UML stereotypes. Table 1 provides the mappings for the most important constructs.

Table 1: UML and OWL mappings (Brockmans et al., 2006).

| UML Feature | OWL Feature | Comment |
| --- | --- | --- |
| class, type | class | |
| instance | individual | |
| ownedAttribute, binary association | property, inverseOf | |
| subclass, generalization | subclass, subproperty | |
| N-ary association, association class | class, property | Requires decomposition |
| enumeration | oneOf | |
| disjoint, cover | disjointWith, unionOf | |
| multiplicity | minCardinality, maxCardinality, FunctionalProperty, InverseFunctionalProperty | OWL cardinality restrictions declared only for range |
| package | ontology | |
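The mappings of Table 1 can be encoded as a simple lookup structure. The following is only an illustrative sketch: the row grouping follows the table, while the names `MAPPINGS` and `owl_candidates` are assumptions made for this example and do not belong to the UML profile for OWL or to any existing tool.

```python
# Each row: (UML features, candidate OWL features, comment), following Table 1.
MAPPINGS = [
    (("class", "type"), ("class",), ""),
    (("instance",), ("individual",), ""),
    (("ownedAttribute", "binary association"), ("property", "inverseOf"), ""),
    (("subclass", "generalization"), ("subclass", "subproperty"), ""),
    (("N-ary association", "association class"), ("class", "property"),
     "Requires decomposition"),
    (("enumeration",), ("oneOf",), ""),
    (("disjoint", "cover"), ("disjointWith", "unionOf"), ""),
    (("multiplicity",),
     ("minCardinality", "maxCardinality", "FunctionalProperty", "InverseFunctionalProperty"),
     "OWL cardinality restrictions declared only for range"),
    (("package",), ("ontology",), ""),
]


def owl_candidates(uml_feature):
    """Return (candidate OWL features, comment) for a UML feature listed in Table 1."""
    for uml_features, owl_features, comment in MAPPINGS:
        if uml_feature in uml_features:
            return owl_features, comment
    raise KeyError(f"no mapping listed for UML feature: {uml_feature}")
```

The lookup returns all OWL candidates of a row; selecting the right one for a concrete model element still depends on the rules of the profile itself.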

Special attention should also be devoted to the development of the content of adaptive systems. As has been observed many times, authoring of adaptive systems is a difficult task (Cristea, 2003). The adaptive-system development process can be divided into four phases: Conceptual Phase, Presentation Phase, Navigation Phase, and Learning Phase (Medina et al., 2003).

During the conceptual phase, the author creates basic page elements. In the presentation phase, the structure of page elements is defined. In the navigation phase, the navigational map is created. Finally, in the learning phase, the adaptive behavior is defined.

2.2 AHS Evaluation Methodologies

Recent research has identified the importance of evaluating user-adaptive systems. Reviews on the topic have been published by several researchers (Gena, 2005; Velsen et al., 2008; Mulwa et al., 2011; Albert and Steiner, 2011). Due to the complexity of adaptive systems, their evaluation is difficult. The main challenge lies in evaluating the adaptive behavior in particular. Evaluation of adaptive systems is a very important part of the development process, and it is essential that correct methods and evaluation metrics are used.

Usability is evaluated by the quality of interac-

tion between a system and a user. The unit of mea-

surement is the user’s behavior (satisfaction, comfort)

in a specific context of use (Federici, 2010). De-

sign of adaptive hypermedia systems might violate

standard usability principles such as user control and

consistency. Evaluation approaches in HCI assume

that the interactive system’s state and behavior are

only affected by direct and explicit action of the user

(Paramythis et al., 2010). This, however, is not true in

user-adaptive systems.

Personalization and user-modeling techniques aim

to improve the quality of user experience within the

system. However, at the same time these techniques

make the systems more complex. By comparing the

adaptive and non-adaptive versions, we should deter-

mine the added benefits of the adaptive behavior.

General (non-adaptive) interactive systems acquire from the user only data strictly related to the performed task. Adaptive systems, however, require much more information. This information might not be required for the current task and can be completely unrelated to the current context. This is caused by the continuous observation of the user by the system. Adaptive systems can monitor visited pages, keystrokes, or mouse movement. Users can even be asked directly for seemingly superfluous information. Within the evaluation process, it is challenging to identify the purpose and correctness of such meta-information.

An important difference between the evaluation of adaptive and non-adaptive systems is that the evaluation of adaptive systems cannot consider the system only as a whole. At least two layers have to be evaluated separately (Gena, 2005).

In the next paragraphs, we will summarize the

most important methods used to evaluate adaptive hy-

permedia systems.

Comparative Evaluation

It is possible to assess the improvements gained by adaptivity by comparing the adaptive system with a non-adaptive variant of the system (Höök, 2000). However, such a comparison is not easy to make. It would be necessary to decompose the adaptive application into adaptive and non-adaptive components. Adaptive features are usually an integral part of the system, and the non-adaptive version could lead to unsystematic and suboptimal results. Additionally, it might not be clear why the adaptive version is better.

In the case of adaptive learning, a typical application area of adaptation, it is possible to compare the system with a different learning technology or with traditional learning methods. However, the evaluation of adaptation effects can be distorted by the look and feel or by a novelty effect (Albert and Steiner, 2011).
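A comparison between an adaptive and a non-adaptive group is often summarized by an effect size. The sketch below uses Cohen's d with a pooled standard deviation; this particular measure is our assumption for illustration and is not prescribed by the cited works. The function name and the choice of metric (e.g., task completion time) are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(adaptive, control):
    """Standardized mean difference between two independent groups.

    Each group needs at least two measurements, and the pooled variance
    must be non-zero; otherwise the computation fails.
    """
    n1, n2 = len(adaptive), len(control)
    pooled_var = ((n1 - 1) * variance(adaptive) + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(adaptive) - mean(control)) / sqrt(pooled_var)
```

As noted above, such a number only indicates that the groups differ; it does not explain why the adaptive version performs better or worse.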

Empirical Evaluation

Empirical evaluation, also known as the controlled experiment, appraises theories by observations in experiments. This approach can help to discover failures in interactive systems that would otherwise remain undiscovered. For software engineering, formal verification and correctness are important methods. However, empirical evaluation is an important complement that can contribute significantly to improvement. Empirical evaluation has not been applied to user modeling techniques very often (Weibelzahl and Weber, 2003). However, recent studies point out the importance of this approach (Paramythis et al., 2010). This method of evaluation is derived from empirical science and from cognitive and experimental psychology (Gena, 2005). In the area of adaptive systems, the method is usually used for the evaluation of interface adaptations.

Layered Evaluation

For the evaluation of adaptive hypermedia systems, approaches that consider the system “as a whole” and focus on an “end value” are usually used. Examples of such values are the user’s performance or the user’s satisfaction. The problem with this approach is that evaluating the system as a whole requires building the whole system before evaluation. As a result, the evaluation cannot guide authors during the development process. Another problem is that the reasons behind unsatisfactory adaptive behavior are not evident.

A solution to the mentioned problems was proposed by Brusilovsky in (Brusilovsky and Sampson, 2004) as a model-based evaluation approach called layered evaluation. In the exemplary case, two layers were defined – a user modeling layer and an adaptation decision making layer. User modeling (UM) is the process in which information about the user is acquired by monitoring user-computer interaction. Adaptation decision making is the phase in which specific adaptations are selected based on the results of the UM phase. Both processes are closely interconnected. However, when evaluating the system as a whole, it is not evident which of the phases has been unsuccessful. This is solved by decomposing the evaluation into layers and evaluating both phases separately. This also has the benefit that the results of the UM process evaluation can be reused for different decision making modules.
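The two-layer decomposition can be sketched in a few lines. Everything in this example is hypothetical: the user modeling heuristic (a concept counts as known after two visits), the decision rule (hide explanations of known concepts), and the agreement score are assumptions chosen only to show how each layer can be scored separately.

```python
def agreement(predicted, expected):
    """Fraction of keys in `expected` on which both mappings agree."""
    hits = sum(1 for key, value in expected.items() if predicted.get(key) == value)
    return hits / len(expected)


def infer_user_model(visited_concepts):
    """Hypothetical UM layer: a concept counts as known after two or more visits."""
    counts = {}
    for concept in visited_concepts:
        counts[concept] = counts.get(concept, 0) + 1
    return {concept: n >= 2 for concept, n in counts.items()}


def decide_adaptation(user_model):
    """Hypothetical decision layer: hide explanations of known concepts."""
    return {
        concept: "hide_explanation" if known else "show_explanation"
        for concept, known in user_model.items()
    }


def layered_evaluation(visited_concepts, true_knowledge, expected_adaptations):
    """Score the UM layer and the decision layer independently."""
    um_score = agreement(infer_user_model(visited_concepts), true_knowledge)
    # The decision layer receives the reference user model, so UM errors cannot leak into its score.
    decision_score = agreement(decide_adaptation(true_knowledge), expected_adaptations)
    return um_score, decision_score
```

A low first score combined with a high second score would point to the user modeling layer as the source of unsatisfactory adaptations, which an end-value evaluation of the whole system could not reveal.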

Layered evaluation has gained a high level of attention in the adaptive hypermedia research community. This reaffirms the claim that the evaluation of adaptive systems entails some inherent difficulties (Mulwa et al., 2011). The original idea is often used by authors to justify the experimental designs of their evaluation studies.

Process-oriented Evaluation

Evaluation should be considered an inherent part of the development cycle. Continuous evaluation should range from the very early phases of the project to its end. Evaluation should start with requirements analysis and continue at the prototype level. Evaluation of initial implementations is referred to as formative evaluation. Identifying issues early can greatly reduce development costs. The quality of the overall system is evaluated in the final phase of the development cycle, which is referred to as summative evaluation. Current evaluations of adaptive systems focus mostly on summative evaluation. To ensure that the user’s needs are sufficiently reflected, formative evaluation must be used more intensively.

User-centered Evaluation

For adaptive systems, user-centered evaluation approaches are especially recommended (Velsen et al., 2008).

The following are typical user-centered evaluation methods:

• Questionnaires

Questionnaires collect data from users, who answer a fixed set of questions. They can be used to collect global impressions or to identify problems. Their advantage is that a large number of participants can be accommodated (compared to interviews).

• Interviews

In interviews, participants are asked questions by an interviewer. Interviews can identify individual and situational factors and help explain why a system will or will not be adopted.

• Data Log Analysis

The log analysis can focus on user behavior or user performance. It is strongly advised to combine this method with a qualitative user-centered evaluation.

• Focus Groups and Group Discussions

Groups of participants discuss a fixed set of top-

ics, and the discussion is led by a moderator. This

method is suitable for gathering a large amount of

qualitative data in a short time.

• Think-aloud Protocols

Participants are asked to say their thoughts out

loud while using the system.

• Expert Reviews

The system is reviewed by an expert, who gives an opinion on it.
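Of the methods listed above, data log analysis is the one most directly supported by code. The following minimal sketch aggregates a log into page visit counts (behavior) and time spent per user (a simple performance proxy). The log format, a sequence of `(user_id, page_id, seconds)` tuples, is an assumption made for this example.

```python
from collections import Counter, defaultdict


def summarize(log):
    """Aggregate (user_id, page_id, seconds) entries into visit counts and time per user."""
    visits = Counter()
    time_spent = defaultdict(float)
    for user_id, page_id, seconds in log:
        visits[page_id] += 1
        time_spent[user_id] += seconds
    return visits, dict(time_spent)
```

Such aggregates describe what users did, but not why; this is the reason for combining log analysis with a qualitative method.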

3 AHS DEVELOPMENT PROCESS

The development methodologies mentioned in Section 2.1 were developed for non-adaptive hypermedia systems and therefore do not provide sufficient support for the adaptation process. Adding adaptive features increases the design complexity. Without adequate development support, the application can become unmaintainable, or its behavior can become inconsistent.

As an example of such a deficiency, the OOHDM methodology allows user-role-based personalization as part of the conceptual model. However, there is no clear separation of the user-adaptive behavior. Although WebML defines an explicit personalization model for users and user groups, it lacks means for expressing and separating various adaptation methods. Other legacy development methodologies do not consider personalization at all.

In a development methodology, two important

components can be identified. One of them is the lan-

guage, which can be used by a designer to model

the different aspects of the system. The other com-

ponent is the development process, which acts as

the dynamic, behavioral part. The development pro-

cess determines what activities should be carried

out to develop the system, in what order and how.

To specify the development process for user-adaptive

hypermedia systems, we follow the model-driven

architecture (MDA).

Fig. 2 depicts the MDA adapted to user-adaptive

hypermedia systems engineering. The principles are

visualized as a stereotyped UML activity diagram

based on the diagram presented in (Koch et al., 2006).

The process starts with the Computation-Independent

Model (CIM) that defines requirements models and

user characteristics model. Platform-Independent

Model (PIM) is divided into two segments. User In-

dependent Model (UIM) describes the system with-

out its adaptation features and is equivalent to the

standard web engineering design methodology. Three

models, based on OOHDM, are created – a conceptual model, a navigational model, and an abstract interface model. The other segment is the User Specific Model (USM). The USM consists of three sub-models that are patterned on the adaptation method categories (Knutov et al., 2009) – a content adaptation model, an adaptive navigation model, and an adaptive presentation model.

The user-specific PIM sub-models are closely re-

lated with our theoretical basis of adaptive hyper-

media architecture – the Generic Ontology-based

Model for Adaptive Web Environments (GOMAWE).

The adaptation function, defined as a transformation between default and adapted hypermedia elements, is the basis for content adaptation. Transformations are defined by Inference Rules. Adaptive navigation defines transformations within the navigational model and results in the Link-Adaptation Algorithms in subsequent modeling phases. Adaptive presentation is modeled as transformations within the Adaptive Hypermedia Document Template. For formal definitions of GOMAWE, see (Balík and Jelínek, 2013b).

After the models for both the user-independent and user-specific segments have been defined separately, they can be transformed and merged to form the “big picture” of the system. The next step is transforming the PIM into the Platform-Specific Model (PSM). As examples, we show Java and .NET models, but there are many other possible platforms. From the PSM, program code can possibly be generated.

While the PIM usually depends to a large extent on UML and UML profiles, which provide a standard abstract model notation, the PSM should refer to the software framework packages used to simplify development on a specific platform. In our previous work, we have proposed a software framework intended to support the development of user-adaptive hypermedia systems. The Adaptive System Framework (ASF) (Balík and Jelínek, 2013a) defines a fundamental adaptive hypermedia system architecture and implements the most common adaptive system components.

One of the important ASF components is the user-specific data storage. Centralized user model management is beneficial for application development. Using the adaptation manager, the user profile and user model properties can be accessed from any component of the application. Another part of the data-storage layer is the rule repository. A rule-repository manager provides an interface for accessing and evaluating the inference rules. This interface can be utilized in the adaptation algorithms; e.g., the content adaptation algorithm can use conditional rules to find alternative content for a specific user.
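The interplay of a rule repository and a content adaptation algorithm can be sketched as follows. This is not the ASF API: the classes `Rule` and `RuleRepository`, the function `adapt_content`, and the representation of the user model as a plain dictionary are all hypothetical and serve only to illustrate the idea of conditional rules selecting alternative content.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

UserModel = Dict[str, object]


@dataclass
class Rule:
    """Hypothetical inference rule: if `condition` holds for the user, `target` is replaced by `alternative`."""
    target: str
    condition: Callable[[UserModel], bool]
    alternative: str


class RuleRepository:
    """Stores rules and evaluates them against a user model."""

    def __init__(self) -> None:
        self._rules: List[Rule] = []

    def add(self, rule: Rule) -> None:
        self._rules.append(rule)

    def first_match(self, target: str, user: UserModel) -> Optional[Rule]:
        """Return the first stored rule for `target` whose condition holds, or None."""
        for rule in self._rules:
            if rule.target == target and rule.condition(user):
                return rule
        return None


def adapt_content(element_id: str, user: UserModel, repo: RuleRepository) -> str:
    """Return the identifier of the alternative element, or the default one if no rule applies."""
    rule = repo.first_match(element_id, user)
    return rule.alternative if rule else element_id
```

In this sketch, an element without a matching rule is returned unchanged, so the default (non-adapted) content remains the fallback.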

The design of the application core based on the

ASF framework consists of the following important

steps:

1. Definition of the domain objects and their rela-

tions

2. Definition of the user profile and user model at-

tributes

3. Design of the adaptive algorithms for the desired

behavior

4. Configuration of data sources

5. Binding the data results either to the application

logic or directly to the adaptive UI components

All the steps are supported by the ASF framework. Based on the UML model, the developer implements domain objects by using support classes of the framework. The user data storage needs only a data model specification (preferably as an ontology). Adaptive algorithms can be reused or extended. Finally, user interface components can be used to support the presentation.

The implemented user-adaptive application needs

to be evaluated, and evaluation should be an integral

part of the development process. Various methods

mentioned in Section 2.2 can be used.

Based on the evaluation methodology proposed in (Lampropoulou et al., 2010), we use a three-phase evaluation as part of the development process. The first phase is a short empirical study; in the second phase, qualitative and quantitative measurements are performed; and finally, the third phase evaluates subjective comments of test session participants. For the purpose of AHS evaluation, we extend the second and third phases with a comparison against a control group of users of a non-adaptive system.

Figure 2: MDA structure for user-adaptive hypermedia systems engineering.

Typical adaptive system evaluation is based on a comparison between the adaptive and non-adaptive versions of the application. The ASF framework is well designed for such a comparative evaluation. Each adaptation-type algorithm strategy supports a non-adaptive algorithm version. This feature can be used as an additional user-accessible preference setting, or it can be administered for special purposes, e.g., to support the adaptation evaluation session.
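One common way to pair every adaptive algorithm with a non-adaptive counterpart is the strategy pattern. The sketch below shows the idea for link ordering; the class names, the `interests` key in the user model, and the `make_strategy` switch are hypothetical and do not reproduce the ASF implementation.

```python
from abc import ABC, abstractmethod


class LinkAdaptationStrategy(ABC):
    """Common interface for the adaptive and non-adaptive variants."""

    @abstractmethod
    def order_links(self, links, user_model):
        ...


class NonAdaptiveLinks(LinkAdaptationStrategy):
    def order_links(self, links, user_model):
        # Control condition: keep the author's original order.
        return list(links)


class InterestSortedLinks(LinkAdaptationStrategy):
    def order_links(self, links, user_model):
        # Adaptive condition: links with higher recorded interest come first.
        interests = user_model.get("interests", {})
        return sorted(links, key=lambda link: -interests.get(link, 0.0))


def make_strategy(adaptive_enabled):
    """Select the variant, e.g., from a user preference or an evaluation session setting."""
    return InterestSortedLinks() if adaptive_enabled else NonAdaptiveLinks()
```

Because both variants share one interface, the rest of the application does not need to know which group a participant belongs to.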

To be meaningful, the evaluation needs to be performed with a representative group of users. For such purposes, adaptive educational applications, in which a large number of students can participate, are highly appropriate. Many of the typical aspects of adaptive applications can be simulated and evaluated by students. The tutorials can include theoretical tests, practical assignments, or test questions used to review the knowledge of students.

In our adaptive e-learning prototype, we focus mainly on user-centered evaluation. In the first evaluation phase, the students were asked about their preferences regarding the online curriculum. The questions included their preferences among the adaptation techniques used. They were also asked whether their results should be available to the tutor with all details, in the form of whole-class statistics, or completely hidden. In the second evaluation phase, we used data log analysis to observe the behavior of users, their progress in knowledge, and their selected preferences. A session with multiple students is suitable for measuring system performance, identifying possible bottlenecks, and comparing the adaptive system with the non-adaptive alternative. The evaluation sessions are usually combined with questionnaires, in which students answer questions related to the application content and can provide feedback about their satisfaction or about issues they encountered while using the system. This is the last of the three evaluation phases. Afterwards, all the collected data are analyzed, and the results provide feedback for system customization and improvements.

4 CONCLUSION

Design, modeling, and evaluation are fundamental steps in the development process of software products. Web technologies and personalization requirements add more complexity to the process, and specialized methodologies are needed. In this paper, we have given an overview of existing methodologies and their use in the context of user-adaptive systems. Further, we have proposed a special methodology for adaptive hypermedia, based on MDA and OOHDM. The development methodology was extended to include the aspects of user-adaptive systems. The AHS-specific methodology is important for improving the development effectiveness and the quality of the resulting product.

In our future work, we aim to use the methodology in the development of additional prototypes based on ASF. We will apply the framework to different application types, and we will focus in more detail on the recommendation-based adaptation features of adaptive systems. Further, we want to integrate the learning curriculum application with other systems and assessments used in the courses, and we want to utilize the ontology-based data maintained by the adaptive systems to exchange the user models of students.

ACKNOWLEDGEMENTS

The results of our research form a part of the scientific

work of a special research group WEBING.

1

REFERENCES

Albert, D. and Steiner, C. M. (2011). Reflections on the

Evaluation of Adaptive Learning Technologies. In

Proceedings of the IEEE International Conference on

Technology for Education (T4E), pages 295–296.

Aragón, G., Escalona, M.-J., Lang, M., and Hilera, J. R.

(2013). An Analysis of Model-Driven Web Engineer-

ing Methodologies. Int. Journal of Innovative Com-

puting, Information and Control, 9(1):413–436.

Balík, M. and Jelínek, I. (2013a). Adaptive System Framework: A Way to a Simple Development of Adaptive Hypermedia Systems. In The Fifth International Conference on Adaptive and Self-Adaptive Systems and Applications (ADAPTIVE2013), pages 20–25, Valencia, Spain. IARIA.

Balík, M. and Jelínek, I. (2013b). Generic Ontology-Based

Model for Adaptive Web Environments: A Revised

Formal Description Explained within the Context of

its Implementation. In Proceedings of the 13th IEEE

International Conference on Computer and Informa-

tion Technology (CIT2013), Sydney, Australia.

Barna, P., Houben, G.-J., and Frasincar, F. (2004). Specification of Adaptive Behavior Using a General-purpose Design Methodology for Dynamic Web Applications. In Proceedings of Adaptive Hypermedia and Adaptive Web-Based Systems (AH 2004), Eindhoven, The Netherlands.

Booch, G., Rumbaugh, J., and Jacobson, I. (1999). The

Unified Modeling Language User Guide, volume 30

of Addison-Wesley object technology series. Addison-

Wesley.

Brockmans, S., Colomb, R. M., Haase, P., Kendall, E. F., Wallace, E. K., Welty, C., and Xie, G. T. (2006). A model driven approach for building OWL DL and OWL full ontologies. In ISWC’06 Proceedings of the 5th international conference on The Semantic Web, pages 187–200. Springer-Verlag.

1 Webing research group – http://webing.felk.cvut.cz

Brusilovsky, P. and Sampson, D. (2004). Layered evalu-

ation of adaptive learning systems. Int. J. Continu-

ing Engineering Education and Life-Long Learning,

14(4-5):402–421.

Ceri, S., Fraternali, P., Bongio, A., and Milano, P. (2000).

Web Modeling Language (WebML): a modeling lan-

guage for designing Web sites. Computer Networks:

The International Journal of Computer and Telecom-

munications Networking, 33(1-6):137–157.

Cristea, A. (2003). Automatic authoring in the LAOS AHS

authoring model. In Hypertext 2003, Workshop on

Adaptive Hypermedia and Adaptive Web-Based Sys-

tems, Nottingham, UK.

De Bra, P., Houben, G.-J., and Wu, H. (1999). AHAM: A

Dexter-based Reference Model for Adaptive Hyper-

media. In Proceedings of the ACM Conference on Hy-

pertext and Hypermedia, pages 147–156. ACM.

De Troyer, O. and Leune, C. (1998). WSDM: a user cen-

tered design method for Web sites. In Proceedings

of the Seventh International WWW Conference, vol-

ume 30, pages 85–94, Brisbane, Australia. Elsevier

Science Publishers B. V.

Deshpande, Y., Murugesan, S., Ginige, A., Hansen, S.,

Schwabe, D., Gaedke, M., and White, B. (2002). Web

engineering. Journal of Web Engineering, 1(1):3–17.

Federici, S. (2010). Usability evaluation: models,

methods, and applications. In International Encyclopedia

of Rehabilitation.

Garzotto, F., Schwabe, D., and Paolini, P. (1993). HDM - A

Model-Based Approach to Hypertext Application De-

sign. ACM Trans. on Information Systems, 11(1):1–

26.

Gena, C. (2005). Methods and techniques for the evaluation

of user-adaptive systems. The Knowledge Engineer-

ing Review, 20(01):1.

Gena, C. and Weibelzahl, S. (2007). Usability Engineering

for the Adaptive Web. In Brusilovsky, P., Kobsa, A.,

and Nejdl, W., editors, The adaptive web, pages 720–

762. Springer-Verlag, Berlin, Heidelberg.

Höök, K. (2000). Steps to take before intelligent user

interfaces become real. Interacting with Computers,

12(4):409–426.

Isakowitz, T., Stohr, E. A., and Balasubramanian, P. (1995).

RMM: a methodology for structured hypermedia de-

sign. Communications of the ACM, 38(8):34–44.

Knutov, E., De Bra, P., and Pechenizkiy, M. (2009). AH

12 years later: a comprehensive survey of adaptive

hypermedia methods and techniques. New Review of

Hypermedia and Multimedia, 15(1):5–38.

Koch, N. and Wirsing, M. (2001). Software Engineering

for Adaptive Hypermedia Applications? In 8th In-

ternational Conference on User Modeling, pages 1–6,

Sonthofen, Germany.

Koch, N., Zhang, G., and Escalona, M. J. (2006). Model

Transformations from Requirements to Web System

Design. In Proc. Sixth Int’l Conf. Web Eng., pages

281–288.

Lampropoulou, P. S., Lampropoulos, A. S., and Tsihrintzis,

G. A. (2010). A Framework for Evaluation of Mid-

dleware Systems of Mobile Multimedia Services. In

The 2010 IEEE International Conference on Systems,

Man, and Cybernetics (SMC2010), pages 1041–1045,

Istanbul, Turkey.

Lange, D. (1994). An Object-Oriented Design Method for

Hypermedia Information Systems. In Proceedings of

the 27th Hawaii International Conference on System

Sciences, volume 6, pages 336–375, Hawaii. IEEE

Computer Society Press.

Medina, N., Molina, F., and García, L. (2003). Personalized

Guided Routes in an Adaptive Evolutionary Hyperme-

dia System. In Lecture Notes in Computer Science

2809, pages 196–207.

Miller, J. and Mukerji, J. (2003). MDA Guide Version 1.0.

[Accessed: August 24, 2013]. [Online]. Available:

http://www.omg.org/mda/mda_files/MDA_Guide_Version1-0.pdf.

Mulwa, C., Lawless, S., Sharp, M., and Wade, V. (2011).

The evaluation of adaptive and personalised informa-

tion retrieval systems: a review. International Journal

of Knowledge and Web Intelligence, 2(2-3):138–156.

Nielsen, J. (1993). Usability Engineering. Academic Press,

Boston, MA.

Papasalouros, A. and Retalis, S. (2002). Ob-AHEM: A

UML-enabled model for Adaptive Educational Hy-

permedia Applications. Interactive educational Mul-

timedia, 4(4):76–88.

Paramythis, A., Weibelzahl, S., and Masthoff, J. (2010).

Layered evaluation of interactive adaptive systems:

framework and formative methods. User Modeling

and User-Adapted Interaction, 20(5):383–453.

Rossi, G. and Schwabe, D. (2008). Modeling and imple-

menting web applications with OOHDM. In Rossi,

G., Pastor, O., Schwabe, D., and Olsina, L., editors,

Web Engineering, chapter 6, pages 109–155. Springer.

Thakare, B. (2012). Deriving Best Practices from Develop-

ment Methodology Base (Part 2). International Jour-

nal of Engineering Research & Technology, 1(6):1–8.

Velsen, L. V., van der Geest, T., Klaasen, R., and Stee-

houder, M. (2008). User-centered evaluation of adap-

tive and adaptable systems: a literature review. The

Knowledge Engineering Review, 23(3):261–281.

Weibelzahl, S. and Weber, G. (2003). Evaluating the infer-

ence mechanism of adaptive learning systems. In User

Modeling 2003, pages 154–162. Springer-Verlag.

Zhang, J. and Cheng, B. H. C. (2006). Model-based devel-

opment of dynamically adaptive software. In Proceed-

ing of the 28th international conference on Software

engineering - ICSE ’06, number 1, pages 371–380,

New York, New York, USA. ACM Press.

WEBIST 2014 - International Conference on Web Information Systems and Technologies