Efficient Registration of Multiple Range Images for Fully Automatic 3D Modeling

Yulan Guo, Jianwei Wan, Jun Zhang, Ke Xu and Min Lu

College of Electronic Science and Engineering, National University of Defense Technology, Changsha, China

Keywords:

Range Image, 3D Modeling, Local Feature, Feature Matching, Registration.

Abstract:

Multi-view range image registration is a significant and challenging problem for 3D modeling. This paper

presents a reference shape based multi-view range image registration algorithm. First, a set of Rotational

Projection Statistics (RoPS) features are extracted from the input range images. Next, the reference shape

is initialized by selecting a range image from the input. The reference shape is then iteratively updated by

registering itself with the remaining range images. The registration between the reference shape and any range

image is completed by RoPS feature matching. Finally, all input range images are registered according to their

corresponding reference shapes. A number of experiments were performed to test the performance of our

algorithm. The experimental results show that the reference shape based algorithm can perform multi-view

registration on a mixed set of unordered range images corresponding to several different objects. It is also very

accurate and efficient. It outperformed state-of-the-art methods, including the spanning tree based and connected graph based algorithms.

1 INTRODUCTION

3D models of objects play significant roles in an in-

creasing number of applications including cultural

heritage, entertainment, education, medical industry,

manufacturing and robotics (Johnson and Bing Kang,

1999; Assfalg et al., 2007; Alexiadis et al., 2013; Guo

et al., 2013b). A 3D model can either be created

by using Computer Aided Design (CAD) tools or 3D

scanning techniques (Chen and Medioni, 1992). Due

to the increasing availability of low-cost and dense

3D scanners, range images are becoming more ac-

cessible (Guo et al., 2013a; Lei et al., 2013). 3D

modeling from range images has become the main

research trend when dealing with free-form objects

(Dorai et al., 1998). The task of 3D modeling is to

register and integrate several range images which are

acquired from multiple viewpoints so that the surface

of an object can be completely covered (Rusinkiewicz

et al., 2002; Mian et al., 2006a).

Multi-view range image registration can be com-

pleted either manually or automatically. Since auto-

matic 3D modeling does not require any human in-

tervention (e.g., a calibrated scanner and turntable,

or attached markers), it is more applicable to real-

world scenarios compared to its manual counterpart

(Salvi et al., 2007). The main challenge for automatic

3D modeling is the recovering of the correspondence

information between overlapping range image pairs

(Mian et al., 2006a). This problem becomes even

more difficult when the input range images are un-

ordered and from multiple different objects.

Several multi-view registration algorithms have

been proposed to establish correspondences be-

tween unordered range images (Huang and Pottmann,

2005). (Huber and Hebert, 2003) registered all pairs

of input range images to produce a model graph. The

model graph was then used to build a spanning tree

which was pose consistent and globally surface con-

sistent. All multi-view range images were finally

registered based on the spanning tree. Later, (Ma-

suda, 2009), (Bariya et al., 2012) and (Tombari et al.,

2010) also used spanning tree based algorithms to

perform multi-view range image registration. How-

ever, they employed different features, namely Log-

Polar Height Map (LPHM), Signature of Histograms

of OrienTations (SHOT) and exponential map, to reg-

ister any two range images. Given a set of N

m

in-

put range images, the computational complexity of

the spanning tree based algorithms is O

N

2

m

as they

need to exhaustively register every pair of range im-

ages. (Mian et al., 2006a) proposed a connected graph

based algorithm, which is more efficient compared

to the spanning tree based algorithms. (Guo et al.,

96

Guo Y., Wan J., Zhang J., Xu K. and Lu M..

Efficient Registration of Multiple Range Images for Fully Automatic 3D Modeling.

DOI: 10.5220/0004667200960103

In Proceedings of the 9th International Conference on Computer Graphics Theory and Applications (GRAPP-2014), pages 96-103

ISBN: 978-989-758-002-4

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

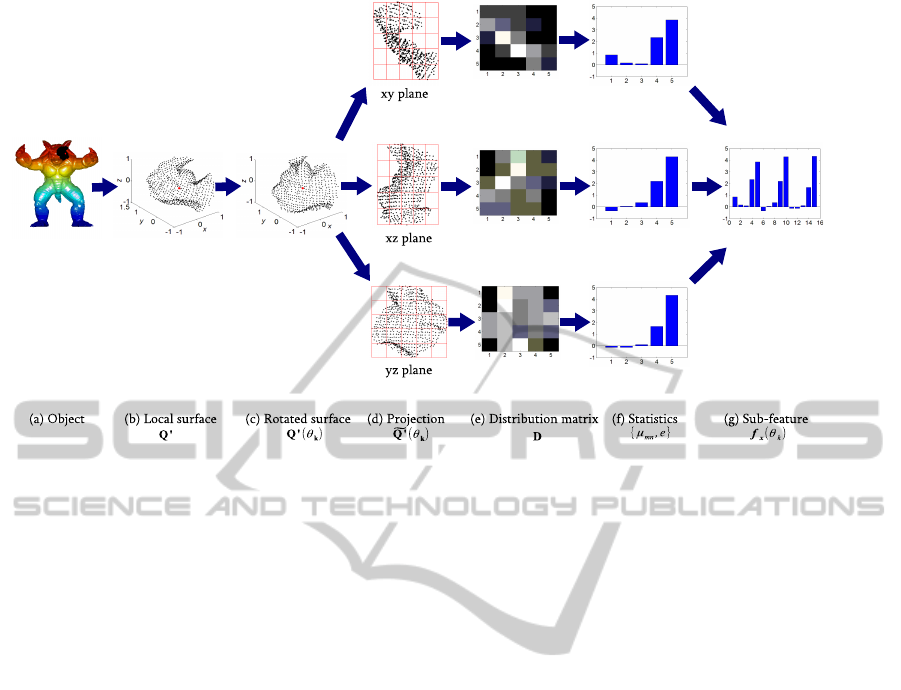

Figure 1: An illustration of the generation of a RoPS feature descriptor for one rotation. Originally shown in (Guo et al.,

2013b).

2013c) proposed a Tri-Spin-Image (TriSI) feature for

range image representation and also used the con-

nected graph based algorithm for multi-view range

image registration. (ter Haar and Veltkamp, 2007)

selected quadruples of range images to form incom-

plete 3D models of an object. These quadruples were

further verified and aligned to obtain the final regis-

tration result. This algorithm is computational effi-

cient. However, it requires that each quadruple should

cover the entire object, and range images which cover

a small part of an object cannot be registered (ter Haar

and Veltkamp, 2007).

In this paper, we propose a fully automatic, accu-

rate and efficient multi-view range image registration

algorithm. The algorithm starts by selecting a range

image from all input range images as the initial ref-

erence shape. The reference shape is then iteratively

updated by performing pairwise registration between

itself and the remaining range images in the search

space. Consequently, all input range images are reg-

istered during the process of reference shape grow-

ing. Performance evaluation results show that the pro-

posed reference shape based algorithm is very accu-

rate. It can accomplish multi-view registration on a

mixed set of unordered range images corresponding

to several different objects. It is also more computationally efficient than state-of-the-art methods, including the spanning tree based and connected graph based algorithms.

The rest of this paper is organized as follows. Sec-

tion 2 presents the local feature extraction and match-

ing techniques. Section 3 describes the reference

shape based multi-view range image registration algorithm. Section 4 presents the experimental results of

our proposed algorithm, with comparison to the state-

of-the-art algorithms.

2 FEATURE EXTRACTION AND MATCHING

Local feature extraction and matching forms the basis

for the multi-view range image registration algorithm.

2.1 Feature Extraction

The local features extracted from range images should

be highly discriminative and robust to a set of nui-

sances including noise and varying mesh resolutions.

Based on the range image registration performance

achieved by using different local surface features, we

select the Rotational Projection Statistics (RoPS) fea-

ture for our work as it consistently produces the best

results. The superior performance of the RoPS feature

for 3D object recognition can also be found in (Guo

et al., 2013b). An illustration of the generation of a

RoPS feature descriptor is shown in Fig. 1.

Given a range image $I_i$ (in the form of a point cloud), we first convert it into a triangular mesh $M_i$. We then detect a set of unique and repeatable feature points $\mathbf{p}_k^i$, $k = 1, 2, \ldots, N_i$, from $M_i$ by performing mesh simplification, resolution control and thresholding (Guo et al., 2013b). For each feature point $\mathbf{p}_k^i$ in mesh $M_i$, a local surface $L_k^i$ is first cropped from $M_i$ for a given support radius $r$. Then, a unique and unambiguous Local Reference Frame (LRF) $F_k^i$ is derived using the eigenvectors of its local surface $L_k^i$. The points on $L_k^i$ are aligned with this LRF $F_k^i$ to make the feature descriptor invariant to rigid transformations (i.e., rotation and translation), resulting in an aligned local surface $\tilde{L}_k^i$.

The local surface $\tilde{L}_k^i$ is rotated around the $x$, $y$ and $z$ axes respectively by a set of angles. For each rotation, the points on the rotated surface are projected onto three coordinate planes (i.e., the $xy$, $xz$ and $yz$ planes). We first obtain an $L \times L$ distribution matrix $D$ of the projected points on each plane, and then calculate five statistics (the central moments $\mu_{11}$, $\mu_{21}$, $\mu_{12}$, $\mu_{22}$ and the entropy $e$) for the distribution matrix $D$. These statistics for all coordinate planes and rotations are concatenated to form an overall RoPS feature $\mathbf{f}_k^i$. For more details on the RoPS feature, please refer to (Guo et al., 2013b).
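The per-projection statistics described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the default bin count, the use of `numpy.histogram2d` to build the distribution matrix, and the exact moment and entropy definitions (standard central moments of a normalised matrix and Shannon entropy, with the fourth moment taken as $\mu_{22}$ following the RoPS journal paper) are all choices made here for illustration.

```python
import numpy as np

def distribution_matrix(points_2d, bins):
    """Normalised bins x bins histogram of 2D projected points (the matrix D)."""
    hist, _, _ = np.histogram2d(points_2d[:, 0], points_2d[:, 1], bins=bins)
    return hist / hist.sum()

def central_moment(D, m, n):
    """Central moment mu_mn of a normalised distribution matrix D (assumed definition)."""
    rows = np.arange(1, D.shape[0] + 1)[:, None]
    cols = np.arange(1, D.shape[1] + 1)[None, :]
    i_bar = (rows * D).sum()  # mean row index
    j_bar = (cols * D).sum()  # mean column index
    return float((((rows - i_bar) ** m) * ((cols - j_bar) ** n) * D).sum())

def entropy(D):
    """Shannon entropy of D, ignoring empty bins."""
    nz = D[D > 0]
    return float(-(nz * np.log(nz)).sum())

def rotate_about_x(points, angle):
    """Rotate an (N, 3) point array about the x axis by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    R = np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
    return points @ R.T

def projection_statistics(points, plane, bins=5):
    """Five statistics of one projection: mu11, mu21, mu12, mu22 and entropy."""
    axes = {"xy": [0, 1], "xz": [0, 2], "yz": [1, 2]}[plane]
    D = distribution_matrix(points[:, axes], bins)
    return np.array([
        central_moment(D, 1, 1),
        central_moment(D, 2, 1),
        central_moment(D, 1, 2),
        central_moment(D, 2, 2),
        entropy(D),
    ])
```

A full descriptor would concatenate `projection_statistics` over the three planes, the three rotation axes and every rotation angle; that outer loop is omitted here.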

2.2 Pairwise Range Image Registration

Given a pair of range images $M_i$ and $M_j$, two sets of RoPS features $\mathbf{F}^i = \{\mathbf{f}_1^i, \mathbf{f}_2^i, \ldots, \mathbf{f}_{N_i}^i\}$ and $\mathbf{F}^j = \{\mathbf{f}_1^j, \mathbf{f}_2^j, \ldots, \mathbf{f}_{N_j}^j\}$ are respectively extracted from the two range images. For a feature $\mathbf{f}_k^i$ in $M_i$, its corresponding feature $\mathbf{f}_k^j$ in $M_j$ is obtained by searching for the nearest feature in $\mathbf{F}^j$. The pair $(\mathbf{f}_k^i, \mathbf{f}_k^j)$ are considered a feature correspondence, and their associated points $\mathbf{c}_k^{ij} = (\mathbf{p}_k^i, \mathbf{p}_k^j)$ are considered a point correspondence. All features in $\mathbf{F}^i$ are matched against these features in $\mathbf{F}^j$, resulting in a set of point correspondences $C^{ij} = \{\mathbf{c}_1^{ij}, \mathbf{c}_2^{ij}, \ldots, \mathbf{c}_{N_i}^{ij}\}$. For each point correspondence $\mathbf{c}_k^{ij}$, a rigid transformation $T_k^{ij} = (R_k^{ij}, \mathbf{t}_k^{ij})$ can be calculated using their point positions $(\mathbf{p}_k^i, \mathbf{p}_k^j)$ and LRFs $(F_k^i, F_k^j)$. That is,

$$R_k^{ij} = \left(F_k^i\right)^{\mathrm{T}} F_k^j, \quad (1)$$

$$\mathbf{t}_k^{ij} = \mathbf{p}_k^i - \mathbf{p}_k^j R_k^{ij}, \quad (2)$$

where $R_k^{ij}$ is the rotation matrix and $\mathbf{t}_k^{ij}$ is the translation vector of the rigid transformation $T_k^{ij}$.
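As a hedged illustration of nearest-feature matching and of Eqs. (1) and (2), the sketch below computes one candidate transformation from a single correspondence. The conventions are assumptions made here, since the paper does not spell them out: each LRF is a 3x3 matrix whose rows are the frame axes expressed in the mesh coordinate system, points are treated as column vectors, and the transformation maps mesh $j$ onto mesh $i$. Under that convention the product in Eq. (2) is written as `R @ p_j`. The function names are hypothetical.

```python
import numpy as np

def match_features(feats_i, feats_j):
    """For each row of feats_i, return the index of its nearest row in feats_j."""
    d2 = ((feats_i[:, None, :] - feats_j[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def transform_from_correspondence(p_i, F_i, p_j, F_j):
    """Candidate rigid transform (R, t) with x_i = R @ x_j + t.

    Assumes the rows of F_i and F_j are the LRF axes (orthonormal matrices).
    """
    R = F_i.T @ F_j    # Eq. (1)
    t = p_i - R @ p_j  # Eq. (2), column-vector form (assumption)
    return R, t
```

Each of the $N_i$ correspondences yields one such candidate; the grouping and verification that select a single robust transformation are not shown.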

A set of N

i

plausible transformations are calcu-

lated from the point correspondences C

ij

. These

transformations are further grouped and verified to

produce a robust transformation T

ij

. The two range

images M

i

and M

j

are then coarsely registered using

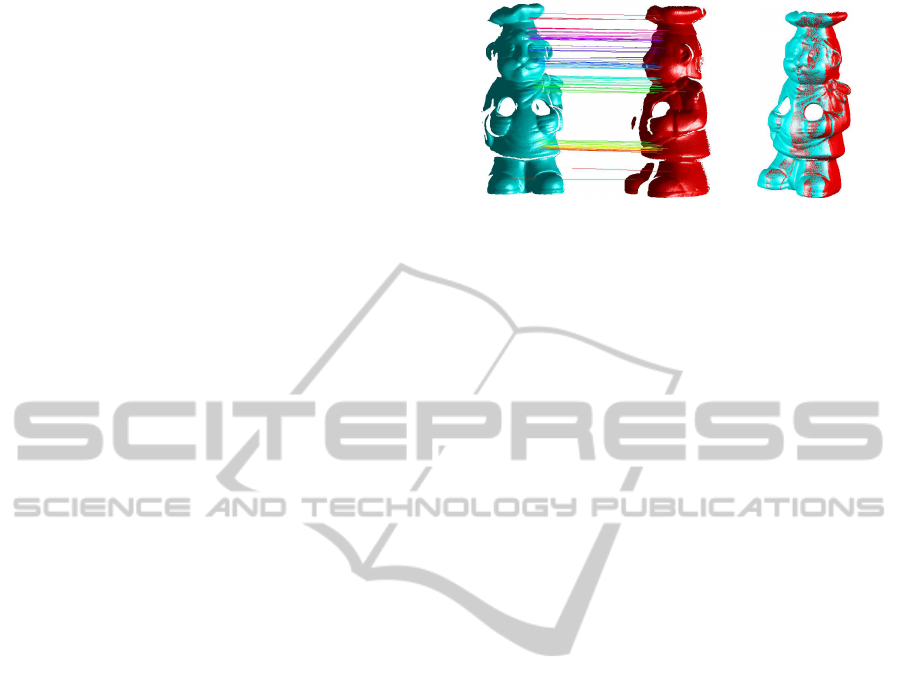

(a) (b)

Figure 2: An illustration of pairwise range image registra-

tion. (a) A pair of range images with the correct point corre-

spondences. (b) Registered range images. (Figure best seen

in color.)

the transformation T

ij

. The registration is further re-

fined using an improved Iterative Closest Point (ICP)

algorithm by repeatedly generating pairs of closest

points in the two range images and minimizing the

residual error (Besl and McKay, 1992). Note that, a

recently proposed spare ICP algorithm (Bouaziz et al.,

2013) can alternatively be used to deal with challeng-

ing datasets affected by noise and outliers. An illus-

tration of pairwise range image registration is shown

in Fig. 2. Fig. 2(a) shows a pair of range images with

the correct point correspondences, Fig. 2(b) shows

the two registered range images.
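The refinement step can be illustrated with a plain point-to-point ICP loop. This is a sketch of the basic scheme of Besl and McKay, not the improved variant used in the paper: it pairs every source point with its closest target point by brute force and solves for the least-squares rigid transform with an SVD. The function names, iteration limit and tolerance are assumptions chosen for illustration.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t such that dst ~= src @ R.T + t (SVD-based solution)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)      # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(src, dst, R0=None, t0=None, iters=30, tol=1e-10):
    """Refine an initial alignment of src onto dst; returns (R, t)."""
    R = np.eye(3) if R0 is None else R0
    t = np.zeros(3) if t0 is None else t0
    prev_err = np.inf
    for _ in range(iters):
        moved = src @ R.T + t
        # Brute-force closest points; a k-d tree would be used on real scans.
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nearest = d2.argmin(axis=1)
        err = d2[np.arange(len(src)), nearest].mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
        R, t = best_rigid_transform(src, dst[nearest])
    return R, t
```

In the pipeline described above, `R0` and `t0` would come from the coarse feature-based transformation, which is what keeps the closest-point pairing meaningful in the first iteration.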

3 MULTI-VIEW RANGE IMAGE REGISTRATION

So far we have introduced a RoPS feature matching

based algorithm for pairwise range image registra-

tion. In this section, we propose a reference shape

based algorithm for multi-view range image registra-

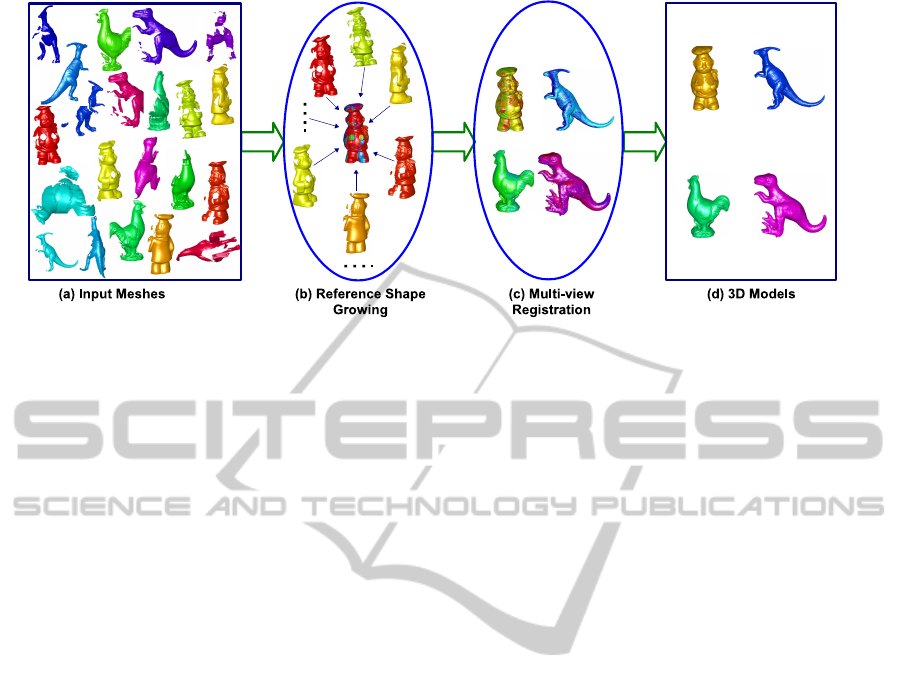

tion. Fig. 3 shows an illustration of the proposed

multi-view range image registration algorithm. More-

over, the whole process is given in Algorithm 1.

Given a set of meshes $\{M_1, M_2, \ldots, M_{N_m}\}$, we initialize the search space $\boldsymbol{\Phi}$ with all the input meshes. The algorithm then starts by selecting a mesh from the search space as the initial reference shape $R_1$, which iteratively grows by performing pairwise registration between itself and the remaining meshes in the search space.

For a mesh $M_i$ in the search space, we use the RoPS matching based pairwise registration algorithm to register it to the reference shape $R_1$. If the number of overlapping points is more than $\tau_o$ times the number of vertices in $M_i$, we consider $M_i$ to be successfully registered to $R_1$. We then add the vertices in $M_i$ whose shortest distances to $R_1$ are more than the average mesh resolution to the reference shape $R_1$. Consequently, the reference shape $R_1$ is updated. We then need to generate RoPS features for the newly updated reference shape. Since RoPS features have already been extracted for the previous reference shape and the mesh $M_i$, we generate the RoPS features for the updated reference shape by looking for its closest feature points in the previous reference shape and $M_i$. Note that this approach greatly improves the computational efficiency of feature extraction, as it does not require any feature calculation during the process of reference shape growing.

Figure 3: An illustration of the proposed multi-view range image registration algorithm.
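The success test and the reference shape update described above can be sketched as follows, treating the reference shape and the mesh as plain point arrays. Several details are assumptions made for illustration: the paper does not state the distance below which a point counts as overlapping (the hypothetical `overlap_dist` parameter defaults to the mesh resolution here), the function names are invented, and nearest distances are found by brute force rather than with a spatial index.

```python
import numpy as np

def nearest_distances(points, reference):
    """Distance from every point to its closest point in the reference shape."""
    d2 = ((points[:, None, :] - reference[None, :, :]) ** 2).sum(axis=2)
    return np.sqrt(d2.min(axis=1))

def try_grow_reference(reference, mesh_pts, R, t, resolution, tau_o, overlap_dist=None):
    """Apply (R, t) to the mesh and grow the reference shape if enough points overlap.

    Returns (reference_after, success).
    """
    if overlap_dist is None:
        overlap_dist = resolution  # assumption: not specified in the paper
    moved = mesh_pts @ R.T + t
    dist = nearest_distances(moved, reference)
    overlapping = np.count_nonzero(dist <= overlap_dist)
    if overlapping <= tau_o * len(mesh_pts):
        return reference, False  # registration rejected, shape unchanged
    # Keep only vertices farther from the shape than the mesh resolution.
    new_pts = moved[dist > resolution]
    return np.vstack([reference, new_pts]), True
```

Adding only the points that lie farther away than the mesh resolution keeps the reference shape from accumulating duplicate samples in regions that several views cover.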

Once the mesh $M_i$ is checked, it is removed from the search space $\boldsymbol{\Phi}$, and the transformation between $M_i$ and the reference shape $R_1$ is stored. The next mesh $M_{i+1}$ in the search space $\boldsymbol{\Phi}$ is then selected in turn to be registered to the updated reference shape. The growing process of the reference shape continues until either all the meshes have been registered to $R_1$, or no mesh in the search space $\boldsymbol{\Phi}$ can be further registered to $R_1$. Note that, during the iterations, the surface (i.e., points) of $R_1$ gradually grows into a complete 3D shape, while the pose of $R_1$ remains unchanged. Once the growing process for the reference shape $R_1$ stops, the rigid transformations between all the registered meshes and $R_1$ are already known. We then transform these meshes to the coordinate frame of $R_1$. Consequently, these meshes are coarsely registered.

In order to cope with cases where the meshes may correspond to several different objects, the algorithm continues by initializing a new reference shape $R_2$ from one of the remaining meshes in the search space. The reference shape $R_2$ grows using the same technique as for $R_1$. Consequently, all the meshes corresponding to reference shape $R_2$ are coarsely registered. This process continues until no further reference shape can be initialized. Finally, all the input meshes are separately registered to their corresponding reference shapes.

Once the meshes corresponding to a specific ref-

erence shape are coarsely registered, these registra-

tions are further refined with a multi-view fine reg-

istration algorithm (e.g., (Williams and Bennamoun,

2001)). The multi-view fine registration algorithm

minimizes the overall registration error of multiple

meshes, and distributes any registration errors evenly

over the complete 3D model. Finally, a continuous

and seamless 3D model is reconstructed for each ref-

erence shape by using an integration and surface re-

construction algorithm (Curless and Levoy, 1996).

Note that, the proposed algorithm is fully auto-

matic and can be performed without any manual in-

tervention. It does not require any prior information

about the sensor position, the shapes of objects, view-

ing angles, overlapping pairs, order of meshes, or

number of objects. In our case, a user can treat the

modeling process as a “black box”. The only thing

one needs to do is to import all scanned range images

to the system, and to collect the complete 3D models once processing has finished.

Compared to the spanning tree based algorithms

(Huber and Hebert, 2003; Bariya et al., 2012;

Tombari et al., 2010; Masuda, 2009), the advantages

of the proposed reference shape based algorithm are

obvious. First, it performs multi-view range image

registration more efficiently, as demonstrated in Section 4.3. For a set of $N_m$ range images, its computational complexity is $O(N_m)$ compared to $O(N_m^2)$ for the spanning tree based algorithms. Second, it is capable of registering multiple range images corresponding to several different objects, rather than from only a single object, as further demonstrated in Section 4.4. Third, it does not suffer from cumulative registration errors because all meshes of an object are registered to the same reference shape. In contrast, the registration errors between any two meshes may accumulate through the path in a spanning tree based algorithm.

Algorithm 1: Reference shape based multi-view registration.

1: Input: Meshes $\{M_1, M_2, \ldots, M_{N_m}\}$.

2: Initialization: Search space $\boldsymbol{\Phi} \leftarrow \{M_1, M_2, \ldots, M_{N_m}\}$. Number of reference shapes $n_s \leftarrow 0$.

3: while $\boldsymbol{\Phi}$ is not empty do

4:   $n_s \leftarrow n_s + 1$.

5:   Initialize reference shape $R_{n_s}$ with a mesh from $\boldsymbol{\Phi}$.

6:   repeat

7:     Select a mesh $M_i$ from $\boldsymbol{\Phi}$ and register it to $R_{n_s}$.

8:     if Successfully registered then

9:       Update the reference shape $R_{n_s}$ by adding new points from $M_i$.

10:       Extract RoPS features for the updated $R_{n_s}$.

11:       Store the transformation between $R_{n_s}$ and $M_i$.

12:     end if

13:     Remove the mesh $M_i$ from $\boldsymbol{\Phi}$.

14:   until No mesh in $\boldsymbol{\Phi}$ can be successfully registered to $R_{n_s}$

15: end while

16: Output: Reference shapes $\{R_1, R_2, \ldots, R_{n_s}\}$, and the transformations between reference shapes and their corresponding meshes.
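The control flow of Algorithm 1 can be sketched in a few lines, with the pairwise registration and the shape update passed in as callables. This is one possible reading rather than the authors' code: here a mesh that fails to register stays in the search space so that it can later be retried or seed a new reference shape, which matches the description of starting a new shape from the remaining meshes. All function and parameter names are hypothetical.

```python
def reference_shape_registration(meshes, init_shape, register, grow, identity=None):
    """Group and register meshes by growing reference shapes.

    meshes:     dict mapping mesh id -> mesh data
    init_shape: callable(mesh) -> initial reference shape
    register:   callable(shape, mesh) -> transform, or None on failure
    grow:       callable(shape, mesh, transform) -> updated shape
    Returns a list of (reference_shape, {mesh id: transform}) pairs.
    """
    search_space = dict(meshes)  # the search space
    results = []
    while search_space:
        # Start a new reference shape from any remaining mesh.
        seed_id = next(iter(search_space))
        shape = init_shape(search_space.pop(seed_id))
        transforms = {seed_id: identity}  # the seed defines the coordinate frame
        progress = True
        while progress and search_space:
            progress = False
            for mesh_id in list(search_space):
                T = register(shape, search_space[mesh_id])
                if T is not None:
                    shape = grow(shape, search_space[mesh_id], T)
                    transforms[mesh_id] = T
                    del search_space[mesh_id]
                    progress = True
        results.append((shape, transforms))
    return results
```

Because unregistered meshes are retried on later passes, this sketch can perform more than $N_m - 1$ pairwise registrations when the input order is unfavourable or several objects are mixed; the $N_m - 1$ figure corresponds to every attempt succeeding.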

4 EXPERIMENTAL RESULTS

In this section, we present the performance of our al-

gorithm when tested in different circumstances. We

also compare our algorithm to state-of-the-art methods.

4.1 Experimental Setup

We used the UWA 3D Modeling Dataset (Mian et al.,

2006a) to conduct experiments. The dataset consists

of 22, 16, 16, and 21 range images respectively for

four objects, namely the Chef, Chicken, Parasaurolo-

phus and T-Rex. These range images were acquired

with a Minolta Vivid 910 scanner. We manually

aligned any two range images $M_i$ and $M_j$ and further refined the alignment using the ICP algorithm to calculate the ground truth rotation $R_{GT}^{ij}$ and translation $\mathbf{t}_{GT}^{ij}$ between them. We then measured the degree of overlap as the ratio of overlapping points to the average number of points of the two aligned range images.

For a given pair of range images $M_i$ and $M_j$, the accuracy of registration is measured by two errors: the rotation error $\varepsilon_r^{ij}$ and the translation error $\varepsilon_t^{ij}$. The formulas for calculating the rotation error $\varepsilon_r^{ij}$ between the estimated rotation $R_E^{ij}$ and the ground truth rotation $R_{GT}^{ij}$, and the translation error $\varepsilon_t^{ij}$ between the estimated translation $\mathbf{t}_E^{ij}$ and the ground truth translation $\mathbf{t}_{GT}^{ij}$, are given in (Mian et al., 2006b). A registration of two range images was reported as correct if the rotation error was less than $5^\circ$ and the translation error was less than $5 d_{res}$, where $d_{res}$ stands for the average mesh resolution. Otherwise, it was considered an incorrect registration.
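The paper defers the error formulas to (Mian et al., 2006b). As a hedged illustration, the sketch below uses a common pair of definitions, which are assumed here rather than taken from the source: the rotation error is the angle of the residual rotation $R_{GT} R_E^{\mathrm{T}}$, and the translation error is the norm of the translation difference expressed in units of the mesh resolution. The function names and the default thresholds, which mirror the $5^\circ$ and $5 d_{res}$ criteria above, are illustrative.

```python
import numpy as np

def rotation_error_deg(R_gt, R_est):
    """Angle (degrees) of the residual rotation between estimate and ground truth."""
    R_diff = R_gt @ R_est.T
    cos_theta = (np.trace(R_diff) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))

def translation_error(t_gt, t_est, d_res):
    """Translation difference in units of the average mesh resolution d_res."""
    return float(np.linalg.norm(np.asarray(t_gt) - np.asarray(t_est)) / d_res)

def is_correct_registration(R_gt, t_gt, R_est, t_est, d_res,
                            max_rot_deg=5.0, max_trans=5.0):
    """Apply the correctness thresholds to one pairwise registration."""
    return (rotation_error_deg(R_gt, R_est) < max_rot_deg
            and translation_error(t_gt, t_est, d_res) < max_trans)
```

Clipping the cosine before `arccos` avoids NaN values when rounding pushes the trace slightly outside its valid range.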

4.2 Multi-view Registration of a Single Object

We performed multi-view registration independently

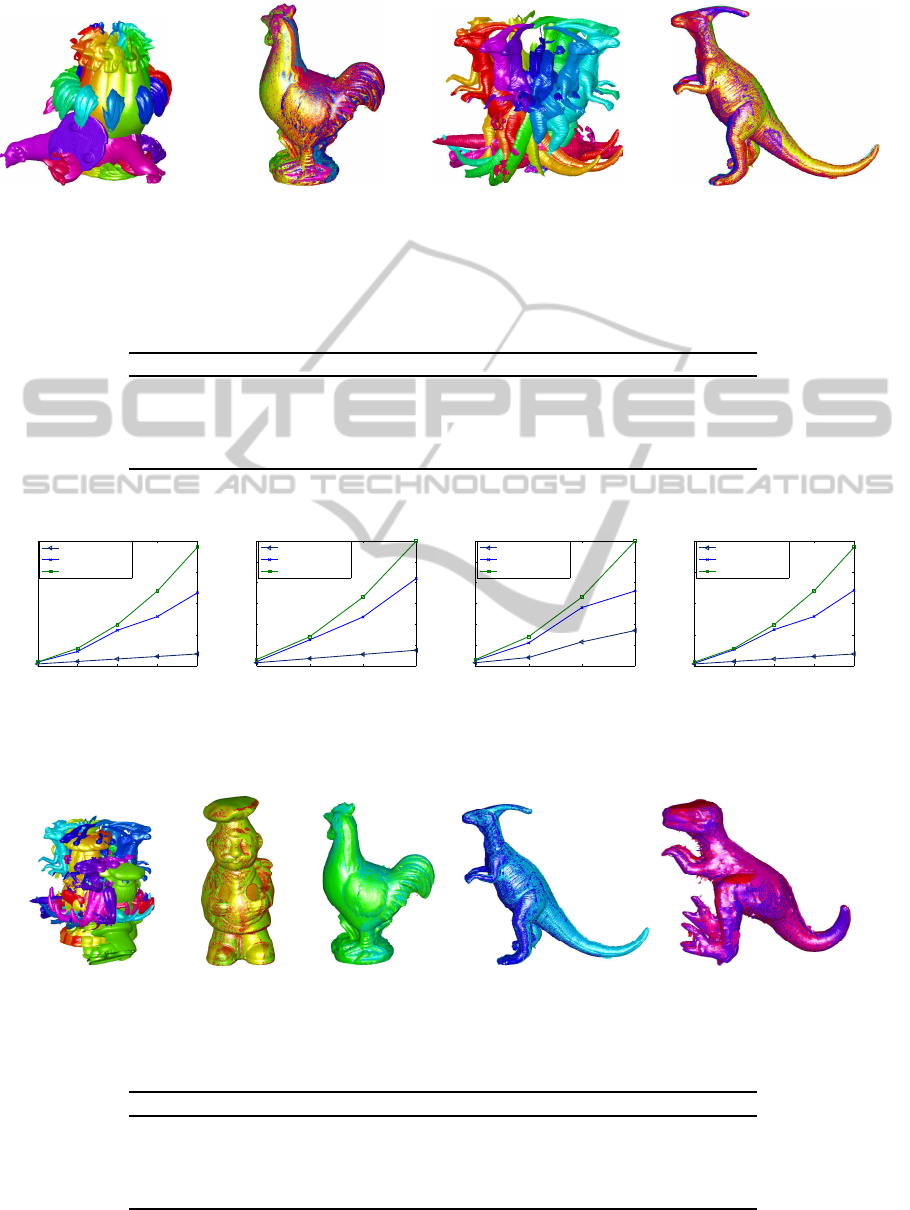

on range images of each individual object. Fig. 4 il-

lustrates the range images and the multi-view coarse

registration results of the Chicken and Parasaurolo-

phus. Although these range images were scanned

from different viewpoints and organized without any

order, they were accurately registered. No visually

noticeable defects or seams can be found in the reg-

istered range images, even in the featureless parts of

the objects (e.g., the tail of the Parasaurolophusin Fig.

4(d)).

In order to quantitatively analyze the accuracy of

our multi-view registration algorithm, we present the

number of correctly registered range images, and the

average registration errors of each individual object

in Table 1. It can be seen that all input range images of the four individual objects were correctly registered. The average rotation and translation errors of the four objects were less than $2.5^\circ$ and $2 d_{res}$, respectively. Note that these results were achieved by using only the multi-view coarse registration algorithm. These already accurate results can be further improved by the subsequent fine registration algorithm (e.g., the multi-view ICP). Overall, our algorithm enables multi-view coarse registration to be performed automatically and accurately.

4.3 Robustness to the Number of Input Meshes

In order to evaluate the computational efficiency of

the multi-view registration algorithm with respect to

the number of input meshes, we progressively se-

lected a subset of the range images to perform multi-

view registration. For each fixed number of input


(a) (b) (c) (d)

Figure 4: An illustration of multi-view coarse registration results. (a) Range images of the Chicken. (b) Multi-view registration

result of the Chicken. (c) Range images of the Parasaurolophus. (d) Multi-view registration result of the Parasaurolophus

(Figure best seen in color).

Table 1: Multi-view coarse registration results on range images of four individual objects.

| | Chef | Chicken | Parasaurolophus | T-Rex |
|---|---|---|---|---|
| #range images | 22 | 16 | 16 | 21 |
| #registered range images | 22 | 16 | 16 | 21 |
| Rotation error $\varepsilon_r$ (°) | 2.2117 | 1.0075 | 1.0634 | 1.3722 |
| Translation error $\varepsilon_t$ ($d_{res}$) | 1.6460 | 1.0936 | 1.6634 | 1.9165 |

Figure 5: Robustness with respect to the number of input meshes. Panels: (a) Chef, (b) Chicken, (c) Parasaurolophus, (d) T-Rex. Each panel plots the number of pairwise registrations against the number of range images for the reference shape, connected graph and spanning tree based algorithms.

(a) Input meshes (b) Chef (c) Chicken (d) Parasaurolophus (e) T-Rex

Figure 6: Multi-view coarse registration of range images corresponding to multiple objects (Figure best seen in color).

Table 2: Multi-view coarse registration results on mixed range images of the four objects.

| | Chef | Chicken | Parasaurolophus | T-Rex |
|---|---|---|---|---|
| #range images | 22 | 16 | 16 | 21 |
| #registered range images | 22 | 16 | 16 | 21 |
| Rotation error $\varepsilon_r$ (°) | 1.8656 | 1.1674 | 0.4029 | 1.3789 |
| Translation error $\varepsilon_t$ ($d_{res}$) | 1.3627 | 1.0976 | 0.9914 | 1.9128 |


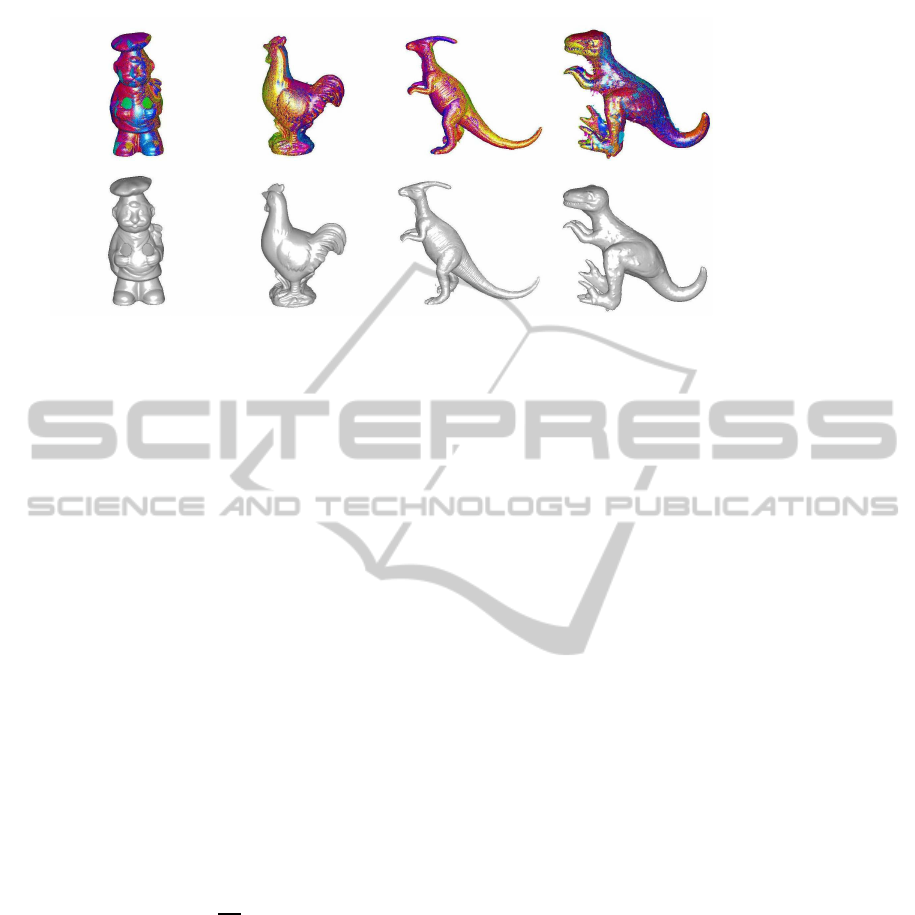

Figure 7: 3D modeling results (Figure best seen in color.)

meshes, we counted the number of pairwise registrations which were needed to complete the multi-view registration. The results for each of the four objects are shown in Fig. 5. We also present the results of state-of-the-art methods, including the spanning tree based algorithms (Huber and Hebert, 2003; Masuda, 2009) and the connected graph based algorithm (Mian et al., 2006a). The spanning tree based algorithms required $C_{N_m}^{2}$ pairwise registrations to perform a multi-view registration, where $N_m$ is the number of input range images and $C$ stands for combinations. Therefore, their computational complexity is $O(N_m^2)$. Our reference shape based algorithm showed a significant improvement compared to both the spanning tree based and the connected graph based algorithms. It usually completed the multi-view registration of $N_m$ range images with only $N_m - 1$ pairwise registrations. Taking the 20 input range images of the Chef as an example (see Fig. 5(a)), the numbers of pairwise registrations for the spanning tree based, connected graph based and reference shape based algorithms were 190, 117 and 19, respectively. The improvement factor of our reference shape based algorithm over the spanning tree based algorithm was $\frac{190}{19} = 10$. Moreover, the advantage in efficiency of the reference shape based algorithm becomes even more significant as the number of input range images increases.
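The counts quoted above follow directly from the two formulas, as the short calculation below shows. The function names are illustrative, and the reference shape count uses the $N_m - 1$ figure that the text reports as the usual case, not a guaranteed bound.

```python
from math import comb

def spanning_tree_registrations(n_meshes):
    """Exhaustive pairwise registration: one per unordered pair of meshes."""
    return comb(n_meshes, 2)

def reference_shape_registrations(n_meshes):
    """Usual case reported in the text: one registration per non-seed mesh."""
    return n_meshes - 1

for n in (4, 8, 12, 16, 20):
    pairs = spanning_tree_registrations(n)
    grown = reference_shape_registrations(n)
    print(f"N_m={n:2d}  spanning tree={pairs:3d}  reference shape={grown:2d}  "
          f"factor={pairs / grown:.1f}")
```

Since $C_{N_m}^{2} / (N_m - 1) = N_m / 2$, the improvement factor grows linearly with the number of input range images, which is why the gap widens in every panel of Fig. 5.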

4.4 Multi-view Registration of Multiple Objects

In order to further demonstrate the capability of our

algorithm to simultaneously register multiple mixed

range images corresponding to multiple objects, we

used all the range images of the four objects as an input. These range images were mixed and then registered using our reference shape based algorithm. As a result, four reference shapes were produced by our algorithm. All 75 input range images are shown in Fig. 6(a), and the coarse registration results for the four reference shapes are shown in Fig. 6(b-e), respectively. It can be seen that all these input range images were separately registered according to their corresponding reference shapes. Moreover, although fine registration was not applied to these registration results, no visually noticeable seams can be found in any of the registered range images.

We also present the quantitative results in Table 2. These results are comparable to those reported in Table 1. This observation indicates that the registration accuracy for an object is not affected by the presence of range images corresponding to other objects. That is, our algorithm is able to correctly perform multi-view registration on a mixed and unordered set of range images which correspond to several different objects.

4.5 3D Modeling Results

In order to test the whole pipeline for 3D object mod-

eling, we used the range images of the Chef, Chicken,

Parasaurolophus and T-Rex as inputs. We extracted

RoPS features from each range image, and performed

multi-view range image registration using the refer-

ence shape based algorithm. We then integrated the

range images corresponding to each reference shape,

producing a reconstructed complete 3D model. The

multi-view registration results and reconstructed 3D

models of these objects are shown in Fig. 7. These

results clearly demonstrate that our algorithm is capa-

ble of reconstructing 3D models by seamlessly merg-

ing multiple range images.


5 CONCLUSIONS

In this paper, we presented a reference shape based al-

gorithm for multi-view range image registration. We

tested the performance of our algorithm on multiple

range images from either one object or multiple ob-

jects. Experimental results show that the proposed

algorithm can perform multi-view range image reg-

istration on mixed and unordered range images which

correspond to different objects. We also tested the

robustness of our algorithm with respect to varying

numbers of input range images. It is shown that the

proposed algorithm is more computationally efficient

compared to the state-of-the-art methods. We further

demonstrated the effectiveness of the proposed algo-

rithm by performing 3D modeling. Overall, the pro-

posed algorithm is accurate, efficient and robust.

REFERENCES

Alexiadis, D., Zarpalas, D., and Daras, P. (2013). Real-time,

full 3-D reconstruction of moving foreground objects

from multiple consumer depth cameras. IEEE Trans-

actions on Multimedia, 15(2):339–358.

Assfalg, J., Bertini, M., Bimbo, A., and Pala, P. (2007).

Content-based retrieval of 3-D objects using spin im-

age signatures. IEEE Transactions on Multimedia,

9(3):589–599.

Bariya, P., Novatnack, J., Schwartz, G., and Nishino, K.

(2012). 3D geometric scale variability in range im-

ages: Features and descriptors. International Journal

of Computer Vision, 99(2):232–255.

Besl, P. and McKay, N. (1992). A method for registration

of 3-D shapes. IEEE Transactions on Pattern Analysis

and Machine Intelligence, 14(2):239–256.

Bouaziz, S., Tagliasacchi, A., and Pauly, M. (2013). Sparse

iterative closest point. Computer Graphics Forum

(Special Issue of Symposium on Geometry Process-

ing), 32(5):1–11.

Chen, Y. and Medioni, G. (1992). Object modelling by reg-

istration of multiple range images. Image and vision

computing, 10(3):145–155.

Curless, B. and Levoy, M. (1996). A volumetric method

for building complex models from range images. In

23rd Annual Conference on Computer Graphics and

Interactive Techniques, pages 303–312.

Dorai, C., Wang, G., Jain, A. K., and Mercer, C. (1998).

Registration and integration of multiple object views

for 3D model construction. IEEE Transactions on Pat-

tern Analysis and Machine Intelligence, 20(1):83–89.

Guo, Y., Bennamoun, M., Sohel, F., Wan, J., and Lu, M.

(2013a). 3D free form object recognition using rota-

tional projection statistics. In IEEE 14th Workshop on

the Applications of Computer Vision, pages 1–8.

Guo, Y., Sohel, F., Bennamoun, M., Lu, M., and Wan, J.

(2013b). Rotational projection statistics for 3D local

surface description and object recognition. Interna-

tional Journal of Computer Vision, 105(1):63–86.

Guo, Y., Sohel, F., Bennamoun, M., Lu, M., and Wan, J.

(2013c). TriSI: A distinctive local surface descrip-

tor for 3D modeling and object recognition. In 8th

International Conference on Computer Graphics The-

ory and Applications, pages 86–93.

Huang, Q. and Pottmann, H. (2005). Automatic and robust

multi-view registration. Technical report, Tsinghua

University.

Huber, D. and Hebert, M. (2003). Fully automatic regis-

tration of multiple 3D data sets. Image and Vision

Computing, 21(7):637–650.

Johnson, A. E. and Bing Kang, S. (1999). Registration

and integration of textured 3D data. Image and Vision

Computing, 17(2):135–147.

Lei, Y., Bennamoun, M., Hayat, M., and Guo, Y. (2013).

An efficient 3D face recognition approach using local

geometrical signatures. Pattern Recognition.

Masuda, T. (2009). Log-polar height maps for multiple

range image registration. Computer Vision and Image

Understanding, 113(11):1158–1169.

Mian, A., Bennamoun, M., and Owens, R. (2006a).

Three-dimensional model-based object recognition

and segmentation in cluttered scenes. IEEE Trans-

actions on Pattern Analysis and Machine Intelligence,

28(10):1584–1601.

Mian, A., Bennamoun, M., and Owens, R. A. (2006b). A

novel representation and feature matching algorithm

for automatic pairwise registration of range images.

International Journal of Computer Vision, 66(1):19–

40.

Rusinkiewicz, S., Hall-Holt, O., and Levoy, M. (2002).

Real-time 3D model acquisition. In ACM Transac-

tions on Graphics, volume 21, pages 438–446. ACM.

Salvi, J., Matabosch, C., Fofi, D., and Forest, J. (2007).

A review of recent range image registration methods

with accuracy evaluation. Image and Vision Comput-

ing, 25(5):578–596.

ter Haar, F. B. and Veltkamp, R. C. (2007). Automatic mul-

tiview quadruple alignment of unordered range scans.

In IEEE International Conference on Shape Modeling

and Applications, pages 137–146.

Tombari, F., Salti, S., and Di Stefano, L. (2010). Unique

signatures of histograms for local surface description.

In European Conference on Computer Vision, pages

356–369.

Williams, J. and Bennamoun, M. (2001). Simultaneous reg-

istration of multiple corresponding point sets. Com-

puter Vision and Image Understanding, 81(1):117–

142.
