Brain-inspired Sensorimotor Robotic Platform

Learning in Cerebellum-driven Movement Tasks through a Cerebellar Realistic Model

Claudia Casellato¹, Jesus A. Garrido²,³, Cristina Franchin¹, Giancarlo Ferrigno¹, Egidio D’Angelo²,⁴ and Alessandra Pedrocchi¹

¹ Politecnico di Milano, Dept of Electronics, Information and Bioengineering, Via G. Colombo 40, 20133 Milano, Italy

² University of Pavia, Dept of Brain and Behavioral Sciences, Neurophysiology Unit, Via Forlanini 6, I-27100 Pavia, Italy

³ Consorzio Interuniversitario per le Scienze Fisiche della Materia (CNISM), Via Forlanini 6, I-27100 Pavia, Italy

⁴ IRCCS, Istituto Neurologico Nazionale C. Mondino, Brain Connectivity Center, Via Mondino 2, Pavia, I-27100, Italy

Keywords: Cerebellum, Learning, Vestibular-Ocular Reflex, Plasticity.

Abstract: Biologically inspired neural mechanisms, coupling internal models and adaptive modules, can be an effective way of constructing a control system that exhibits human-like behaviour. A brain-inspired controller has been developed, embedding a cerebellum-like adaptive module based on neurophysiological plasticity mechanisms. It has been tested as the controller of a purpose-built neurorobot, integrating a serial robotic arm with 3 degrees of freedom and a motion tracking system. Its learning skills have been tested with a vestibular-ocular reflex (VOR) protocol. One robot joint was used to produce the desired head turn, while the displacement of another joint corresponded to the eye motion, which was controlled by the cerebellar model output, used as joint torque. Over task repetitions, the cerebellum learned to produce an anticipatory eye displacement that accurately compensated for the head turn, so as to keep fixating the environmental object. Multiple tests have been implemented, pairing different head turns with object motion. The gaze error and the cerebellum output were quantified. The VOR was accurately tuned thanks to the cerebellar plasticity. The next steps will include the activation of multiple plasticity sites and the evaluation of the real platform behaviour in different sensorimotor tasks.

1 INTRODUCTION

Biologically inspired neural mechanisms can be an

effective way of constructing a control system that

exhibits a human-like behavior. In the framework of

distributed motor control, connecting brain-inspired

kinematic and dynamic models, human-like

movement planning strategies and adaptive neural

systems inside the same controller is a very

challenging approach, bridging neuroscience and

robotics.

Motor learning is obviously necessary for

complicated movements such as playing the piano,

but it is also important for calibrating simple

movements like reflexes, as parameters of the body

and environment change over time. Cerebellum-

dependent learning is demonstrated in different

contexts (Boyden et al., 2004; van der Smagt, 2000),

such as multiple forms of associative learning,

where the learning is based on the stimulus-response

association. Eye blinking conditioning, saccadic eye

movements, vestibular ocular reflex and reaching

arm movements are well-known examples of these

mechanisms (Donchin et al., 2012).

The Marr-Albus and Ito models propose that

changes in the strengths of parallel fiber–Purkinje

cell synapses could store stimulus-response

associations by linking inputs with appropriate

motor outputs, following a Hebbian learning

approach. Error-based learning and predictive

outputs are working principles of these cerebellum

models (Marr, 1969; Albus, 1971; Ito, 2006).

We have built a real human-like sensorimotor platform with cerebellar-like learning skills. The sensory systems are integrated, monitoring the environment and the state of the musculoskeletal system. Based on the neurophysiology of the distributed neuromotor control system, the robotic control has been designed as a real-time coupling of multiple neuronal structures and mechanisms; the cerebellum model, based on the plasticity principles ascribed to the synapses between parallel fibers and Purkinje cells, is expected to learn throughout the task repetitions (Casellato et al., 2012).

Casellato C., Garrido J.A., Franchin C., Ferrigno G., D’Angelo E. and Pedrocchi A. Brain-inspired Sensorimotor Robotic Platform - Learning in Cerebellum-driven Movement Tasks through a Cerebellar Realistic Model. DOI: 10.5220/0004659305680573. In Proceedings of the 5th International Joint Conference on Computational Intelligence (SSCN-2013), pages 568-573. ISBN: 978-989-8565-77-8. Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

One protocol stressing the cerebellum role has

been designed and implemented: the vestibular-

ocular reflex (VOR). The VOR produces eye

movements which aim at stabilizing images on the

retina during head movement. The VOR tuning is

ascribed mainly to the cerebellum loop, in particular

to the cerebellar flocculus which creates an

inhibitory loop in the VOR circuit. There is substantial evidence of its role from lesion, pharmacological inactivation and genetic disruption studies (Burdess, 1996). The learning is based on the temporal association of two stimuli, head turn and motion of the retinal image: the system learns that one stimulus will be followed by the other, and a predictive compensatory response is gradually produced and accurately tuned.

2 METHODS

2.1 Robotic Platform

A flexible, compact and manoeuvrable robotic platform has been built and its controller has been developed in Visual C++.

The main robot is a Phantom Premium 1.0 (SensAble™), with 3 rotational Degrees of Freedom (DoFs). It is equipped with digital encoders at each joint and it can be controlled with force and torque commands. It connects to the PC via the parallel port interface. It is integrated with a motion capture device, a VICRA-Polaris (NDI™), which is an optical measurement system acquiring marker tools at 20 Hz. Another robotic device (Phantom Omni, SensAble™) is included in the platform to impose object motion during the protocol. The controller has been developed using the OpenHaptics toolkit (SensAble™) and the Image-Guided Surgery Toolkit (IGSTK).

The robotic DoFs are controlled through torque

signals, by exploiting the low-level access provided

by the Haptic Device Application Programming

Interface (HDAPI). For stability, this control loop

must be executed at a consistent 1 kHz rate; in order

to maintain such a high update rate, the servo loop is

executed in a separate, high-priority thread

(HDCALLBACKS). For the motion tracking system integration, the IGSTK low-level libraries (http://www.igstk.org/), whose architecture is based on the Request-Observer pattern, are used. Each desired

tool is identified by a .rom file, which defines the

unique geometry of the reflective markers

composing the tool itself.
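
The two rates mentioned above (a 1 kHz servo loop and a 20 Hz tracker) imply that each tracker frame spans 50 servo ticks. The following is a minimal sketch of one way to bridge the two rates, a zero-order hold that reuses the latest tracker sample at every servo tick. The function name and the sample values are hypothetical, and the hold strategy itself is an assumption: the paper does not state how the two rates are reconciled.

```python
SERVO_RATE_HZ = 1000    # servo loop rate stated in the text
TRACKER_RATE_HZ = 20    # optical tracker rate stated in the text
TICKS_PER_SAMPLE = SERVO_RATE_HZ // TRACKER_RATE_HZ  # 50 servo ticks per frame


def hold_tracker_samples(tracker_samples, n_ticks):
    """Return, for each servo tick, the most recent tracker sample.

    Hypothetical helper illustrating a zero-order hold; once the samples
    run out, the last one is repeated.
    """
    held = []
    for tick in range(n_ticks):
        index = min(tick // TICKS_PER_SAMPLE, len(tracker_samples) - 1)
        held.append(tracker_samples[index])
    return held


samples = [0.0, 1.5, 3.0]  # hypothetical tracker readings (degrees)
per_tick = hold_tracker_samples(samples, 120)
print(per_tick[0], per_tick[49], per_tick[50], per_tick[119])  # 0.0 0.0 1.5 3.0
```

With this scheme the visual information seen by the fast loop can be up to 50 ms old, which is the kind of delay the protocol later treats as physiologically meaningful.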

In our configuration, wireless passive tools have been placed on the robotic end-effector (gaze) and on the objects of interest in the environment. An a-priori calibration procedure identifies the constant roto-translation between the reference system of the tracking device (Visual system) and that of the robot (Proprioceptive system); it thus represents a Body/Eye calibration.
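
Once the constant roto-translation is known, any point measured by the tracker can be expressed in the robot frame as p_robot = R p_tracker + t. The sketch below shows only this application step, not the calibration procedure itself. The rotation, the offset and the function names are hypothetical values chosen for illustration.

```python
import math


def make_rotation_z(angle_rad):
    """3x3 rotation matrix about the z axis (row-major nested lists)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def tracker_to_robot(point, rotation, translation):
    """Map a 3D point from the tracker frame to the robot frame: R p + t."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]


# Hypothetical calibration result: 90 degree rotation about z plus an offset (m).
R = make_rotation_z(math.pi / 2)
t = [0.10, 0.00, 0.25]
p_robot = tracker_to_robot([1.0, 0.0, 0.0], R, t)
print([round(x, 3) for x in p_robot])  # [0.1, 1.0, 0.25]
```

Because the transform is constant, it only needs to be estimated once before the trials and can then be applied to every tracker frame.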

2.2 Cerebellum Model

The cerebellar system implemented here and embedded into the whole control system is based on the model proposed in (Garrido et al., submitted). It takes into account the major functional hypotheses about each cerebellar layer, modeling the Mossy Fibers (MF), the Granular layer (GR), the Purkinje Cell layer (PC) and the Deep Cerebellar Nuclei (DCN).

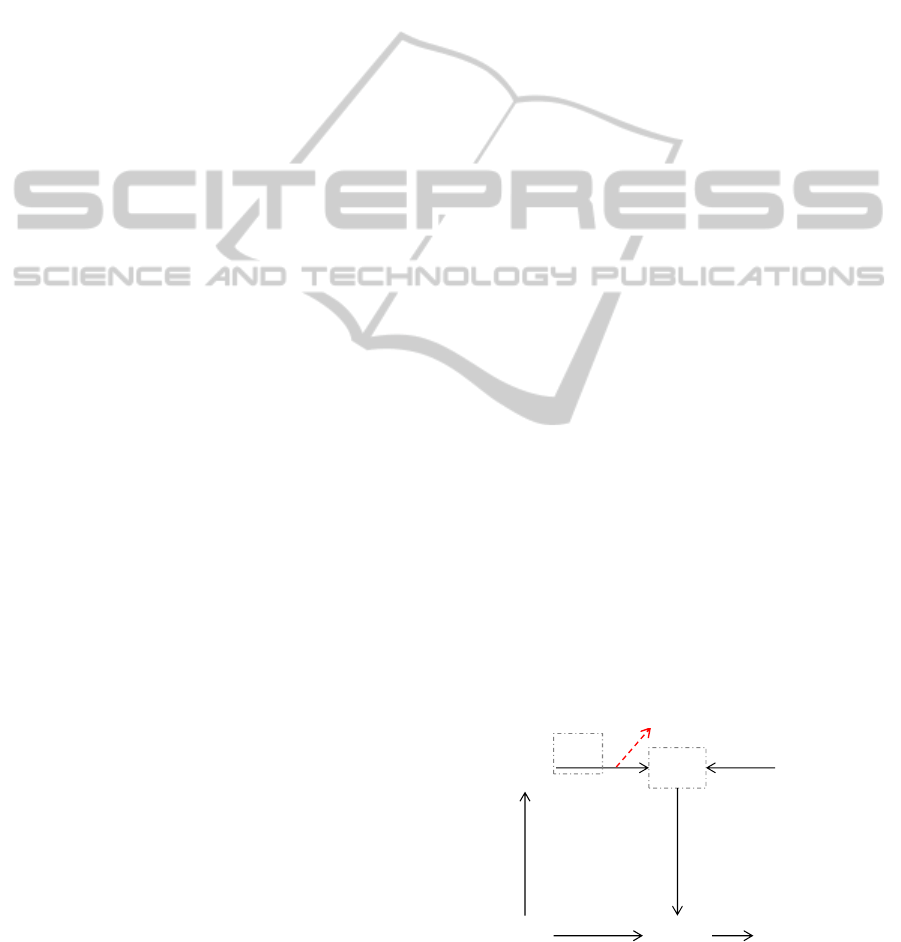

These cerebellar layers have been interconnected as shown in Figure 1, where the Parallel Fibers (PF) are the axons of the GR cells and the Climbing Fibers (CF) come from the Inferior Olive (IO). This model implements three plasticity mechanisms at different synapses: PF→PC (w), PC→DCN (b) and MF→DCN (v). In previous models, the GR layer has been suggested to generate non-recurrent states after the stimulus onset in eyeblink-conditioning tasks (Yamazaki and Tanaka, 2007). The large number of granular cells (and hence of their axons, i.e. the PF) guarantees reliable pattern separation, meaning that similar input patterns are sparsely re-encoded into largely non-overlapping populations of GR activity. Climbing fibers carry the error signal, generating complex spikes in the PC. A state-error correlator emulates the Purkinje cell operation, driving the PF→PC long-term plasticity.

Figure 1: Scheme of the cerebellar network. Diagram labels: MF, GR, PF, PC, IO, CF and DCN, with synaptic weights w (PF→PC), b (PC→DCN) and v (MF→DCN); the DCN output is z(t) = v∙MF(t) − b∙PC(t).


Finally, an adder/subtractor module receives the inputs coming from the mossy fibers (multiplied by the MF→DCN synaptic weight v) and subtracts the signal coming from the cerebellar cortex (multiplied by its own synaptic weight b). Indeed, the MF→DCN connections are excitatory, while the PC→DCN ones are inhibitory. In the cerebellum model used in this work (Figure 1), only one plasticity site was taken into account: long-term depression (LTD) and long-term potentiation (LTP) at the cerebellar cortex (PF→PC). Thus, the PC activity PC(t) changes over time and across repetitions, depending on the tuneable strength w of the synapses with the PF. The synaptic strengths v and b are held constant at 1. Thus, the DCN output is maximum (z=1) when the Purkinje cells do not fire (PC(t)=0), i.e. when the PF→PC weights are depressed (LTD), whereas the DCN output is minimum (z=0) when the Purkinje cells are maximally activated (PC(t)=1), i.e. when the PF→PC weights are potentiated (LTP). LTD is driven by the CF: it is induced in proportion to the frequency of the generated complex spikes. LTP, in contrast, is constantly produced when an input activity is present but there is no CF stimulation related to this activity (unsupervised learning). The plasticity rule on w is defined as follows:

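$$
\Delta w_{PF_j \to PC_i}(t) =
\begin{cases}
\dfrac{LTP_{max}}{(\varepsilon_i(t) + 1)^{\alpha}} - LTD_{max} \cdot \varepsilon_i(t), & \text{if } PF_j \text{ is active at } t \\
0, & \text{otherwise}
\end{cases}
$$

where $\Delta w_{PF_j \to PC_i}(t)$ represents the weight change between the j-th parallel fiber and the target PC associated to the muscle (agonist or antagonist), $\varepsilon_i$ is the current activity coming from the associated climbing fiber (which represents the normalized error along the executed head-eye movement), $LTP_{max}$ and $LTD_{max}$ are the maximum LTP and LTD values and $\alpha$ is the LTP decaying factor. $LTP_{max}$ and $LTD_{max}$ are set to 0.015 and 0.15 respectively and $\alpha$ = 1000, to avoid early plasticity saturation.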
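
As an illustration, the rule can be sketched in a few lines. The sketch assumes the form Δw = LTPmax/(ε+1)^α − LTDmax·ε when the parallel fiber is active and 0 otherwise, with the parameter values given in the text. The function names are hypothetical, and the helper for the DCN output simply evaluates z = v∙MF − b∙PC with v = b = 1.

```python
LTP_MAX = 0.015   # maximum LTP, value from the text
LTD_MAX = 0.15    # maximum LTD, value from the text
ALPHA = 1000      # LTP decaying factor, value from the text


def delta_w(error, pf_active):
    """Weight change of one PF->PC synapse.

    error is the normalized climbing-fiber activity, assumed in [0, 1];
    pf_active says whether the parallel fiber carries input at this time.
    """
    if not pf_active:
        return 0.0
    ltp = LTP_MAX / (error + 1.0) ** ALPHA  # vanishes quickly as error grows
    ltd = LTD_MAX * error                   # proportional to the error
    return ltp - ltd


def dcn_output(mf, pc, v=1.0, b=1.0):
    """DCN output z = v*MF - b*PC, with v = b = 1 as in this work."""
    return v * mf - b * pc


print(delta_w(0.0, True))    # no error: pure LTP, 0.015
print(delta_w(0.01, True))   # small error: LTD already dominates (negative)
print(dcn_output(1.0, 0.0))  # silent Purkinje cells: maximum output, 1.0
```

With α = 1000 the LTP term is appreciable only when the error is very close to zero, so in this sketch potentiation acts mainly on trials where the movement is already accurate.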

The somatotopic approach is kept at the level of IO, PC and DCN: a group of IO cells carries information about the error (positive and negative separately) of a specific DoF and projects onto a corresponding group of PC. These in turn project onto a corresponding group of DCN, which thus produces an agonist or antagonist motion (positive and negative) for each controlled DoF.
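
The separate handling of positive and negative errors can be sketched as follows. This is an illustrative reading of the somatotopic arrangement, not the model code: the function names, the normalization constant `max_error` and the simple subtraction of the two channels are assumptions.

```python
def split_error(signed_error, max_error):
    """Split a signed DoF error into (positive, negative) channels in [0, 1].

    max_error is a hypothetical normalization constant; larger errors saturate.
    """
    magnitude = min(abs(signed_error) / max_error, 1.0)
    if signed_error >= 0:
        return magnitude, 0.0
    return 0.0, magnitude


def net_command(agonist_dcn, antagonist_dcn):
    """Combine the agonist and antagonist DCN outputs into one signed command."""
    return agonist_dcn - antagonist_dcn


print(split_error(8.0, 16.0))    # (0.5, 0.0): only the positive channel is driven
print(split_error(-32.0, 16.0))  # (0.0, 1.0): negative channel, saturated
```

Keeping the two channels separate means each one only ever carries a non-negative activity, which matches the idea of distinct agonist and antagonist cell groups.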

2.3 Protocol: VOR

The stimulus is the head turn, which is produced by rapidly imposing a motion on joint 2 of the robotic device, with a pre-defined joint time-profile, occurring in a head-fixed reference system (fixed robotic reference system). The gaze is defined as the orientation of the second link of the robot, i.e. the one linking joint 2 and joint 3.

The vestibular sensory input is used by the GR

layer to generate the system state.

The CF carry the visual error, i.e. the retinal image slip, computed with data from the optical tracking system. Assuming that the goal is to fixate an environmental object, this visual error is computed as the misalignment angle with respect to the stable condition before the head turn, in which the gaze direction and the object center are aligned. Because of the lower acquisition frequency of the tracking system, the retinal slip is delayed with respect to, or at least not strictly synchronized with, the faster vestibular information, which is physiologically meaningful.
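
A minimal sketch of such an angular error, assuming the motion is confined to the horizontal plane and that the tracker provides the gaze direction, the eye position and the object position as 2D coordinates. The function name and the sign convention (positive when the object lies counter-clockwise from the gaze) are assumptions.

```python
import math


def misalignment_deg(gaze_dir, eye_pos, object_pos):
    """Signed angle (degrees) from the gaze direction to the eye-to-object line."""
    to_obj = (object_pos[0] - eye_pos[0], object_pos[1] - eye_pos[1])
    cross = gaze_dir[0] * to_obj[1] - gaze_dir[1] * to_obj[0]
    dot = gaze_dir[0] * to_obj[0] + gaze_dir[1] * to_obj[1]
    return math.degrees(math.atan2(cross, dot))


# Aligned gaze and object: zero error, as in the stable condition.
print(misalignment_deg((1.0, 0.0), (0.0, 0.0), (2.0, 0.0)))
# Object 45 degrees off the gaze line.
print(misalignment_deg((1.0, 0.0), (0.0, 0.0), (1.0, 1.0)))
```

Using `atan2` on the cross and dot products keeps the sign of the error, which is what allows positive and negative errors to be routed separately.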

During the head turn, the only controller acting

on joint 3 (compensatory eye turn) is the cerebellum,

so as to be fully neurophysiologically plausible.

Indeed, since the required rapid reflexive response to

compensate for head motion, the inaccurate and

delayed feedback cannot be activated. It is worthy to

note that the robotic device was used so as to get the

two involved joints (joints 2 and 3) moving on a

horizontal plane, where the gravity has not to be

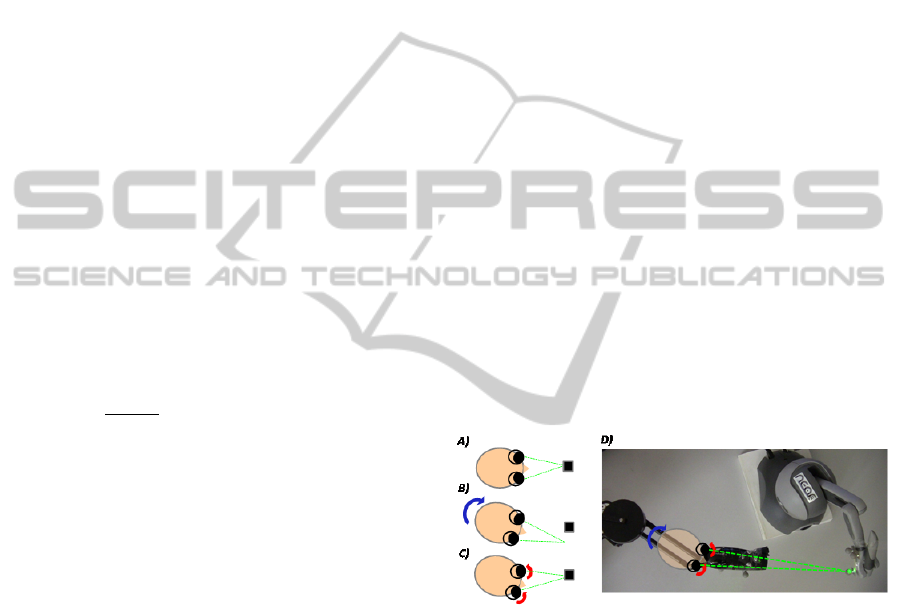

counterbalanced. The protocol and the set-up are

shown in Figure 2.

Figure 2: The VOR protocol. A: stable condition. B: head

turn leading to an image slip. C: head turn and

compensatory eye movement. D: the set-up: Phantom

Premium with the optical tool on the end-effector;

Phantom Omni with the object-tool. The green laser is

attached parallel to the second link (i.e. the gaze direction)

to highlight the gaze point on the environmental scene.

In order to quantify the VOR performance, the gain at maximum vestibular stimulus has been computed as the absolute ratio between eye turn and head turn (angles) at the maximum achieved head turn. Different sequences of repetitions were tested, in order to quantify the modulation of the VOR with different stimulus presentations.
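
The gain definition above translates directly into code. This sketch assumes that the head and eye angles of one trial are available as two equally sampled lists in the same units; the function name and the sample values are hypothetical.

```python
def vor_gain(head_angles, eye_angles):
    """Absolute eye/head angle ratio at the sample where the head turn is largest."""
    peak = max(range(len(head_angles)), key=lambda i: abs(head_angles[i]))
    if head_angles[peak] == 0:
        raise ValueError("no head turn in this trial")
    return abs(eye_angles[peak] / head_angles[peak])


# Hypothetical trial: the head reaches 22 degrees, the eye counter-rotates by 20.
head = [0.0, 11.0, 22.0]
eye = [0.0, -9.0, -20.0]
print(round(vor_gain(head, eye), 3))  # 0.909
```

When the object is fixed, a gain close to 1 indicates that the eye rotation compensates for the head turn at its peak.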

• Test 1 – VOR Calibration

We have designed a sequence of 130 trials. In the first 110 repetitions, a head turn of 22° with respect to the rest head-tilt was imposed at a speed of 5.5°/s (head turn duration = 4 s). During the last 20 trials, the head turn was halved (11°, at 2.75°/s). Each trial lasted 4.5 seconds, thus including a stabilization phase between consecutive trials (0.5 s), during which no head turn occurred. The eye motion was driven only by the cerebellum output (joint 3 torque), with a constant gain equal to 100.
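
The Test 1 schedule can be written down as a short sketch. It assumes a constant-speed ramp for the head turn, which matches the stated amplitudes and speeds, although the actual pre-defined joint profile is not given. The 0.5 s stabilization phase is not modelled, and all function names are hypothetical.

```python
N_TRIALS = 130      # total trials in Test 1
TURN_S = 4.0        # head-turn duration within each 4.5 s trial
TORQUE_GAIN = 100   # constant gain applied to the cerebellum output


def head_amplitude_deg(trial_index):
    """Trials 0..109 use a 22 degree turn, trials 110..129 use 11 degrees."""
    if not 0 <= trial_index < N_TRIALS:
        raise ValueError("trial index out of range")
    return 22.0 if trial_index < 110 else 11.0


def head_angle_deg(trial_index, t):
    """Head angle at time t (s) during the turn, assuming a constant-speed ramp."""
    if not 0.0 <= t <= TURN_S:
        raise ValueError("t must lie within the head-turn phase")
    speed = head_amplitude_deg(trial_index) / TURN_S  # 5.5 or 2.75 deg/s
    return speed * t


def joint3_torque(cerebellum_output):
    """Torque command for the eye joint: cerebellum output times the gain."""
    return TORQUE_GAIN * cerebellum_output


print(head_angle_deg(0, 2.0))    # 11.0 (halfway through a 22 degree turn)
print(head_angle_deg(110, 4.0))  # 11.0 (end of a halved turn)
```

Halving the amplitude while keeping the 4 s duration is what halves the speed from 5.5°/s to 2.75°/s in the last 20 trials.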

• Test 2: Head Motion + Object Motion

The head motion was paired with additive image motion, meaning that the image motion was due not only to the head turn but also to a real object displacement (Boyden et al., 2004). We have tested a sequence of 110 trials where the object motion was synchronously added to the head rotation (same onset instant). The head turn was as in Test 1 (22° in 4 seconds). The object, attached to the Phantom Omni, was moved at a speed of 4 cm/s. Three conditions were created: the first was a standard VOR calibration; in the second, the object moved in the same direction as the head; in the third, the object moved in the direction opposite to the head rotation.

3 RESULTS

• Test 1 – VOR Calibration

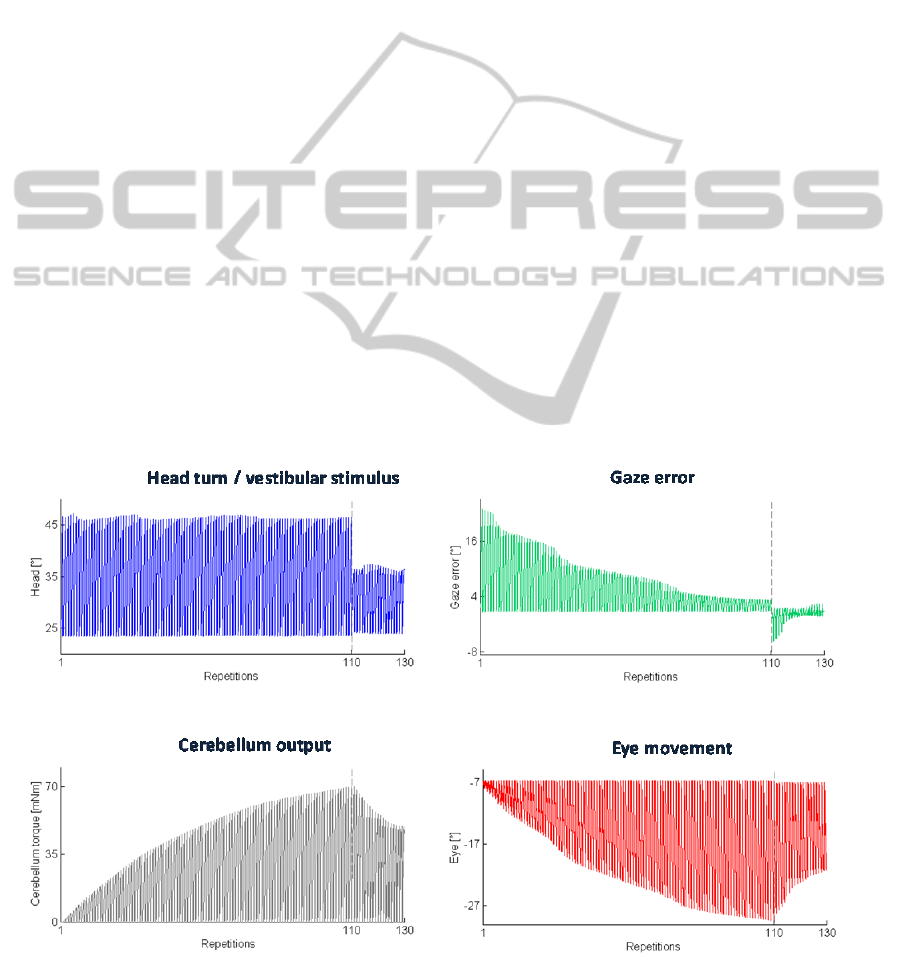

Figure 3 shows the results of these tests.

In the first trials of the task sequence, the VOR is

poorly calibrated, thus head movement results in

image motion on the retina, which would mean

blurred vision. Indeed, in these first trials, the gaze

error reaches values higher than 16° when the head

is maximally turned. Along task repetitions, the

cerebellum-driven motor learning adjusts the VOR

to produce more accurate eye motion, thus reducing

and stabilizing the gaze error.

In the last 20 trials (2nd condition), where the head rotation amplitude is reduced, the first repetitions are characterized by an eye overcompensation, as learnt during the previous condition: the eyes turn too much with respect to the head motion, overshooting the object. Very rapidly, the VOR is re-tuned, bringing the error back to the minimum level. From the zoom at the top right of the figure, it can be noted that the shape of the gaze error changes between the two conditions: the onset of the head motion is the same, but the head rotates more slowly and reaches a smaller turn from the initial head-tilt; thus the cerebellum-driven eye motion starts correctly but then overcompensates the head motion. This is adjusted within a few repetitions of the 2nd condition.

Figure 3: Test 1 – VOR Calibration. Top-left: the actual joint 2; top-right: the gaze error; bottom-left: the cerebellar output (DCN activity); bottom-right: the eye movement produced by the cerebellum output.


The cerebellum output (already multiplied by the gain, thus equal to the torque provided to the joint 3 actuator) and the produced eye motion increase over the repetitions of the first condition. Then, in the 2nd condition, where the head rotation amplitude is reduced, the VOR needs to be decreased; thus, the cerebellar activity is modulated until it reaches a value analogous to that at the end of the first condition. The decreasing process is faster than the increasing one.

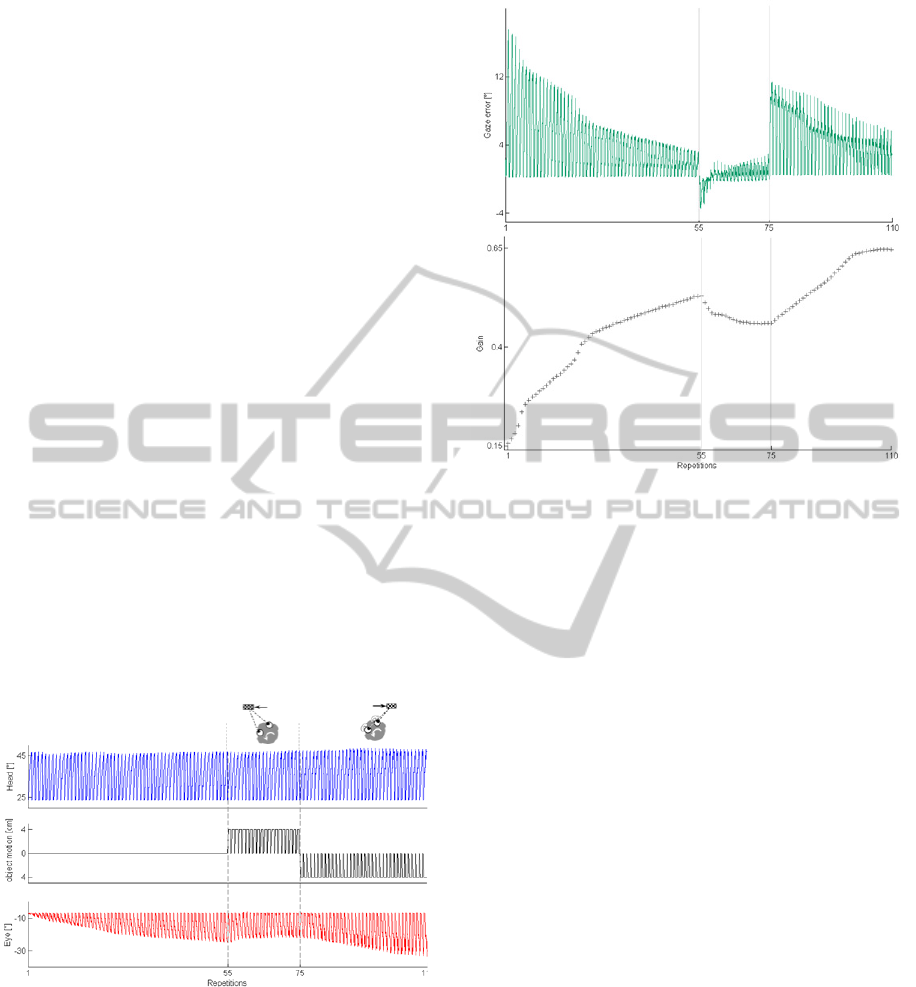

• Test 2: Head Motion + Object Motion

The sequence of 110 trials, where the head turn is constant and the object motion, when it occurs, starts together with the head motion, is depicted in Figure 4. After a VOR calibration compensating only for the head turn, the additive object motion represents a gain-down and a gain-up stimulus (2nd and 3rd conditions, respectively). Since, as demonstrated in Test 1, the VOR decrease is faster, the 2nd condition is made up of fewer repetitions than the 3rd condition.

Figure 5 is focused on the gaze error and the

gain parameter, which is tuned during the repetitions

in each condition. Unlike Test 1, here the stable gain

that is achieved at the end of each condition is not

the same; indeed, the object motion induces a

modulation of the needed eye motion, even if the

head turn has the same amplitude and duration

across all conditions. Thus, the gain is modified

(gain-down and gain-up).

Figure 4: Test 2 - head motion + object motion. The first row shows the head angle; the second row depicts the object movement (by Phantom Omni motion); the third row represents the produced compensatory eye movement, in the three conditions: the VOR calibration with fixed object, and the object motion inducing VOR gain-down and gain-up.

Figure 5: The gaze error and the gain parameter in the task

sequence with gain-down and gain-up stimuli.

4 DISCUSSION

The developed integrated robotic platform with its

controller is able to neurophysiologically reproduce

representative cerebellum-driven motor behaviors.

The cerebellar model can be viewed as a “black box” associative memory, whose function is determined by how its inputs and outputs are connected in the system in which it is embedded.

The VOR uses a head turn signal sensed by the vestibular system to drive an implicit feedforward compensation scheme. Purkinje cell activity can properly tune eye movements.

In the first test, on VOR calibration, the two consecutive conditions show a sort of generalization of learning, tuning the VOR response depending on the features of the head turn stimulus. In neurophysiological terms, the generalization process should be based on an overlap of neuronal activations, i.e. neurons active at low vestibular stimuli are a subset of those active at higher stimuli.

The second test highlights the VOR tuning with additive perturbations due to object displacement. We found that the gain-down trials show a faster learning rate than the gain-up trials. This asymmetry is due to the different mechanisms involved. Since the system was set to inhibit the DCN activity at the start of the learning process, increasing the VOR involves only the LTD mechanism (reduction of PC activity and thus increase of DCN activity). On the other hand, the need to decrease the VOR and the presence of overcompensation simultaneously enable the reduction of agonist DCN activity (PF-PC LTP) and the increase of antagonist DCN activity (PF-PC LTD). Thus, during the VOR gain-down, the stiffness of the muscle is temporarily increased and, after some time, it is reduced to the minimal level.

In summary, a simple model with parallel,

sparsely coded channels, and with a single plasticity

mechanism that alters a subset of these channels, can

go a long way in explaining the general capacity of

motor learning in the VOR to exhibit specificity for

the particular stimuli present during training.

Learning, modulation and extinction properties emerge.

In line with the distribution of plasticity at multiple synaptic sites (Gao et al., 2012), the next steps will focus on the activation of the other plasticity rules of the cerebellar model, which is expected to yield more stable and more accurate learning. The modulation of these connections (MF→DCN and PC→DCN) should lead to a learning generalization, which will be tested through multiple tasks, such as the force-field paradigm in multi-joint reaching and associative protocols such as the eye blinking classical conditioning task (Hwang and Shadmehr, 2005; Yamamoto et al., 2007; Hoffland et al., 2012). Moreover, for a more realistic computational scenario, the sensorimotor platform will embed the spiking version of this multiple-plasticity cerebellar model (Luque et al., 2011).

5 CONCLUSIONS

The developed platform, a real neuro-robot able to interact with the environment in multiple forms, is a flexible and versatile test bed to concretely interpret specific features of functional biological models, in terms of neural connectivity, plasticity mechanisms and functional roles in different closed-loop sensorimotor tasks. In particular, the focus is on the most plastic structure of the CNS, the cerebellum.

ACKNOWLEDGEMENTS

This work has been supported by the EU grant

REALNET (FP7-ICT-270434).

REFERENCES

Albus, J.S. (1971). A Theory of Cerebellar Function. Math Biosci., 10, 25–61.

Boyden, E.S., Katoh, A., Raymond, J.L. (2004). Cerebellum-dependent Learning: The Role of Multiple Plasticity Mechanisms. Annu Rev Neurosci., 27, 581–609.

Burdess, C. (1996). The Vestibulo-Ocular Reflex: Computation in The Cerebellar Flocculus. Retrieved (n.d.) from hxxp://bluezoo.org/vor/vor.pdf.

Casellato, C., Pedrocchi, A., Garrido, J.A., Luque, N.R., Ferrigno, G., D’Angelo, E., Ros, A. (2012). An Integrated Motor Control Loop of a Human-like Robotic Arm: Feedforward, Feedback and Cerebellum-based Learning. Biomedical Robotics and Biomechatronics (BioRob), 4th IEEE RAS & EMBS International Conference, 2012 June 24-27, 562–567. doi: 10.1109/BioRob.2012.6290791.

Donchin, O., Rabe, K., Diedrichsen, J., Schoch, B., Gizewski, E.R., Timmann, D. (2012). Cerebellar Regions Involved in Adaptation to Force Field and Visuomotor Perturbation. J Neurophysiol., 107, 134–147.

Gao, Z., van Beugen, B.J., De Zeeuw, C.I. (2012). Distributed Synergistic Plasticity and Cerebellar Learning. Nat Rev Neurosci., 13, 619–635.

Garrido, J.A., Luque, N.R., D’Angelo, E., Ros, E. Distributed cerebellar plasticity implements adaptable gain control in a manipulation task: a closed-loop robotic simulation. Submitted to Front. Neural Circuits.

Hwang, E.J., Shadmehr, R. (2005). Internal Models of Limb Dynamics and The Encoding of Limb State. J Neural Eng., 2(3), 266–278.

Hoffland, B.S., Bologna, M., Kassavetis, P., Teo, J.T.H., Rothwell, J.C., Yeo, C.H., van de Warrenburg, B.P., Edwards, M.J. (2012). Cerebellar Theta Burst Stimulation Impairs Eyeblink Classical Conditioning. J Physiol., 590(4), 887–897.

Ito, M. (2006). Cerebellar Circuitry as a Neuronal Machine. Prog Neurobiol., 78, 272–303.

Luque, N.R., Garrido, J.A., Carrillo, R.R., Coenen, O.J., Ros, E. (2011). Cerebellar Input Configuration Toward Object Model Abstraction in Manipulation Tasks. Neural Networks, IEEE Transactions on, 22(8), 1321–1328.

Marr, D. (1969). A Theory of Cerebellar Cortex. J Physiol., 202(2), 437–470.

van der Smagt, P. (2000). Benchmarking Cerebellar Control. Robotics and Autonomous Systems, 32, 237–251.

Yamamoto, K., Kawato, M., Kotosaka, S., Kitazawa, S. (2007). Encoding of Movement Dynamics by Purkinje Cell Simple Spike Activity during Fast Arm Movements under Resistive and Assistive Force Field. J Neurophysiol., 97(2), 1588–1599.

Yamazaki, T., Tanaka, S. (2007). The cerebellum as a liquid state machine. Neural Networks, 20(3), 290–297.
