Semi-dynamic Calibration for Eye Gaze Pointing System based on Image Processing

Kohichi Ogata and Kohei Matsumoto

Graduate School of Science and Technology, Kumamoto University, 2-39-1 Kurokami, Kumamoto 860-8555, Japan

Keywords:

Eye Gaze, Iris, Calibration, Image Processing.

Abstract:

In this paper, we propose two semi-dynamic calibration methods that compensate for the user’s head movements in an eye gaze pointing system. Because the user perceives the degradation in pointing accuracy during use, a compensatory calibration performed by the user, requiring neither additional apparatus nor costly computation, can be a useful solution to the problem. The proposed semi-dynamic calibration methods lead the user to gaze at one or three points on the computer screen and reduce the gap between the true eye gaze direction and the position of the mouse pointer. Experimental results showed that the proposed methods were capable of placing the mouse pointer within 20 pixels of the target on average at a distance of about 60 cm between the user and the display.

1 INTRODUCTION

Eye gaze interface systems have the potential to be useful multimedia tools, and related research and development have continued (Young and Sheena, 1975) (Hutchinson et al., 1989) (Wang and Sung, 2002). However, they have not yet spread into our daily lives because of their cost and complexity.

We have been developing an eye gaze detection system that uses image processing techniques without infrared light sources. In the system, the eye gaze direction is estimated by detecting the center of the iris from an eye image obtained with a miniature visible light camera. The system is capable of real-time processing at 30 fps for 320 x 240 pixel images with an accuracy of 0.6 and 1.3 degrees in the horizontal and vertical directions, respectively (Yonezawa et al., 2008b) (Yonezawa et al., 2008a) (Yonezawa et al., 2010).

In eye gaze detection systems, the user’s head movements during long-term use cause detection errors. These errors result in unwanted positioning of the mouse pointer in spite of the user’s effort. Therefore, compensation for such movements is necessary to reduce the related errors. One solution to the problem is to monitor head-related movements with multiple cameras (Talmi and Liu, 1999) (Yoo and Chung, 2005). Another method requires four or more infrared light sources (Ko et al., 2008). However, a compensation method that requires neither additional apparatus nor calculation for direct detection of the head movements can be a useful solution that keeps the system from becoming complex. In an eye gaze driven mouse-pointing system, the user perceives degradation in pointing accuracy through the discrepancy between the true eye gaze direction and the position of the mouse pointer. In this paper, we propose two semi-dynamic calibration methods for compensating for this discrepancy, which is mainly caused by the user’s head movements.

2 SYSTEM OVERVIEW

2.1 System Configuration

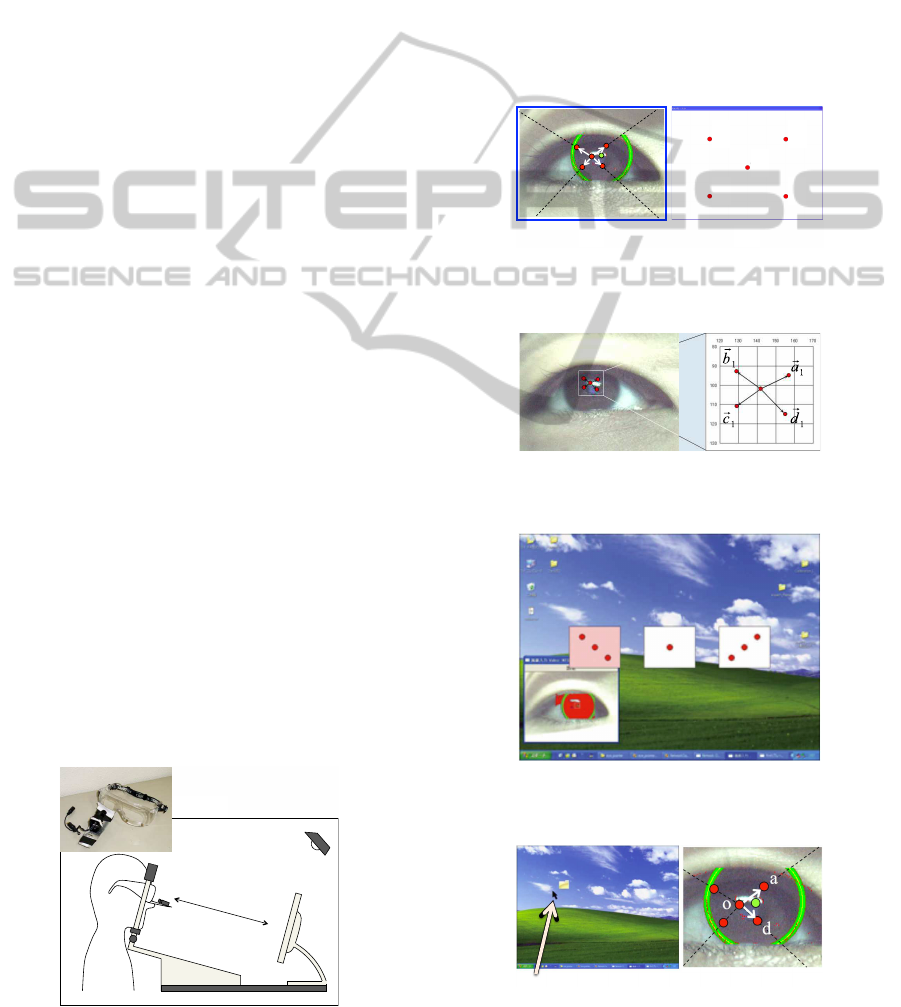

Figure 1 shows an overview of the system. The system consists of a small color video camera (Kyohritsu JPPCM25F 1/3 inch CMOS 0.25 Mpixel) and a desktop computer (CPU: Pentium4 3.2 GHz, memory: 1 GB, OS: Windows XP) with an image capture board (ImaginationPXC200). The camera is attached to the user’s goggles. A computer display and a 20-W fluorescent table lamp are located in front of the user. A chin support is used to produce a rest position.

2.2 Iris Center Detection and Calibration

Figure 2(a) shows an example of detecting the contour of the iris from an eye image obtained with the camera. The two green arcs indicate parts of the iris contour and the green dot indicates the center of the iris. These are obtained by circular pattern matching (Yonezawa et al., 2008b). Figure 2(b) shows the five calibration points displayed on the screen during the calibration process, in which a mapping function between the detected iris centers and the calibration points is calculated. In Figure 2(a), the five iris centers that correspond to the five calibration points in Figure 2(b) are also shown as red dots.

Ogata K. and Matsumoto K.
Semi-dynamic Calibration for Eye Gaze Pointing System based on Image Processing.
DOI: 10.5220/0004063602330236
In Proceedings of the International Conference on Signal Processing and Multimedia Applications and Wireless Information Networks and Systems (SIGMAP-2012), pages 233-236
ISBN: 978-989-8565-25-9
Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

Figure 3 shows an example of calibration vectors used as a mapping function. The eye gaze direction is estimated by linear interpolation using the detected iris center and the calibration vectors on the eye image. We used the calibration method of Fukushima et al. (1999).
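The linear interpolation with calibration vectors can be illustrated with a minimal sketch. It assumes, which the text does not state, that the four calibration vectors run from the central iris position o to the iris positions recorded for the points a, b, c and d, and that a detected iris center is written as a non-negative combination of the two adjacent vectors that enclose it; the same coefficients are then applied to the corresponding vectors on the screen. The function name, the dictionary layout and the sample coordinates are hypothetical and are not taken from the cited calibration method.

```python
import numpy as np

# Adjacent pairs of calibration vectors; each pair bounds one sector around o.
SECTORS = (("a", "b"), ("b", "c"), ("c", "d"), ("d", "a"))


def gaze_to_screen(iris, iris_cal, screen_cal, tol=1e-9):
    """Map an iris center (eye-image pixels) to a screen position.

    iris_cal / screen_cal: dicts with keys 'o', 'a', 'b', 'c', 'd' holding the
    iris centers recorded during calibration and the matching screen points.
    """
    o_eye = np.asarray(iris_cal["o"], dtype=float)
    o_scr = np.asarray(screen_cal["o"], dtype=float)
    rel = np.asarray(iris, dtype=float) - o_eye
    for k1, k2 in SECTORS:
        v1 = np.asarray(iris_cal[k1], dtype=float) - o_eye
        v2 = np.asarray(iris_cal[k2], dtype=float) - o_eye
        # Solve rel = s * v1 + t * v2 for the interpolation coefficients.
        s, t = np.linalg.solve(np.column_stack([v1, v2]), rel)
        if s >= -tol and t >= -tol:  # the iris center lies inside this sector
            w1 = np.asarray(screen_cal[k1], dtype=float) - o_scr
            w2 = np.asarray(screen_cal[k2], dtype=float) - o_scr
            return o_scr + s * w1 + t * w2
    raise ValueError("iris center is not covered by any calibration sector")


# Hypothetical calibration data: 320 x 240 eye image, 1024 x 768 screen.
iris_cal = {"o": (160, 120), "a": (140, 105), "b": (180, 105),
            "c": (180, 135), "d": (140, 135)}
screen_cal = {"o": (512, 384), "a": (0, 0), "b": (1024, 0),
              "c": (1024, 768), "d": (0, 768)}
print(gaze_to_screen((150, 120), iris_cal, screen_cal))
```

In this sketch the mapping is piecewise linear: it is exact at the five calibration points and interpolates between them within each sector.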

3 SEMI-DYNAMIC CALIBRATION

Although a chin support is used as a positional reference and to reduce the head movements of the user, long-term use requires a mechanism that compensates for the errors mainly caused by head movements. Such a mechanism may eventually lead to a system that is free from the chin support.

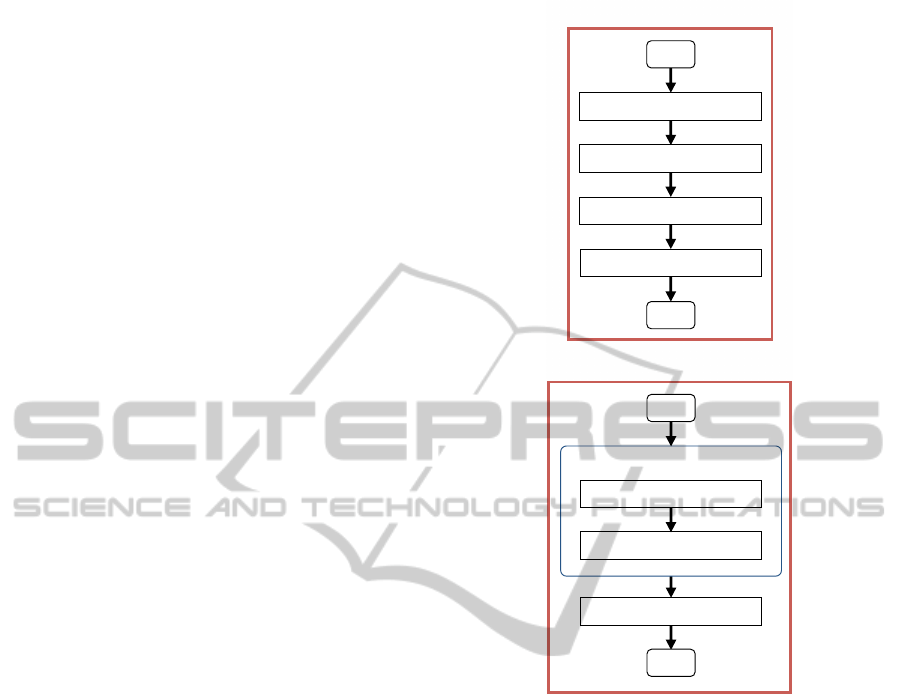

Figure 1: Overview of the eye gaze pointing system. Labels: goggles; 20 W fluorescent lamp; 60 cm.

Figures 4 and 5 show overviews of the two proposed semi-dynamic calibration methods. In the semi-dynamic calibration shown in Figure 4 (Method 1), three icons corresponding to three types of modification of the mapping function appear on the screen after the user blinks intentionally. The three icons correspond to expansion (left icon), parallel translation (middle icon) and reduction (right icon) of the mapping vectors shown in Figure 3. The user selects an icon depending on the mismatch between the present location of the mouse pointer and the location where the user intends to place it on the screen. In Method 1, the mapping function is modified globally, i.e., over the whole display screen.
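The three global modifications of Method 1 can be sketched as operations on the calibration points in the eye image. This is only an illustration under assumptions: the amount of expansion or reduction per selection (`step`) and the way the translation offset (`shift`) is obtained are not given in the text, so both are hypothetical parameters, as are the function name and the sample coordinates.

```python
import numpy as np


def modify_globally(iris_cal, mode, shift=(0.0, 0.0), step=0.1):
    """Return a globally modified copy of the calibration points.

    mode: 'expand', 'translate' or 'reduce' (the three icons of Method 1).
    shift: eye-image offset applied for 'translate' (hypothetical parameter).
    step: relative change of vector length for 'expand' and 'reduce'
          (hypothetical parameter).
    """
    points = {k: np.asarray(v, dtype=float) for k, v in iris_cal.items()}
    if mode == "translate":
        offset = np.asarray(shift, dtype=float)
        # Parallel translation: every calibration point moves by the same offset.
        return {k: p + offset for k, p in points.items()}
    if mode == "expand":
        factor = 1.0 + step
    elif mode == "reduce":
        factor = 1.0 - step
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    o = points["o"]
    # Scale every calibration vector (point - o) about the central point o.
    return {k: o + factor * (p - o) for k, p in points.items()}


# Hypothetical iris centers recorded for the five calibration points.
iris_cal = {"o": (160, 120), "a": (140, 105), "b": (180, 105),
            "c": (180, 135), "d": (140, 135)}
expanded = modify_globally(iris_cal, "expand")
translated = modify_globally(iris_cal, "translate", shift=(3, -2))
reduced = modify_globally(iris_cal, "reduce")
```

Because all five points are transformed together, the change affects pointing over the whole screen, which matches the global character of Method 1.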

Figure 2: Detection of the contour of the iris and target points in the calibration. Panels (a) and (b); labels: iris center; points a, b, c, d and o.

Figure 3: Example of calibration vectors as a mapping function.

Figure 4: Semi-dynamic calibration for global modification of the mapping function (Method 1).

Figure 5: Semi-dynamic calibration for local modification of the mapping function (Method 2). Labels: points a, d and o; mouse pointer.

In contrast, in the semi-dynamic calibration shown in Figure 5 (Method 2), the two calibration vectors that cover the quarter area containing the eye gaze point are modified. Since the rough eye gaze point on the screen is known, the two vectors to be modified can be determined automatically. This modification mode is activated by the user’s intentional blink, after which the user gazes at the three points that form the start and end points of the two calibration vectors. This method modifies the mapping function locally and requires fewer steps than Method 1. The user can activate either of the two modification modes at any time with a blink lasting one second or longer.
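A rough sketch of the local modification in Method 2 follows. It assumes that each quarter area is the sector bounded by two adjacent calibration vectors, that the sector is chosen from the rough gaze point on the screen, and that the three newly recorded iris centers simply replace the origin and the two end points of that sector. The function names, the returned structure and the sample values are hypothetical.

```python
import numpy as np

# Adjacent pairs of calibration points; each pair bounds one quarter area.
SECTORS = (("a", "b"), ("b", "c"), ("c", "d"), ("d", "a"))


def find_sector(point, cal, tol=1e-9):
    """Return the pair of calibration-point keys whose vectors enclose point."""
    o = np.asarray(cal["o"], dtype=float)
    rel = np.asarray(point, dtype=float) - o
    for k1, k2 in SECTORS:
        v1 = np.asarray(cal[k1], dtype=float) - o
        v2 = np.asarray(cal[k2], dtype=float) - o
        # rel = s * v1 + t * v2 with s, t >= 0 means point is in this sector.
        s, t = np.linalg.solve(np.column_stack([v1, v2]), rel)
        if s >= -tol and t >= -tol:
            return k1, k2
    raise ValueError("point is not covered by any sector")


def modify_locally(screen_cal, rough_gaze, measured):
    """Build replacement calibration vectors for the sector at rough_gaze.

    measured: iris centers recorded while the user gazes at o and at the two
    end points of the selected vectors, in that order.
    """
    k1, k2 = find_sector(rough_gaze, screen_cal)
    o_new, p1, p2 = (np.asarray(m, dtype=float) for m in measured)
    return {"sector": (k1, k2), "origin": o_new,
            "vectors": {k1: p1 - o_new, k2: p2 - o_new}}


# Hypothetical screen layout and newly measured iris centers.
screen_cal = {"o": (512, 384), "a": (0, 0), "b": (1024, 0),
              "c": (1024, 768), "d": (0, 768)}
update = modify_locally(screen_cal, (100, 400),
                        [(161, 121), (142, 137), (141, 104)])
print(update["sector"])
```

In a real system the sector would be determined first and the three gaze targets shown afterwards; the sketch takes the measurements as an argument only to stay short. Only the two vectors of the selected sector change, so the rest of the screen keeps its original mapping.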

4 EXPERIMENTS

Experiments for evaluating the proposed methods were performed with five subjects with normal eyesight. The experimental setup is the same as in Figure 1. A 17-inch display with 1024 x 768 pixels was placed in front of the subject at a distance of about 60 cm. Figure 6 shows flowcharts of the experiments. The experiments consist of three stages: Experiments I, II and III. In Experiment I, the usual calibration was performed and the related parameters were stored for later use in Experiments II and III. After the calibration, the subject was asked to lift his or her head from the chin support and to place it again. The subject was then asked to gaze at 25 target points that appeared randomly one after another on the screen. In Experiment I, the mouse pointer was not displayed on the screen so that the original accuracy of the eye gaze detection could be evaluated without the user’s adjustment. The accuracy for each target point was calculated from the image data of 30 frames after the subject pressed the space key on the keyboard. Experiment I was followed by Experiments II and III, and the stored calibration parameters were used at the beginning of each experiment to reproduce the same calibration condition as in Experiment I. The subject was then asked to move the mouse pointer to 25 target points that appeared randomly one after another. The pointing accuracy for each target point was calculated from the image data of 30 frames in the same way as in Experiment I. In Experiments II and III, the subjects used semi-dynamic calibration Methods 1 and 2, respectively, when necessary.
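One plausible way to compute horizontal, vertical and distance errors from 30 frames per target is sketched below. The exact formula is not given in the text, so this sketch assumes that each error is the mean absolute deviation (or mean Euclidean distance) between the estimated gaze point and the target, averaged over frames and then over targets. The function name and the sample data are hypothetical.

```python
import numpy as np


def pointing_errors(estimates, targets):
    """Average horizontal, vertical and distance errors in pixels.

    estimates: array of shape (n_targets, n_frames, 2) with estimated gaze
               points on the screen.
    targets:   array of shape (n_targets, 2) with the target positions.
    """
    est = np.asarray(estimates, dtype=float)
    tgt = np.asarray(targets, dtype=float)[:, None, :]  # broadcast over frames
    diff = est - tgt
    horizontal = np.abs(diff[..., 0]).mean()
    vertical = np.abs(diff[..., 1]).mean()
    distance = np.hypot(diff[..., 0], diff[..., 1]).mean()
    return horizontal, vertical, distance


# Hypothetical data: two targets, 30 identical frames each.
targets = np.array([[100.0, 100.0], [200.0, 200.0]])
estimates = np.stack([np.tile([103.0, 104.0], (30, 1)),
                      np.tile([200.0, 190.0], (30, 1))])
h_err, v_err, d_err = pointing_errors(estimates, targets)
print(h_err, v_err, d_err)
```

With this definition the distance error is generally larger than the value obtained by combining the averaged horizontal and vertical errors, because the distance is averaged frame by frame.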

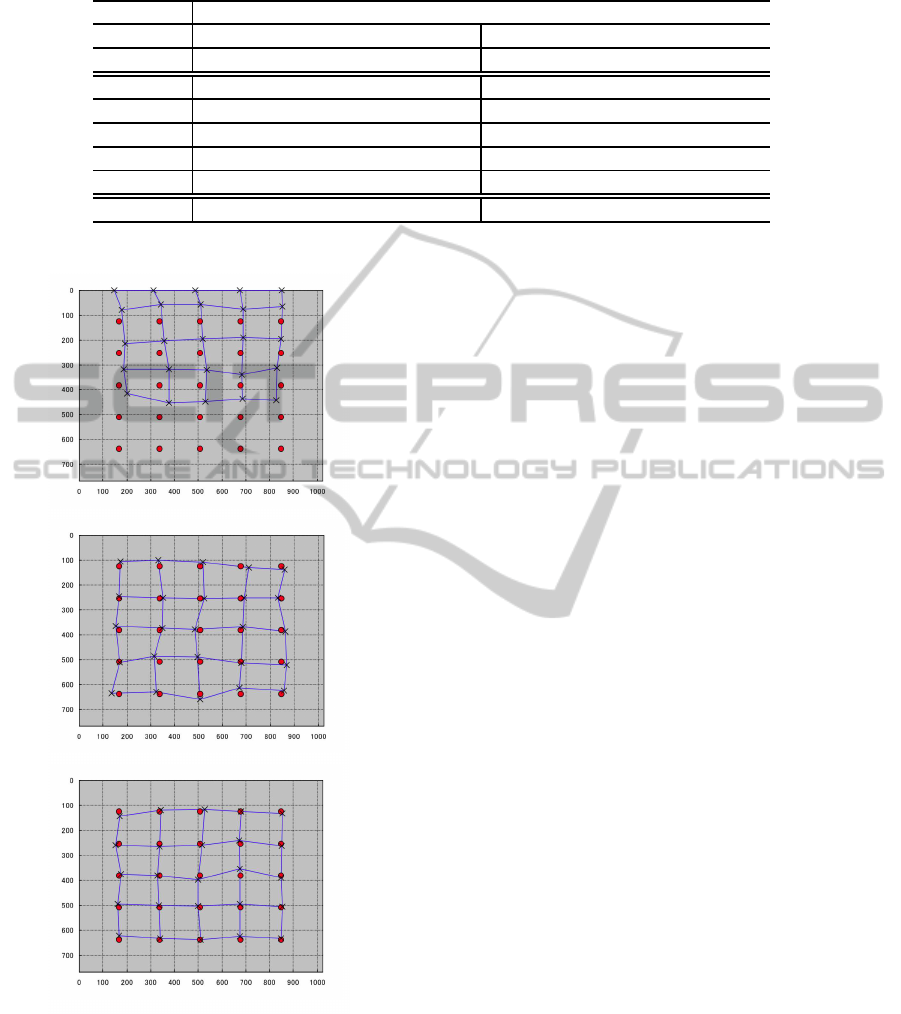

Figure 7 shows examples of pointing accuracy for Subject 3. In each panel, the arrangement of the 25 target points on the screen is shown as red dots, and the estimated eye gaze points based on the iris detection are shown as mesh points. Figure 7(a) shows extremely large errors and suggests that it would be extremely difficult to place the pointer, even if it were displayed, because of the large discrepancy. In contrast, both semi-dynamic calibration methods, shown in Figure 7(b) and (c), give quite good pointing accuracy.

Figure 6: Flowcharts for experiments. Boxes in Experiment I: start; calibration; head movements; detection of the iris center; detection of eye gaze; end. Boxes in Experiments II and III: start; calibration (parameters from Experiment I are used); detection of the iris center; pointing by eye gaze; end.

Table 1 shows the pointing accuracy for all the subjects. Each value is the average over the 25 target points. These results suggest that the pointing accuracy with the semi-dynamic calibration methods is, on average over the subjects, within 20 pixels in distance (18.3 and 17.5 pixels for Methods 1 and 2, respectively), which is sufficient for pointing at a regular-sized icon. The average numbers of times the method was applied, over all the subjects, were 8.4 and 7.2 for Methods 1 and 2, respectively. Questionnaires assessing usability indicated that the proposed methods were easy to use and effective.
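To relate the 20-pixel figure to a visual angle, a short calculation can be made. It assumes square pixels, a viewable diagonal of exactly 17 inches, a viewing distance of exactly 600 mm and a target near the screen center; none of these details are stated precisely in the text, and the function name is hypothetical.

```python
import math


def pixels_to_degrees(pixels, diagonal_inch=17.0, resolution=(1024, 768),
                      distance_mm=600.0):
    """Convert an on-screen length in pixels to a visual angle in degrees."""
    diagonal_px = math.hypot(*resolution)            # 1280 px for 1024 x 768
    pitch_mm = diagonal_inch * 25.4 / diagonal_px    # size of one pixel in mm
    return math.degrees(math.atan(pixels * pitch_mm / distance_mm))


angle = pixels_to_degrees(20)
print(f"{angle:.2f} degrees")  # about 0.64 degrees under the stated assumptions
```

Under these assumptions, 20 pixels corresponds to roughly 0.64 degrees of visual angle.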

5 CONCLUSIONS

In this paper, semi-dynamic calibration methods for an eye gaze pointing system were proposed to compensate for the user’s head movements. Experimental results showed that the proposed methods were capable of placing the mouse pointer within 20 pixels of the target on average at a distance of about 60 cm between the user and the display. These results suggest that the proposed methods are effective solutions with reasonable accuracy for practical use, and that they keep the system from becoming complex because they require neither additional apparatus nor calculation for direct detection of the head movements.

Table 1: Results of pointing accuracy. Accuracy is given in pixels.

| Subject | Method 1 Horizontal | Method 1 Vertical | Method 1 Distance | Method 2 Horizontal | Method 2 Vertical | Method 2 Distance |
|---|---|---|---|---|---|---|
| Subject 1 | 10.8 | 10.9 | 17.2 | 10.2 | 10.8 | 16.1 |
| Subject 2 | 7.6 | 9.5 | 13.5 | 7.2 | 10.8 | 14.5 |
| Subject 3 | 12.7 | 11.8 | 19.3 | 5.5 | 9.8 | 12.1 |
| Subject 4 | 10.9 | 10.5 | 16.6 | 9.5 | 10.9 | 16.3 |
| Subject 5 | 16.9 | 14.5 | 25.1 | 15.7 | 18.6 | 28.6 |
| Average | 11.8 | 11.4 | 18.3 | 9.6 | 12.2 | 17.5 |

Figure 7: Examples of pointing accuracy for Subject 3. (a) Without semi-dynamic calibration. (b) With semi-dynamic calibration Method 1. (c) With semi-dynamic calibration Method 2.

ACKNOWLEDGEMENTS

Part of this work was supported by Grant-in-Aid for Scientific Research (C) from the Ministry of Education, Science, Sports and Culture, Japan.

REFERENCES

Fukushima, S., Arai, T., Morikawa, D., Shimoda, H., and Yoshikawa, H. (1999). Development of eye-sensing head mounted display. Trans. of the Society of Instrument and Control Engineers, 35:699–707.

Hutchinson, T. E., White, K. P. J., Martin, W., Reichert, K. C., and Frey, L. A. (1989). Human-computer interaction using eye-gaze input. IEEE Transactions on Systems, Man, and Cybernetics, 19:1527–1534.

Ko, Y., Lee, E., and Park, K. (2008). A robust gaze detection method by compensating for facial movements based on corneal specularities. Pattern Recognition Letters, 29:1474–1485.

Talmi, K. and Liu, J. (1999). Eye and gaze tracking for visually controlled interactive stereoscopic displays. Signal Processing: Image Communication, 14:799–810.

Wang, J. and Sung, E. (2002). Study on eye gaze estimation. IEEE Transactions on Systems, Man, and Cybernetics-Part B: Cybernetics, 32:332–350.

Yonezawa, T., Ogata, K., Matsumoto, K., Hirase, S., Shiratani, K., Kido, D., and Nishimura, M. (2010). A study on reducing the detection errors of the center of the iris for an eye-gaze interface system. IEEJ, 130-C, 3:442–449.

Yonezawa, T., Ogata, K., Nishimura, M., and Matsumoto, K. (2008a). Development of an eye gaze interface system and improvement of cursor control function. In Proceedings of International Conference on Signal Processing and Multimedia Applications (SIGMAP 2008). SciTePress.

Yonezawa, T., Ogata, K., and Shiratani, K. (2008b). An algorithm for detecting the center of the iris using a color image and improvements of its processing speed. IEEJ, 128-C, 2:253–259.

Yoo, D. H. and Chung, M. J. (2005). A novel non-intrusive eye gaze estimation using cross-ratio under large head motion. Computer Vision and Image Understanding, 98:25–51.

Young, L. and Sheena, D. (1975). Survey of eye movement recording methods. Behavior Research Methods and Instrumentation, 7:397–429.
