Gesture and Body Movement Recognition in the Military Decision Support System

Jan Hodicky and Petr Frantis

Communication and Information System Department, University of Defense, 65 Kounicova str., Brno, Czech Republic

Keywords: Military Decision Support System, Common Operational Picture, Visualization, Kinect.

Abstract: The paper presents results of research in the field of military decision support systems. It introduces a new way of communication between the system and the commander. Kinect, a low-cost gesture and body movement recognition device, was employed to control a 3D visualization of the real-time battlefield situation. Experiments confirmed that Kinect is suitable for supporting all phases of the decision making process. The quality of operation planning and control increased.

1 INTRODUCTION

In the military field, decision support systems play a major role in modern operations. Before the military decision support system can be defined, the general (non-military) definition must be stated. One generally accepted definition states that a decision support system (DSS) is a computer technology that can be used to support complex decision making and problem solving (Shim, 2002).

In that context a DSS is an information system or system of systems that must:

- Help decision makers (individuals or groups);

- Use information and communication technology (ICT) to deal with data, information and knowledge gathering, processing and presentation;

- Help to solve non-documented or non-structured problems;

- Support the realization of all parts of the decision making process;

- Help to identify the best problem solution.

The definition of a military decision support system (MDSS) corresponds to the DSS definition above but is aimed at the real-time battlefield domain. An MDSS helps the warfighter to gain and maintain information superiority in order to achieve command superiority in war and peace time (Tolk, 2000).

Intensive research activity in the MDSS area dates back to the 1970s. Many concepts have been introduced since then, but the most important milestone is 1995, when the first command and control (C2) system was implemented in the US Army (FBCB2, 2008). A C2 system is a DSS based on a geographic information system that provides a set of capabilities to deal with geo-referenced input, storage, analysis and output. C2 places high demands on real-time visualization of all objects in the battlefield. The main interface between the warfighter and C2 is the common operational picture (COP) (Johansen, 2005). The common operational picture is mainly composed of a real-time visualization of friendly and enemy force positions and other tactical data. Current research activities focus on improving the reading, presentation and understanding of the COP.

2 SHORTCOMINGS OF THE CURRENT C2 SYSTEM

The best way to understand the COP is through its real-time visualization. Recent research has revealed that 3D visualization can significantly improve battlefield understanding. A new presentation layer of the C2 system with 3D visualization capabilities has already been presented (Prenosil, 2008).

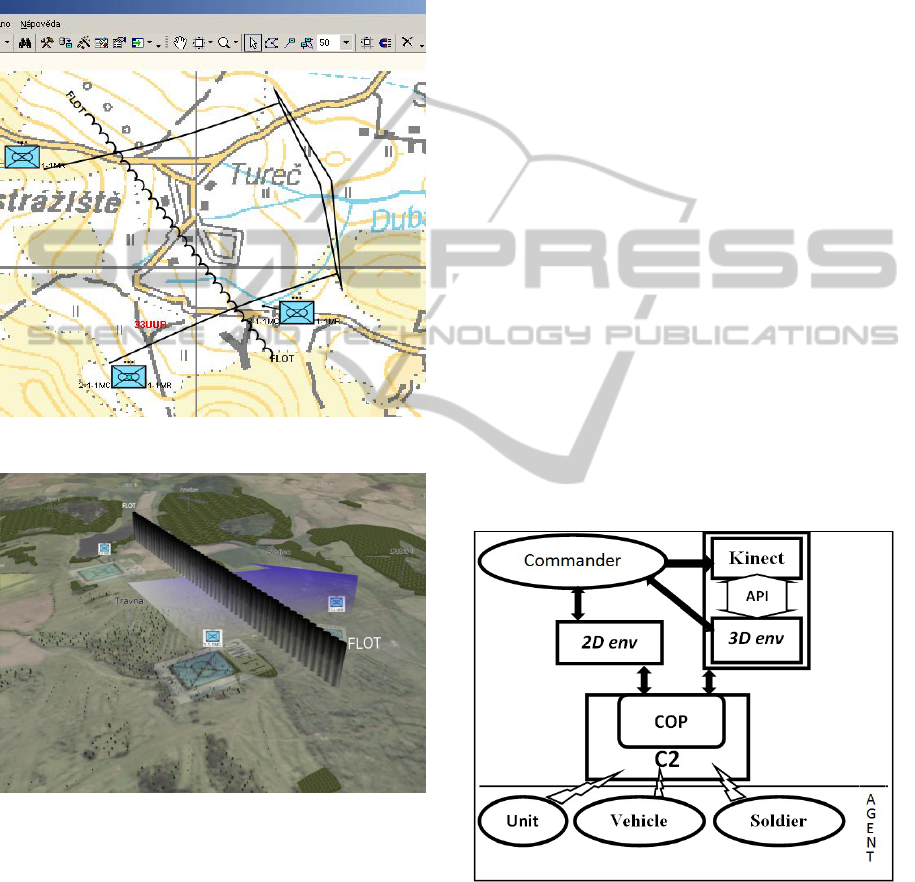

Figures 1 and 2 show the COP visualized in two and three dimensions. The unit symbols and crucial tactical data are presented in relation to the 3D terrain. The COP can be projected in 2D or by 3D stereoscopic projection. In the latter case the commanders (decision makers) must wear appropriate glasses that are synchronized with the stereo projection to perceive the 3D environment. The commander controls the 3D environment with well-known devices such as a mouse and keyboard. This way of control is very distracting in the mission planning and control phases of the decision making process.

Hodicky J. and Frantis P.

Gesture and Body Movement Recognition in the Military Decision Support System.

DOI: 10.5220/0003971903010304

In Proceedings of the 9th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2012), pages 301-304

ISBN: 978-989-8565-21-1

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

Figure 1: COP - visualized units and tactical data in 2D.

Figure 2: COP - visualized units and tactical data in 3D.

From the point of view of the human-machine interface and the commander, the most important requirements that the current C2 solution does not meet are:

- The 3D visualization solution should be implemented at low cost;

- The 3D visualization solution must be easily and quickly configurable and reconfigurable;

- The 3D solution must be deployable as fast as possible;

- COP control must be as fast as possible;

- COP control must be natural and very fast to learn;

- COP control must not disturb the commander in his decision making process at the command post.

These facts led to a new research activity focused on implementing a new way of communication between commanders and the COP.

3 NEW ARCHITECTURE OF C2 SYSTEM

In 2011 our team used the Microsoft Kinect motion tracking device to enhance the 3D visualization solution. This enabled the commander to control the COP by gestures and body movements. Microsoft Kinect is a low-cost gesture and body motion tracking device that can be connected not only to the Xbox 360 console but also to a PC via a USB cable. The new C2 architecture is shown in Figure 3. Agents (units, vehicles, individuals) collect information about the battlefield and send it to the core of the C2 system. In the core of the C2 system the incoming data are analyzed and the COP is created. The COP is visualized in 2D or in 3D in the new presentation layer. This presentation layer contains the Kinect application programming interface (API), which enables the commander to interact with the system in the 3D environment.

Figure 3: New architecture of C2 system with Kinect.
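The data flow described above (agents report, the core analyzes, the COP is created and handed to the presentation layer) can be illustrated with a minimal sketch. It is not the architecture of the actual C2 system: the class names `AgentReport`, `C2Core` and `CommonOperationalPicture`, the report fields and the "newest report wins" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class AgentReport:
    """One geo-referenced report sent by an agent (unit, vehicle, individual)."""
    agent_id: str
    side: str          # "friendly" or "enemy" (hypothetical labels)
    lat: float
    lon: float
    timestamp: float   # seconds on any shared clock


@dataclass
class CommonOperationalPicture:
    """Latest known report per agent, as a presentation layer might read it."""
    tracks: Dict[str, AgentReport] = field(default_factory=dict)

    def positions(self, side: str) -> List[AgentReport]:
        # Return the reports of one side, ordered by agent id for stable display.
        return sorted(
            (r for r in self.tracks.values() if r.side == side),
            key=lambda r: r.agent_id,
        )


class C2Core:
    """Hypothetical core: keeps only the newest report for each agent."""

    def __init__(self) -> None:
        self.cop = CommonOperationalPicture()

    def ingest(self, report: AgentReport) -> None:
        current = self.cop.tracks.get(report.agent_id)
        # Assumed rule: an older report never overwrites a newer one.
        if current is None or report.timestamp >= current.timestamp:
            self.cop.tracks[report.agent_id] = report


core = C2Core()
core.ingest(AgentReport("tank-1", "friendly", 49.20, 16.60, timestamp=10.0))
core.ingest(AgentReport("tank-1", "friendly", 49.21, 16.61, timestamp=12.0))
core.ingest(AgentReport("tank-1", "friendly", 49.19, 16.59, timestamp=11.0))  # stale
core.ingest(AgentReport("bmp-7", "enemy", 49.25, 16.70, timestamp=11.5))
```

In this sketch the presentation layer would only call `core.cop.positions(...)`, so a 2D and a 3D view could share the same COP object.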

The 3D commander workstation is composed of:

- 3D stereoscopic projector;

- 5 synchronized glasses (for the commander and his staff);

- Projection screen;

- Kinect sensor placed under the projection screen;

- Standard PC.

The commander is able to control the 3D environment by body movements. Before first use the system should be calibrated according to the commander's physique and initial position (IP). He can then immediately control the 3D environment. Moving away from the IP moves the camera in the 3D environment in the corresponding direction. The commander's right hand controls the camera viewing direction (it replaces the computer mouse in the 3D environment). The commander's left hand controls the level of detail in the scene. When the commander points the left hand down, the 3D environment immediately increases the level of detail in the scene and the camera moves closer to the terrain. If the commander points the left hand up, the 3D environment decreases the level of detail and the distance between the camera and the terrain increases. The commander's 3D workstation with Kinect is shown in Figure 4.

Figure 4: Commander 3D workstation with Kinect.
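The control scheme described above can be sketched as a mapping from tracked joint positions to camera commands. This is an illustration under stated assumptions, not the implementation used in the experiments: it does not call the Kinect API, the coordinate frame (metres, y pointing up), the `Pose` and `CameraCommand` types and the dead-zone threshold are hypothetical, and calibration is reduced to storing one reference pose captured at the IP.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in metres; y is up (assumed frame)

DEAD_ZONE_M = 0.15  # hypothetical threshold that ignores small, unintended motion


@dataclass(frozen=True)
class Pose:
    """Joint positions for one frame, as a skeleton tracker might report them."""
    torso: Vec3
    left_hand: Vec3
    right_hand: Vec3


@dataclass(frozen=True)
class CameraCommand:
    move_x: float  # sideways camera motion, from stepping left/right of the IP
    move_z: float  # forward/backward camera motion, from stepping along z
    yaw: float     # viewing direction change, from the right hand
    pitch: float
    zoom: int      # +1 closer and more detail, -1 farther and less detail, 0 hold


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dead_zone(value: float) -> float:
    return 0.0 if abs(value) < DEAD_ZONE_M else value


def to_command(pose: Pose, initial: Pose) -> CameraCommand:
    """Compare the current pose with the calibrated pose taken at the IP."""
    # Body displacement from the IP moves the camera in the same direction.
    step = _sub(pose.torso, initial.torso)
    # Hand offsets are measured relative to the torso, so walking does not steer.
    right = _sub(_sub(pose.right_hand, pose.torso),
                 _sub(initial.right_hand, initial.torso))
    left = _sub(_sub(pose.left_hand, pose.torso),
                _sub(initial.left_hand, initial.torso))
    # Left hand lowered: more detail, camera closer; raised: the opposite.
    left_dy = _dead_zone(left[1])
    zoom = 1 if left_dy < 0 else (-1 if left_dy > 0 else 0)
    return CameraCommand(
        move_x=_dead_zone(step[0]),
        move_z=_dead_zone(step[2]),
        yaw=_dead_zone(right[0]),
        pitch=_dead_zone(right[1]),
        zoom=zoom,
    )


# Calibration: the commander stands at the IP with both hands in a neutral pose.
calibrated = Pose(torso=(0.0, 1.0, 2.0),
                  left_hand=(-0.3, 1.0, 1.8),
                  right_hand=(0.3, 1.0, 1.8))

# One later frame: a step to the side with the left hand lowered by 0.4 m.
frame = Pose(torso=(0.4, 1.0, 2.0),
             left_hand=(0.1, 0.6, 1.8),
             right_hand=(0.7, 1.0, 1.8))
command = to_command(frame, calibrated)
```

Measuring hand offsets relative to the torso is a design choice of this sketch: it keeps the three controls (body, right hand, left hand) independent, which matches the separation of roles described in the text.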

4 COMMANDER DECISION MAKING PROCESS

The commander's decision making process is divided into two main parts:

- Operation planning;

- Operation control.

During operation planning the commander and his staff deal with possible variations of the task, maneuver, activity, etc. During the briefing the commander and his staff use the COP to clarify their intentions with respect to the geographical 3D data. The time interval in which consensus must be reached can be essential. The result of the planning process is complete documentation for the operation (for example an operation order, OPORD).

During operation control the commander commands and controls the subordinate units to carry out the created plan. It is a real-time process, and the COP must correspond to the real situation on the battlefield. During the battle the COP changes, so the commander must revise the plan based on discussion with his staff. The time interval in which consensus must be reached is crucial.

In both cases one of the main goals is to decrease the time needed to reach consensus without decreasing the quality of the decision making process.

5 EXPERIMENT

In our experiment we wanted to find out whether the Kinect sensor implementation, that is, gesture and body movement recognition of the commander controlling the 3D environment (COP), can decrease the time needed to reach consensus.

Our experiment was divided into two parts according to the two parts of the decision making process in which the COP is used: operation planning and operation control. In both parts two groups of 5 military students took part. One student in each group (G1, G2) was the commander and the rest were his staff.

In the operation planning experiment both groups were given the same task in two scenarios: to generate an operation order for the attack of a company on the defensive position of one enemy platoon. In the first scenario (S1) the first group (G1) could use Kinect to control the 3D COP and the other group (G2) could not. The second scenario (S2) was conducted the opposite way and in different terrain: G1 could not use Kinect and G2 could use Kinect to generate the operation order. Table 1 shows the overall time needed to generate the OPORD.

Table 1: Planning operation experiment results.

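| Scenario | Group | Kinect | Time [s] |
|----------|-------|--------|----------|
| S1       | G1    | X      | 1750     |
| S1       | G2    | -      | 1920     |
| S2       | G1    | -      | 1720     |
| S2       | G2    | X      | 1640     |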

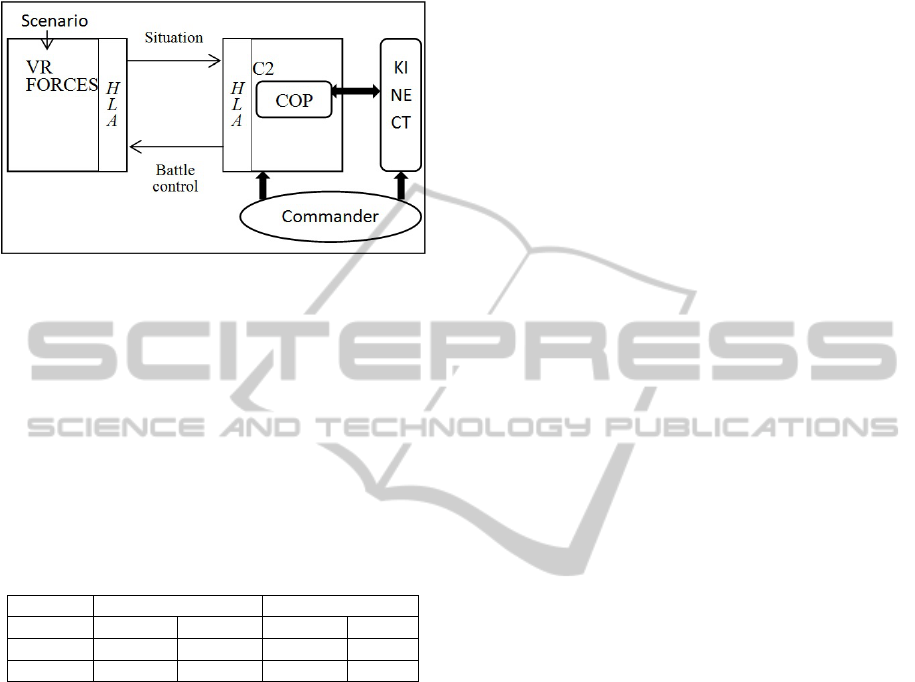

In the operation control experiment an interconnection between a constructive simulator (VR Forces) and the Czech Army C2 system was implemented. VR Forces was set up with scenarios S1 and S2 from the operation planning experiment. VR Forces fed the C2 system with the scenario, the "real-time" operation was modeled in VR Forces, and the COP was directly visualized in the 3D environment. Figure 5 shows the architecture of the experiment in the operation control phase with the Kinect solution.

Figure 5: Operation control experiment architecture with Kinect.

The aim of the exercise was to destroy the enemy platoon based on the previously generated OPORDs. In the first scenario (S1) the commander of group G1 could use Kinect to control the 3D environment while communicating with his staff, whereas G2 could not use Kinect. In the second scenario (S2) the commander of G1 could not use Kinect while the commander of G2 could. Table 2 shows the overall time needed to destroy all enemy vehicles.

Table 2: Operation control experiment results.

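| Scenario | Group | Kinect | Time [s] |
|----------|-------|--------|----------|
| S1       | G1    | X      | 185      |
| S1       | G2    | -      | 220      |
| S2       | G1    | -      | 410      |
| S2       | G2    | X      | 320      |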

6 RESULTS

Results from the first part of the experiment, which focused on operation planning, reveal that the commander using Kinect to control the 3D environment (COP) was able to construct the operation order in a shorter time. The second part of the experiment likewise found that, in the simulated environment, the time needed to successfully execute the operation order is shorter when Kinect is used.
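The size of the difference can be worked out directly from the times in Tables 1 and 2. The short calculation below is only arithmetic on the published values; the relative-saving formula (time saved divided by the time without Kinect) is one possible way to express the comparison and is an assumption of this sketch. Within each scenario it compares two different groups with one run each, so the numbers describe these runs only.

```python
# Times in seconds from Tables 1 and 2: (with Kinect, without Kinect).
results = {
    ("planning", "S1"): (1750, 1920),  # G1 with Kinect, G2 without
    ("planning", "S2"): (1640, 1720),  # G2 with Kinect, G1 without
    ("control", "S1"): (185, 220),     # G1 with Kinect, G2 without
    ("control", "S2"): (320, 410),     # G2 with Kinect, G1 without
}


def relative_saving(with_kinect: float, without_kinect: float) -> float:
    """Fraction of the no-Kinect time that was saved in the Kinect run."""
    return (without_kinect - with_kinect) / without_kinect


savings = {key: relative_saving(*times) for key, times in results.items()}

for (phase, scenario), saving in savings.items():
    print(f"{phase:8s} {scenario}: {saving:.1%} shorter with Kinect")
```

For these values the Kinect runs are roughly 9% and 5% shorter in planning (S1, S2) and roughly 16% and 22% shorter in operation control.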

Based on discussion with the group of testers, the main benefits of the Kinect solution are:

- Easier orientation in the terrain thanks to the natural way of controlling the 3D environment;

- Faster communication with the COP;

- Easier explanation of potential maneuvers;

- Better understanding of distances between objects;

- Better recall of orientation points in the battlefield;

- Better immersion into the virtual battlefield.

7 CONCLUSIONS

The new research activity in the field of military decision support systems brought a new way of communication between the C2 system and the commander. Experiments with Kinect confirm the soundness of the idea of using virtual reality devices to support the decision making process in almost all phases of the command and control process. Once the commander gets used to controlling the 3D environment with gestures and body movements, the overall quality of operation planning and control increases. This solution is not limited to the military domain; it can easily be adopted in the civilian sphere, for example in crisis management systems. Future research will be aimed at implementing voice control of C2 systems.

REFERENCES

FBCB2. (2008). CG2 C3D Demonstration Application Employed in U.S. Army AAEF Exercise Tests Real-Time 3D Visualization of On-the-Move C4ISR Data from FBCB2 VMF Messages. Retrieved June 10, 2008, from http://www.cg2.com/Press.html.

Johansen, T. (2005). Requirements for a Future COP-Display Based on Operational Experience. In Proceedings of RTO Information Systems Technology Panel (IST) Workshop. Toronto: RTO IST, 4p.

Prenosil, V., et al. (2008). Virtual reality devices in the modernized conception of Czech C2. [Research report of military project: VIRTUAL]. Brno: MU Brno.

Shim, J. P., Warkentin, M., et al. (2002). Past, present, and future of decision support technology. In Decision Support Systems, 111-126.

Tolk, A., Kunde, D. (2000). Decision Support System - Technical Prerequisites and Military Requirements. In C2 Research and Technology Symposium. Monterey: C2.
