SELF-SUSTAINING LEARNING FOR ROBOTIC ECOLOGIES

D. Bacciu¹, M. Broxvall², S. Coleman³, M. Dragone⁴, C. Gallicchio¹, C. Gennaro⁵, R. Guzmán⁶, R. Lopez⁶, H. Lozano-Peiteado⁷, A. Ray³, A. Renteria⁷, A. Saffiotti² and C. Vairo⁵

¹ Università di Pisa, Pisa, Italy

² Örebro Universitet, Örebro, Sweden

³ University of Ulster, Belfast, Northern Ireland, U.K.

⁴ University College Dublin, Dublin, Ireland

⁵ ISTI-CNR, Pisa, Italy

⁶ Robotnik, Valencia, Spain

⁷ Tecnalia, Donostia/San Sebastián, Spain

Keywords: Robotic Ecology, Wireless Sensor Network, Learning.

Abstract: The most common use of wireless sensor networks (WSNs) is to collect environmental data from a specific area, and to channel it to a central processing node for on-line or off-line analysis. The WSN technology, however, can be used for much more ambitious goals. We claim that merging the concepts and technology of WSN with the concepts and technology of distributed robotics and multi-agent systems can open new ways to design systems able to provide intelligent services in our homes and working places. We also claim that endowing these systems with learning capabilities can greatly increase their viability and acceptability, by simplifying design, customization and adaptation to changing user needs. To support these claims, we illustrate our architecture for an adaptive robotic ecology, named RUBICON, consisting of a network of sensors, effectors and mobile robots.

1 INTRODUCTION

Wireless Sensor Networks (WSNs) play an important role in applications ranging from environmental monitoring to ambient intelligence and Ambient Assisted Living (AAL) solutions. So far, WSNs have mostly been used to acquire environmental readings and to send them to a central, powerful processing node for analysis. Pushing this approach forward, we want to integrate robotic and WSN devices into a smart robotic ecology, aiming to achieve higher-level and more sophisticated objectives. Sensor nodes have limited computational power, yet enough to perform in-network analysis of the sensor information. Therefore, we can devise a distributed learning system comprising both sensor nodes and robots that cooperatively process the sensed data to facilitate the achievement of a global goal. In particular, WSNs and robots are no longer seen as separate entities that act independently. Rather, they cooperate, as a smart robotic ecology, to process raw data, to deduce higher-level information and to achieve a smart goal.

Building systems out of multiple networked robotic and WSN devices extends the type of applications that can be considered, reduces their complexity, and enhances the individual value of the devices involved by enabling new services that cannot be performed by any device by itself. However, current integration techniques strictly rely on models of the environment, of their components, and of their associated dynamics. Based on these models, they can be used to find strategies to coordinate the participants of a robotic ecology and to react to perceived changes in the environment, but they lack the ability to proactively and smoothly adapt to an evolving situation.

Much work has been undertaken in the WSN and multi-agent communities to apply machine learning methods to ease the development of systems that adapt to changing conditions in their operating environment, reducing the programming effort before and after deployment, and making it possible to process sensory data from environments that are non-stationary or for which a precise model is not available. However, these learning approaches typically pose strong computational requirements, and the available solutions are strongly tailored to very specific tasks. All these limitations make robotic ecologies still difficult to deploy in real world applications, as they must be tailored to the specific environment, hardware configuration, application, and users, and they can soon become unmanageably complex and expensive.

Bacciu D., Broxvall M., Coleman S., Dragone M., Gallicchio C., Gennaro C., Guzmán R., Lopez R., Lozano-Peiteado H., Ray A., Renteria A., Saffiotti A. and Vairo C. SELF-SUSTAINING LEARNING FOR ROBOTIC ECOLOGIES. DOI: 10.5220/0003905100990103. In Proceedings of the 1st International Conference on Sensor Networks (SENSORNETS-2012), pages 99-103. ISBN: 978-989-8565-01-3. Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

We argue that these problems must be addressed by developing novel methodologies that couple communication and control to learning for robotic ecologies. Specifically, we claim that extending WSNs with the concepts and technology of distributed robotics and multi-agent systems can open new ways to design systems able to provide intelligent services in our homes and working places. We also claim that endowing these systems with learning capabilities can greatly increase their viability and acceptability, by simplifying design, customization and adaptation to changing user needs. In particular, the learning infrastructure should provide a general purpose service, capable of addressing a large number of different tasks, rather than solving a fairly specific, though highly optimized, duty.

As part of the EU FP7 project RUBICON (Robotic UBIquitous COgnitive Network), we tackle these challenges by building on existing solutions to develop the concept of self-sustaining learning for robotic ecologies. Specifically, we investigate how all the participants in the RUBICON ecology can cooperate in using their past experience to improve their performance by autonomously and proactively adjusting their behaviour and perception capabilities in response to a changing environment and user needs. We propose a general purpose learning infrastructure distributed over the ecology. Moreover, by building on the ecology concept, we make the learned knowledge and the learning infrastructure available and accessible to novel participants that dynamically join the ecology.

2 LEARNING IN WSNS

Learning in WSNs introduces new challenges with respect to adaptive control and data processing in centralized systems, mostly due to the severe computational limitations of the sensor nodes and to the distributed architecture of the network. Artificial Neural Networks (ANNs) (Haykin, 1998) are one of the most important learning paradigms, and they share interesting analogies with the distributed nature of WSNs. This has partly motivated the research on applications of ANNs to WSNs, which can be broadly categorized into two classes: centralized and distributed models. The former comprises approaches where the ANN resides only in selected nodes of the WSN, typically the sink or the clusterheads. For example, recurrent ANNs (RNNs), which are better suited to dynamical modeling of WSNs, have been exploited in (Moustapha and Selmic, 2008) to achieve centralized fault isolation by learning predictive models of healthy and faulty nodes. The distributed approach comprises models where learning units are replicated on each sensor node and are characterized by variable degrees of learning cooperation.

Recently, Reservoir Computing (RC) (Jaeger and Haas, 2004) has gained interest for its ability to combine the power of RNNs in capturing dynamic knowledge from sequential information with the computational feasibility of learning by linear models. This makes RC models suitable for learning in the computationally constrained WSN scenario and for being embedded on board the sensor nodes. For instance, (Gallicchio et al., 2011; Bacciu et al., 2011) have proposed an RC application to forecasting users' indoor movements using real-world data.

Overall, current work on neural applications to WSNs makes fairly limited use of the distributed sensor architecture. Learning techniques are used to find approximate solutions to very specific tasks, mostly within static WSN configurations. Learning solutions have a narrow scope, resulting in poor scalability, given that different tasks are addressed by different learning models. Further, these solutions are centralized or characterized by little cooperation between the distributed learning units.

3 ADAPTIVE ROBOTIC ECOLOGY

We envision a robotic ecology, the RUBICON, that

will exhibit tightly coupled interaction across the

behaviour of all of its participants, including mo-

bile robots, wireless sensor and effectors nodes, and

also purely computing nodes. Each participant con-

tributes to a shared collective knowledge and mem-

ory while engaging in collaborative learning with the

other nodes by interacting through communication

channels that are used to exchange both data and

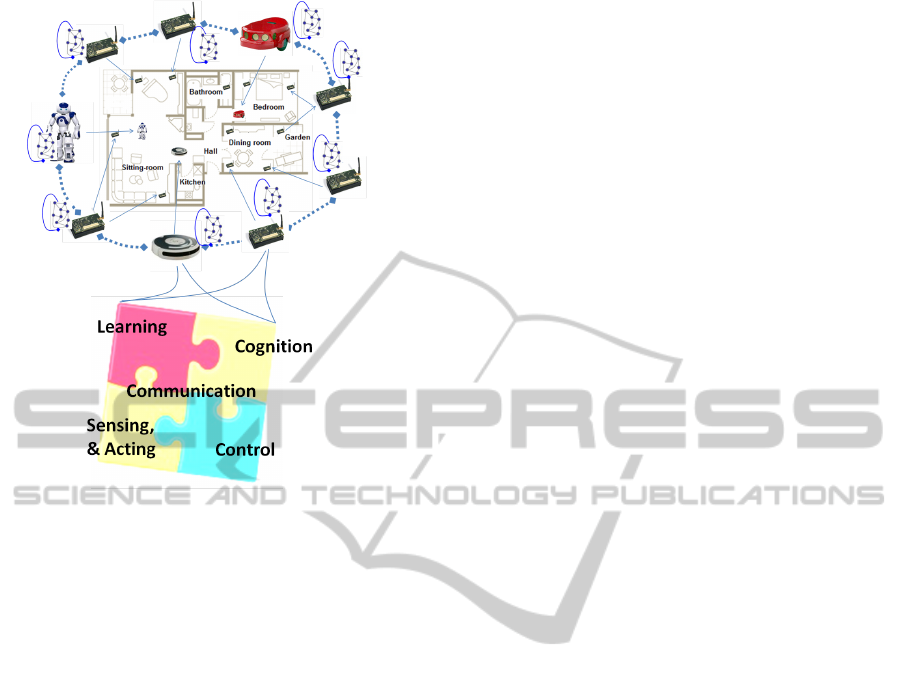

learning-specific signals, see Figure 1.

The self-improvement properties of RUBICON

will be supported by creating a self-sustaining learn-

ing interaction dynamic between all its participants.

In order to recognize situations and activities in the

environment, the devices in the ecology will collec-

tively learn to recognize, prioritize, aggregate and

communicate meaningful events. In turn, novelty-

detection and attention-focusing mechanisms will be

used to drive the behaviour of all the different partic-

SENSORNETS 2012 - International Conference on Sensor Networks

100

Figure 1: System architecture.

ipants in the system, not only to satisfy applications

objectives, but also to validate the performances of

the system and thus provide crucial feedback to all

the participants in the distributed learning.

The self-sustaining nature of our robotic ecology is seen in the layered architecture of each of its nodes, also represented in Figure 1. In addition to a Sensing, Acting & Functional Layer, which comprises sensors, actuators and existing functionalities, the architecture includes four layers: Communication, Learning, Control, and Cognitive.

The Communication Layer is mainly based on two existing software systems: the StreamSystem Middleware (Amato et al., 2010) and the PEIS Ecology middleware (Saffiotti and Broxvall, 2007). These two background technologies implement partly overlapping services, but with important differences in hardware requirements and with services targeted towards applications for distributed WSNs and for robotics, respectively. The PEIS middleware provides automatic discovery, dynamic establishment of P2P networks, self-configuration and high-level collaboration in robotic ecologies through subscription-based connections and a tuplespace communication abstraction. The StreamSystem framework provides simple and effective access to the transducer and actuator hardware on wireless nodes, and a communication abstraction based on channels.
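To make the tuplespace abstraction concrete, the following is a minimal in-process sketch of subscription-based communication over keyed tuples. It is an illustration only: the class `TupleSpace`, its methods and the node name `mote-3` are hypothetical and do not reproduce the PEIS or StreamSystem APIs, and no networking or discovery is modelled.

```python
from collections import defaultdict


class TupleSpace:
    """Toy tuplespace: tuples are indexed by (owner, key); subscribers watch a key."""

    def __init__(self):
        self._tuples = {}                      # (owner, key) -> latest value
        self._subscribers = defaultdict(list)  # key -> list of callbacks

    def subscribe(self, key, callback):
        """Register a callback invoked on every later publication under `key`."""
        self._subscribers[key].append(callback)

    def publish(self, owner, key, value):
        """Store the tuple and notify every subscriber of that key."""
        self._tuples[(owner, key)] = value
        for callback in self._subscribers[key]:
            callback(owner, key, value)

    def read(self, owner, key):
        """Return the latest value, or None if the tuple was never published."""
        return self._tuples.get((owner, key))


# Usage: a robot subscribes to temperature readings published by a sensor node.
space = TupleSpace()
received = []
space.subscribe("temperature", lambda owner, key, value: received.append((owner, value)))
space.publish("mote-3", "temperature", 21.5)
```

In this sketch the subscriber is decoupled from the publisher: it names only the key it is interested in, which is the property that lets new participants join an ecology without reconfiguring existing ones.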

The Learning Layer (LL) recognizes and detects relevant sensed information by providing predictions that depend on the temporal history of the input signals (e.g. predicting a user's future location from his or her movement pattern). It processes sensed information to extract refined goal-significant information, delivering such information to the Control and Cognitive layers to support single-node or multi-node control strategies and high-level reasoning. The LL builds a Learning Network (LN) on top of the robotic ecology, that is, a flexible environmental memory serving as a task-driven model of the environment that can readily be shared by new nodes connecting to the robotic ecology, allowing them to share the learned experience. The memory formation process in the LN is driven by task-specific supervised learning and by feedback provided by the high-level reasoning implemented by the Control and Cognitive Layers. The LN design is founded on RC models (Jaeger and Haas, 2004), due to their networked structure, which naturally adapts to the distributed nature of the robotic ecology, and to their trade-off between computational efficiency and ability to deal with dynamic systems and noisy data. Each node of the LN hosts an RC network with a variable number of neurons, which are connected by remote synapses with neurons residing on other nodes, creating a distributed RNN.
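The appeal of RC for constrained nodes is that only a linear readout is trained while the recurrent part stays fixed. The sketch below illustrates this with a small single-node echo state network in plain Python; it is not the RUBICON Learning Network, and remote synapses between nodes are not modelled. The class name, the reservoir size, the infinity-norm rescaling and the sine-wave task are all assumptions chosen for illustration.

```python
import math
import random


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


class EchoStateNetwork:
    """Fixed random reservoir with a trainable linear readout."""

    def __init__(self, n_inputs, n_units, scale=0.9, seed=0):
        rng = random.Random(seed)
        self.n_units = n_units
        self.w_in = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                     for _ in range(n_units)]
        w = [[rng.uniform(-1.0, 1.0) for _ in range(n_units)]
             for _ in range(n_units)]
        # Rescale so the maximum absolute row sum equals `scale` < 1, a simple
        # sufficient condition for contractive (fading-memory) state dynamics.
        norm = max(sum(abs(v) for v in row) for row in w)
        self.w = [[v * scale / norm for v in row] for row in w]
        self.w_out = [0.0] * (n_units + 1)  # readout weights, last one is the bias

    def states(self, inputs):
        """Run the reservoir over the sequence; return one feature vector per step."""
        x = [0.0] * self.n_units
        features = []
        for u in inputs:
            x = [math.tanh(sum(wi * ui for wi, ui in zip(self.w_in[i], u))
                           + sum(wr * xj for wr, xj in zip(self.w[i], x)))
                 for i in range(self.n_units)]
            features.append(x + [1.0])  # append constant bias feature
        return features

    def fit(self, inputs, targets, ridge=1e-4):
        """Train only the readout, by ridge regression on the reservoir states."""
        feats = self.states(inputs)
        d = self.n_units + 1
        gram = [[sum(f[i] * f[j] for f in feats) + (ridge if i == j else 0.0)
                 for j in range(d)] for i in range(d)]
        rhs = [sum(f[i] * t for f, t in zip(feats, targets)) for i in range(d)]
        self.w_out = solve(gram, rhs)

    def predict(self, inputs):
        return [sum(w * f for w, f in zip(self.w_out, feat))
                for feat in self.states(inputs)]


# Usage: one-step-ahead prediction of a sine wave standing in for a sensor stream.
signal = [math.sin(0.2 * t) for t in range(301)]
inputs = [[v] for v in signal[:-1]]
targets = signal[1:]
esn = EchoStateNetwork(n_inputs=1, n_units=10)
esn.fit(inputs, targets)
predictions = esn.predict(inputs)
mse = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)
```

Training reduces to solving one small linear system, and the state update costs one pass over the reservoir weights per sample, which is why this family of models is a plausible fit for mote-class hardware.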

The Control Layer (CL) ensures that the RUBICON nodes perform their actions in a coordinated and goal-oriented fashion to achieve high-level tasks. One of the key strengths of a robotic ecology is its ability to use alternative means to accomplish its goals when multiple courses of action or configurations are available. However, while having multiple options is a potential source of robustness, efficiency, and adaptability, the combinatorial growth of possible execution traces makes it difficult to scale to complex ecologies. Adapting, within tractable time frames, to dynamically changing goals and environmental conditions is made more challenging when these conditions fall outside those envisioned by the system designer. Finally, supporting varying computational constraints is a primary priority, as target environments will contain devices such as computers with large processing and bandwidth capacities, as well as much simpler devices. To these ends, the CL is framed as a multi-agent system (MAS) where each node in the ecology is controlled by an autonomous agent with timeline-based planning (Pecora and Cirillo, 2009), and embedded, agent-based plan monitoring, execution and co-ordination capabilities (Muldoon et al., 2006).

The key to enabling adaptive and proactive behaviour in such a system will be to improve the ability of each agent to extract meaning from noisy and imprecise sensed data, and to learn what goals to pursue, and how to pursue them, from experience, rather than by relying on pre-defined strategies. To this end, we can leverage the vast literature on MAS learning methods (Sen and Weiss, 1999; Busoniu et al., 2009) to use the learning services provided by the LL.

The Cognitive Layer (COG) is essential to close and animate the loop in our architecture by orchestrating the LL and the CL to drive the self-sustaining capabilities of the robotic ecology and reduce its reliance on pre-programmed models. While the LN provides learning functionalities that can be used to fuse and enhance existing perception abilities and to predict and classify events and human activities, each of these learning tasks must be precisely pre-defined, in terms of the data sources to be provided as input to each learning module as well as the examples needed for training their outputs. Similarly, while the CL can synthesize and coordinate the execution of strategies to achieve goals set for the whole ecology, its agents must be explicitly tasked, e.g. by the user, or by means of pre-programmed service rules.

The COG is being built using Self-Organizing Fuzzy Neural Networks (SOFNNs) (Leng et al., 2004; Prasad et al., 2010), in which fuzzy techniques are used to create or enhance neural networks, and which can be used to learn membership functions and create fuzzy rules. Recently, research interest in self-organizing neural network systems has moved on from parameter learning to structure learning, with a minimum of supervision. Our aim is to create SOFNNs that reflect the knowledge being obtained by the robotic ecology and autonomously map it to goals to be achieved by the CL, in order to satisfy generic application requirements while also driving active exploration to gather new knowledge. The particular appeal of SOFNNs is their capacity for structural modification through neuron addition and pruning. By linking such structural adaptation to novelty detection and habituation mechanisms (Mannella et al., 2012), we aim to create a self-sustaining architecture that would start from hand-coded neural fuzzy rules but would soon be able to leverage past experience to autonomously adapt them to the context where the robotic ecology is installed.
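The idea of tying structural growth to novelty can be illustrated with a deliberately simplified layer of Gaussian membership neurons. This is a sketch under our own assumptions, not the SOFNN algorithm of Leng et al. (2004): the class `GrowingFuzzyLayer`, the fixed shared width, the novelty threshold and the usage-based pruning rule are all hypothetical choices made for illustration.

```python
import math


class GrowingFuzzyLayer:
    """Layer of Gaussian membership neurons that grows on novelty and can be pruned."""

    def __init__(self, width=1.0, novelty_threshold=0.3):
        self.width = width                          # shared Gaussian width (assumed fixed)
        self.novelty_threshold = novelty_threshold  # below this firing level, input is novel
        self.centres = []                           # one centre vector per neuron
        self.usage = []                             # accumulated firing strength per neuron

    def firing(self, x):
        """Gaussian membership of input x for every neuron."""
        return [math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c))
                         / (2.0 * self.width ** 2))
                for c in self.centres]

    def observe(self, x):
        """Add a neuron centred on x if no neuron covers it; return True if one was added."""
        strengths = self.firing(x)
        if not strengths or max(strengths) < self.novelty_threshold:
            self.centres.append(list(x))
            self.usage.append(1.0)
            return True
        for i, s in enumerate(strengths):
            self.usage[i] += s  # familiar input: reinforce the neurons that fired
        return False

    def prune(self, min_usage):
        """Remove neurons whose accumulated usage is below min_usage; return how many."""
        keep = [i for i, u in enumerate(self.usage) if u >= min_usage]
        removed = len(self.centres) - len(keep)
        self.centres = [self.centres[i] for i in keep]
        self.usage = [self.usage[i] for i in keep]
        return removed


# Usage: two nearby observations share a neuron, a distant one triggers growth.
layer = GrowingFuzzyLayer(width=1.0, novelty_threshold=0.3)
added = [layer.observe(x) for x in ([0.0, 0.0], [0.1, 0.0], [5.0, 5.0])]
```

In a full neuro-fuzzy system each such neuron would correspond to a fuzzy rule with its own consequent parameters; the sketch keeps only the grow-and-prune mechanics that the text links to novelty detection and habituation.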

4 CONCLUSIONS AND FUTURE WORK

The goal of this position paper was to put forward the concept of self-sustaining, learning robotic ecologies as a powerful extension of traditional WSNs. It has presented the rationale for the adoption and the integration of a number of techniques for the development of adaptive applications using this concept. While all the techniques illustrated in this paper have been tested in isolation, we believe that their extension and integration as discussed in this paper promise to solve many of the problems that still obstruct the implementation and diffusion of smart robotic environments outside research laboratories. Future work will refine and implement our proposed architecture and exercise it in realistic settings.

ACKNOWLEDGEMENTS

This work is partially supported by the EU FP7 RUBICON project (contract no. 269914).

REFERENCES

Amato, G., Chessa, S., and Vairo, C. (2010). Mad-wise: A distributed stream management system for wireless sensor networks. In Software Practice & Experience, 40(5):431–451.

Bacciu, D., Gallicchio, C., Micheli, A., Chessa, S., and Barsocchi, P. (2011). Predicting user movements in heterogeneous indoor environments by reservoir computing. In Bhatt, M., Guesgen, H. W., and Augusto, J. C., editors, Proc. of the IJCAI Workshop STAMI 2011, pages 1–6.

Busoniu, L., Babuska, R., and De Schutter, B. (2009). A comprehensive survey of multi-agent reinforcement learning. In IEEE Trans. Syst., Man, Cybern. C, Appl. Rev., vol. 38, no. 2, pages 156–172.

Gallicchio, C., Micheli, A., Barsocchi, P., and Chessa, S. (2011). User movements forecasting by reservoir computing using signal streams produced by mote-class sensors. To appear in Proc. of Mobilight 2011. Springer-Verlag.

Haykin, S. (1998). Neural Networks: A Comprehensive Foundation. Prentice Hall PTR, 2nd edition.

Jaeger, H. and Haas, H. (2004). Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication. Science, 304(5667):78–80.

Leng, G., Prasad, G., and McGinnity, T. M. (2004). An on-line algorithm for creating self-organizing fuzzy neural networks. In Neural Networks, volume 17, pages 1477–1493.

Mannella, F., Mirolli, M., and Baldassarre, G. (2012). Brain mechanisms underlying learning of habits and goal-driven behaviour: A computational model of devaluation experiments tested with a simulated rat. In Tosh, C. (ed.), Neural Network Models. Cambridge University Press, in press.

Moustapha, A. and Selmic, R. (2008). Wireless sensor network modeling using modified recurrent neural networks: Application to fault detection. IEEE Trans. Instrum. Meas., 57(5):981–988.


Muldoon, C., O'Hare, G., and O'Grady, M. (2006). AFME: An agent platform for resource constrained devices. In Proceedings of the ESAW 2006.

Pecora, F. and Cirillo, M. (2009). A constraint-based approach for plan management in intelligent environments. In Proc. of the Scheduling and Planning Applications Workshop at ICAPS09.

Prasad, G., Leng, G., McGinnity, T., and Coyle, D. (2010). On-line identification of self-organizing fuzzy neural networks for modelling time-varying complex systems. In Evolving Intelligent Systems: Methodology and Applications, John Wiley & Sons, pages 256–296.

Saffiotti, A. and Broxvall, M. (2007). A middleware for ecologies of robotic devices. In Proc. of the Int. Conf. on Robot Communication and Coordination (RoboComm), pages 16–22.

Sen, S. and Weiss, G. (1999). Learning in multiagent systems. In Multiagent Systems: A Modern Approach to Distributed Artificial Intelligence, MIT Press, ch. 6, pages 259–298.
