MULTIMODALITY AND MULTIRESOLUTION IMAGE FUSION

Paul M. de Zeeuw, Eric J. E. M. Pauwels and Jungong Han

Centrum Wiskunde & Informatica, P.O. Box 94079, NL–1090 GB, Amsterdam, The Netherlands

Keywords:

Multimodality, Multiresolution, Image Fusion.

Abstract:

Standard multiresolution image fusion of multimodal images may yield an output image with artifacts due to the occurrence of opposite contrast in the input images. Equal but opposite contrast leads to noisy patches that are unstable with respect to slight changes in the input images. Unequal and opposite contrast leads to uncertainty about how to interpret the modality of the result. In this paper a biased fusion is proposed to remedy this, where the bias is towards one image, the so-called iconic image, in a preferred spectrum. A nonlinear fusion rule is proposed to prevent the fused image from reversing the local contrasts as seen in the iconic image. The rule involves saliency and a local match measure. The method is demonstrated by artificial and real-life examples.

1 INTRODUCTION

Image fusion seeks to combine images in such a way that all the salient information is put together into (usually) one image suitable for human perception or further processing. One can roughly divide the field into two categories: monomodal and multimodal image fusion. An example of the need for the first can be found in light microscopy where one encounters the problem of limited depth-of-field, i.e. only part of the specimen under consideration will be in focus. By fusing multiple images with different focus one acquires an image which has overall focus.

Examples of multimodal imaging are found in the realm of medical imaging where one seeks to combine CT with MRI images, or PET with MRI images. Another important example is surveillance imaging where often one and the same scene is recorded by cameras operating with different modalities like visual and infrared (viz. SWIR, MWIR and LWIR). Typically, opposite contrast may occur in such pairs of images, e.g. see the poles in the top images of Figure 5. In this paper, we elaborate upon multimodal image fusion by multiresolution methods. The latter require that the images to be fused are registered (aligned). The registration of multimodal images already requires an approach different from the case of monomodal images. Registration based on features like lines and contours appears more suitable for such images than registration based on correlation of intensity values, e.g. see (Zitová and Flusser, 2003) and references therein (recently also (Han et al., 2011)). We confine ourselves to the mere fusion part. Section 2 provides a brief recapitulation of the multiresolution aspects. Section 3 elaborates in detail on the proposed (biased) fusion rule. This rule is independent of the particular multiresolution scheme and of the activity measure.

2 MULTIRESOLUTION IMAGE FUSION

There exist various categories of techniques for image fusion, but we merely consider methods by means of the multiresolution (MR) approach. It is founded on the observation that multiresolution decomposition of an image allows for localization of features at the proper scale (resolution). Early proofs of principle already exist (Burt and Kolczynski, 1993; Li et al., 1995). The basic idea is demonstrated by Figure 1 (cf. (Piella, 2003a, Figure 6.6)). At the decomposition stage the input images ($i_A$, $i_B$) are transformed into multiresolution representations ($m_A$, $m_B$). The transform is symbolized by $\Psi$. At the combination stage ($C$) the transformed data are fused. In the context of wavelets, it was proposed to apply the maximum selection rule (Li et al., 1995) for the detail coefficients as fusion rule. For instance, in the case of two input images, we select from each pair of geometrically corresponding detail coefficients the one that is largest in absolute value. From the composite multiresolution representation $m_F$ thus obtained, the fused image $i_F$ is derived by application of the backtransform $\Psi^{-1}$.
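The maximum selection rule described above can be sketched in a few lines. The following is a minimal illustration in Python with NumPy and not the authors' implementation; the function name `max_selection` and the toy arrays are hypothetical, and resolving ties in favour of the first input is an arbitrary choice made here.

```python
import numpy as np

def max_selection(detail_a, detail_b):
    """Per coefficient, keep the input that is larger in absolute value."""
    detail_a = np.asarray(detail_a, dtype=float)
    detail_b = np.asarray(detail_b, dtype=float)
    # Ties go to the first input (an assumption; the text does not specify this).
    take_a = np.abs(detail_a) >= np.abs(detail_b)
    return np.where(take_a, detail_a, detail_b)

# Toy example: one band of detail coefficients from each input image.
c_a = np.array([[0.5, -2.0], [0.1, 0.0]])
c_b = np.array([[-1.0, 1.5], [0.1, -0.3]])
fused = max_selection(c_a, c_b)
```

Note that the rule looks only at magnitudes, so the sign (and hence the direction of contrast) of the fused coefficient is inherited from whichever input happens to win.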


de Zeeuw P. M., Pauwels E. J. E. M. and Han J.
MULTIMODALITY AND MULTIRESOLUTION IMAGE FUSION.
DOI: 10.5220/0003866501510157
In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2012), pages 151-157
ISBN: 978-989-8565-03-7
Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

[Diagram: $i_A \to \Psi \to c_A$ and $i_B \to \Psi \to c_B$; $(c_A, c_B) \to C \to c_F \to \Psi^{-1} \to i_F$]

Figure 1: Simple MR image fusion scheme. Left: MR transform $\Psi$ of the input sources $i_A$ and $i_B$. Middle: combination in the transform domain. Right: inverse MR transform $\Psi^{-1}$ producing the composite image $i_F$.

A framework for more sophisticated fusion rules has been proposed by Burt and Kolczynski (1993); for an overview of rules see (Piella, 2003b; Piella, 2003a).

2.1 Choice of Multiresolution

For the multiresolution part of the fusion algorithm many schemes are available to us, ranging from pyramid schemes such as Laplacian Pyramids (Burt and Adelson, 1983), Steerable Pyramids (Simoncelli and Freeman, 1995) and Gradient Pyramids (Burt and Kolczynski, 1993), to an abundance of wavelets (Mallat, 1989) and even a multigrid method solving diffusion equations on a range of grids (De Zeeuw, 2005; De Zeeuw, 2007). So far, our method of choice is the Gradient Pyramid (GP). Based on anecdotal evidence, it appears less prone to artifacts like ringing effects. The latter are often perceived when image regions of high contrast are fused with the use of (standard, real-valued) wavelets (Forster et al., 2004). A theoretical disadvantage of the GP scheme is that it does not offer perfect reconstruction. However, this appears not to pose a problem in practice for many applications.

Gradient Pyramid. The gradient pyramid (Burt and Kolczynski, 1993) is derived from a Gaussian pyramid using a specific kernel. The Gaussian pyramid involves the application of a generating kernel followed by downsampling. The process is repeated, producing Gaussians at a sequence of levels. At each level, per pixel, (discrete) gradients are computed in 4 separate directions: horizontal, vertical and 2 diagonal. At each gradient pyramid level, the gradients are applied again, leading to a pyramid of second derivatives. These four second derivatives (computed per level, per pixel) play a role similar to the one of detail coefficients in discrete wavelet methods. Such detail coefficients are also referred to as bands. With the last computed Gaussian as coarsest approximation of the original image and the above detail coefficients the original image can be reconstructed accurately, albeit not perfectly. An annotated MATLAB® implementation of the scheme is available as part of the toolbox Matifus¹, see (De Zeeuw et al., 2004).

3 FUSION OF MULTIMODAL IMAGES

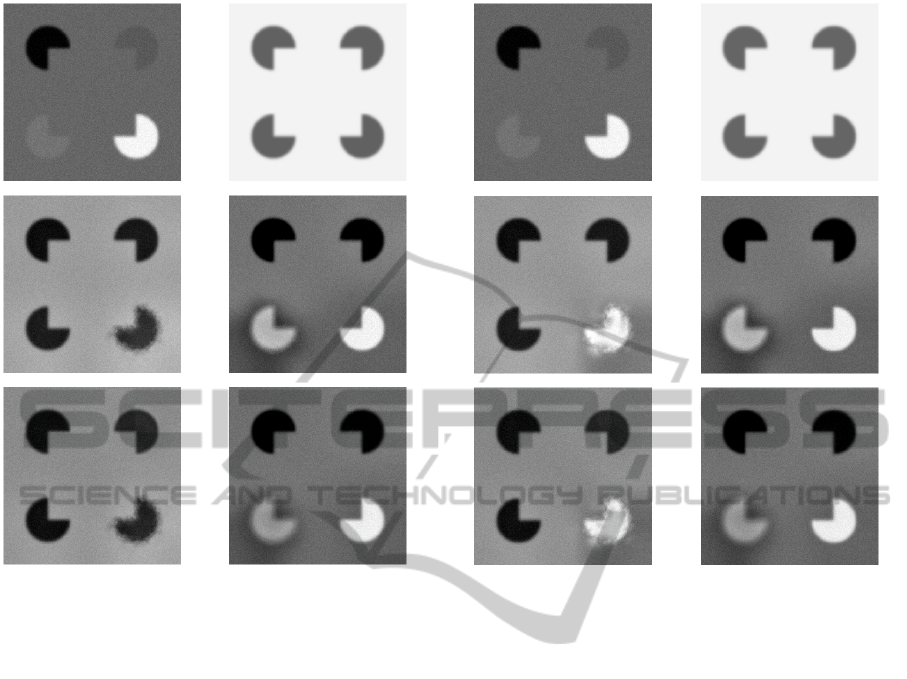

Introduction. As an example of fusion of multimodal images we consider two input images where one resides in the visible spectrum and the other one in the (far) infrared. This is typically a situation where opposite contrast may occur. If one considers standard fusion schemes, it appears that input images always receive equal treatment: one may interchange the input images, and the output of fusion remains the same. Here, we abandon this principle. Instead, we select a so-called iconic image from our set of input images. The other images are called companion images. The goal of fusion becomes that the information held in the iconic image is to be enhanced by the companion input images but without reversing the contrast. In the said example, we choose the image in the visible spectrum as the iconic image. Figure 2 provides an illustration, albeit an artificial one. It shows an actual result of our new scheme described below (Section 3.1). The iconic image contains two objects with strong contrasts to the background and two objects with faint contrasts. The other input image (top right) contains four objects all with strong contrasts. The image produced by the standard fusion scheme (middle left) shows two undesirable consequences. Firstly, the contrast of one of the two faint objects is not just enhanced but also, unfortunately, reversed. Secondly, the bright object in the iconic image is replaced by a blurry and dark object. Moreover, the latter object is unstable in the sense that its intensity can change dramatically if the companion input image is changed slightly; this is demonstrated by Figure 3. The wavering is caused by the selection mechanism for the detail coefficients and the averaging out of approximation coefficients at the coarsest grid in case of opposite contrast. Below we give an outline of the new fusion scheme, followed by explicit fusion rules (old and new ones).

Figure 2: An artificial example. Top left: visual image, iconic. Top right: far infrared image. Middle left: fused image, standard fusion rule (selection). Middle right: fused image, iconic fusion rule (selection). Bottom left: Burt & Kolczynski fusion rule. Bottom right: iconic fusion rule (smooth).

¹ http://homepages.cwi.nl/~pauldz/Bulk/Codes/MATIFUS/

Framework. Figure 4 (cf. (Piella, 2003a, Figure 6.7) and (Burt and Kolczynski, 1993, Figure 2)) shows the general framework with the important building blocks, i.e. the computations of match measure, activity measure (saliency), the fusion decision and the combination (weights).

We adopt a notation similar to the one that has been used in Piella’s thesis (Piella, 2003a, Chapter 6).

Figure 3: Artificial example revisited, slightly altered input images. Top left: visual image, iconic. Top right: far infrared image. Middle left: fused image, standard fusion rule (selection). Middle right: fused image, iconic fusion rule (selection). Bottom left: Burt & Kolczynski fusion rule. Bottom right: iconic fusion rule (smooth).

Saliency. Contrast in (local) image regions is sensed by the detail coefficients of the multiresolution scheme: the larger the coefficients at a pixel, the higher the contrast can be expected to be. The maximum selection rule (Li et al., 1995), already mentioned, in the combination stage ($C$) is based on this assumption but needs refinement. Instead of a separate treatment for each band (per level, per pixel), we opt for a collective treatment of the detail coefficients based on the saliency at a pixel. We choose to measure this collective saliency $a^k(\cdot)$ as the Euclidean norm of the detail coefficients over all bands (per level $k$; the dot denotes the location of the coefficients per pixel).
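As an illustration of the collective saliency, the sketch below computes the Euclidean norm over the band axis for one pyramid level. It is a minimal example under stated assumptions: the array layout (bands, height, width) and the function name `saliency` are hypothetical choices made here, not part of the Matifus toolbox.

```python
import numpy as np

def saliency(bands):
    """Collective activity at one level: Euclidean norm over all bands.

    bands: array of shape (P, H, W) holding the P detail bands of level k
           (P = 4 for a gradient pyramid, P = 3 for standard 2D wavelets).
    Returns an (H, W) array with one saliency value per pixel.
    """
    bands = np.asarray(bands, dtype=float)
    return np.sqrt(np.sum(bands ** 2, axis=0))

# Toy level with four bands on a 2x2 grid.
bands = np.zeros((4, 2, 2))
bands[:, 0, 0] = [3.0, 4.0, 0.0, 0.0]
bands[:, 1, 1] = [1.0, -1.0, 1.0, -1.0]
a = saliency(bands)
```

Because the norm is taken over all bands at once, every band at a given pixel shares the same saliency value, which is what allows a single decision per pixel and level.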

[Diagram blocks: $i_A$, $i_B$ → $\Psi$ → $c_A$, $c_B$; Match ($m_{AB}$); Saliency ($s_A$, $s_B$); Fusion Decision ($d$); Combination → $c_F$ → $\Psi^{-1}$ → $i_F$]

Figure 4: Generic MR image fusion scheme involving matching and saliency measuring. Two input sources $i_A$ and $i_B$ are turned into one output composite image $i_F$.

Match Measure. Burt and Kolczynski (1993) introduced the use of a (local) match measure to determine whether to use selection or averaging at the combination of detail coefficients. In the next section we propose a much heavier role: depending on its value (including sign), we use it to adjust the contrast of the input images to a preferred spectrum. A possible measure of choice is local normalized cross-correlation of vectors over small (e.g. square) regions of coefficients per band, per level $k$, per pixel. This particular choice would involve subtraction of averages of detail coefficients, which themselves already correspond to modes of zero average. Therefore we simplify to the computation of the inner products of normalized vectors of coefficients. The latter can also be expressed in terms of angles between the vectors. Whatever the choice at hand, we assume that a value of 1 stands for identity, 0 for orthogonality and −1 for identity but with opposite sign. It measures similarity, independent of amplitudes. A possible refinement would be to consider small geometrical variations of the local region in one image with respect to the counterpart image and compute the maximum similarity over the variations. This might be useful in the case of small registration errors. The local match measure can be implemented efficiently and without changing the order of complexity of the fusion method as a whole.
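The simplified match measure (inner product of normalized local coefficient vectors) might be sketched as follows for a single band at a single level. This is an illustrative sketch only: the window radius, the zero padding at the borders, the small constant `eps` and the convention of returning 0 where either window is empty are all assumptions made here, and the helper names are hypothetical.

```python
import numpy as np

def box_sum(x, radius):
    """Sum of x over a (2*radius+1) x (2*radius+1) window per pixel (zero padding)."""
    padded = np.pad(x, radius, mode="constant")
    h, w = x.shape
    size = 2 * radius + 1
    out = np.zeros((h, w), dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + h, dx:dx + w]
    return out

def local_match(c_i, c_a, radius=1, eps=1e-12):
    """Cosine of the angle between local coefficient vectors, in [-1, 1]."""
    c_i = np.asarray(c_i, dtype=float)
    c_a = np.asarray(c_a, dtype=float)
    inner = box_sum(c_i * c_a, radius)
    denom = np.sqrt(box_sum(c_i ** 2, radius)) * np.sqrt(box_sum(c_a ** 2, radius))
    # Where a window carries no energy the measure is set to 0 (assumption).
    m = np.where(denom > eps, inner / np.maximum(denom, eps), 0.0)
    return np.clip(m, -1.0, 1.0)

rng = np.random.default_rng(0)
c = rng.standard_normal((6, 6))
m_same = local_match(c, 3.0 * c)       # same structure, different amplitude
m_opposite = local_match(c, -0.5 * c)  # same structure, opposite contrast
```

The cost is a fixed number of additions per pixel, so the overall order of complexity of the fusion is unchanged, in line with the remark above.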

Outline. After the multiresolution decomposition of the input images, the fusion proceeds as follows. One computes the local match measure between the iconic and the other image(s) per band (recall that for Gradient Pyramids the number of bands equals four, for standard 2D wavelets it equals three). Where the match measure is positive or close to zero (i.e. no match) there is no reason to deviate from the standard fusion rules. Where the match measure is distinctly negative, this is indicative of locally similar structures but with opposite contrast, and we enforce that the sign of the coefficient of the composite (fused) image agrees with that of the corresponding coefficient of the iconic image. The above is made precise in the next section.

3.1 Iconic Fusion Rules

We start by defining a simple decision map for a rather simple fusion rule

$$\delta_I^k(\cdot) = \frac{a_I^k(\cdot)}{a_I^k(\cdot) + a_A^k(\cdot)} \tag{1a}$$

$$\delta_A^k(\cdot) = \frac{a_A^k(\cdot)}{a_I^k(\cdot) + a_A^k(\cdot)}, \tag{1b}$$

where $a_I^k(\cdot)$ and $a_A^k(\cdot)$ are activity measures (saliency) referring to images $I$ and $A$ respectively. Obviously $\delta_I^k(\cdot) + \delta_A^k(\cdot) = 1$ and both $\delta_I^k(\cdot), \delta_A^k(\cdot) \ge 0$. A standard choice for the composite coefficient would be the weighted combination

$$c_F^k(\cdot|p) = \omega_I^k(\cdot)\, c_I^k(\cdot|p) + \omega_A^k(\cdot)\, c_A^k(\cdot|p) \tag{2}$$

where $p$ denotes the specific band and the weights are chosen as

$$\omega_I^k(\cdot) = \delta_I^k(\cdot) \quad \text{and} \quad \omega_A^k(\cdot) = \delta_A^k(\cdot).$$

In case of standard wavelets the number of bands is 3 (horizontal, vertical, diagonal coefficients), in case of Gradient Pyramids the number of bands is 4 (see Section 2.1). We refer to the above rule as the smooth standard fusion rule. Alternatively, a selection (i.e. thresholded) variant of the above rule would be defined by

$$\omega_I^k(\cdot) = \begin{cases} 1 & \delta_I^k(\cdot) \ge \delta_A^k(\cdot) \\ 0 & \text{otherwise} \end{cases} \tag{3a}$$

$$\omega_A^k(\cdot) = 1 - \omega_I^k(\cdot). \tag{3b}$$
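The standard rules (1) to (3) can be sketched as below for one level. This is a hedged illustration rather than the authors' code: the function names, the array shapes and the handling of pixels where both saliencies vanish (equal weights) are assumptions introduced here.

```python
import numpy as np

def decision_maps(a_i, a_a, eps=1e-12):
    """Decision maps (1a), (1b) from two saliency maps of shape (H, W)."""
    a_i = np.asarray(a_i, dtype=float)
    a_a = np.asarray(a_a, dtype=float)
    total = a_i + a_a
    # Where both saliencies vanish, use equal shares (assumption).
    delta_i = np.where(total > eps, a_i / np.maximum(total, eps), 0.5)
    return delta_i, 1.0 - delta_i

def fuse_standard(c_i, c_a, a_i, a_a, smooth=True):
    """Weighted combination (2) with smooth weights or selection weights (3).

    c_i, c_a: detail bands of shape (P, H, W); a_i, a_a: saliency, shape (H, W).
    """
    delta_i, delta_a = decision_maps(a_i, a_a)
    w_i = delta_i if smooth else (delta_i >= delta_a).astype(float)
    w_a = 1.0 - w_i
    # The (H, W) weights broadcast over the leading band axis.
    return w_i * np.asarray(c_i, dtype=float) + w_a * np.asarray(c_a, dtype=float)

# One band, two pixels: equal-but-opposite contrast, then a dominant companion.
c_i = np.array([[[2.0, 1.0]]])
c_a = np.array([[[-2.0, 3.0]]])
a_i, a_a = np.abs(c_i[0]), np.abs(c_a[0])
fused_smooth = fuse_standard(c_i, c_a, a_i, a_a, smooth=True)
fused_select = fuse_standard(c_i, c_a, a_i, a_a, smooth=False)
```

In this toy case the first pixel has equal but opposite contrast: the smooth rule cancels it to zero, while the selection rule hinges on a tie, which hints at the instability discussed earlier.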

One notes the symmetry of the roles of images I and

A. However, as we want to prevent the local con-

trasts of the iconic image from reversing, we are go-

ing to propose a biased scheme. In the paragraph on

saliency we already mentioned the relationship be-

tween contrast in an image and the detail coefficients

of a multiresolution method: the larger the contrast

the larger the detail coefficients. But the relationship

goes further: with respect to contrast one can reverse

the transition from light to dark, by reversing the sign

of the detail coefficients. The new scheme is based on

this observation. Firstly, we compute the local match

measure m

k

IA

(.|p) discussed earlier (I is the iconic im-

age, A the additional input image). That is, per level,

VISAPP 2012 - International Conference on Computer Vision Theory and Applications

154

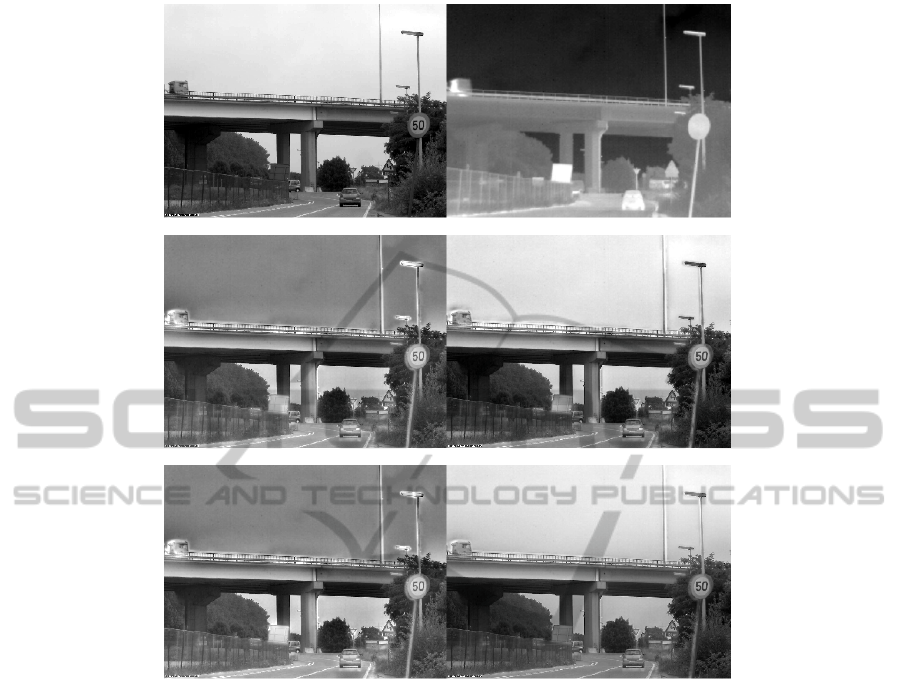

Figure 5: Top left: visual image, iconic. Top right: far infrared image. Middle left: fused image, standard fusion rule

(selection). Middle right: fused image, iconic fusion rule (selection). Bottom left: Burt & Kolczynski fusion rule. Bottom

right: iconic fusion rule (smooth). Top (input) images by courtesy of Xenics.

per band and per location we determine the similar-

ity of the detail coefficients of the input images in a

small region surrounding the location. We put several

requirements to the scheme to be, based on desired

outcomes for local circumstances. For convenience

we introduce

σ

k

I

(.|p) = Sign(c

k

I

(.|p)) and σ

k

A

(.|p) = Sign(c

k

A

(.|p))

(where Sign is the well-known sign function with val-

ues +1 for a positive argument, −1 for a negative ar-

gument and 0 for a zero argument). If signs are op-

posite, we demand that ω

k

A

(.|p) = −δ

k

A

(.), provided

that the absolute local match measure |m

k

IA

(.|p)| ≈ 1.

If the local match measure happens to be around 0

(orthogonality) we want to resort to the above stan-

dard scheme. Likewise, when signs are not opposite

we also want to resort to the standard scheme. These

requirements lead to the following scheme

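$$\begin{aligned} \omega_I^k(\cdot|p) &= \delta_I^k(\cdot), \\ \omega_A^k(\cdot|p) &= \delta_A^k(\cdot) \Bigl( 1 - |m_{IA}^k(\cdot|p)| \bigl(1 - \sigma_I^k(\cdot|p)\, \sigma_A^k(\cdot|p)\bigr) \Bigr). \end{aligned} \tag{4}$$

Inserting (4) into (2) yields

$$c_F^k(\cdot|p) = \delta_I^k(\cdot)\, c_I^k(\cdot|p) + \delta_A^k(\cdot) \Bigl( \bigl(1 - |m_{IA}^k(\cdot|p)|\bigr)\, c_A^k(\cdot|p) + |m_{IA}^k(\cdot|p)|\, \sigma_I^k(\cdot|p)\, |c_A^k(\cdot|p)| \Bigr). \tag{5}$$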
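For readers who want the intermediate step from (4) and (2) to (5), the following short derivation may help. It is added here for clarity and uses only the identity $\sigma_A c_A = |c_A|$; the level index $k$ and the arguments $(\cdot|p)$ are suppressed for readability.

```latex
\begin{align*}
\omega_A\, c_A
  &= \delta_A \bigl( 1 - |m_{IA}|\,(1 - \sigma_I \sigma_A) \bigr)\, c_A \\
  &= \delta_A \bigl( (1 - |m_{IA}|)\, c_A + |m_{IA}|\, \sigma_I\, \sigma_A c_A \bigr) \\
  &= \delta_A \bigl( (1 - |m_{IA}|)\, c_A + |m_{IA}|\, \sigma_I\, |c_A| \bigr).
\end{align*}
```

Two limiting cases follow directly: for $|m_{IA}| = 0$ the companion term reduces to $\delta_A c_A$, i.e. the smooth standard rule, and for $|m_{IA}| = 1$ it becomes $\delta_A \sigma_I |c_A|$, so the companion contributes its magnitude with the sign of the iconic coefficient.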

We refer to rule (5) as the smooth iconic fusion rule. Alternatively, a selection (i.e. thresholded) variant of rule (4) leads to the following composite coefficient:

$$c_F^k(\cdot|p) = \begin{cases} c_I^k(\cdot|p) & \delta_I^k(\cdot) \ge \delta_A^k(\cdot) \\ c_A^k(\cdot|p) & \delta_I^k(\cdot) < \delta_A^k(\cdot) \ \&\ |m_{IA}^k(\cdot|p)| < T \\ \sigma_I^k(\cdot|p)\, |c_A^k(\cdot|p)| & \text{otherwise} \end{cases} \tag{6}$$

for a threshold $T$, e.g. $T = \frac{1}{2}$. In the case of three or more input images, one again just selects one image as the iconic one and the generalisation is straightforward.
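Both iconic rules can be written compactly once the decision maps and the match measure are available. The sketch below is illustrative only and rests on assumptions: the function names are hypothetical, the inputs are taken to be arrays of identical shape for a single band, and in the selection variant the threshold test is applied to the absolute match measure, an interpretation chosen here for consistency with rule (4).

```python
import numpy as np

def iconic_smooth(c_i, c_a, delta_i, delta_a, m):
    """Smooth iconic rule: blend c_a with its sign-corrected magnitude by |m|."""
    sigma_i = np.sign(c_i)
    am = np.abs(m)
    return delta_i * c_i + delta_a * ((1.0 - am) * c_a + am * sigma_i * np.abs(c_a))

def iconic_select(c_i, c_a, delta_i, delta_a, m, T=0.5):
    """Selection variant with threshold T on the absolute match (assumption)."""
    sigma_i = np.sign(c_i)
    weak_match = np.abs(m) < T
    # Companion coefficient: as is for a weak match, sign-corrected otherwise.
    companion = np.where(weak_match, c_a, sigma_i * np.abs(c_a))
    return np.where(delta_i >= delta_a, c_i, companion)

# Three pixels: faint iconic vs strong opposite companion (matched),
# the same without a match, and a dominant iconic coefficient.
c_i = np.array([0.2, 0.2, 1.0])
c_a = np.array([-1.0, -1.0, -0.5])
m = np.array([-1.0, 0.0, -1.0])
delta_i = np.abs(c_i) / (np.abs(c_i) + np.abs(c_a))
delta_a = 1.0 - delta_i
fused_smooth = iconic_smooth(c_i, c_a, delta_i, delta_a, m)
fused_select = iconic_select(c_i, c_a, delta_i, delta_a, m)
```

In the first pixel the companion is stronger and perfectly anti-correlated, so both rules keep the companion's magnitude but take the sign from the iconic coefficient; in the second pixel there is no match and the companion coefficient passes through unchanged.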

4 MORE RESULTS

Figure 5 shows results for a real-life surveillance example (note the poles in particular).

The result at the middle left is based on the standard fusion rule (selection), the result at the middle right is based on the iconic fusion rule (6) (selection). For comparison, we also show results for the smooth variant of the iconic fusion rule (bottom right) and for the rule of Burt & Kolczynski (bottom left). The latter rule implies that where similarity is low ($m_{IA}^k(\cdot|p) < T$) the maximum selection rule is applied; where similarity is high ($m_{IA}^k(\cdot|p) \ge T$) the rule is given by $\omega_I^k(\cdot) = \frac{1}{2} - \frac{1}{2}\, \frac{1 - m_{IA}^k(\cdot|p)}{1 - T}$ and $\omega_A^k(\cdot) = 1 - \omega_I^k(\cdot)$, which comes close to averaging mode. An important difference with the iconic fusion rule is that the outcome is symmetric with respect to interchanging the input images. By contrast, the new iconic fusion rule tries, roughly speaking, to convert the infrared contrasts into visual light contrasts; interchanging the input images would then imply converting visual light contrasts into infrared contrasts.

4.1 Fusion Metrics

Due to the lack of a ground truth, especially in the context of multimodality, quantitative assessment of fusion is quite a challenge and still appears to be an open problem. Many different metrics have already been proposed, but they rate algorithms differently (Liu et al., 2012). A rather general metric such as the mutual information fusion metric persistently favors fusion by simply averaging input images (Cvejic et al., 2006), and does not appear well suited to our new method. The choice of a metric is driven by the requirements of the application (Liu et al., 2012). In future research we plan to apply the 12 metrics used by the latter, and possibly devise an additional one of our own making, to make an objective assessment of our new method.

5 CONCLUDING REMARKS

Within the context of multiresolution schemes a new fusion rule has been proposed, coined the iconic fusion rule, so as to deal with opposite contrast which might occur in a set of multimodal images. The rule is a biased one, with the bias towards the contrasts observed (if any) in an image with a favoured spectrum, the so-called iconic image. Qualitative evidence for the soundness of the rule has been given by means of a few examples. A survey with quantitative assessment on several test problems, applying a variety of quality measures, is part of future research. Given the intent of the new method, quite likely a new quality measure needs to be devised so as to deal with images with opposite contrast.

ACKNOWLEDGEMENTS

The research leading to these results has received funding from the European Community’s Seventh Framework Programme (FP7-ENV-2009-1) under grant agreement no. FP7-ENV-244088 "FIRESENSE - Fire Detection and Management through a Multi-Sensor Network for the Protection of Cultural Heritage Areas from the Risk of Fire and Extreme Weather". We gratefully used images that have been provided to us by Xenics (Leuven, Belgium).

REFERENCES

Burt, P. and Adelson, E. (1983). The Laplacian pyramid as a compact image code. IEEE Transactions on Communications, 31(4):532–540.

Burt, P. J. and Kolczynski, R. J. (1993). Enhanced image capture through fusion. In Proceedings Fourth International Conference on Computer Vision, pages 173–182, Los Alamitos, California. IEEE Computer Society Press.

Cvejic, N., Canagarajah, C. N., and Bull, D. R. (2006). Image fusion metric based on mutual information and Tsallis entropy. Electronics Letters, 42(11):626–627.

De Zeeuw, P. M. (2005). A multigrid approach to image processing. In Kimmel, R., Sochen, N., and Weickert, J., editors, Scale Space and PDE Methods in Computer Vision, volume 3459 of Lecture Notes in Computer Science, pages 396–407. Springer-Verlag, Berlin Heidelberg.

De Zeeuw, P. M. (2007). The multigrid image transform. In Tai, X.-C., Lie, K. A., Chan, T. F., and Osher, S., editors, Image Processing Based on Partial Differential Equations, Mathematics and Visualization, pages 309–324. Springer Berlin Heidelberg.

De Zeeuw, P. M., Piella, G., and Heijmans, H. J. A. M. (2004). A MATLAB toolbox for image fusion (MATIFUS). CWI Report PNA-E0424, Centrum Wiskunde & Informatica, Amsterdam.

Forster, B., van de Ville, D., Berent, J., Sage, D., and Unser, M. (2004). Complex wavelets for extended depth-of-field: A new method for the fusion of multichannel microscopy images. Microscopy Research and Technique, 65:33–42.

Han, J., Pauwels, E., and de Zeeuw, P. (2011). Visible and infrared image registration employing line-based geometric analysis. MUSCLE International Workshop on Computational Intelligence for Multimedia Understanding, Pisa (Italy), Accepted for publication.

Li, H., Manjunath, B. S., and Mitra, S. K. (1995). Multisensor image fusion using the wavelet transform. Graphical Models and Image Processing, 57(3):235–245.

Liu, Z., Blasch, E., Xue, Z., Zhao, J., Laganière, R., and Wu, W. (2012). Objective assessment of multiresolution image fusion algorithms for context enhancement in night vision: a comparative study. IEEE Trans. on Pattern Analysis and Machine Intelligence, 34(1):94–109.

Mallat, S. (1989). A theory for multiresolution signal decomposition: the wavelet representation. IEEE Pattern Analysis and Machine Intelligence, 11(7):674–693.

Piella, G. (2003a). Adaptive Wavelets and their Applications to Image Fusion and Compression. PhD thesis, CWI & University of Amsterdam.

Piella, G. (2003b). A general framework for multiresolution image fusion: from pixels to regions. Information Fusion, 9:259–280.

Simoncelli, E. and Freeman, W. (1995). The steerable pyramid: a flexible architecture for multi-scale derivative computation. In Proceedings of the IEEE International Conference on Image Processing, pages 444–447. IEEE Signal Processing Society.

Zitová, B. and Flusser, J. (2003). Image registration methods: a survey. Image and Vision Computing, 21:977–1000.
