A NEW VARIANT OF THE MINORITY GAME

Asset Value Game and Its Extension

Jun Kiniwa¹, Takeshi Koide² and Hiroaki Sandoh³

¹ Department of Applied Economics, University of Hyogo, Kobe, Japan

² Department of Intelligence and Informatics, Konan University, Kobe, Japan

³ Graduate School of Economics, Osaka University, Toyonaka, Osaka, Japan

Keywords:

Multiagent, Minority game, Mean asset value, Asset value game, Contrarian, Trend-follower.

Abstract:

A minority game (MG) is a non-cooperative iterated game with an odd population of agents who make bids whether to buy or sell. Based on the framework of MG, several kinds of games have been proposed. However, their common disadvantage is that they neglect past actions. So we present a new variant of the MG, called an asset value game (AG), in which every agent aims to decrease a mean asset value, that is, an acquisition cost averaged through the past actions. The AG, however, is too simple to reproduce the complete market dynamics. So we further consider an improvement of AG, called an extended asset value game (ExAG), investigate the features of both games, and obtain some results by simulation.

1 INTRODUCTION

Background

A minority game (MG) has been extensively studied

in this decade. It is considered as a model for finan-

cial markets or other applications in physics. It is a

non-cooperative iterated game with an odd popula-

tion size N of agents who make bids whether to buy

or sell. Since each agent aims to choose the group of

minority population, he is called a contrarian. Every

agent makes a decision at each step based on the pre-

diction of a strategy according to the sequence of the

m most recent outcomes of winners, where m is said

to be the memory size of the agents. Though MG is

a very simple model, it captures some of the complex

macroscopic behavior of the markets.

It is also known that the MG cannot capture large price drifts such as bubble/crash phenomena, but can only reproduce the stationary state of the markets. This can be intuitively explained as follows. Suppose that a group of buyers can keep a majority for a long time. Then a group of sellers must continuously win in the bubble phenomenon. However, since every agent wants to win and thus joins the group of sellers one after another, the sellers will gain a majority soon. That is, the group of buyers cannot keep a majority, a contradiction. Thus, it is difficult to simulate the bubble phenomenon by MG.

Related Work

Much work has been done for the purpose of adapting MG to a real financial market. For example, first, several authors investigated the majority game (MJ), consisting of trend-followers. Marsili (Marsili, 2001) and Martino et al. (Martino et al., 2003) investigated a mixed majority-minority game by varying the fraction of trend-followers. Second, another way is to incorporate a more realistic mechanism. A grand canonical minority game (GCMG) (Challet et al., 2001; Ferreira et al., 2005; Giardina et al., 2001) is considered as one of the most successful models of a financial market. In the GCMG, the set of agents consists of two groups, called producers and speculators, and the speculators are allowed not to trade, in addition to buying and selling. Third, it is also useful to improve the payoff function. Andersen and Sornette (Andersen and Sornette, 2003) proposed a different market payoff, called $-game, in which the timing of strategy evaluation is taken into consideration. Ferreira and Marsili (Ferreira and Marsili, 2005) compared the behavior of the $-game with that of the MG/MJ. The difficulty of the $-game lies in evaluating its payoff function, because the result one step in the future must be known. Kiniwa et al. (Kiniwa et al., 2009) proposed an improved $-game, in which the evaluation is delayed until the future result becomes known. Fourth, there are some other kinds of improvement. Liu et al. (Liu et al., 2004) proposed a modified MG, where agents accumulate scores for their strategies over the most recent several steps. Recent work by Challet (Challet, 2008) proposed a more sophisticated model using asynchronous holding positions which are driven by some patterns. Finally, two books (Challet et al., 2005; Coolen, 2005) comprehensively described the history of minority games, mathematical analysis, and their variations. Beyond the framework of MG, efforts to reproduce the real market dynamics continue (Takayasu et al., 1992; Yamada et al., 2007).

Kiniwa J., Koide T. and Sandoh H. A NEW VARIANT OF THE MINORITY GAME - Asset Value Game and Its Extension. DOI: 10.5220/0003715800150022. In Proceedings of the 4th International Conference on Agents and Artificial Intelligence (ICAART-2012), pages 15-22. ISBN: 978-989-8425-96-6. Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

Motivation

The purpose of this paper is also to improve MG, following the third approach mentioned above, that is, improving the payoff function. Though the framework of MG and its variants seems reasonable, we have a basic question: “Do people always make decisions by using their strategies depending on the recent history?” Some people may just take actions by considering losses and gains. For example, if one has a company’s stock which has rapidly risen (resp. fallen), he will sell (resp. not sell) it soon without using his strategy, as illustrated in Figure 1. Such a situation gives us the idea of an acquisition cost, or a mean asset value. In the conventional games, like the original MG, an agent forgets the past events and makes a decision by observing only the price up/down within the memory size¹. In our game, however, each agent evaluates the strategies by whether or not the current price exceeds his mean asset value. Since the mean asset value contains all the past events in a sense, he can increase his net profit by reducing the mean asset value. We call the game an asset value game, denoted by AG.

Figure 1: Illustration of our idea.

However, there is still an unsolved problem in AG that stems from the framework of MG: the payoff function does not give an action, but just adds points to desirable strategies. Thus, if the adopted strategy is not desirable, the agent has to wait until the desirable one gains the highest score. So, there is a time lag between the rapid change of a price and the adjustment of an agent’s behavior.

¹ Recently, several studies (Araujo and Lamb, 2004; Araujo and Lamb, 2009) in this direction have been made from the viewpoint of evolutionary learning.

To reduce the time lag, we allow each agent another action that satisfies the payoff function with some probability. If the price rises/falls rapidly and the difference between the price and agent i’s mean asset value exceeds some threshold, the agent i may take the action according to the payoff function (regardless of the strategy). By tuning the threshold and the other parameters, we can reproduce the real market dynamics. We call the variant of AG an extended asset value game, denoted by ExAG.

Contributions

Our contributions in this paper are summarized as fol-

lows:

• We present a new variant of the MG, called an

asset value game.

• To remedy the time-lag problem of AG, we further consider an extended AG.

• We investigate the behavior of AG and ExAG in

detail.

The rest of this paper is organized as follows. Sec-

tion 2 states our model, which contains MG, MJ, AG

and ExAG. Section 3 presents an analysis of AG. Sec-

tion 4 describes a simulation model and shows some

experimental results. Finally, Section 5 concludes the

paper.

2 MODELS

In this section, we first describe MG and MJ in Sec-

tion 2.1, then the difference between MG and AG in

Section 2.2. Finally, we describe the difference be-

tween AG and ExAG in Section 2.3.

2.1 Previous Model — MG and MJ

At the beginning of the game, each agent $i \in \{1,\ldots,N\}$ is randomly given $s$ strategies $R_{i,a}$ for $a \in \{1,\ldots,s\}$. The number of agents, $N$, is assumed to be odd in order to break a tie. Any strategy $R_{i,a}(\mu) \in R_{i,a}$ maps an $m$-length binary string $\mu$ into a decision $-1$ or $1$, that is,

$$R_{i,a} : \{-1,1\}^{m} \longrightarrow \{-1,1\}, \tag{1}$$

where $m$ is the memory of agents. A history $H$, e.g., $[-1,1,1,\ldots]$, is a sequence of $-1$ and $1$ representing a winning decision $h(t)$ for each time step $t \in T = (0,1,2,\ldots)$. The winning decision of MG (resp. MJ) is determined by the minority (resp. majority) group of $-1$ or $1$. Each strategy $R_{i,a}(\mu) \in R_{i,a}$ is given a score $U_{i,a}(t)$ so that the best strategy can make a winning decision. For the last $m$ winning decisions, denoted by $\mu = h^{m}(t-1) \subseteq H$, agent $i$'s strategy $R_{i,a}(\mu) \in R_{i,a}$ determines $-1$ or $1$ by (1). Among them, each agent $i$ selects his highest scored strategy $R^{*}_{i}(\mu) \in R_{i,a}$ and makes a decision $a_i(t) = R^{*}_{i}(\mu)$ at time $t \in T$. The highest scored strategy is represented by

$$R^{*}_{i}(\mu) = \arg\max_{a \in \{1,\ldots,s\}} U_{i,a}(t), \tag{2}$$

where ties are broken at random. An aggregate value $A(t) = \sum_{i=1}^{N} a_i(t)$ is called an excess demand. If $A(t) > 0$, agents with $a_i(t) = -1$ win, and otherwise, agents with $a_i(t) = 1$ win in MG, and vice versa in MJ. Hence the payoffs $g^{MG}_i$ and $g^{MJ}_i$ of agent $i$ are represented by

$$g^{MG}_i(t+1) = -a_i(t)A(t) \quad \text{and} \tag{3}$$

$$g^{MJ}_i(t+1) = a_i(t)A(t), \quad \text{respectively.} \tag{4}$$
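As an illustration of the mechanics described so far, the following minimal sketch plays one round with strategies stored as lookup tables. The function names, the toy sizes and the fixed random seed are assumptions made for this example and do not come from the authors' C implementation.

```python
import itertools
import random


def random_strategy(m, rng):
    """Map every m-length history of -1/+1 to a decision, as in Eq. (1)."""
    return {mu: rng.choice((-1, 1))
            for mu in itertools.product((-1, 1), repeat=m)}


def best_strategy(scores, rng):
    """Index of the highest score, ties broken at random, as in Eq. (2)."""
    top = max(scores)
    return rng.choice([a for a, u in enumerate(scores) if u == top])


def play_round(strategies, scores, mu, rng):
    """Return every agent's decision a_i(t) and the excess demand A(t)."""
    decisions = []
    for agent_strategies, agent_scores in zip(strategies, scores):
        a_star = best_strategy(agent_scores, rng)
        decisions.append(agent_strategies[a_star][mu])
    return decisions, sum(decisions)


def payoff_mg(a_i, excess):
    """Minority-game payoff, Eq. (3)."""
    return -a_i * excess


def payoff_mj(a_i, excess):
    """Majority-game payoff, Eq. (4)."""
    return a_i * excess


rng = random.Random(0)   # fixed seed, an arbitrary choice for repeatability
N, s, m = 5, 2, 3        # toy sizes, much smaller than those in Table 1
strategies = [[random_strategy(m, rng) for _ in range(s)] for _ in range(N)]
scores = [[0] * s for _ in range(N)]
mu = (1, 1, 1)           # the last m winning decisions
decisions, excess = play_round(strategies, scores, mu, rng)
```

Because N is odd, `excess` is never zero, so exactly one of the two groups is the minority and receives a positive `payoff_mg`.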

The winning decision $h(t) = -1$ or $1$ is added to the end of the history $H$, i.e., $h^{m+1}(t) = [h^{m}(t-1), h(t)]$, and then it will be reflected in the next step. After the winning decision has been determined, every score is updated by

$$U_{i,a}(t+1) = U_{i,a}(t) \oplus R_{i,a}(\mu) \cdot \mathrm{sgn}(A(t)), \tag{5}$$

where $\oplus$ means subtraction for MG (addition for MJ) and $\mathrm{sgn}(x) = 1$ $(x \ge 0)$, $= -1$ $(x < 0)$. In other words, the scores of winning strategies are increased by 1, while those of losing strategies are decreased by 1. We simply say that an agent increases selling (resp. buying) strategies if the scores of selling (resp. buying) strategies are increased by 1. The decrement of scores is described likewise. Notice that the score is an accumulated value from an initial state in the original MG. In contrast, we define it as a value from the last $H_p$ steps according to (Liu et al., 2004). That is, we use

$$U_{i,a}(t+1) = U_{i,a}(t) \oplus R_{i,a}(\mu) \cdot \mathrm{sgn}(A(t)) - U_{i,a}(t-H_p). \tag{6}$$
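The score update can be sketched as follows. The per-step increment follows Eq. (5) directly. The `WindowedScore` class is only one possible reading of "a value from the last $H_p$ steps": it keeps the sum of the most recent $H_p$ increments, which is an assumption of this example rather than a literal transcription of Eq. (6). All names are hypothetical.

```python
from collections import deque


def sgn(x):
    """Sign convention of the text: sgn(x) = 1 for x >= 0, otherwise -1."""
    return 1 if x >= 0 else -1


def score_increment(prediction, excess, game="MG"):
    """Per-step change of a strategy's score in Eq. (5).

    `prediction` is R_{i,a}(mu); the term is subtracted in MG, added in MJ.
    """
    delta = prediction * sgn(excess)
    return -delta if game == "MG" else delta


class WindowedScore:
    """Score kept as the sum of the last h_p increments (assumed reading)."""

    def __init__(self, h_p):
        self.increments = deque(maxlen=h_p)  # older increments drop out

    def update(self, prediction, excess, game="MG"):
        self.increments.append(score_increment(prediction, excess, game))

    @property
    def value(self):
        return sum(self.increments)


score = WindowedScore(h_p=4)
for _ in range(6):
    # A selling strategy (-1) while buyers are in the majority (A > 0).
    score.update(prediction=-1, excess=3)
print(score.value)  # 4
```

Under this reading a score is always bounded by $H_p$ in absolute value, so a strategy that starts to win can catch up with the current leader within a bounded number of steps.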

The constant $H_p$ is not relevant to $m$, but is only used for selecting the highest score. Analogous to a financial market, the decision $a_i(t) = 1$ (respectively, $-1$) represents buying (respectively, selling) an asset. Usually, the price of an asset is defined as

$$p(t+1) = p(t) \cdot \exp\left(\frac{A(t)}{N}\right). \tag{7}$$
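A short sketch of the price rule (7) may help to see its scale: the price moves by a factor between $e^{-1}$ and $e$ per step, depending on how one-sided the excess demand is. The function name and the sample numbers are illustrative assumptions.

```python
import math


def next_price(price, excess, n_agents):
    """Eq. (7): p(t+1) = p(t) * exp(A(t) / N)."""
    return price * math.exp(excess / n_agents)


balanced = next_price(100.0, 0, 501)      # no excess demand, price unchanged
all_buy = next_price(100.0, 501, 501)     # every agent buys, price times e
mostly_sell = next_price(100.0, -51, 501) # sellers dominate, price falls
```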

2.2 Asset Value Game

The difference between MG and our asset value game is the payoff function. Let $v_i(t)$ be agent $i$'s mean asset value at time $t$, and $u_i(t)$ the number of units of his asset. The payoff function in AG is defined as

$$g^{AG}_i(t+1) = -a_i(t)F_i(t), \tag{8}$$

where $F_i(t) = p(t) - v_i(t)$. The mean asset value $v_i(t)$ and the number of asset units $u_i(t)$ are updated by

$$v_i(t+1) = \frac{v_i(t)u_i(t) + p(t)a_i(t)}{u_i(t) + a_i(t)} \tag{9}$$

and

$$u_i(t+1) = u_i(t) + a_i(t), \tag{10}$$

respectively. That is, the payoff function (3) in MG is replaced by (8) in AG. Without loss of generality, we assume that $v_i(t), u_i(t) > 0$ for any $t \in T$.
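The AG payoff and the bookkeeping of Eqs. (9) and (10) can be sketched as below. The function names and the sample numbers are assumptions chosen for illustration; the check on the number of units mirrors the assumption $u_i(t) > 0$ stated in the text.

```python
def ag_payoff(action, price, mean_value):
    """Eq. (8): g = -a_i(t) * F_i(t), with F_i(t) = p(t) - v_i(t)."""
    return -action * (price - mean_value)


def update_holdings(mean_value, units, action, price):
    """Eqs. (9) and (10): new mean asset value and new number of units."""
    new_units = units + action
    if new_units <= 0:
        raise ValueError("the text assumes u_i(t) > 0")
    new_mean = (mean_value * units + price * action) / new_units
    return new_mean, new_units


# An agent holding 10 units at a mean asset value of 100 faces a price of 122.
sell_payoff = ag_payoff(-1, 122.0, 100.0)                    # 22.0, selling is rewarded
buy_payoff = ag_payoff(1, 122.0, 100.0)                      # -22.0
mean_after_buy, units_after_buy = update_holdings(100.0, 10, 1, 122.0)
mean_after_sell, units_after_sell = update_holdings(100.0, 10, -1, 122.0)
```

Buying above the mean asset value raises it (here to 102), while selling above it lowers it, which is the direction rewarded by the payoff.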

The basic idea behind the payoff function is that

each agent wants to decrease his acquisition cost in

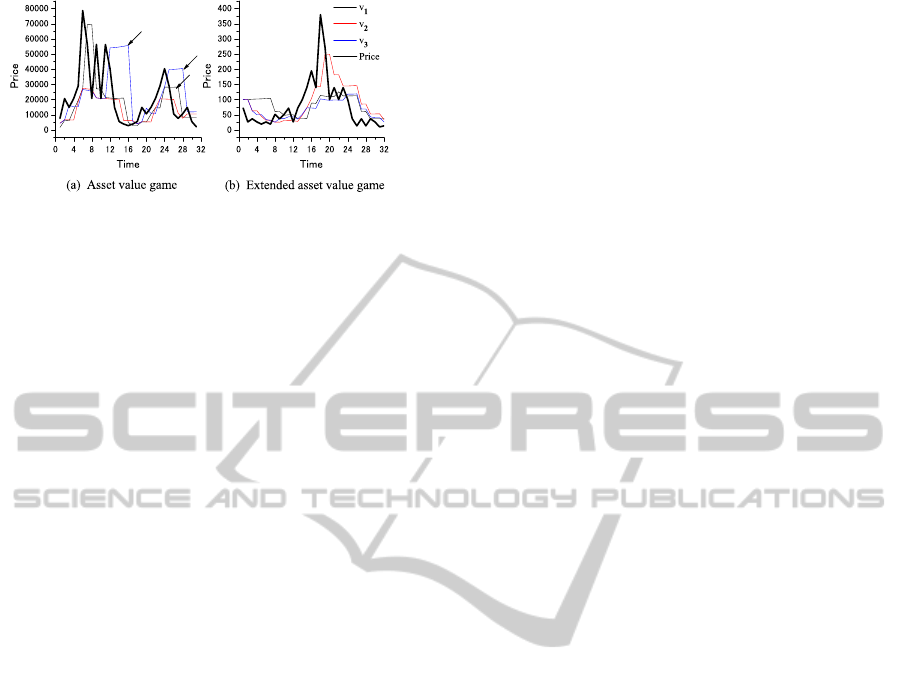

order to make his appraisal gain. Figure 2(a) shows

the relationship between the price and the mean asset

values of N = 3 agents, where the price is represented

by the solid, heavy line. Notice that if the population

size N is small, the price change becomes drastic.

The most important feature of the AG is that it takes the past gains and losses into account. Even though an agent has bought a high-priced asset during the asset-inflated term (see Figure 1), the mean asset value of the agent reflects the fact, and an appropriate action compared with the current price is recommended.

2.3 Extended Asset Value Game

Here we consider the drawbacks of AG, and present

an extended AG, denoted by ExAG, to improve them.

Though the AG captures a good feature of an agent’s

behavior, the payoff function indirectly appreciates

desirable strategies. If the adopted strategy is not de-

sirable, the agent has to wait until the desirable one

gains the highest score. So, there is a time lag be-

tween the rapid change of a price and the adjustment

of an agent’s behavior.

More precisely, the movement of price is followed

by the asset values (see arrows in Figure 2(a)). This

behavior can be explained by the following reasons. If

the price rapidly rises, it exceeds almost all the mean asset values. Then, $F_i(t) = p(t) - v_i(t)$ becomes positive and the $a_i(t) = -1$ (i.e., sell) action is recommended. So, some agents change from trend-followers to contrarians in a few steps. During those steps, such agents remain trend-followers, that is, they buy assets at the high price. Thus, their mean asset values follow the movement of price.

Our solution is to provide another option for the agent. That is, an agent whose mean asset value is much higher/lower than the current price can directly take the action recommended by the payoff function, called a direct action. However, if so, every agent may take the same action when the price goes beyond every asset value. To avoid such an extreme situation, we allow the direct action only with some probability.

Figure 2: Price influence on mean asset values.

Let $K = K^{+}$ $(F_i \ge 0)$, $K^{-}$ $(F_i < 0)$ be the threshold on $|F_i|$ over which the agent may take the direct action, and let $\lambda$ be some constant. Each agent takes the same action as the payoff function (without using his strategy) with probability

$$p = \begin{cases} 1 - \exp\{-\lambda(|F_i| - K)\} & (K \le |F_i|) \\ 0 & (|F_i| < K), \end{cases} \tag{11}$$

where

$$K = \begin{cases} K^{+} & 0 \le F_i \\ K^{-} & F_i < 0 \end{cases} \quad \text{such that } K^{-} < K^{+},$$

and takes the action according to his strategy with probability $1 - p$. In short, in ExAG

• agent $i$ takes an action $a_i(t)$ satisfying $g^{AG}_i(t+1) > 0$ with probability $p$, and

• an action $a_i(t) = R^{*}_{i}(\mu)$ with probability $1 - p$.
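The two-branch rule above can be sketched as follows, under the assumption that the thresholds are non-negative. The function names are hypothetical; the parameter values in the example are the ones the paper later fixes for its simulations ($\lambda = 0.001$, $K^{-} = 50$, $K^{+} = 500$).

```python
import math
import random


def direct_action_probability(f_i, k_plus, k_minus, lam):
    """Eq. (11): probability of acting directly on the payoff function."""
    k = k_plus if f_i >= 0 else k_minus
    gap = abs(f_i) - k
    return 1.0 - math.exp(-lam * gap) if gap >= 0 else 0.0


def exag_action(f_i, strategy_action, k_plus, k_minus, lam, rng):
    """Take the direct action with probability p, else follow the strategy."""
    p = direct_action_probability(f_i, k_plus, k_minus, lam)
    if rng.random() < p:
        # g = -a * F_i > 0 requires a to have the sign opposite to F_i.
        return -1 if f_i > 0 else 1
    return strategy_action


rng = random.Random(0)  # fixed seed, an arbitrary choice for repeatability
p_small_gap = direct_action_probability(400.0, 500.0, 50.0, 0.001)   # below K+
p_large_gap = direct_action_probability(1500.0, 500.0, 50.0, 0.001)  # 1 - exp(-1)
action = exag_action(400.0, 1, 500.0, 50.0, 0.001, rng)  # strategy is followed
```

Because $K^{-} < K^{+}$, an agent whose mean asset value lies above the price ($F_i < 0$) starts to act directly at a smaller gap than one whose mean asset value lies below it.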

Figure 2(b) shows the behavior of the price and the mean asset values for N = 3 agents in our extended AG, where $K^{+} = 300$, $K^{-} = 50$ and $\lambda = 0.001$. Notice that the change of price in Figure 2(b) is not so drastic as that in Figure 2(a). In addition, all the values do not follow the price movement.

3 ANALYSIS OF AG

In this section we briefly investigate the features of AG. Though we mainly discuss the bubble in the following, similar arguments hold for the crash. For convenience, we define a contrarian as follows. If $a_i(t) = -1$ (resp. $a_i(t) = 1$) for a history $h^{m}(t-1) = \{1\}^{m}$ (resp. $h^{m}(t-1) = \{-1\}^{m}$), agent $i$ is a contrarian. Let $t_r$ be the first time at which the winning decision is reversed after $t - m$. Let $C^{MJ}(t)$, $C^{AG}(t)$ and $C^{MG}(t)$ denote the set of contrarians in MJ, AG and MG, respectively. The next theorem means that the bubble phenomenon is likely to occur in the order of MJ, AG and MG.

Theorem 1. Suppose that the same set of agents experience $h^{m}(t-1) = \{1\}^{m}$ starting from the same scores since $t - m$. Then, for any $t' \in T = (t,\ldots,t_r - 1)$ we have

$$C^{MJ}(t') \subseteq C^{AG}(t') \subseteq C^{MG}(t').$$

Proof. First, we show that $C^{AG}(t) = C^{MG}(t)$ at time $t$. Consider an arbitrary agent $i$. Notice that agent $i$ has the same score both in AG and in MG. Since $h^{m}(t-1) = \{1\}^{m}$, agent $i$ takes the same action based on the same strategy both in AG and in MG. Thus, we have $C^{AG}(t) = C^{MG}(t)$ at time $t$.

Next, we show that $C^{AG}(t') \subseteq C^{MG}(t')$ at time $t' \in T$. Notice that all the agents in MG increase the selling strategies for $h^{m}(t-1) = \{1\}^{m}$. On the other hand, notice that the agents in AG that have smaller mean asset values $v(t)$ than the price $p(t)$ increase the selling strategies for $h^{m}(t-1) = \{1\}^{m}$. Since every contrarian refers to the same part (i.e., $\{1\}^{m}$) of the strategy, he does not change his decision during the interval $T$. If an agent increases the selling strategies in AG, it also increases the selling strategies in MG. Thus we have $C^{AG}(t') \subseteq C^{MG}(t')$ at time $t' \in T$.

The similar argument holds for $C^{MJ}(t') \subseteq C^{AG}(t')$. □

We call an agent a bi-strategist if he can take both buy and sell actions, that is, has strategies $R_{i,a}$ containing both actions, for $h^{m}(t-1) = \{1\}^{m}$ or $h^{m}(t-1) = \{-1\}^{m}$. The following lemma states that there is a time lag between the price rising and the action of agent’s payoff function.

Lemma 1. In AG, suppose that a history $H$ contains $h^{m}(t-1) = \{1\}^{m}$. Even if a bi-strategist keeps the opposite action of the payoff function for $H_p$ steps, he takes the same action as the payoff function after the $(H_p + 1)$-st step.

Proof. Suppose that a bi-strategist $i$ has a strategy $R_{i,a_1}$ (resp. a strategy $R_{i,a_2}$) which takes the opposite action of (resp. the same action as) the payoff function. If $i$ adopts the strategy $R_{i,a_1}$ now, the score difference between $R_{i,a_1}$ and $R_{i,a_2}$ is at most $2H_p$. Since the difference decreases by 2 for a step, the scores of $R_{i,a_1}$ and $R_{i,a_2}$ become the same at the $H_p$-th step. Then, after the $(H_p + 1)$-st step, he takes the strategy $R_{i,a_2}$. □

For simplicity, we assume that $H_p$ is sufficiently greater than $m$.

Lemma 2. In AG, suppose that a history $H$ contains $h^{m}(t-1) = \{1\}^{m}$. For any time steps $t_1, t_2 \in T = (t,\ldots,t_r - 1)$, where $t_1 < t_2$, we have

$$C^{AG}(t_1) \subseteq C^{AG}(t_2).$$

Proof. Suppose that agent $i$ belongs to $C^{AG}(t_1)$. We show that once the rising price $p(t_1)$ overtakes the mean asset value $v_i(t_1)$ of agent $i$, $v_i(t_1)$ will not overtake $p(t_1)$ as long as $p(t_1)$ is rising. Since

$$v_i(t+1) - v_i(t) = \frac{a(p - v)}{u + a} > 0 \quad \text{and} \quad 0 < \frac{a}{u + a} < 1,$$

$p > v$ holds as long as $p(t_1)$ is rising. Thus, agent $i$ is contrarian at time $t_1 + 1$. We have $C^{AG}(t_1) \subseteq C^{AG}(t_1 + 1)$, and can inductively show $C^{AG}(t_1) \subseteq C^{AG}(t_2)$. □
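The difference of mean asset values used in this proof follows from the update rule (9) in one line. Writing $v$, $u$, $a$ and $p$ for $v_i(t)$, $u_i(t)$, $a_i(t)$ and $p(t)$, as the proof does:

```latex
\begin{aligned}
v_i(t+1) - v_i(t)
  &= \frac{vu + pa}{u + a} - v \\
  &= \frac{vu + pa - v(u + a)}{u + a} \\
  &= \frac{a\,(p - v)}{u + a}.
\end{aligned}
```

Since $u + a > 0$ is assumed throughout, the sign of the change is the sign of $a(p - v)$. As a numerical check with illustrative values $v = 100$, $u = 10$, $a = 1$ and $p = 122$, the change is $22/11 = 2$, which agrees with computing (9) directly: $(1000 + 122)/11 = 102$.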

We say that the bubble is monotone if $h^{m}(t-1) = \{1\}^{m}$ holds for any $t \in T = (t,\ldots,t_r - 1)$.

Lemma 3. In AG, as long as more than half of the population are bi-strategists, the price in a monotone bubble will reach the upper bound.

Proof. First, by the proof of Lemma 2, the mean asset values that have been overtaken by the price will not exceed the price again.

Second, by Lemma 1, any bi-strategist $i$ with $v_i(t) > p(t)$ will take a buying action within $H_p + 1$ steps. Since $v_i(t+1) - v_i(t) = a(p - v)/(u + a) < 0$, the mean asset value decreases. Thus, the rising price will eventually reach the greatest mean asset value in the set of contrarians.

Third, since all the bi-strategists increase the selling strategies, they will take selling actions within $H_p + 1$ steps. After that, $A/N < 0$ holds and the price falls. □

From Lemma 3, the following theorem is straightforward.

Theorem 2. In AG, as long as more than half of the population are bi-strategists, the monotone bubble will terminate. □

4 SIMULATION

In this section, we present some simulation results. Our first question, with respect to ExAG, is:

1. What values are appropriate for the constant λ and the threshold K in ExAG?

Our next question, with respect to AG, is:

2. How does the inequality of wealth distribution vary in AG?

Then, our further questions with respect to several games are as follows.

3. How widely do the Pareto indices of games differ from practical data?

4. How widely do the skewness and the kurtosis of games differ from practical data?

5. How widely do the volatilities differ in several games?

6. How widely do the volatility autocorrelations of games differ from practical data?

We present our basic constants in Table 1. We repeated the experiments up to 30 times and obtained averaged results. The simulation is implemented in the C language.

Table 1: Basic constants.

| Symbol | Meaning | Value |
|---|---|---|
| N | Number of agents | 501 |
| S | Number of strategies | 4 |
| m | Memory size | 4 |
| $H_p$ | Score memory | 4 |
| T | Number of steps | 5000 |
| - | Initial agent’s money | 10000 |
| - | Initial agent’s assets | 100 |
| r | Investment rate | 0.01 |

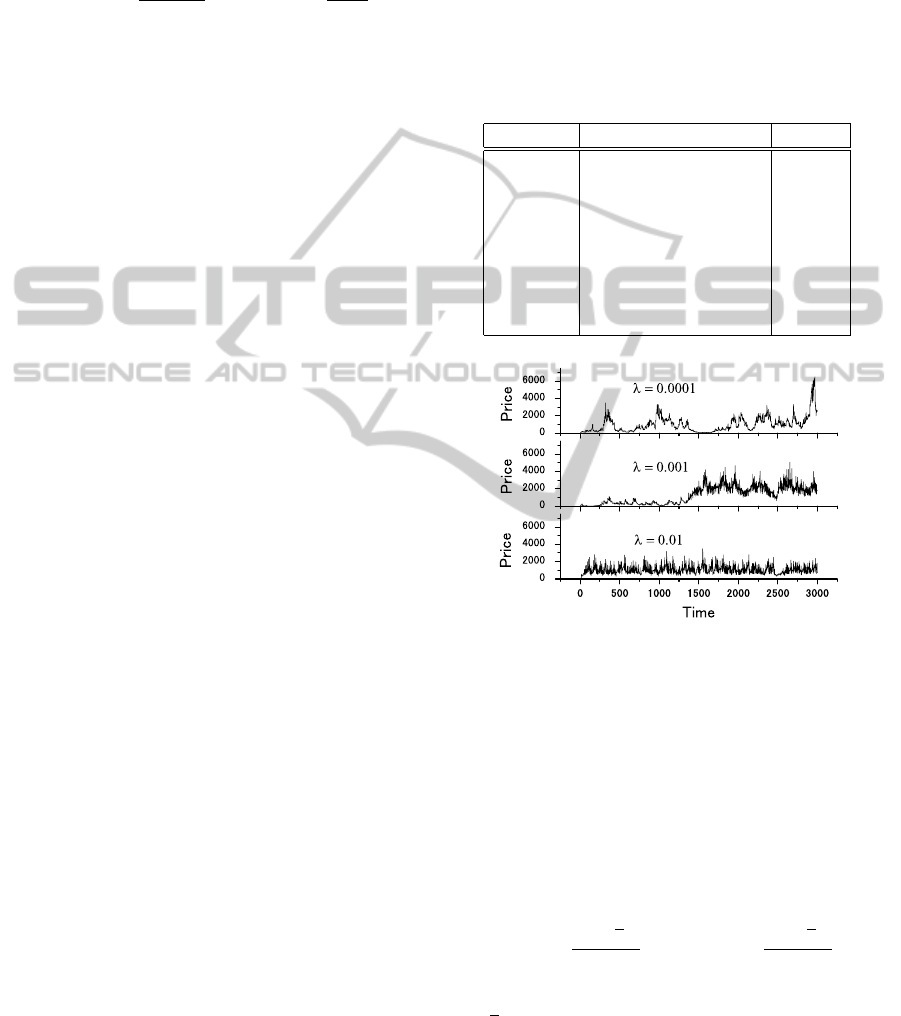

Figure 3: Price behavior for varying λ in ExAG.

For the first issue, Figure 3 shows the patterns of price behavior for three kinds of λ values. From the definition of the direct action probability (see (11)), the smaller λ becomes, the fewer direct actions occur. Thus, the ratio of trend-followers is high for λ = 0.0001 and that of contrarians is high for λ = 0.01.

In addition, Figure 4 shows the skewness and the kurtosis for varying the constant λ, where the skewness ($\alpha_3$) and the kurtosis ($\alpha_4$) are defined as

$$\alpha_3 = \frac{\sum_{i=1}^{N} (x_i - \bar{x})^3}{N\sigma^3} \quad \text{and} \quad \alpha_4 = \frac{\sum_{i=1}^{N} (x_i - \bar{x})^4}{N\sigma^4},$$

respectively, for time series variable $x_i$, its average $\bar{x}$ and its standard deviation $\sigma$. If the skewness is negative (respectively, positive), the left (respectively, right) tail of a distribution is longer. A high kurtosis distribution has a sharper peak and longer, fatter tails, while a low kurtosis distribution has a more rounded peak and shorter, thinner tails. In other words, the more the patterns of price fluctuation occur, the smaller the kurtosis becomes. Thus, if λ is small and the reversal movements of contrarians are rare, the kurtosis becomes large. On the other hand, if we vary $K^{-}$ while keeping $K^{+} = 500$, the kurtosis is distributed as shown in Figure 5, where a regression curve is depicted.
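The two statistics can be computed directly from their definitions. The sketch below assumes that $\sigma$ is the population standard deviation (dividing by $N$), which the text does not state explicitly; the function name is hypothetical.

```python
import math


def skewness_and_kurtosis(xs):
    """Return (alpha_3, alpha_4) for a sequence of observations."""
    n = len(xs)
    mean = sum(xs) / n
    # Population standard deviation (divide by N): an assumption of this sketch.
    sigma = math.sqrt(sum((x - mean) ** 2 for x in xs) / n)
    if sigma == 0:
        raise ValueError("all observations are equal")
    alpha_3 = sum((x - mean) ** 3 for x in xs) / (n * sigma ** 3)
    alpha_4 = sum((x - mean) ** 4 for x in xs) / (n * sigma ** 4)
    return alpha_3, alpha_4


symmetric = skewness_and_kurtosis([-1.0, 0.0, 1.0])        # skewness 0
right_tailed = skewness_and_kurtosis([0.0, 0.0, 0.0, 4.0]) # positive skewness
```

With this definition the kurtosis is not shifted by 3, so a normal distribution would give a value near 3 rather than 0.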

Figure 4: Skewness and kurtosis vs λ in ExAG.

Figure 5: Kurtosis vs $K^{-}$ in ExAG.

From the observation above, we set $\lambda = 0.001$, $K^{-} = 50$ and $K^{+} = 500$ in what follows.

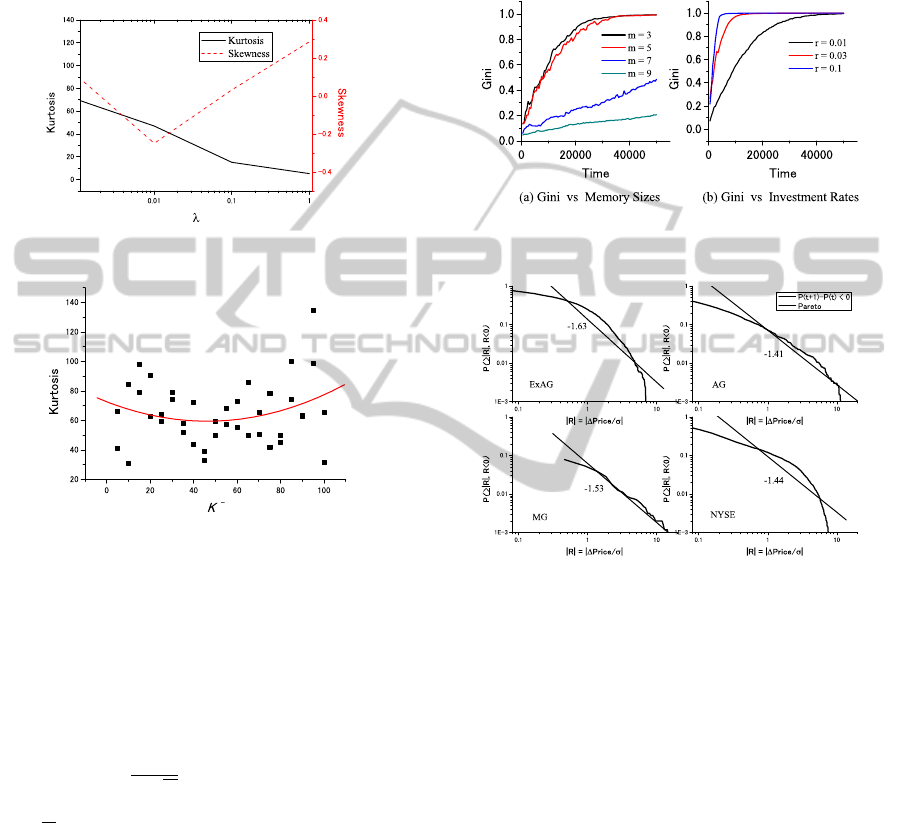

For the second issue, we present our results in Figure 6. The Gini coefficient is used as a measure of inequality of wealth distribution. Given a set of $N$ agents’ wealth $(X_1, X_2, \ldots, X_N)$, the Gini coefficient $G$ is defined as

$$G = \frac{1}{2N^2\bar{X}} \sum_{i=1}^{N} \sum_{j=1}^{N} |X_i - X_j|,$$

where $\bar{X} = \sum_{i=1}^{N} X_i / N$. If $G = 0$, the wealth is completely even. If $G$ is close to 1, an agent has a monopoly on the wealth.
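The definition translates into a few lines of code. This is a direct, quadratic-time sketch with a hypothetical function name; it is meant to illustrate the formula, not to reproduce the authors' implementation.

```python
def gini(wealth):
    """Gini coefficient: sum of |X_i - X_j| over all pairs / (2 N^2 mean)."""
    n = len(wealth)
    mean = sum(wealth) / n
    if mean == 0:
        raise ValueError("mean wealth must be non-zero")
    total = sum(abs(xi - xj) for xi in wealth for xj in wealth)
    return total / (2 * n * n * mean)


even = gini([5.0, 5.0, 5.0])             # 0.0, wealth is completely even
monopoly = gini([0.0, 0.0, 0.0, 10.0])   # 0.75, one agent owns everything
```

With one agent holding all the wealth the value is $1 - 1/N$, which is why $G$ only approaches 1 for large populations such as $N = 501$.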

Figure 6(a) shows the influence of memory size on the Gini coefficient. It means that the smaller the memory size is, the wider the inequality of wealth becomes. If the memory size is small, some successful agents earn much money and the others do not. So their mean asset values are widely distributed in the long run. Thus, the Gini coefficient tends to be large.

Figure 6(b) shows the influence of investment rate on the Gini coefficient. It means that the larger the investment rate is, the wider the inequality of wealth becomes. If the investment rate is large, the successful agents earn much money and the others do not. So their mean asset values are widely distributed in the long run. Thus, the Gini coefficient tends to be large.

Figure 6: Influence on Gini coefficient in AG.

Figure 7: Pareto indices for several games and NYSE.

For the third issue, Figure 7 shows the distribution of decreasing price changes for several games and NYSE, where NYSE denotes 20,545 data points of the Dow Jones Industrial Average (1928/10/1 to 2010/7/26) on the New York Stock Exchange. That is, the distributions of the normalized decreasing change of price $|R| = |\Delta \mathrm{Price}/\sigma|$ are compared. The straight lines represent the Pareto indices. At a glance, the curves of ExAG and AG resemble that of NYSE, which means their distributions are similar. The Pareto index of ExAG is also not far from that of NYSE.
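The paper does not say how the straight lines were fitted. One common way to obtain such a slope, shown here purely as an assumed procedure, is an ordinary least-squares fit of the complementary cumulative distribution of $|R|$ on log-log axes above a cut-off. The function name and the cut-off parameter `x_min` are hypothetical.

```python
import math


def pareto_index(values, x_min):
    """Negative slope of log P(|R| >= x) against log x for x >= x_min."""
    data = sorted(abs(v) for v in values)
    n = len(data)
    points = []
    for rank, x in enumerate(data):
        if x >= x_min and x > 0:
            ccdf = (n - rank) / n  # fraction of samples that are >= x
            points.append((math.log(x), math.log(ccdf)))
    if len(points) < 2:
        raise ValueError("need at least two tail points")
    mean_x = sum(px for px, _ in points) / len(points)
    mean_y = sum(py for _, py in points) / len(points)
    sxx = sum((px - mean_x) ** 2 for px, _ in points)
    sxy = sum((px - mean_x) * (py - mean_y) for px, py in points)
    if sxx == 0:
        raise ValueError("tail points share a single x value")
    return -sxy / sxx


# Synthetic sample whose empirical tail is exactly x^(-1.5) from x = 1.
alpha = 1.5
n = 200
sample = [((n - r) / n) ** (-1 / alpha) for r in range(n)]
estimate = pareto_index(sample, x_min=1.0)
```

On real data the estimate depends noticeably on the chosen cut-off, so values obtained this way should be compared across games using the same `x_min`.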

For the fourth issue, we obtained the following re-

sults. Both ExAG and AG have better values of skew-

ness and kurtosis than MG does as shown in Tables 2

and 3, where “stdev.” and “95% int.” mean standard

deviation and 95% confidence interval, respectively.

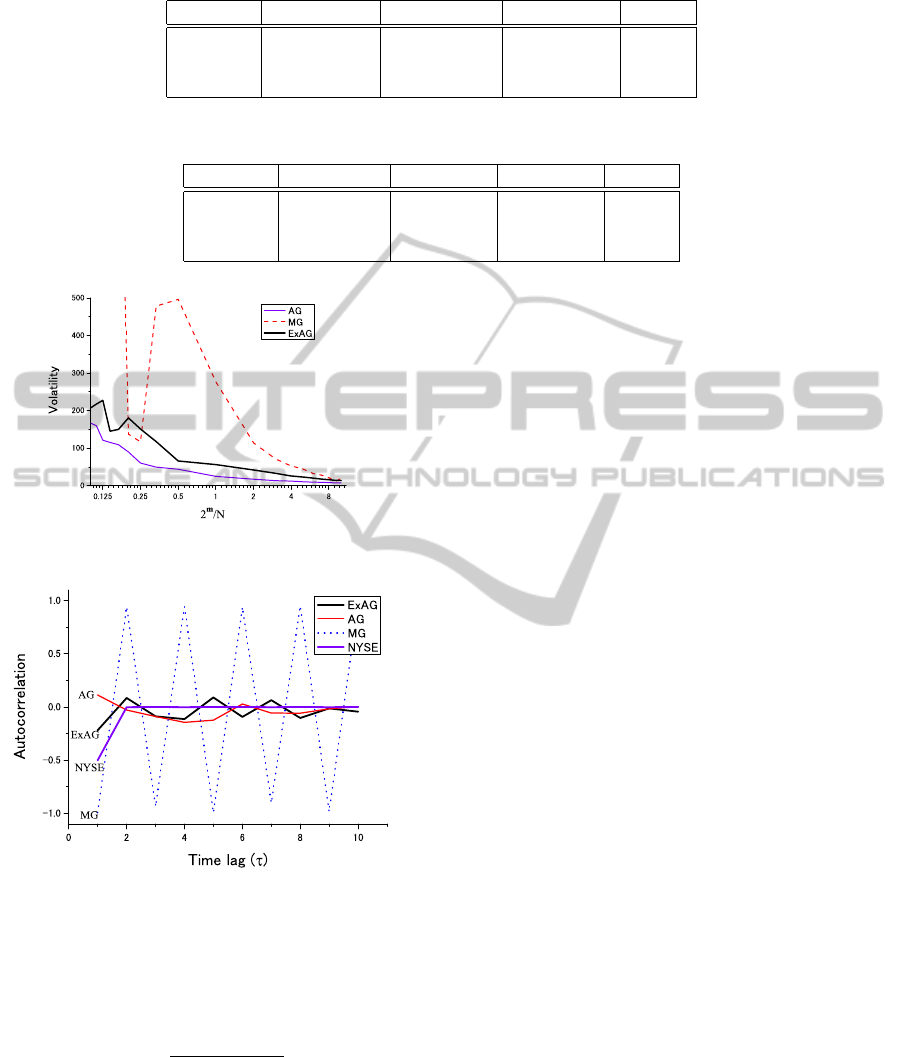

For the fifth issue, we present our results in Figure 8. The volatility is defined as the standard deviation of the excess demand. The figure shows that the volatility of AG is lower than that of the other games for every memory size. This means the memory size does not have a great impact on the price formation in AG.

Table 2: Skewness.

| method | ExAG | AG | MG | NYSE |
|---|---|---|---|---|
| average | 0.098 | 0.39 | -0.32 | 3.725 |
| stdev. | 1.82 | 1.03 | 1.86 | - |
| 95% int. | [-0.58, 0.77] | [0.007, 0.77] | [-1.02, 0.37] | - |

Table 3: Kurtosis.

| method | ExAG | AG | MG | NYSE |
|---|---|---|---|---|
| average | 42.3 | 72.9 | 148 | 18.92 |
| stdev. | 61.6 | 96.3 | 231 | - |
| 95% int. | [21.3, 67.3] | [36.9, 109] | [62.2, 235] | - |

Figure 8: Volatility (N = 51 ∼ 5119, S = 4, m = 9).

Figure 9: Autocorrelation of volatility.

For the sixth issue, the autocorrelation function $C(\tau)$ is defined as

$$C(\tau) = \frac{\langle A(t)A(t+\tau) \rangle}{\langle A(t)^2 \rangle},$$

where $\tau$ is a time lag. The value of $C(\tau)$ approaches 1 (respectively, $-1$) if there is a strong positive (respectively, negative) correlation between $A(t)$ and $A(t+\tau)$. As shown in Figure 9, only MG has the alternating, strong positive/negative correlation for every time lag. The other games, AG and ExAG, have weak correlations which diminish as the time lag grows. The practical data, NYSE, has a negative correlation only when the time lag is $\tau = 1$. Since the excess demand in NYSE is unknown, we assume the number of agents is equal to $N = 501$ and estimate $A(t)$ from equation (7). Notice that ExAG has the same (negative) correlation as NYSE when $\tau = 1$, while AG has a positive correlation.
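Both steps of this paragraph can be sketched in a few lines: inverting the price rule (7) to estimate $A(t)$ from a price series, and evaluating $C(\tau)$. The averages $\langle\cdot\rangle$ are taken here over all available time steps, which is an assumption, and the function names are hypothetical.

```python
import math


def excess_demand_from_prices(prices, n_agents):
    """Invert Eq. (7): A(t) = N * ln(p(t+1) / p(t))."""
    return [n_agents * math.log(nxt / cur)
            for cur, nxt in zip(prices, prices[1:])]


def autocorrelation(series, lag):
    """C(tau) = <A(t) A(t+tau)> / <A(t)^2>."""
    if lag < 0 or lag >= len(series):
        raise ValueError("lag must satisfy 0 <= lag < len(series)")
    pairs = list(zip(series, series[lag:]))
    numerator = sum(x * y for x, y in pairs) / len(pairs)
    denominator = sum(x * x for x in series) / len(series)
    if denominator == 0:
        raise ValueError("series is identically zero")
    return numerator / denominator


alternating = [1, -1] * 4                  # MG-like strict alternation
c1 = autocorrelation(alternating, 1)       # -1.0
c2 = autocorrelation(alternating, 2)       # 1.0
estimated = excess_demand_from_prices([100.0, 100.0 * math.exp(0.1)], 10)
```

The alternating series reproduces the pattern the text attributes to MG, with the sign of the correlation flipping at every lag.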

5 CONCLUSIONS

In this paper, we proposed an asset value game and

an extended asset value game. The AG is a simple

variant of MG such that the only difference is their

payoff functions. Though the AG captures a good

feature of an agent’s behavior, there is a time lag be-

tween the rapid change of a price and the adjustment

of an agent’s behavior. So we consider the ExAG, an

improvement of AG, by using parameters which con-

tain some probabilistic behavior. The ExAG has two

parameters by which the balance of trend-followers

and contrarians can be controlled. We examined several values for the parameters and then fixed them to specific values. We obtained several experimental results which reveal some characteristics of ExAG. The advantages of ExAG are twofold. First, we can restrict a

drastic movement of price in AG by tuning the param-

eters. Second, we can reduce the time lag generated

by recovering score losses in AG.

Our future work includes investigating the influ-

ence of market intervention, an in-depth analysis of

the AG, and other applications of the games.

ACKNOWLEDGEMENTS

This work was partially supported by Grant-in-Aid

for Scientific Research (C) 22510147 of the Ministry

of Education, Science, Sports and Culture of Japan.


REFERENCES

Andersen, J. V. and Sornette, D. (2003). The $-game. The

European Physical Journal B, 31(1):141–145.

Araujo, R. M. and Lamb, L. C. (2004). Towards understanding the role of learning models in the dynamics of the minority game. In Proceedings of the 16th IEEE International Conference on Tools with Artificial Intelligence (ICTAI 2004), pages 727–731.

Araujo, R. M. and Lamb, L. C. (2009). On the use of mem-

ory and resources in minority games. ACM Transac-

tions on Autonomous and Adaptive Systems, 4(2).

Challet, D. (2008). Inter-pattern speculation: beyond mi-

nority, majority and $-games. Journal of Economic

Dynamics and Control, 32(1):85–100.

Challet, D., Marsili, M., and Zhang, Y. C. (2001). Stylized

facts of financial markets and market crashes in mi-

nority games. Physica A: Statistical Mechanics and

its Applications, 294(3-4):514–524.

Challet, D., Marsili, M., and Zhang, Y. C. (2005). Minor-

ity games — interacting agents in financial markets.

Oxford University Press, New York, first edition.

Coolen, A. C. (2005). The mathematical theory of minor-

ity games. Oxford University Press, New York, first

edition.

Ferreira, F. F. and Marsili, M. (2005). Real payoffs and

virtual trading in agent based market models. Physica

A: Statistical Mechanics and its Applications, 345(3-

4):657–675.

Ferreira, F. F., Oliveira, V. M., Crepaldi, A. F., and Campos,

P. R. (2005). Agent-based model with heterogeneous

fundamental prices. Physica A: Statistical Mechanics

and its Applications, 357(3-4):534–542.

Giardina, I., Bouchaud, J. P., and Mézard, M. (2001). Microscopic models for long ranged volatility correlations. Physica A: Statistical Mechanics and its Applications, 299(1-2):28–39.

Kiniwa, J., Koide, T., and Sandoh, H. (2009). Analysis of

price behavior in lazy $-game. Physica A: Statistical

Mechanics and its Applications, 388(18):3879–3891.

Liu, X., Liang, X., and Tang, B. (2004). Minority game and

anomalies in financial markets. Physica A: Statistical

Mechanics and its Applications, 333:343–352.

Marsili, M. (2001). Market mechanism and expectations in

minority and majority games. Physica A: Statistical

Mechanics and its Applications, 299(1-2):93–103.

Martino, A. D., Giardina, I., and Mosetti, G. (2003). Statis-

tical mechanics of the mixed majority-minority game

with random external information. Journal of Physics

A: Mathematical and General, 36(34):8935–8954.

Takayasu, H., Miura, T., Hirabayashi, T., and Hamada, K.

(1992). Statistical properties of deterministic thresh-

old elements — the case of market price. Physica

A: Statistical Mechanics and its Applications, 184(1-

2):127–134.

Yamada, K., Takayasu, H., and Takayasu, M. (2007). Char-

acterization of foreign exchange market using the

threshold-dealer-model. Physica A: Statistical Me-

chanics and its Applications, 382(1):340–346.
