Facial Features’ Localization using a Morphological Operation

Kenz A. Bozed, Ali Mansour and Osei Adjei

Department of Computer Science and Technology, University of Bedfordshire

Park Square, Luton, LU1 3JU, U.K.

Abstract. Facial features’ localization is an important part of various applications such as face recognition, facial expression detection and human-computer interaction. It plays an essential role in human face analysis, especially in searching for facial features (mouth, nose and eyes) when the face region is included within the image. Most of these applications require face and facial feature detection algorithms. In this paper, a new method is proposed to locate facial features. A morphological operation is used to locate the pupils of the eyes, and the mouth position is estimated from them. Once the features are located, their boundaries are computed. The results obtained from this work indicate that the algorithm has been very successful in recognising different types of facial expressions.

1 Introduction

Digital image processing has developed alongside a range of algorithms for various computer vision applications. Such applications have been reported by Lekshmi et al. [7] for face detection, Hannuksela et al. [3] for facial feature extraction and Mohamed et al. [10] for face recognition.

Face detection is one of the active research areas among these applications. Yang et al. [13] define face detection as finding the location and size of the face in the input image. Some face detection approaches make no assumptions about the number of faces in the image, but they assume that a face exists in the image in order to classify regions as face or non-face [1], [4]. In facial feature localization, it is normally assumed that the input image contains at least one face.

Generally, facial recognition problems are based on the features of the face. Salient features can be recognized easily by human eyes, but it is challenging to locate and extract them by machine. The challenges of these applications are associated with pose, structural components, facial expressions, illumination, occlusion and the image quality of the subjects [11], [13]. Previous research has been concerned with the applications of face detection and recognition [9]. Many methods have been developed to locate and extract facial features, and they fall into two categories: feature-based and holistic. In feature-based methods, face recognition relies on the detection and localization of facial features and their geometrical relationships [1]. In holistic methods, the full face image is transformed to a point in a high-dimensional space, as in the Active Appearance Model (AAM) [8] or neural networks [5].

A. Bozed K., Mansour A. and Adjei O. (2010).

Facial Features’ Localization using a Morphological Operation.

In Proceedings of the 1st International Workshop on Bio-inspired Human-Machine Interfaces and Healthcare Applications, pages 35-43

DOI: 10.5220/0002787000350043

Copyright © SciTePress

Morphological operations are a well-known technique in image processing and computer vision for manipulating image features based on their shapes [2]. However, some methods require considerable computation or memory to implement, and to achieve good speed and accuracy [7]. Our research aims to develop a simple and accurate method that can be used in facial systems such as emotion detection.

In facial detection systems, eye detection is a significant step, because the eyes are easier to locate than other facial features. The localization of the eyes is also a necessary stage in detecting the other facial features, which can be used for facial expression analysis because they convey human expressions.

Although research has been done in this area, the problem of facial feature detection is still not completely solved because of its complexity [6], [7]. For example, face pose, occlusions and illumination affect the performance of feature detection.

In this paper, a facial localization algorithm for salient feature extraction is presented. The algorithm consists of three steps: (1) a morphological process is applied to search for the darkest parallel features in the upper face in order to localize the eyes; (2) the distance between the estimated pupils is used to locate the mouth; and (3) the localized salient features are used to compute their boundaries.

2 Facial Feature Localization

The features most commonly used to characterise the human face are the eyes and mouth. It is normally assumed that the facial region is present in the input image, and the features are searched for within this region. The algorithm is based on the observation that some features, such as the pupils of the eyes, are darker than other facial features. Therefore, morphological operations can be used to detect the location of the eyes. Morphological operations are well suited to rough feature extraction because they are fast and robust [3], [12].

The proposed method uses a morphological technique to detect the pupils of the eyes, and then uses the distance between them to detect the position of the mouth. The method is simple and less computationally intensive than holistic approaches: it uses three facial feature points instead of the whole face, as in the Active Shape Model, which uses 58 facial feature points to locate the features [14].

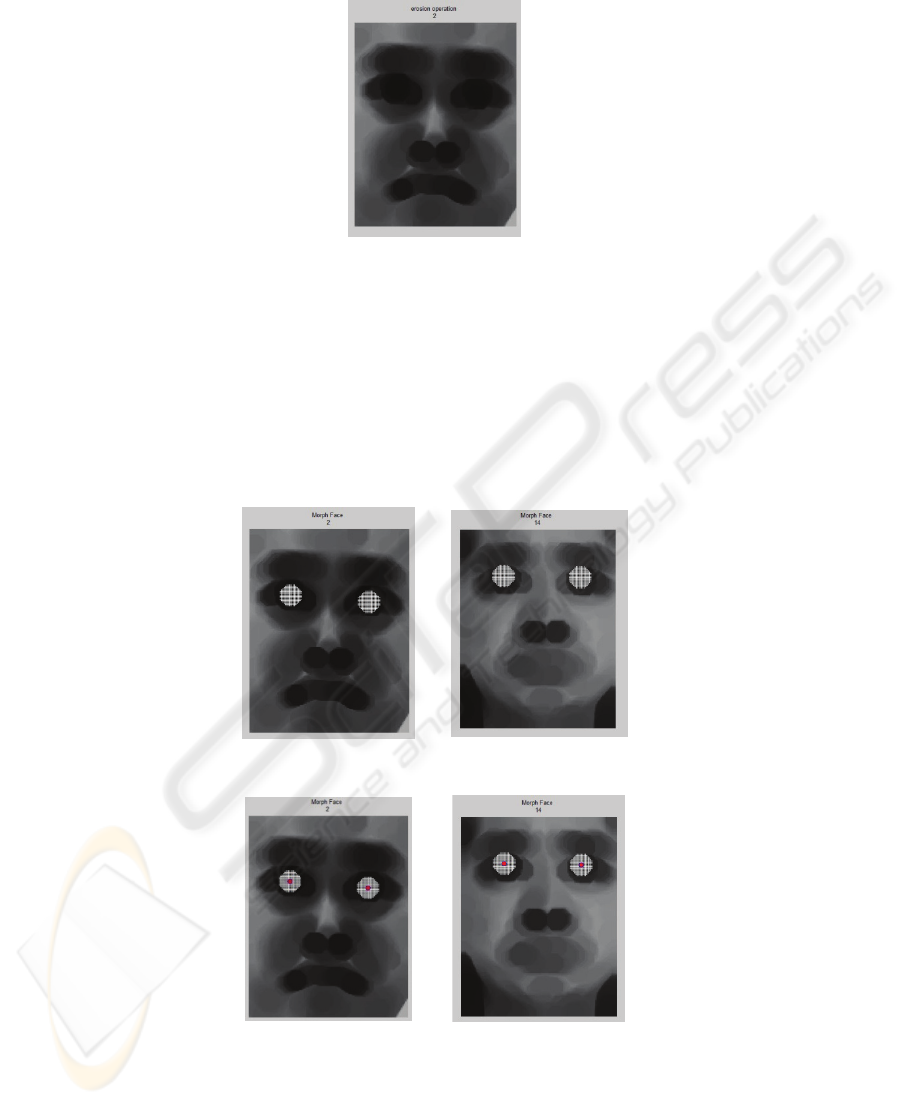

The morphological erosion operation is applied to the grayscale face image; erosion removes any pixel that is not completely surrounded by other pixels. The operation assumes 8-connected pixels in order to reduce the unnecessary pixels at the boundaries of the face. Fig. 1 shows some faces after applying the erosion operation. The eyes are localized from the darkest pixels that are close to each other. The positions of the eyes allow the distance between them to be computed, which is then used to locate the mouth.


Fig. 1. Faces after applying the erosion.
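A minimal sketch of the erosion step, assuming NumPy and a 3×3 square structuring element (the neighbourhood implied by 8-connectivity). On a grayscale image, erosion replaces each pixel by the minimum of its neighbourhood, so dark regions such as the pupils expand and small bright details shrink. The function name `grey_erode_8` and the `iterations` parameter are illustrative, not taken from the paper.

```python
import numpy as np


def grey_erode_8(img: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Grayscale erosion with a 3x3 square (8-connected) structuring element.

    Each output pixel is the minimum of itself and its 8 neighbours, so dark
    regions (e.g. pupils) grow while small bright details shrink.
    """
    out = np.asarray(img)
    for _ in range(iterations):
        # Replicate border pixels so the output keeps the input size.
        padded = np.pad(out, 1, mode="edge")
        h, w = out.shape
        # Nine shifted views cover the 3x3 neighbourhood of every pixel.
        shifts = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
        out = np.min(np.stack(shifts), axis=0)
    return out
```

For a 3×3 window this should give the same result as `scipy.ndimage.grey_erosion(img, size=(3, 3))` when SciPy is available; the explicit version just makes the minimum-filter behaviour visible.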

2.1 Eyes Detection

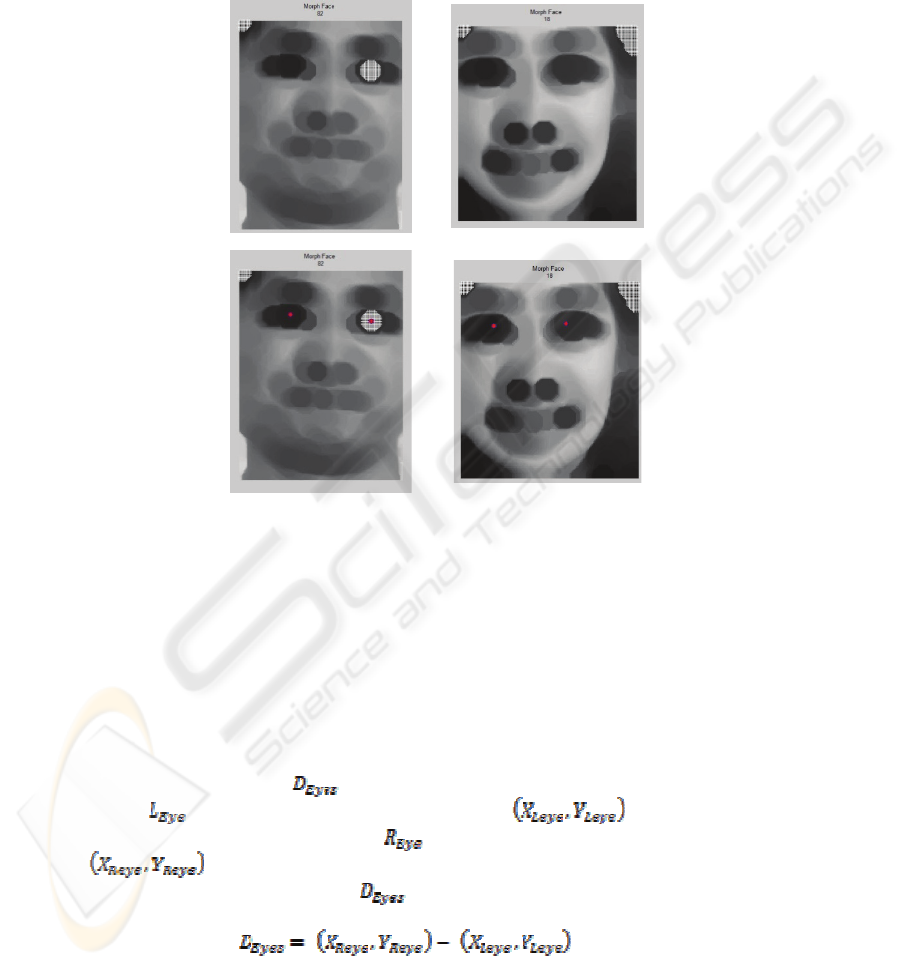

The upper face is scanned separately for each eye to search for the pupils. Once the darkest pixel has been found for each eye, the algorithm searches again for all the pixels that have the same value as the darkest one. Fig. 2 shows a correct eye detection, where the darkest-pixel search succeeds. Fig. 3 shows the final eye detection, where the pupil of each eye is estimated from the darkest pixels of that eye.

Fig. 2. The eyes region detection.

Fig. 3. The final eyes detection
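The two-pass darkest-pixel search described above can be sketched as follows, assuming NumPy, a cropped grayscale face (typically after erosion), that the "upper face" is the top half of the image, and that the two eyes lie on either side of the vertical midline. The names `darkest_pixels`, `eye_candidates` and `upper_fraction` are hypothetical, and "left"/"right" here mean the sides of the image.

```python
import numpy as np


def darkest_pixels(region: np.ndarray) -> np.ndarray:
    """Return (row, col) coordinates of every pixel equal to the region minimum."""
    darkest = region.min()                 # first pass: the darkest value
    return np.argwhere(region == darkest)  # second pass: all pixels with that value


def eye_candidates(face: np.ndarray, upper_fraction: float = 0.5):
    """Find the darkest pixels in the left and right halves of the upper face.

    upper_fraction is a hypothetical parameter: the fraction of the face
    height treated as the upper face.
    """
    h, w = face.shape
    upper = face[: int(h * upper_fraction), :]
    mid = w // 2
    left = darkest_pixels(upper[:, :mid])
    right = darkest_pixels(upper[:, mid:])
    right[:, 1] += mid                     # map columns back to full-image coordinates
    return left, right
```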


The location of each pupil is calculated as the average position of the darkest pixels of that eye. Some experiments gave unsuccessful eye location detection. These were corrected by adjusting the distance between the averaged dark pixels, in the order of 15 to 20 pixels. Fig. 4 shows some unsuccessful eye detections and their correction based on the distance between the averaged dark pixels.

Fig. 4. Corrected eye detection.

(a) Unsuccessful detection of the left eye.

(b) Unsuccessful detection of the left and right eyes.

In each case, the upper image shows the false detection and the lower image the corrected re-detection.
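The averaging step, combined with one possible reading of the distance-based correction, can be sketched as below. The paper does not spell out how the 15 to 20 pixel distance is applied, so the outlier rule here (discard darkest pixels farther than `max_dist` from their median before averaging) is an assumption, as are the function and parameter names.

```python
import numpy as np


def pupil_centroid(points, max_dist: float = 20.0) -> np.ndarray:
    """Estimate a pupil as the mean (row, col) of the darkest pixels of one eye.

    Hypothetical correction: darkest pixels lying more than max_dist pixels
    from the median position (e.g. on an eyebrow or shadow) are dropped
    before averaging. The 20-pixel default only echoes the 15-20 pixel range
    mentioned in the text.
    """
    pts = np.asarray(points, dtype=float)
    median = np.median(pts, axis=0)
    dists = np.linalg.norm(pts - median, axis=1)
    kept = pts[dists <= max_dist]
    # If the filter discards everything, fall back to averaging all points.
    if kept.size == 0:
        kept = pts
    return kept.mean(axis=0)
```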

2.2 Mouth Detection

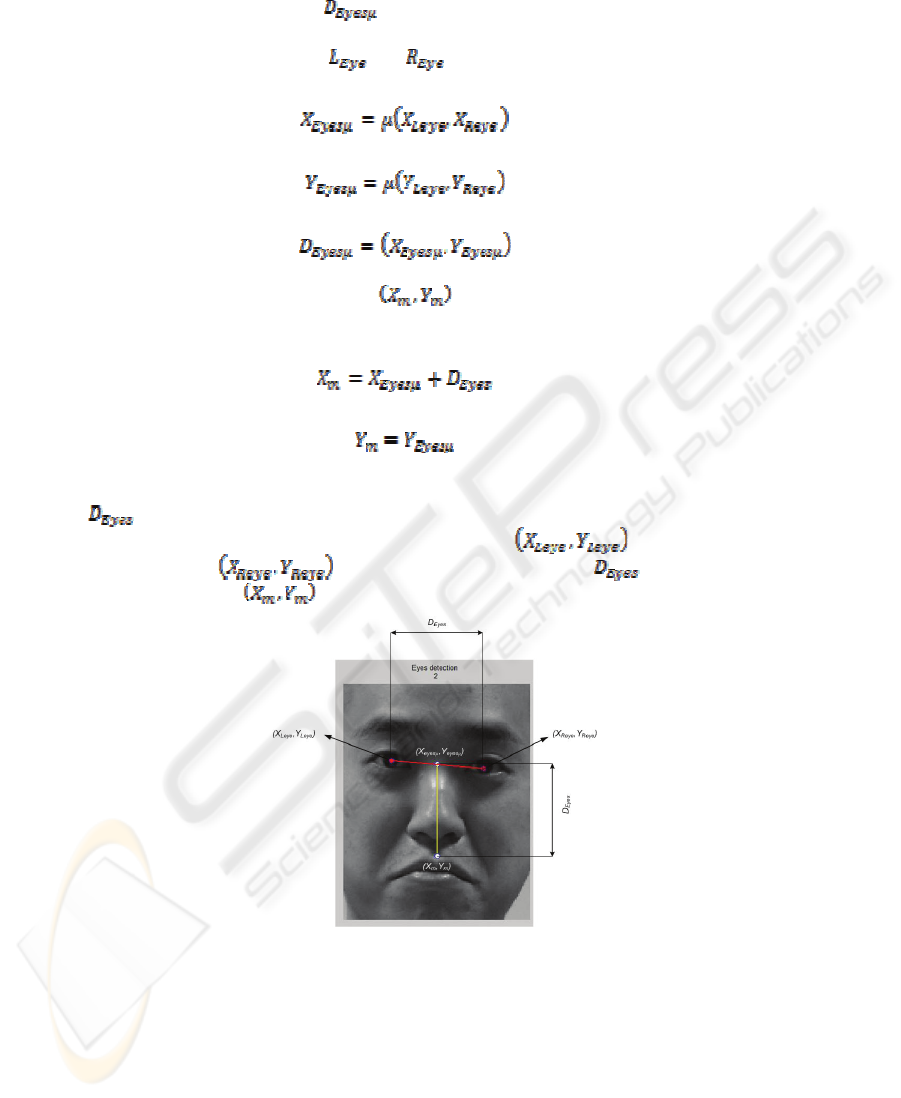

The mouth detection algorithm is applied only when the locations of the eyes are known; otherwise, the algorithm ignores the face. The mouth position is calculated from the distance between the estimated pupils of both eyes.

The computed centroid point of the left eye represents the pupil of the left eye, and the computed centroid point of the right eye represents the pupil of the right eye. The distance between the eyes is computed as follows:

(1)


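The average position of the two pupils, which represents the midpoint between the eyes given by equation (4), is used to estimate the mouth position. Writing the pupil centroids of the left and right eyes as $(x_L, y_L)$ and $(x_R, y_R)$, this midpoint is computed by averaging their x and y coordinates, as shown in equations (2) and (3):

$$\bar{x} = \frac{x_L + x_R}{2} \tag{2}$$

$$\bar{y} = \frac{y_L + y_R}{2} \tag{3}$$

$$\bar{P} = (\bar{x}, \bar{y}) \tag{4}$$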
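The eye distance and pupil midpoint can be sketched in Python as below, with points given as `(x, y)` tuples. The midpoint follows the averaging of x and y coordinates described in the text; using the Euclidean distance for the eye distance is an assumption, since equation (1) is not reproduced here. The function names are hypothetical.

```python
import math


def eye_distance(p_left, p_right):
    """Distance between the two pupil centroids (assumed Euclidean)."""
    return math.dist(p_left, p_right)


def eye_midpoint(p_left, p_right):
    """Midpoint between the pupils: the average of their x and y coordinates."""
    (xl, yl), (xr, yr) = p_left, p_right
    return ((xl + xr) / 2, (yl + yr) / 2)
```

For example, pupils at `(100, 120)` and `(160, 120)` give an eye distance of 60 and a midpoint of `(130.0, 120.0)`.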

The centroid point of the mouth is computed from equations (1), (2) and (3) as follows:

(5)

(6)
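Equations (5) and (6) are not recoverable from this copy of the text, so the following is only a hypothetical sketch of how a mouth centroid could be derived from the pupil midpoint and the eye distance D. It places the mouth `offset_ratio * D` pixels below the midpoint, assuming image coordinates (y grows downwards) and a roughly upright frontal face. The default `offset_ratio = 1.0` and the function name are illustrative, not values from the paper.

```python
import math


def estimate_mouth(p_left, p_right, offset_ratio=1.0):
    """Hypothetical mouth centroid below the midpoint of the two pupils.

    p_left, p_right: (x, y) pupil centroids in image coordinates.
    offset_ratio: assumed vertical offset as a multiple of the eye distance.
    """
    (xl, yl), (xr, yr) = p_left, p_right
    d = math.dist(p_left, p_right)              # assumed Euclidean eye distance
    x_mid, y_mid = (xl + xr) / 2, (yl + yr) / 2  # pupil midpoint
    return (x_mid, y_mid + offset_ratio * d)
```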

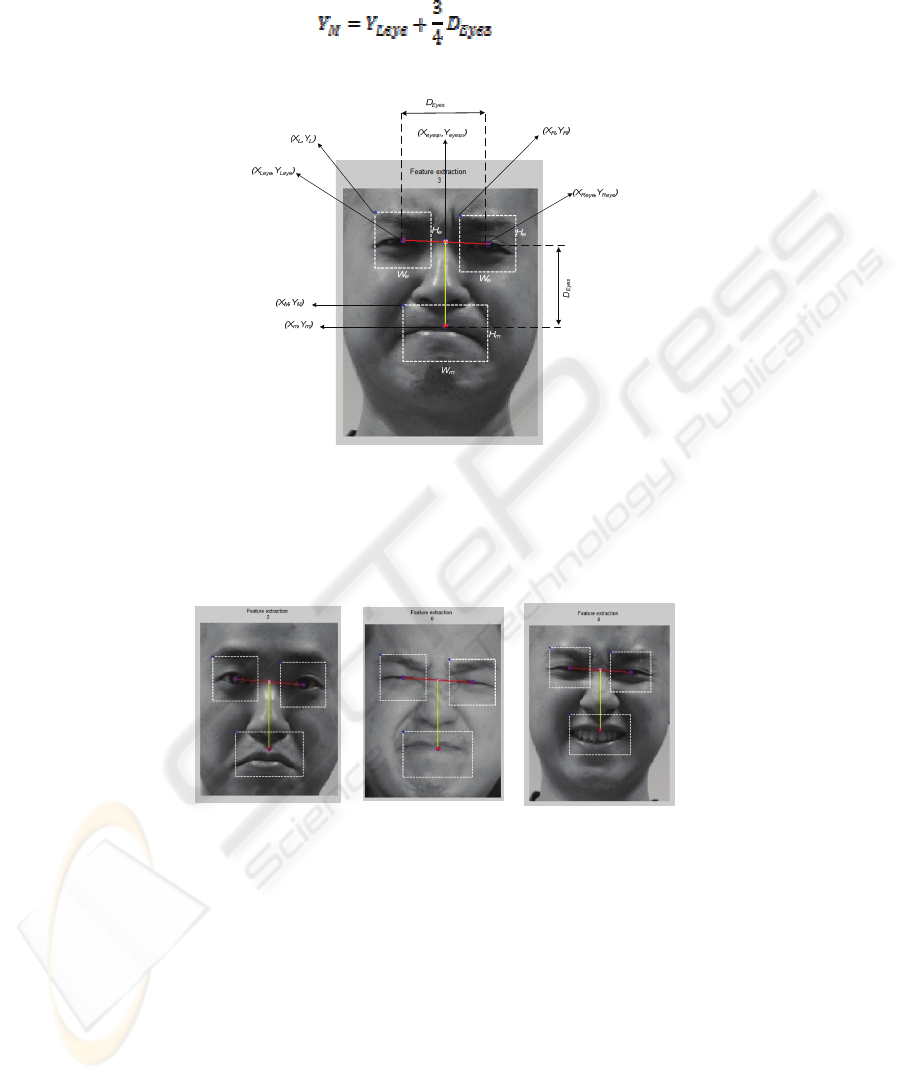

In this work, the facial features are segmented from the face image based on the estimated centroid points and the distance between the eyes. Fig. 5 illustrates the centroid point of the left eye, the centroid point of the right eye, the distance between the eyes, and the centroid point of the mouth.

Fig. 5. Final eye detection with the eye distance and the estimated mouth position.

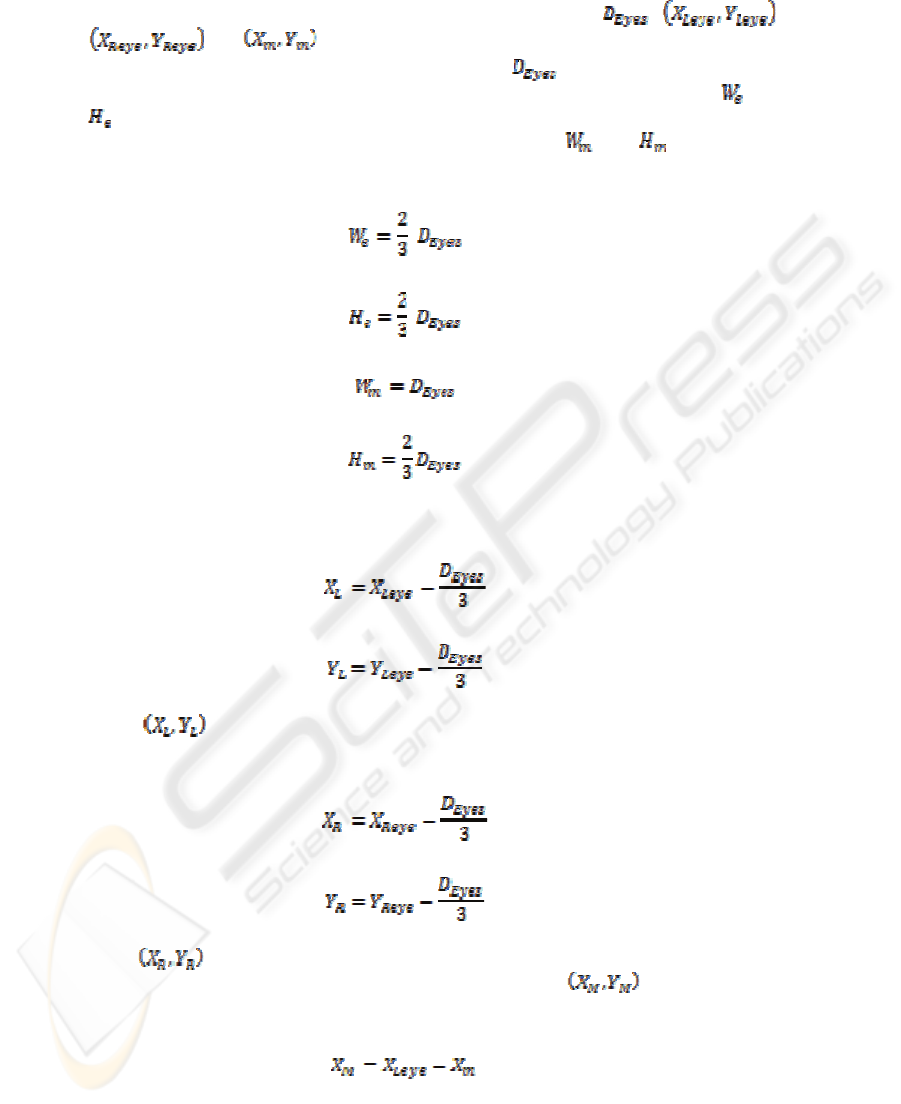

3 Facial Feature Boundaries


After the candidate facial features are detected, the computed distances are applied to evaluate the features’ boundaries. The boundaries are determined based on experimental evaluation. The width and height of the rectangle enclosing each facial feature are then calculated: equations (7) and (8) give the width and height of the rectangle of each eye, and equations (9) and (10) give the width and height of the rectangle of the mouth.

(7)

(8)

(9)

(10)

The upper-left corner coordinate of the left eye rectangle can be calculated as:

(11)

(12)

In the same way, the upper-left corner coordinate of the right eye rectangle can be calculated as:

(13)

(14)

Furthermore, the coordinate of the upper corner of the mouth rectangle can be calculated as:

(15)

(16)

The boundaries and the centroid points are illustrated in Fig. 6.

Fig. 6. The relationships and positions of facial detection.
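Because equations (7) to (16) are not reproduced here, the sketch below only illustrates the general idea of deriving feature rectangles from the centroid points and the eye distance: each rectangle is centred on its feature's centroid, with width and height proportional to the eye distance `d`. The four ratios (`eye_w`, `eye_h`, `mouth_w`, `mouth_h`) and the function names are hypothetical placeholders for the experimentally chosen values, not the paper's.

```python
def feature_box(center, width, height):
    """Axis-aligned rectangle (x0, y0, x1, y1) centred on a feature centroid."""
    cx, cy = center
    x0, y0 = cx - width / 2, cy - height / 2  # upper-left corner
    return (x0, y0, x0 + width, y0 + height)


def facial_boxes(p_left, p_right, p_mouth, d,
                 eye_w=0.6, eye_h=0.3, mouth_w=0.9, mouth_h=0.4):
    """Eye and mouth rectangles sized as (hypothetical) fractions of eye distance d."""
    return {
        "left_eye": feature_box(p_left, eye_w * d, eye_h * d),
        "right_eye": feature_box(p_right, eye_w * d, eye_h * d),
        "mouth": feature_box(p_mouth, mouth_w * d, mouth_h * d),
    }
```

Tying every size to `d` keeps the rectangles roughly scale-invariant: a face photographed closer to the camera has a larger eye distance and therefore proportionally larger boxes.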

Briefly, once the eyes are identified correctly, the mouth is detected from the distance between the eyes. The boundaries are then computed, as Fig. 7 shows.

Fig. 7. Boundaries of some facial features.

4 Experimental Results

The performance of this algorithm was tested on images of individuals captured as frontal faces using a digital camera at the same distance and under normal room lighting conditions. It is well known that locating the features exactly on each face is difficult because of differences in face structure and facial features. The proposed algorithm located the facial features by applying the erosion operation to the cropped greyscale face image; the distance between the pupils of the eyes was then computed, and any image in which the eyes were not located correctly was ignored. Eye detection is based on the darkest pixels of each eye. Once the eyes are localized, the mouth location is computed from the distance between the estimated pupils of both eyes. The boundaries of the salient facial features are then computed from their positions and the calculated distances.

This algorithm needs adjusting due to the presence of some incorrect detection of

the location of the eyes as a result of lighting and some occlusions such as glasses. A

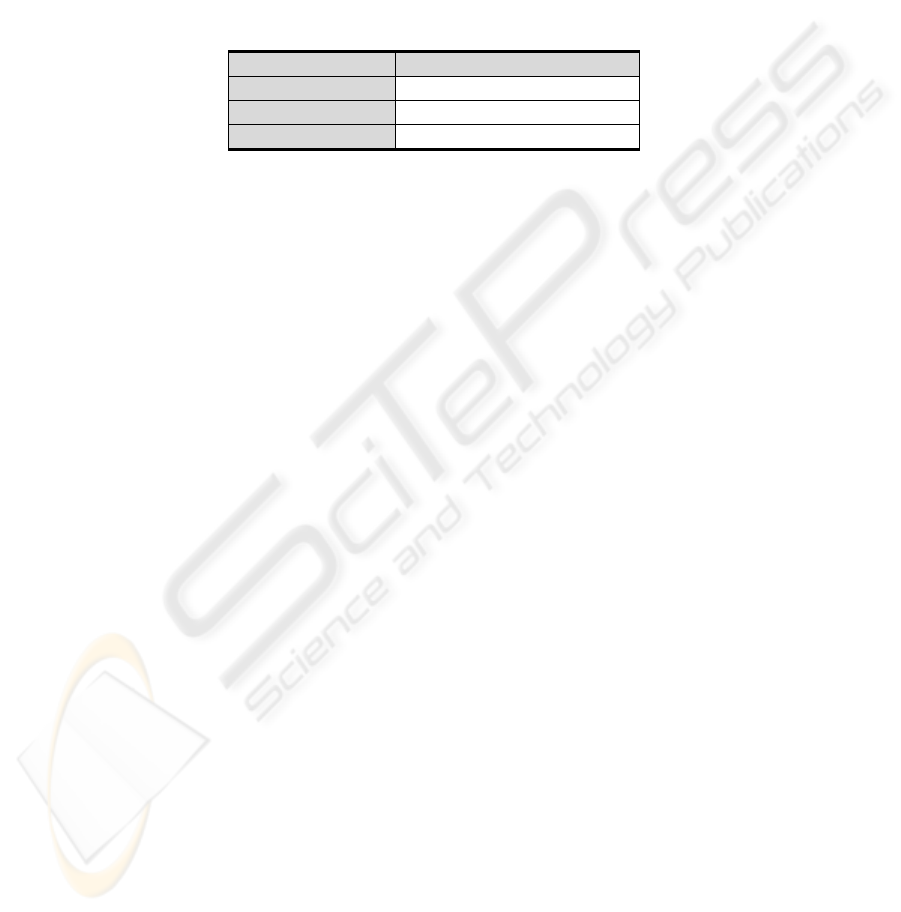

success rate of over 91% has been achieved based on a sample rate of 318 images.

The following table shows the ratio detection for every facial feature.

| Feature | Detection rate |
|---|---|
| Left eye | 96.3% |
| Right eye | 95.6% |
| Mouth | 91.9% |

The experimental results show that the eyes are located more accurately than the mouth. Therefore, further work is needed to increase the accuracy of feature localization.

5 Summary and Future Work

This work presents a new algorithm based on a morphological process that localizes the eyes and uses the distance between them to locate the mouth position. The method uses a morphological operation to extract the important contrast regions of the face. These features are robust to lighting changes.

Future work will concentrate on improving mouth detection to reduce the false detection rate. False eye detections can be reduced as well, which will increase the ratio of features detected. The outcome of this algorithm can be used in other facial systems such as facial expression analysis.

Acknowledgements. We would like to thank Mr. Xiao Guo, a PhD researcher in the Department of Computer Science and Technology, University of Bedfordshire, who kindly allowed the use of his photos in this paper.

References

1. Berbar, M. A., Kelash, H. M., Kandeel, A. A.: Faces and Facial Features Detection in Colour Images. IEEE Proceedings of the Geometric Modeling and Imaging. 7 (2006) 209-214

2. Gomez-Gil, P., Ramirez-Cortes, M., Gonzalez-Bernal, J., Pedrero, A. G., Prieto-Castro, C. I., Valencia, D.: A Feature Extraction Method Based on Morphological Operators for Automatic Classification of Leukocytes. Seventh Mexican International Conference on Artificial Intelligence. (2008) 213-219

3. Hannuksela, J., Heikkila, J., Pietikainen, M.: A real-time facial feature based head tracker. Proc. Advanced Concepts for Intelligent Vision Systems, Brussels, Belgium. (2004) 267-272

4. Hsu, R. L., Abdel-Mottaleb, M., Jain, A. K.: Face detection in colour images. IEEE Transactions on Pattern Analysis and Machine Intelligence. 24 (2002) 696-706

5. Intrator, N., Reisfeld, D., Yeshurun, Y.: Extraction of Facial Features for Recognition using Neural Networks. Proc. Int. Work on Automatic Face and Gesture Recognition. (1995) 260-265

6. Jiao, Z., Zhang, W., Tong, R.: A Method for Accurate Localization of Facial Features. First International Workshop on Education Technology and Computer Science. 3 (2009) 261-264

7. Lekshmi, V., Kumar, S., Vidyadharan, D. S.: Face Detection and Localization of Facial Features in Still and Video Images. First International Conference on Emerging Trends in Engineering and Technology. (2008) 95-99

8. Mahoor, M. H., Abdel-Mottaleb, M.: Facial Features Extraction in Colour Images Using Enhanced Active Shape Model. Automatic Face and Gesture Recognition. 4 (2006) 144-148

9. Maruf, M., Rezaei, M. R.: Pain Recognition Using Artificial Neural Network. IEEE International Symposium on Signal Processing and Information Technology. (2006) 28-33

10. Mohamed, R., Hashim, M. F., Saad, P., Yaacob, S.: Face Recognition using Eigenfaces and Neural Networks. American Journal of Applied Sciences. 2 (2006) 1872-1875

11. Phimoltares, S., Lursinsap, C., Chamnongthai, K.: Face detection and facial feature localization without considering the appearance of image context. Image and Vision Computing. 25 (2007) 741-753

12. Wong, K. W., Lam, K. M., Siu, W. C.: A robust scheme for live detection of human faces in colour images. Signal Processing: Image Communication. 18 (2003) 103-114

13. Yang, M. H., Kriegman, D. J., Ahuja, N.: Detecting Faces in Images: A Survey. IEEE Transactions on Pattern Analysis and Machine Intelligence. 24 (2002) 34-58

14. Zanella, V., Vargas, H., Rosas, L. V.: Automatic Facial Features Localization. Computational Intelligence for Modelling, Control and Automation. 1 (2005) 290-264
