USABILITY PROBLEMS IN A HOME TELEMEDICINE SYSTEM

Anders Bruun and Jan Stage

Department of Computer Science, Aalborg University, Selma Lagerlöfs Vej 300, DK-9220 Aalborg East, Denmark

Keywords: Home telemedicine, Usability evaluation, Usability problems.

Abstract: Home telemedicine systems have the potential to reduce health care costs and improve the quality of life for

many patients, including those suffering from chronic illness. This requires that the systems have

functionality that fulfils relevant needs. Yet it also requires that the systems have a high level of usability in

order to enable their users to employ the required functionality, especially if the target user group is elderly

people. This paper reports on a usability evaluation of a home telemedicine system. Five elderly persons

carried out specified tasks with the system, and based on that we identified usability problems with the

system. The problems are presented, analysed in relation to 12 different usability themes and related to

results from other evaluations of similar systems.

1 INTRODUCTION

There is growing interest in devices for home

telemedicine. At world level, the life expectancy will

increase from 2005 to 2050 to 67 years, and in

developing countries to 76.5 years (UN, 2006). This

has considerable consequences for healthcare

budgets. Another key challenge is that the number of

people with chronic illness is increasing and, due to

frequent checkups at hospitals, these patients face

reduced quality of life, as they have limited freedom

to perform their daily activities.

The aim of home telemedicine is to reduce health

care costs and at the same time increase the quality

of life for patients. Home telemedicine systems

allow patients to conduct measurements from their

own home (e.g. glucose measurements for diabetes

patients) and send the results to the hospital. Other

systems put even more emphasis on self-

management by supporting patients to take care of

their own treatment. If home telemedicine systems

are successful, they will reduce the workload of

medical staff at the hospitals and in the patients’

home, and relieve the patients from visits to the

hospital or even hospitalization (Kaufman et al.,

2003).

For home telemedicine systems to be successful,

they must be safe and provide the required

functionality. Many researchers have inquired into

these aspects. Unfortunately, there are numerous

examples of systems that fail despite having the

right functionality, because the prospective users

cannot use the system for its intended purpose. A

problematic or incomprehensible user interface is a

typical source of such problems.

Usability is a measure of the extent to which

prospective users are able to apply a system in their

activities (Rubin, 1994). A low level of usability

means that users cannot work out how to use a

system, no matter how elaborate its functionality is

(Nielsen, 1993).

The potential of home telemedicine systems can

only be realized if the systems have a high level of

usability. Thus a high level of usability is a

prerequisite for achieving savings on the healthcare

costs and a better quality of life for the patients

through use of home telemedicine systems. A high

level of usability is particularly important when the

main user group is elderly people, who may be

constrained by motor, perceptual, cognitive and

general health limitations (Fisk and Rogers, 2002)

and, in addition, may have a low level of computer

literacy.

1.1 Usability Evaluation of Health Care

Systems

A number of research activities have studied home

telemedicine systems and frameworks that aid in

reducing the societal and individual costs of

chronically ill elderly. The focus here has been on

the functionality that is required from such systems.

Examples are technology for ubiquitous biological

monitoring using mobile phones, wearable sensory

Bruun A. and Stage J. (2010).

USABILITY PROBLEMS IN A HOME TELEMEDICINE SYSTEM.

In Proceedings of the Third International Conference on Health Informatics, pages 302-309

DOI: 10.5220/0002744603020309

Copyright © SciTePress

devices, multimodal platforms, framework and

architectural descriptions and literature reviews of

observed medical effects (Eikerling et al., 2009;

Fensli and Boisen, 2008; Jaana and Paré, 2006;

Pascual et al., 2008; Sasaki et al., 2009; Sashima et

al., 2008; Souidene et al., 2009; Taleb et al., 2009).

The target user group of these systems is primarily

elderly people.

Kaufman et al. (2003) conducted a case study

where a home telemedicine system for elderly

diabetes patients was evaluated through interviews,

cognitive walkthrough and field usability testing.

The evaluated system featured video conferencing,

transmission of glucose and blood pressure readings,

email, online representation of clinical data and

access to educational materials. The study focuses

on a methodology for conducting usability

evaluation. It also provides a basic overview of

barriers such as individual competencies, system

usability issues and contextual variables. Two user

examples of these barriers are provided.

A significant number of studies deal with health

care systems where the target user group is

professional medical staff. This includes evaluation

of the usability of desktop, mobile and other

healthcare systems with the aim of reducing medical

errors introduced by technology. Examples are

systems designed for supporting handheld

prescription writing, decision support, ordering of

lab tests, patient records, family history tracking etc.

(Ginsburg, 2004; Johnson et al., 2004; Kushniruk et

al., 1996; Kushniruk and Patel, 2004; Kushniruk et

al., 2005; Linder et al., 2006; Peleg et al., 2009;

Peute and Jaspers, 2007).

The research results presented here represent

significant work on the needed functionality of home

telemedicine systems as well as on methods for

evaluating the usability of such systems. There is

also considerable work on usability problems

experienced with systems that are targeted at the

medical staff. Yet much less effort has been

devoted to the identification of usability problems in

systems targeted at patients.

1.2 Objective

In this paper, we present a study where we evaluated

the usability of a home telemedicine system targeted

at elderly people. The aim was to better understand

key usability problems that such users experienced

when using home telemedicine systems. A better

understanding of these problems is vitally important

for future design of home telemedicine systems with

a high level of usability.

In the following we describe the home

telemedicine system and the usability evaluation we

conducted with a group of elderly people (section 2).

Section 3 presents the results with focus on key

usability problems experienced by the users with this

specific system. In section 4, we discuss these

usability problems in relation to results found in

other studies in order to emphasize more general

problems for home telemedicine systems targeted at

patients. Finally, section 5 provides the conclusion.

2 METHOD

In this section, we describe our usability evaluation

of the home telemedicine system.

2.1 Usability Evaluation

System. The system was a telemedicine system

intended for home use by elderly people to monitor

their health. It included a Health Care System device

(HCS) for data collection and transmission with a

display, a speaker and four buttons for interaction,

see Figure 1. As the manufacturer of the HCS

wishes to remain anonymous we do not provide a

reference to the system evaluated.

Figure 1: Sketch of the data collection and transmission

device (HCS) of the evaluated healthcare system.

With secondary devices such as blood pressure

meter, blood sugar meter and scales, users are able

to conduct measurements at home and transfer these

to the HCS via Bluetooth, an infrared link or a serial

cable. At regular intervals, the device also asks the

patients various pre-programmed questions

regarding their health.

The system automatically transfers collected data

to a health care center, where a nurse, doctor or

other person is monitoring the health for a group of

elderly patients. The system is sent to the patients in

a package with a manual.

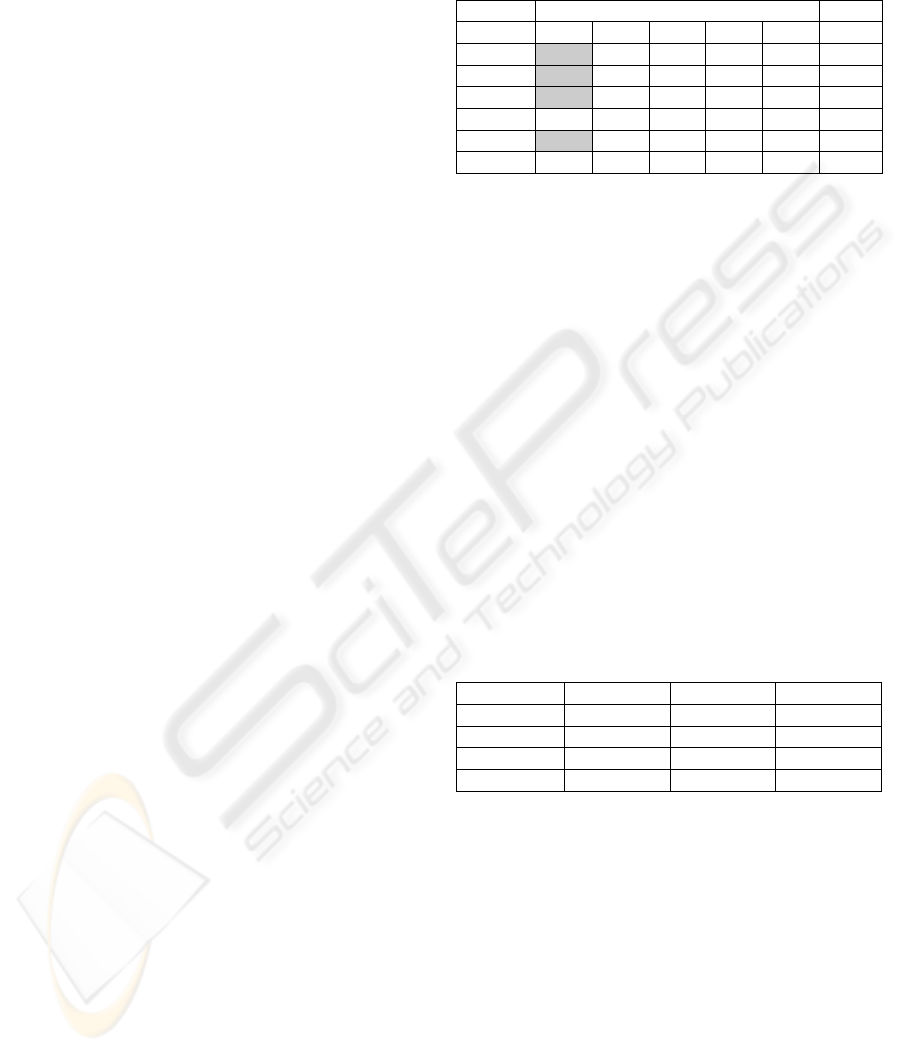

Setting. The tests were conducted in a usability

laboratory, see Figure 2. In Subject room 1, a test

participant was sitting at the table operating the

system. The test monitor was sitting next to the

participant, see Figure 3. Two data loggers and a

technician who controlled cameras and microphones

were in the control room during all tests.

Figure 2: The setting in the usability laboratory. Labels in the floor plan: Subject Room 1, Subject Room 2, Control Room, curtain, operator.

Participants. The system was evaluated with 4 male

and 1 female users. It should be noted that in the

area of Human Computer Interaction it is customary

to conduct formative usability evaluations using 5

test participants as this, from a cost/benefit point of

view, is the most feasible. This number is based on

studies conducted by Nielsen and Landauer (1993)

showing that by using 5 test participants evaluators

are able to identify 85 % of the total number of

usability problems.
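The 85 % figure can be traced to the problem-discovery model of Nielsen and Landauer (1993), in which the expected share of problems found by n participants is 1 - (1 - λ)^n. The sketch below is illustrative only. It assumes the average per-participant detection rate of λ = 0.31 reported by those authors, which was not measured in this study, and the function name is hypothetical.

```python
def share_of_problems_found(n_participants: int, detection_rate: float = 0.31) -> float:
    """Expected proportion of all usability problems found by n participants.

    Implements 1 - (1 - rate)^n. The default rate of 0.31 is an assumed
    average taken from Nielsen and Landauer (1993), not from this study.
    """
    if n_participants < 0:
        raise ValueError("n_participants must be non-negative")
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must lie between 0 and 1")
    return 1.0 - (1.0 - detection_rate) ** n_participants


if __name__ == "__main__":
    # Show how quickly the curve flattens as participants are added.
    for n in range(1, 9):
        print(f"{n} participants: {share_of_problems_found(n):.1%}")
```

With the assumed rate, five participants land at roughly 84 to 85 %, and each further participant adds progressively less, which is the cost/benefit argument referred to above.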

Since the system primarily is intended for use by

elderly people, we selected test subjects ranging

from 61 to 78 years of age. None of them had

previous experience with this or any similar system.

Their experience in using electronic equipment in

general varied; two were novices, two were slightly

experienced and the last was experienced.

Table 1: Task assignments used in the usability tests.

| Task # | Task |
|---|---|
| 1 | Connect and install the HCS and secondary devices. |
| 2 | Transfer the data from the blood sugar meter to the HCS. The blood sugar meter is connected using a cable. |
| 3 | Measure the weight and transfer the data from the scale to the HCS. |
| 4 | A new wireless blood sugar meter is used. Transfer the data from this to the HCS. |
| 5 | Clean the equipment. |

Six usability evaluators were involved, all

graduate students specializing in human-computer

interaction and working on their master's theses. They

were all experienced in conducting usability

evaluations. None of them had worked with health

care systems before, and none of them knew the

product in advance. In the evaluation, one of them

served as test monitor in all five tests, and two

served as loggers.

Procedure. Before the test started, the test

participants were asked to fill in a questionnaire with

demographic information. The test monitor then

introduced the system and evaluation procedure.

This included an introduction to the think-aloud

protocol. The tasks were given to the test subjects

one at a time. The test monitor’s job was primarily

to ensure that the test participants were thinking

aloud and give them advice if they got completely

stuck in a task. There were five tasks, see Table 1.

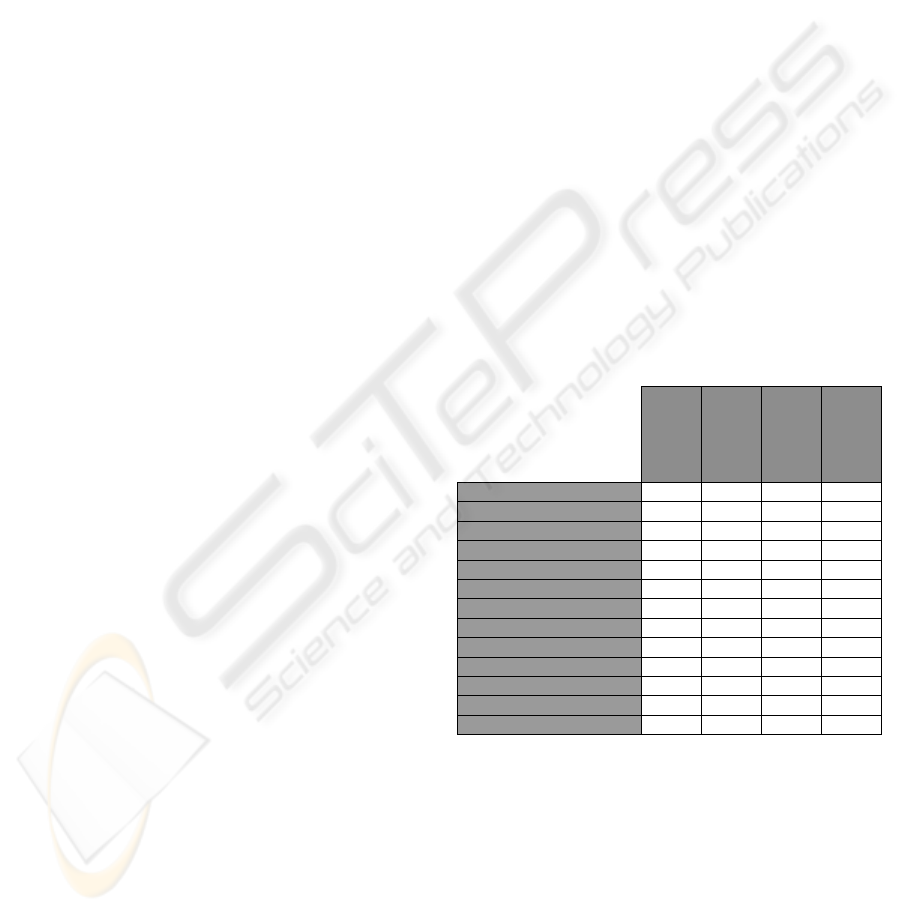

Data Collection. All test sessions were recorded

using video cameras and a microphone. The videos

showed the HCS screen and a small picture in

picture with the user’s face, see Figure 3. We

recorded a total of four hours of video. Two loggers

made written log files during the tests.

Figure 3: A test participant and the test monitor. The

picture is from the video recording. The small picture in

the upper right hand corner shows the interaction.

Data Analysis. The data analysis was carried out

separately applying two different analysis methods:

Video Based Analysis (VBA) and Instant Data

Analysis (IDA), see the following two subsections.

The purpose of using two methods was to get as rich

and extensive a problem list as possible. Each team

employed the procedure described below.

The problem lists from the VBA and IDA

analysis were merged into a total list of identified

usability problems. The test monitor and the data

logger from IDA and the three evaluators from VBA

did this. Disagreements were discussed until

consensus was reached. In cases where the VBA and

IDA lists did not have the same categorization for a

particular problem, the proper categorization was

discussed until agreement was reached. In this

process, some problems were split into more

detailed problems or merged with other problems.

2.2 Video-based Analysis (VBA)

The Video-Based Analysis was conducted in

accordance with Rubin (1994). The three evaluators

HEALTHINF 2010 - International Conference on Health Informatics

analysed the video material individually and made a

list of identified usability problems. The severity of

each problem was also categorised as either

“critical”, “serious” or “cosmetic” (Molich, 2000).

The three lists of usability problems were

discussed in the team and merged into one list of

VBA problems. When there was disagreement or

doubt whether problems should be combined or

split, or how they should be categorized, the video

material was reviewed and discussed until

agreement was reached. This included agreement

about the test subjects who experienced each

problem. To measure the evaluator effect, we

calculated the any-two agreement. The result was

40.2%, which is well above the minimum of 6% and

close to the 42% maximum found in other studies

(Hertzum and Jacobsen, 2003).
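The any-two agreement measure of Hertzum and Jacobsen (2003) is the average, over all pairs of evaluators, of the number of problems both found divided by the number of problems either found. A minimal sketch of that calculation follows. The three problem sets are invented for illustration and are not the evaluators' actual lists, and the function name is hypothetical.

```python
from itertools import combinations


def any_two_agreement(problem_sets):
    """Average of |Pi & Pj| / |Pi | Pj| over all pairs of evaluators."""
    pairs = list(combinations(problem_sets, 2))
    if not pairs:
        raise ValueError("at least two evaluators are required")
    ratios = []
    for first, second in pairs:
        union = first | second
        # Two empty lists agree trivially; avoid division by zero.
        ratios.append(len(first & second) / len(union) if union else 1.0)
    return sum(ratios) / len(ratios)


if __name__ == "__main__":
    # Hypothetical problem identifiers for three evaluators.
    evaluator_a = {"P1", "P2", "P3", "P4"}
    evaluator_b = {"P2", "P3", "P5"}
    evaluator_c = {"P1", "P3", "P4", "P6"}
    agreement = any_two_agreement([evaluator_a, evaluator_b, evaluator_c])
    print(f"any-two agreement: {agreement:.1%}")
```

A value of 100 % would mean that every pair of evaluators reported exactly the same problems, while 0 % would mean that no two evaluators shared a single problem.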

2.3 Instant Data Analysis (IDA)

The test monitor, one of the data loggers and a

facilitator, who did not observe the tests, conducted

this analysis immediately after all test sessions were

completed. The analysis was conducted according to

the IDA method (Kjeldskov et al., 2004).

The IDA analysis involved three steps:

brainstorm, task review and note review. During

these steps, the facilitator noted and organized all

usability problems on the whiteboard as they were

identified by the test monitor and the logger. After

completing the third step, the problems were

categorized as “critical”, “serious” or “cosmetic”

with the same definition as in the VBA analysis.

Finally, the test monitor and logger left, and the

facilitator wrote up the list of usability problems

from the notes on the whiteboard. The list was

validated and corrected by the test monitor and data

logger the following day.

3 RESULTS

This section describes the usability problems we

identified in the evaluation of the HCS device.

3.1 Task Completion Time and Rate

Table 2 provides an overview of the time it took

participants to complete each task. A grey cell

indicates that a particular participant was unable to

solve this task on his/her own and therefore received

significant help from the test monitor; this is referred

to as task completion rate. All users spent more time

completing task 1 (connection and installation)

compared to any other task, and 4 out of the 5 users

could not complete this task without help.

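Table 2: Task completion time and completion rate.

| User | Task 1 | Task 2 | Task 3 | Task 4 | Task 5 | Total |
|---|---|---|---|---|---|---|
| 1 | 33:25 | 10:10 | 08:47 | 07:37 | 01:15 | 1:01:14 |
| 2 | 33:44 | 09:34 | 04:30 | 04:54 | 01:00 | 53:42 |
| 3 | 28:25 | 02:26 | 02:45 | 05:08 | 01:24 | 40:08 |
| 4 | 18:43 | 02:43 | 04:24 | 04:07 | 01:19 | 31:16 |
| 5 | 26:05 | 01:06 | 03:31 | 04:45 | 00:41 | 36:08 |
| Average | 28:09 | 05:12 | 05:35 | 05:18 | 01:08 | 45:22 |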
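The Total column of Table 2 can be reproduced by adding up each participant's five task times. The sketch below does this under the assumption that each row of the table holds one user and each column one task. The helper names are hypothetical.

```python
def to_seconds(mmss: str) -> int:
    """Convert a 'mm:ss' string to a number of seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def format_duration(total_seconds: int) -> str:
    """Format seconds as 'mm:ss', or 'h:mm:ss' from one hour upwards."""
    hours, rest = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rest, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{seconds:02d}"
    return f"{minutes:02d}:{seconds:02d}"


def user_total(task_times) -> str:
    """Sum one user's task times and return the formatted total."""
    return format_duration(sum(to_seconds(t) for t in task_times))


# Task times per user as listed in Table 2 (tasks 1 to 5).
TASK_TIMES = {
    1: ["33:25", "10:10", "08:47", "07:37", "01:15"],
    2: ["33:44", "09:34", "04:30", "04:54", "01:00"],
    3: ["28:25", "02:26", "02:45", "05:08", "01:24"],
    4: ["18:43", "02:43", "04:24", "04:07", "01:19"],
    5: ["26:05", "01:06", "03:31", "04:45", "00:41"],
}

if __name__ == "__main__":
    for user, times in TASK_TIMES.items():
        print(f"User {user}: {user_total(times)}")
```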

There are noticeable differences between the

time each participant spent on completing the other

four tasks. For task 2, the time varies between 1 and

10 minutes. If a user is facing problems, it is very

difficult to recover. For task 3, the variation is

almost as large. For tasks 4 and 5, there is very

little variation. Tasks 2 and 4 are the same except

that task 2 is with a wired device, while task 4 is

with a wireless device. The difference may be due to

the wireless connection; however, there may also be

a learning effect from task 2 to 4.

3.2 Identified Usability Problems

Table 3 shows the number of critical, serious and

cosmetic problems identified using the VBA and

IDA evaluation methods. By merging the VBA and

IDA problem lists we identified a total of 51

usability problems.

Table 3: Number of identified usability problems.

| | VBA | IDA | Total |
|---|---|---|---|
| Critical | 14 | 15 | 14 |
| Serious | 15 | 13 | 15 |
| Cosmetic | 18 | 7 | 20 |
| Total | 47 | 35 | 51 |

3.3 Usability Themes

To get a better understanding of the different types

of usability problems, we have categorized them in

terms of 12 different usability themes. Below, we

briefly explain the meaning of each theme based on

Nielsen et al. (2006).

Affordance relates to issues concerning the user's

perception versus the actual properties of an object

or interface.

Cognitive load regards the cognitive efforts

necessary to use the system.

Consistency concerns the consistency in labels,

icons, layout, wording, commands etc. on the

different screens.

Ergonomics covers issues related to the physical

properties of interaction.

Feedback regards the manner in which the

interface relays information back to the user on an

action that has been done and notifications about

system events.

Information covers the understandability and

amount of information presented by the interface at

a given moment.

Interaction styles concerns the design strategy

and determines the structure of interactive resources

in the interface.

Mapping is about the way in which controls and

displays correlate to natural mappings and should

ideally mimic physical analogies and cultural

standards.

Navigation regards the way in which the users

navigate from screen to screen in the interface.

Task flow relates to the order of steps in which

tasks ought to be conducted.

User’s mental model covers problems where

the interactive model, developed by the user during

system use, does not correlate with the actual model

applied in the interface.

Visibility regards the ease with which users are

able to perceive the available interactive resources at

a given time.

3.4 Distribution of Identified Problems

Table 4 shows the total number of identified

usability problems distributed on usability themes

and severity ratings. This shows that the users

experienced problems from almost all categories,

except cognitive load and interaction style.

The highest numbers of problems relate to

information and user’s mental model which account

for 17 and 10 problems respectively. Collectively

these themes include 53 % of all identified usability

problems of which 7 are critical, 11 serious and 9

cosmetic. The remaining 10 problems (20 %) relate

to the themes affordance (4), consistency (1),

ergonomics (2), mapping (1), navigation (1) and task

flow (1).

One of the problems related to the information

theme concerned the user manual, which illustrates

two possible ways of connecting the HCS to the

phone line. In the manual layout, the illustrations

were placed on opposite sides of an A5 brochure,

which some of the participants interpreted as a

sequence of steps. This resulted in some participants

trying to connect the device like in the first picture

and afterwards connecting the HCS as described by

the second illustration.

A problem related to the user’s mental model

was identified during connection of the Bluetooth

scale where participants were looking for a cable to

connect this to the HCS, thereby exhibiting that they

did not know how to connect these two devices.

14 problems relate to the themes feedback and

visibility (7 in each theme) and account for 27 % of

the total number of problems. 4 of these problems

are critical, 3 serious and 7 cosmetic.
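The percentages quoted for the theme groups follow directly from the per-theme totals. The sketch below recomputes them from the counts stated in the text and in the Total column of Table 4. The dictionary and function names are hypothetical.

```python
# Number of problems per usability theme, as reported in the text and Table 4.
PROBLEMS_PER_THEME = {
    "affordance": 4,
    "cognitive load": 0,
    "consistency": 1,
    "ergonomics": 2,
    "feedback": 7,
    "information": 17,
    "interaction style": 0,
    "mapping": 1,
    "navigation": 1,
    "task flow": 1,
    "user's mental model": 10,
    "visibility": 7,
}


def share_of_total(themes, counts=PROBLEMS_PER_THEME) -> float:
    """Percentage of all identified problems that fall in the given themes."""
    total = sum(counts.values())
    return 100.0 * sum(counts[theme] for theme in themes) / total


if __name__ == "__main__":
    groups = {
        "information + mental model": ["information", "user's mental model"],
        "feedback + visibility": ["feedback", "visibility"],
        "remaining themes": ["affordance", "consistency", "ergonomics",
                             "mapping", "navigation", "task flow"],
    }
    for label, themes in groups.items():
        print(f"{label}: {share_of_total(themes):.1f} %")
```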

One of the problems with missing feedback was

identified when participants had answered all of the

pre-programmed questions. When the questions

were completed, the display showed the idle screen

with the company logo and did not provide feedback

to the users of whether they were finished or not.

This resulted in some of the users looking for a way

to finish and others thought they needed to answer

more questions.

A visibility related issue concerned the volume

buttons on the HCS. A participant wanted to

manipulate the volume and could not find the button,

and therefore he tried pressing all other buttons on

the device (“Y”, “N”, up and down) but with no

result.

Table 4: Total number of identified problems distributed

according to usability themes and severity.

Critical

Serious

Cosmetic

Total

Affordance

2 2 4

Cognitive load

Consistency

1 1

Ergonomics

2 2

Feedback

1 3 3 7

Information

5 8 4 17

Interaction style

Mapping

1 1

Navigation

1 1

Task flow

1 1

User’s mental model

2 3 5 10

Visibility

3 4 7

Total

14 15 20 51

3.5 Connection and Installation

As illustrated in Table 2 above, the task completion

times and completion rates indicate that connection

and installation of the HCS (task 1) was particularly

tedious and problematic.

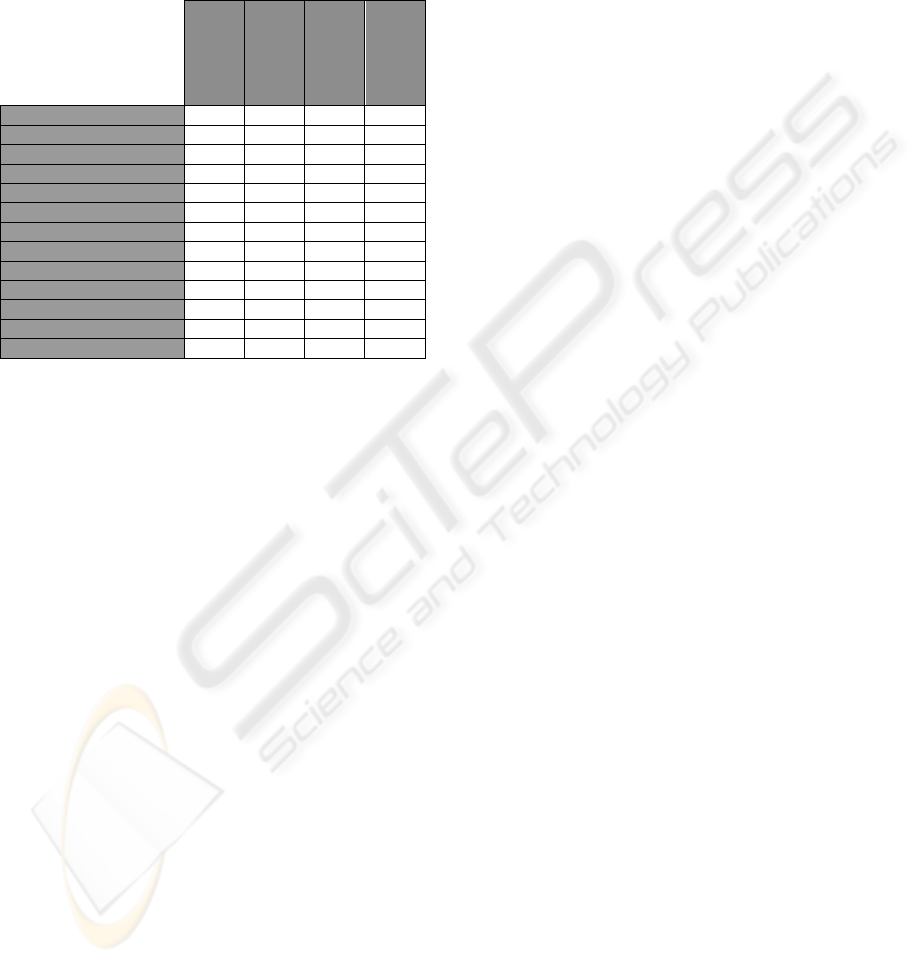

Table 5 shows the distribution of usability

problems on themes, but only for task 1. Thus it

represents a subset of the numbers in Table 4. This

illustrates that 32 of the 51 problems (63 %) were

identified during connection and installation of the

device (task 1). Almost all critical and serious

problems were found during this task: 11 of the 14

critical problems (79 %), 12 of the 15 serious

problems (80 %) and 9 of the 20 cosmetic problems

(45 %).

Table 5: Identified problems related to task 1: connecting

and installing the device.

Critical

Serious

Cosmetic

Total

Affordance

1 1 2

Cognitive load

Consistency

Ergonomics

1 1

Feedback

1 1 1 3

Information

3 8 4 15

Interaction style

Mapping

1 1

Navigation

1 1

Task flow

User’s mental model

2 2 2 6

Visibility

3 3

Total

11 12 9 32

For this task, most problems also relate to

information and user’s mental model. The 15

information problems found in task 1 comprise all

serious and cosmetic problems in this theme and 3

of its 5 critical problems. When considering

the 6 problems related to the user’s mental model,

we found that all critical (2) and almost all serious

problems (2 of 3) were encountered in task 1.

The remaining 11 problems identified in task 1

were distributed on affordance (2), ergonomics (1),

feedback (3), mapping (1), navigation (1) and

visibility (3). It is worth noting that all critical

problems relating to these themes were observed in

task 1.

As an example of an information-related

problem in task 1, most of the participants did not

understand the text “Detecting phone line” displayed

during setup. This resulted in several participants

lifting the nearby phone receiver and pressing

various buttons on the HCS.

Another example regarding information was the

term “Line”, which was represented on the back of

the device and in the manual, but it made no sense to

the participants.

One of the problems regarding the user’s mental

model was when the users, in order to install the

HCS, had to connect a cable from the phone line in

the wall to the correct port on the HCS device in

order to communicate with the remote server.

However, the participants did not know which cable

to use and some mistakenly tried to connect the

phone and the HCS.

Another problem concerning the user’s mental

model was identified when the system asked the user

to input a phone line prefix to bypass local in-

building phone numbers. In our case, the users

needed to press “0” as prefix in order to make the

HCS able to communicate with a server outside the

building. This prefix had to be selected on the HCS,

but some participants pressed “0” on the phone with

no result.

3.6 Qualitative Interviews

As part of the usability evaluation, we conducted a

post-test interview with each user. The users

emphasized that they “felt there were too many

devices and cords that needed to be connected

before the HCS was operational”. It was also stated

that the printed manual should be “redesigned and

include more and better illustrations like the ones

known from Lego and Ikea manuals”.

Comments about technical lingo emphasized that

words like “Detecting” and “Initializing” were

unknown to the participants. Some also expressed

that they needed more system feedback on what to

do; one of the participants said: “When the device

does this and that, I need further instructions on how

to respond”. The missing ability to undo an

action was commented on by some of the

participants; one stated that “I pressed the wrong

button when setting the date, but I was unable to go

back and correct the error”.

The interviews also revealed issues not identified

during data analysis of usability problems. Most

participants noted that they received no information

about when and how the HCS was sending results to

the hospital or care center. They were unsure

whether the results were sent automatically or not.

The fonts on the display were perceived as clear

and easy to read; however, some participants

expressed that the soft menus (menus placed in the

bottom area of the display mapping to the “Y” and

“N” buttons, see Figure 1) were hard to read. This

was caused by the relatively steep edge connecting

the plastic cover and the display. The participants

felt that they had to move closer to the device when

reading the soft menu texts.

On the positive side all participants said that the

HCS had good potential. Some expressed “Once the

system is connected it would be easy to use”. They

would all prefer to use this type of device compared

to hospital visits.

4 DISCUSSION

In this section we discuss our findings in relation to

other studies with usability evaluation of health care

systems.

We have found one previous evaluation of a

system for use by patients. This is Kaufman et al.

(2003) who asked their users to solve the following

tasks using a home telemedicine unit (HTU):

Measure glucose level, make blood pressure

readings, access an educational website, send an

email and change the calendar. These tasks differ

partly from our study, but there is an overlap in the

blood glucose reading task. Although most tasks

differed, we identified some similar problems. They

identified problems related to unnecessarily complex

tasks, which can be compared to the problems we

found during connection and installation of the HCS.

Their study also revealed problems concerning non-

transparent screen transitions, which are comparable

to our problems with missing feedback. We

experienced system crashes and restarts that

frustrated several participants, and Kaufman et al.

likewise noted issues regarding system instability. Information-related

problems were experienced both in their study,

where the users did not understand the blood

pressure expression “212/89” referring to the

systolic and diastolic values, and in our study, where

the users, for example, did not understand the terms

“initializing” and “detecting”.

Mapping problems were also found in both studies.

Their users could not establish a correspondence

between a set of numbers presented one way on the

blood pressure meter and another way in the PC

application. In our case the users could not establish

a connection between illustrations in the manual and

the actual layout of the physical system. Finally,

both studies identified issues related to the user’s

mental model and visibility.

Kaufman et al. (2003) conducted a nonverbal

analysis through comprehensive microanalysis,

which provided further evidence on participant

experiences. As noted in the paper, nonverbal

analysis is especially useful in situations where

indexicality is challenging, e.g. where the users lack

a clear vocabulary when referencing interface

objects like “scroll bar”, “drop down menu”, “check

box”, “radio button” etc. This type of microanalysis

is important in order to cover as many usability

problems as possible. Yet it is also extremely time

consuming and expensive, which is acknowledged

by the authors.

There are a number of studies that focus on

usability evaluation of systems designed for use by

medical staff (Kushniruk et al., 1996; Kushniruk and

Patel, 2004; Kushniruk et al., 2005). In these studies,

the usability problems they have identified are

distributed over a set of usability themes with

various levels of abstraction ranging from

information content, procedure (task flow),

comprehension of graphics and text (user’s mental

model) and overall system understandability to data

entry and printing. The results from these studies

confirm that information-related problems were

observed in all of these. However, the percentage of

information-related problems differs considerably

from our study, where it is considerably higher. In

Kushniruk et al. (2005) 16% of the identified

problems are information related, while the number

is 7% in Kushniruk and Patel (2004) and Kushniruk

et al. (1996) (these are based on the same

experiment). In our results, 33% of the identified

problems were information related. For problems

regarding the user’s mental model (comprehension)

Kushniruk et al. (1996) observed 5% in this

category. In our study this was 20%, thus we

observed a relatively higher amount of this type of

usability problem. In Kushniruk et al. (2005) and

Kushniruk et al. (1996) the researchers found task

flow related problems (procedure/operation

sequence) to account for 15% and 6% respectively. Our study

showed a lower percentage of 2% regarding task

flow issues. The majority of issues identified in

Kushniruk et al. (2005) were visibility related with a

higher percentage of 26% compared to the 14% in

our study. Thus, considering usability themes from

systems designed for medical professionals, we

identified a relatively high number of information

related problems. This is also the case for problems

concerning the user’s mental model. On the other

hand we observed a relatively low number of task

flow and visibility related issues.

5 CONCLUSIONS

In this paper, we have presented results from a

usability evaluation of a home telemedicine system.

The purpose of this was to emphasize key problems

that designers of such systems should be aware of.

We identified the major usability problems to be

within these five categories: Difficult to connect and

install the system, the information provided is

difficult to understand, the system does not conform

to the user’s mental model, the feedback is

insufficient and the resources of the system are not

visible to the user.

This result is restricted in the sense that it is

based on a usability evaluation of a single system

with five users. However, we have compared our

results to the limited number of related research

results. We found a single evaluation of a system

targeted at patients, like the one we have evaluated,

and there are a few evaluations of systems targeted

at medical staff. Both types of evaluations confirm

the key problems we have identified.

This study has uncovered a surprisingly high

number of usability problems given that the system

has a fairly simple functionality. It would be

interesting to conduct usability evaluations of other

systems targeted at patients, in particular of more

complex systems. It seems like the challenges for

designers of home telemedicine systems are

significant.

REFERENCES

Eikerling, H-J., et al., 2009. Ambient Healthcare Systems -

Using the Hydra Embedded Middleware for Implementing

an Ambient Disease Management System. In

Proceedings of the Second International Conference

on Health Informatics. INSTICC Press.

Fensli, R., Boisen, E., 2008. Human Factors Affecting the

Patient’s Acceptance of Wireless Biomedical Sensors. In

Biomedical Engineering Systems and Technologies,

International Joint Conference. Springer. INSTICC

Press.

Fisk, A. D., Rogers, W. A., 2002. Health Care of Older Adults:

The Promise of Human Factors Research. In Human

factors interventions for the healthcare of older adults.

Lawrence Erlbaum.

Ginsburg, G., 2004. Human Factors Engineering: A Tool

for Medical Device Evaluation on Hospital

Procurement Decision-Making. In Journal of

Biomedical Informatics. Elsevier.

Hertzum, M., Jacobsen, N. E., 2003. The Evaluator

Effect: A Chilling Fact About Usability Evaluation

Methods. In International Journal of Human

Computer Interaction. Taylor & Francis.

Jaana, M., Paré, G., 2006. Home Telemonitoring of

Patients with Diabetes: A Systematic Assessment of

Observed Effects. In Journal of Evaluation in Clinical

Practice. Blackwell Publishing.

Johnson, C. M., et al., 2004. A User-Centered Framework

for Redesigning Health Care Interfaces. In Journal of

Biomedical Informatics, Elsevier.

Kaufman, D. R., et al., 2003. Usability in the Real World:

Assessing Medical Information Technologies in

Patient’s Homes. In Journal of Biomedical

Informatics, Elsevier.

Kjeldskov, J., et al., 2004. Instant Data Analysis:

Evaluating Usability in a Day. In Proceedings of

NordiCHI 2004. ACM Press.

Kushniruk, A. W., et al., 1996. Cognitive Evaluation of

the User Interface and Vocabulary of an Outpatient

Information System. In Proceedings of AMIA Annual

Fall Symposium.

Kushniruk, A.W., et al., 2005. Technology Induced Error

and Usability: The Relationship Between Usability

Problems and Prescription Errors When Using a

Handheld Application. In International Journal of

Medical Informatics, Elsevier.

Kushniruk, A.W., Patel, V. L., 2004. Cognitive and

Usability Engineering Methods for the Evaluation of

Clinical Information Systems. In International Journal

of Medical Informatics, Elsevier.

Linder, J. A., et al., 2006. Decision support for acute

problems: The role of the standardized patient in usability

testing. In Journal of Biomedical Informatics.

Elsevier.

Molich, R., 2000. User-Friendly Web Design (in Danish),

Ingeniøren Books. Copenhagen.

Nielsen, C. M., et al., 2006. It’s Worth the Hassle! The

Added Value of Evaluating the Usability of Mobile

Systems in the Field. In Proceedings of NordiCHI.

ACM Press.

Nielsen, J., 1993. Usability Engineering. Morgan

Kaufmann. San Diego.

Nielsen, J., Landauer, T. K., 1993. A mathematical

model of the finding of usability problems. In

Proceedings of the SIGCHI conference on Human

factors in computing systems. ACM Press.

Pascual, J., et al., 2008. Intelligent System for Assisting

Elderly People at Home. In Proceedings of the First

International Conference on Health Informatics.

INSTICC Press.

Peleg, M., et al., 2009. Using Multi-Perspective

Methodologies to Study Users’ Interactions with the

Prototype Front End of a Guideline-Based Decision

Support System for Diabetic Foot Care. In

International Journal of Medical Informatics,

Elsevier.

Peute, L.W., Jaspers, M.W., 2007. The significance of a

usability evaluation of an emerging laboratory order

entry system. In International Journal of Medical

Informatics, Elsevier.

Rubin, J., 1994. Handbook of Usability Testing, John

Wiley & Sons. New York.

Taleb, T., et al., 2009. ANGELAH: A Framework for

Assisting Elders at Home. In IEEE journal on selected

areas in communications. IEEE.

Sasaki, J., et al., 2009. Experiments of Life Monitoring

Systems for Elderly People Living in Rural Areas. In

Proceedings of the Second International Conference

on Health Informatics. INSTICC Press.

Sashima, A., et al., 2008. Toward Mobile Healthcare

Services by Using Everyday Mobile Phones. In

Proceedings of the First International Conference on

Health Informatics. INSTICC Press.

Souidene, W., et al., 2009. Multi-Modal Platform for

In-Home Healthcare Monitoring (EMUTEM). In

Proceedings of the Second International Conference

on Health Informatics. INSTICC Press.

United Nations, 2006. World Population Prospects.

Dept of Economic and Social Affairs. Obtained at:

http://www.un.org/esa/population/publications/wpp2006/WPP2006_Higlights_rev.pdf

(downloaded August 20, 2009).
