STUDY ON ACQUISITION OF LECTURER AND STUDENTS ACTIONS IN THE CLASSROOM

Mayumi Ueda, Hironori Hattori, Yoshitaka Morimura

Graduate School of Informatics, Kyoto University, Yoshida Nihonmatsu-cho, Sakyo-ku, Kyoto, Japan

Masayuki Murakami¹, Takafumi Marutani², Koh Kakusho³, Michihiko Minoh³

¹Research Center for Multi-Media Education, Kyoto University of Foreign Studies, 6 Kasame-cho, Saiin, Ukyo-ku, Kyoto, Japan

²Graduate School of Law, Kyoto University, Yoshida Honmachi, Sakyo-ku, Kyoto, Japan

³Academic Center for Computing and Media Studies, Kyoto University, Yoshida Nihonmatsu-cho, Sakyo-ku, Kyoto, Japan

Keywords: Lecture archiving system, Students’ viewpoint, Detecting student’s face, Faculty development.

Abstract: Many lecture archiving systems now provide lecture video, presentation slides and the text/figures written on the whiteboard. However, the contents of these systems are decided by the service provider. In this paper, we propose a new lecture archiving method based on the students' viewpoint: our method archives the material that the majority of students are watching. We examine the feasibility of the proposed method in a preliminary experiment. Furthermore, we consider that our technology for acquiring lecturer and student actions helps lecturers recognize students' ROI (Region of Interest) in the classroom.

1 INTRODUCTION

With the development of information technology in recent years, web-based learning systems, including lecture archiving systems, have become popular, and the adoption of systems that support teaching and learning activities has been increasing rapidly in higher educational institutions. Many of these institutions now distribute lecture materials, including presentation slides and lecture video, through lecture archiving systems, OCW (OpenCourseWare) and lecturers' own web sites.

Nakamura et al. work on creating lecture content that attracts students' attention (Nakamura et al., 2006); their system highlights important terms in step with the tempo of the lecturer's explanation. Nagai works on lecture recording that uses an HDV (high-definition video) camera and virtual camera work (Nagai, 2008). Le et al. propose a method for automatically generating digests of presentation videos recorded by commercial software (Le et al., 2008). Many commercial software products can also easily convert footage of the lecturer and presentation slides into web content and deliver it over the Internet.

Many lecture archiving systems contain footage of the lecturer, presentation slides, the text/figures written on the whiteboard, and the interaction between lecturer and students, and they provide lecture information that combines these materials. Existing lecture archiving systems 1) provide footage of the lecturer that follows the lecturer's movement, 2) fix the camera direction beforehand, or 3) edit the video after the lecture. The contents of these systems are decided by the providers, such as the lecturer, teaching assistants and system administrators. Existing services do not consider the interaction between lecturer and students that occurs in the classroom.

In higher educational institutions, interaction between lecturer and students is the most basic activity, and the classroom is the primary place for such interaction. In this paper, we focus on students' face orientation as one kind of non-verbal interaction that occurs in the classroom. We propose a new method that generates lecture archive content based on students' attention, as indicated by their face orientation. We consider that our acquisition technology helps lecturers grasp students' condition and ROI (Region of Interest), and that lecturers can use information about students' attention to improve their lecture methods.

Ueda M., Hattori H., Morimura Y., Murakami M., Marutani T., Kakusho K. and Minoh M. (2009). STUDY ON ACQUISITION OF LECTURER AND STUDENTS ACTIONS IN THE CLASSROOM. In Proceedings of the First International Conference on Computer Supported Education, pages 448-451. DOI: 10.5220/0001982304480451

Copyright © SciTePress

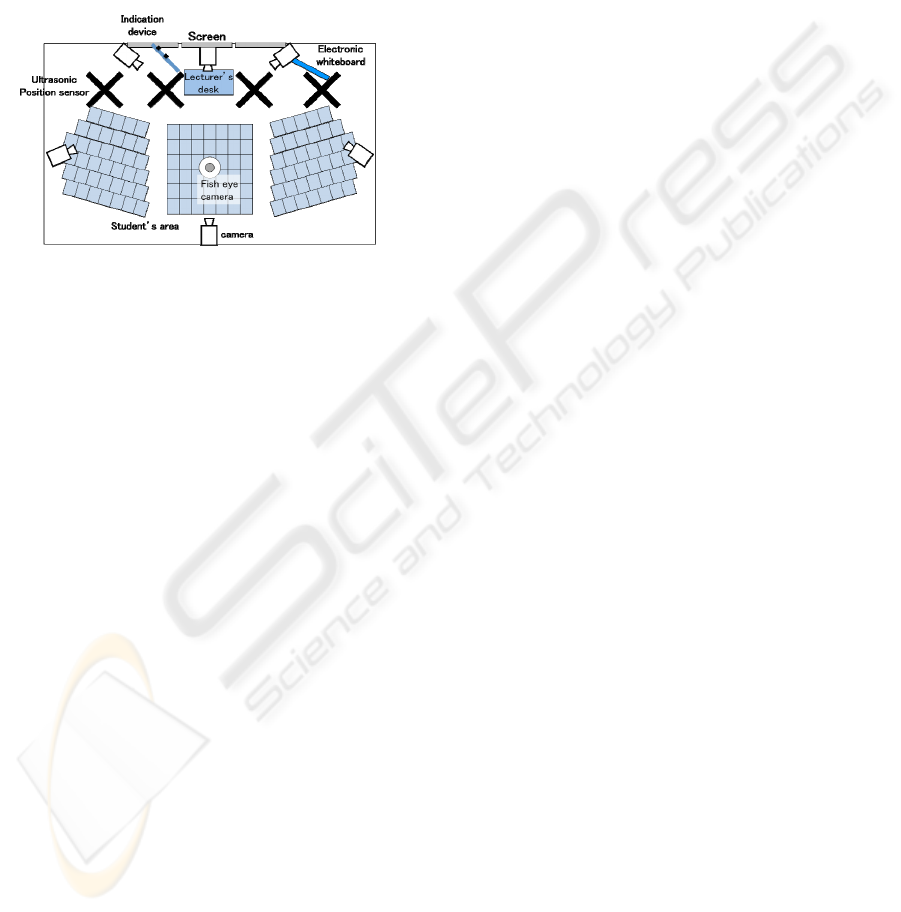

Figure 1: Equipment layout in the classroom.

In Section 2, we give an overview of our lecture archiving system. In Section 3, we describe the acquisition of the lecturer's and students' actions in the classroom, and in Section 4 we propose a new lecture archiving method based on the interaction between lecturer and students.

2 OVERVIEW OF OUR LECTURE ARCHIVE SYSTEM

In this section, we give an overview of our lecture archiving system. We have been developing an automatic lecture archiving system that records various information, such as the lecturer's and students' voices, video, presentation slides and the text/figures written on the whiteboard (Marutani et al., 2007).

Figure 1 shows the equipment layout in the classroom. There are three screens at the front of the classroom; from the students' view, presentation slides are displayed on the left and center screens, and the text/figures written on the whiteboard are displayed on the right screen. Cameras located at the rear, right side and left side of the classroom shoot the lecturer, and three cameras located at the front shoot the students. Furthermore, the system acquires the lecturer's position and movement with ultrasonic location sensors mounted on the ceiling, on the lecturer and on the pointing device.

Our lecture archiving system records and synchronizes the audio/video of the lecturer and students, the presentation slides and the text/figures written on the whiteboard, and then provides these data as lecture archive content.
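The paper does not say how the recorded streams are synchronized. As a minimal sketch, assuming each stream is stored as a list of timestamped events on a shared clock (for example, the time of each slide change or whiteboard snapshot), the item visible at any time t can be found with a binary search. The names `Stream`, `state_at` and `snapshot` are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class Stream:
    """Hypothetical timestamped stream: each event marks when a new item
    (e.g. a slide or a whiteboard snapshot) becomes visible."""
    name: str
    times: list = field(default_factory=list)  # seconds on a shared clock, ascending
    items: list = field(default_factory=list)

    def add(self, t, item):
        # Events must arrive in time order so that bisect stays valid.
        if self.times and t < self.times[-1]:
            raise ValueError("events must be added in time order")
        self.times.append(t)
        self.items.append(item)

    def state_at(self, t):
        """Return the item visible at time t, or None before the first event."""
        i = bisect_right(self.times, t)
        return self.items[i - 1] if i > 0 else None


def snapshot(streams, t):
    """Collect the synchronized state of every stream at time t."""
    return {s.name: s.state_at(t) for s in streams}


slides = Stream("slide")
slides.add(0.0, "slide-1")
slides.add(95.0, "slide-2")
board = Stream("whiteboard")
board.add(40.0, "board-snapshot-1")

print(snapshot([slides, board], 30.0))   # {'slide': 'slide-1', 'whiteboard': None}
print(snapshot([slides, board], 100.0))  # {'slide': 'slide-2', 'whiteboard': 'board-snapshot-1'}
```

Using `bisect_right` means an item counts as visible from the exact moment its event is recorded, so a slide that changes at 95 s is already the new slide at t = 95.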

3 ACQUISITION OF ACTIONS IN THE CLASSROOM

We have been working on acquiring the time-sequential context that arises in the classroom, such as the lecturer's actions and movements (utterance, pointing and writing on the whiteboard) and the students' actions (face-raising or note-taking) (Marutani et al., 2007), (Hattori et al., 2008).

3.1 Acquisition of Lecturer’s Action

In the classroom, the lecturer's actions fall into three major classes based on the lecturer's location and orientation: descant (talking to the students without referring to a slide or the whiteboard), explanation of presentation slides, and explanation of text/figures on the whiteboard. Our system acquires these actions using various sensors in the classroom. Ultrasonic location sensors installed on the ceiling, on the lecturer and on the pointing device give the lecturer's location and pointing information. The archiving system records the content of each slide and the time of each slide change, as well as the text/figures written on the whiteboard and the time at which they are written. From these data, the system acquires the lecturer's actions.
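The text names the three action classes but gives no decision procedure. A minimal rule-based sketch, assuming the lecturer's position is reduced to one coordinate across the front of the room and that a whiteboard stroke in the last few seconds signals whiteboard explanation, might look as follows. The zone boundaries, the 5-second window and the placement of the whiteboard on the right are hypothetical placeholders, and orientation is omitted for brevity.

```python
from enum import Enum


class LecturerAction(Enum):
    DESCANT = "descant"
    EXPLAIN_SLIDE = "explain slide"
    EXPLAIN_WHITEBOARD = "explain whiteboard"


# Hypothetical zone boundaries (metres from the left wall, students' view).
LEFT_ZONE_END = 3.0
RIGHT_ZONE_START = 6.0
WRITING_WINDOW_S = 5.0  # hypothetical: how recent a stroke must be to count


def classify_lecturer(x, t, last_write_time=None):
    """Rule-based sketch: a recent whiteboard stroke takes priority,
    otherwise the lecturer's position decides the action class."""
    if last_write_time is not None and 0.0 <= t - last_write_time <= WRITING_WINDOW_S:
        return LecturerAction.EXPLAIN_WHITEBOARD
    if x < LEFT_ZONE_END:
        return LecturerAction.EXPLAIN_SLIDE       # in front of the left (slide) screen
    if x >= RIGHT_ZONE_START:
        return LecturerAction.EXPLAIN_WHITEBOARD  # hypothetical whiteboard zone
    return LecturerAction.DESCANT                 # in front of the center screen


print(classify_lecturer(1.5, 10.0).value)                      # explain slide
print(classify_lecturer(4.5, 10.0).value)                      # descant
print(classify_lecturer(4.5, 10.0, last_write_time=8.0).value)  # explain whiteboard
```

Giving the writing event priority over position reflects that a stroke timestamp is direct evidence, whereas a position near a zone boundary is ambiguous.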

3.2 Acquisition of Students’ Action

Three cameras located at the front of the classroom shoot the students' actions. The left camera is mounted at the top of the left screen and the right camera at the top of the right screen, and all three cameras are focused on the center zone of the students' area. We then estimate the students' face orientation by detecting faces in the recorded images.

We categorize students' actions in the classroom into four types: listening to the lecturer's explanation, viewing a front screen, viewing the whiteboard, and taking lecture notes. We identify these four types from the installation positions of the equipment and the students' face orientation.
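The paper states that the four types are identified from equipment positions and face orientation but does not give the procedure. One way to sketch this, assuming a per-student head-pose estimate (yaw and pitch in degrees) that the paper does not describe, is a nearest-direction rule: looking far enough down counts as note-taking, otherwise the face is assigned to the target whose direction is closest. The target angles and the pitch threshold are hypothetical placeholders; the lecturer's direction could in principle come from the ultrasonic location sensor.

```python
# Hypothetical threshold (degrees): looking this far down counts as note-taking.
NOTE_PITCH_DEG = -30.0


def classify_student(yaw, pitch, targets):
    """Return the action whose target direction is nearest to the face yaw.

    targets maps an action label to the yaw (degrees, negative = student's
    left) of the person or equipment it corresponds to.
    """
    if pitch <= NOTE_PITCH_DEG:
        return "take notes"
    return min(targets, key=lambda label: abs(targets[label] - yaw))


# Hypothetical directions derived from the installation positions.
targets = {
    "hear lecturer": 0.0,
    "view front screen": -25.0,
    "view whiteboard": 30.0,
}

print(classify_student(-20.0, 0.0, targets))  # view front screen
print(classify_student(5.0, -45.0, targets))  # take notes
```

The experiments in this paper work with face-detection counts per camera rather than per-student angles; this sketch only illustrates how equipment positions and face orientation could jointly define the four types.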

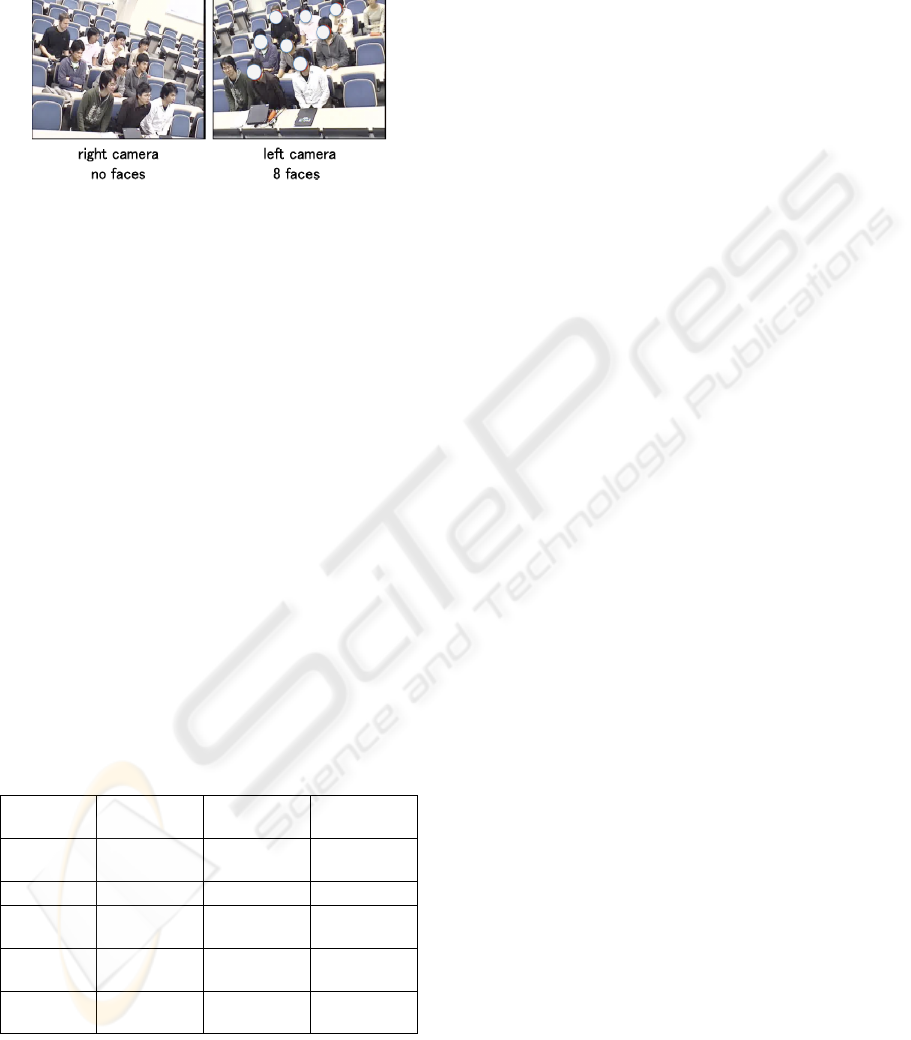

3.3 Detecting Student’s Face

We conducted an experiment to verify whether the new archiving method based on students' face orientation is feasible. Usually, when the lecturer explains using the left screen, the students look at the left screen. In that case, the system detects many faces in the image shot by the left camera, whereas it detects no faces in the image shot by the right camera.

Figure 2: Detection results when the lecturer explains using the left screen.

Figure 2 shows the results of face detection when the lecturer explains the content displayed on the left screen. The left image shows the detection result for the image shot by the left camera, in which no faces are detected, and the right image shows the detection result for the image shot by the right camera, in which 8 faces are detected.

3.4 Face Detection Performance

We verified the detection of students' faces in a preliminary experiment. Ten subjects took seats in the central part of the students' area, and the three cameras at the front of the classroom shot their actions. The lecturer moved freely at the front of the classroom: descanting while standing in front of the center screen, explaining presentation slides while standing in front of the left screen, and writing text/figures while standing in front of the whiteboard. The students turned their faces toward the lecturer, a screen or the whiteboard according to the lecturer's directions.

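Table 1: Face detection performance in sample situations (average number of faces detected; 10 subjects).

| Situation | Right camera | Center camera | Left camera |
|---|---|---|---|
| View front screen | 5.08 | 10 | 4.42 |
| Note taking | 0 | 0 | 0 |
| Chin in hand | 0 | 3.7 | 0 |
| View left screen | 6.11 | 9.32 | 0 |
| View whiteboard | 0 | 7.13 | 7.12 |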

We verified face detection for typical student states in the lecture room: listening to the lecturer's explanation, viewing a screen, viewing the whiteboard, taking lecture notes, and resting the chin in the hand. Table 1 shows the average number of faces detected in the preliminary experiment. When the students viewed the center screen, 5.08 faces were detected by the right camera, 10 by the center camera and 4.42 by the left camera; "0" means that no faces were detected. These results confirm that students' face orientation can be used to grasp their attention.
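The archiving rules in Section 4 use a two-thirds threshold on the number of detected faces. As a small arithmetic aid, the sketch below copies the Table 1 averages, compares each with two-thirds of the 10 subjects, and lists which cameras exceed it in each situation. It only re-expresses the reported averages; the function name `cameras_over_threshold` is ours.

```python
from fractions import Fraction

N_SUBJECTS = 10
THRESHOLD = Fraction(2, 3)

# Average number of faces detected (Table 1): (right, center, left) camera.
TABLE_1 = {
    "view front screen": (5.08, 10, 4.42),
    "note taking": (0, 0, 0),
    "chin in hand": (0, 3.7, 0),
    "view left screen": (6.11, 9.32, 0),
    "view whiteboard": (0, 7.13, 7.12),
}
CAMERAS = ("right", "center", "left")


def cameras_over_threshold(counts, n=N_SUBJECTS, threshold=THRESHOLD):
    """Return the cameras whose average detections exceed threshold * n."""
    return [cam for cam, c in zip(CAMERAS, counts) if c > threshold * n]


for situation, counts in TABLE_1.items():
    print(f"{situation:18s} {cameras_over_threshold(counts)}")
```

With 10 subjects the cut-off is 20/3 ≈ 6.67 faces, so, for example, both the center camera (7.13) and the left camera (7.12) exceed it in the whiteboard condition, while the right camera's 6.11 in the left-screen condition does not.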

4 LECTURE ARCHIVING METHOD BASED ON STUDENTS’ VIEWPOINT

Most lecture archiving systems provide many kinds of data, such as footage of the lecturer, presentation slides and the text/figures written on the whiteboard. However, we consider it difficult for viewers to pay attention to all of these data at once. Therefore, we propose a new archiving method based on the students' viewpoint in the real classroom.

Based on the preliminary experiment described in Section 3, we define the archiving rules as follows:

- If more than two-thirds of the faces are detected in the image shot by the left camera, the system judges that most students are viewing the left screen, and it incorporates the presentation slide displayed on the left screen into the archive data.
- If more than two-thirds of the faces are detected in the image shot by the right camera, the system judges that most students are viewing the whiteboard, and it incorporates the whiteboard image into the archive data.
- If more than two-thirds of the faces are detected in the image shot by the center camera and no faces are detected by the other cameras, the system judges that most students are listening to the lecturer's explanation, and it incorporates footage of the lecturer into the archive data.
- If no camera detects more than two-thirds of the faces, the system judges that most students are taking lecture notes, and it incorporates the presentation slides into the archive data.
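The four rules above can be sketched as a single decision function over the face counts of one set of frames. This is a sketch under stated assumptions: the class size `n_students` is taken as known, "more than two-thirds" is read as strictly greater than two-thirds of the students, and because the text does not say which rule wins when several conditions hold at once, the rules are checked in the order listed. The names `Source` and `choose_source` are hypothetical.

```python
from enum import Enum


class Source(Enum):
    SLIDE_LEFT_SCREEN = "presentation slide on left screen"
    WHITEBOARD = "whiteboard image"
    LECTURER_VIDEO = "footage of lecturer"
    SLIDES = "presentation slides"  # fallback: students are taking notes


def choose_source(left, center, right, n_students):
    """Apply the four archiving rules to per-camera face counts.

    Rules are checked in the order they are listed; which rule should win
    when several conditions hold is an assumption of this sketch.
    """
    def over_two_thirds(count):
        # Integer comparison avoids floating-point error at the boundary.
        return 3 * count > 2 * n_students

    if over_two_thirds(left):
        return Source.SLIDE_LEFT_SCREEN
    if over_two_thirds(right):
        return Source.WHITEBOARD
    if over_two_thirds(center) and left == 0 and right == 0:
        return Source.LECTURER_VIDEO
    return Source.SLIDES


print(choose_source(left=8, center=2, right=0, n_students=10).value)
print(choose_source(left=0, center=9, right=0, n_students=10).value)
print(choose_source(left=0, center=9, right=3, n_students=10).value)
```

The third call falls through to the note-taking rule: the center camera sees most faces, but the right camera also sees some, so the "no faces from the other cameras" condition of the lecturer rule is not met.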

CSEDU 2009 - International Conference on Computer Supported Education

Figure 3: Image of the proposed method.

Figure 3 illustrates the proposed lecture archiving method. The horizontal axis is time. The upper three rows are the data to be archived: lecture video, presentation slides and the text/figures written on the whiteboard. The next three rows are the data used to estimate the students' viewpoint: the images shot by the left, center and right cameras. The bottom row is the generated archive data. First, we check the three images in the leftmost column, shot by the left, center and right cameras. If more than two-thirds of the faces are detected in the image shot by the center camera and no faces are detected by the other cameras, the system judges that most students are listening to the lecturer's explanation and incorporates footage of the lecturer into the archive data. The system then checks the next set of images and decides which data to incorporate into the archive. By repeating this procedure, the system generates new archive data. Students can view the generated archive data while commuting on a portable device such as an iPod touch, while the full, traditional archive data remain available on a PC through a web browser.
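The column-by-column procedure illustrated in Figure 3 can be sketched as a loop over time steps that chooses a source for each step and merges neighbouring steps with the same source into one segment of the archive. This is a sketch, not the authors' implementation: the decision function is passed in as a parameter, the `demo_decide` function is a simplified, hypothetical restatement of the Section 4 rules for a class of 10, and storing the archive as merged time segments is our assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Faces detected in one set of frames: (left, center, right) camera.
FaceCounts = Tuple[int, int, int]


@dataclass
class Segment:
    start: float  # seconds, inclusive
    end: float    # seconds, exclusive
    source: str   # recorded data selected for this stretch of the archive


def generate_archive(times: Sequence[float],
                     counts: Sequence[FaceCounts],
                     decide: Callable[[FaceCounts], str],
                     end_time: float) -> List[Segment]:
    """Check each column of camera images in time order, choose a source
    for it, and merge neighbouring steps that share the same source."""
    if len(times) != len(counts):
        raise ValueError("times and counts must have the same length")
    segments: List[Segment] = []
    for i, (t, c) in enumerate(zip(times, counts)):
        source = decide(c)
        t_next = times[i + 1] if i + 1 < len(times) else end_time
        if segments and segments[-1].source == source:
            segments[-1].end = t_next  # same source: extend the open segment
        else:
            segments.append(Segment(t, t_next, source))
    return segments


N_STUDENTS = 10  # hypothetical class size for the demo


def demo_decide(c: FaceCounts) -> str:
    """Simplified, hypothetical restatement of the Section 4 rules."""
    left, center, right = c

    def over(n: int) -> bool:
        return 3 * n > 2 * N_STUDENTS  # "more than two-thirds"

    if over(left):
        return "slide"
    if over(right):
        return "whiteboard"
    if over(center) and left == 0 and right == 0:
        return "lecturer video"
    return "slides (note-taking)"


times = [0, 10, 20, 30]
counts = [(0, 9, 0), (0, 8, 0), (8, 2, 0), (1, 3, 1)]
for seg in generate_archive(times, counts, demo_decide, end_time=40):
    print(seg)
```

Here the first two columns merge into one lecturer-video segment from 0 to 20 s, followed by a slide segment and a note-taking segment; merging keeps the generated archive from switching sources more often than the students' attention actually changes.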

We consider that a good lecture shows a relationship between the lecturer's actions and the students' actions, that is, interaction between lecturer and students. To provide high-quality educational services, lecturers have to grasp the students' condition and then modify their lecture materials or refine their teaching methods. Our face detection method can help lecturers grasp students' movements, so our method can also be used in the field of FD (faculty development).

5 CONCLUSIONS

In this paper, we tried to recognize students' face orientation using images shot by three cameras, and we proposed a new lecture archiving method based on the students' viewpoint. We consider that our technology for acquiring lecturer and student actions can be used not only in our archiving system but also for grasping students' condition in faculty development. As future work, we will verify the effectiveness of our acquisition technology by applying it to real lectures.

ACKNOWLEDGEMENTS

This work is supported in part by the Kyoto University Global COE Program: Informatics Education and Research Center for Knowledge-Circulating Society (Project leader: Prof. Katsumi Tanaka, MEXT Global COE Program, Kyoto University).

REFERENCES

Nakamura, R., et al., 2006. MINO – E-learning System that Automatically. IASTED Communication, Internet and Information Technology (CIIT2006), pp. 336-340.

Nagai, T., 2008. Simple lecture recording with HDV camera and virtual camera work. In Proceedings of IPSJ SIG Technical Reports, 9th SIGCMS, pp. 56-63. (in Japanese)

Le, H. H., et al., 2008. Automatic Digest Generation by Extracting Important Scenes from the Content of Presentations. In Proceedings of DEXA2008 Workshops (AIEMPro'08), pp. 590-594.

Marutani, T., et al., 2007. Lecture Context Recognition based on Statistical Feature of Lecture Action for Automatic Video Recording. Journal of IEICE, Vol. J90-D, No. 10, pp. 2775-2786. (in Japanese)

Hattori, H., et al., 2008. Acquisition of Time Sequential Context based on Action of Lecturer and Students. In Proceedings of 2008 IEICE General Conference, D-15-6. (in Japanese)
