PARTICIPATORY SIMULATION AS A TOOL FOR AGENT-BASED SIMULATION

Matthew Berland

Dept. of Computer Sciences/ICES, Univ. of Texas at Austin, U.S.A.

William Rand

Department of Computer Science, Univ. of Maryland, U.S.A.

Keywords: Participatory simulation, Agent-based model, Agent-based simulation, Complex systems learning.

Abstract: Participatory simulation, as described by Wilensky & Stroup (1999c), is a form of agent-based simulation in

which multiple humans control or design individual agents in the simulation. For instance, in a participatory

simulation of an ecosystem, fifty participants might each control the intake and output of one agent, such

that the food web emerges from the interactions of the human-controlled agents. We argue that participatory

simulation has been under-utilized outside of strictly educational contexts, and that it provides myriad

benefits to designers of traditional agent-based simulations. These benefits include increased robustness of

the model, increased comprehensibility of the findings, and simpler design of individual agent behaviors. To

make this argument, we look to recent research such as that from crowdsourcing (von Ahn, 2005) and the

reinforcement learning of autonomous agent behavior (Abbeel, 2008).

1 INTRODUCTION

In this paper, we argue that participatory simulation

(as pioneered by Wilensky and Stroup, 1999a,

1999b) is a version of crowdsourcing (Wired, 2007)

that is relevant to researchers interested in artificial

intelligence and multi-agent systems.

Crowdsourcing has been shown to be useful because it

exploits the knowledge, logic, and inherent

unpredictability of humans (von Ahn, 2005; von

Ahn et al., 2008). In a similar way, simulation with

human participants can be used to examine complex

phenomena more robustly and facilitate the

dissemination of agent-based understanding. As

such, we describe the participatory simulation as a

crowdsourced design strategy, exploiting the power

of the target participants to create solutions to

complex problems with dynamic information.

2 PARTICIPATORY SIMULATIONS AND AGENT-BASED MODELS

The growth of the computer as a tool has led to the

growth of computer literacy. As such, many recent

research and commercial projects have been

designed to use the knowledge base of a computer

literate population in combination with large

computing resources. Wikipedia is the canonical

example: millions of individuals use Wikipedia as

their primary encyclopedia, and many thousands of

those users are also primary content creators

(Wikipedia, 2008). For Wikipedia, the power of the

crowd is the power of the site; a critical mass of

reader/producers is necessary for its continued

relevance. The knowledge in Wikipedia is

crowdsourced, in that the content of Wikipedia

derives from the “crowd” of untrained users rather

than a cadre of explicitly trained users.

The central implications of crowdsourced

content are that it has a low resource cost and a low

training cost; the quality of the data, however, is

highly variable. We argue that participatory


Berland M. and Rand W. (2009).

PARTICIPATORY SIMULATION AS A TOOL FOR AGENT-BASED SIMULATION.

In Proceedings of the International Conference on Agents and Artificial Intelligence, pages 553-557

DOI: 10.5220/0001786905530557

Copyright © SciTePress

simulation, as a form of crowdsourced simulation,

has myriad benefits for designing agent-based

simulations. The benefits are accessible models of

human behavior, built from data gathered in the context of the

simulation, and the improved comprehensibility of

the model due to its interactive nature. Moreover,

the high variability of the crowd data, which is

problematic in some crowdsourcing applications,

can be valuable in participatory simulations since it

adds robustness to the designed simulation.

2.1 What is an Agent-based Simulation?

An agent-based simulation (ABS) is a simulation in

which independent agents with local or incomplete

information interact with one another. Agent-based

simulations are often used to model human behavior

or ethology, but they can also be usefully applied to

a variety of target domains, such as physics (Bar-

Yam, 1997), geography (Parker et al., 2003), and

biology (Griffin, 2006). A typical ABS might consist

of sets of individual agents; each of these agents has

some local intelligence and the ability both to

evaluate and act on the environment and agents

around it. The simulation also contains tools to

monitor the behavior and conditions that emerge

from the interactions of the agents. For instance, a

modeler creating an agent-based simulation of the

food distribution in an ant colony might design

behaviors for individual ant roles (e.g., worker,

queen), generate hundreds of independent agents

with behaviors that correspond to their roles, and run

the simulation in a virtual space in which the ant-

agents can interact by collecting food, distributing

food, and consuming food. See Figure 1, below,

from a NetLogo simulation (Wilensky, 1997).
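The structure described above (independent agents with local rules, plus a monitor for what emerges) can be sketched in a few lines of Python. This is only an illustrative sketch, not the NetLogo Ants model: the grid size, the two food piles, the single worker role, and the names `Ant` and `run` are all assumptions made for the example.

```python
import random


class Ant:
    """One agent. It sees only its own cell and knows where the nest is."""

    def __init__(self, rng, size):
        self.rng = rng
        self.size = size
        self.x = rng.randrange(size)
        self.y = rng.randrange(size)
        self.carrying = False

    def _wander(self, value):
        # Random step of -1, 0, or +1, clamped to the grid.
        return min(self.size - 1, max(0, value + self.rng.choice((-1, 0, 1))))

    def step(self, food, nest):
        """Apply the local rules once; return 1 if food was delivered."""
        if self.carrying and (self.x, self.y) == nest:
            self.carrying = False
            return 1
        if not self.carrying and food.get((self.x, self.y), 0) > 0:
            food[(self.x, self.y)] -= 1
            self.carrying = True
        if self.carrying:
            # Loaded ants head straight for the nest.
            self.x += (nest[0] > self.x) - (nest[0] < self.x)
            self.y += (nest[1] > self.y) - (nest[1] < self.y)
        else:
            self.x = self._wander(self.x)
            self.y = self._wander(self.y)
        return 0


def run(n_ants=50, size=15, ticks=500, seed=0):
    """Run the simulation and return the monitor trace and final state."""
    rng = random.Random(seed)
    nest = (size // 2, size // 2)
    food = {(1, 1): 20, (size - 2, size - 2): 20}  # hypothetical food piles
    ants = [Ant(rng, size) for _ in range(n_ants)]
    delivered = []  # monitor: cumulative deliveries after each tick
    total = 0
    for _ in range(ticks):
        for ant in ants:
            total += ant.step(food, nest)
        delivered.append(total)
    return delivered, food, ants
```

No ant is told to empty the food piles; the delivery curve in `delivered` is a global pattern that arises only from the three local rules in `step`.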

ABS is a particularly powerful abstraction not only

because of its lucid metaphor, parallelism, and

ease of encapsulation, but also because it is more

easily comprehensible than many competing

metaphors and strategies (Wilensky & Reisman,

2006). This comprehensibility stems from the

similarity of the ontology of the real world to the

ontology of ABS, as contrasted with the ontology of

a differential-equation-based model, for example.

Latour (1987) argues that good academic science

must be inherently communicative, but relatively

little effort has been spent optimizing models for

comprehensibility. This, then, is an exemplary

power of ABS: it is easier for an untrained observer

to understand an ant colony in terms of individual

ant behaviors than in terms of differential equations.

Due to the nature of local simulation, however, it

is often difficult to design programs (or “rule-sets”)

for individual agents such that the solution to a

global goal emerges (Wilensky & Resnick, 1999).

Much like biological ecology, changing one small

aspect of an ABS often results in larger, global

changes. As a result, the ecology of the emergent

agent systems often becomes deeply unpredictable.

Solving problems indirectly and designing

intelligence for localized agents can exhibit several

of the difficulties of general search problems: many

local maxima; behaviors that cause negative or hard-to-predict

side effects; and emergent paths that are often

effectively incomprehensible.

2.2 What is a Participatory Simulation?

In a participatory simulation, each human designs or

controls an individual agent in an agent-based

simulation, rather than a single designer (or

group of designers) controlling the whole

simulation. A participatory simulation (PS) can be

used to make the agent-based simulation even more

understandable; to aid the design of agent rules; to

disseminate the methods of agent-based simulation;

and to make the simulation of human behavior more

accurate.

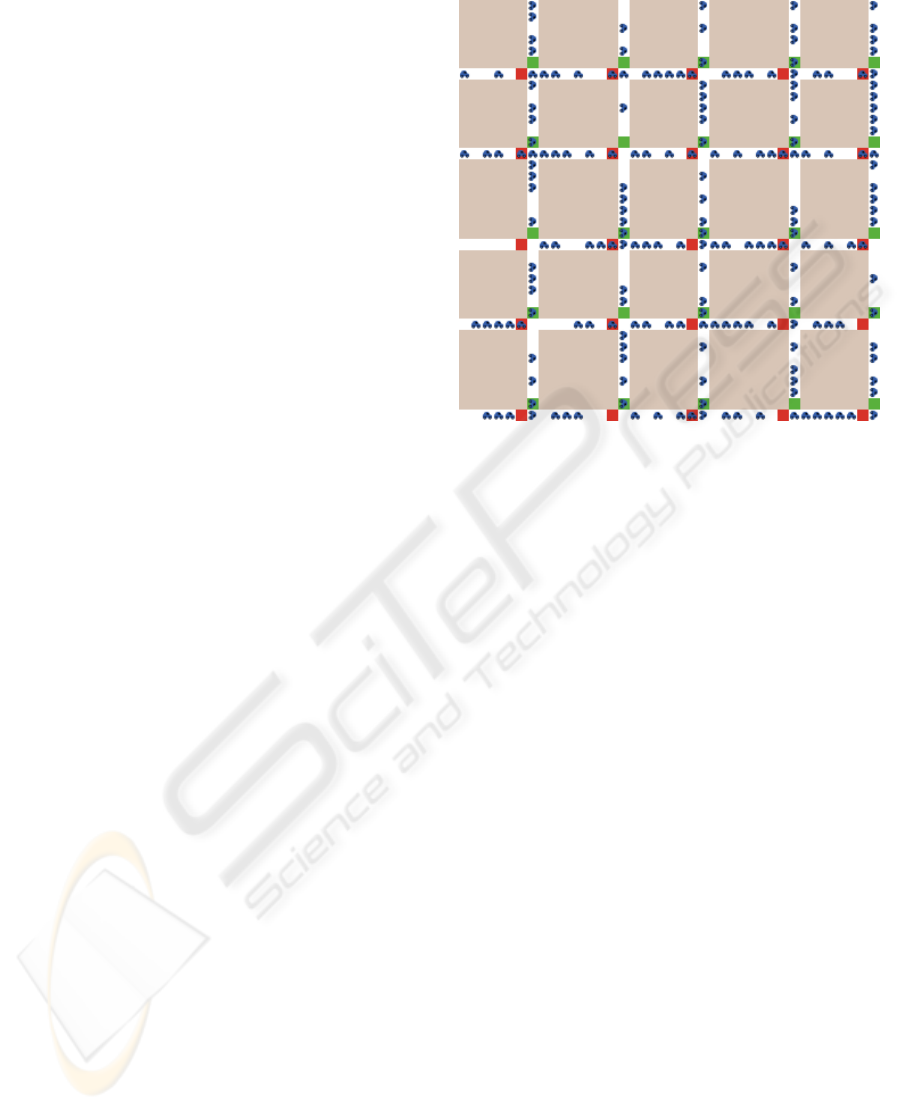

A popular PS in use in elementary and high

schools is Gridlock (Wilensky & Stroup, 1999c). In

one version of Gridlock, 25 or fewer individuals

each control a stoplight at the intersections on a 5x5

road-map grid. If there are exactly 25 participants,

each stoplight is controlled by one and only one

human. If there are fewer than 25 participants, some

Figure 1: ABM of ant foraging.

ICAART 2009 - International Conference on Agents and Artificial Intelligence


of the stoplights will be controlled by the simulation

itself. The humans involved must collaborate

effectively to control the flow of traffic in the

simulation (see Figure 2, below).
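The division of control in Gridlock can be illustrated with a short sketch. It is not the HubNet implementation: the function names, the `None` marker for simulation-controlled lights, and the fixed-timer fallback are assumptions chosen for the example.

```python
GRID_SIZE = 5  # the 5x5 road-map grid described above


def assign_controllers(participants):
    """Map each intersection to a participant name, or None for the simulation."""
    intersections = [(row, col) for row in range(GRID_SIZE) for col in range(GRID_SIZE)]
    if len(participants) > len(intersections):
        raise ValueError("more participants than stoplights")
    # Pad with None so leftover stoplights fall back to the simulation.
    padded = list(participants) + [None] * (len(intersections) - len(participants))
    return dict(zip(intersections, padded))


def next_light_state(controller, current, tick, human_choice=None, period=10):
    """Return the light state ('NS' or 'EW') for the next tick."""
    if controller is not None and human_choice is not None:
        return human_choice  # a human decision overrides the timer
    if tick % period == 0:
        # Hypothetical fallback: simulation-controlled lights flip on a fixed timer.
        return "EW" if current == "NS" else "NS"
    return current
```

With three participants, `assign_controllers(["ann", "bo", "cy"])` leaves 22 of the 25 stoplights under simulation control. Logging each `human_choice` alongside the traffic state at that tick would give the record of human behavior discussed in the next paragraph.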

The overarching purpose of Gridlock is not to

develop optimal traffic light patterns, since an

optimized ABS could certainly do a better job than

the humans working with the ABS. Instead, the

value of the PS is two-fold: the PS outputs an

accurate model and record of human behavior in

various traffic settings; and the humans involved

gain a more comprehensive understanding of the

difficulties involved in constructing a complex

traffic model.

The adoption of participatory simulations has

increased in recent years with the spread of video

games. According to a Pew Research study (2008),

almost 97% of people aged 12 to 17 play video

games regularly, and the PS model works as a kind

of research-oriented video game due to its

interactive nature. Indeed, many recent participatory

simulations draw the connection to video games

explicitly, and the phrases “serious games” and

“game-based learning environments” are often used

to describe systems that are advanced participatory

simulations (e.g., Squire & Jenkins, 2004).

2.3 How Does the ABS+PS Model Relate to Crowdsourcing?

Wikipedia, ReCAPTCHA (von Ahn et al., 2008), and

Facebook are inherently crowdsourced, and they rely

on the interaction of the target agents.

Crowdsourcing is a relatively new term applied to a

very old idea: groups of humans can work in parallel

to achieve goals that are too large or complicated for

individuals working either alone or serially. The idea

comes from the reversal of the typical AI paradigm

in which a computer is given the intelligence to

achieve a task that humans cannot.

In Google's search engine (Google, 2008), the relevance of a link is in part determined by

how many users over time find the link relevant to a

particular search. By using millions of searches a

day as data, Google can ensure relatively reliable

search results. A more manageable and relevant

example is ReCAPTCHA (von Ahn et al., 2008): in

ReCAPTCHA, humans solve picture-to-text

translation problems in order to earn rewards (such

as registration for a website, see Figure 3, below).
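Both examples rest on the same step: many independent, untrained answers are pooled, and the pooled result is trusted only when enough of the crowd agrees. The sketch below shows one simple way to do this with a majority vote. It is not the actual ReCAPTCHA or Google procedure; the function name `aggregate` and the thresholds `min_votes` and `min_share` are assumptions for illustration.

```python
from collections import Counter


def aggregate(answers, min_votes=3, min_share=0.6):
    """Return the crowd's agreed answer, or None if agreement is too weak."""
    if len(answers) < min_votes:
        return None  # not enough independent answers yet
    normalized = [answer.strip().lower() for answer in answers]
    label, count = Counter(normalized).most_common(1)[0]
    # Accept the most common answer only if enough of the crowd gave it.
    return label if count / len(normalized) >= min_share else None
```

For example, `aggregate(["Morning", "morning ", "mourning"])` returns `"morning"`, while three mutually different answers return `None`. The variability of individual answers is absorbed by the vote rather than removed beforehand.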

Participatory simulations are similar to

crowdsourcing in that they rely on a critical mass of

untrained users to gather relevant data. For instance,

a war game in which soldiers use paint guns instead

of bullets is a participatory simulation in the sense

that results are an emergent process of the actions of

Figure 2: Gridlock Participatory Simulation.

the crowd: these simulations would not make sense

without the crowd. A scrimmage soccer match could

be considered a participatory simulation. Novel to

the ABS+PS, however, is the use of modern

computer-based communications towards the

running, recording, and evaluation of the simulation.

In this sense, ABS+PS is a type of crowdsourcing:

the data and content of ABS+PS are created out of the

actions of a multitude of users. Thus, research into

the methodology of ABS+PS can learn from the

crowdsourcing research and vice versa.

2.4 What Does This Have to do With AI?

The recording and simulation of the participating

human-agents makes the simulation very relevant to

AI/Agent research. Some participatory simulations

(such as Berland, 2008; Colella, 2000; Klopfer &

Squire, 2005) require the participant to formalize

her behavior (in the form of program code, diagrams,

etc.) in order to fully participate. With a variety of

behaviors formalized or mathematized, the behaviors

can be reused, retested, and subdivided to test for

different outcomes based on different behaviors.

Recent research into SVMs (Hearst, 1998) and

inverse reinforcement learning (Ng & Russell,

2000) has found them to be powerful tools in

generating behavior models from data such as these.

Most of the rules of agents in an ABS are currently

designed by humans, but ABS+PS can take

advantage of tools like SVMs to mine the results


Figure 3: reCAPTCHA.

of human participants in order to generate the rules

of an agent in an ABS (such as the helicopter

agents in Abbeel, 2008). In addition, since these

rules are extracted from real-time decisions that

human participants make in response to complex

environments, an ABS that is built upon the results

of a PS will be more robust than a simulation that

was hand-engineered. In this way, we can use the

PS to develop a better ABS using modern AI

methods.
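As a rough illustration of extracting an agent rule from recorded participant decisions, the sketch below fits a linear rule to a log of stoplight choices. To stay self-contained it uses a plain perceptron as a stand-in for an SVM, and the log itself is synthetic: the function names and the assumed human policy (give green to the longer queue) are hypothetical, not data from a real participatory simulation.

```python
import random


def synthetic_log(n=200, seed=1):
    """Stand-in for a PS recording: ((cars_ns, cars_ew), choice) pairs."""
    rng = random.Random(seed)
    log = []
    while len(log) < n:
        ns, ew = rng.randint(0, 10), rng.randint(0, 10)
        if ns != ew:  # assumed human policy: green (+1 = NS, -1 = EW) to the longer queue
            log.append(((ns, ew), 1 if ns > ew else -1))
    return log


def train_perceptron(log, max_epochs=1000):
    """Fit weights (w_ns, w_ew, bias) that reproduce the logged choices."""
    w = [0.0, 0.0, 0.0]
    for _ in range(max_epochs):
        mistakes = 0
        for (ns, ew), choice in log:
            x = (ns, ew, 1.0)
            score = sum(wi * xi for wi, xi in zip(w, x))
            if choice * score <= 0:  # wrong side of the boundary: nudge the weights
                w = [wi + choice * xi for wi, xi in zip(w, x)]
                mistakes += 1
        if mistakes == 0:
            break  # every logged decision is now reproduced
    return w


def agent_rule(w, ns, ew):
    """The learned rule, usable by an autonomous stoplight agent in an ABS."""
    return 1 if w[0] * ns + w[1] * ew + w[2] > 0 else -1
```

Calling `train_perceptron(synthetic_log())` yields weights that `agent_rule` can apply without a human in the loop. With real participant data the choices would be noisier and not perfectly separable, which is where a soft-margin SVM or inverse reinforcement learning would be the more appropriate tool.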

2.5 What’s the Value of More Comprehensible Simulations to AI Researchers?

Communication and education occupy relatively

small niches at computer science conferences,

perhaps because the work is not easily quantifiable

and rarely approaches the rigor or applicability of

other work in the field. There are, however,

numerous benefits to constructing simulations and

models comprehensibly. For instance, equations are

hard to parse even for experts in the target field

(Wilensky, 1993). Furthermore, statistical

descriptions can be misunderstood and improperly

generalized even by good statisticians (Kahneman &

Tversky, 1973). Clearly, equations and statistics,

while unambiguous, are suboptimal tools for

reporting findings. These difficulties have been

repeatedly acknowledged in data design and

visualization subfields as well as learning theories,

but, ironically, the findings have not been widely

disseminated. Several learning theories have shown

that interaction with and motivation around target

content material dramatically increase uptake and

comprehension (Papert, 1980; diSessa, 2000).

Participatory simulations are inherently interactive,

and often that interactivity proves motivating for

participants. Indeed, Wilensky & Stroup (1999a)

give examples in which complex target material is

more quickly learned through the deployment of a

participatory simulation.

2.6 So What’s the Problem?

Though participatory simulations can improve the

robustness and comprehensibility of agent-based

simulations, they are relatively underutilized. Very

few of the papers at top conferences on multi-agent

systems, such as AAMAS, use participatory

simulations, and even fewer take advantage of the

participatory simulations to further improve their

own agent-based simulations and methods. We have

identified a few possible reasons why the PS has

been under-utilized in the ABS community. We list

these reasons and some suggested responses below:

Problem: Human subjects are expensive and time-

consuming.

Rebuttal: With the growth of the web and the

relatively low cost of deploying PS to social

networking software (such as Facebook), this is

becoming less and less true. However, it

remains the most obvious and meaningful

impediment to the widespread use of the PS. The

upside is that, with a larger community building

participatory simulations, the community could

easily create repositories where people collaborate.

Problem: Humans are unreliable.

Rebuttal: In many cases in which agent-based

simulations are employed, the target agents are

expected to generally act in their own interest,

whether they represent humans or not. Often some

degree of randomness is added to evaluate the

robustness of the model. Humans produce this self-

interest with randomness effectively, and the

unpredictability that they introduce rarely follows

traditional random distributions (these are discussed

further in Wolfram, 2002). Thus, humans can test

the robustness of the model effectively, and by

examining the results of human actions we can

develop more robust models.

Problem: Education, learning, and communication

are not serious scientific research topics or do not

closely relate to the target scientific field.

Rebuttal: Every field must communicate its ideas

effectively. A finding is useless if its target audience

cannot understand the finding. The PS provides

researchers who use ABS a relatively easy, low-

cost way to ensure meaningful engagement with the

target material.

3 CONCLUSIONS

We have highlighted the benefits of utilizing

participatory simulations in combination with agent-


based simulations to examine questions in artificial

intelligence. We present these techniques as a form

of crowdsourcing, and show how research into

crowdsourcing can gain from understanding

ABS+PS and vice versa. Crowdsourcing solves

machine-difficult problems by harnessing the power

of crowds of humans. PS can harness the power of

crowds to solve difficult ABS design problems.

Thus, ABS+PS can be used to create more refined

and robust models of complex phenomena.

REFERENCES

Abbeel, P. (2008). Apprenticeship Learning and

Reinforcement Learning with Application to Robotic

Control. Doctoral dissertation, Stanford University.

Bar-Yam, Y. (1997). Dynamics of Complex Systems.

Cambridge, MA: Perseus Press.

Berland, M. (2008). VBOT: Motivating computational and

complex systems fluencies with constructionist

virtual/physical robotics. Doctoral dissertation,

Evanston, IL: Northwestern Univ.

Colella, V. (2000). Participatory Simulations: Building

Collaborative Understanding through Immersive

Dynamic Modeling. Journal of the Learning Sciences,

9(4), pp. 471-500.

diSessa, A. (2000). Changing minds: Computers, language

and literacy. Cambridge, MA: MIT Press.

Google (2008). Retrieved September 27, 2008 from

http://www.google.com/corporate/tech.html

Griffin, W. A. (2006). Agent Based Modeling for the

Theoretical Biologist. Biological Theory 1(4).

Hearst, M. A. (1998). Support Vector Machines. IEEE

Intelligent Systems, pp. 18-28, July/Aug. 1998.

Kahneman, D., & Tversky, A. (1973). On the psychology

of prediction. Psychological Review, 80, 237-251.

Klopfer, E. & Squire, K. (2005). Environmental

Detectives - The Development of an Augmented

Reality Platform for Environmental Simulations. In press,

Educational Technology Research and

Development.

Latour, B. (1987). Science in action: How to follow

scientists and engineers through society. Cambridge,

MA: Harvard University Press.

Ng, A.Y. & Russell, S. (2000). Algorithms for inverse

reinforcement learning. Proc. 17th International Conf.

on Machine Learning.

Parker, D. C., S. M. Manson, M. A. Janssen, M. J.

Hoffmann, and P. Deadman. (2003). Multi-agent

systems for the simulation of land-use and land-cover

change: A review. Annals of the Association of

American Geographers 93(2), 314-337.

Pew Research (2008). Teens, Video Games and Civics:

Teens' gaming experiences are diverse and include

significant social interaction and civic engagement.

Retrieved September 27, 2008 from

http://www.pewinternet.org/PPF/r/263/report_display.asp

Squire, K. & Jenkins, H. (2004). Harnessing the power of

games in education. Insight, 3(1), 5-33.

von Ahn, L. (2005). Human computation. Doctoral

dissertation. Carnegie Mellon Univ.: Pittsburgh, PA.

von Ahn, L., B. Maurer, C. McMillen, D. Abraham, and

M. Blum (2008). reCAPTCHA: Human-based

Character Recognition via Web Security Measures.

Science, pp. 1465-1468, 12 September 2008.

Wikipedia. (2008). In Wikipedia, the free encyclopedia.

Retrieved September 27, 2008, from

http://en.wikipedia.org/wiki/Wikipedia

Wilensky, U. (1993). Connected Mathematics: Building

Concrete Relationships with Mathematical

Knowledge. Doctoral dissertation, Cambridge, MA:

MIT Media Lab.

Wilensky, U. (1997). NetLogo Ants model.

http://ccl.northwestern.edu/netlogo/models/Ants.

Center for Connected Learning and Computer-Based

Modeling, Northwestern University, Evanston, IL.

Wilensky, U., & Reisman, K. (2006). Thinking Like a

Wolf, a Sheep or a Firefly: Learning Biology through

Constructing and Testing Computational Theories --

an Embodied Modeling Approach. Cognition &

Instruction, 24(2), pp. 171-209.

Wilensky, U., & Stroup, W. (1999a). Learning through

Participatory Simulations: Network-Based Design for

Systems Learning in Classrooms. Computer Supported

Collaborative Learning (CSCL'99).

Wilensky, U., & Stroup, W. (1999b). HubNet.

http://ccl.northwestern.edu/netlogo/hubnet.html.

Center for Connected Learning and Computer-Based

Modeling. Northwestern University, Evanston, IL.

Wilensky, U., & Stroup, W. (1999c). NetLogo HubNet

Gridlock model.

http://ccl.northwestern.edu/netlogo/models/HubNetGridlock.

Center for Connected Learning and Computer-Based

Modeling, Northwestern University, Evanston, IL.

Wired (2007). What Does Crowdsourcing Really Mean?

Retrieved September 27, 2008, from

http://www.wired.com/techbiz/media/news/2007/07/crowdsourcing

Wolfram, S. (2002). A New Kind of Science. Champaign,

IL: Wolfram Media.
