MULTI-MODAL PLATFORM FOR IN-HOME HEALTHCARE MONITORING (EMUTEM)

Wided Souidene, Dan Istrate, Hamid Medjahed

ESIGETEL – LRIT, 1, Rue du Port de Valvins, 77210 Avon, France

Jérôme Boudy, Jean-Louis Baldinger, Imad Belfeki, François Delavault

EPH/Telecom Sud Paris, 9, Rue Charles Fourier, 91011 Evry, France

François Steenkeste

INSERM U558 Toulouse France

Keywords: Telemedicine, Design and development methodologies for Healthcare IT, Interoperability, Semantic interoperability, Databases and datawarehousing, Datamining, Support for clinical decision-making, Wearable health informatics.

Abstract: This paper describes a multimodal platform dedicated to in-home healthcare monitoring. The platform consists of three heterogeneous and complementary systems designed to provide a sense of safety and connectedness for those being monitored. We present a detailed description of the sensors used to remotely monitor the health of an elderly person or a patient: a set of microphones suitably placed in the home, a wearable device and a set of infrared sensors. The platform is used remotely by medical staff to help them take the right decision about the situation of the patient or elderly person. It has two main advantages. First, it is well accepted by end users because it is less intrusive than other healthcare systems. Second, it is reliable and robust because it fuses the outputs of three complementary healthcare systems.

1 INTRODUCTION

The proportion of elderly people is increasing in all societies throughout the world. As they grow older, they want to preserve their independence, autonomy and way of life. It is therefore our duty as scientists to provide the devices they need to live at home safely and in good condition. Several research teams have thus developed systems for in-home healthcare monitoring and for the prevention of everyday risks. These systems rely on several sensors deployed in the care receiver's home in order to prevent and/or detect critical situations. They offer the comfort and independence of staying at home, the security of daily monitoring and proper medical attention. However, few reliable systems are capable of anticipating a critical situation before it takes place. In particular, systems that predict or detect a fall with good sensitivity and good specificity are rare or unreliable.

To provide one answer to this problem, we assembled a group of researchers from different backgrounds within a consortium (QuoVADis, cf. Acknowledgements) in order to develop a platform that serves several uses and meets the needs identified above. The platform developed within this project manages a system consisting of:

- A set of microphones placed in the living areas of the elderly person's home.
- A portable device that measures the ambulatory pulse (heart rate) and detects the posture and possible falls of the wearer.
- A set of infrared sensors that detect the presence of the person in a given part of the home, as well as the person's standing posture.

Souidene W., Istrate D., Medjahed H., Boudy J., Delavault F., Baldinger J., Belfeki I. and Steenkeste F. (2009). MULTI-MODAL PLATFORM FOR IN-HOME HEALTHCARE MONITORING (EMUTEM). In Proceedings of the International Conference on Health Informatics, pages 381-386. DOI: 10.5220/0001780603810386. Copyright © SciTePress

The outputs of these three heterogeneous systems are collected, processed and fused through a multimodal platform (EMUTEM).

In this article, we give a detailed description of the multimodal platform EMUTEM, which could serve several uses, among them telemedicine, healthcare and monitoring. This platform provides a sense of safety and connectedness for those being monitored. It also reassures the care receiver's family and gives them some peace of mind. It could arguably also be less expensive than live-in helpers and caregivers. The proposed platform collects and analyses the outputs of three distinct systems and fuses the three modalities in order to help medical staff take the right decision about the monitored person's situation.

The rest of the paper is organised as follows. Section 2 describes the operation of each of the systems above and details the configuration of the EMUTEM platform. Section 3 analyses the data acquisition process. Finally, Section 4 presents our conclusions and perspectives.

2 MULTI-MODAL PLATFORM FOR IN-HOME HEALTHCARE MONITORING

Falls are one of the major causes of death among the elderly. In France, people aged 65 and over suffer 550 000 accidents requiring emergency care each year. They account for more than three-quarters of the 20 000 annual deaths from Normal Day Life Accidents (NDLA). A large majority of these NDLAs are the result of falls.

Faced with this threat to the health, safety and lives of the elderly, we set out to develop an in-home healthcare monitoring system to prevent and detect falls. We brought together the efforts of three teams to establish a multimodal platform. The platform manages three heterogeneous systems: a sound system, a portable device and infrared sensors.

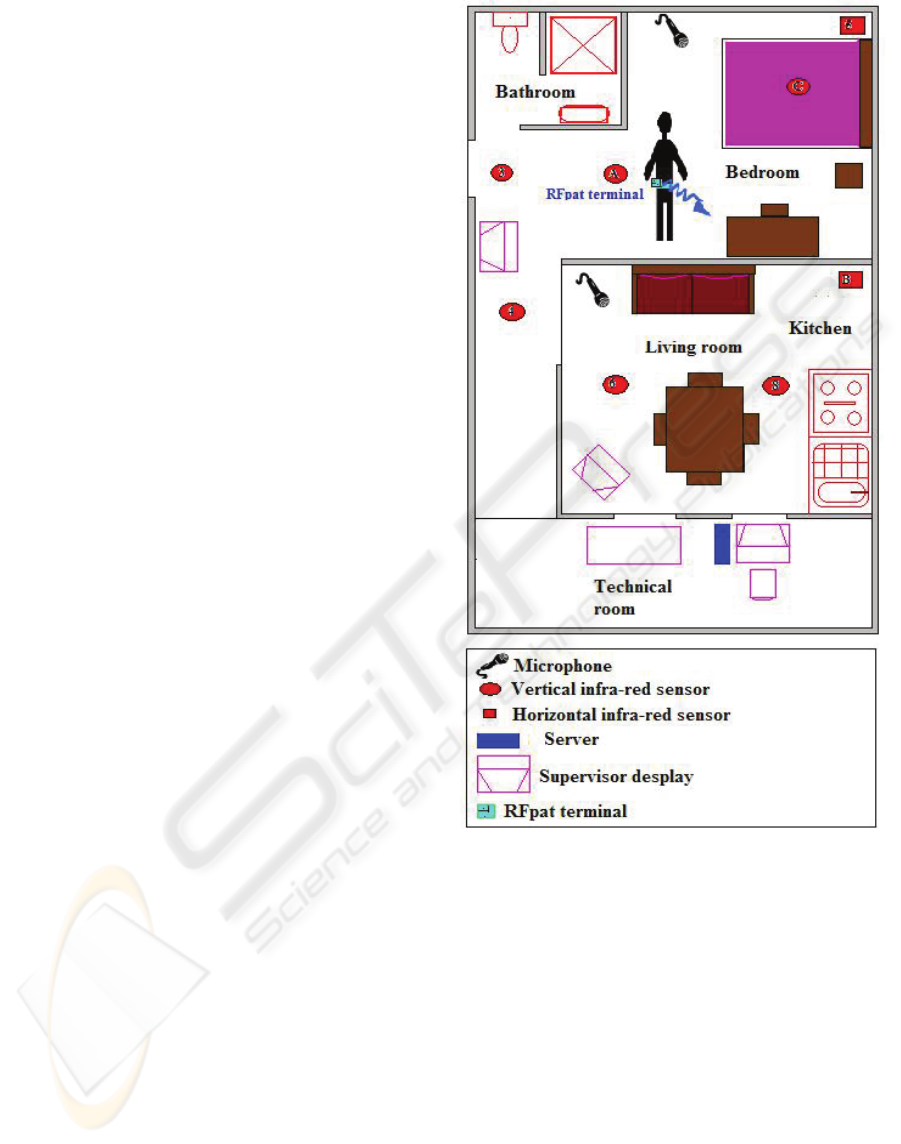

Figure 1 shows a proposed set of sensors to be installed at home. In the following, each of these systems is described and its contribution to the whole healthcare monitoring system is emphasized.

Figure 1: In-home sensor layout.

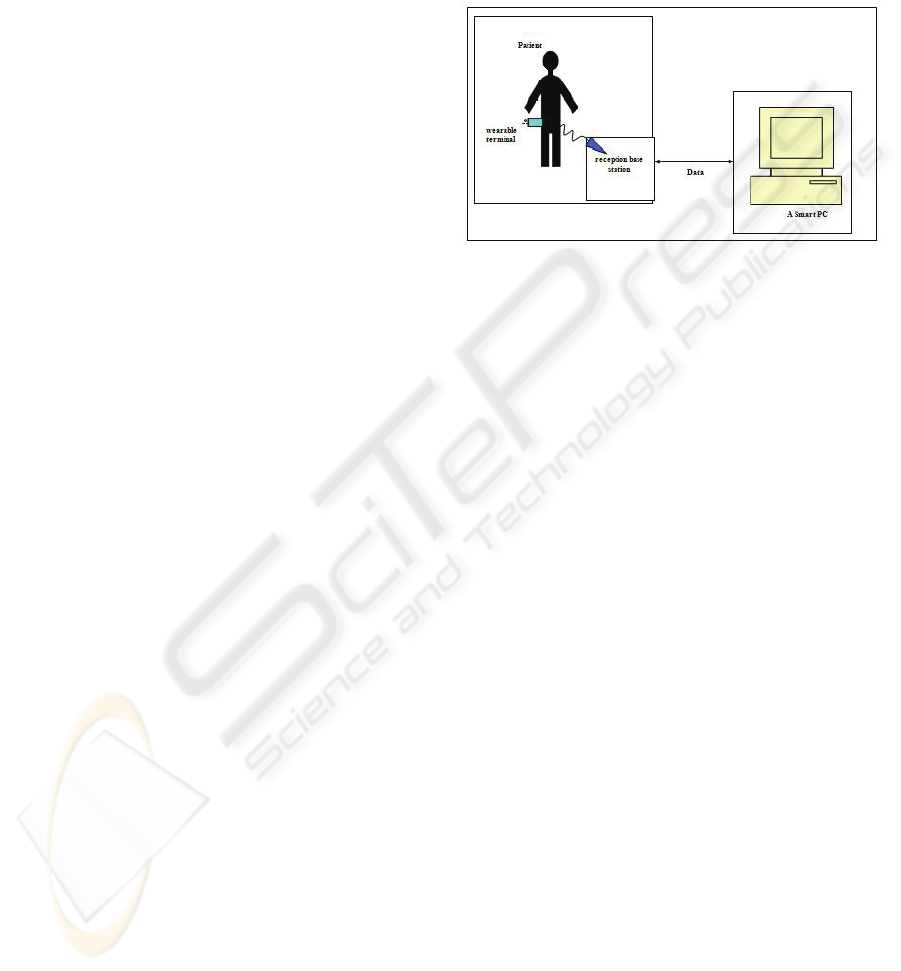

2.1 The Wearable Device (RFPAT)

The wearable device, named RFPAT, consists of two fundamental elements (Figure 2):

- A mobile terminal: a waist-worn device that the patient or elderly person clips, for instance, to a belt whenever at home. It measures the person's vital data and transmits them to a home reception station. In this article, the terms wearable device, mobile device and mobile terminal are used interchangeably to refer to the mobile terminal.
- A fixed reception base station: a receiver connected to a personal computer (PC). It receives vital signals from the patient's mobile terminal.

HEALTHINF 2009 - International Conference on Health Informatics

All the data gathered from the different RFPAT sensors are processed within the wireless wearable device. To maximise its battery life, we designed it with low-consumption electronic components. Specifically, the circuit architecture is based on separate microcontrollers devoted to acquisition, signal processing and emission. Hence, the mobile wearable terminal encapsulates several signal acquisition and processing modules:

- It records various physiological and actimetric signals.
- It pre-processes the signals in order to reduce the impact of environmental noise or user-motion noise.

This latter point is an important issue for in-home healthcare monitoring, because monitoring a person in ambulatory mode is a difficult task.

For the RFPAT system, we chose to address the noise problem at the acquisition stage. Digital noise reduction filters and algorithms were therefore implemented within the portable device and applied to all acquired signals: movement data, posture data and, in particular, the pulse signal (heart rate).

Movement data describe the movement of the monitored person and provide information such as ‘she is lying’, ‘she is immobile’ or ‘she is sitting/standing up’. They also include the percentage of movement, that is, the total duration of the person's movements within each 30-second time slot, expressed from 0 to 100%. The posture data indicate whether the person is standing up or lying down.
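As an illustration of the percentage of movement, the following minimal sketch scores consecutive time slots from a sequence of movement flags. The internal sampling of the RFPAT actimetric sensor is not given in the paper, so the one-flag-per-second input and the names `movement_percentage` and `slot_percentages` are assumptions made only for this example.

```python
def movement_percentage(active_flags):
    """Return the share of samples flagged as movement, in percent (0 to 100)."""
    if not active_flags:
        raise ValueError("a time slot needs at least one sample")
    moving = sum(1 for flag in active_flags if flag)
    return 100.0 * moving / len(active_flags)


def slot_percentages(flags, samples_per_slot):
    """Split the flags into consecutive full slots and score each slot."""
    full_length = len(flags) - len(flags) % samples_per_slot
    return [
        movement_percentage(flags[start:start + samples_per_slot])
        for start in range(0, full_length, samples_per_slot)
    ]


# Assumed input: one boolean flag per second, hence 30 flags per 30-second slot.
one_minute = [True] * 15 + [False] * 15 + [False] * 30
print(slot_percentages(one_minute, samples_per_slot=30))
```

Trailing samples that do not fill a complete slot are ignored here, which is one possible design choice among others.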

The posture data are a useful measurement that tells us about the person's activity. Thanks to an actimetric system embedded in the portable device, we can detect situations where the person approaches the ground very quickly. This information is interpreted as a ‘fall’ when the acceleration crosses a certain threshold in a given situation.
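The threshold rule described above could be sketched as follows. The paper specifies neither the threshold value nor the "given situation", so the 2.0 g threshold, the requirement that a lying posture follows the crossing, and the names `Sample` and `detect_fall` are all hypothetical choices for illustration, not the RFPAT algorithm.

```python
from dataclasses import dataclass

FALL_THRESHOLD_G = 2.0  # hypothetical value; the paper does not state the threshold


@dataclass
class Sample:
    accel_g: float  # acceleration magnitude in g (assumed unit)
    lying: bool     # posture flag: True when the wearer is lying down


def detect_fall(samples, threshold_g=FALL_THRESHOLD_G):
    """Return the index of the first upward threshold crossing that is
    followed (or accompanied) by a lying posture, or None if there is none."""
    for i in range(1, len(samples)):
        crossed = samples[i - 1].accel_g <= threshold_g < samples[i].accel_g
        if crossed and any(s.lying for s in samples[i:]):
            return i
    return None


trace = [Sample(1.0, False), Sample(2.6, False), Sample(1.0, True)]
print(detect_fall(trace))
```

Requiring a posture change in addition to the acceleration peak is one plausible way to avoid flagging abrupt but harmless movements.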

The pulse signal is delivered by a photoplethysmographic sensor connected to the wearable device. After pre-conditioning and algorithmic denoising, it provides the heart rate every 30 seconds.

In ambulatory mode, the main challenge is noise reduction. In (Baldinger et al., 2004), we managed to reduce the variation of the pulse measurement to below 5% with one-minute averaging, which complies with the recommendations of medical professionals.

Data gathered from the different sensors are transmitted, via an electronic signal conditioner, to a low-power microcontroller-based computing unit embedded in the mobile terminal.

Figure 2: RFPAT module configuration.

A fall-impact detector is currently being added to this system in order to make the detection of falls more specific.

2.2 The Smart Sound Sensor

In-home healthcare devices face a real problem of acceptance by end users and caregivers. Sound sensors are easily accepted by care receivers and their families because they are considered less intrusive than cameras, smart T-shirts, etc. In order to preserve the care receiver's privacy while ensuring his protection and safety, we propose to equip his house with several microphones. In this context, the environmental sound is not continuously recorded. The microphone array allows remote monitoring of the acoustic environment of the monitored person. The main advantage of this system is that it operates in real time (Istrate et al., 2006a). Hence, we continuously ‘listen’ to the sound environment in order to detect distress situations and distress calls. This smart sound sensor, described in (Istrate et al., 2006b), is made up of four modules, as depicted in Figure 3.

2.2.1 M1 Module: Sound Event Detection and Extraction

The first module, M1, listens continuously to the sound environment in order to detect and extract useful sounds or speech. The signal extracted by the M1 module is then processed by the M2 module.


2.2.2 M2 Module: Sound/Speech Classification Module

The second module, M2, performs low-level classification. It processes the signal received from module M1 in order to separate speech from non-speech sounds.

Figure 3: ANASON smart sensor.

2.2.3 M3 Module: High-stage Classification

This module operates within each class determined by the M2 module. It consists of two sub-modules. When module M2 labels the signal as sound, the sound recognition sub-module M3.1 classifies it into one of eight predefined sound classes. When the signal is labelled as speech, it is analyzed by a speech recognition engine in order to detect distress sentences (M3.2 module).

In both cases, if an alarm situation is identified (the sound or the sentence is classified into an alarm class), this information is sent to the data fusion system, which checks whether the other sensors (RFPAT and GARDIEN) have also detected an alarm.
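The routing between the M2 and M3 modules can be summarised by the minimal sketch below. The classifiers themselves are passed in as plain callables, and the alarm classes and distress sentence listed here are hypothetical placeholders: the paper states that there are eight sound classes but does not name them in this section.

```python
# Hypothetical labels, used only to make the sketch runnable.
ALARM_SOUND_CLASSES = {"scream", "glass_breaking"}
DISTRESS_SENTENCES = {"help me"}


def analyse_event(event, is_speech, classify_sound, recognise_speech):
    """Route an event extracted by M1 through M2 and M3.

    is_speech        -- M2: returns True for speech, False for other sounds
    classify_sound   -- M3.1: returns a sound class label
    recognise_speech -- M3.2: returns the recognised sentence
    Returns a (label, alarm) pair; alarm is True when the label is an alarm class.
    """
    if is_speech(event):
        sentence = recognise_speech(event)
        return sentence, sentence in DISTRESS_SENTENCES
    sound_class = classify_sound(event)
    return sound_class, sound_class in ALARM_SOUND_CLASSES


# Stub classifiers stand in for the real signal-processing modules.
label, alarm = analyse_event(
    event=b"raw-audio",
    is_speech=lambda e: False,
    classify_sound=lambda e: "glass_breaking",
    recognise_speech=lambda e: "",
)
print(label, alarm)
```

Only events with `alarm` set to True would be forwarded to the data fusion system in this sketch.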

2.3 The Infrared Motion Sensor (GARDIEN)

In-home healthcare monitoring systems have to solve an important privacy issue. When developing our multi-modal platform, we chose the monitoring modules so that they are as unintrusive as possible for the monitored elderly person (Banerjee et al., 2003). We equipped our test apartment with infrared sensors, which have two functions:

- Localize the person at home: the sensors are activated by the presence of the person in a certain room. Only the living rooms and the bedroom are equipped.
- Detect the vertical position of the person: a specific infrared sensor is installed in the living room and/or the kitchen in order to detect whether the monitored person is standing up or not. In practice, it detects movement at a fixed height of one and a half meters.

Figure 4: GARDIEN system.

The second function is useful to confirm or refute a fall detection from the RFPAT or the ANASON module. Together, these sensors and the software and hardware used to perform the localization and the vertical position detection are called GARDIEN (Steenkeste et al., 1999). Figure 4 represents the GARDIEN system.
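One way to picture this confirmation step is the sketch below. It assumes that a fall alarm is confirmed when the standing-height sensor sees no movement during a window after the alarm, and refuted otherwise. This rule, the 60-second window and the name `confirm_fall` are assumptions for illustration; the paper does not describe the actual decision logic.

```python
def confirm_fall(alarm_time_s, vertical_detections_s, window_s=60.0):
    """Return True when the fall alarm is confirmed.

    alarm_time_s          -- time of the alarm raised by another modality (seconds)
    vertical_detections_s -- times at which the standing-height sensor fired
    window_s              -- hypothetical observation window after the alarm
    The alarm is refuted if the person is seen standing within the window.
    """
    seen_standing = any(
        alarm_time_s < t <= alarm_time_s + window_s
        for t in vertical_detections_s
    )
    return not seen_standing


print(confirm_fall(100.0, [90.0, 250.0]))  # no standing movement in the window
print(confirm_fall(100.0, [120.0]))        # standing movement 20 s after the alarm
```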

2.4 General Interface of the Multimodal Platform (EMUTEM)

In order to configure and manage the three modalities described above, we developed a platform that offers several functions and can easily be used by a caregiver or even by members of the monitored person's family. At present, this platform is only used to control and synchronise the different data acquisition processes. The front panel of the developed platform is presented in Figure 5.


Figure 5: EMUTEM platform front panel.

Thanks to this platform, the platform manager can supervise the multimodal data acquisition stage.

The platform manager must first select the modality to record and then configure its parameters if needed. For the RFPAT and GARDIEN systems, only the IP address and the TCP/IP port number need to be specified. For the ANASON sensor, the manager selects the sound card to be used for data analysis and recording (if several are available), and also specifies the sampling rate and the location of the backup file. In the final deployment, this configuration is done only once, when the system is first installed in the home of the patient or elderly person.
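The configuration parameters listed above can be grouped as in the following minimal sketch. The class names, field names and example values (addresses, ports, sound card name, file path) are hypothetical; only the kinds of parameter come from the text.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkSensorConfig:
    """Settings for a modality reached over TCP/IP (RFPAT or GARDIEN)."""
    name: str
    ip_address: str
    tcp_port: int

    def __post_init__(self):
        ipaddress.ip_address(self.ip_address)  # raises ValueError if malformed
        if not 1 <= self.tcp_port <= 65535:
            raise ValueError(f"invalid TCP port: {self.tcp_port}")


@dataclass(frozen=True)
class SoundSensorConfig:
    """Settings for the ANASON sound modality."""
    sound_card: str
    sampling_rate_hz: int
    backup_file: str


# Example values are placeholders, not the authors' settings.
setup = [
    NetworkSensorConfig("RFPAT", "192.168.0.10", 5000),
    NetworkSensorConfig("GARDIEN", "192.168.0.11", 5001),
    SoundSensorConfig("default", 16_000, "backup/sound.wav"),
]
print(setup)
```

Validating the address and port when the object is created reflects the fact that the configuration is meant to be entered once, at installation time.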

3 DATA ACQUISITION

3.1 Data Acquisition Protocol

Data acquired with the different systems described above are stored on the embedded Master PC in a folder with a specific name. In our case, the folder name is an identification code number for the patient. Each recording consists of five files corresponding to the different modalities:

- A personal table named personnel.xml, which contains the patient's identifier and some personal information such as age, native language, usual drug treatment, etc. All data relating to the care receiver are protected for his privacy, and their use and transmission are subject to his consent.
- A descriptive file named scenario.xml, which describes the reference scenario. This file is stored during the test phase of the platform and is later used to analyse the performance of each modality.
- A sound file, which contains sound data saved in real time in a wav file with 16-bit resolution and a sampling rate of 16 kHz, a frequency commonly used for speech applications.
- A clinical data file, which contains physiological and motion data acquired from RFPAT. It stores information about the patient's posture (lying down or upright/seated), agitation (between 0% and 100%), heart rate, fall events and emergency calls. The acquisition sample rate is 0.03 Hz (approximately one sample every 30 seconds).
- A motion data file, acquired every 500 ms by the GARDIEN subsystem and saved in a separate, purpose-built text file format. Each line of this file lists the infrared sensors that are triggered (represented by hexadecimal numbers from 1 to D) together with the corresponding date and time.
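For readers who want to produce a file with the same audio parameters as the sound file in the list above, the sketch below writes a 16-bit, 16 kHz wav file with Python's standard `wave` module. The number of channels is not stated in the paper, so mono is an assumption here, and the test tone and the name `write_test_tone` are purely illustrative.

```python
import math
import struct
import wave

SAMPLE_RATE_HZ = 16_000   # sampling rate given in the text
SAMPLE_WIDTH_BYTES = 2    # 16-bit resolution given in the text
CHANNELS = 1              # assumption: mono, not stated in the paper


def write_test_tone(path, seconds=1.0, freq_hz=440.0):
    """Write a sine tone as a 16-bit, 16 kHz wav file."""
    n_frames = int(seconds * SAMPLE_RATE_HZ)
    frames = b"".join(
        struct.pack(
            "<h",  # little-endian signed 16-bit sample
            int(0.5 * 32767 * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE_HZ)),
        )
        for i in range(n_frames)
    )
    with wave.open(path, "wb") as wav:
        wav.setnchannels(CHANNELS)
        wav.setsampwidth(SAMPLE_WIDTH_BYTES)
        wav.setframerate(SAMPLE_RATE_HZ)
        wav.writeframes(frames)
```

At these settings, one second of mono audio occupies 32 000 bytes of sample data.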

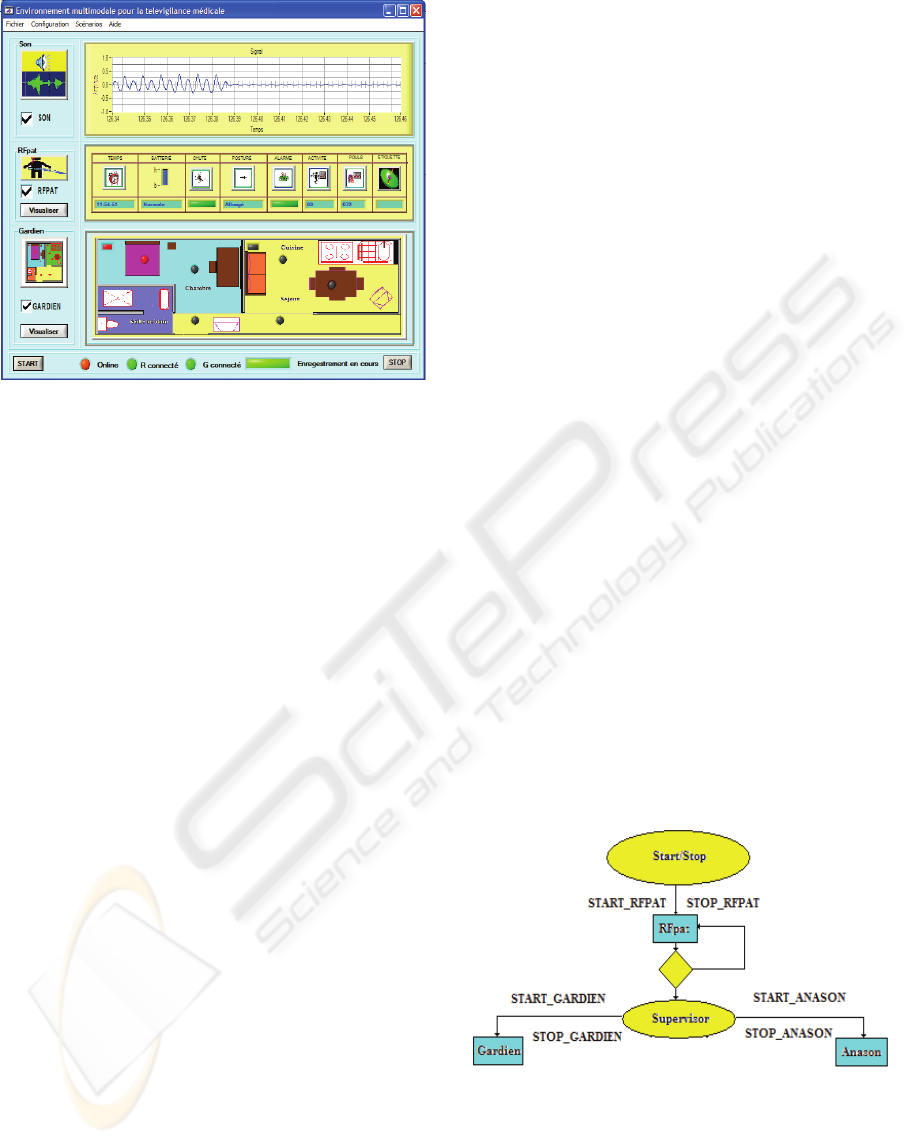

As the signals acquired by the different modalities (ANASON, RFPAT and GARDIEN) have different sample rates, we developed a synchronisation procedure to make the acquisition protocol synchronous. This operation, depicted in Figure 6, uses the TCP/IP protocol. The RFPAT modality is launched first because of its low acquisition rate. The supervisor software then launches the GARDIEN and ANASON applications with TCP/IP commands (Figure 6).

Figure 6: Synchronisation operation between our three proposed modalities.
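To make the difference in sample rates concrete, the following sketch uses the rates quoted in Section 3.1 (16 kHz for sound, one GARDIEN record every 500 ms, 0.03 Hz for RFPAT). Ordering the launch by increasing rate reproduces "RFPAT first"; the relative order of GARDIEN and ANASON is an assumption of this sketch, since the paper only says they are launched afterwards. The function names are hypothetical.

```python
# Rates taken from the text; 500 ms between GARDIEN records corresponds to 2 Hz.
SAMPLE_RATES_HZ = {"ANASON": 16_000.0, "RFPAT": 0.03, "GARDIEN": 2.0}


def launch_order(rates_hz):
    """Return the modality names sorted from slowest to fastest."""
    return sorted(rates_hz, key=rates_hz.get)


def samples_in_window(rates_hz, window_s):
    """Return how many samples each modality produces in window_s seconds."""
    return {name: rate * window_s for name, rate in rates_hz.items()}


print(launch_order(SAMPLE_RATES_HZ))
print(samples_in_window(SAMPLE_RATES_HZ, window_s=100.0))
```

Over the same 100-second window, the slowest modality yields a handful of records while the sound modality yields more than a million samples, which is why a common time reference is needed before the data can be fused.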

3.2 EMUTEM Database Recording

In order to evaluate the performance of the EMUTEM platform precisely, we first use it to record a multimodal medical database. The multimodal database acquisition software described above provides a helpful and well-targeted application for developing and assessing data fusion-based decision methods. The low-level data recorded by our system will be useful for developing the processing algorithms of each modality and the strategies for combining them.

In order to index our multimodal database, we have adopted the SAM standard indexing file (Well et al., 1992), generally used for describing speech databases. The SAM labelling of a sound file records information about the file and describes it by delimiting the useful part to be used for content analysis and processing. A corresponding indexation file is created for each modality of the database. We have adapted this type of file to the specifics of each modality and have added another indexation file for the entire database. This conceptual indexation model is guided by a priori knowledge and by the reference scenarios. The aim is to obtain reference information for our multimodal database, and thereby to build a novel type of database for validating signal processing techniques for each modality and multimodal data fusion algorithms.

To date, we have enriched our database with several scenarios played by actors. We already have permission from a smart home designer to install our platform in his facilities, which are apartments inhabited by elderly people. This will allow us to better evaluate our system and to record real data.

4 CONCLUSIONS AND FUTURE WORK

During this first step of our collaborative research work, we developed a multimodal platform that performs in-home healthcare monitoring and, in particular, the detection and prediction of distress situations. We combined three different modalities in order to ensure the security of elderly people in a comfortable, non-intrusive way. We propose a wearable device able to acquire and process physiological signals, a smart sound sensor that analyses the environmental sounds of the home in order to detect distress situations and sentences, and an infrared sensor array that localizes the person at home and detects the person's vertical position.

We are currently developing several techniques to fuse the outputs of these systems. Our ultimate goal is to make this in-home healthcare system more robust against false alarms and undetected hazardous situations. This platform could help medical staff take the right decision about the person's situation even when they are at a distance.

ACKNOWLEDGEMENTS

The authors gratefully acknowledge the contribution of the French National Research Agency (ANR), QuoVADis Project.

REFERENCES

Baldinger, J., Boudy, J., Dorizzi, Levrey, J., Andreao, R., Perpre, C., et al. (2004). Tele-surveillance system for patient at home: The medeville system. ICCHP.

Banerjee, S., Steenkeste, F., Couturier, P., Debray, M., & Franco, A. (2003). Telesurveillance of elderly patients by use of passive infra-red sensors in a smart home. Telemed-Telecare.

Istrate, D., Castelli, E., Vacher, M., Besacier, L., & Serignat, J. (2006a). Information extraction from sound for medical telemonitoring. IEEE Trans. on Information Technology in Biomedicine, (pp. 264-274).

Istrate, D., Vacher, M., & Serignat, F. (2006b). Generic implementation of a distress sound extraction system for elder care. IEEE EMBS, (pp. 3309-3312). New York.

Reynolds, D. (1995). Speaker identification and verification using Gaussian mixture speaker models. Speech Communication, 91-108.

Schwarz, G. (1978). Estimating the dimension of a model. Annals of Statistics, 461-464.

Steenkeste, F., Bocquet, H., Chan, M., & Vellas, B. (1999). Remote monitoring system for elders in a geriatric hospital. International Conference on aging. Arlington.

Well, D., Barry, J., Grice, W., Fourcin, M., & Gibbon, A. (1992). SAM ESPRIT PROJECT 2589 - multilingual speech input/output assessment, methodology and standardization. University College London: Final report. Technical Report SAM-UCLG004.
