HUMAN SKIN COLOR DETECTION AND APPLICATION TO ADULT IMAGE DETECTION

Ryszard S. Choraś

Institute of Telecommunications, University of Technology & Life Sciences

Kaliskiego Street 7, 85-796 Bydgoszcz, Poland

Keywords: Skin color, YCbCr color space, skin likelihood image, adult images.

Abstract: This paper addresses the detection of adult images. Detection methods focus mainly on the detection and identification of skin regions, so skin detection is of paramount importance. Our algorithm is designed to detect human skin color in the YCbCr color space. The proposed system finds skin regions and then generates the skin likelihood image. Since the skin likelihood image contains shape information as well as skin color information, we use it to classify adult images.

1 INTRODUCTION

The rapid development in the field of digital media

has exposed us to huge amounts of non-textual in-

formation such as audio, video, and images. The re-

trieval and classification techniques have been and are

continuously being developed and improved to facili-

tate the exchange of relevant information.

However, images may also carry nude content. Effective filtering and blocking of such images is of paramount importance in wide-area computer networks (e.g. the Internet). An image analysis technique is needed in order to classify adult (nude, objectionable) images and subsequently block access to objectionable sites.

The detection of images containing nudity and pornography is based on the identification of human skin, usually using color, with texture as an additional feature (Fleck, M.M., Forsyth, D.A., Bregler, C., 1996), (Jones, M.J., Rehg, J.M., 1998), (Chan, Y., Harvey, R., Bagham, J., 2000).

There is an extensive literature (J.Z. Wang, J. Li, G. Wiederhold, O. Firschein, 1998), (A. Bosson, G. C. Cawley, Y. Chan and R. Harvey, 2002) on the detection of naked images using features such as color histograms, texture measures and shape measures. Bosson et al. find skin blobs and then compute the area, centroid, lengths of the axes of a fitted ellipse, eccentricity, solidity, and extent of each skin blob.

The skin color model used by Fleck et al. (Fleck,

M.M., Forsyth, D.A., Bregler, C., 1996) consists of

a manually specified region in a log-opponent color

space. Detected regions of skin pixels form the input

to a geometric filter based on skeletal structure.

Skin-color detection has been employed in many

applications such as face detection, gesture recogni-

tion, human tracking, pornographic image filtering,

etc.

Skin color has proven to be a useful and robust cue for face detection, localization and tracking. Numerous techniques for skin color modeling and recognition have been proposed in recent years. Most existing skin segmentation techniques classify individual image pixels into skin and non-skin categories on the basis of pixel color.

The main goal of skin color detection is to build

a decision rule that will discriminate between skin

and non-skin pixels. Identifying skin colored pixels

involves finding the range of values for which most

skin pixels would fall in a given color space. Various

color spaces are used for processing digital images.

For some purposes, one color space may be more ap-

propriate than others.

Jiao et al. (F. Jiao, W. Gao, L. Duan, G. Cui, 2001) presented an adult image detection method. They first use a skin color model to detect naked skin areas roughly. A Gabor filter is then applied to remove non-skin pixels. Finally, several representative features derived from naked images are extracted to verify these skin areas and detect adult images.

S. Choraś R. (2008).
HUMAN SKIN COLOR DETECTION AND APPLICATION TO ADULT IMAGE DETECTION.
In Proceedings of the International Conference on Signal Processing and Multimedia Applications, pages 388-392
DOI: 10.5220/0001939003880392
Copyright © SciTePress

2 COLOR

Each image is represented using three primaries of the

color space chosen. Most digital images are stored in

RGB color space. RGB color space is represented

with red (R), green (G), and blue (B) primaries and

is an additive system. RGB color space is not per-

ceptually uniform, which implies that two colors with

larger distance can be perceptually more similar than

another two colors with smaller distance, or simply

put, the color distance in RGB space does not repre-

sent perceptual color distance.

RGB is one of the most widely used color spaces. However, the high correlation between channels, significant perceptual non-uniformity, and mixing of chrominance and luminance data make RGB a poor choice for color analysis and color-based recognition algorithms.

Normalized RGB is a representation that is easily obtained from the RGB values by a simple normalization procedure: $r = \frac{R}{R+G+B}$; $g = \frac{G}{R+G+B}$; $b = \frac{B}{R+G+B}$. The three normalized components r, g and b are called pure colors; they contain no information about the luminance. Because $r + g + b = 1$, the third component does not hold any significant information and can be omitted, reducing the space dimensionality. It is enough to use only the two components r and g to completely describe the skin color space.
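The normalization can be illustrated with a short Python sketch. The function name `normalized_rgb` and the handling of a black pixel (where the sum is zero and the chromaticity is undefined) are assumptions made for this example only.

```python
from typing import Tuple


def normalized_rgb(r: int, g: int, b: int) -> Tuple[float, float, float]:
    """Return the normalized (r, g, b) components of one RGB pixel."""
    total = r + g + b
    if total == 0:
        # Black pixel: chromaticity is undefined. Returning equal shares
        # is an assumption of this sketch, not part of the paper.
        return (1.0 / 3.0, 1.0 / 3.0, 1.0 / 3.0)
    return (r / total, g / total, b / total)


# The third component carries no extra information: b = 1 - r - g.
r_n, g_n, b_n = normalized_rgb(200, 120, 80)
print(r_n, g_n, b_n, 1.0 - r_n - g_n)
```

Only `r_n` and `g_n` need to be stored, since the blue share can always be recovered from them.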

YCbCr is an encoded nonlinear RGB signal used in image compression work. Color is represented by luminance Y, computed from nonlinear RGB (Poynton, 1995) as a weighted sum of the RGB values, and two color difference values $C_r$ and $C_b$ that are formed by subtracting luminance from the RGB red and blue components.

The YCbCr color space was defined in response to increasing demands for digital algorithms for handling video information, and has since become a widely used model in digital video. It belongs to the family of television transmission color spaces. These color spaces separate RGB into luminance and chrominance information.

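$$
\begin{aligned}
Y &= 0.299R + 0.587G + 0.114B \\
C_r &= 0.713(R - Y) \\
C_b &= 0.564(B - Y)
\end{aligned}
\tag{1}
$$

For 8-bit images an offset of 128 is conventionally added to $C_r$ and $C_b$; the terms $(C_r - 128)$ and $(C_b - 128)$ in equation (2) and the chrominance ranges in Section 3.1 assume this offset.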
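A minimal Python sketch of equation (1), assuming 8-bit RGB input. It also assumes the customary offset of 128 on both chrominance values; that offset is not part of equation (1) as printed, but it is implied by the $(C_r - 128)$ terms of equation (2) and by the ranges used in Section 3.1. The names `rgb_to_ycbcr` and `CHROMA_OFFSET` are hypothetical.

```python
from typing import Tuple

CHROMA_OFFSET = 128.0  # assumed 8-bit convention, see lead-in


def rgb_to_ycbcr(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Convert one RGB pixel to (Y, Cb, Cr) following equation (1)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = 0.713 * (r - y) + CHROMA_OFFSET
    cb = 0.564 * (b - y) + CHROMA_OFFSET
    return y, cb, cr


# A reddish, skin-like pixel and a neutral gray pixel.
print(rgb_to_ycbcr(200, 120, 80))
print(rgb_to_ycbcr(128, 128, 128))
```

For a gray pixel both color differences vanish, so $C_b$ and $C_r$ sit at the offset value.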

HSI, HSV, HSL (Hue, Saturation, Intensity/Value/Lightness) color spaces describe color with intuitive values. Hue defines the dominant color (such as red, green, purple and yellow) of an area; saturation measures the colorfulness of an area in proportion to its brightness (Poynton, 1995). The "intensity", "lightness" or "value" is related to the color luminance. The intuitiveness of the color space components and the explicit discrimination between luminance and chrominance properties made these color spaces popular in work on skin color segmentation (Zarit, B.D., Super, B.J., Quek, F.K.H., 2002), (Sigal, L., Sclaroff, S., Athitsos, V., 2000).

$$
H = \arcsin\left(\frac{C_r - 128}{128 \cdot S}\right); \quad V = \frac{Y}{256}; \quad S = \frac{\sqrt{(C_r - 128)^2 + (C_b - 128)^2}}{128}
\tag{2}
$$
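Equation (2) can be sketched in Python as follows. The function name `ycbcr_to_hsv` is hypothetical, and two details are assumptions of this sketch: hue is set to 0 for a gray pixel (where S = 0 and the quotient is undefined), and the arcsine argument is clamped to [-1, 1] to absorb rounding error.

```python
import math
from typing import Tuple


def ycbcr_to_hsv(y: float, cb: float, cr: float) -> Tuple[float, float, float]:
    """Compute (H, S, V) from 8-bit Y, Cb, Cr following equation (2)."""
    s = math.hypot(cr - 128.0, cb - 128.0) / 128.0
    if s == 0.0:
        h = 0.0  # assumption: hue is undefined for gray, use 0
    else:
        ratio = (cr - 128.0) / (128.0 * s)
        h = math.asin(max(-1.0, min(1.0, ratio)))  # clamp against rounding
    v = y / 256.0
    return h, s, v


# A pixel whose chrominance lies purely along the Cr axis.
print(ycbcr_to_hsv(128.0, 128.0, 192.0))
```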

3 SKIN-COLOR MODEL

3.1 Explicitly Defined Skin Region

The statistical skin-color model is generated by means of supervised training, using a set of skin-color regions obtained from a color human body database. The images were obtained from people of different races, ages and genders, under varying illumination conditions.

One method to build a skin classifier is to define explicitly (through a number of rules) the boundaries of the skin cluster in some color space. For example, in (Peer, P., Kovac, J., Solina, F., 2003) a pixel (R, G, B) is classified as skin if:

R > 95 and G > 40 and B > 20 and

max{R, G, B} − min{R, G, B} > 15 and

|R − G| > 15 and R > G and R > B.
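The rule above translates directly into a predicate. The following Python sketch assumes 8-bit channel values; the function name `is_skin_rgb` is hypothetical.

```python
def is_skin_rgb(r: int, g: int, b: int) -> bool:
    """Explicit RGB skin rule quoted above (all conditions must hold)."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g
        and r > b
    )


print(is_skin_rgb(200, 120, 80))   # reddish, skin-like pixel
print(is_skin_rgb(100, 100, 100))  # neutral gray fails the spread test
```

Because every condition is a simple comparison, the rule can be evaluated per pixel without any training data.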

We have found that a skin-color region can be identified by the presence of a certain set of chrominance (i.e. $C_r$ and $C_b$) values that is narrowly and consistently distributed in the YCbCr color space. We denote by $R_{C_r}$ and $R_{C_b}$ the respective ranges of $C_r$ and $C_b$ values that correspond to skin color, which subsequently define our skin-color reference map. The ranges that we found to be the most suitable for all the input images that we have tested are $R_{C_r} = [133, 173]$ and $R_{C_b} = [77, 127]$. In our experiments, this map proved to be very robust against different types of skin color (Figure 1).

With this skin-color reference map, the color segmentation can now begin. Since we are utilizing only the color information, the segmentation requires only the chrominance components of the input image. The output of the color segmentation is a skin bitmap SM described as

$$
SM(x, y) =
\begin{cases}
1 & \text{if } C_r(x, y) \in R_{C_r} \text{ and } C_b(x, y) \in R_{C_b} \\
0 & \text{otherwise}
\end{cases}
\tag{3}
$$


Figure 1: The histogram of Y, $C_b$, $C_r$ components of white (a) and black (b) skin colors.

The output pixel at point (x, y) is classified as skin-color and set to 1 if both the $C_r$ and $C_b$ values at that point fall inside their respective ranges, $R_{C_r}$ and $R_{C_b}$. Otherwise, the pixel is classified as non-skin-color and set to 0 (Figure 2).
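The thresholding of equation (3) can be sketched in Python as below. The chrominance planes are represented as nested lists of 8-bit values, the ranges are treated as closed intervals, and the names `skin_map`, `CR_RANGE` and `CB_RANGE` are hypothetical.

```python
from typing import List, Sequence

CR_RANGE = (133, 173)  # skin range for Cr reported in the text
CB_RANGE = (77, 127)   # skin range for Cb reported in the text


def skin_map(cr_plane: Sequence[Sequence[float]],
             cb_plane: Sequence[Sequence[float]]) -> List[List[int]]:
    """Return the binary skin bitmap SM of equation (3)."""
    return [
        [
            1 if (CR_RANGE[0] <= cr <= CR_RANGE[1]
                  and CB_RANGE[0] <= cb <= CB_RANGE[1]) else 0
            for cr, cb in zip(cr_row, cb_row)
        ]
        for cr_row, cb_row in zip(cr_plane, cb_plane)
    ]


cr = [[150, 180], [133, 128]]
cb = [[100, 100], [127, 128]]
print(skin_map(cr, cb))
```

Only the two chrominance planes are read; the luminance plane plays no part in this step.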

Figure 2: Original image (a) and skin map (b).

Figure 3: Skin color distribution.

The skin color model based on $C_b$ and $C_r$ values can provide good coverage of all human races. Despite their different appearances, these skin types belong to the same cluster in the $C_b C_r$ plane (Figure 3). We classify the common skin colors of naked images, including various lighting conditions, into several categories. The corresponding compact chroma histogram of each category is compiled in advance.

3.2 Feature Extraction

The performance of naked image detection depends strongly on accurate skin segmentation. Since skin is very smooth, we use a roughness feature to reject non-skin objects with skin-like chroma.

3.2.1 Texture Feature from Gabor Wavelet Transform

To further refine the detected skin blobs we propose a skin color filter based on texture features, in which a Gabor filter eliminates non-skin pixels.

Gabor wavelet based texture is robust to orientation and illumination changes and is a powerful tool for extracting texture features. Gabor functions are Gaussians modulated by complex sinusoids. In two dimensions they take the form:

$$
g(x, y) = \left(\frac{1}{2\pi\sigma_x\sigma_y}\right) \exp\left[-\frac{1}{2}\left(\frac{x^2}{\sigma_x^2} + \frac{y^2}{\sigma_y^2}\right) + 2\pi j W x\right]
\tag{4}
$$

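$$
G(u, v) = \exp\left\{-\frac{1}{2}\left[\frac{(u - W)^2}{\sigma_u^2} + \frac{v^2}{\sigma_v^2}\right]\right\}
\tag{5}
$$

where $\sigma_u = \frac{1}{2\pi\sigma_x}$, $\sigma_v = \frac{1}{2\pi\sigma_y}$.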

Gabor wavelets can be obtained by appropriate di-

lations and rotations of g(x, y) through the generating

function:

$$
g_{mn}(x, y) = a^{-m} g(x', y'); \quad a > 1, \quad m = 0, 1, \ldots, S - 1
\tag{6}
$$

$$
x' = a^{-m}(x\cos\theta + y\sin\theta), \quad y' = a^{-m}(-x\sin\theta + y\cos\theta)
\tag{7}
$$

$$
\theta = \frac{n\pi}{K}
$$

where n is an integer orientation index and K is the number of orientations. Given an image I(x, y), its Gabor wavelet transform is calculated as:

$$
W_{mn}(x, y) = \sum_{x_1} \sum_{y_1} I(x_1, y_1)\, g_{mn}^{*}(x - x_1, y - y_1)
\tag{8}
$$

where $g^{*}$ is the complex conjugate of g. The texture feature is given as:

$$
T(x, y) = \sqrt{\sum_{m} \sum_{n} W_{mn}^{2}(x, y)}
\tag{9}
$$

In our experiment, we use three scales (m = 0, 1, 2)

and four orientations (n = 0, 1, 2, 3) (Figure 4).

Figure 4: Real (a) and imaginary (b) parts of Gabor wavelets and Gabor kernels with different orientations (c).

Once the texture image has been computed from equation (9), the texture mask image is obtained as:

$$
TM(x, y) =
\begin{cases}
1 & \text{if } T(x, y) \le th \\
0 & \text{if } T(x, y) > th
\end{cases}
\tag{10}
$$

where th is the texture threshold. Since human skin is smooth, its texture feature value is relatively low. Using the texture mask, pixels that resemble skin in color but have a high texture feature value are filtered out.
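The texture pipeline of equations (4) and (6) to (10) can be sketched in pure Python as below. This is an illustration under stated assumptions rather than the implementation used in the experiments: the values of `sigma_x`, `sigma_y`, `w`, `a` and the kernel half-width `half` are not given in the paper and are chosen arbitrarily here, the squared term in equation (9) is taken as the squared magnitude of the complex response, pixels outside the image are treated as zero, and all function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def gabor(x: float, y: float, sigma_x: float = 2.0,
          sigma_y: float = 2.0, w: float = 0.25) -> complex:
    """Mother Gabor function of equation (4); parameter values are assumed."""
    norm = 1.0 / (2.0 * math.pi * sigma_x * sigma_y)
    envelope = -0.5 * (x * x / sigma_x ** 2 + y * y / sigma_y ** 2)
    return norm * cmath.exp(envelope + 2j * math.pi * w * x)


def gabor_wavelet(x: float, y: float, m: int, n: int,
                  a: float = 2.0, k: int = 4) -> complex:
    """Dilated and rotated wavelet g_mn of equations (6) and (7)."""
    theta = n * math.pi / k
    scale = a ** (-m)
    xr = scale * (x * math.cos(theta) + y * math.sin(theta))
    yr = scale * (-x * math.sin(theta) + y * math.cos(theta))
    return scale * gabor(xr, yr)


def texture_feature(image: Sequence[Sequence[float]], px: int, py: int,
                    scales: int = 3, orientations: int = 4,
                    half: int = 4) -> float:
    """Texture value T(px, py) of equation (9) from a truncated sum (8)."""
    height, width = len(image), len(image[0])
    total = 0.0
    for m in range(scales):
        for n in range(orientations):
            response = 0j
            for dy in range(-half, half + 1):
                for dx in range(-half, half + 1):
                    x1, y1 = px - dx, py - dy
                    if 0 <= x1 < width and 0 <= y1 < height:
                        kernel = gabor_wavelet(dx, dy, m, n, k=orientations)
                        response += image[y1][x1] * kernel.conjugate()
            total += abs(response) ** 2  # squared magnitude (assumption)
    return math.sqrt(total)


def texture_mask(image: Sequence[Sequence[float]], th: float) -> List[List[int]]:
    """Texture mask TM of equation (10): 1 where T <= th, else 0."""
    return [
        [1 if texture_feature(image, x, y) <= th else 0
         for x in range(len(image[0]))]
        for y in range(len(image))
    ]


flat = [[0.0] * 3 for _ in range(3)]
spot = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
print(texture_mask(flat, 0.0))
print(texture_feature(spot, 1, 1))
```

The nested loops are slow on real images; a practical version would precompute the twelve kernels once and use a convolution routine.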

SIGMAP 2008 - International Conference on Signal Processing and Multimedia Applications


For each obtained skin color region (blob) we define a "rectangular box" with the aspect ratio ar defined as

$$
ar = \frac{|x_{right} - x_{left}|}{|y_{top} - y_{down}|}
\tag{11}
$$

where $x_{right}$, $x_{left}$ and $y_{top}$, $y_{down}$ denote the extreme (largest and smallest) x- and y-coordinates of the blob, respectively.

The rectangular box contains a skin color region as well as several non-skin color regions. The skin color ratio scr is defined as

$$
scr = \frac{\text{number of skin color pixels}}{\text{area of rectangular box}}
\tag{12}
$$
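Equations (11) and (12) can be sketched for a blob given as a collection of (x, y) pixel coordinates. The sketch assumes image coordinates with y growing downward and treats each pixel as a unit square, so the box edges lie at `min` and `max + 1`; this convention and the name `box_features` are assumptions made for illustration.

```python
from typing import Iterable, Tuple


def box_features(blob: Iterable[Tuple[int, int]]) -> Tuple[float, float]:
    """Return (ar, scr) of equations (11) and (12) for one skin blob."""
    pixels = set(blob)
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    # Box edges, with each pixel counted as a unit square (assumption).
    x_left, x_right = min(xs), max(xs) + 1
    y_top, y_down = min(ys), max(ys) + 1
    width = abs(x_right - x_left)
    height = abs(y_top - y_down)
    ar = width / height
    scr = len(pixels) / (width * height)
    return ar, scr


# A 2 x 4 box in which two corner pixels are not skin.
blob = [(0, 0), (0, 1), (1, 1), (0, 2), (1, 2), (0, 3)]
print(box_features(blob))
```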

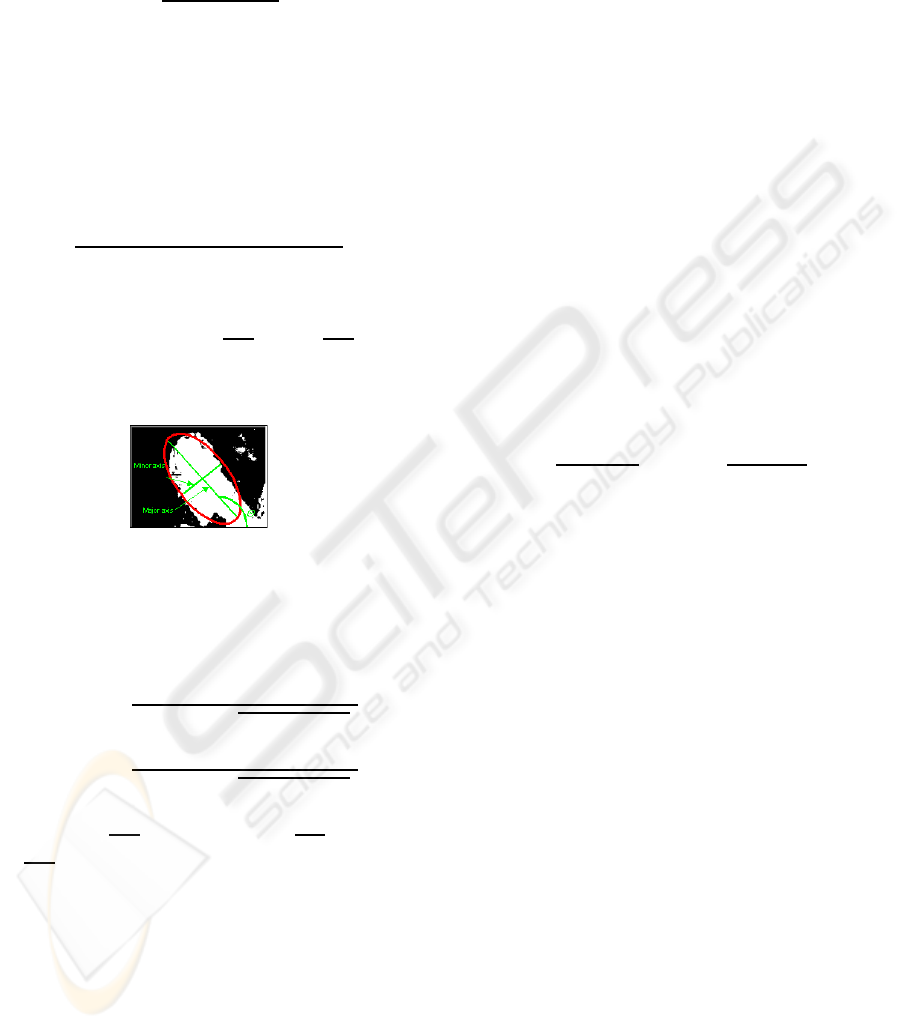

Next, we apply the ellipse model to skin body blobs (Figure 5). The central position $(x_c, y_c)$ of the blob is estimated as $x_c = \frac{M_{10}}{M_{00}}$; $y_c = \frac{M_{01}}{M_{00}}$, where $M_{00}$ is the zeroth-order moment and $M_{10}$, $M_{01}$ are the first-order moments calculated from the blob pixels.

Figure 5: Blob ellipse model.

The ellipse model for a candidate skin body region is defined by the area and the major and minor axes of the ellipse, given by the following equations

$$
axis_{major} = \sqrt{6\left(p + r + \sqrt{q^2 + (p - r)^2}\right)}
\tag{13}
$$

$$
axis_{minor} = \sqrt{6\left(p + r - \sqrt{q^2 + (p - r)^2}\right)}
\tag{14}
$$

where $p = \frac{M_{20}}{M_{00}} - x_c^2$, $q = 2\left(\frac{M_{11}}{M_{00}} - x_c y_c\right)$, $r = \frac{M_{02}}{M_{00}} - y_c^2$.

The parameters of the ellipse are used to decide

the body skin area.
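The moment-based ellipse fit can be sketched in Python for a blob given as a list of (x, y) pixel coordinates. In this sketch q is computed from the mixed moment $M_{11}$, which is the usual covariance term; that choice, the clamping of small negative values before the square root, and the function names are assumptions of the example.

```python
import math
from typing import Sequence, Tuple


def raw_moment(blob: Sequence[Tuple[int, int]], i: int, j: int) -> float:
    """Raw moment M_ij of a binary blob: sum of x^i * y^j over its pixels."""
    return float(sum((x ** i) * (y ** j) for x, y in blob))


def ellipse_axes(blob: Sequence[Tuple[int, int]]):
    """Return ((x_c, y_c), axis_major, axis_minor) per equations (13), (14)."""
    m00 = raw_moment(blob, 0, 0)
    xc = raw_moment(blob, 1, 0) / m00
    yc = raw_moment(blob, 0, 1) / m00
    p = raw_moment(blob, 2, 0) / m00 - xc ** 2
    q = 2.0 * (raw_moment(blob, 1, 1) / m00 - xc * yc)  # mixed moment (assumption)
    r = raw_moment(blob, 0, 2) / m00 - yc ** 2
    root = math.sqrt(q * q + (p - r) ** 2)
    major = math.sqrt(6.0 * (p + r + root))
    # Guard against tiny negative values caused by rounding.
    minor = math.sqrt(max(0.0, 6.0 * (p + r - root)))
    return (xc, yc), major, minor


# A horizontal run of five pixels: elongated along x, no extent along y.
line = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)]
print(ellipse_axes(line))
```

For the horizontal line the minor axis collapses to zero, which matches the intuition that the blob has no spread along y.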

The nudity detection algorithm works in the following manner:

1. Calculate the corresponding YCbCr values from the RGB values. Label each pixel as skin or non-skin and identify connected skin pixels to form skin regions.

2. Use the Gabor filter to refine the skin regions and eliminate non-skin pixels.

3. Define a "rectangular box" for the largest skin blobs and calculate ar and scr. Fit the skin blobs using a simple geometric shape such as an ellipse. Calculate the parameters of the ellipse.

4. If the percentage of skin pixels relative to the image size is less than 15 percent, the image is not nude. If ar and scr fall in the ranges ar = [0.35, 0.85] and scr = [0.75, 0.85], the image is nude.
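Two parts of the procedure above can be sketched in Python: grouping connected skin pixels into blobs (step 1) and the final decision (step 4). The sketch assumes 4-connectivity, which the paper does not specify, and it assumes that an image meeting neither stated condition is labeled not nude. The function names are hypothetical.

```python
from collections import deque
from typing import List, Sequence, Tuple


def connected_blobs(skin: Sequence[Sequence[int]]) -> List[List[Tuple[int, int]]]:
    """Group skin pixels (value 1) into 4-connected blobs, largest first."""
    height, width = len(skin), len(skin[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y in range(height):
        for x in range(width):
            if skin[y][x] != 1 or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(x, y)])
            blob = []
            while queue:  # breadth-first flood fill
                cx, cy = queue.popleft()
                blob.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and skin[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append(blob)
    return sorted(blobs, key=len, reverse=True)


def is_nude(skin: Sequence[Sequence[int]], ar: float, scr: float) -> bool:
    """Step 4: skin percentage test, then the ar and scr range tests."""
    total = len(skin) * len(skin[0])
    skin_fraction = sum(sum(row) for row in skin) / total
    if skin_fraction < 0.15:
        return False
    # Images outside the stated ranges are treated as not nude (assumption).
    return 0.35 <= ar <= 0.85 and 0.75 <= scr <= 0.85


skin = [[1, 1, 0], [0, 0, 0], [0, 0, 1]]
print([len(b) for b in connected_blobs(skin)])
print(is_nude(skin, ar=0.5, scr=0.8))
```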

4 EXPERIMENTAL RESULTS AND CONCLUSIONS

In this paper, we use the color information of an image to detect the skin regions in nude images. Because the shape and texture information of the image is also useful, it is necessary for classifying nude images.

The detection rate and false alarm rate are expressed as percentages and are defined in equation (15):

$$
DR = \frac{TP}{TP + FN}; \quad FAR = \frac{FP}{TP + FP}
\tag{15}
$$

where TP = True Positive, FP = False Positive and FN = False Negative.
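As a small worked example of equation (15), the following Python sketch computes both rates from raw counts. The counts used below are hypothetical and chosen only to make the arithmetic easy to follow; they are not the counts from the experiments.

```python
def detection_rate(tp: int, fn: int) -> float:
    """DR = TP / (TP + FN), as in equation (15)."""
    return tp / (tp + fn)


def false_alarm_rate(tp: int, fp: int) -> float:
    """FAR = FP / (TP + FP), as in equation (15)."""
    return fp / (tp + fp)


# Hypothetical counts: 93 hits, 7 misses, 7 false detections.
print(f"DR  = {detection_rate(93, 7):.0%}")
print(f"FAR = {false_alarm_rate(93, 7):.0%}")
```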

The detection rate and false alarm rate for the testing set are DR = 93% and FAR = 7%, respectively. These results show that the proposed segmentation algorithm can provide more efficient face segmentation, and that it is less affected by fast- or slow-moving objects in video sequences.

REFERENCES

A. Bosson, G. C. Cawley, Y. Chan and R. Harvey (2002). Non-retrieval: blocking pornographic images. In Proceedings of the International Conference on the Challenge of Image and Video Retrieval, volume 2383 of Lecture Notes in Computer Science, pages 50–60. Springer, London.

Chan, Y., Harvey, R., Bagham, J. (2000). Using colour fea-

tures to block dubious images. In Proc. Eusipco.

F. Jiao, W. Gao, L. Duan, G. Cui (2001). Detecting adult image using multiple features. In Proceedings of IEEE International Conference on Info-tech and Info-net, pages 378–383.

Fleck, M.M., Forsyth, D.A., Bregler, C. (1996). Finding naked people. In European Conference on Computer Vision, volume II, pages 593–602. Springer-Verlag.


Jones, M.J., Rehg, J.M. (1998). Statistical color models

with application to skin detection. Technical Report

CRL 98/11 11, Compaq Cambridge Research Labora-

tory.

J.Z. Wang, J. Li, G. Wiederhold, O. Firschein (1998). Sys-

tem for screening objectionable images. Computer

Communications, 21(15):1355–1360.

Peer, P., Kovac, J., Solina, F. (2003). Human skin colour

clustering for face detection. In EUROCON 2003 -

International Conference on Computer as a Tool.

Poynton, C. A. (1995). Frequently asked questions about colour. In ftp://www.inforamp.net/pub/users/poynton/doc/colour/.

Sigal, L., Sclaroff, S., Athitsos, V. (2000). Estimation and

prediction of evolving color distributions for skin seg-

mentation under varying illumination. In Proc. IEEE

Conf. on Computer Vision and Pattern Recognition,

volume 2, pages 152–159.

Zarit, B.D., Super, B.J., Quek, F.K.H. (2002). Comparison

of five color models in skin pixel classification. In

ICCV99 Intl Workshop on recognition, analysis and

tracking of faces and gestures in Real-Time systems,

pages 58–63.
