A GENERIC ARCHITECTURE FOR A COMPANION ROBOT

∗

Bas R. Steunebrink, Nieske L. Vergunst, Christian P. Mol, Frank P. M. Dignum

Mehdi Dastani and John-Jules Ch. Meyer

Intelligent Systems Group, Institute of Information and Computing Sciences

Utrecht University, The Netherlands

Keywords:

Social robot, architecture.

Abstract:

Despite much research on companion robots and affective virtual characters, a comprehensive discussion on a

generic architecture is lacking. We compile a list of possible requirements of a companion robot and propose

a generic architecture based on this list. We explain this architecture to uncover issues that merit discussion.

The architecture can be used as a framework for programming companion robots.

1 INTRODUCTION

Recently, research in companion robots and affective

virtual characters has been increasing steadily. Com-

panion robots are supposed to exhibit sociable be-

havior and perform several different kinds of tasks

in cooperation with a human user. Typically, they

should proactively assist users in everyday tasks and

engage in intuitive, expressive, and affective interac-

tion. Moreover, they usually have multiple sensors

and actuators that allow for rich communication with

the user. Of course, the task of designing and building

a companion robot is highly complex.

Companion robots and affective virtual charac-

ters have already been built up to quite advanced

stages. However, teams wishing to research compan-

ion robots often have to start from scratch on the soft-

ware design part, because it is hard to distill a firm

framework from the literature to use as a basis. Of

course many figures representing architectures have

been published, but it remains difficult to find out

how existing companion robots really work internally.

This may be due to most publications focusing on test

results of the overall behaviors rather than on explain-

ing their architectures in the level of detail required

for replication.

The lack of emphasis on architectures may be

caused by much of the research on companion robots

being driven by the teams’ research goals, resulting in

their architectures mostly being designed to support

∗

This work supported by SenterNovem, Dutch Compan-

ion project grant nr: IS053013.

just the desired behaviors instead of being generic for

companion robots. If there were a good generic ar-

chitecture for companion robots, a (simple) default

implementation could be made, providing (new) re-

searchers with a framework that they could use as a

starting point. Depending on the application domain

and research goals, some default implementations of

modules constituting the architecture may be replaced

to achieve the desired custom behavior, while other

modules can just be readily used to complete the soft-

ware of the companion robot.

Of course, anyone wishing to build the software

of a companion robot can just start up his/her favorite

programming environment and try to deal with prob-

lems when they occur, but obviously this is not a very

good strategy to follow. Instead, designing and dis-

cussing an architecture beforehand raises interesting

issues and allows questions to be asked that otherwise

remain hidden. Indeed, there are many non-trivial

choices that have to be made, pertaining to e.g. dis-

tribution and assignment of control among processes,

synchronization of concurrent processes, which pro-

cess is to convert what data into what form, where

to store data in what form, which process has ac-

cess to which stored data, which process/data influ-

ences which other process and how, the types of ac-

tion abstractions that can be distinguished (e.g. strate-

gic planning actions, dialogue actions, locomotion ac-

tions), the level of action abstraction used for reason-

ing, who converts abstract actions into control sig-

nals, how are conflicts in control signals resolved,

what are the properties of a behavior emerging from

315

R. Steunebrink B., L. Vergunst N., P. Mol C., P. M. Dignum F., Dastani M. and Ch. Meyer J. (2008).

A GENERIC ARCHITECTURE FOR A COMPANION ROBOT.

In Proceedings of the Fifth International Conference on Informatics in Control, Automation and Robotics, pages 315-321

DOI: 10.5220/0001509103150321

Copyright

c

SciTePress

a chosen wiring of modules, what defines the char-

acter/personality of a companion robot (is it stored

somewhere, can its parameters be tweaked, or does it

emerge from the interactions between the modules?).

Answers to these and many other questions may not

be obvious when presented with a figure representing

an architecture, but these issues can be made explicit

by proposing and discussing one.

In this paper we introduce an architecture which

is generic for companion robots and explain it in as

much detail as possible in this limited space. This

architecture contains the components necessary to

produce reasonably social behavior given the mul-

timodality of a companion robot’s inputs and out-

puts. We do not claim that the proposed architecture

represents the ultimate companion robot architecture.

Rather, the aim of this paper is to provoke a discus-

sion on the issues and choices involved in designing

the software of a companion robot. Ultimately, this

work could lead to the implementation of a generic

framework that could be used as a basis for the soft-

ware of new companion robots.

This paper is outlined as follows. In Section 2,

we gather a list of possible requirements for a com-

panion robot and introduce our architecture satisfying

these requirements. In Section 3, we treat the func-

tional components in the architecture in more detail.

In Section 4, we connect the functional components

by explaining the interfaces between them. We dis-

cuss some related work in Section 5 and finish with

conclusions and plans for future research in Section

6.

2 POSSIBLE REQUIREMENTS

FOR A COMPANION ROBOT

In order to come up with a generic architecture suit-

able for companion robots, we must first investi-

gate the possible requirements for a companion robot.

These requirements are optional, meaning that only

the ‘ultimate’ companion robot would satisfy them

all. In practice however, a companion robot does

not have to. The actual requirements depend on var-

ious factors, such as the application area of the robot

and its hardware configuration. However, below we

compile a list, as exhaustive as possible, of possible

requirements which a generic architecture must take

into account.

First of all, a companion robot should be able to

perceive the world around it, including auditory, vi-

sual, and tactile information. The multimodality of

the input creates the need for synchronization (e.g.,

visual input and simultaneously occurring auditory

input are very likely to be related), and any input

inconsistent over different modalities should be re-

solved. Moreover, input processors can be driven

by expectations from a reasoning system to focus the

robot’s attention to certain signals. Of course, any in-

coming data must be checked for relevancy and cate-

gorized if it is to be stored (e.g., to keep separate mod-

els of the environment, its users, and domain knowl-

edge).

A companion robot should be able to communi-

cate with the user in a reasonably social manner. This

means not only producing sensible utterances, but

also taking into account basic rules of communication

(such as topic consistency). In order to maintain a ro-

bust interaction, a companion robot must always be

able to keep the conversation going (except of course

when the user indicates that he is done with the con-

versation). This also involves real-time aspects; e.g.,

to avoid confusing or boring the user, long silences

should not occur in a conversation.

Additionally, a companion robot is likely to be de-

signed for certain specific tasks, besides communicat-

ing with its users. Depending on e.g. the domain for

which the companion robot is designed and the type

of robot and the types of tasks involved, this may call

for capabilities involving planning, physical actions

such as moving around and manipulating objects, or

electronic actions (e.g., performing a search on the

internet or programming a DVD recorder). Proactive-

ness on part of the robot is often desirable in tasks

involving cooperation.

A companion robot should also exhibit some low-

level reactive behaviors that do not (have to) enter

the reasoning loop, such as blinking and following

the user’s face, and fast reactive behaviors such as

startling when subjected to a sudden loud noise. To

make the interactions more natural and intuitive, a

companion robot should also be able to form and ex-

hibit emotions. These emotions can be caused by

cognitive-level events, such as plans failing (disap-

pointment), goal achievement (joy), and perceived

emotions from the user (if negative: pity). Reac-

tive emotions like startle or disgust can also influ-

ence a robot’s emotional state. Moreover, emotions

can manifest themselves in many different ways; e.g.,

facial expressions, speech prosody, selecting or aban-

doning certain plans, etc.

Finally, a companion robot should of course pro-

duce coherent and sensible output over all available

modalities. Because different processes may produce

output concurrently and because a companion robot

typically has multiple output modalities, there should

be a mechanism to synchronize, prioritize, and/or

merge these output signals; e.g., speech should co-

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

316

incide with appropriate lip movements, which should

overrule the current facial animation, but only the part

that concerns the mouth of the robot (provided it has

a mouth with lips).

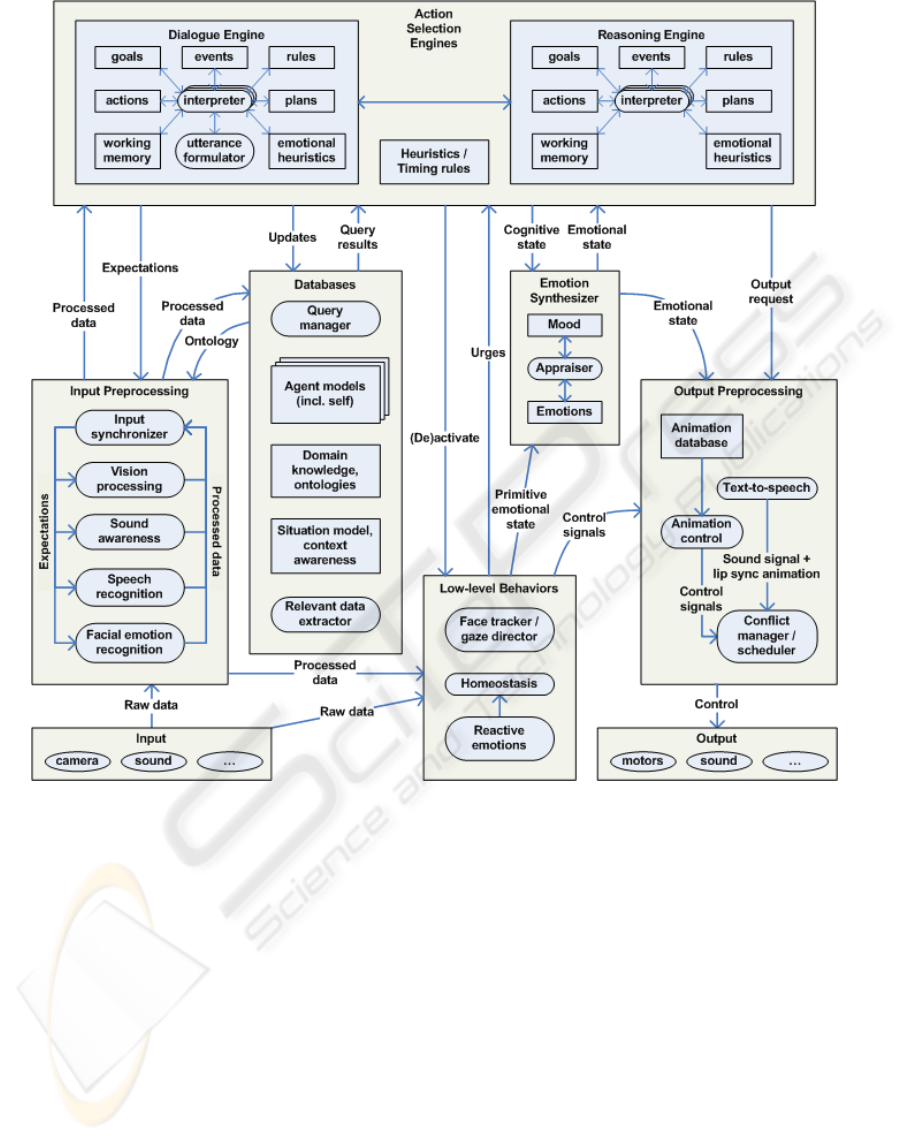

In Figure 1, we present a generic architecture for

companion robots which accounts for the require-

ments described above. Note that we abstract from

specific robot details, making the architecture useful

for different types of companion robots. We empha-

size again that this is an architecture for an ‘ultimate’

companion robot; in practice, some modules can be

left out or implemented empty.

3 FUNCTIONAL COMPONENTS

CONSTITUTING THE

ARCHITECTURE

In this section we describe the ‘blocks’ that constitute

the proposed architecture. The interfaces (‘arrows’)

between the components are explained in Section 4.

To begin with, the architecture is divided into

eight functional components (i.e. the larger boxes

encompassing the smaller blocks). Each functional

component contains several modules that are func-

tionally related. Modules drawn as straight boxes rep-

resent data storages, the rounded boxes represent pro-

cesses, and the ovals represent sensors and actuators.

Each process is allowed to run in a separate thread, or

even on a different, dedicated machine.

No synchronization is forced between these pro-

cesses by the architecture; they can simply send in-

formation to each other (see Section 4), delegating

the task of making links between data coming in from

different sources to the processes themselves. Below,

each of the eight functional components is described,

together with the modules they encompass.

Input Modalities. A companion robot typically has

a rich arsenal of input modalities or sensors. These

are grouped in the lower left corner of Figure 1, but

only partially filled in. Of course, different kinds of

companion robots can have different input modalities,

of which a camera and a microphone are probably the

most widely occurring. Other sensors may include

touch, (infrared) proximity, accelerometer, etc.

Input Preprocessing. It is impractical for a reason-

ing engine to work directly with most raw input data,

especially raw visual and auditory data. Therefore,

several input preprocessing modules must exist in or-

der to extract salient features from these raw inputs

and convert these to a suitable data format. Some in-

put modalities may even require multiple preprocess-

ing modules; for example, one audio processing mod-

ule may extract only speech from an audio signal and

produce text, while another audio processing module

may extract other kinds of sounds to create a level of

‘sound awareness’ for the companion robot.

Note that some of these input preprocessing mod-

ules may be readily available as off-the-shelf software

(most notably, speech recognizers), so a generic archi-

tecture must provide a place for them to be plugged

in.

Furthermore, there may be need for an input syn-

chronizer that can make links between processed data

from different modalities, in order to pass it as a sin-

gle event to another module. The input synchronizer

may initially be implemented empty; that is, it sim-

ply passes all processed data unchanged to connected

modules. The input synchronizer can also be used to

dispatch expectations that are formed by the action

selection engines to the input preprocessing modules,

which can use these expectations to facilitate feature

recognition.

Low-level Behaviors. Low-level behaviors are au-

tonomous processes that compete for control of actu-

ators in an emergent way. Some behaviors may also

influence each other and other modules. Examples

of low-level behaviors include face tracking and gaze

directing, blinking, breathing, and other ‘idle’ anima-

tions, homeostasis such as the need for interaction,

sleep, and ‘hunger’ (low battery power), and reactive

emotions such as startle and disgust.

Action Selection Engines. The ‘heart’ of the ar-

chitecture is formed by the action selection engines.

These are cognitive-level processes that select actions

based on collections of data, goals, plans, events,

rules, and heuristics. The outputs that they produce

can generally not be directly executed by the actua-

tors, but will have to be preprocessed first to appro-

priate control signals. Note that the interpreters of the

action selection engines are depicted as layered to in-

dicate that they can be multi-threaded.

The reasoning engine may be based on the BDI

theory of beliefs, desires, and intentions (Bratman,

2002), deciding which actions to take based on per-

cepts and its internal state. It should be noted that

in terms of the BDI theory, the databases component

plus the working memories of the action selection en-

gines constitute the robot’s beliefs.

An action selected by the reasoning engine may be

sent to an output preprocessing module, but it can also

consist of a request to initiate a dialogue. Because dia-

logues are generally complex and spread over a longer

period of time, a dedicated action selection engine

may be needed to successfully have a conversation.

A GENERIC ARCHITECTURE FOR A COMPANION ROBOT

317

Figure 1: A generic architecture for a companion robot. The architecture takes into account the possible (or rather, probable)

existence of multiple input modalities, multiple input preprocessing modules for each input modality, databases for filtering,

storing, and querying relevant information, action selection engines for complex, goal-directed, long-term processes such as

conversing, planning, and locomotion, an emotion synthesizer producing emotions that influence action selection and ani-

mations, multiple (reactive) low-level behaviors that can compete for output control, multiple output preprocessing modules

including a conflict manager, and finally, multiple output modalities. Straight boxes stand for data storages, rounded boxes

for processes, and ovals for sensors/actuators. The interfaces (arrows) between different modules indicate flow of data or

control; the connections and contents are made more precise in the text. Note that only the ‘ultimate’ companion robot would

fully implement all depicted modules; a typical companion robot implementation will probably leave out some modules or

implement them empty, awaiting future work.

This dialogue engine contains an extra process called

an utterance formulator; the task of this module is to

convert an illocutionary act to fully annotated text, i.e.

the exact text to utter together with information about

speed, emphasis, tone, etc. This text can then be con-

verted to audio output by the text-to-speech module

(in the output preprocessing component).

A similar discussion about separating dialogues

and (strategic) planning can be held for locomotion.

In our research we have worked with stationary com-

panion robots that focus on dialogues and facial ani-

mations. But there can of course be companion robots

with advanced limbs and motions. For such robots

there may be need for a third action selection engine,

dedicated to motion planning. In the proposed archi-

tecture, there is room for additional dedicated engines

in the functional component of action selection en-

gines.

Finally, the architecture provides for a module

called heuristics / timing rules. This is a collection

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

318

of heuristics for balancing control between the differ-

ent action selection engines, as they are assumed to be

autonomous processes. The different engines will get

priorities in different cases; e.g., the plans of the dia-

logue engine will get top priority if a misunderstand-

ing needs to be repaired. On the other hand, if the

dialogue engine does not have any urgent issues, the

reasoning engine will get control over the interaction

in order to address its goals. Furthermore, it can ver-

ify whether the goals of the different action selection

engines adhere to certain norms that apply to the com-

panion robot in question, as well as provide new goals

based on timing rules; e.g., to avoid long silences, the

robot should always say something within a few sec-

onds, even if the reasoning engine is still busy.

Databases. We have created a distinct functional

component in the architecture where data is stored in

different forms. This data includes domain knowl-

edge, ontologies, situation models, and profiles of the

robot itself and of other agents. The ontologies and

domain knowledge are (possibly static) databases that

are used by the input preprocessing modules to find

data representations suitable to the action selection

engines and databases. The agent profiles store infor-

mation about other agents, such as the robot’s interac-

tion histories with these modeled agents, the common

grounds between the robot and each modeled agent,

and the presumed beliefs, goals, plans, and emotions

of each modeled agent. These agent models also in-

clude one of the robot itself, which enables it to reason

about its own emotions, goals, etc.

In order to provide a consistent interface to these

different databases, a query manager must be in place

to handle queries, originating from the action selec-

tion engines. A special situation arises when the robot

queries its own agent model, for there already exist

modules containing the goals, plans, and emotions of

the robot itself. So the query manager should ensure

that queries concerning these types of data can get

their results directly from these modules.

Finally, a relevant data extractor takes care of

interpreting incoming data in order to determine

whether it can be stored in a more suitable format;

e.g., if visual and auditory data from the input pre-

processing component provides new (updated) infor-

mation about the environment of the robot, it is in-

terpreted by the relevant data extractor and stored

in the situation model. Moreover, simple spatial-

temporal reasoning may be performed by the relevant

data extractor. If advanced spatial-temporal reasoning

is needed for some companion robot, it may be better

to delegate this task to a separate input preprocessing

module.

Emotion Synthesizer. Typically, companion robots

must show some level of affective behavior. This

means responding appropriately to emotions of a (hu-

man) user, but also includes experiencing emotions it-

self in response to the current situation and its internal

state. The emotions that concern this functional com-

ponent are those of the companion robot itself and are

at a cognitive level, i.e., at the level of the action se-

lection engines. Examples of emotions are joy when

a goal is achieved, disappointment when a plan fails,

resentment when another agent (e.g. a human user)

gains something at the expense of the robot, etc. More

reactive emotions (e.g., startle) can be handled by a

low-level behavior.

The emotion component consists of three parts.

The appraiser is a process that triggers the creation

of emotions based on the state of the action selec-

tion engines. The intensity of triggered emotions is

influenced by the robot’s mood (the representation

of which may be as simple as a single number) and

a database of previously triggered emotions. This

database of emotions then influences the action se-

lection engines (by way of their emotional heuris-

tics module) and the animations of the robot, e.g., by

showing a happy or sad face.

Output Preprocessing. Different processes may try

to control the robot’s actuators at the same time; obvi-

ously, this calls for conflict management and schedul-

ing of control signals. Moreover, some modules may

produce actions that cannot be directly executed, but

instead these abstract actions need some preprocess-

ing to convert them to the low-level control signals

expected by the robot’s actuators. E.g., the dialogue

engine may want some sentence to be uttered by the

robot, but this must first be converted from text to a

sound signal before it can be sent to the loudspeaker.

This functionality is provided by the text-to-speech

module, which is also assumed to produce corre-

sponding lip sync animations.

For companion robots with a relatively simple mo-

tor system, it suffices to have a single module for

animation control which converts abstract animation

commands to low-level control signals. This can be

done with the help of an animation database contain-

ing sequences of animations that can be invoked by

name and then readily played out. For companion

robots with a complex motor system, the animation

control module may be replaced by a motion engine

(which is placed among the other action selection en-

gines), as discussed above. In this case, an anima-

tion database may still fulfill an important role as a

storage of small, commonly used sequences of motor

commands.

Finally, actuator control requests may occur con-

A GENERIC ARCHITECTURE FOR A COMPANION ROBOT

319

currently and be in conflict with each other. It is

the task of the conflict manager to provide the actua-

tors with consistent control signals. This can be done

by choosing between conflicting requests, scheduling

concurrent requests, or merging them. These choices

are made on a domain-dependent basis.

Output Modalities. All output modalities or actua-

tors are grouped in the lower right corner of Figure 1.

Similarly with the input modalities, these will be dif-

ferent for different kinds of companion robots, but a

typical companion robot will probably have at least

some motors (for e.g. facial expressions and locomo-

tion) and a loudspeaker. Other actuators may include

lights, radio, control of other electronic devices, etc.

4 INTERFACES BETWEEN

FUNCTIONAL COMPONENTS

In this section, we explain the meaning of the inter-

faces between the functional components. For cos-

metic reasons, the ‘arrows’ in Figure 1 appear to lead

from one functional component to another, while they

actually connect one or more specific modules inside

a functional component to other modules inside an-

other functional component. References to arrows in

Figure 1 are marked in boldface.

Raw Data that is obtained by the input sensors

is sent to the input preprocessing component for pro-

cessing. Needless to say, data from each sensor is sent

to the appropriate processing module; e.g., input from

the camera is sent to the vision processing and facial

emotion recognition modules, while input from the

microphone is sent to the sound awareness and speech

recognition modules. Any module inside the low-

level behaviors component is also allowed to access

all raw input data if it wants to perform its own feature

extraction. In addition to raw data, low-level behav-

iors also have access to the Processed data from the

modules inside the input preprocessing component.

After the processed data is synchronized (or not) by

the input synchronizer, it is sent to the action selec-

tion engines, where it is placed in the events mod-

ules inside the engines. The processed data is also

sent to the databases, where the relevant data extractor

will process and dispatch relevant data to each of the

databases; e.g., context-relevant features are added to

the situation model, while emotions, intentions and

attention of a user that are recognized by the various

input preprocessing modules are put in the appropri-

ate agent model. Furthermore, the action selection

engines can form Expectations about future events.

These expectations are sent from the action selection

engines back to the input synchronizer, which subse-

quently splits up the expectations and sends them to

the appropriate input processing modules. They can

then use these expectations to facilitate processing of

input.

All processing modules in the input preprocess-

ing component have access to Ontology information,

which they might need to process raw data properly;

e.g., the vision processing module might need onto-

logical information about a perceived object in order

to classify it as a particular item. This also ensures

the use of consistent data formats. The processing

modules can obtain this ontological information via

the query manager in the databases component, which

takes care of all queries to the databases. Updates to

the databases can be performed by the action selec-

tion engines. The updates are processed by the rel-

evant data extractor, which places the data in a suit-

able format in the appropriate database, in the same

way as the processed data from the input preprocess-

ing component. Query results can be requested by

the action selection engines from the databases. The

query manager processes the query and searches the

proper database(s), guaranteeing a coherent interface

to all databases.

(De)activate signals can be sent from the action

selection engines to the low-level behaviors compo-

nent. These signals allow the action selection engines

some cognitive control over the robot’s reactive be-

havior; e.g., if needed, the face tracker can be acti-

vated or deactivated, or in some special cases the re-

active emotions can be turned off. Urges arising from

the low-level behaviors can be made into goals for the

action selection engines. For example, if the home-

ostasis module detects a low energy level, a goal to

go to the nearest electricity socket can be added to the

goals of the motion engine.

The action selection engines provide their Cog-

nitive state to the emotion synthesizer. The cog-

nitive state can be used by the appraiser to synthe-

size appropriate emotions. In addition to the cogni-

tive state, a Primitive emotional state is also sent to

the appraiser, where it can influence the intensity of

cognitive-level emotions and the robot’s mood. The

current Emotional state, which is a compilation of

the collection of triggered emotions, is sent to the ac-

tion selection engines. The emotional heuristics in-

side the action selection engines then determine how

the interpreter is influenced by these emotions. The

animation control module inside the output prepro-

cessing component also receives the emotional state

of the agent, so that it can select a suitable facial ex-

pression from the animation database representing the

current emotional state.

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

320

Output Requests are sent from the interpreters

inside action selection engines to the output prepro-

cessing component. Of course, the different kinds of

output requests are sent to different modules inside

the output preprocessing; e.g., (annotated) utterances

from the dialogue engine’s utterance formulator are

sent to the text-to-speech module, while any actions

from the action selection engines that involve motors

are sent to the animation control module. The syn-

chronization of all output signals is taken care of by

the conflict manager, as explained in the previous sec-

tion. Finally, Control signals are gathered and syn-

chronized by the conflict manager inside the output

preprocessing component and sent to the appropriate

output modality.

5 DISCUSSION AND RELATED

WORK

We do not claim that the presented architecture is

perfect, and although we claim that it is generic for

companion robots, it is probably not unique. Another

team setting out to make a generic companion robot

architecture will probably come up with a different

figure. However, we expect the level of complexity

of alternative architectures to resemble that of the one

presented here, as it takes many components and pro-

cesses to achieve reasonably social behavior. It should

be noted that the intelligence of the system may not

lie within the modules, but rather in the wiring (the

“arrows”). The presentation of an architecture should

therefore include a discussion on the particular choice

of interfaces between modules. We draw confidence

in our architecture from the fact that mappings can be

found between this one and the architectures of exist-

ing companion robots and affective virtual characters,

several of which we discuss next.

Breazeal (Breazeal, 2002) uses competing behav-

iors for the robot Kismet in order to achieve an emerg-

ing overall behavior that is sociable as a small child.

Kismet has a number of different response types with

activation levels that change according to Kismet’s in-

teraction with a user. In Kismet’s architecture, the Be-

havior System and Motivation System can be mapped

on our low-level behaviors; however, it lacks cogni-

tive reasoning (obviously this was not necessary for

its application), which is provided by our action se-

lection engines. Other components in Kismet’s archi-

tecture pertain to input and output processing, which

map to corresponding preprocessing modules in our

architecture.

Max, the “Multimodal Assembly eXpert” devel-

oped at the University of Bielefeld (Kopp, 2003), can

also be mapped to our architecture. For example, it

uses a reasoning engine that provides feedback to the

input module to focus attention to certain input sig-

nals, which is similar to our expectations. It also has

a lower-level Reactive Behavior layer that produces

direct output without having to enter the reasoning

process, and a Mediator that performs the same task

as our conflict manager (i.e. synchronizing output).

However, Max only has one (BDI) reasoning engine,

where we have provided for two or more action selec-

tion engines.

6 CONCLUSIONS AND FUTURE

RESEARCH

In this paper, we have presented a generic architecture

for a companion robot. We do not claim that it should

be the foundation of the ‘ultimate’ companion robot;

rather, we have presented this architecture in order to

make many of the issues encountered when program-

ming a companion robot explicit, so that these issues

can be appropriately discussed.

The implementation of our companion robot ar-

chitecture is yet to be finished, although it should be

noted that it does not have to be fully implemented in

all cases. Some of the functional components can be

left out, simplified, or even extended (depending on

the application) or programmed empty (awaiting fu-

ture work), while for some modules, off-the-shelf or

built-in software can be used. We are using the Philips

iCat (Van Breemen, 2005) as a platform for develop-

ing a proof of concept of the proposed architecture.

Ultimately, it can be used as a framework on top of

which the software of new companion robots can be

developed.

REFERENCES

Bratman, M., Intention, Plans, and Practical Reasoning,

Harvard U. Press, 1987.

Breazeal, C.L., Designing Sociable Robots, MIT Press,

2002.

Van Breemen, A.J.N. iCat: Experimenting with Ani-

mabotics, AISB 2005 Creative Robotics Symposium,

England, 2005.

Kopp, S., Jung, B., Lessmann, N., Wachsmuth, I. Max – A

Multimodal Assistant in Virtual Reality Construction.

In KI-K

¨

unstliche Intelligenz 4/03, p. 11-17, 2003.

A GENERIC ARCHITECTURE FOR A COMPANION ROBOT

321