INTEGRATED GLOBAL AND OBJECT-BASED IMAGE RETRIEVAL USING A MULTIPLE EXAMPLE QUERY SCHEME

Gustavo B. Borba, Humberto R. Gamba

CPGEI, Universidade Tecnológica Federal do Paraná, Av. Sete de Setembro 3165, Curitiba-PR, Brazil

Liam M. Mayron, Oge Marques

Department of Computer Science and Engineering, Florida Atlantic University, Boca Raton, FL - 33431, USA

Keywords:

Content-based image retrieval, object-based image retrieval, multiple example query, ROI extraction.

Abstract:

Conventional content-based image retrieval (CBIR) systems typically do not consider the limitations of the feature extraction-distance measurement paradigm when capturing a user’s query. This issue is compounded by the complicated interfaces featured by many CBIR systems. The framework proposed in this work embodies new concepts that help mitigate such limitations. The front-end includes an intuitive user interface that allows for fast image organization through spatial placement and scaling. Additionally, a multiple-image query is combined with a region-of-interest extraction algorithm to automatically trigger global or object-based image analysis. The relative scale of the example images is considered to be indicative of image relevance and is also taken into account during the retrieval process. Experimental results are promising.

1 INTRODUCTION

Among the different types of queries used in content-based image retrieval (CBIR) systems, the most widely adopted is query by example (QBE). In this approach, the user presents a query image (also known as an example image) to the system and expects similar or relevant images as the result. The framework of a general QBE system can be summarized in the following steps:

1. One or more features, such as color, texture, or spatial structure, are extracted from images in the image database and from the query image. These low-level characteristics are stored in a feature vector (FV).

2. A distance function compares the query image FV to all FVs in the database; this distance is the ultimate measure of image similarity.

3. The images in the database are sorted according to their calculated distances, from low (most similar) to high (least similar).

4. Finally, the first t most similar images are presented to the user. This is called the retrieved set. Usually t is returned by a cut function, but a constant can also be used.
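The four steps above can be sketched in a few lines of Python. This is only a minimal illustration, not the system described in this paper: the feature (a gray-level histogram), the Euclidean distance, and the names `extract_fv` and `query_by_example` are assumptions chosen to make the pipeline concrete.

```python
import numpy as np


def extract_fv(image, bins=8):
    """Step 1: toy feature vector, a normalized gray-level histogram.

    A stand-in for the color, texture or structure features of a real system.
    """
    hist, _ = np.histogram(image, bins=bins, range=(0, 256))
    return hist / hist.sum()


def query_by_example(query_image, database_images, t=3):
    """Return the indices of the t database images closest to the query."""
    query_fv = extract_fv(query_image)
    db_fvs = np.array([extract_fv(im) for im in database_images])
    # Step 2: one distance per database image (Euclidean, an assumption).
    distances = np.linalg.norm(db_fvs - query_fv, axis=1)
    # Step 3: sort from most similar (low distance) to least similar.
    ranking = np.argsort(distances, kind="stable")
    # Step 4: keep the first t images (constant t instead of a cut function).
    return ranking[:t].tolist()


if __name__ == "__main__":
    database = [np.full((4, 4), 10), np.full((4, 4), 200), np.full((4, 4), 20)]
    query = np.full((4, 4), 15)
    print(query_by_example(query, database, t=2))
```

Here t is a constant; a cut function would instead derive t from the sorted distances.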

QBE is efficient because it is a compact, fast and generally natural way of specifying a query. While keyword- or text-based queries can be effective in very narrow domains, QBE is useful in broad databases where verbal specifications of the query are imprecise or impractical (Castelli and Bergman, 2002).

However, the QBE framework is not always accurate in capturing the user’s true intentions for providing a particular query image. The main reasons for this are the limitations of the feature extraction and distance measurement steps in the previous list. The user’s intended query information is not always captured by the extracted features of the images and, hence, FV distances are not guaranteed to be correct. Similarity judgement based on a single extracted quantity (distance) is a gross and ineffective reduction of the human user’s desires.

Another point of weakness in QBE is the inherent difficulty in translating visual information into the semantic concepts that are understood by the human user. Indeed, this is one of the central challenges of the visual information retrieval field, commonly referred to as the semantic gap (Smeulders et al., 2000). Several approaches have been proposed to overcome the semantic gap, including the use of image descriptors that best approximate the way in which humans perceive visual information (Manjunath et al., 2001), (Renninger and Malik, 2004), or the inclusion of a user’s willingness as a dynamic part of the system (Rui et al., 1998), (Santini and Jain, 2000).

B. Borba G., R. Gamba H., M. Mayron L. and Marques O. (2007). INTEGRATED GLOBAL AND OBJECT-BASED IMAGE RETRIEVAL USING A MULTIPLE EXAMPLE QUERY SCHEME. In Proceedings of the Second International Conference on Computer Vision Theory and Applications - IU/MTSV, pages 143-148. Copyright © SciTePress

Another approach to the problem considers that, in many situations, the user is focused on an object, or region-of-interest (ROI), within the image. Such systems are named region- or object-based image retrieval systems, and they perform a search based on local instead of global image features (Carson et al., 2002), (García-Pérez et al., 2006). Moreover, it is possible to use more than a single example image when performing a QBE. This technique is called multiple example query (Assfalg et al., 2000), (Tang, 2003).

In this work, a new method for combining a multiple example query with both global- and region-based scenarios is presented. An attention-driven ROI extraction algorithm (Marques et al., 2007) and a new interface, the Perceptually-Relevant Image Search Machine (PRISM), are used. The novelty behind the PRISM interface is that it lets the user, in a simple way, select images from a database and scale them according to interest and perceived relevance.

The remainder of this paper presents an overview of the system (Section 2), experimental results (Section 3), and, finally, conclusions (Section 4).

2 ARCHITECTURE

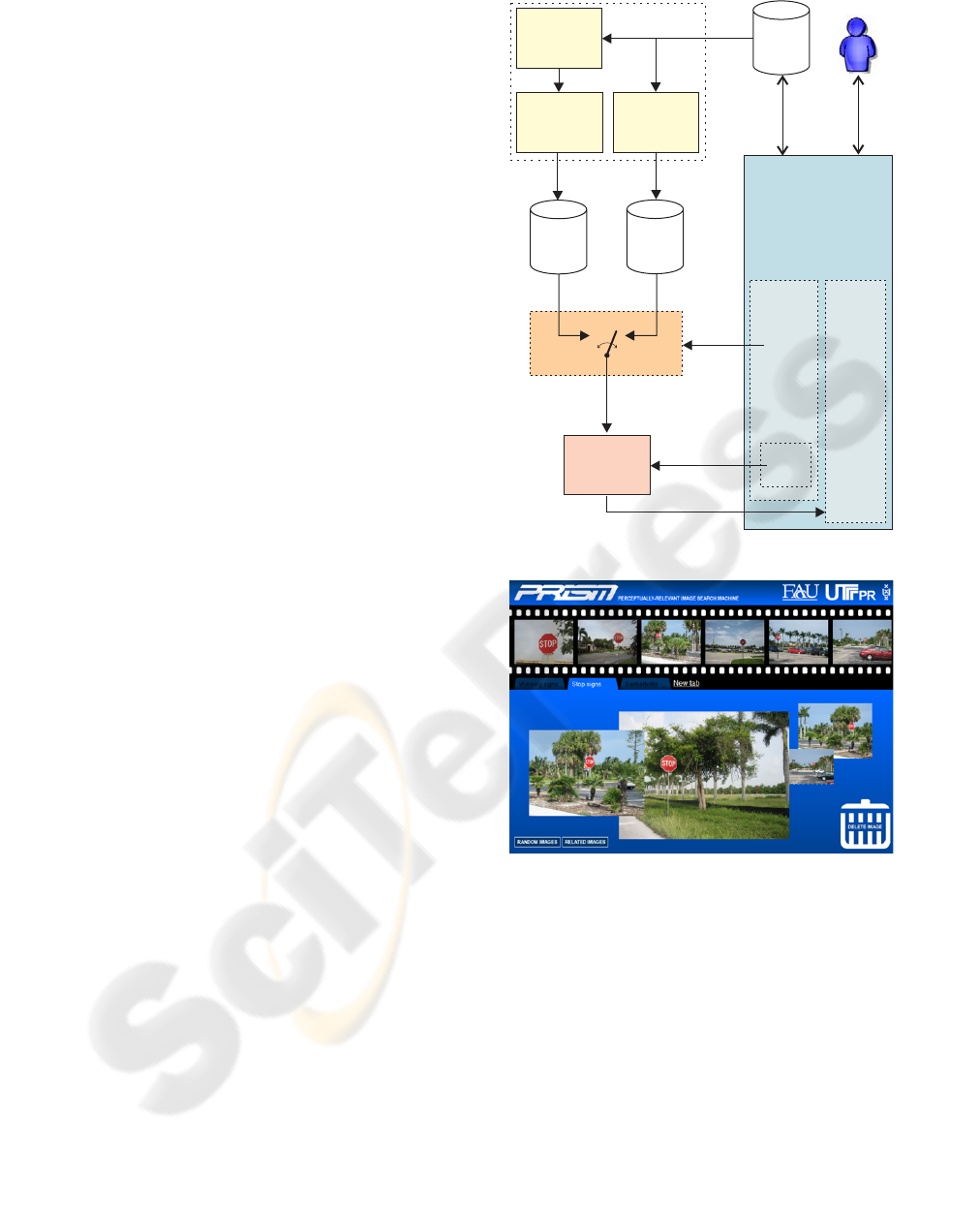

A block diagram of our proposed QBE framework is

given in Figure 1.

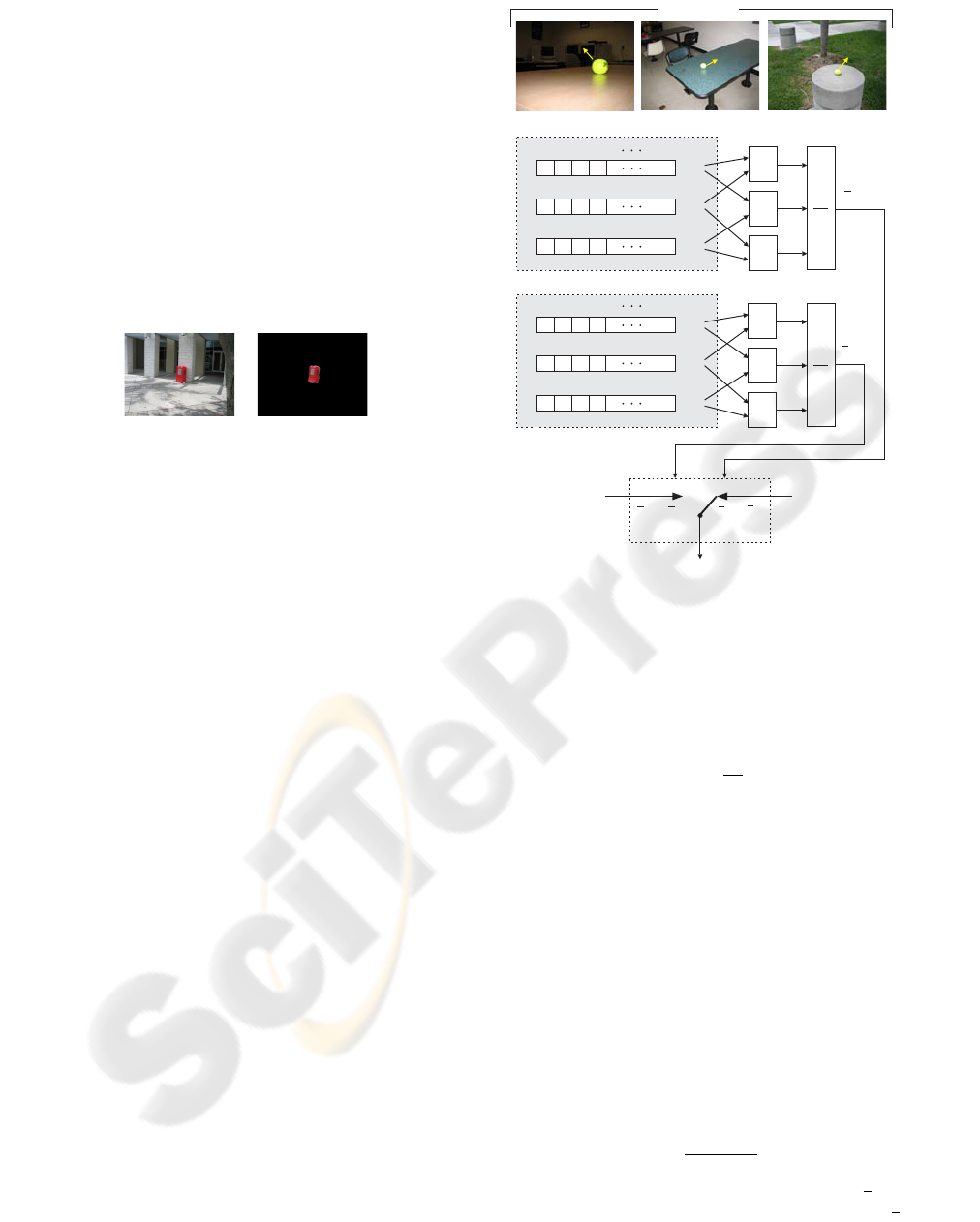

2.1 Interface Level: PRISM

PRISM is a general environment that allows the capture of a user’s relative interest in particular images. PRISM also enables the incorporation of a variety of image retrieval methods, including content-based image retrieval, content-free image retrieval, and semantic annotation (Mayron et al., 2006). The initial view of the PRISM desktop is shown in Figure 2. The top part of the interface is the “film strip”, the only source of new images. The user drags images from the film strip into the main content area. An image may be deleted from the film strip by dragging it to the trash can icon in the lower-right corner. When an image is removed from the film strip the vacant space is immediately replenished, ensuring that the film strip is always full. The lower section of the PRISM interface is the tabbed workspace. In PRISM, tabs are used to organize individual groups of images, expanding the work area while avoiding overwhelming the user with too many visible images at one time.

[Figure 1 block diagram. Blocks: User, PRISM, Query Images, Scale Factor, Preprocessing, Raw Images, ROI Extraction, Global Feature Extraction, ROI Feature Extraction, Global FV, ROI FV, Global/ROI selection, Search and Retrieval, Retrieved Images.]

Figure 1: A general view of the system architecture.

Figure 2: The PRISM interface.

The proposed CBIR system takes advantage of PRISM’s ability to capture subjective user queries expressed by grouping and scaling images. Two or more example images are dragged from the film strip to the workspace. The selected images are then scaled by the user according to their relevance; that is, larger images indicate greater relevance. From this point onwards a QBE is performed, taking into account user interest based on ROI (local) or global characteristics of the images as well as image scale. The system captures the user’s query concept by deciding automatically between a global- and an ROI-based search and by weighting the results with the image scale factors.

VISAPP 2007 - International Conference on Computer Vision Theory and Applications


2.2 Preprocessing

In the offline preprocessing stage, images are segmented by an attention-driven ROI extraction algorithm. This algorithm performs a set of morphological operations over the computed Itti-Koch (Itti et al., 1998) and Stentiford (Stentiford, 2001) models of visual attention. The result is an ROI (object) segmentation of good quality; see Figure 3 for an example. Since we are currently working with a database that contains salient-by-design objects, segmented salient regions should always correspond to the semantic objects in the images. Occasionally, however, the ROI extraction output was refined by removing false positives.

Figure 3: Input vs. output example for the ROI extraction algorithm.

Another interesting point of this algorithm is that it does not make use of any a priori object information, such as shape or color, and runs in a fully unsupervised way (a complete description can be found in (Marques et al., 2007)). After ROI extraction, the global and ROI FVs are computed by the feature extraction modules (Figure 1). Both use the same descriptor: a 256-cell quantized HMMD (MPEG-7-compatible) color histogram (Manjunath et al., 2001). The computed FVs are stored in the global and ROI FV databases.
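As a rough illustration of how one descriptor can serve both the global and the ROI feature extraction modules, the sketch below computes a 256-cell color histogram over the whole image or over a binary ROI mask. It is written under explicit assumptions: the hue, diff and sum components follow the usual HMMD definitions, but the uniform 16 x 4 x 4 quantization is a simplification and is not the MPEG-7 quantization; the function names and the mask argument are hypothetical.

```python
import numpy as np


def hmmd_components(rgb):
    """Per-pixel hue in [0, 360), diff = max - min, sum = (max + min) / 2."""
    rgb = rgb.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    mx = rgb.max(axis=-1)
    mn = rgb.min(axis=-1)
    diff = mx - mn
    total = (mx + mn) / 2.0
    safe = np.where(diff > 0, diff, 1.0)  # avoid division by zero on grays
    hue = np.zeros_like(mx)               # gray pixels keep hue 0
    is_r = (mx == r) & (diff > 0)
    is_g = (mx == g) & (diff > 0) & ~is_r
    is_b = (diff > 0) & ~is_r & ~is_g
    hue[is_r] = (60.0 * (g - b) / safe)[is_r] % 360.0
    hue[is_g] = (60.0 * (b - r) / safe + 120.0)[is_g]
    hue[is_b] = (60.0 * (r - g) / safe + 240.0)[is_b]
    return hue, diff, total


def color_histogram(rgb, mask=None):
    """256-cell normalized histogram; mask=None gives the global FV,
    a boolean ROI mask gives the ROI FV.

    Uniform 16 (hue) x 4 (diff) x 4 (sum) cells: an assumed simplification,
    not the MPEG-7 HMMD quantization.
    """
    hue, diff, total = hmmd_components(rgb)
    h_bin = np.minimum((hue / 360.0 * 16).astype(int), 15)
    d_bin = np.minimum((diff / 256.0 * 4).astype(int), 3)
    s_bin = np.minimum((total / 256.0 * 4).astype(int), 3)
    index = h_bin * 16 + d_bin * 4 + s_bin
    if mask is not None:
        index = index[mask]
    hist = np.bincount(index.ravel(), minlength=256).astype(np.float64)
    n = hist.sum()
    return hist / n if n > 0 else hist


if __name__ == "__main__":
    image = np.zeros((4, 4, 3), dtype=np.uint8)
    image[..., 0] = 255                    # red background
    image[1:3, 1:3] = (0, 0, 255)          # blue object
    roi = np.zeros((4, 4), dtype=bool)
    roi[1:3, 1:3] = True
    print(np.nonzero(color_histogram(image))[0])       # cells used globally
    print(np.nonzero(color_histogram(image, roi))[0])  # cells used by the ROI
```

Using the same function for both FVs keeps the global and ROI histograms directly comparable, which the later selection step relies on.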

2.3 Global/ROI Selection

If more than one query image is present in the PRISM workspace, a decision process takes place. The aim of this global/ROI selection is to choose whether global or ROI information is passed to the search and retrieval module. This block compares the query images’ FVs and triggers a global- or ROI-based search accordingly. Figure 4 depicts its operation.

In the case of the input example images at the top of Figure 4, the user’s ROI-based search intention is clear, since the features of the tennis balls (the ROIs) are more similar to one another than the global features are. A simple approach based on the average coefficient of determination (squared correlation, $r^2$) is used for measuring the degree of similarity of the FVs. The $r^2$ ranges from 0 to 1 and represents the strength of the linear relationship between two vectors.

In Figure 4, given $p > 1$ query images, two independent groups of FVs of length $k = 256$ are considered: one from the ROIs, $D_{Li}(n)$, and the other from the global images, $D_{Gi}(n)$, where $i$ is the query image, with $i \in \{1, \dots, p\}$ and $n \in \{1, \dots, k\}$.

[Figure 4 diagram: the ROI feature vectors $D_{L1}, D_{L2}, \dots, D_{Lp}$ and the global feature vectors $D_{G1}, D_{G2}, \dots, D_{Gp}$ of the query images are compared pairwise through $r^2$ within each group; the averages $\bar{r}_L^2$ and $\bar{r}_G^2$ are then compared, giving an ROI-based search when $\bar{r}_L^2 > \bar{r}_G^2$ and a global search when $\bar{r}_L^2 < \bar{r}_G^2$.]

Figure 4: Functional diagram for Global/ROI selection and example for 3 input images ($p = 3$). For these query images, an ROI-based search will be performed. G: Global, L: Local.

The coefficient of determination, $r_s^2(c)$, within each group, for all pairs of FVs, is given by equation (1).

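$$r_s^2(c) = \frac{a}{e\,f} \qquad (1)$$

where

$$a = \left[ k \sum_{n=1}^{k} D_{sx}(n)\, D_{sy}(n) - \sum_{n=1}^{k} D_{sx}(n) \sum_{n=1}^{k} D_{sy}(n) \right]^2 \qquad (2)$$

$$e = k \sum_{n=1}^{k} D_{sx}(n)^2 - \left[ \sum_{n=1}^{k} D_{sx}(n) \right]^2 \qquad (3)$$

$$f = k \sum_{n=1}^{k} D_{sy}(n)^2 - \left[ \sum_{n=1}^{k} D_{sy}(n) \right]^2 \qquad (4)$$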

Here $s$ denotes the group, with $s \in \{L, G\}$, and $c$ indexes the combinations of the $p$ feature vectors taken 2 at a time ($x$ and $y$), with $c \in \{1, \dots, C_p^2\}$ and

$$C_p^2 = \frac{p!}{2\,(p-2)!}. \qquad (5)$$

The average coefficients of determination, $\bar{r}_s^2$, of the two groups are then compared. A group with a higher $\bar{r}_s^2$ has more similar FVs and, hence, more similar raw images.
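The selection rule can be sketched as follows. The code evaluates the squared correlation of equations (1) to (4) for every pair of FVs in a group, averages over the pairs, and compares the two averages. The function names, the handling of constant vectors, and the choice of a global search on an exact tie are assumptions made for this illustration, not details given in the text.

```python
from itertools import combinations

import numpy as np


def r_squared(x, y):
    """Squared correlation between two feature vectors, as in eqs. (1)-(4)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    k = len(x)
    a = (k * np.dot(x, y) - x.sum() * y.sum()) ** 2
    e = k * np.dot(x, x) - x.sum() ** 2
    f = k * np.dot(y, y) - y.sum() ** 2
    if e == 0 or f == 0:
        return 0.0  # constant vector: correlation undefined (assumed 0)
    return a / (e * f)


def mean_r_squared(fvs):
    """Average r^2 over all pairs of the p feature vectors (p > 1)."""
    pairs = list(combinations(fvs, 2))
    return sum(r_squared(x, y) for x, y in pairs) / len(pairs)


def select_search_type(roi_fvs, global_fvs):
    """Return 'roi' when the ROI FVs agree more than the global FVs."""
    if mean_r_squared(roi_fvs) > mean_r_squared(global_fvs):
        return "roi"
    return "global"  # ties fall back to a global search (assumption)


if __name__ == "__main__":
    v = np.array([1.0, 2.0, 3.0, 4.0])
    roi_fvs = [v, 2 * v, v + 1]            # linearly related: r^2 = 1
    global_fvs = [v,
                  np.array([4.0, 1.0, 3.0, 2.0]),
                  np.array([2.0, 4.0, 1.0, 3.0])]
    print(select_search_type(roi_fvs, global_fvs))
```

For p query images the loop visits p(p-1)/2 pairs per group, which matches the count in equation (5).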

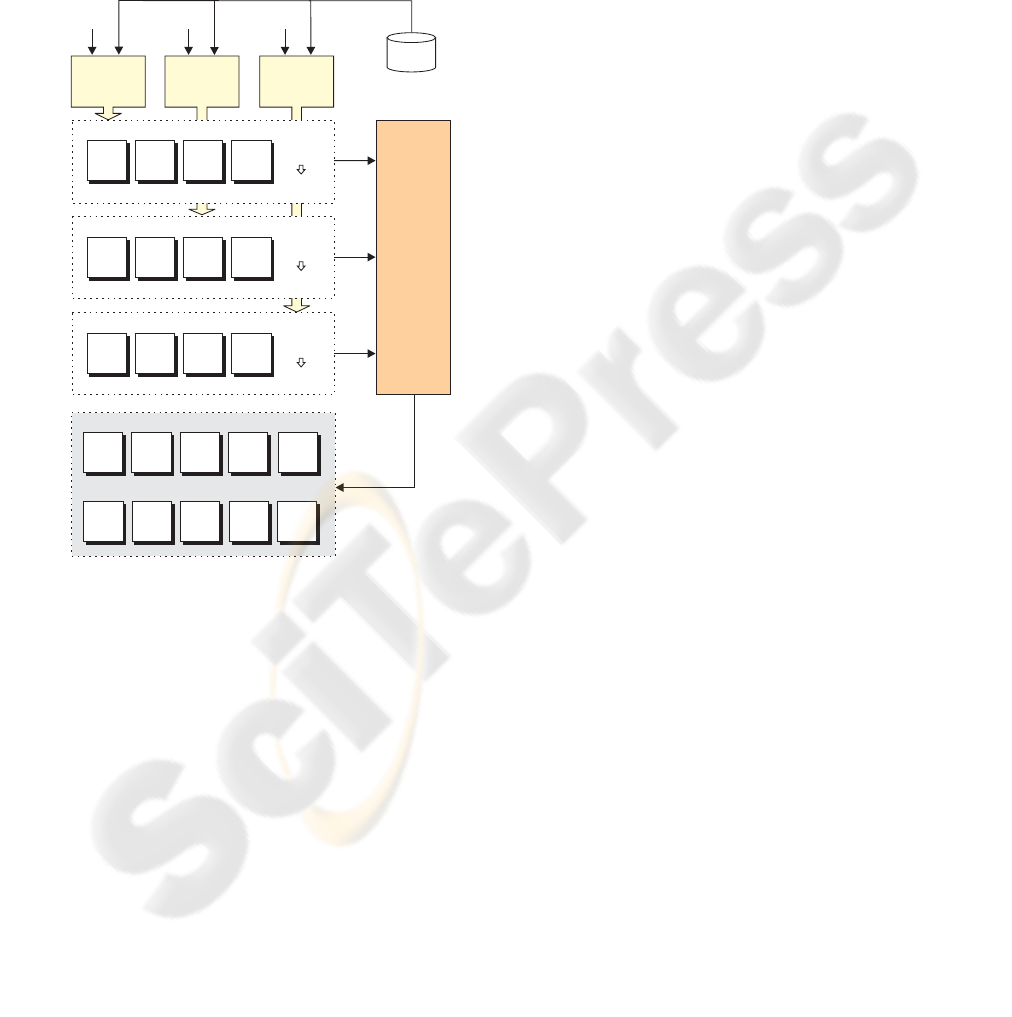

2.4 Search and Retrieval

Once the search type is set, the search and retrieval stage (Figure 1) can finally be performed. Figure 5 illustrates the operations for $p > 1$ general query images, $Q_i$.

[Figure 5 diagram: three queries $Q_1$, $Q_2$ and $Q_3$ with scale factors of 200%, 50% and 100% ($W_1 = 2.0$, $W_2 = 0.5$, $W_3 = 1.0$); each individual retrieval computes distances, ranks the images and returns $t = 4$ images with ranks $R_{ih} = 4, 3, 2, 1$; the relevance score block merges them into a final retrieval of 10 images with scores $S_1, \dots, S_{10} = 8, 7, 6, 4, 2.5, 2, 2, 2, 1, 0.5$.]

Figure 5: Functional diagram of the search and retrieval module of the system. Example for 3 queries ($p = 3$), 4 images retrieved per query ($t = 4$) and arbitrary scale factors of 200, 50 and 100%. Note that the image γ appears in individual retrievals 1 and 3, so its relevance scores are summed. A similar operation is done for image δ, which appears in retrievals 2 and 3. Images with the same $S_j$ are ordered according to their $W_i$, as happens with images ω, ε and ϕ.

In the first step, individual retrievals of a fixed number of $t$ images are made for each query. The distance between the FV of $Q_i$, $D_i(n)$, and the FV of each database image, $D_b(n)$, is computed using the L1 measure:

$$L1_i(b) = \sum_{n=1}^{k} \left| D_i(n) - D_b(n) \right|, \qquad (6)$$

where $i$ is the query image and $b$ the database image. The $t$ most relevant images are ordered from the most similar (smallest distance) to the least similar, and each receives a rank $R_{ih}$ according to

$$R_{ih} = t - h + 1, \qquad (7)$$

where $h$ is the position of the retrieved image in this ordering, with $h \in \{1, \dots, t\}$.

The relevance score block in Figure 5 merges these individual retrievals into a final retrieval. The system takes into account the user’s subjective degree of relevance, represented by the scales of the query images captured by PRISM. This is achieved by using the scale factor (perceptual resize) of $Q_i$ as a weight $W_i$, which multiplies each rank $R_{ih}$. The result of this weighting operation is a relevance score $S_j$, given by:

$$S_j = W_i R_{ih}, \qquad (8)$$

where $j$ is the image in the final retrieval, with $j \in \{1, \dots, u\}$, and $u$ is the number of different images among all individual retrievals. If the same image appears in different retrievals, its $S_j$ values are summed, so as to increase its relevance and ensure a single occurrence of this image in the final retrieval.

In the case of images with the same $S_j$, their relevance is treated as follows: a) if they come from individual retrievals with different $W_i$, the one with the greater $W_i$ is considered more relevant; b) if they come from individual retrievals with the same $W_i$, the most relevant is the one which was queried first (its corresponding query image was placed first in the workspace).
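The retrieval and merging steps can be sketched as below: L1 distances as in equation (6), ranks as in equation (7), weighted scores as in equation (8), summation for repeated images, and the two tie-breaking rules. The function names are hypothetical. For an image that appears in several retrievals the text does not say which $W_i$ applies when breaking ties, so the sketch assumes the largest one. The image labels in the usage example are one reading of the three-query example of Figure 5 and should be taken as illustrative.

```python
import numpy as np


def individual_retrieval(query_fv, db_fvs, t):
    """Indices of the t database FVs closest to query_fv under L1, best first."""
    distances = np.abs(np.asarray(db_fvs) - np.asarray(query_fv)).sum(axis=1)
    return np.argsort(distances, kind="stable")[:t].tolist()


def merge_retrievals(retrievals, weights):
    """Merge ranked lists into one final retrieval.

    retrievals[i] is the ranked list (best first) for query i, in the order
    the query images were placed in the workspace; weights[i] is W_i.
    Returns (image, score) pairs, most relevant first.
    """
    scores = {}    # image -> summed relevance score S_j
    tie_key = {}   # image -> (W_i, -i) used only to break score ties
    for i, (ranked, w) in enumerate(zip(retrievals, weights)):
        t = len(ranked)
        for h, image in enumerate(ranked, start=1):
            rank = t - h + 1                       # eq. (7)
            scores[image] = scores.get(image, 0.0) + w * rank  # eq. (8), summed
            key = (w, -i)
            # Assumption: a repeated image keeps its largest W_i for ties.
            if image not in tie_key or key > tie_key[image]:
                tie_key[image] = key
    order = sorted(scores, key=lambda im: (scores[im], tie_key[im]), reverse=True)
    return [(im, scores[im]) for im in order]


if __name__ == "__main__":
    retrievals = [
        ["tau", "rho", "gamma", "omega"],      # Q1, scale 200%
        ["phi", "delta", "psi", "alpha"],      # Q2, scale 50%
        ["mu", "gamma", "epsilon", "delta"],   # Q3, scale 100%
    ]
    for image, score in merge_retrievals(retrievals, [2.0, 0.5, 1.0]):
        print(image, score)
```

With these inputs gamma collects 2.0 x 2 from the first retrieval and 1.0 x 3 from the third, and the three images that score 2 are ordered by decreasing weight.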

Note that the use of a single example image does

not make sense here since it is not possible to decide

whether local or global features are to be inspected.

3 EXPERIMENTAL RESULTS

In this section, experimental results of the proposed system are shown. The examples in Figure 6 cover different query scenarios, providing a good view of the system performance. The number of retrieved images per individual query is $t = 5$ for all experiments.

3.1 Database

The raw image database consists of 315 images with one salient object per image. In the database, there are five different semantic ROI categories: mini basketball, blue plate, yellow sign, tennis ball and red ground objects. The use of a salient-by-design object database is important for a meaningful analysis of the system operation and results.

3.2 Discussion

In Figure 6, Query a, two query images of outdoor red

objects over different backgrounds were specified by


parameter of the query, the large scale factor on $Q_1$, against the reductions on $Q_2$ and $Q_3$, denotes that the tennis balls with a stamped black brand are of special interest. A close look at the first four images of the retrieved set shows that this query specification was met. Finally, note that the system would be expected to return 15 images in the final retrieval (3 queries with $t = 5$ images each), but only 12 images were presented. In this example, this occurred because 3 of the 15 images in the individual retrievals (Figure 5) appeared twice. These repeated images had their relevance scores summed and appeared just once in the final retrieval.

4 CONCLUSION

The architecture presented in this paper incorporates different techniques for visual content access: object-based image retrieval, ordinary global-based image retrieval, and multiple example query. Most CBIR systems use these approaches in isolation or only weakly integrated, while here they are truly combined. Moreover, by using the PRISM interface it is possible to get explicit information from the user about the relevance of each example image. This is done by means of image scale. Taking these characteristics together, the system is able to more faithfully capture what users have in mind when formulating a query, as was demonstrated by the experimental results.

The obtained results should encourage CBIR developers to put effort not only towards the traditional feature extraction-distance measurement paradigm, but also into the improvement of the architectural aspects concerning the capture of user query concepts. In the next stages of this work we intend to explore in more detail the information provided by the user to the interface, such as the arrangement of example images and the features of images dragged to the trash can.

ACKNOWLEDGEMENTS

This research was partially sponsored by UOL (www.uol.com.br), through its UOL Bolsa Pesquisa program, process number 200503312101a, and by the Office of Naval Research (ONR) under the Center for Coastline Security Technology grant N00014-05-C-0031.

REFERENCES

Assfalg, J., Del Bimbo, A., and Pala, P. (2000). Using multiple examples for content-based image retrieval. In IEEE International Conference on Multimedia and Expo (I), pages 335–338.

Carson, C., Belongie, S., Greenspan, H., and Malik, J. (2002). Blobworld: Image segmentation using expectation-maximization and its application to image querying. IEEE Transactions on PAMI, 24(8):1026–1038.

Castelli, V. and Bergman, L. D. (2002). Image Databases: Search and Retrieval of Digital Imagery. John Wiley & Sons, Inc., New York, NY, USA.

García-Pérez, D., Mosquera, A., Berretti, S., and Del Bimbo, A. (2006). Object-based image retrieval using active nets. In ICPR (4), pages 750–753. IEEE Computer Society.

Itti, L., Koch, C., and Niebur, E. (1998). A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on PAMI, 20(11):1254–1259.

Manjunath, B. S., Ohm, J. R., Vinod, V. V., and Yamada, A. (2001). Color and texture descriptors. IEEE Transactions on Circuits and Systems for Video Technology, Special Issue on MPEG-7, 11(6):703–715.

Marques, O., Mayron, L. M., Borba, G. B., and Gamba, H. R. (2007). An attention-driven model for grouping similar images with image retrieval applications. EURASIP Journal on Applied Signal Processing (accepted).

Mayron, L. M., Borba, G. B., Nedovic, V., Marques, O., and Gamba, H. R. (2006). A forward-looking user interface for CBIR and CFIR systems. In IEEE International Symposium on Multimedia (ISM2006), San Diego, CA, USA.

Renninger, L. W. and Malik, J. (2004). When is scene identification just texture recognition? Vision Research, 44(19):2301–2311.

Rui, Y., Huang, T., Ortega, M., and Mehrotra, S. (1998). Relevance feedback: A power tool for interactive content-based image retrieval. IEEE Transactions on Circuits and Systems for Video Technology, 8(5):644–655.

Santini, S. and Jain, R. (2000). Integrated browsing and querying for image databases. IEEE MultiMedia, 7(3):26–39.

Smeulders, A., Worring, M., Santini, S., Gupta, A., and Jain, R. (2000). Content-based image retrieval at the end of the early years. IEEE Transactions on PAMI, 22(12):1349–1380.

Stentiford, F. (2001). An estimator for visual attention through competitive novelty with application to image compression. In Picture Coding Symposium, pages 25–27, Seoul, Korea.

Tang, J. and Acton, S. (2003). An image retrieval algorithm using multiple query images. In ISSPA’03: Proceedings of the 7th International Symposium on Signal Processing and Its Applications, pages 193–196. IEEE.
