Food Recognition for Dietary Monitoring during Smoke Quitting

Sebastiano Battiato 1,a, Pasquale Caponnetto 2, Oliver Giudice 1, Mazhar Hussain 1, Roberto Leotta 1, Alessandro Ortis 1,∗,b and Riccardo Polosa 2,c

1 Department of Mathematics and Computer Science, University of Catania, Viale A. Doria, 6, 95125 Catania, Italy

2 Center of Excellence for the Acceleration of Harm Reduction, University of Catania, Via Santa Sofia 89, 95123 Catania, Italy

∗ Corresponding Author

Keywords: Food Recognition, Dietary Monitoring, AI for Health Applications.

Abstract: This paper presents the current state of an ongoing project that aims to study, develop and evaluate an automatic framework able to track and monitor the dietary habits of people involved in a smoke quitting protocol. The system will periodically acquire images of the food consumed by the users, which will be analysed by modern food recognition algorithms able to extract and infer semantic information from food images. The extracted information, together with other contextual data, will be exploited to perform advanced inferences and to correlate eating habits with the steps of the smoke quitting process, providing clinicians with specific information about the response to the quitting protocol that is directly related to observable changes in eating habits.

1 INTRODUCTION

Food recognition from digital images for the analysis of dietary habits has become an important component of health monitoring applications in several domains. Food monitoring matters because health is strongly affected by diet (Nishida et al., 2004). The impact of food on people's lives has driven research on new methods for automatic food intake monitoring and food logging (Kitamura et al., 2010). This paper presents the current state of the FoodRec project, whose objective is the study, development and evaluation of state-of-the-art digital technologies to define a framework able to track the dietary habits of an observed person and to correlate them with the smoking cessation process that the subject is undergoing. The system will periodically acquire images of the food eaten by the patient, which will then be processed by food recognition algorithms able to detect and extract semantic information from images containing food. The extracted data will be exploited to infer the user's dietary habits: the kind and amount of food consumed, how much time the user spends eating during the day, how many meals the user has and at what times, etc. Inferences performed on different days can be compared and further processed to analyse changes in the user's habits and to derive other inferences related to the user's behaviour, such as an increase in junk food intake or mood changes over time. The recording and semantic organization of daily habits can help a doctor form a better picture of the patient's behaviour, response to the quitting treatment and, hence, health needs. So far, many efforts have been devoted to the application of technology to smoke monitoring (Ortis et al., 2020a) and food recognition (Allegra et al., 2020). This project represents the first attempt to apply Artificial Intelligence (AI) and multidisciplinary competences to the definition of a framework able to guide and support people who are trying to stop smoking by acting on multiple aspects simultaneously. The Food Recognition project (FoodRec) is funded by a grant from the Foundation for a Smoke-Free World (FSFW) (project webpage: https://www.coehar.it/project/food-recognition-project/). The remainder of the paper is organized as follows. Section 2 describes the project pipeline, which is organized into six main phases (see Figure 1). Section 3 presents the expected outcomes of the project with respect to the support for smoking cessation provided by the developed dietary monitoring system. Section 4 concludes the paper by describing the current state of the project and proposing future directions.

a https://orcid.org/0000-0001-6127-2470

b https://orcid.org/0000-0003-3461-4679

c https://orcid.org/0000-0002-8450-5721

Battiato, S., Caponnetto, P., Giudice, O., Hussain, M., Leotta, R., Ortis, A. and Polosa, R.

Food Recognition for Dietary Monitoring during Smoke Quitting.

DOI: 10.5220/0010492701600165

In Proceedings of the International Conference on Image Processing and Vision Engineering (IMPROVE 2021), pages 160-165

ISBN: 978-989-758-511-1

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Figure 1: FoodRec project's phases.

2 FoodRec: RESEARCH PLAN

The project involves several phases, which are sketched in the chart shown in Figure 1. The diagram shows five main phases: the first ones concern preliminary studies and research, whereas the last ones concern the development of algorithms and software, leading to the final deployment of the resulting solutions. With respect to the diagram in Figure 1, the project's phases can be grouped into two main macro-tasks, which are detailed in the following paragraphs.

2.1 State of the Art Evaluation

After the initial procedures (see Figure 1) were completed, the research staff focused on the preliminary investigation of the tasks and research problems related to the project's purposes. This included a study of the state of the art in food recognition and dietary monitoring technologies. As a result, a report on existing products and approaches, in terms of algorithms and smartphone apps, was produced and published in (Allegra et al., 2020), detailing the features and performance of each evaluated solution. The results of that study revealed that modern food recognition techniques can support traditional self-reporting approaches to keeping an eating diary; however, more effort should be devoted to the definition of large-scale labelled image datasets. New dataset designs should focus on the quality of the annotations related to the type, area, quantity and calories of each food item depicted in an image. So far, state-of-the-art methods have focused on specific tasks performed in controlled conditions. The extreme variability of food appearance makes this task challenging, especially for ingredient inference and, hence, for the estimation of nutritional values. The study concludes that food recognition for dietary monitoring is still a developing technology, and that more effort is needed to reach the standards required by reliable medical protocols, such as smoke quitting programmes.

2.2 Applied Research and Development

After the study of the state of the art, and the consequent analysis and definition of current limits and challenges, the research moved to the applied research and development phase. This phase has a dual objective: one concerns the development of the technological aspects of the framework, the other the development of the analysis algorithms.

The iOS/Android FoodRec smartphone app for image acquisition and analysis and for dietary monitoring has been released, and is currently being tested by selected users. The FoodRec mobile app has been designed to provide a smart and accessible system for monitoring the users' daily eating habits through a dietary diary. The innovation that characterizes the FoodRec app is the automation of the food analysis and of the associated inferences. Indeed, the user just uploads a picture to the system, and all inferences are then performed automatically by means of Computer Vision and Artificial Intelligence technologies.

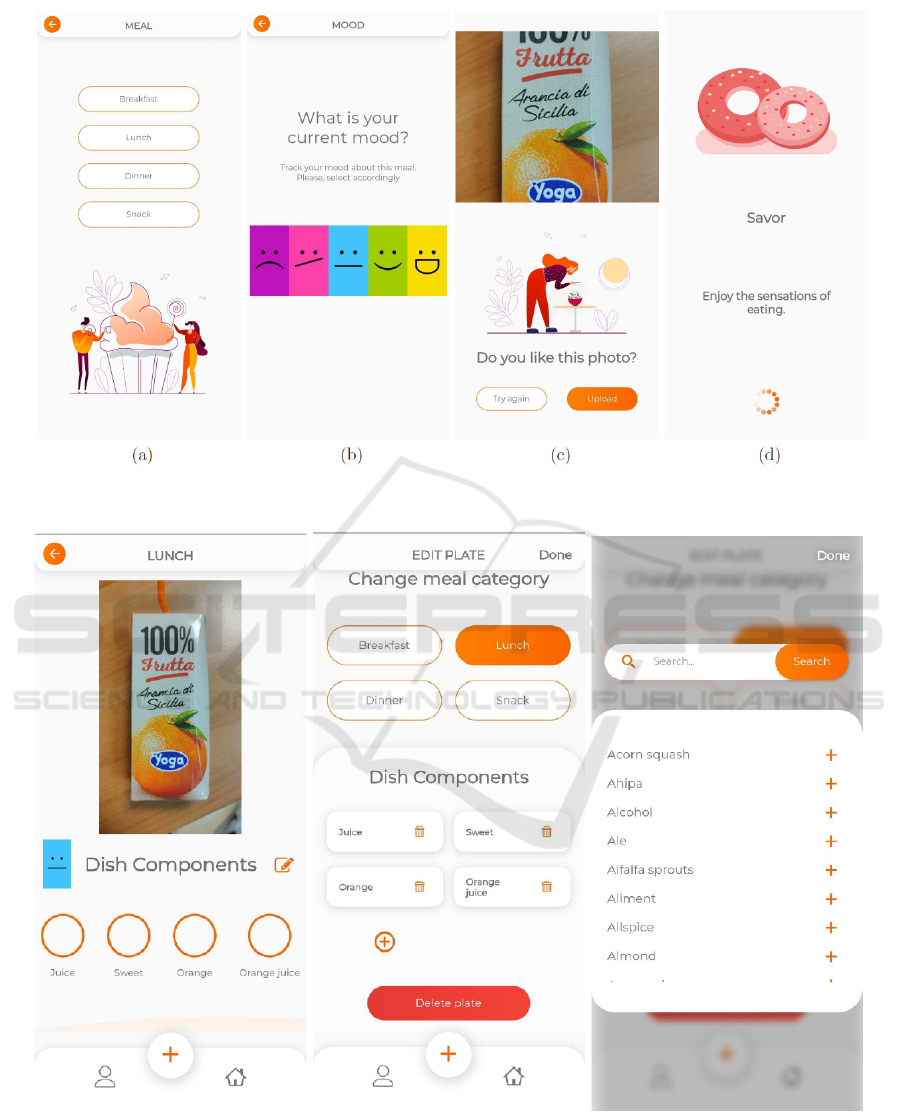

Figure 2 shows the main interface screens of the FoodRec app. First, the user chooses one of four meal types (a), then the app asks for the mood associated with the meal (b), then the picture is taken (c) and uploaded (d). The app automatically learns the times of day associated with food intake, and sends a notification to the user if a meal has not been recorded by the expected time. After the image is uploaded to the server, the recognition algorithms are applied, and the resulting inferences are shown in the app interface, as in the example shown in Figure 3. At this step, the user can edit the results (if needed) and confirm the new record for the eating diary. The information about user corrections is exploited to further improve the algorithms, as well as to specialize them with respect to the specific user's habits.
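The reminder behaviour described above can be illustrated with a minimal sketch. The paper does not specify how the expected meal time is learned, so the rule used here (the mean of past logging times plus a fixed grace period) and the names `expected_minute`, `should_remind` and `grace` are assumptions made only for illustration.

```python
from statistics import mean

def expected_minute(past_minutes):
    """Estimate the usual meal time as the mean of past logging times
    (minutes since midnight). Assumed rule, not the app's actual one."""
    return round(mean(past_minutes))

def should_remind(past_minutes, now_minute, logged_today, grace=30):
    """Return True if the meal is overdue and a notification should be sent."""
    if logged_today or not past_minutes:
        return False  # nothing to remind about, or no history to learn from
    return now_minute > expected_minute(past_minutes) + grace

# Hypothetical lunch history: 12:45, 13:00 and 13:15
lunch_history = [765, 780, 795]
remind_at_1320 = should_remind(lunch_history, 13 * 60 + 20, logged_today=False)
remind_at_1345 = should_remind(lunch_history, 13 * 60 + 45, logged_today=False)
```

With this toy history the expected lunch time is 13:00, so no reminder is due at 13:20 but one is due at 13:45 if nothing has been logged.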

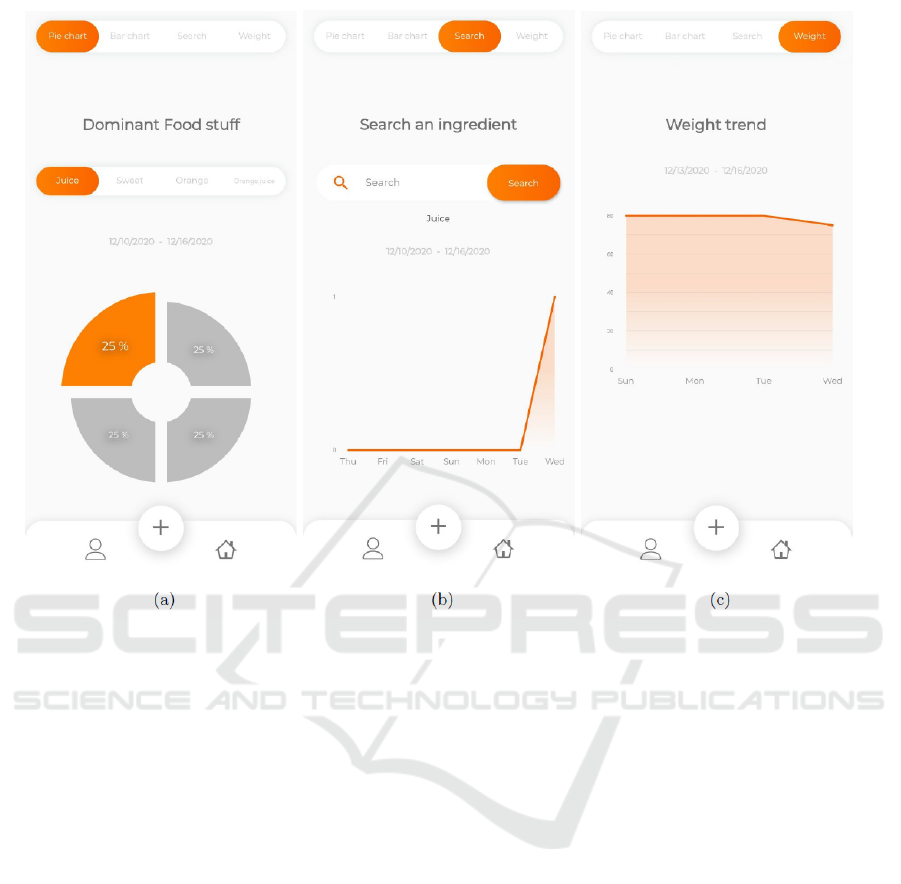

The features developed for FoodRec also include water intake and weight trackers. Moreover, users can inspect statistics related to their eating habits, including the dominant food categories and ingredients, as well as temporal visualizations of specific parameters (see Figure 4).


Figure 2: FoodRec example screens. Meal selection (a), mood associated with the meal (b), picture upload (c), motivational sentence (d).

Figure 3: FoodRec interface showing the results of the food recognition system.

Figure 4: FoodRec in-app statistics.

The analysis algorithms will comprise several steps, including image normalization, registration, feature extraction, food detection and classification. The research team is currently evaluating new methods and techniques to improve the performance of the food recognition algorithms exploited by the system. In particular, the efforts are devoted to three main tasks:

• Food Segmentation: the aim of this task is the segmentation of the multiple food items depicted in a meal picture. This will output the pixel areas associated with each food item.

• Food Classification: this classic task, combined with the food segmentation output, will provide a semantic segmentation of the input image, which details at pixel level the parts of the image related to specific food categories.

• Volume Estimation: this task is one of the most difficult objectives. Indeed, its goal is to estimate the volume of each food item. It is very challenging because it involves the estimation of 3D information from monocular vision, at a very fine scale.
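As an illustration of the output of the segmentation and classification tasks listed above, the following minimal sketch computes the pixel area of each food item from a semantic label mask. The mask format (a list of rows of integer class labels, with 0 as background), the function name `item_areas` and the toy labels are assumptions made for this example, not the project's actual data format.

```python
from collections import Counter

def item_areas(label_mask, background=0):
    """Return {label: (pixel_count, fraction_of_image)} for each food label."""
    counts = Counter(label for row in label_mask for label in row)
    counts.pop(background, None)  # ignore background pixels
    total = sum(len(row) for row in label_mask)
    return {label: (n, n / total) for label, n in counts.items()}

# Hypothetical 4x4 mask: 0 = background, 1 = pasta, 2 = salad
mask = [
    [0, 1, 1, 0],
    [1, 1, 1, 2],
    [0, 1, 2, 2],
    [0, 0, 2, 2],
]
areas = item_areas(mask)
```

Pixel areas of this kind are only a 2D quantity; the volume estimation task would additionally need depth or scale information, which this sketch does not address.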

At this current stage, research methods related to

the above mentioned tasks have been applied only on

images available from state of the art in food recogni-

tion and image segmentation (Badrinarayanan et al.,

2017) (Long et al., 2015) (Chen et al., 2014) (Noh

et al., 2015) (Ronneberger et al., 2015). However, we

plan to specialize such algorithms on the data com-

ing from the FoodRec app, which is specific with re-

spect to our purposes. The proposed system aims to

recognize food items of specific users and monitor

their habits. This task significantly differs from the

recognition of any food instance depicted by a pic-

ture, such as happens in the development of general

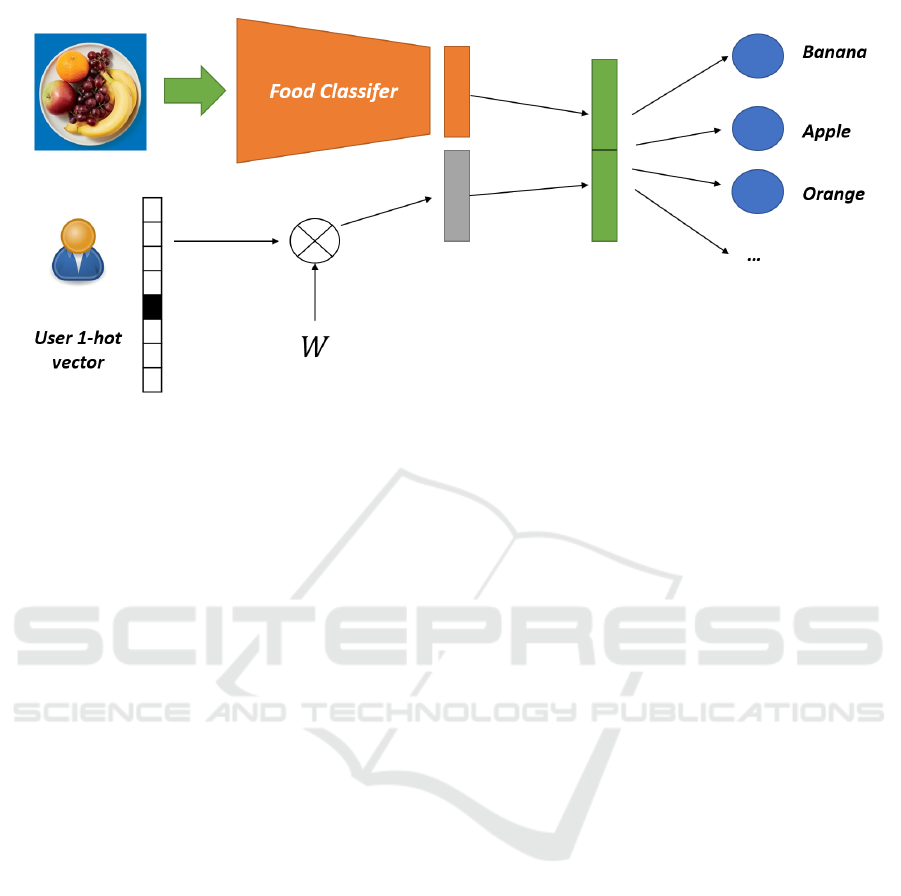

purposes food recognition systems. Figure 5 shows

the proposed architecture. In particular, a common

multi-label food classifier is composed by a Convo-

lutional Neural Network which defines a meaningful

feature representation for the input images, based on

the training task. Then, the representation is fed to

multiple logistic units (i.e., blue circles in the Fig-

ure) which are activated if the associated food item is

present in the picture. The proposed architecture will

take into account the specific user that uploaded the

picture. Indeed, since the proposed system is aimed

to systematically analyse and infer user habits, our

objective is to add to the food classification pipeline

a bias related to the user. As consequence, the indi-

vidual logistic activations will be fed with a feature

that is obtained by concatenating the image and user

feature. The latter one, is represented by the weight

matrix W in Figure 5, which will be learned from the

users’ habits during the training stage.
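The user-conditioned classification head described above can be sketched as follows. This is a minimal illustration, not the project's implementation: it assumes that W stores one learned feature row per user, that the CNN output is already available as a plain vector, and that each logistic unit has its own weights and bias; all names and numeric values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_food_items(image_feat, user_id, W, class_weights, class_biases,
                       threshold=0.5):
    """Multi-label prediction from the concatenated image and user features."""
    user_feat = W[user_id]                        # assumed: one row of W per user
    joint_feat = list(image_feat) + list(user_feat)
    probs = []
    for weights, bias in zip(class_weights, class_biases):
        score = sum(w * x for w, x in zip(weights, joint_feat)) + bias
        probs.append(sigmoid(score))              # independent logistic unit
    return probs, [p >= threshold for p in probs]

image_feat = [0.8, 0.1, 0.3]           # stand-in for the CNN representation
W = [[0.9, 0.0], [0.0, 0.9]]           # toy user features for two users
class_weights = [
    [2.0, 0.0, 0.0, 1.0, -1.0],        # unit for a hypothetical "pasta" class
    [0.0, 0.0, 2.0, -1.0, 1.0],        # unit for a hypothetical "salad" class
]
class_biases = [-1.5, -1.0]

probs_u0, present_u0 = predict_food_items(image_feat, 0, W, class_weights, class_biases)
probs_u1, present_u1 = predict_food_items(image_feat, 1, W, class_weights, class_biases)
```

In this toy setting the same image feature yields different active classes for the two users, which is the effect the user-related bias is meant to have. In a trained system both W and the per-class weights would be learned jointly rather than set by hand.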


Figure 5: Food recognition proposed architecture. Blue circles depict independent logistic activations for specific classes,

which are activated by the presence of the food item in the visual content taking into account the bias given by the user.

3 EXPECTED OUTCOMES

Abstinence from smoking is associated with several negative effects, including irritability, weight gain and eating disorders, especially in the first period of abstinence. All these effects are interconnected. The output of the food recognition system will provide indications about the user's dietary habits and anomalies at different times during the smoke quitting process. The evaluation strategy will leverage well-known statistical methodologies for assessing correlations between the observed data and known information about the smoking cessation treatment.
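One simple example of such a correlation analysis is sketched below. The paper does not name a specific statistic, so the Pearson coefficient is used here only as a familiar stand-in, and the weekly values are invented toy numbers, not project data.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)

# Hypothetical data: weeks since the quit date and the fraction of
# logged meals classified as junk food in each week.
weeks_since_quit = [1, 2, 3, 4, 5, 6]
junk_food_fraction = [0.20, 0.28, 0.35, 0.33, 0.30, 0.24]
r = pearson(weeks_since_quit, junk_food_fraction)
```

A single coefficient captures only a linear trend; a pattern that rises and then falls, as in these toy numbers, would call for a richer analysis.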

4 STATE OF THE PROJECT AND FUTURE DIRECTIONS

At this stage, the initial procedures, preliminary

investigation and research planing phases of the

project (see Figure 1) have been completed. The other

phases, except the final deploy, are currently being

carried out. Furthermore, the FoodRec app has been

tested and evaluated with a small controlled group of

test users. The tests were carried out for a period of

about four months that began on 12 August 2020 and

ended on 02 December 2020, with the participation of

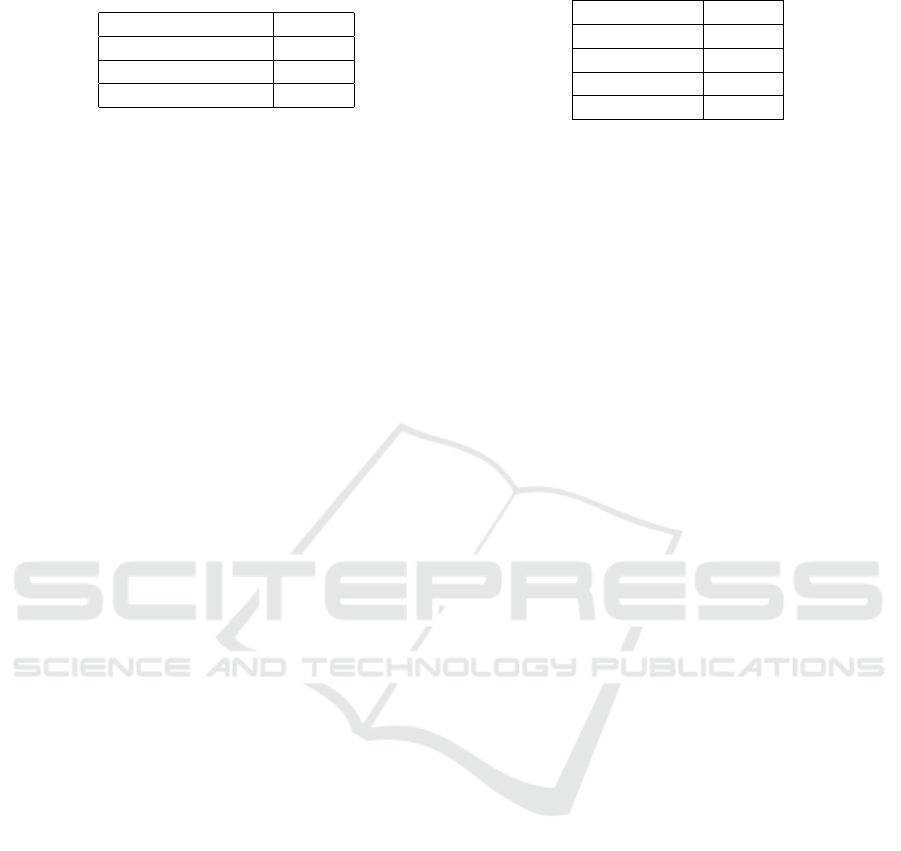

149 people aged between 19 and 60. The Table 1 sum-

marizes the mainly statistics and activities performed

by the users in the aforementioned tests. In partic-

ular, the Table 1a shows the number of interactions

there were among the users and the main features of

FoodRec (i.e., the upload of a meal’s photo or the up-

date of the drunk water), instead the Table 1b reports

the distribution of the uploaded photos among the fol-

lowing categories: breakfast, lunch, snack and dinner.

Once the tests have been ended, the users participated

to a survey panel, reporting the feedback with respect

to the app usage, which will be exploited to further

improve the app features. The next step will be the

evaluation on a larger audience of users in real-case

scenarios (i.e., not controlled users). Such ”on the

wild” evaluation will produce a large set of real-case

images from real users of the system, which will be

exploited to develop novel algorithms and inference

methods for the specific purposes of the project.

The developed dietary monitoring system could be extended to work with videos recorded by a fixed camera system, i.e., a set of cameras recording the scene from different fixed points of view. The collected data about the mood associated with food images (see Figure 2-b) can be combined with approaches to image-based sentiment analysis (Ortis et al., 2020b). Such approaches can be investigated in order to automatically infer the mood of the user (e.g., depression, happiness, etc.) from the dietary monitoring, without having to ask the user about his/her mood.

ACKNOWLEDGEMENTS

This project is funded with the help of a grant from the Foundation for a Smoke-Free World, Inc. (FSFW COE1-05).


Table 1: FoodRec - Usage statistics.

(a) Usage frequencies.

Usage Statistics    Counts
Participants        149
Meals upload        1657
Drinks update       721

(b) Meals type frequencies.

Meal Type    Counts
Breakfast    396
Lunch        553
Snack        305
Dinner       403
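The counts in Table 1 can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers reported in the table; the derived quantities (percentage shares and average uploads per participant) are computed here for illustration and are not reported in the paper.

```python
# Counts taken from Table 1
participants = 149
meal_uploads = 1657
meals_by_type = {"Breakfast": 396, "Lunch": 553, "Snack": 305, "Dinner": 403}

# The per-type counts should add up to the total number of meal uploads.
total_by_type = sum(meals_by_type.values())

# Derived quantities (not stated in the paper)
shares = {meal: round(100 * n / total_by_type, 1) for meal, n in meals_by_type.items()}
uploads_per_participant = meal_uploads / participants
```

The per-type counts sum to 1657, matching the "Meals upload" row of Table 1a.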

REFERENCES

Allegra, D., Battiato, S., Ortis, A., Urso, S., and Polosa, R. (2020). A review on food recognition technology for health applications. Health Psychology Research, 8(3).

Badrinarayanan, V., Kendall, A., and Cipolla, R. (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(12):2481–2495.

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., and Yuille, A. L. (2014). Semantic image segmentation with deep convolutional nets and fully connected CRFs. arXiv preprint arXiv:1412.7062.

Kitamura, K., De Silva, C., Yamasaki, T., and Aizawa, K. (2010). Image processing based approach to food balance analysis for personal food logging. In 2010 IEEE International Conference on Multimedia and Expo, pages 625–630. IEEE.

Long, J., Shelhamer, E., and Darrell, T. (2015). Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3431–3440.

Nishida, C., Uauy, R., Kumanyika, S., and Shetty, P. (2004). The joint WHO/FAO expert consultation on diet, nutrition and the prevention of chronic diseases: process, product and policy implications. Public Health Nutrition, 7(1a):245–250.

Noh, H., Hong, S., and Han, B. (2015). Learning deconvolution network for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, pages 1520–1528.

Ortis, A., Caponnetto, P., Polosa, R., Urso, S., and Battiato, S. (2020a). A report on smoking detection and quitting technologies. International Journal of Environmental Research and Public Health, 17(7):2614.

Ortis, A., Farinella, G. M., and Battiato, S. (2020b). Survey on visual sentiment analysis. IET Image Processing, 14(8):1440–1456.

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-Net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 234–241. Springer.
