Understanding the Impact of Image Quality in Face Processing Algorithms

Patricia Alejandra Pacheco Reina¹,², Armando Manuel Gutiérrez Menéndez¹,², José Carlos Gutiérrez Menéndez¹,², Graça Bressan² and Wilson Ruggeiro²

¹Polytechnic School, University of São Paulo, São Paulo, Brazil

²Laboratory of Computer Networks and Architecture, University of São Paulo, São Paulo, Brazil

Keywords: Face Image Quality, Face Processing, Image Distortions.

Abstract: Face processing algorithms have become increasingly popular in recent years due to their wide range of applications. As a consequence, research on the quality of face images is also increasing. Several papers have concluded that image quality does impact the performance of face processing algorithms, with low-quality images having a detrimental effect on performance. However, a comprehensive understanding of the extent to which specific distortions, such as noise, blur, JPEG compression, and brightness, affect performance is still lacking. We conducted a study evaluating the performance of three face processing algorithms on images with different levels of these distortions. The results identified Gaussian noise and Gaussian blur as the main distortions affecting performance. This paper presents a detailed description of the adopted methodology, as well as the results obtained from the study.

1 INTRODUCTION

In 2020, the use of efficient face processing algorithms increased due to the demand for this technology in many services that require some form of personal identification. This demand was driven by the social distancing and lockdown measures adopted worldwide in response to the COVID-19 pandemic. Face processing technology is widely used for security and access control through identification, verification, and liveness detection. Other tasks, such as gender classification, age estimation, and emotion detection, are also gaining attention thanks to their applications in advertising and recommendation systems. As a consequence, research on the quality of face images is also increasing, with the general consensus being that image quality is an important factor in the performance of face processing algorithms.

A recent review (Mehmood and Selwal, 2020) surveyed face recognition methods and the factors affecting their accuracy. The study divided the algorithms into appearance-based, feature-based, and hybrid methods, and evaluated their strengths and limitations while listing the main factors affecting face recognition. According to the authors, the main image-quality factors affecting face recognition performance are illumination, occlusion, noise, and low resolution.

In a survey on image quality in face recognition (Li et al., 2019), the authors stated that the main challenges lie in the first stages of the face recognition pipeline: face detection and face alignment. According to this survey, face detection is particularly impacted by low-resolution images, and the best-performing face alignment algorithms are not trained to consider image distortions, which suggests that their performance will suffer in the presence of low-quality images.

Jaturawat and Phankokkruad (2017) evaluated the face recognition accuracy of three well-known algorithms, Eigenfaces (Turk and Pentland, 1991), Fisherfaces (Belhumeur, Hespanha and Kriegman, 1997), and LBPH (Chen et al., 2009), under unconstrained conditions, considering a variety of poses and expressions, as well as different light exposures, noise levels, and resolutions. All three algorithms showed poor performance across the experiments.

Research has also addressed this issue outside the face recognition domain. Mahmood et al. (2019) identified occlusion, illumination, and noise as the main factors affecting facial expression recognition in unconstrained environments. Similarly, Kang et al. (2018) concluded that optical and motion blur negatively affect the performance of age estimation algorithms.

Reina, P., Menéndez, A., Menéndez, J., Bressan, G. and Ruggeiro, W.

Understanding the Impact of Image Quality in Face Processing Algorithms.

DOI: 10.5220/0010486501450152

In Proceedings of the International Conference on Image Processing and Vision Engineering (IMPROVE 2021), pages 145-152

ISBN: 978-989-758-511-1

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Relevant work on image quality was conducted by Dodge and Karam (2016), who studied the effects of several distortions on the performance of four deep learning architectures for image classification. They concluded that Gaussian blur and Gaussian noise had the biggest impact on these architectures, while the other distortions affected them to a lesser degree.

The available literature supports the premise that image quality influences face processing performance. However, the impact of specific image distortions is still poorly understood. With the exception of resolution, whose impact has been extensively researched and documented (Li et al., 2019), our knowledge of how other distortions affect face processing algorithms is limited. We know that images degraded by distortions such as noise, blur, or too little or too much brightness might be poorly processed by these algorithms, as outlined in the papers above. However, a deeper understanding of that impact, and of the extent to which it is relevant for face processing, would help to address this issue accurately and to propose adequate solutions.

With that motivation, we conducted a study to better understand the impact of image quality on face processing algorithms. Three face processing algorithms were tested with images under different levels of Gaussian noise, Gaussian blur, motion blur, low and high brightness, and JPEG compression. The results of the study are presented in this paper. Section 2 describes the adopted methodology, Section 3 presents the results obtained with each type and degree of distortion, and Sections 4 and 5 present the summary and the conclusions of the study.

2 EXPERIMENTAL SETUP

The methodology adopted for the study is based on the work of Dodge and Karam (2016), with a few changes to adapt it to our goal. The main differences in our approach are that the selected algorithms address different tasks rather than a single one, and that each algorithm was tested with a dataset and a set of metrics corresponding to its task. In addition, three distortions not considered in that work were included in our study: motion blur, low brightness, and high brightness.

Details about the algorithms, the datasets, the metrics, and the distortions are discussed below.

2.1 Face Processing Algorithms

The algorithms evaluated in the study are FaceNet, Deep EXpectation (DEX), and Deep Alignment Network (DAN), which address face recognition, age estimation, and face alignment, respectively. These algorithms are based on deep learning architectures and have achieved state-of-the-art results in their respective tasks.

FaceNet, proposed by Schroff and Philbin (2015), is a deep learning system that generates face embeddings for face recognition tasks such as face identification and face verification. The main contribution of this work is the introduction of a new loss function for deep learning architectures, designed specifically for face recognition: the triplet loss. FaceNet uses two types of deep convolutional neural networks (DCNNs) as base architectures: Zeiler&Fergus-style networks (Zeiler and Fergus, 2014) and Inception-type networks (Szegedy et al., 2015). For this study, an implementation of FaceNet based on the Inception architecture was chosen, and the algorithm's performance was evaluated using the accuracy and the validation rate at a fixed False Alarm Rate (FAR) of 0.001.
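As described in the FaceNet paper, the triplet loss pulls an anchor embedding toward a positive (same identity) and pushes it away from a negative (different identity) by at least a margin α, on L2-normalized embeddings: L = Σ max(‖f(a) − f(p)‖² − ‖f(a) − f(n)‖² + α, 0). The NumPy sketch below only evaluates the loss value (no gradients, no triplet mining); the function names are hypothetical and the margin 0.2 is an illustrative default.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Project embeddings (one per row) onto the unit hypersphere."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def triplet_loss(anchor, positive, negative, alpha=0.2):
    """Sum over triplets of max(||a - p||^2 - ||a - n||^2 + alpha, 0)."""
    a, p, n = (np.atleast_2d(np.asarray(v, dtype=np.float64))
               for v in (anchor, positive, negative))
    d_ap = np.sum((a - p) ** 2, axis=1)  # squared anchor-positive distances
    d_an = np.sum((a - n) ** 2, axis=1)  # squared anchor-negative distances
    return float(np.sum(np.maximum(d_ap - d_an + alpha, 0.0)))
```

A triplet contributes nothing once the negative is already farther from the anchor than the positive by more than α, which is why FaceNet focuses training on "hard" triplets that still violate the margin.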

The DEX algorithm is a deep learning approach for real and apparent age estimation from a single face image without the use of facial landmarks (Rothe, Timofte and Van Gool, 2018). The pipeline of the entire system consists of four main stages: face detection, face alignment and resizing, feature extraction, and age estimation. To measure the model's performance, the authors used the mean absolute error (MAE) in years and, for datasets without a ground-truth real age, the ε-error (Escalera et al., 2015). In this study, we evaluate the MAE for both the real and the apparent age estimates.
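Both metrics are simple to compute. A minimal NumPy sketch with hypothetical function names; the ε-error follows the ChaLearn LAP 2015 definition, ε = 1 − exp(−(x − μ)²/(2σ²)), where x is the predicted age and μ and σ are the mean and standard deviation of the human age annotations for that image.

```python
import numpy as np


def mae(predicted, target):
    """Mean absolute error in years."""
    p = np.asarray(predicted, dtype=np.float64)
    t = np.asarray(target, dtype=np.float64)
    return float(np.mean(np.abs(p - t)))


def epsilon_error(predicted, mu, sigma):
    """Average epsilon-error: 0 when the prediction equals the mean annotation,
    approaching 1 as it moves away, scaled by the annotators' disagreement."""
    x = np.asarray(predicted, dtype=np.float64)
    m = np.asarray(mu, dtype=np.float64)
    s = np.asarray(sigma, dtype=np.float64)
    return float(np.mean(1.0 - np.exp(-((x - m) ** 2) / (2.0 * s ** 2))))
```

The ε-error penalizes a given error less when annotators disagreed more (larger σ), which suits apparent age, where no single correct answer exists.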

The DAN method is a convolutional neural network for face alignment proposed by Kowalski, Naruniec and Trzcinski (2017). The proposal is inspired by the Cascade Shape Regression (CSR) framework (Xiong and De La Torre, 2013), which combines a sequence of regressors to approximate the nonlinear mapping between the initial shape of the face and the desired frontal face. In DAN, those regressors are implemented using deep neural networks. The authors used the mean error and the failure rate as metrics to support their results, so in this study we evaluate DAN's performance using both metrics.
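The paper does not state which normalization or failure threshold was used for these two metrics. The sketch below assumes the per-image mean landmark error is normalized by the inter-ocular distance (outer eye corners, points 37 and 46 in the 1-based 68-point Multi-PIE markup, i.e. indices 36 and 45) and that an image counts as a failure when its normalized error exceeds 0.08, a common choice in the face alignment literature. Names and constants are hypothetical.

```python
import numpy as np

LEFT_EYE_OUTER, RIGHT_EYE_OUTER = 36, 45  # assumed 0-based indices, 68-point markup
FAILURE_THRESHOLD = 0.08                  # assumed failure threshold


def normalized_errors(pred, gt):
    """Per-image mean point-to-point error divided by the inter-ocular distance.

    pred, gt: arrays of shape (n_images, 68, 2) with (x, y) landmark coordinates.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    point_err = np.linalg.norm(pred - gt, axis=2).mean(axis=1)
    interocular = np.linalg.norm(gt[:, LEFT_EYE_OUTER] - gt[:, RIGHT_EYE_OUTER], axis=1)
    return point_err / interocular


def mean_error_and_failure_rate(pred, gt, threshold=FAILURE_THRESHOLD):
    """Return (mean normalized error, fraction of images above the threshold)."""
    errs = normalized_errors(pred, gt)
    return float(errs.mean()), float(np.mean(errs > threshold))
```

Because the failure rate counts images rather than averaging errors, a distortion can leave the mean error almost unchanged while pushing several borderline images over the threshold, which is consistent with the failure rate reacting more strongly in several of the experiments.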

2.2 Datasets

As stated before, a dataset matching each algorithm's task was selected. Additionally, the chosen datasets had previously been used to validate the algorithms, as reported in (Schroff and Philbin, 2015), (Clapes et al., 2018), and (Kowalski, Naruniec and Trzcinski, 2017).

The Labeled Faces in the Wild (LFW) dataset (Huang et al., 2007) was used to evaluate the performance of FaceNet. LFW is composed of 13,233 face images of 5,749 individuals. All images were collected from the internet and are available as 250x250-pixel JPEG images, most of them in color. The faces were detected with the Viola-Jones face detector (Viola and Jones, 2001) and then rescaled and cropped to the aforementioned size. The dataset covers a variety of conditions in head pose, lighting, focus, resolution, facial expression, age, gender, race, accessories, make-up, occlusions, background, and photographic quality.

To evaluate the performance of DEX, the Real and Apparent Age (APPA-REAL) dataset (Clapes et al., 2018) was used. The dataset contains 7,591 images of 7,000 individuals aged 0 to 91 years, captured in unconstrained environments and with varying resolutions. APPA-REAL allows age estimation algorithms to be tested on both real and apparent age. For this study, the validation set, containing 1,978 images, was used.

Lastly, the challenging subset of the 300W dataset, called IBUG (Sagonas et al., 2013), was used to assess the performance of DAN. It consists of 135 images obtained from the internet, with variations in pose, expression, illumination, and resolution. The dataset provides landmark annotations for face alignment following the Multi-PIE annotation scheme (Gross et al., 2010).

2.3 Distortions

To illustrate the effects of image quality on face processing algorithms, four types of distortion were considered: noise, blur, brightness, and JPEG compression.

Noise can be caused by low-quality camera sensors or by the environmental conditions at the moment of acquisition (Mehmood and Selwal, 2020). For this study, we modeled the noise with a Gaussian distribution of zero mean and variance ranging from 0.01 to 0.1 in steps of 0.01.
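A minimal NumPy sketch of this noise model. The paper does not state the pixel scale or how out-of-range values were handled; the sketch assumes intensities normalized to [0, 1] (the scale on which variances of 0.01 to 0.1 are meaningful) and clips the result back into range. The function name `add_gaussian_noise` is hypothetical.

```python
import numpy as np


def add_gaussian_noise(img: np.ndarray, variance: float, rng=None) -> np.ndarray:
    """Add zero-mean Gaussian noise with the given variance to a uint8 image.

    Assumption: intensities are scaled to [0, 1] first, so `variance` is
    expressed on that scale (e.g. 0.01 -> standard deviation 0.1).
    """
    rng = np.random.default_rng() if rng is None else rng
    x = img.astype(np.float64) / 255.0
    noise = rng.normal(loc=0.0, scale=np.sqrt(variance), size=x.shape)
    noisy = np.clip(x + noise, 0.0, 1.0)  # keep values in the valid range
    return np.rint(noisy * 255.0).astype(np.uint8)


# The ten noise levels used in the study: 0.01, 0.02, ..., 0.10
VARIANCES = [round(0.01 * i, 2) for i in range(1, 11)]
```

Passing a seeded generator, e.g. `add_gaussian_noise(img, 0.05, np.random.default_rng(0))`, makes the distorted copy of a dataset reproducible across runs.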

Blur can result from unfocused camera lenses or moving targets (Huang et al., 2019). Additionally, blurred images can simulate low-resolution images, since both lack fine detail. For this study, we simulated both motion blur and Gaussian blur. Motion blur was achieved by filtering the images with kernels of different sizes (3 to 21 in steps of 2, see Table 3) whose elements have the value 1/(kernel size), and the Gaussian blur effect was achieved by varying the kernel's standard deviation from 1 to 10 in steps of 1.
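Both blur types can be sketched as separable 1-D filters. The paper does not name the library or the motion direction used, so this NumPy-only sketch assumes horizontal motion, edge-replication at the borders, and a Gaussian kernel truncated at three standard deviations; all function names are hypothetical. Since both kernels are symmetric, the correlation computed here equals convolution.

```python
import numpy as np


def _filter_along_axis(x: np.ndarray, kernel: np.ndarray, axis: int) -> np.ndarray:
    """Correlate x with an odd-length 1-D kernel along one axis (edge padding)."""
    radius = len(kernel) // 2
    pad = [(0, 0)] * x.ndim
    pad[axis] = (radius, radius)
    padded = np.pad(x, pad, mode="edge")
    n = x.shape[axis]
    out = np.zeros_like(x)
    for i, weight in enumerate(kernel):
        # Shifted slice of the padded array, aligned with the output positions
        out += weight * np.take(padded, np.arange(i, i + n), axis=axis)
    return out


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with standard deviation sigma (truncated at 3 sigma)."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    radius = int(np.ceil(3 * sigma))
    t = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(t ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()  # weights sum to 1, so flat regions are preserved
    x = img.astype(np.float64)
    x = _filter_along_axis(x, kernel, axis=0)  # vertical pass
    x = _filter_along_axis(x, kernel, axis=1)  # horizontal pass
    return np.clip(np.rint(x), 0, 255).astype(np.uint8)


def motion_blur(img: np.ndarray, kernel_size: int) -> np.ndarray:
    """Horizontal motion blur: each of the kernel_size taps has weight 1/kernel_size."""
    if kernel_size < 1 or kernel_size % 2 == 0:
        raise ValueError("kernel_size must be a positive odd integer")
    kernel = np.full(kernel_size, 1.0 / kernel_size)
    x = _filter_along_axis(img.astype(np.float64), kernel, axis=1)
    return np.clip(np.rint(x), 0, 255).astype(np.uint8)
```

The motion kernel smears detail along one direction only, while the Gaussian kernel removes detail isotropically, which may help explain why the two blur types behave so differently in the results.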

One way to simulate low and high illumination conditions is by changing the image brightness. To that end, we simulated 10 levels each of low and high brightness by altering the brightness factor with the Pillow library for Python. For low brightness, we decreased the brightness factor from 1 to 0 in steps of 0.1; for high brightness, the factor ranged from 1.2 to 3.0 in steps of 0.2.
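With Pillow, the brightness factor is applied through `ImageEnhance.Brightness`, where a factor of 1.0 returns the original image, 0.0 a black image, and values above 1.0 brighten it (pixel values saturate at 255). A minimal sketch; the helper names are hypothetical, and the low-brightness schedule follows the factors reported in Table 4 (0.9 down to 0.1).

```python
import numpy as np
from PIL import Image, ImageEnhance


def adjust_brightness(img: Image.Image, factor: float) -> Image.Image:
    """Scale image brightness by `factor` using Pillow's ImageEnhance."""
    return ImageEnhance.Brightness(img).enhance(factor)


def mean_intensity(img: Image.Image) -> float:
    """Average pixel value, handy for checking the effect of a factor."""
    return float(np.asarray(img).mean())


# Factor schedules matching the values reported in Tables 4 and 5
LOW_FACTORS = [round(1.0 - 0.1 * i, 1) for i in range(1, 10)]  # 0.9 ... 0.1
HIGH_FACTORS = [round(1.2 + 0.2 * i, 1) for i in range(10)]    # 1.2 ... 3.0
```

Because high factors saturate bright pixels at 255, detail in already-bright regions is irreversibly lost, whereas low factors only compress the intensity range until the darkest levels.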

JPEG compression is often studied as a distortion because of its intrinsic characteristics: it is a lossy compression scheme, so the decoded image differs from the original. As stated by Dodge and Karam (2016), it is worth analyzing whether the algorithms are affected by the compression quality and to what extent. To evaluate the influence of JPEG compression on the performance of the algorithms, the Pillow library was used to obtain 10 quality levels ranging from 5 to 95 in steps of 10.
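The compression step can be reproduced by round-tripping each image through an in-memory JPEG with Pillow's `quality` save option. A minimal sketch; the helper name `jpeg_compress` is hypothetical, and the conversion to RGB is an assumption needed because JPEG cannot store some modes (e.g. RGBA).

```python
import io

from PIL import Image


def jpeg_compress(img: Image.Image, quality: int):
    """Encode `img` as JPEG at the given quality and decode it again.

    Returns the decoded image and the encoded size in bytes.
    """
    buffer = io.BytesIO()
    img.convert("RGB").save(buffer, format="JPEG", quality=quality)
    size = len(buffer.getvalue())
    buffer.seek(0)
    decoded = Image.open(buffer)
    decoded.load()  # force decoding while the buffer is still available
    return decoded, size


# Ten quality levels: 5, 15, ..., 95
QUALITIES = list(range(5, 100, 10))
```

Decoding right away means the algorithms receive the compressed pixels, including blocking artifacts, exactly as they would from a JPEG file on disk.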

3 RESULTS

To interpret the results of the experiments, it is important to understand how they were conducted. The DEX and DAN algorithms each address one task, so the experiments consisted of evaluating their performance on that task, using the selected metrics, with images under different distortions at different magnitudes. FaceNet, however, is a more complex system designed to generate embeddings for face recognition tasks such as face identification and face verification. Face identification consists of assigning an identity to a face through a one-to-many operation, where the embedding of the unknown face is compared with those in the dataset in order to output the corresponding identity. Face verification, on the other hand, is a one-to-one operation, where the task is to check whether the person's embedding is close enough to the embedding of the identity he or she claims to be.
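The difference between the two operations can be sketched with Euclidean distances between embeddings, as used by FaceNet-style models. The gallery dictionary, the function names, and the threshold value are hypothetical placeholders; in practice the threshold is tuned on validation data.

```python
import numpy as np


def verify(probe: np.ndarray, claimed: np.ndarray, threshold: float) -> bool:
    """1:1 verification: accept the claim if the embeddings are close enough."""
    return float(np.linalg.norm(probe - claimed)) <= threshold


def identify(probe: np.ndarray, gallery: dict, threshold: float):
    """1:N identification: return the closest enrolled identity, or None
    when even the closest embedding is farther than the threshold."""
    names = list(gallery)
    distances = np.array([np.linalg.norm(probe - gallery[name]) for name in names])
    best = int(np.argmin(distances))
    return names[best] if distances[best] <= threshold else None
```

Identification performs one comparison per enrolled identity instead of a single one, so a distortion that shifts embeddings has more chances to produce a wrong match.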


To evaluate FaceNet's performance, the experiments followed the protocol proposed by Huang et al. (2007), in which the system has to classify each pair of images as belonging to the same person or to different people, according to previously established pairs of matched and mismatched persons from the dataset. In other words, the experiments evaluate the algorithm's performance in a verification-like operation.
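The pair-based evaluation reduces to thresholding embedding distances. A minimal sketch, assuming the distance for each LFW pair has already been computed: following the definitions in the FaceNet paper, the validation rate (VAL) is the fraction of same-identity pairs accepted and the FAR is the fraction of different-identity pairs accepted. The standard LFW protocol also selects the threshold with 10-fold cross-validation, which this sketch omits; the function name is hypothetical.

```python
import numpy as np


def verification_metrics(distances, is_same, target_far=1e-3):
    """Return (best accuracy, VAL at FAR <= target_far) over all thresholds.

    A pair is predicted "same person" when its distance is <= the threshold.
    """
    d = np.asarray(distances, dtype=np.float64)
    same = np.asarray(is_same, dtype=bool)
    n_same, n_diff = same.sum(), (~same).sum()
    # Every distinct distance is a candidate threshold; -inf means "reject all"
    thresholds = np.concatenate(([-np.inf], np.unique(d)))
    best_acc, best_val = 0.0, 0.0
    for t in thresholds:
        accepted = d <= t
        true_accepts = np.sum(accepted & same)
        false_accepts = np.sum(accepted & ~same)
        true_rejects = np.sum(~accepted & ~same)
        best_acc = max(best_acc, (true_accepts + true_rejects) / d.size)
        val, far = true_accepts / n_same, false_accepts / n_diff
        if far <= target_far:
            best_val = max(best_val, val)
    return float(best_acc), float(best_val)
```

With a FAR of 0.001, the threshold must reject practically every mismatched pair, so a few distorted impostor pairs that move closer together force a much stricter threshold; this makes VAL far more sensitive than accuracy, as observed in the results.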

The LFW website (LFW Face Database : Main, 2018) states that it is “very difficult to extrapolate from performance in verification to performance in 1:N recognition”. Nevertheless, given the nature of these two tasks, it is reasonable to assume that any changes in the algorithm's performance during verification would be more noticeable during identification.

The results obtained with each experiment are

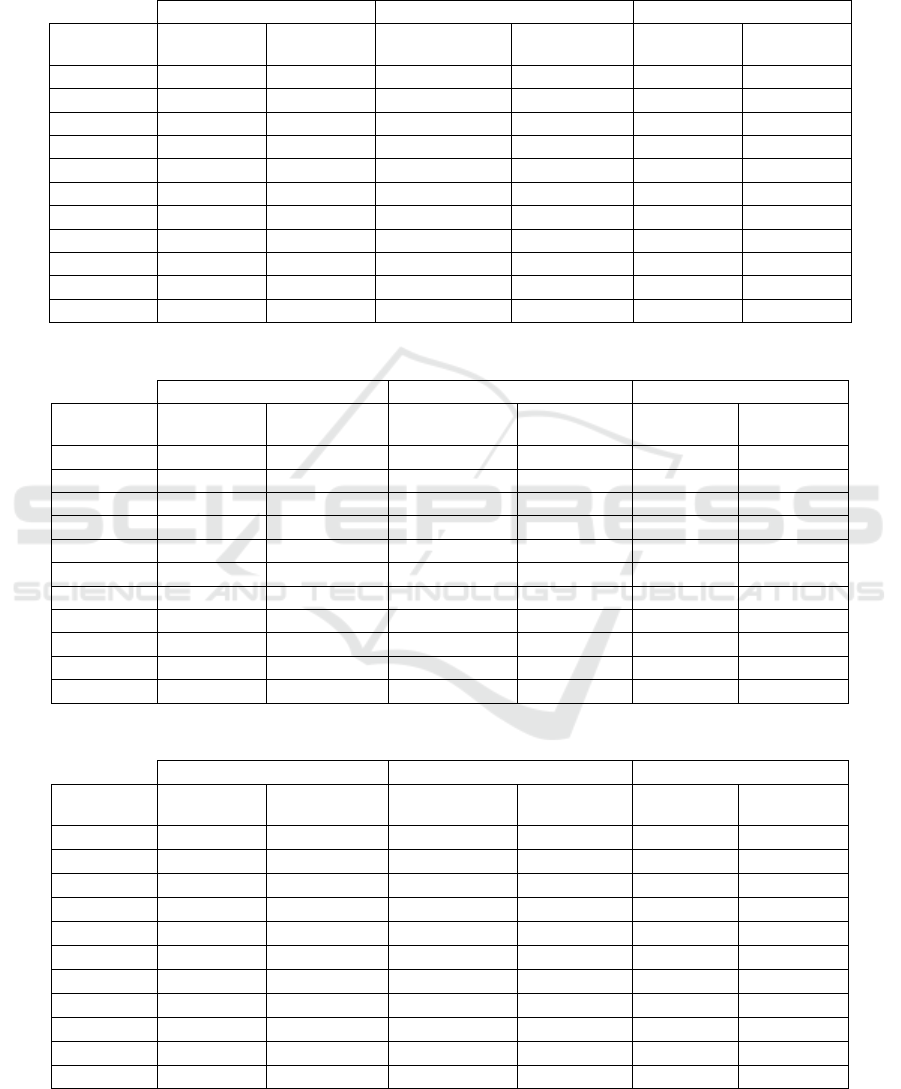

shown in tables 1 to 6. The values in the first rows

correspond to the algorithms’ performance with the

original images, which was considered as the

reference for comparison.

3.1 Noise

Table 1 shows the behavior of all metrics across the different levels of Gaussian noise. A significant decrease in performance can be observed in all three algorithms.

For FaceNet, both metrics were affected; however, there is a noticeable difference between the model's accuracy and its validation rate at FAR = 0.001. Even at the lowest variance level, the validation rate drops considerably more than the accuracy (from 0.98567 to 0.926 at a variance of 0.01, while the accuracy only drops from 0.9965 to 0.989). The algorithm appears robust in terms of accuracy; however, as stated before, a bigger impact could be expected in the identification task.

The results obtained with DEX show the mean absolute error increasing significantly for both apparent and real age. In both cases the error quickly doubled, that is, increased by 100% relative to the original images: by a noise variance of 0.02 for apparent age and 0.04 for real age. After a variance of 0.06, however, the errors plateaued.
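The doubling points can be checked directly against Table 1. This short sketch copies the DEX values from Table 1 and finds the first noise variance at which each MAE reaches twice its value on the original images; the helper name is hypothetical.

```python
# DEX mean absolute errors (years) from Table 1, indexed by noise variance
VARIANCES = [0.00, 0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10]
MAE_APPARENT = [6.46788, 11.835, 13.219, 14.087, 14.511, 14.757,
                14.958, 15.096, 15.112, 15.196, 15.129]
MAE_REAL = [7.6086, 12.659, 14.062, 14.940, 15.361, 15.551,
            15.778, 15.913, 15.948, 16.012, 15.956]


def first_level_reaching(levels, errors, factor=2.0):
    """Return the first distortion level whose error is >= factor times the
    baseline error on the undistorted images (errors[0]), or None."""
    baseline = errors[0]
    for level, error in zip(levels, errors):
        if error >= factor * baseline:
            return level
    return None


print(first_level_reaching(VARIANCES, MAE_APPARENT))  # 0.02
print(first_level_reaching(VARIANCES, MAE_REAL))      # 0.04
```

The same helper can be applied to any column of Tables 1 to 6 to compare how quickly each distortion degrades a given metric.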

As with the previous algorithms, DAN's performance worsens in the presence of noise. Both metrics were greatly impacted even at the lowest variance values; however, the failure rate was significantly more affected than the mean error.

3.2 Blur

3.2.1 Gaussian Blur

Table 2 shows the results obtained with Gaussian-blurred images. As in the previous experiment, all metrics were severely affected.

The results obtained with FaceNet show a bigger decrease in performance than in the previous experiment. Up to a standard deviation of 3, the accuracy stayed above 0.97 and the validation rate remained at approximately 0.85 or higher; beyond that point, both metrics decreased at a much higher rate.

The MAE values for apparent and real age estimation with DEX are also shown in Table 2. Interestingly, both metrics improve slightly at the lowest level of Gaussian blur (a standard deviation of 1). Since blurring is used for denoising, some of the images in the dataset might have been noisy, and the smoothing caused by that level of blur may have helped achieve better results. From that point on, both metrics worsen significantly.

As in the noise experiments, DAN's performance worsens in the presence of Gaussian blur. Interestingly, however, the mean error was more affected by Gaussian blur than by noise, whereas the failure rate, although still poor, was better in this experiment than in the previous one.

3.2.2 Motion Blur

The results obtained with the motion blur experiment are shown in Table 3. In contrast to Gaussian noise and Gaussian blur, motion blur had a significantly smaller impact on performance.

The overall accuracy of FaceNet stayed almost constant across all kernel sizes, decreasing slightly for the larger ones. The validation rate at FAR = 0.001 suffered more than the accuracy; however, its minimum value (0.6803) was considerably higher than the minimum values obtained in the previous experiments.

Motion blur also had a smaller impact on DEX than the previous distortions. Table 3 shows a slight improvement in both metrics with the smallest kernel, as was the case with Gaussian blur. After that, both metrics worsen as the kernel size increases.

A smaller impact on performance was also observed for DAN. Both metrics increase as the kernels get bigger; however, the failure rate grows faster than the mean error.

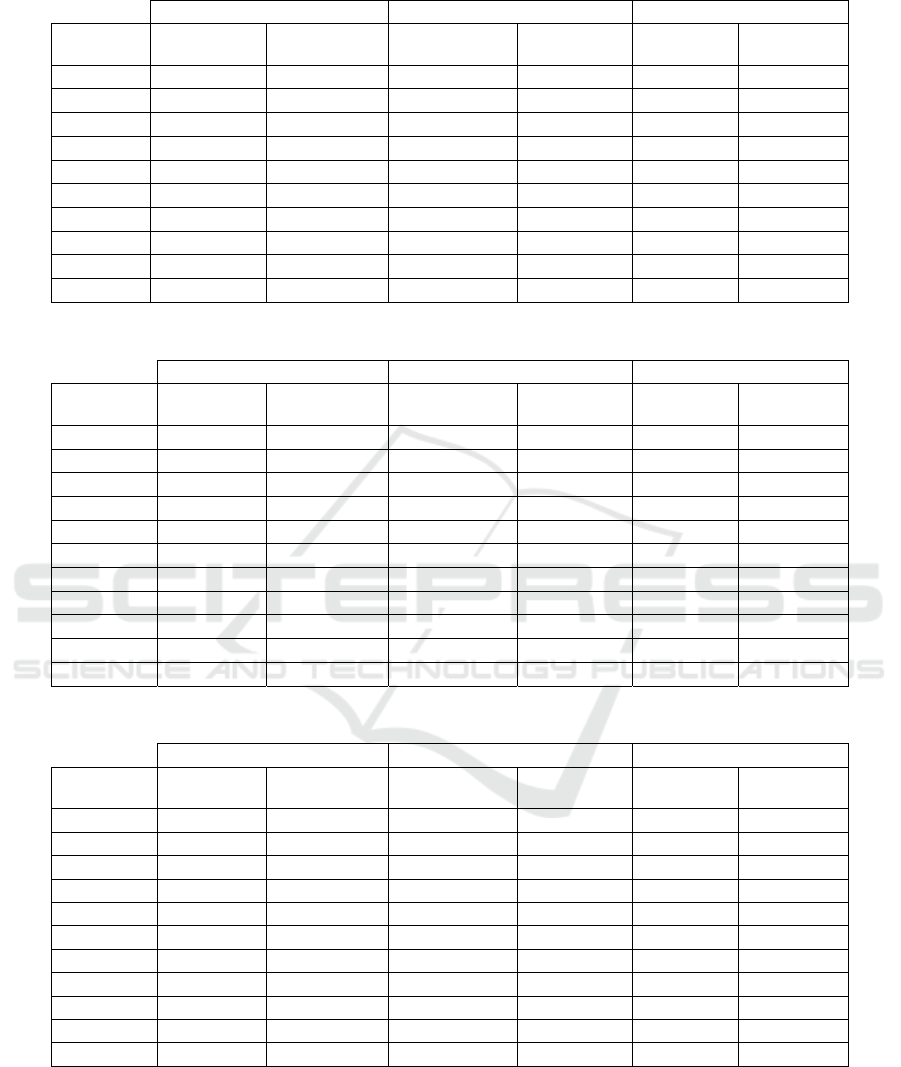

3.3 Brightness

3.3.1 Low Brightness

The effect of low brightness is shown in Table 4. FaceNet and DAN proved robust to this type of image. Their metrics display little variation for most brightness factors, changing significantly only for severely degraded images, that is, images with little to no brightness.

In the case of DEX, although the effect was smaller than in the previous experiments, the decrease in performance was more noticeable than for the other algorithms.

3.3.2 High Brightness

The performance achieved on high-brightness images is shown in Table 5. The results indicate that excess brightness has a slightly bigger impact than insufficient brightness. All algorithms experienced a greater drop in performance at the low and medium levels of brightness degradation in this experiment than in the previous one. However, the overall impact of high brightness is still relatively small, especially when compared with Gaussian blur and Gaussian noise.

3.4 JPEG Compression

The last distortion analyzed was JPEG compression. In this case, the goal was to observe the effect of different compression qualities on the performance of the algorithms. Table 6 shows that the three algorithms are robust to different compression qualities. The only noticeable impact occurred, in all three of them, at the lowest quality factor.

4 SUMMARY

The results obtained with the experiments show that, even though the distortions did not affect the algorithms' performance to the same degree, some patterns can be observed. In that sense, a series of remarks can be made regarding the impact of each distortion on these algorithms.

First, Gaussian noise and Gaussian blur constitute the biggest threats to face processing performance in terms of image quality. Both distortions noticeably impacted the algorithms' metrics even at the lowest levels of degradation.

Second, even though Gaussian blur severely impacted the performance of all three algorithms, motion blur did not have the same effect. The results show significantly less influence across most kernel sizes. This is an interesting result because it indicates that not all blur constitutes a threat to performance: unfocused images and lack of detail have a bigger impact on performance than motion.

Third, brightness and JPEG compression seem to have a small impact on performance. According to the graphs, a noticeable impact appears only when the images are severely degraded.

5 CONCLUSIONS

The focus of this paper was to study the behavior of three different face processing algorithms in the presence of noise, blur, brightness changes, and JPEG compression at different magnitudes. The goal was to draw conclusions about the impact of these distortions on face processing algorithms and to gain a more insightful understanding of the influence of image quality on this type of algorithm.

Based on the results, a series of remarks were summarized in the previous section. From them, we conclude that the analyzed algorithms, and potentially others, are unsuited for unconstrained environments where noise and blur resembling Gaussian distributions might be present. On the positive side, their deployment in scenarios with varying JPEG compression and brightness conditions would not be as compromised, unless the images are severely distorted.

The main contribution of this work is a comprehensive study of the impact of several image distortions on face processing algorithms. Whereas most studies focus on a single task, ours covered several tasks within the face processing domain, which allowed us to extract common patterns that arise when dealing with low-quality images.

The information presented in this paper is useful for developing adequate face image quality assessment methods aimed at improving face processing performance on images of different qualities. In that sense, our future work will focus on identifying the type and degree of the distortions present in face images. We believe that having that information beforehand, in conjunction with the results presented in this paper, would lead to the development of more robust face processing systems.

REFERENCES

Belhumeur, P. N., Hespanha, J. P. and Kriegman, D. J. (1997) ‘Eigenfaces vs. Fisherfaces: Recognition Using Class Specific Linear Projection’, IEEE Transactions on Pattern Analysis and Machine Intelligence, 19(7), pp. 711–720.

Chen, L. et al. (2009) ‘Face recognition with statistical local binary patterns’, Proceedings of the 2009 International Conference on Machine Learning and Cybernetics, 4(February), pp. 2433–2439. doi: 10.1109/ICMLC.2009.5212189.

Clapes, A. et al. (2018) ‘From apparent to real age: Gender, age, ethnic, makeup, and expression bias analysis in real age estimation’, IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, 2018–June, pp. 2436–2445. doi: 10.1109/CVPRW.2018.00314.

Dodge, S. and Karam, L. (2016) ‘Understanding how image quality affects deep neural networks’, in 2016 8th International Conference on Quality of Multimedia Experience, QoMEX 2016. Institute of Electrical and Electronics Engineers Inc., pp. 1–6. doi: 10.1109/QoMEX.2016.7498955.

Escalera, S. et al. (2015) ‘ChaLearn Looking at People 2015: Apparent Age and Cultural Event Recognition datasets and results’, in 2015 IEEE International Conference on Computer Vision Workshop (ICCVW).

Gross, R. et al. (2010) ‘Multi-PIE’, in Proc Int Conf Autom Face Gesture Recognit, pp. 807–813. doi: 10.1016/j.imavis.2009.08.002.

Huang, G. B. et al. (2007) ‘Labeled Faces in the Wild: A Database for Studying Face Recognition in Unconstrained Environments’, Tech Report.

Huang, R. et al. (2019) ‘Image Blur Classification and Unintentional Blur Removal’, IEEE Access. Institute of Electrical and Electronics Engineers Inc., 7, pp. 106327–106335. doi: 10.1109/ACCESS.2019.2932124.

Jaturawat, P. and Phankokkruad, M. (2017) ‘An evaluation of face recognition algorithms and accuracy based on video in unconstrained factors’, Proceedings - 6th IEEE International Conference on Control System, Computing and Engineering, ICCSCE 2016, (November), pp. 240–244. doi: 10.1109/ICCSCE.2016.7893578.

Kang, J. S. et al. (2018) ‘Age estimation robust to optical and motion blurring by deep residual CNN’, Symmetry, 10(4). doi: 10.3390/sym10040108.

Kowalski, M., Naruniec, J. and Trzcinski, T. (2017) ‘Deep Alignment Network: A Convolutional Neural Network for Robust Face Alignment’, in 2017 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, pp. 2034–2043. doi: 10.1109/CVPRW.2017.254.

LFW Face Database : Main (2018). Available at: http://vis-www.cs.umass.edu/lfw/ (Accessed: 25 January 2021).

Li, P. et al. (2019) ‘Face Recognition in Low Quality Images: A Survey’, ACM Comput. Surv, 1(April-). doi: 10.1145/nnnnnnn.nnnnnnn.

Mahmood, A. et al. (2019) ‘Recognition of Facial Expressions under Varying Conditions Using Dual-Feature Fusion’, Hindawi: Mathematical Problems in Engineering, 2019, pp. 1–13. doi: 10.1155/2019/9185481.

Mehmood, R. and Selwal, A. (2020) ‘A Comprehensive Review on Face Recognition Methods and Factors Affecting Facial Recognition Accuracy’, Lecture Notes in Electrical Engineering, 597(January), pp. 455–467. doi: 10.1007/978-3-030-29407-6.

Rothe, R., Timofte, R. and Van Gool, L. (2018) ‘Deep Expectation of Real and Apparent Age from a Single Image Without Facial Landmarks’, International Journal of Computer Vision. Springer US, 126(2–4), pp. 144–157. doi: 10.1007/s11263-016-0940-3.

Sagonas, C. et al. (2013) ‘300 Faces in-the-Wild Challenge: The first facial landmark localization Challenge’, in 2013 IEEE International Conference on Computer Vision Workshops.

Schroff, F. and Philbin, J. (2015) ‘FaceNet: A Unified Embedding for Face Recognition and Clustering’, in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, MA, pp. 815–823. doi: 10.1109/CVPR.2015.7298682.

Szegedy, C. et al. (2015) ‘Going deeper with convolutions’, in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, MA, pp. 1–9.

Turk, M. A. and Pentland, A. P. (1991) ‘Face Recognition Using Eigenfaces’, in Proceedings. 1991 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 586–591. doi: 10.1109/CVPR.1991.139758.

Viola, P. and Jones, M. (2001) ‘Rapid Object Detection using a Boosted Cascade of Simple Features’, in IEEE Conference on Computer Vision and Pattern Recognition.

Xiong, X. and De La Torre, F. (2013) ‘Supervised Descent Method and its Applications to Face Alignment’, in 2013 IEEE Conference on Computer Vision and Pattern Recognition.

Zeiler, M. D. and Fergus, R. (2014) ‘Visualizing and Understanding Convolutional Networks’, in 13th European Conference on Computer Vision – ECCV 2014.


APPENDIX

Table 1: Gaussian noise experiment results.

| Noise variance | FaceNet Accuracy | FaceNet Validation Rate | DEX MAE Apparent Age (years) | DEX MAE Real Age (years) | DAN Mean Error | DAN Failure Rate |
|---|---|---|---|---|---|---|
| 0.00 | 0.9965 | 0.98567 | 6.46788 | 7.6086 | 0.052 | 0.0518 |
| 0.01 | 0.989 | 0.926 | 11.835 | 12.659 | 0.075 | 0.237 |
| 0.02 | 0.977 | 0.853 | 13.219 | 14.062 | 0.108 | 0.415 |
| 0.03 | 0.965 | 0.751 | 14.087 | 14.940 | 0.134 | 0.556 |
| 0.04 | 0.948 | 0.618 | 14.511 | 15.361 | 0.164 | 0.659 |
| 0.05 | 0.924 | 0.393 | 14.757 | 15.551 | 0.197 | 0.763 |
| 0.06 | 0.897 | 0.330 | 14.958 | 15.778 | 0.233 | 0.844 |
| 0.07 | 0.876 | 0.183 | 15.096 | 15.913 | 0.255 | 0.867 |
| 0.08 | 0.845 | 0.109 | 15.112 | 15.948 | 0.289 | 0.911 |
| 0.09 | 0.825 | 0.052 | 15.196 | 16.012 | 0.312 | 0.963 |
| 0.10 | 0.782 | 0.048 | 15.129 | 15.956 | 0.342 | 0.985 |

Table 2: Gaussian blur experiment results.

| Standard deviation | FaceNet Accuracy | FaceNet Validation Rate | DEX MAE Apparent Age (years) | DEX MAE Real Age (years) | DAN Mean Error | DAN Failure Rate |
|---|---|---|---|---|---|---|
| 0 | 0.9965 | 0.9857 | 6.4679 | 7.6086 | 0.0524 | 0.0519 |
| 1 | 0.9957 | 0.9747 | 6.3348 | 7.5249 | 0.0553 | 0.1111 |
| 2 | 0.9899 | 0.9348 | 7.7535 | 8.6961 | 0.0866 | 0.2222 |
| 3 | 0.9758 | 0.8490 | 8.7475 | 10.0413 | 0.1508 | 0.4148 |
| 4 | 0.9587 | 0.6483 | 9.7207 | 11.0002 | 0.2484 | 0.5138 |
| 5 | 0.9192 | 0.4717 | 10.5318 | 11.8231 | 0.3410 | 0.5630 |
| 6 | 0.8515 | 0.2130 | 11.1935 | 12.4259 | 0.4268 | 0.6296 |
| 7 | 0.7840 | 0.0920 | 11.7378 | 12.9049 | 0.4960 | 0.7407 |
| 8 | 0.7388 | 0.0610 | 12.2777 | 13.3167 | 0.5495 | 0.7926 |
| 9 | 0.7055 | 0.0437 | 12.6175 | 13.6724 | 0.5942 | 0.8222 |
| 10 | 0.6792 | 0.0390 | 12.9041 | 13.9599 | 0.6312 | 0.8596 |

Table 3: Motion blur experiment results.

| Kernel size | FaceNet Accuracy | FaceNet Validation Rate | DEX MAE Apparent Age (years) | DEX MAE Real Age (years) | DAN Mean Error | DAN Failure Rate |
|---|---|---|---|---|---|---|
| 0 | 0.9965 | 0.9857 | 6.4679 | 7.6086 | 0.0524 | 0.0519 |
| 3 | 0.9953 | 0.9853 | 6.2926 | 7.4780 | 0.0527 | 0.0593 |
| 5 | 0.9942 | 0.9767 | 6.3769 | 7.6107 | 0.0548 | 0.0889 |
| 7 | 0.9927 | 0.9650 | 6.6799 | 7.9063 | 0.0624 | 0.1556 |
| 9 | 0.9925 | 0.9417 | 7.0470 | 8.2531 | 0.0730 | 0.2296 |
| 11 | 0.9890 | 0.9240 | 7.4316 | 8.6433 | 0.0875 | 0.2963 |
| 13 | 0.9852 | 0.9043 | 7.7827 | 9.0208 | 0.1028 | 0.3556 |
| 15 | 0.9810 | 0.8623 | 8.1101 | 9.3635 | 0.1207 | 0.3926 |
| 17 | 0.9748 | 0.7930 | 8.4178 | 9.6787 | 0.1399 | 0.4444 |
| 19 | 0.9663 | 0.7397 | 8.7187 | 9.9900 | 0.1584 | 0.5037 |
| 21 | 0.9583 | 0.6803 | 8.9849 | 10.2712 | 0.1546 | 0.5333 |


Table 4: Low brightness experiment results.

| Brightness factor | FaceNet Accuracy | FaceNet Validation Rate | DEX MAE Apparent Age (years) | DEX MAE Real Age (years) | DAN Mean Error | DAN Failure Rate |
|---|---|---|---|---|---|---|
| 1.0 | 0.9965 | 0.9857 | 6.4679 | 7.6086 | 0.0524 | 0.0519 |
| 0.9 | 0.9963 | 0.9850 | 6.7060 | 7.8234 | 0.0525 | 0.0519 |
| 0.8 | 0.9965 | 0.9850 | 6.8995 | 8.0156 | 0.0528 | 0.0667 |
| 0.7 | 0.9963 | 0.9830 | 7.1050 | 8.2215 | 0.0529 | 0.0667 |
| 0.6 | 0.9962 | 0.9760 | 7.4271 | 8.5286 | 0.0534 | 0.0667 |
| 0.5 | 0.9960 | 0.9753 | 7.6763 | 8.8173 | 0.0543 | 0.0963 |
| 0.4 | 0.9953 | 0.9683 | 8.1112 | 9.2502 | 0.0558 | 0.0963 |
| 0.3 | 0.9935 | 0.9610 | 8.7266 | 9.8644 | 0.0627 | 0.1407 |
| 0.2 | 0.9857 | 0.9267 | 9.6779 | 10.8681 | 0.0878 | 0.2815 |
| 0.1 | 0.5475 | 0.0003 | 12.3576 | 13.4337 | 0.2446 | 0.7556 |

Table 5: High brightness experiment results.

| Brightness factor | FaceNet Accuracy | FaceNet Validation Rate | DEX MAE Apparent Age (years) | DEX MAE Real Age (years) | DAN Mean Error | DAN Failure Rate |
|---|---|---|---|---|---|---|
| 1.0 | 0.9965 | 0.9857 | 6.4679 | 7.6086 | 0.0524 | 0.0519 |
| 1.2 | 0.9952 | 0.9863 | 6.4763 | 7.6331 | 0.0524 | 0.0519 |
| 1.4 | 0.9942 | 0.9767 | 6.8012 | 8.0657 | 0.0532 | 0.0667 |
| 1.6 | 0.9920 | 0.9480 | 7.4323 | 8.6951 | 0.0547 | 0.0741 |
| 1.8 | 0.9822 | 0.8830 | 8.1965 | 9.4396 | 0.0591 | 0.0963 |
| 2.0 | 0.9695 | 0.7690 | 9.0058 | 10.2465 | 0.0623 | 0.1111 |
| 2.2 | 0.9540 | 0.6890 | 9.7599 | 11.0076 | 0.0651 | 0.1333 |
| 2.4 | 0.9318 | 0.5743 | 10.3806 | 11.6264 | 0.0687 | 0.1778 |
| 2.6 | 0.9085 | 0.4737 | 10.8840 | 12.0786 | 0.0729 | 0.1926 |
| 2.8 | 0.8882 | 0.3850 | 11.2700 | 12.4501 | 0.0764 | 0.2148 |
| 3.0 | 0.8618 | 0.3043 | 11.6299 | 12.7698 | 0.0819 | 0.2667 |

Table 6: JPEG compression experiment results.

| JPEG quality | FaceNet Accuracy | FaceNet Validation Rate | DEX MAE Apparent Age (years) | DEX MAE Real Age (years) | DAN Mean Error | DAN Failure Rate |
|---|---|---|---|---|---|---|
| 0 | 0.9965 | 0.9857 | 6.4679 | 7.6086 | 0.0524 | 0.0519 |
| 3 | 0.9958 | 0.9857 | 6.4862 | 7.6254 | 0.0524 | 0.0519 |
| 5 | 0.9962 | 0.9850 | 6.6462 | 7.7662 | 0.0525 | 0.0519 |
| 7 | 0.9957 | 0.9843 | 6.4717 | 7.6117 | 0.0527 | 0.0519 |
| 9 | 0.9960 | 0.9873 | 6.8818 | 7.9820 | 0.0528 | 0.0519 |
| 11 | 0.9957 | 0.9837 | 7.3550 | 8.4265 | 0.0530 | 0.0593 |
| 13 | 0.9955 | 0.9837 | 6.5798 | 7.7979 | 0.0530 | 0.0741 |
| 15 | 0.9958 | 0.9793 | 6.6900 | 7.8536 | 0.0536 | 0.0667 |
| 17 | 0.9955 | 0.9830 | 7.3014 | 8.4085 | 0.0535 | 0.0593 |
| 19 | 0.9932 | 0.9670 | 7.4256 | 8.6271 | 0.0552 | 0.0741 |
| 21 | 0.9507 | 0.5370 | 10.226 | 11.371 | 0.0848 | 0.2889 |
