Guided Inquiry Learning with Technology: Investigations to Support Social Constructivism

Clif Kussmaul (1, a) and Tammy Pirmann (2, b)

(1) Green Mango Associates, LLC, 730 Prospect Ave, Bethlehem, PA, U.S.A.
(2) College of Computing & Informatics, Drexel University, Philadelphia, PA, U.S.A.

Keywords: Computer Supported Collaborative Learning, Process Oriented Guided Inquiry Learning, POGIL, Social Constructivism.

Abstract: Education needs to be more scalable and more effective. Process Oriented Guided Inquiry Learning (POGIL)

is an evidence-based social constructivist approach in which teams of learners work on activities that are

specifically designed to guide them to understand key concepts and practice important skills. This paper

describes a series of investigations of how technology might make POGIL more effective and more scalable.

The investigations include a survey and structured discussions among leaders in the POGIL community, a UI

mockup and a working prototype, and experiences piloting the prototype in a large introductory course. These

investigations show that instructors are interested in using such tools to provide richer learning experiences

for students and better reporting to help instructors monitor progress and facilitate learning. The course pilot

demonstrates that a prototype could support a large class and identifies areas for future work.

1 INTRODUCTION

Worldwide, the demand for education continues to

increase, while learner backgrounds and aptitudes

grow more diverse. Thus, we need to make education

more scalable and more effective. The ICAP Model

(Chi, Wylie, 2014) describes how learning outcomes

improve as student behaviors progress from passive

(P) to active (A) to constructive (C) to interactive (I).

Thus, students should interact with each other and

construct their own understanding of key concepts

using social constructivist approaches such as Peer

Instruction, Peer-Led Team Learning, and Process

Oriented Guided Inquiry Learning (POGIL). This

paper explores ways that technology could make

social constructivism more effective and scalable.

The rest of this paper is organized as follows.

Section 2 briefly summarizes relevant background.

Section 3 describes a set of related investigations: UI

mockups and a working prototype; a survey and

structured discussions with POGIL community

leaders; and experiences with the prototype in an

introductory computing course. Section 4 provides

conclusions and considers some future directions.

(a) ORCID: https://orcid.org/0000-0003-1660-7117
(b) ORCID: https://orcid.org/0000-0003-1797-5843

2 BACKGROUND

2.1 Computer Assisted Instruction

For over 50 years, developments in computing have

been applied to education (e.g., Rath, 1967).

Typically, a system for Computer Assisted

Instruction (CAI) or an Intelligent Tutoring System

(ITS) presents a question, evaluates responses,

provides feedback, and chooses subsequent questions

(e.g., Sleeman, Brown, 1982; Graesser, Conley,

Olney, 2012). However, Baker (2016) notes that

widely used ITS are often quite simple, and advocates

for systems “that are designed intelligently and that

leverage human intelligence” (p. 608). Computer

Supported Collaborative Learning (CSCL) seeks to

use technology to help students learn collaboratively

(e.g., Goodyear, Jones, Thompson, 2014; Stahl, Koschmann, Suthers, 2021). Jeong and

Hmelo-Silver (2016) describe desirable affordances

for CSCL that align with social constructivism: joint

tasks; ways to communicate; shared resources;

productive processes; co-construction; monitoring

and regulation; and effective groups.

Kussmaul, C. and Pirmann, T.

Guided Inquiry Learning with Technology: Investigations to Support Social Constructivism.

DOI: 10.5220/0010458104830490

In Proceedings of the 13th International Conference on Computer Supported Education (CSEDU 2021) - Volume 1, pages 483-490

ISBN: 978-989-758-502-9

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2.2 POGIL

Process Oriented Guided Inquiry Learning (POGIL)

is an evidence-based approach to teaching and

learning that involves a set of synergistic practices

(Moog, Spencer, 2008; Simonson, 2019). In POGIL,

students work in teams of three to five to interact and

construct their own understanding of key concepts. At

the same time, students practice process skills (also

called professional or soft skills) such as teamwork,

communication, problem solving, and critical

thinking. Each team member has an assigned role to

focus attention on specific skills; e.g., the manager

tracks time and monitors team behavior, the recorder

takes notes, and the presenter interacts with other

teams and the instructor. The roles rotate so that all

students take each role and practice all skills. The

instructor is not a lecturer, but an active facilitator

who observes teams, provides high-level direction

and timing, responds to student questions, guides

teams that struggle with content or with process skills,

and leads occasional short discussions.
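The role rotation described above amounts to a simple schedule. The Python sketch below is illustrative only: the function name `assign_roles` is hypothetical, it uses only the three roles named in the text, and it gives any extra members of a larger team the placeholder role "participant" (an assumption; real activities may define additional roles). Rotating the roster by one position each week means that, over as many weeks as there are team members, every student occupies every position once.

```python
ROLES = ["manager", "recorder", "presenter"]  # the roles named in the text


def assign_roles(team, week, roles=ROLES):
    """Return {student: role} for one team in a given week.

    The roster is rotated by `week` positions, so each student moves to the
    next role every week. Members beyond len(roles) get the placeholder
    role "participant" (a hypothetical choice for teams of four or five).
    """
    shift = week % len(team)
    rotated = team[shift:] + team[:shift]
    assignment = {}
    for i, student in enumerate(rotated):
        assignment[student] = roles[i] if i < len(roles) else "participant"
    return assignment


team = ["Ana", "Ben", "Cai", "Dee"]
for week in range(len(team)):
    print(week, assign_roles(team, week))
```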

A POGIL activity consists of a set of models

(e.g., tables, graphs, pictures, diagrams, code), each

followed by a sequence of questions. Each team

works through the activity, ensuring that every

member understands every answer; when students

explain answers to each other, all of them understand

better. POGIL activities use explore-invent-apply

(EIA) learning cycles in which different questions

prompt students to explore the model, invent their

own understanding of a concept, and then apply this

learning in other contexts.
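The structure of an activity (a set of models, each followed by questions that move through the explore-invent-apply cycle) can be represented as a small data model. This is a hypothetical sketch, not the format of any POGIL tool; the class names are illustrative, and `follows_single_cycle` assumes one learning cycle per model, whereas real activities may revisit earlier phases.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Phase(IntEnum):
    """Stages of the explore-invent-apply (EIA) learning cycle."""
    EXPLORE = 1
    INVENT = 2
    APPLY = 3


@dataclass
class Question:
    text: str
    phase: Phase


@dataclass
class Model:
    title: str  # e.g., a table, graph, picture, diagram, or code listing
    questions: list[Question] = field(default_factory=list)

    def follows_single_cycle(self) -> bool:
        """True if question phases never step backwards (explore -> invent -> apply).

        Assumes a single learning cycle per model (a simplification).
        """
        phases = [q.phase for q in self.questions]
        return all(a <= b for a, b in zip(phases, phases[1:]))


@dataclass
class Activity:
    title: str
    models: list[Model] = field(default_factory=list)
```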

For example, in an introductory computer

science (CS) activity, the first model describes a

simple game, and questions guide teams to identify

and analyze strategies to play the game, leading teams

to discover a tradeoff between algorithm complexity

and speed (Kussmaul, 2016). Websites have sample

activities for a variety of disciplines (http://pogil.org),

and numerous CS activities (http://cspogil.org).

POGIL was developed for college level general

chemistry, and has expanded across a wide range of

disciplines (e.g., Farrell, Moog, Spencer, 1999;

Douglas, Chiu, 2009; Lenz, 2015; Hu, Kussmaul,

Knaeble, Mayfield, Yadav, 2016). POGIL is used in

small (<30) to large (>200) classes. In a literature

review, 79% (34 of 43) of studies found positive effects

and one found negative effects (Lo, Mendez, 2019).

The POGIL Project is a non-profit organization

that works to improve teaching and learning by

fostering an inclusive community of educators. The

Project reviews, endorses, and publishes learning

activities, and runs workshops and other events. The

Project has been identified as a model “community of

transformation” for STEM education (Kezar, Gehrke,

Bernstein-Sierra, 2018).

2.3 POGIL with Technology

Before the COVID-19 pandemic, POGIL was primarily used in face-to-face settings, and students wrote or sketched

answers on paper. Activities were distributed as PDFs

or printed workbooks. Some instructors are exploring

how technology could enhance POGIL in traditional,

hybrid, and online settings. Tools (e.g., clickers,

phone apps, learning management systems) can

collect and summarize student responses, particularly

in larger classes. Collaborative documents (e.g.,

Google Docs) make it easier to copy code or data to

and from other software tools.

The pandemic has forced instructors and students

to adapt to hybrid and online learning (e.g., Flener-

Lovitt, Bailey, Han, 2020; Reynders, Ruder, 2020;

Hu, Kussmaul, 2021), often using video conferencing

tools (e.g., Zoom, Google Hangouts, Skype) and

collaborative documents. This highlights the importance of social presence, personal connections, and interactive learning for students, and has thus raised awareness of and interest in social constructivism and supporting tools. Software tools also have the potential to leverage interactive models (e.g., simulations, data-driven documents, live code, collaborative documents). Networked tools can provide near-real-time data and feedback to students and instructors; such feedback is common in CAI, but less common in social constructivist settings.

3 INVESTIGATIONS

This section describes a set of investigations,

including a user interface mockup, a survey and a

structured discussion among POGIL practitioners, a

web-based prototype, and experiences using it.

The mockup and prototype are similar to many

CAI systems and ITS. They seek to follow the advice

(summarized above) from Baker (2016) and Jeong

and Hmelo-Silver (2016). However, POGIL introduces key differences, including structured student teams, the learning cycle structure, and active instructor facilitation. Teams typically respond to a question every minute or so, so the timing and correctness of their responses should provide valuable insights into how they work and learn, and into how POGIL could better support student learning.

CSEDU 2021 - 13th International Conference on Computer Supported Education


3.1 User Interface Mockup

In 2018, a user interface (UI) mockup (in HTML and

JavaScript) was developed to stimulate discussion

and reflection, and to gather informal feedback from the

POGIL community.

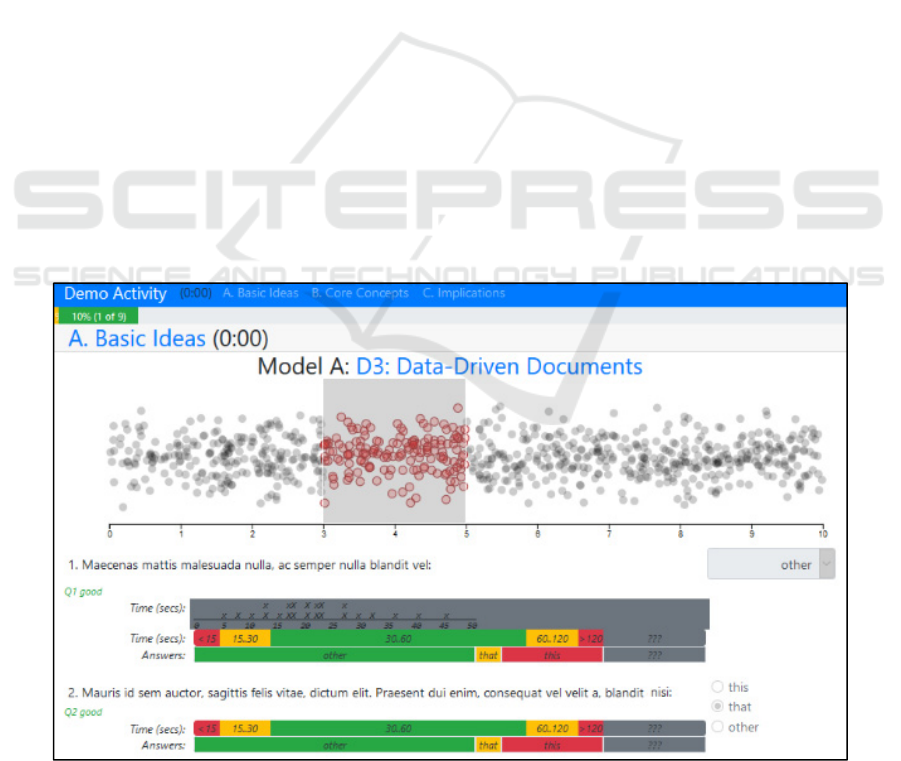

Figure 1 shows a sample view. (The model is a placeholder and the question text is lorem ipsum, to focus on visual form rather than content.) The header (blue) has the activity title, a timer, and a list of sections for easy navigation. The timer shows the time left in the activity, to help teams manage time effectively. Below the header is a status bar (yellow and green) showing the team’s progress (10% of the activity) and how often they responded correctly. Below the status bar is the section title, which could also include a countdown timer. The section starts with a model; the figure shows a Data-Driven Document (D3) (Bostock, Ogievetsky, Heer, 2011), but models could also use text, static figures, or other interactive tools. Below the model is a sequence of questions. The questions can take several forms: multiple choice, checkboxes, numeric value, short or long text, etc. When the team responds correctly, they see the next question. They can also receive feedback, perhaps with a hint for a better response (green).
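The pacing behavior in the mockup (the next question appears only after a correct response, and the status bar reports progress and how often the team responded correctly) can be sketched as follows. The class `TeamProgress` is hypothetical and assumes case-insensitive exact matching against one correct answer per question; the actual HTML and JavaScript mockup may work differently.

```python
from dataclasses import dataclass


@dataclass
class TeamProgress:
    """Hypothetical per-team state behind the mockup's pacing and status bar."""
    answers: list[str]   # one correct answer per question (assumed)
    current: int = 0     # index of the question currently unlocked
    attempts: int = 0    # all responses submitted so far
    correct: int = 0     # responses that were correct

    def submit(self, response: str) -> bool:
        """Check a response; unlock the next question only if it is correct."""
        if self.current >= len(self.answers):
            raise ValueError("activity already complete")
        self.attempts += 1
        expected = self.answers[self.current]
        ok = response.strip().lower() == expected.strip().lower()
        if ok:
            self.correct += 1
            self.current += 1
        return ok

    def visible_questions(self) -> int:
        """Answered questions plus the one currently unlocked."""
        return min(self.current + 1, len(self.answers))

    def progress(self) -> float:
        """Fraction of the activity completed (e.g., 0.1 for 10%)."""
        return self.current / len(self.answers)

    def accuracy(self) -> float:
        """Fraction of submitted responses that were correct."""
        return self.correct / self.attempts if self.attempts else 0.0
```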

As described above, a POGIL instructor is an

active facilitator, who continually monitors progress,

assists teams that have problems with content or

process, and leads short discussions. Thus, the

instructor’s view adds histograms (grey) and/or bar

graphs (red, yellow, and green) for each question to

show the distribution of responses and timing. The

instructor could drill down to see which teams are

struggling and might need help, or to find and revise

questions that might be difficult or confusing.
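The per-question distributions in the instructor’s view, and the drill-down to struggling teams, reduce to simple aggregation over response records. In this hypothetical Python sketch, each record is a tuple (team, question, response, correct, seconds), where seconds is the time the team spent before submitting that response, and a team is flagged when it gives more than `max_wrong` incorrect responses to a single question; the record format and the threshold are both assumptions.

```python
from collections import Counter, defaultdict
from statistics import median


def question_summary(events):
    """Per question: counts of each response and the median response time."""
    responses = defaultdict(Counter)
    times = defaultdict(list)
    for _, qid, response, _, seconds in events:
        responses[qid][response] += 1
        times[qid].append(seconds)
    return {
        qid: {"responses": dict(responses[qid]), "median_seconds": median(times[qid])}
        for qid in responses
    }


def struggling_teams(events, max_wrong=2):
    """Teams with more than max_wrong incorrect responses on any one question."""
    wrong = Counter((team, qid) for team, qid, _, correct, _ in events if not correct)
    return sorted({team for (team, _), n in wrong.items() if n > max_wrong})
```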

3.2 Faculty Survey

In June 2019, a survey was sent to community leaders at the POGIL National Meeting. The response rate was 72% (47 of 65). Respondents included college (n=36) and K-12 (n=9) instructors. Disciplines included chemistry (n=38), biology (n=8), and others (n=7). Typical class sizes were <25 (n=23), 25-50 (n=10), and >50 (n=6). These values seem typical of the POGIL community, except that the broader community includes a larger fraction of K-12 instructors.

Respondents rated the availability of three

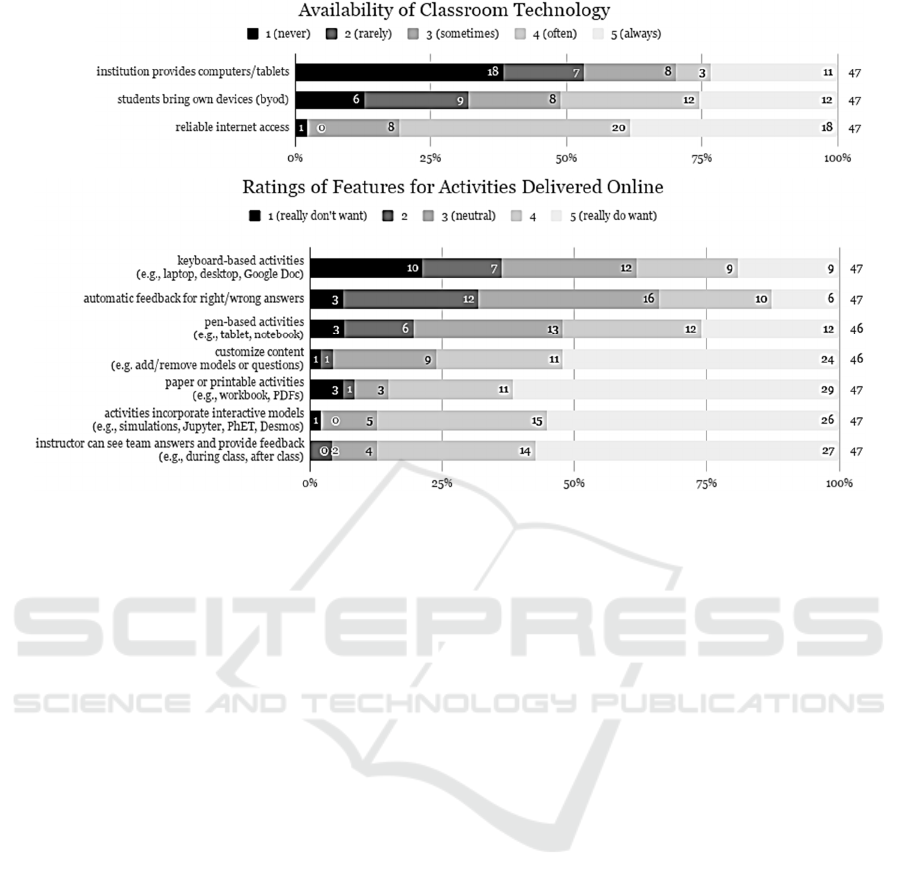

categories of technology. Figure 2 (top) summarizes

responses, from least to most common. Half (n=25)

were at institutions that never or rarely provide

computers, and only 14 often or always provide

computers. In contrast, about half (n=24) had students

who often or always bring their own devices, and only

15 had students who never or rarely bring devices.

Nearly all had reliable internet access. Thus, tools

should be web-based and device independent.

Respondents also rated their interest in seven potential features. Figure 2 (bottom) summarizes responses, from least to most popular. Respondents were split on keyboard-based activities; 17 didn’t want them, and 18 did. This might reflect POGIL’s traditional use of paper activities where students draw or label diagrams and other content. Respondents were also split on automated feedback; 15 didn’t want it, and 16 did. This might reflect unfamiliarity with such tools, or a strong belief in the instructor as an active facilitator. Pen-based activities were more popular; 9 didn’t want them, and 24 did.

Figure 1: Mockup with pacing cues, an interactive model, and questions with automatic feedback. The instructor’s view adds the distribution of time and responses for each question.

Figure 2: Summary of responses for available classroom technology (top) and features for online activities (bottom). Items are listed on the left, from least to most common. The stacked bars show the number of instructors with each response, from 1 (most negative) on the left to 5 (most positive) on the right. Total responses are shown on the right.
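The summaries above collapse a five-point rating into counts of respondents who did or did not want a feature. A minimal sketch of that collapse follows, assuming 1-2 means "didn’t want", 3 is neutral, and 4-5 means "wanted", and assuming items are ordered by positive minus negative counts (the paper does not state its ordering rule). The counts in the usage line are invented for illustration and are not survey data.

```python
def collapse(counts):
    """Collapse {rating: respondents} on a 1-5 scale into
    (negative, neutral, positive), using 1-2 / 3 / 4-5 (an assumed binning)."""
    negative = counts.get(1, 0) + counts.get(2, 0)
    neutral = counts.get(3, 0)
    positive = counts.get(4, 0) + counts.get(5, 0)
    return negative, neutral, positive


def by_popularity(items):
    """Order item names from least to most popular by positive minus negative."""
    def net(name):
        negative, _, positive = collapse(items[name])
        return positive - negative
    return sorted(items, key=net)


# Invented counts, for illustration only.
example = {"Feature X": {1: 4, 2: 6, 3: 5, 4: 8, 5: 9}, "Feature Y": {1: 1, 2: 1, 3: 3, 4: 12, 5: 20}}
print(by_popularity(example))
```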

Respondents were strongly in favor of the other

four features. Most (n=35) wanted the ability to

customize content, although this could result in

activities that don’t meet all POGIL criteria. Most

(n=40) wanted the option of paper or printable

activities; again, this is common in POGIL. Nearly all

(n=41) wanted interactive models and ways to

monitor responses and provide feedback. Thus, these

became high priorities for the prototype (see below).

3.3 Community Discussion

In June 2020, a POGIL National Meeting session

invited participants to “explore the opportunities and

constraints that technology can provide”, “explore a

prototype … for POGIL-style activities”, and “have

structured discussions about the opportunities and

challenges”. Twelve participants were selected to provide diverse perspectives, and worked in three groups. In each of three segments, they considered potential benefits and risks for one of three audiences: (a) students, (b) instructors and authors, and (c) The POGIL Project. Groups then discussed what they had written, identified themes and insights, and shared these with the larger group. Segments (a) and (b) were preceded by demos of the web-based prototype (described below).

All feedback was copied into a Freeplane mind

map (http://freeplane.org). Statements with multiple

items were split, and similar items were clustered.

Table 1 summarizes the most common benefits and

risks for each audience; the numbers in parentheses

indicate the number of references to each idea.
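The splitting and tallying steps could be approximated in code, although the clustering in this study was done by hand in a mind map. In this hypothetical sketch, multi-item statements are split on semicolons, and each item is assigned to the first cluster whose keyword it contains; the codebook is invented, and keyword matching is only a crude stand-in for human judgment.

```python
from collections import Counter

# Hypothetical codebook: keyword -> cluster label (checked in order).
CODEBOOK = {
    "data": "Data generally",
    "real time": "Monitor progress in real time",
    "learning curve": "Learning curve",
}


def split_items(statement):
    """Split a statement with several ideas into one item per idea."""
    return [part.strip() for part in statement.split(";") if part.strip()]


def tally(statements, codebook=CODEBOOK):
    """Count items per cluster; items matching no keyword go to "Other"."""
    counts = Counter()
    for statement in statements:
        for item in split_items(statement):
            text = item.lower()
            label = next((lab for key, lab in codebook.items() if key in text), "Other")
            counts[label] += 1
    return counts
```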

For students, the top benefits included flexible

learning activities in a variety of settings, and a wider

variety of models and representations. The top risks

included less interaction, more cognitive load, less

emphasis on process skills, and technology,

accessibility, and usability problems.

For instructors and activity authors, the top benefit was access to data: specifically, real-time monitoring of student progress, and data to improve activities, compare classes, and support research.

Other benefits included a single integrated platform

and sharing activities with other instructors. The top

risks included less interaction with students, and

added effort to learn new tools and practices and to

develop activities and facilitate them in classes.


Table 1: Summary of ideas from structured discussions among POGIL community leaders, to identify potential benefits and risks of a web-based environment for POGIL style activities. Numbers in parentheses indicate number of comments.

| Audience | Potential Benefits | Potential Risks |
|---|---|---|
| Students | (12) Flexibility for face-to-face, synchronous, asynchronous, and hybrid settings (during pandemic). | (14) Less student-student interaction, less discussion and collaboration. |
| | (9) Variety of models and representations, including simulations (e.g., PhET), easier use of color. | (6) More cognitive load and less student focus. |
| | (12) Other: student personalization, ease of use, multiple response types, accessibility, lower cost, flexibility, and reduced impact of “loud people”. | (6) Technology issues (rural access, devices), accessibility. |
| | | (4) Less emphasis on process skills. |
| | | (9) Other: usability, cost, multiple formats, learning curve. |
| Instructors & Authors | (24) Data generally (including 6 less specific responses). | (12) Less student-instructor interaction, due to watching dashboard instead of students. |
| | (9) Monitor student progress and answers in real time, and provide feedback, particularly in large classes. | (10) Learning curve for tools and facilitation practices. |
| | (9) Access to student responses to compare classes, improve activities, study outcomes more broadly. | (7) Increased effort to author or adapt materials using new tools (especially answer-specific feedback). |
| | (12) Integrated platform with activity, responses, feedback, reporting out, etc. (vs. using multiple tools). | (15) Other: cost and reliability, student privacy, focus on answers not process, too little or too much flexibility. |
| | (9) Less work overall, compared to instructors creating and adapting their own online materials and tools. | |
| | (12) Other: avoid classroom limitations, predefined feedback, use activities from different sources. | |
| The Project | (13) Data to improve activities and support research. | (24) Time and cost to create system, convert materials, update and support. |
| | (12) Broader access to POGIL materials, including more adopters, faster dissemination. | (10) Security and privacy for student data (and activities). |
| | (8) Increased revenue (without publishers in middle). | (8) Equity and accessibility. |
| | (7) Push to digital given COVID and other trends. | (9) Other: goes against POGIL philosophy, diverse needs, misuse, copyright, subscriptions, third party platform. |
| | (4) Other: accessibility / ADA, community support. | |

Benefits and risks for The POGIL Project were

similar to those for instructors and authors. Added

benefits included broader access to POGIL-style

materials, and possible income to support the Project.

3.4 Web-based Prototype

Based on the mockup and instructor feedback, a web-

based platform is being developed to support POGIL

and similar forms of social constructivism, and to

help instructors create, facilitate, assess, and refine

learning activities. Guided Inquiry Learning with

Technology (GILT) builds on work with social

constructivism, POGIL, CAI, ITS, CSCL, and web-

based collaboration tools. As described below, GILT

focuses on key elements used in POGIL and related

approaches, includes features for collaboration and

research, and leverages existing tools when possible

to avoid overdesign or duplication of effort. (GILT is a single-page application using the MongoDB, Express, Angular, Node.js (MEAN) software stack.)

With GILT, teams work in a browser, which saves

their responses and questions for later review and

analysis. In hybrid or online settings, students interact

virtually (e.g., in Zoom or Hangouts). An instructor

can manage teams and activities, monitor team

progress, and review and comment on team

responses. An activity author can create and edit

activities, and review student responses and timings.

In POGIL, each activity is deliberately designed with a sequence of questions about a model. In GILT, models can also be dynamic and interactive, including videos, simulations such as NetLogo (Wilensky, Stroup, 1999) or PhET (Perkins, Adams, Dubson, et al., 2005), coding environments such as repl.it (https://repl.it) or Scratch (Resnick, Maloney, Monroy-Hernández, 2009), and other components, such as Data-Driven Documents (D3) (Bostock, Ogievetsky, Heer, 2011). Questions can take varied forms, including plain text, multiple choice, numeric sliders, etc. GILT could also include questions to help assess process skills, mood, and effectiveness.

In POGIL, teams discuss each question and agree on a response; if it is incorrect, the instructor might ask a leading question or, if several teams have the same difficulty, lead a short class discussion. The instructor’s notes for an activity might include sample answers and discussion prompts. GILT can provide predefined feedback for common team responses to partially automate this process (particularly when teams are remote or asynchronous), and an author can review a report on the most common responses. Defining feedback can be time-consuming, but one author’s work could benefit many instructors.
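A minimal sketch of predefined feedback follows, assuming responses are matched after lowercasing and collapsing whitespace, and that an author supplies a table of expected responses with advice. The names `PREDEFINED`, `feedback`, and `most_common_unmatched`, and the sample responses, are hypothetical. Unmatched responses fall back to the instructor, and the report lists frequent unmatched responses as candidates for new feedback.

```python
from collections import Counter


def normalize(response: str) -> str:
    """Lowercase and collapse whitespace so trivially different answers match."""
    return " ".join(response.lower().split())


# Hypothetical author-defined responses for one question:
# normalized response -> (is_correct, advice shown to the team)
PREDEFINED = {
    normalize("7"): (True, "Correct."),
    normalize("8"): (False, "Check whether the last step needs a comparison."),
}


def feedback(response, predefined=PREDEFINED):
    """Return (is_correct, advice); unmatched responses are left to the instructor."""
    match = predefined.get(normalize(response))
    if match is None:
        return None, "No predefined feedback; the instructor may follow up."
    return match


def most_common_unmatched(responses, predefined=PREDEFINED, k=3):
    """Most frequent responses without predefined feedback, i.e., candidates
    for the author to add to the list."""
    counts = Counter(normalize(r) for r in responses if normalize(r) not in predefined)
    return counts.most_common(k)
```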

In GILT, an instructor can quickly see the status

of each team and the class as a whole, lead classroom

discussions on difficult questions, and check in with

teams having difficulty. Similarly, an activity author

can explore the most difficult questions, the most

common wrong responses, and useful correlations

(e.g., by institution, gender/ethnicity).

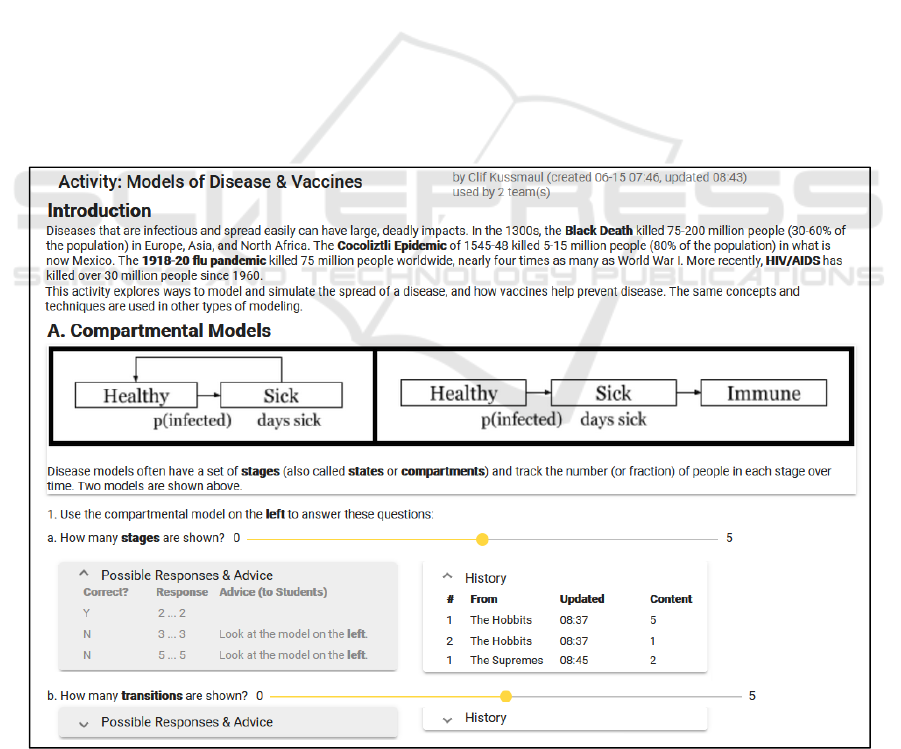

Figure 3 shows a sample view of a model and the

first few questions in an activity. The model has some

text with background information and two

compartmental models. The questions include text,

sliders for numeric responses, and checkboxes for

multiple choice. For an instructor or author, each

question also includes possible responses and advice

defined by the activity author, and a history of all

student responses. Question 1a has one correct

response, and two incorrect responses with advice to

students. One team gave two incorrect responses. An

instructor could also add team-specific feedback,

particularly in settings where the instructor can’t

easily speak to individual teams.

Thus, GILT seeks to help balance key tensions in

the learning experience. Guiding students to discover

concepts leads to better understanding but can take

longer. Student teams enhance learning but can allow

some students to be passive and let others do the

work. Automated feedback can help students who are

stuck, but can also encourage random guessing. An

instructor can provide valuable guidance and support,

but might not always be available.

GILT tracks every student response, when it occurred, and when each team starts and stops work on each part of an activity. This can provide near-real-time feedback to teams and instructors, summary reports for instructors and activity authors, and rich evidence for researchers. For example, authors could examine the distribution of responses and timings to see the impact of adding, editing, or removing elements of an activity. An author could use A/B testing to compare different forms of a question, different sequences of questions, or different models, and decide which best support student learning. Similarly, researchers could track team and instructor behavior using minute-by-minute data on which views, elements, or subcomponents are used, similar to established classroom observation protocols (e.g., Sawada, Piburn, Judson, 2002; Smith, Jones, Gilbert, et al., 2013).
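Timing data of this kind can be derived from a simple event log. The sketch below assumes a hypothetical log of (team, question, event, time) tuples with "start" and "response" events; it computes each team’s time from opening a question to its first response, and compares the median times of two question variants as a first step toward an A/B comparison. A real analysis would also compare correctness and check whether differences exceed chance.

```python
from collections import defaultdict
from statistics import median


def time_to_first_response(log):
    """Seconds from when each team opened a question to its first response.

    `log` is a list of (team, question_id, event, t) tuples, where event is
    "start" or "response" and t is seconds since the session began.
    Returns {question_id: {team: seconds}}.
    """
    started = {}
    result = defaultdict(dict)
    for team, qid, event, t in sorted(log, key=lambda e: e[3]):
        key = (team, qid)
        if event == "start":
            started[key] = t
        elif event == "response" and key in started and team not in result[qid]:
            result[qid][team] = t - started[key]
    return dict(result)


def compare_variants(times_a, times_b):
    """Median time for two variants of a question shown to different teams."""
    return median(times_a), median(times_b)
```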

Figure 3: View of a GILT activity, including a model with text and graphics, and questions with numeric sliders. The instructor’s view adds possible correct and incorrect responses (shaded) and the history of student responses.


3.5 Course Pilot & Student Feedback

In Fall 2020, GILT was piloted in weekly sessions of

an introductory computing course with five sections

of ~80 students (i.e., ~20 teams per section). The

instructor and the GILT developer worked together to

migrate learning activities, identify and resolve

problems, and clarify future priorities. Migrating

activities was usually straightforward, and could

probably be supported by undergraduate assistants.

Most teams used videoconferencing (e.g., Zoom) to

see a shared screen and each other. The user interface

was revised to improve clarity for students, and to add

information for instructors. It was helpful for the

instructor to be able to quickly see the range of

student responses, especially for questions that

prompted students to develop insights or conclusions.
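The section sizes above imply teams of about four. The paper does not say how teams were formed, so the sketch below is only one possible approach: random assignment, the function name `form_teams`, and the default size are assumptions. Dealing shuffled students round-robin into ceil(n / size) teams keeps team sizes within one of each other (e.g., 81 students become 21 teams of three or four).

```python
import random


def form_teams(students, size=4, seed=None):
    """Randomly partition students into teams of about `size` members.

    Uses ceil(n / size) teams and deals students round-robin, so team
    sizes differ by at most one.
    """
    rng = random.Random(seed)
    pool = list(students)
    rng.shuffle(pool)
    n_teams = max(1, -(-len(pool) // size))  # ceiling division
    teams = [[] for _ in range(n_teams)]
    for i, student in enumerate(pool):
        teams[i % n_teams].append(student)
    return teams
```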

The instructor reviewed and analyzed end-of-term student course evaluations. Feedback on GILT focused on the POGIL-style class and activities more than on the software platform, and was similar to that in traditional POGIL classes: some students articulated how social constructivism helped them understand concepts and develop skills, and a few disliked teams or believed they would learn more through lectures. Some students wanted smaller teams, and the use of roles was polarizing: some students found roles very helpful, while others disliked them. As in traditional POGIL classes, it might help for the instructor to articulate and demonstrate the advantages of POGIL over lecture more frequently.

Feedback on the learning activities noted ambiguous wording and some repetitive questions. Feedback on the GILT platform focused on problems saving responses, linking to external sites, and navigating within GILT. Some issues were addressed during the term, and others are in progress.

Here are four student quotes (three positive, one

negative; lightly edited for spelling and grammar):

“… one aspect that should absolutely be retained

… is the GILT worksheet activities. I feel like they

were robust and … where I learned the most … so I

ended up learning all that information in a fairly

reasonable amount of time.”

“These activities were easy to do and introduced

us to various new topics while also giving us

experience working in a team. I liked how our roles

during these activities rotated each week and allowed

us to contribute to our group in a different way each

time. … the GILT activities were effective because

they allowed us to learn new concepts while

discussing questions among team members.”

“… completing questions [in] GILT is amazing.

Not only because it would put us through a new realm

of knowledge, but because it forced us to involve in a

healthy and fruitful discussion with our peers and

helps us to create a new bonding. In fact, I also made

a few close friends from this course.”

“I feel as though the lecture sessions should be

more lecture based and not worksheet filling based.

… I might have learned more if I was being told the

information, and had to get the answers down on a

sheet to show I was paying attention. Because doing

the GILT's helped me learn the concepts, but I feel as

though if there was a secondary way of learning the

information that would have helped me learn better.”

4 CONCLUSIONS

This paper has described a set of investigations on

how technology could support social constructivism,

including UI mockups, a survey and structured

discussion among leading POGIL practitioners, a

working prototype, and a pilot in a large course.

These investigations have yielded useful insights.

Leading POGIL instructors are interested in tools that

can support POGIL practices, particularly for classes

that are large or physically distributed (e.g., due to a

pandemic). Compared to traditional paper activities,

software tools could support diverse contexts and

interactive models, provide near-real-time data and

reports to help instructors facilitate learning, and

enhance communication between teams. These

benefits are tempered by concerns about reduced

interactions among students and with teachers, and

the time required to learn new tools, migrate learning

activities, and adapt teaching and learning practices.

Piloting GILT in a large introductory course

demonstrated that the prototype could support teams

and provide useful data for instructors and activity

authors. The pilot also identified some areas for

improvement and potential enhancements.

In the future, we will continue to implement, test,

and refine technology-based tools to support POGIL.

Current priorities include:

• Enhance reporting and dashboards with charts

and natural language processing.

• Support responses using tables, matching,

sorting, and perhaps sketches.
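As a very rough illustration of the first priority, grouping similar free-text responses could help a dashboard show the range of answers at a glance. This sketch uses Python’s standard `difflib.SequenceMatcher` as a simple stand-in for natural language processing; the greedy grouping, the function name `group_similar`, and the 0.8 similarity threshold are assumptions, not part of GILT.

```python
from difflib import SequenceMatcher


def group_similar(responses, threshold=0.8):
    """Greedily group free-text responses.

    Each normalized response joins the first group whose first member is at
    least `threshold` similar; otherwise it starts a new group. Groups are
    returned largest first.
    """
    groups = []
    for response in responses:
        text = " ".join(response.lower().split())
        for group in groups:
            if SequenceMatcher(None, text, group[0]).ratio() >= threshold:
                group.append(text)
                break
        else:
            groups.append([text])
    return sorted(groups, key=len, reverse=True)
```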


ACKNOWLEDGEMENTS

This material is based in part upon work supported by

the US National Science Foundation (NSF) grant

#1626765. Any opinions, findings and conclusions or

recommendations expressed are those of the author(s)

and do not necessarily reflect the views of the NSF.

The POGIL Project (http://pogil.org) and the

broader POGIL community have provided invaluable

advice, encouragement, and support.

REFERENCES

Baker, R. S., 2016. Stupid tutoring systems, intelligent

humans. Int’l Journal of Artificial Intelligence in

Education, 26(2), 600–614.

Bostock, M., Ogievetsky, V., Heer, J., 2011. D3: Data-Driven Documents. IEEE Trans. on Visualization and Computer Graphics, 17(12), 2301–2309.

Chi, M. T. H., Wylie, R., 2014. The ICAP framework:

Linking cognitive engagement to active learning

outcomes. Educational Psychologist, 49(4), 219–243.

Douglas, E. P., Chiu, C.-C., 2009. Use of guided inquiry as

an active learning technique in engineering. Proc of the

Research in Engineering Education Symposium.

Queensland, Australia.

Farrell, J. J., Moog, R. S., Spencer, J. N., 1999. A guided-

inquiry general chemistry course. Journal of Chemical

Education, 76(4), 570.

Flener-Lovitt, C., Bailey, K., Han, R., 2020. Using

structured teams to develop social presence in

asynchronous chemistry courses. Journal of Chemical

Education, 97(9), 2519–2525.

Goodyear, P., Jones, C., Thompson, K., 2014. Computer-

supported collaborative learning: Instructional

approaches, group processes and educational designs.

In J. M. Spector, M. D. Merrill, J. Elen, M. J. Bishop

(Eds.), Handbook of Research on Educational

Communications & Technology (pp. 439–451). Springer.

Graesser, A. C., Conley, M. W., Olney, A., 2012. Intelligent

tutoring systems. In APA Educational Psychology

Handbook, Vol 3: Application to Learning & Teaching

(pp. 451–473). American Psychological Association.

Hu, H. H., Kussmaul, C., 2021. Improving online

collaborative learning with POGIL practices. In Proc.

of the ACM Technical Symp. on CS Education

(SIGCSE), (online).

Hu, H. H., Kussmaul, C., Knaeble, B., Mayfield, C., Yadav,

A., 2016. Results from a survey of faculty adoption of

POGIL in Computer Science. Proc. of the ACM Conf.

on Innovation and Technology in CS Education,

Arequipa, Peru. 186–191.

Jeong, H., Hmelo-Silver, C. E., 2016. Seven affordances of

computer-supported collaborative learning: How to

support collaborative learning? How can technologies

help? Educational Psychologist, 51(2), 247–265.

Kezar, A., Gehrke, S., Bernstein-Sierra, S., 2018.

Communities of transformation: Creating changes to

deeply entrenched issues. The Journal of Higher

Education, 89(6), 832–864.

Kussmaul, C., 2016. Patterns in classroom activities for

process oriented guided inquiry learning (POGIL).

Proc. of the Conf. on Pattern Languages of Programs,

Monticello, IL, 1–16.

Lenz, L., 2015. Active learning in a Math for Liberal Arts

classroom. PRIMUS, 25(3), 279–296.

Lo, S. M., Mendez, J. I., 2019. L: Learning—The Evidence.

In S. R. Simonson (Ed.), POGIL: An Introduction to

Process Oriented Guided Inquiry Learning for Those

Who Wish to Empower Learners (pp. 85–110). Stylus

Publishing.

Moog, R. S., Spencer, J. N. (Eds.), 2008. Process-Oriented

Guided Inquiry Learning (POGIL). (ACS Symposium

Series vol. 994), American Chemical Society.

Perkins, K., Adams, W., Dubson, M., Finkelstein, N., Reid,

S., Wieman, C., LeMaster, R., 2005. PhET: Interactive

simulations for teaching and learning physics. The Physics Teacher, 44(1), 18–23.

Rath, G. J., 1967. The development of Computer-Assisted

Instruction. IEEE Trans. on Human Factors in

Electronics, HFE-8(2), 60–63.

Resnick, M., Maloney, J., Monroy-Hernández, A., Rusk,

N., Eastmond, E., Brennan, K., Millner, A.,

Rosenbaum, E., Silver, J., Silverman, B., Kafai, Y.,

2009. Scratch: Programming for all. Communications

of the ACM, 52(11), 60–67.

Reynders, G., Ruder, S. M., 2020. Moving a large-lecture

organic POGIL classroom to an online setting. Journal

of Chemical Education, 97(9), 3182–3187.

Sawada, D., Piburn, M. D., Judson, E., 2002. Measuring

reform practices in science and mathematics

classrooms: The Reformed Teaching Observation

Protocol. School Science & Math., 102(6), 245–253.

Simonson, S. R. (Ed.), 2019. POGIL: An Introduction to

Process Oriented Guided Inquiry Learning for Those

Who Wish to Empower Learners. Stylus Publishing.

Sleeman, D., Brown, J. S. (Eds.), 1982. Intelligent Tutoring

Systems. Academic Press.

Smith, M. K., Jones, F. H. M., Gilbert, S. L., Wieman, C.

E., 2013. The Classroom Observation Protocol for

Undergraduate STEM (COPUS): A new instrument to

characterize university STEM classroom practices.

CBE—Life Sciences Education, 12(4), 618–627.

Stahl, G., Koschmann, T., Suthers, D., 2021. Computer-

supported collaborative learning. In R. K. Sawyer (Ed.),

Cambridge Handbook of the Learning Sciences (3rd

ed.). Cambridge University Press.

Wilensky, U., Stroup, W., 1999. Learning through

participatory simulations: Network-based design for

systems learning in classrooms. Proc. of the Conf. on

Computer Support for Collaborative Learning,

Stanford, CA, 80-es.
