Analysis of the Message Queueing Telemetry Transport Protocol for Data

Labelling: An Orthopedic Manufacturing Process Case Study

Mangolika Bhattacharya, Reenu Mohandas, Mihai Penica, Mark Southern, Karl Vancamp

and Martin J. Hayes

University of Limerick, Limerick, Ireland

Keywords:

Data Cleaning, Digital Manufacturing, Industry 4.0., Internet of Things (IoT), Message Queuing Telemetry

Transport (MQTT) Protocol, Message-Oriented Middleware (MOM).

Abstract:

The recent paradigm shift in the industrial production systems, known as Industry 4.0, changes the work

culture in terms of human machine interaction. Human labours are assisted by smart devices and machines as

in human-machine cooperation and human-machine collaboration. For enhancing this process, data processing

and analyses are needed. Therefore, data collection has become one of the most essential functions of large

organizations. In this work, a data engineering experiment for a grinding process within a commercial orthotics

manufacturing company is presented. The data collection and labelling is assessed for time stamp latency using

the Message Queuing Telemetry Transport (MQTT) protocol. This step is necessary to determine if alarm

prediction or ‘front running’ is feasible. The paper analyses the procured dataset and discusses its merits

as an alarm predictor, using sparsity indicators and concludes that a new investment in sensor infrastructure

is necessary. This work highlights some of the limits of performance that exist for the use of MQTT with

existing sensor infrastructure when retrofitting machine learning based alarm prediction in an industrial use

case setting. A road-map for potential solution to this problem is provided which needs to be assessed by the

company management before further progress can be made.

1 INTRODUCTION

Data cleaning and labelling has now become a ma-

jor limiting factor in the application of digital tech-

nology within the manufacturing industries (Yin and

Kaynak, 2015). By integrating smart devices, apply-

ing self-learning solutions and self-directional capa-

bilities, development costs are reduced while flexibil-

ity, speed and quality of production is increased. With

the arrival of 5G, the Industrial Internet of Things

(IIoT) and intelligent sensor-cloud powered systems

are evolving swiftly. A sensor-cloud system is a blend

of wireless sensor networks with an integrated cloud

computing capability that is combined with universal

physical sensing ability, high-speed computation and

huge storage (Wang et al., 2017). IoT gateways are

used to communicate with sensors that are agnostic

with respect to physical layer connectivity. Subse-

quent pre-processing and filtering are applied to the

data being generated by the suite of sensors/devices to

optimise transmission load on the network. The pro-

cessed data is transmitted to the cloud servers with the

use of standard protocols, via. the Message Queu-

ing Telemetry Transport (MQTT) protocol. MQTT

has become popular in Industry 4.0 applications due

to its lightweight instruction set and low-power con-

sumption properties. This makes MQTT ideal for

battery powered sensors connected by wireless net-

works in a possibly ad hoc fashion. In this paper, data

is collected using a dedicated Programmable Logic

Controller (PLC) for a particular (orthotic grinding)

commercial orthopedics manufacturing process. Al-

though the MQTT protocol is well-suited for agile

access to process variables, we found that a number

of non-linear time varying artifacts are exhibited by

the collected data. The dataset comprises a set of

variables, associated values and cloud based times-

tamps that are validated in an irregular fashion by

process operators. Moreover, the dataset consists of

both input parameters to the machine and the alarms

that have been generated in response to performance

abnormalities that run outside established confidence

limits. The objective of the work is to create an intel-

ligent system that can use machine learning to capture

and ultimately predict alerts so that corrective action

can be taken before occurrence of catastrophic events

Bhattacharya, M., Mohandas, R., Penica, M., Southern, M., Vancamp, K. and Hayes, M.

Analysis of the Message Queueing Telemetry Transport Protocol for Data Labelling: An Orthopedic Manufacturing Process Case Study.

DOI: 10.5220/0010450402150222

In Proceedings of the 6th International Conference on Internet of Things, Big Data and Security (IoTBDS 2021), pages 215-222

ISBN: 978-989-758-504-3

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

215

thereby improving product quality and minimising

downtime. This paper focuses on the problems that

are caused by the latency issues in large databases due

to the application of MQTT and suggests potential so-

lutions. This paper is organized as follows. Section 2

discusses related work and concepts in this area of re-

search. Section 3 presents a case study on storage of

data in the industry and the problems associated with

it. Section 4 analyzes the MQTT experimental obser-

vations. Section 5 proposes a new system architecture

for real-time data analysis that works well in simula-

tion. Finally, Section 6 concludes the paper and sug-

gests a roadmap for installation of the architecture on

the actual commercial process.

2 PRELIMINARIES AND

RELATED WORK

The work in this paper considers data engineering

challenges for Cyber Physical System (CPS) applica-

tions in Industry 4.0. Such applications require op-

timized manufacturing processes for smart allocation

of intelligent manufacturing subsystems for the pur-

pose of production. The objective is to support cus-

tomer optimized individual (so called ‘Batch size 0’)

product manufacturing. This intelligent control of

subsystem utilization enables cycle time, reliability,

security, logistics and circular economy/sustainability

considerations to be actively managed. Therefore, the

first objective of the the work is to select an informa-

tion transfer protocol that directly Industry 4.0 aims of

resource efficient production through the use of state-

of-the-art CPS infrastructure (M

¨

oller, 2016).

2.1 Industry 4.0

Industry 4.0 denotes the arrival of Internet of

Things(IoT), smart devices, sensor networks, and the

entire cyber-physical systems (CPS) which power the

cloud based manufacturing systems (Vaidya et al.,

2018). First industrial revolution was powered by the

invention of water and steam powered engines and

machines. Second industrial revolution or which can

be termed as “Industry 2.0” was made possible due

to the arrival of the mass production of goods using

electrical engines and machines. Third industrial rev-

olution or “Industry 3.0” marks the beginning of au-

tomation using PLCs and IT infrastructure. Industry

4.0 is an era of digital manufacturing. Digital manu-

facturing is a process of smart manufacturing, which

fuses the virtual world with the real world through the

cyber-physical infrastructure (M

¨

oller, 2016). This is

aimed to improve the quality and quantity of goods

being manufactured. Industry 4.0 dwells on the prin-

ciples of interoperability, virtualization, decentral-

ization, real-time capability, service orientation and

modularity (Khan et al., 2017).

2.2 MOM Protocols for Industry 4.0

Message-oriented middleware (MOM) is software

and/or hardware infrastructure that dynamically as-

sists the transmission and receipt of messages in

an IoT network. MQTT is a particular instance of

the MOM model based protocol (Banks and Gupta,

2014). MQTT was developed by Andy Stanford-

Clark of IBM in 1999 (Banks and Gupta, 2014)

(Luzuriaga et al., 2015). Other commercial exam-

ples of the MOM protocol include the Advanced Mes-

sage Queuing Protocol (AMQP), and the Data Distri-

bution Service (DDS) protocols. AMQP, created by

John O’Hara at JPMorgan Chase in London in 2003

(Kramer, 2009) (Luzuriaga et al., 2015), provides en-

cryption for the purpose of security(Luzuriaga et al.,

2015) (Novelli et al., 2018). This makes AMQP more

secure but at the same time more resource heavy and

not optimized in terms of power consumption. For

this reason AMQP is not seen as being appropriate

for industry 4.0 applications. DDS is an MOM based

protocol that does not employ a brokerage system

for event driven data flow. (Pardo-Castellote, 2003)

(Yang et al., 2012). DDS systems generally con-

sist of a “databus” which connects the publisher to

the relevant subscriber. A vector of parameters are

used to control the flow of information from the pub-

lisher to the subscriber thereby making it extremely

suitable for secure real-time access. It is necessar-

ily resource-heavy in its implementation and not suit-

able at present for deterministic low-power consum-

ing systems. DDS was initially developed for critical

military applications. DDS does not include a broker

but a set of parameters which control the quality-of-

service (QoS) through management of the informa-

tion flow from the publishers to the subscribers. This

fast and direct control of flow of information in DDS

protocol makes it an excellent choice for real-time

data analysis if it can be deployed in energy limited

low power devices and sensor networks. The use of

DDS is an on going research theme outside the scope

of this paper and will be considered in future work.

2.3 MQTT Fundamentals

MQTT has 3 types of QoS (Silva et al., 2018). QoS 0

denotes that the messages are delivered at most once,

and either the publisher or the subscriber stores the

message. QoS 1 means the publisher stores the mes-

IoTBDS 2021 - 6th International Conference on Internet of Things, Big Data and Security

216

sage, sends it at least once and keeps sending the mes-

sage until a confirmation is received from the sub-

scriber. QoS 2 denotes that both the publisher and the

subscriber store the message until both the parties re-

ceive confirmation that the message has been received

by the subscriber at least once. MQTT does not have

out-of-order delivery of messages, which creates la-

tency issues that need to be mitigated for real time

control applications. Separate factors such as network

congestion and packet-losses need to be managed dy-

namically to minimise latency. This work considers

a light-weight commercial implementation of MQTT

that makes it suitable for low-power applications. To

summarize, it can be said that AMQP is resource-

heavy, power consuming but a secure implementa-

tion. DDS is suitable for real-time applications but

not yet suitable for low-power and light-weight appli-

cations. MQTT is, at present, the only protocol that is

capable of being deployed in an Industry 4.0 suitable

for light-weight and low-power setting only when the

latency issues are small enough to allow a floor level

on information throughput. This paper considers how

this floor level on performance can be dynamically

assessed in a real time setting.

In this orthotics use case, the process relates to

grinding of particular knee orthotics, to provide knee

replacement solutions, with a comprehensive suite of

orthopaedic knee implant products and instruments. It

is an ultimate objective that last minute setpoint data

can be transmitted to the process so that personalised

medical applications can be supported.

MQTT is used to send data collected by a number

of PLC supported sensors to the cloud (Silva et al.,

2018). MQTT utilizes a publish-subscribe standard

for the transmission of data. In (Silva et al., 2018)

the authors dynamically investigated the latency in

transmission of data using the MQTT protocol and

reported significant real time control issues.

In order to address the latency issue that has been

identified as the key limiting factor for performance,

the authors of (Peralta et al., 2017) presented a ’fog’

based computing scheme that also analysed the over-

all network energy consumption for a cluster of IIoT

nodes and performed simulations on real data-sets us-

ing machine learning algorithms. They then com-

pared the results with traditional MQTT schemes.

The authors of (Khan et al., 2017) considered the

data engineering challenges presented by the use of

MQTT in real-time digital manufacturing processes.

This work actively considered the problem of data

transformation wherein raw heterogeneous data is

made suitable for interoperability with a number of

different machines acting within a particular process.

This approach enabled the prediction of machine fail-

Figure 1: IoT architecture.

ure that is considered here. Other problems that were

considered include data integration and modelling,

real-time access to the data, privacy and security, and

data presentation. This work assesses the impact of

latency issues on prediction. Other DDS type pro-

tocols have not been implemented as yet but will be

considered in future work.

3 DATA STORAGE AND

LABELLING CHALLENGES

A generalised diagram of the cloud architecture con-

sidered in this work is given in Fig. 1. The PLC is any

industrial computer which is adapted for the direct

control of a component of the manufacturing process

and provides process fault diagnosis where appropri-

ate for real time feedback (Bolton, 2015). The Open

Platform Communication Unified Architecture (OPC

UA) server is the software platform considered here

for access to all PLC data and and is the MQTT broker

in this use case. The data is transferred from the OPC

UA client, and from there to the cloud. The Gate-

way in this setting is an industrial grade computer,

containing an application which connects to the OPC

UA server section 3.1. The critical data is filtered out

at this level and sent to the cloud using a standard-

ised MQTT protocol. The data visualisation is written

in java using a spring boot framework in conjunction

with NoSQL database and MongoDB.

3.1 OPC UA

The OPC UA standard, here controls all the commu-

nication that takes place on devices within machines,

between machines, and from machines to systems in

a convergence of Information Technology (IT) and

Operational Technology (OT) for the grinding pro-

cess within a commercial orthotics setting. The OPC

UA is used with any software platform, i.e. it is scal-

able from minimum embedded controllers to massive

Analysis of the Message Queueing Telemetry Transport Protocol for Data Labelling: An Orthopedic Manufacturing Process Case Study

217

cloud infrastructures, but the Java platform is used

here for reasons of convenience in relation to inter-

facing in real time with the NoSQL database.

3.2 Application of MQTT Protocol: The

Methodology

As discussed in the previous section, this MQTT in-

stance runs with a relatively small data packet size in

order to keep the consumption of the power supply

low (Atmoko et al., 2017). The physical layer pro-

tocol is ‘data-agnostic’, i.e. data can be transmitted

in a variety of heterogeneous formats such as binary,

text, XML, or JSON that is specified by the particular

sensor that is employed on the machine. The PLC is

responsible for individual sensor handshaking proto-

cols.

This instance of MQTT requires two main soft-

ware components:

• MQTT Client is the web platform developed to

obtain the data.

• MQTT Broker is required to handle publish and

subscribe data. A Linux platform handles the free

available brokers (mosquitto and HiveMQ in this

use case).

The data obtained from the process resulted in

dataframes of two types of data, namely telemetry and

alarm variables. More details on the dataset is dis-

cussed in sections 4.1 and 4.2.

MQTT protocol follows First-In-First-Out (FIFO)

method. When an event occurs in the machine which

raises an alarm, the MQTT protocol does not allow

the event to be recorded in the server instantly but

awaits it in a queue. Since the PLC contains over

500 telemetry variables, the queue at a certain time

can be filled with a lengthy stream of data. This may

cause significant delay in the recording of the alarm

into the server. This time lag can vary depending on

many factors such as the PLC being offline, number

of awaiting notifications etc. Another probable rea-

son for this random offset is the inbuilt clock in PLC

which gets out-of-sync with the internet time.

4 MQTT DATASET

OBSERVATIONS

This section deals with problems associated with the

analysis of the MQTT data. Most data errors are

detected during the processing of the downloaded

dataset from the cloud. We present the data cleaning

in a step-by-step process, involving cycles of screen-

ing, diagnosing, and editing of suspected data abnor-

malities.

Initially the data is downloaded from MongoDB in

CSV files and imported into a pandas dataframe.

There are over 500 variables in the PLC. The size of

our database is over 30 GB, collected for a period of

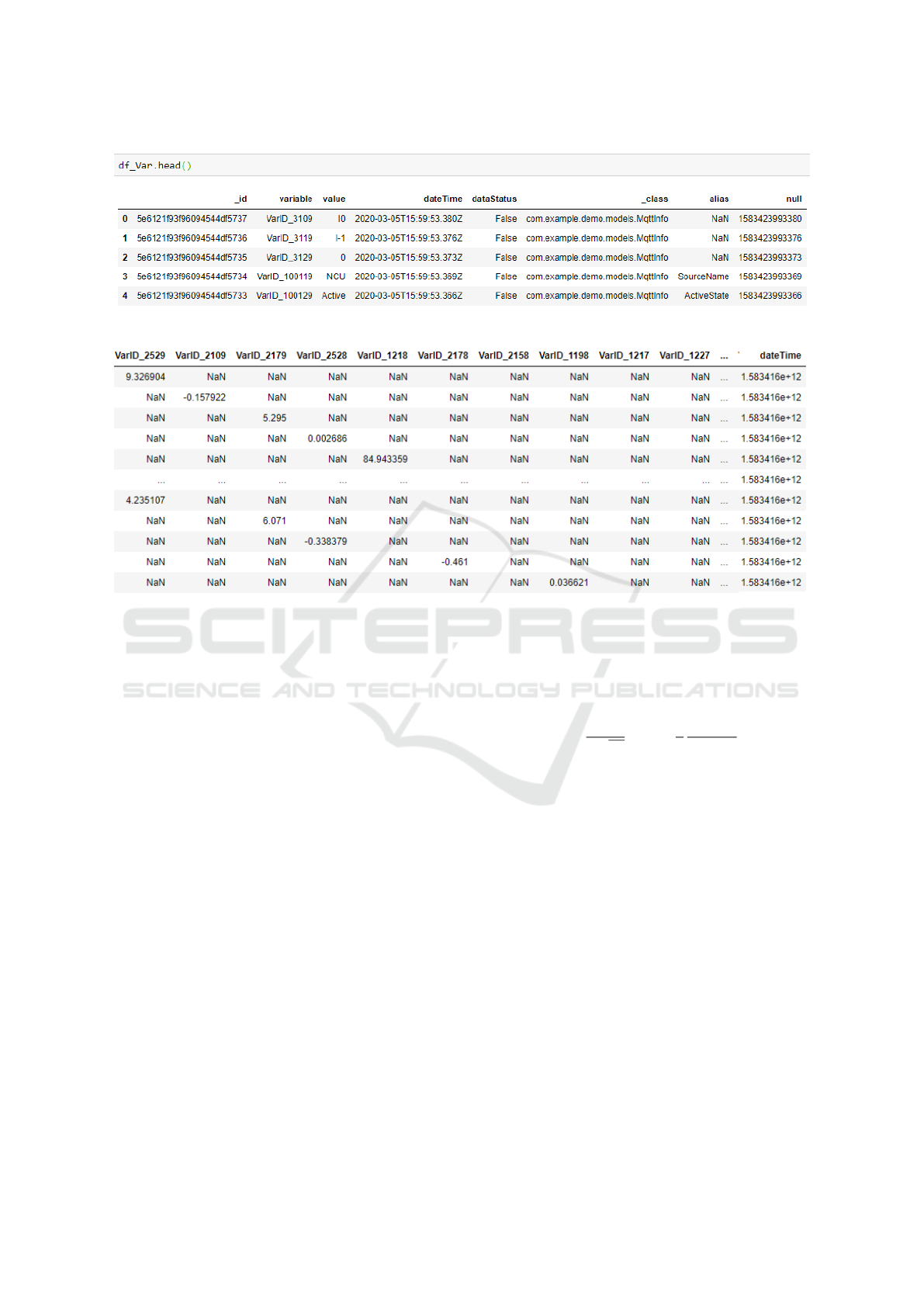

3 months. Fig. 2 illustrates the first five rows of the

data received from the database after initial cleaning.

4.1 Telemetry Variables

The telemetry variables refer to the process variables

which generate values during the operation of the

PLC, such as the speed of a spindle. Telemetry vari-

ables have:

• id: an identifier,

• variable: a variable name,

• dateTime: time at which the variable is uploaded

to the cloud,

• dataStatus: True/False i.e. live/cached data,

• alias: description of the variable.

Once the telemetry variables are downloaded from

the database as given in fig. 2, they are restructured

with variable IDs in the columns and cloud times-

tamps in the rows. The data that is received from the

database, has True/False tags. This is because, IoT

cloud service broadcasts data at a predefined inter-

val, which in this use case is 1 second. However, due

to the issues defined in section 3.2, MQTT protocol

broadcast cached data which are tagged as false. As

given in fig. 3, the false data is filtered out for analysis

purposes, which makes the resultant variable matrix

quite sparse. The condition number, κ, of the variable

matrix (A), is calculated as

κ =

σ

max

(A)

σ

min

(A)

≈ 10

10

(1)

where σ

max

and σ

min

are the maximum and minimum

values obtained after singular value decomposition of

A. The high value of κ makes the post processing of

the variable matrix quite difficult.

4.2 Alarm Variables

The alarm variables refer to the occurrence of differ-

ent alarms during the operation of the PLC. The alarm

data is similar to the telemetry variables except for the

presence of one additional parameter i.e. the alarm

timestamp. Alarm variables have:

• id: ordinal number of an alarm,

• variable: acknowledgement criterion for an

alarm,

IoTBDS 2021 - 6th International Conference on Internet of Things, Big Data and Security

218

Figure 2: Data downloaded from database after initial cleaning.

Figure 3: Variable matrix (A) with True values.

• dateTime: time at which the variable is uploaded

to the cloud,

• dataStatus: True/False i.e. live/cached data,

• Time stamp: the time of the alarm occurring in

the PLC, as per the PLC clock.

• alias: description of the alarm.

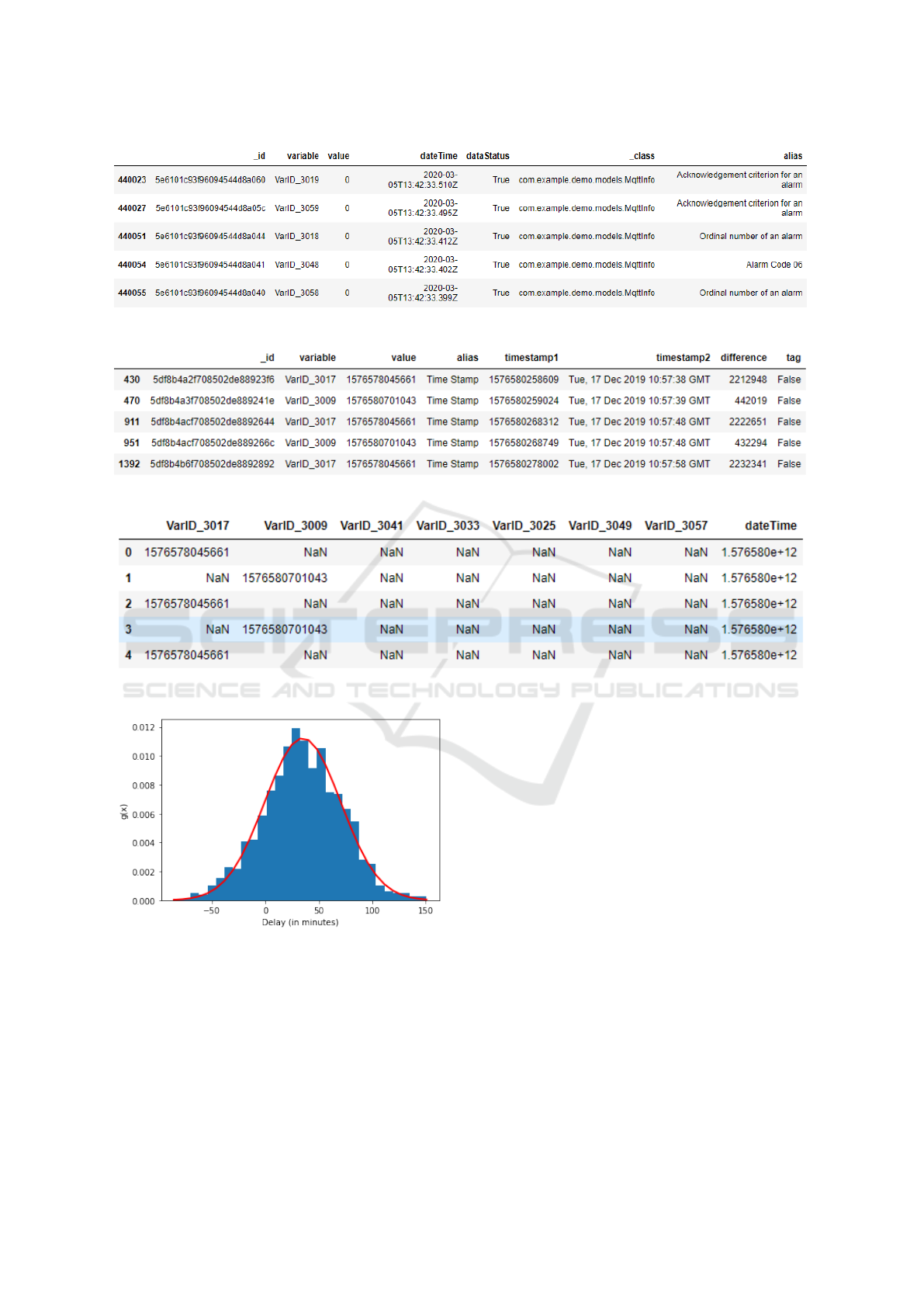

Fig. 4, denotes a snippet of the alarm variables

and fig. 5 denotes the extracted alarm timestamps.

As given in fig. 5, the alarm timestamp contains

the time of the alarm according to the clock inside

PLC, denoted by the column value. However, the

actual/internet time of uploading of the alarm to the

cloud by MQTT, is given by the column timestamp1,

i.e. the unix time value of column timestamp2.

Fig. 6 denotes our alarm matrix created to front-

run the alarms with the variables (fig. 3). However,

due to the sparsity in the variable matrix and the valid-

ity of the alarm timestamps as discussed next, ‘front-

running’, i.e., prediction of alarms is simply not pos-

sible. The alarms have a cloud timestamp and a ma-

chine timestamp, while the telemetry variables only

have a cloud timestamp. For the alarm variables the

time window between the machine time tags versus

the cloud time tags is non linear and time varying.

There exists a mean and standard deviation of 35 min-

utes each on this window. This is a major limiting

factor on the utility of this sparse dataset for alarm

prediction. Fig. 7, displays the gaussian distribution

g(x) of the delay in between the machine and cloud

timestamps. Here,

g(x) =

1

σ

√

2π

exp

−

1

2

(x −µ)

2

σ

2

(2)

where x denotes the distribution, µ and σ denote the

mean and standard deviation of the distribution re-

spectively.

Any attempt to clean this data amounts to the cre-

ation of a synthetic dataset from raw data. Synthetic

tag timestamping injects error into the creation of the

synthetic data that renders it useless for alarm front

running. This is due to the inability to produce a cor-

relation function between the telemetry variables and

alarms with any certainty due to the sparsity in the

alarm and telemetry data. The outcome of this study

is therefore that the alarms cannot be predicted us-

ing existing PLC based interfaces and that new sen-

sor hardware needs to be deployed in order to predict

alarms. This is useful intelligence in its own right for

the company involved in this work. The initial design

brief was to determine the limit of performance of the

existing setup.

Analysis of the Message Queueing Telemetry Transport Protocol for Data Labelling: An Orthopedic Manufacturing Process Case Study

219

Figure 4: Alarm Values.

Figure 5: Alarm Timestamps.

Figure 6: Alarm matrix with True values.

Figure 7: Probability density function of the delay.

5 PROPOSED SYSTEM FOR

REAL-TIME DATA ANALYTICS

In order to advise the company on the next steps that

are necessary to make the dataset suitable for any

alarm throwing analysis/post processing, an architec-

ture has been proposed for the upgrade of the existing

data collection system. This availability of the data is

often, in the authors experience, not known a priori by

the industrial client and a project such as this is often

very useful in terms of educating a company as to the

limit of performance for a particular machine learn-

ing experiment. It is often the case that, just because

a large quantity of data is being collected, there is an

expectation that these vast quantities of data will per

force lead to actionable manufacturing intelligence.

However, studies such as these can often need to be

carried out in order to once again confirm the law of

garbage in leading to garbage out. For many client

companies their current system architecture needs to

be health checked in terms of the time syncing of

timestamps before new investments will be made in

sensor infrastructure. This is a necessary stage before

proper post processing and then real-time data analy-

sis can be performed.

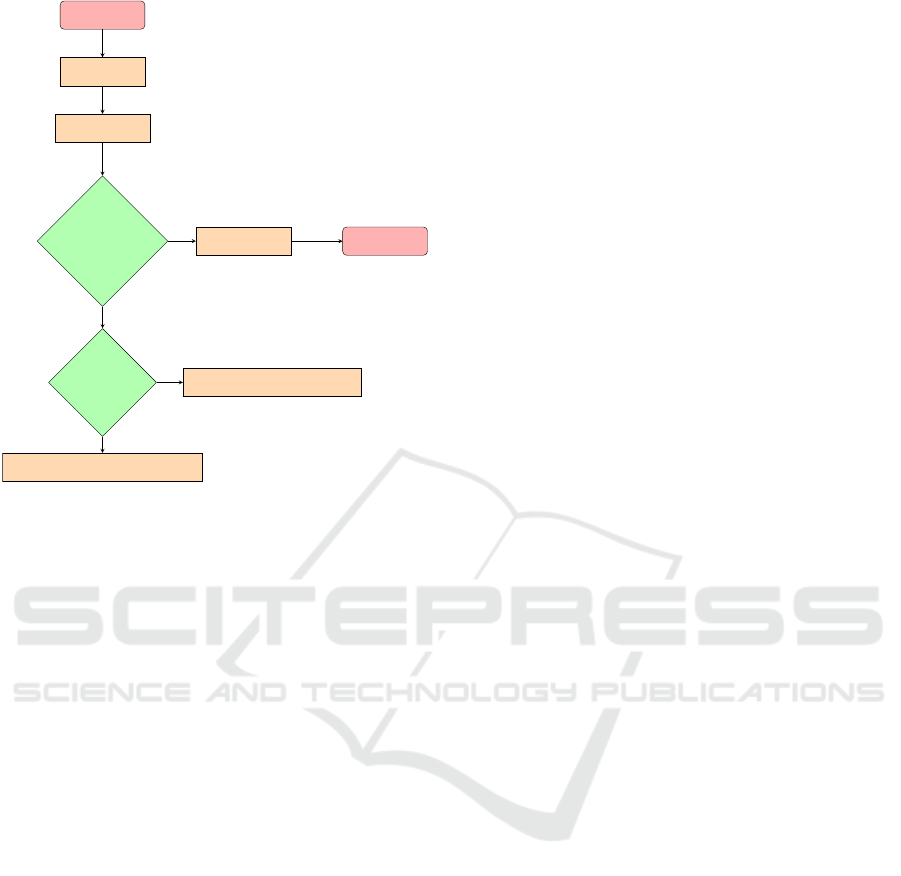

For this client the following architecture has been

proposed to enable predictive modelling. Fig. 8 illus-

trates the proposed system architecture in form of a

flow chart. The steps of the flow chart are discussed

as follow:

IoTBDS 2021 - 6th International Conference on Internet of Things, Big Data and Security

220

Start

Sync the data

Timestamp the data

Is data feasible

for real-time

data analytics?

Post processing only

Stop

Edge/Cloud Edge processing (suitable with MQTT)

Cloud processing (not suitable with MQTT)

no

yes

Figure 8: Proposed system.

• Maintenance of PLC clocks in the factory floor so

that they are synced with internet time.

• For all variables, telemetry and alarm, the data

needs to be time tagged by the machine when it

is captured. Currently, the telemetry variables are

not time tagged by the machine.

• Once the above steps are completed, it is checked

if the data is feasible for real-time analytics or

if the data is suitable only for post-processing.

MQTT batches up the data before sending it to

the cloud. This prevents real-time data analysis,

as it requires a constant stream of data. However,

when the data is batched up before sending, such

as in the case study presented in this paper, post

processing with a feasibility study can be done as

long as it has associated time tags denoting when

the measurements are made.

• For real-time data analysis batching has to be re-

moved between the act of capturing the data and

the act of processing because processing is done

in the cloud or at the edge.

Real-time data analysis can be done in two ways:

– Edge Computing: For processing at the edge,

the data and time tags are captured, processed,

and then batched prior to being sent to the

cloud. However, edge processing can only be

performed in form of a closed loop correction.

This means that to perform real-time data anal-

ysis, the processing of the data takes place at

the edge and the result is notified to the operator

to make changes in the PLC, before the data is

uploaded in the cloud. Edge processing is suit-

able with MQTT as the corrective action takes

place before the data is batched up and sent to

the cloud.

– Cloud Computing: For processing in the

cloud, the data and time tags can be captured,

sent to the cloud, and processed in real-time,

then saved in the cloud, with batching removed.

Cloud processing requires a constant stream of

data and therefore MQTT may not be used for

real-time data analysis via cloud. Other IoT

protocols, such as DDS, can be useful to facili-

tate cloud computing.

The initial deployment cost of option 1. above

is more significant than its cloud based alternative.

There is a significant opex cost associated with the

data transfer to the cloud. It is up to the business con-

cerned to make a decision regarding which option best

suits its business model. Once this decision is made

we will revisit this analysis in order to determine the

alarm tagging that is necessary for real-time process

control.

6 CONCLUSION

Predictive modelling and real-time data analysis use

cases are gaining popularity as the cost of data collec-

tion and transmission from sensors continue to drop.

This paper has considered the application of MQTT

in one such data streaming application for a grinding

process within a commercial orthotics manufacturing

company. Significant problems have been highlighted

in the application of the MQTT protocol to this pro-

cess as poor time tagging was exhibited within the

dataset. The current setting of time tagging leads to

the creation of sparse matrices for alarm prediction.

Therefore, reliable front running of the grinding pro-

cess is not possible with the present sensor infrastruc-

ture. Two design solutions have been presented to the

company to mitigate this fundamental problem. We

are presently awaiting a decision to be made that is

dependent on the current post COVID 19 climate that

is still taking shape. In future work, an Edge based

MQTT protocol will be considered and its perfor-

mance will be benchmarked against the correspond-

ing DDS protocol to determine which approach is the

most useful for alarm prediction in this commercial

process.

Analysis of the Message Queueing Telemetry Transport Protocol for Data Labelling: An Orthopedic Manufacturing Process Case Study

221

REFERENCES

Atmoko, R., Riantini, R., and Hasin, M. (2017). Iot real

time data acquisition using mqtt protocol. Journal of

Physics Conference Series, 853(1).

Banks, A. and Gupta, R. (2014). Mqtt version 3.1. 1. OASIS

standard, 29:89.

Bolton, W. (2015). Programmable logic controllers.

Newnes.

Khan, M., Wu, X., Xu, X., and Dou, W. (2017). Big data

challenges and opportunities in the hype of industry

4.0. In 2017 IEEE International Conference on Com-

munications (ICC), pages 1–6.

Kramer, J. (2009). Advanced message queuing protocol

(amqp). Linux Journal, 2009(187):3.

Luzuriaga, J. E., Perez, M., Boronat, P., Cano, J. C.,

Calafate, C., and Manzoni, P. (2015). A comparative

evaluation of amqp and mqtt protocols over unstable

and mobile networks. In 2015 12th Annual IEEE Con-

sumer Communications and Networking Conference

(CCNC), pages 931–936.

M

¨

oller, D. P. (2016). Digital manufacturing/industry 4.0. In

Guide to Computing Fundamentals in Cyber-Physical

Systems, pages 307–375. Springer.

Novelli, L., Jorge, L., Melo, P., and Koscianski, A. (2018).

Application protocols and wireless communication

for iot: A simulation case study proposal. In 2018 11th

International Symposium on Communication Systems,

Networks Digital Signal Processing (CSNDSP), pages

1–6.

Pardo-Castellote, G. (2003). Omg data-distribution service:

architectural overview. In 23rd International Confer-

ence on Distributed Computing Systems Workshops,

2003. Proceedings., pages 200–206.

Peralta, G., Iglesias-Urkia, M., Barcelo, M., Gomez, R.,

Moran, A., and Bilbao, J. (2017). Fog computing

based efficient iot scheme for the industry 4.0. In 2017

IEEE International Workshop of Electronics, Con-

trol, Measurement, Signals and their Application to

Mechatronics (ECMSM), pages 1–6.

Silva, D. R. C., Oliveira, G. M. B., Silva, I., Ferrari, P., and

Sisinni, E. (2018). Latency evaluation for mqtt and

websocket protocols: an industry 4.0 perspective. In

2018 IEEE Symposium on Computers and Communi-

cations (ISCC), pages 01233–01238.

Vaidya, S., Ambad, P., and Bhosle, S. (2018). Industry 4.0–

a glimpse. Procedia Manufacturing, 20:233–238.

Wang, T., Li, Y., Chen, Y., Tian, H., Cai, Y., Jia, W., and

Wang, B. (2017). Fog-based evaluation approach for

trustworthy communication in sensor-cloud system.

IEEE Communications Letters, 21(11):2532–2535.

Yang, J., Sandstr

¨

om, K., Nolte, T., and Behnam, M. (2012).

Data distribution service for industrial automation. In

Proceedings of 2012 IEEE 17th International Confer-

ence on Emerging Technologies Factory Automation

(ETFA 2012), pages 1–8.

Yin, S. and Kaynak, O. (2015). Big data for modern indus-

try: challenges and trends [point of view]. Proceed-

ings of the IEEE, 103(2):143–146.

IoTBDS 2021 - 6th International Conference on Internet of Things, Big Data and Security

222