FakeWhastApp.BR: NLP and Machine Learning Techniques for Misinformation Detection in Brazilian Portuguese WhatsApp Messages

Lucas Cabral¹, José Maria Monteiro¹, José Wellington Franco da Silva¹, César Lincoln Mattos¹ and Pedro Jorge Chaves Mourão²

¹ Computer Science Department, Federal University of Ceará, Fortaleza, Ceará, Brazil

² State University of Ceará, Fortaleza, Brazil

pjmourao cs@hotmail.com

Keywords:

Misinformation Detection, Fake News Detection, Natural Language Processing, WhatsApp, Social Media.

Abstract:

In the past few years, the large-scale dissemination of misinformation through social media has become a critical issue, harming the trustworthiness of legitimate information, social stability, democracy and public health. Thus, developing automated misinformation detection methods has become a field of high interest both in academia and in industry. In many developing countries such as Brazil, India, and Mexico, one of the primary sources of misinformation is the messaging application WhatsApp. Despite this scenario, due to the private messaging nature of WhatsApp, there are still few misinformation detection methods developed specifically for this platform. In this work we present FakeWhatsApp.Br, a dataset of WhatsApp messages in Brazilian Portuguese, collected from Brazilian public groups and manually labeled. In addition, we evaluated a series of misinformation classifiers combining Natural Language Processing-based feature extraction techniques and a set of well-known machine learning algorithms, totaling 108 different scenarios. Our best result achieved an F1 score of 0.73, and the error analysis indicates that errors occur mainly due to the predominance of short texts that accompany media files. When texts with fewer than 50 words are filtered out, the F1 score rises to 0.87.

1 INTRODUCTION

The rise of social media platforms revolutionized how we produce, share, and consume information, greatly increasing its transmission speed and available volume. The boundaries between information production and sharing are blurring rapidly. However, while social networks have widened access to good information, their highly decentralized and unregulated environment allows the mass proliferation of misinformation (Vosoughi et al., 2018; Guo et al., 2019; Su et al., 2020). Through these platforms, misinformation can deceive thousands of people in a short time, bringing great harm to individuals, companies, or even society. Misinformation is a broad concept that can be defined as misrepresented information, including fabricated, misleading, false, fake, deceptive, or distorted information (Su et al., 2020). This comprehensive definition covers a variety of specific, and sometimes overlapping, types of misinformation, such as fake news (Lazer et al., 2018), rumors (Shu et al., 2017), deception (Maalej, 2001) and hoaxes. In particular, the term fake news, despite specifically describing intentionally misleading information written as journalistic news, has become very present in popular culture and is sometimes informally used as a synonym for misinformation.

Misinformation is usually created with malicious intentions to manipulate public opinion, harm individuals, organizations, or social groups, and obtain economic or political gains. Moreover, misinformation spreads faster, deeper, and more broadly in social media than legitimate information. Further, due to the high volume of information that we are exposed to when using social media, humans have a limited ability to distinguish true information from misinformation (Vosoughi et al., 2018; Qiu et al., 2017). The wide spread of misinformation is a major social problem, as it undermines the trustworthiness of legitimate information, harming democracy, justice, the economy, public health, and security (Guo et al., 2019).

In this context, automatic misinformation detection has attracted the interest of different communities. In a broad definition, misinformation detection (MID) is the task of assessing the appropriateness (truthfulness, credibility, veracity or authenticity) of claims in a piece of information (Su et al., 2020). Early detection of misinformation could prevent its spread, thus reducing its damage. MID can be approached in various ways, including human-crafted rules, traditional machine learning models, neural networks, and combinations of machine learning and natural language processing (NLP).

Cabral, L., Monteiro, J., Franco da Silva, J., Mattos, C. and Mourão, P.
FakeWhastApp.BR: NLP and Machine Learning Techniques for Misinformation Detection in Brazilian Portuguese WhatsApp Messages.
DOI: 10.5220/0010446800630074
In Proceedings of the 23rd International Conference on Enterprise Information Systems (ICEIS 2021) - Volume 1, pages 63-74
ISBN: 978-989-758-509-8
Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The combination of machine learning and natural language processing (NLP) to extract features from text has achieved strong results in the literature. NLP-based approaches rely on the hypothesis that intentionally misleading texts have linguistic patterns that distinguish them from non-misleading texts. This approach has been extensively used with data collected from platforms such as Facebook¹ (Granik and Mesyura, 2017) and Twitter² (Zervopoulos et al., 2020). However, in many developing countries such as Brazil, India, and Mexico, one of the primary sources of misinformation is the messaging application WhatsApp³. The purpose of WhatsApp is to allow users to privately send messages to each other through their smartphones. Despite being mostly used for individual conversations, WhatsApp also offers conversation groups, in which up to 256 users can participate, and message forwarding, both of which facilitate the quick dissemination of misinformation. In Brazil's case, about 35% of deceptive news is shared through WhatsApp (Newman et al., 2020), and 40.7% of these messages are shared after being disproved (Resende et al., 2018; Resende et al., 2019).

Despite this scenario, due to the private messaging nature of WhatsApp, there are still few MID methods developed specifically for this platform. When it comes to NLP-based approaches, the performance of a model is highly dependent on the linguistic patterns, topics, and vocabulary present in the data used to train it. Due to its nature as a private messenger and its broad user base, the content shared through WhatsApp and the way its users express themselves vary significantly compared to public social networks like Facebook and Twitter (Waterloo et al., 2018; Rosenfeld et al., 2018). Consequently, a model trained with texts collected from Twitter or Facebook may perform poorly when used to classify WhatsApp messages. Thus, in this context, to obtain a good NLP-based MID model it is necessary to train the prediction model with WhatsApp data.

To fill this gap, we built a large-scale, labeled, anonymized, and public dataset of WhatsApp messages in Brazilian Portuguese (PT-BR), collected from public WhatsApp groups. Then, we conducted a series of classification experiments using combinations of Bag-of-Words features and classical machine learning methods to answer the following research questions:

1. How challenging is the task of misinformation detection in WhatsApp messages using NLP-based techniques?

2. Which combination of pre-processing methods, word-level features and classification algorithms is best suited for this task?

3. What are the limitations of an NLP-based approach?

Our results show that a purely NLP-based approach using traditional Bag-of-Words features has limited performance due to the particularities of WhatsApp messages, especially the predominance of short messages that accompany media files (audio, images, or videos). Our best result achieved an F1 score of 0.73, and the error analysis attributes most errors to these short texts. When texts with fewer than 50 words are filtered out, the F1 score rises to 0.87. To the best of our knowledge, no previous work has performed MID on a large-scale corpus of WhatsApp messages in PT-BR.

¹ https://www.facebook.com/

² https://twitter.com/

³ https://www.whatsapp.com/

The remainder of this paper is organized as fol-

lows. Section 2 presents the main related work. Sec-

tion 3 describes the process used to create a large-

scale, labeled, anonymized, and public dataset of

WhatsApp messages in PT-BR. Section 4 details our

experimental setup for MID. Section 5 reports and discusses the results. Conclusions and future work are presented in Section 6.

2 RELATED WORK

Several works attempt to detect misinformation in different languages and platforms. Most of them use news in English or Chinese. Moreover, websites and social media platforms with easy access to data, such as Twitter, are among the main sources used to build misinformation datasets.

Despite the large number of works investigating the misinformation detection problem, few of them search for suitable solutions for the Brazilian Portuguese language (PT-BR). In this context, (Monteiro et al., 2018) presented the first and largest fake news corpus in Brazilian Portuguese, called Fake.Br. This corpus was built by manually collecting fake news on the Web and then semi-automatically searching for the actual news related to each fake news item, generating an equal number of negative and positive examples. In all, the dataset has 7,200 items (news articles), 3,600 true and 3,600 false. In addition, the authors evaluated some classifiers (Naïve Bayes, Random Forest, and Multilayer Perceptron). Later, the work presented in (Silva et al., 2020) investigated the use of different features and algorithms to detect fake news, exploring the Fake.Br corpus (Monteiro et al., 2018).

However, it is important to highlight that WhatsApp is unique in several ways relative to other social networks. A particularly novel aspect of WhatsApp messaging is its close integration with large public groups. These are openly accessible groups, frequently publicized on well-known websites, and typically themed around particular topics, like politics, religion, soccer, etc. The study presented in (Garimella and Tyson, 2018) is a pioneering work in collecting and analyzing WhatsApp messages. The authors built a dataset by crawling 178 public groups, containing 45K users and 454K messages, from different countries and languages, such as India, Pakistan, Russia, Brazil, and Colombia. Nevertheless, no solution to the misinformation detection problem was presented. In (Gaglani et al., 2020), the authors contextualize the problem of spreading fake news on WhatsApp, especially in India and Brazil, and propose a strategy for the automatic detection of fake news. A total of 10 group chats were scraped for one week to obtain 1,000 multilingual messages. After cleaning, the multilingual data was translated into English using the Google Translate API. Therefore, the proposed approach for misinformation detection does not consider the particularities of each language.

Thus, despite the efforts of the scientific community, there is still a need for a large-scale corpus containing WhatsApp messages in Portuguese. It is worth mentioning that texts extracted from WhatsApp are quite different from those collected through websites, fact-checkers, or other kinds of social media platforms, such as Twitter. WhatsApp messages include conversations, opinions, humorous and satirical texts, prayers, commercial offers, news, short texts, emojis, and others. Therefore, using the Fake.Br corpus, for example, for automatic misinformation detection in WhatsApp is not a suitable approach. In this scenario, (Faustini and Covões, 2019) is a seminal work. In its experiments, three different datasets were explored in order to detect fake news: Fake.Br (news from websites), a Twitter corpus, obtained using the Twitter API, and a small WhatsApp corpus. It is worth mentioning that the WhatsApp corpus was obtained from texts on the website boatos.org and has only 177 messages, of which 165 are fake and 12 are true. Some papers present initiatives to gather, analyze, and visualize data from public groups in WhatsApp (Resende et al., 2018; Machado et al., 2019; Resende et al., 2019). Nevertheless, the collected data were not labeled, no dataset has been made publicly available, and no solution to the misinformation detection problem was presented. Table 1 shows a comparative analysis of the datasets of WhatsApp messages in Brazilian Portuguese found in the literature.

3 THE FakeWhatsApp.Br DATASET

To develop automatic misinformation detection approaches that are suitable for WhatsApp messages in Brazilian Portuguese, a critical requirement is a large-scale labeled dataset. However, to the best of our knowledge, there is no corpus for Brazilian Portuguese with these characteristics. To fill this gap, we built FakeWhatsApp.Br, inspired by (Silva et al., 2020).

The work of (Rubin et al., 2015) suggests a

methodological guideline for building corpora of de-

ceptive content, which includes: the corpus must con-

tain truthful texts and their corresponding untruthful

versions, in order to allow finding patterns and regu-

larities in “positive and negative instances”; the texts

in the corpus should be in plain text format; the texts

should have similar sizes to avoid bias in learning;

the texts should belong to a specific time interval, as

writing style changes in time; and the corpus should

keep the related metadata information (e.g., the URL

of the news, the authors, publication date, and num-

ber of comments and visualizations) because it can be

useful for fact checking algorithms.

3.1 Data Collecting

Unlike other social media platforms, such as Twitter and Facebook, WhatsApp offers no public API to collect data in an automated manner, due to its private chat nature. Thus, creating a dataset of WhatsApp messages poses a technical, and even ethical, challenge. To tackle this issue, we take an approach similar to (Garimella and Tyson, 2018; Resende et al., 2018).

Initially, we seek for public groups with political

themes during the Brazilian general elections cam-

paign in 2018. The groups were found by search-

ing for “chat.whatsapp.com/” on the Web and man-

ually analyzing its content. We established a rule to

join only groups with at least 100 or more users to

explore only relevant content groups, whereas What-

sApp has a limitation of a maximum of 256 users by

FakeWhastApp.BR: NLP and Machine Learning Techniques for Misinformation Detection in Brazilian Portuguese WhatsApp Messages

65

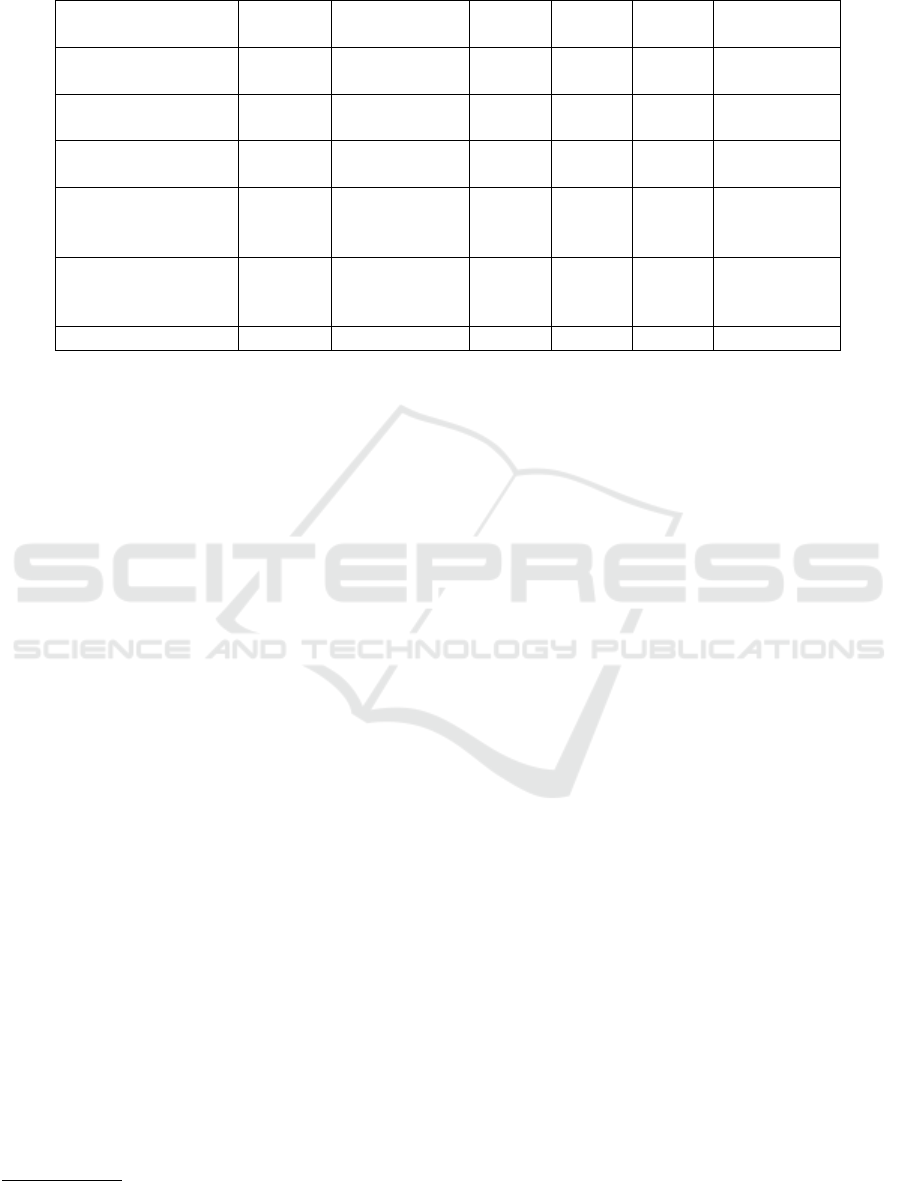

Table 1: Datasets of WhatsApp Messages in Brazilian Portuguese. Hyphen (-) means that the information could not be found

in the work.

Work Labeled Total of Text

Messages

Groups Users MID Publicly

Available

(Faustini and

Cov

˜

oes, 2019)

Yes 177 - - Yes Yes

(Resende et al.,

2018)

No 169,154 127 6,314 No No

(Machado et al.,

2019)

No 298,892 130 - No No

(Resende et al.,

2019)/ Truck

Drivers’ Strike

No 95,424 141 5,272 No No

(Resende et al.,

2019)/ Election

Campaign

No 591,162 136 18,725 No No

FakeWhastApp.BR Yes 5,284 59 14,784 Yes Yes

group. After careful selection, we joined 59 public

groups. Next, we created a WhatsApp account to join

the selected groups. So, we collected messages from

July to November of 2018. After this period, we ex-

tracted all content and metadata, building a data ma-

trix, where each row corresponds to a message sent

in a group. The matrix columns are the date and

hour that the message was sent, the sender’s phone

number, the international phone code, the Brazilian

state (if the user is from Brazil), the content (text) of

the message, the word and character counts, and if

the message contained media such as audio, image

or video. Nevertheless, since we are finding to iden-

tify misinformation in the WhatsApp message text,

the FakeWhatsApp.Br dataset does not contain me-

dia files. Besides, we also count how many times the

same message text appears in the dataset. For doing

so, we only consider messages with identical textual

content that had more than five words, to filter com-

mon messages such as greetings. We call the mes-

sages in which the textual content appears more than

once in the dataset “viral messages”.
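The viral-message rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' code: the function name `find_viral_messages`, the whitespace-based word count, and the sample messages are all hypothetical.

```python
from collections import Counter


def find_viral_messages(texts, min_words=6):
    """Return {text: count} for texts that appear more than once.

    Only texts with more than five words (at least `min_words`) are
    counted, which filters out greetings and other short messages.
    Counting words by whitespace splitting is an assumption.
    """
    counts = Counter(t for t in texts if len(t.split()) >= min_words)
    return {text: n for text, n in counts.items() if n > 1}


# Hypothetical sample: a repeated greeting, a repeated long message,
# and a long message that was sent only once.
sample = [
    "Bom dia grupo",
    "Bom dia grupo",
    "Vote e repasse esta mensagem para todos os seus contatos",
    "Vote e repasse esta mensagem para todos os seus contatos",
    "Mensagem longa enviada apenas uma vez neste grupo hoje",
]
viral = find_viral_messages(sample)
```

In this sketch the repeated greeting is discarded because it is too short, and the single-occurrence message is discarded because it was never repeated, so only the forwarded long message is kept.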

3.2 Data Anonymization

We took privacy issues into consideration by anonymizing users' names and cell phone numbers. For this, we created an anonymous and unique ID for each user by applying a hash function to their phone number. Similarly, we created an anonymous alias for each group. Since the groups are public, our approach does not violate WhatsApp's privacy policy⁴.
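As a rough illustration of the anonymization step, the sketch below derives a stable ID from a phone number. The paper only states that a hash function was applied; the choice of SHA-256, the salt, the digit normalization, the truncation to 16 characters, and the function name `anonymize_phone` are all assumptions made for this example, and the phone numbers are made up.

```python
import hashlib


def anonymize_phone(phone_number, salt="hypothetical-secret-salt"):
    """Map a phone number to a stable anonymous ID.

    SHA-256 and the salt are assumptions; the source only says that a
    hash function was applied to the phone number.
    """
    # Normalize formatting so the same number always yields the same ID.
    digits = "".join(ch for ch in phone_number if ch.isdigit())
    digest = hashlib.sha256((salt + digits).encode("utf-8")).hexdigest()
    return digest[:16]  # shortened for readability; also an assumption


id_a = anonymize_phone("+55 85 91234-5678")
id_b = anonymize_phone("5585912345678")
id_c = anonymize_phone("+55 11 99876-5432")
```

The same number written in two formats maps to the same ID, while a different number maps to a different one. Because phone numbers have a small search space, a secret salt of this kind is one common way to make the IDs harder to reverse by brute force.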

Figure 1 illustrates the FakeWhatsApp.Br dataset at this stage, before data labeling. The FakeWhatsApp.Br dataset has 282,601 WhatsApp messages from users and groups from all Brazilian states. It is important to note that although the FakeWhatsApp.Br dataset has several metadata fields associated with each message, in this work we use exclusively the textual data to build misinformation detection models. However, these metadata will be used in future work to improve the performance of the proposed misinformation detection approaches.

⁴ https://www.whatsapp.com/legal/privacy-policy

3.3 Corpus Labeling

Building a large-scale dataset is one of the biggest challenges for the automatic detection of misinformation. Data labeling is another challenge, because we need to determine whether a piece of text is true or false based on verified facts. Annotations are generally made by specialized journalists or fact-checking sites.

Next, we describe the method used for labeling the textual content of the WhatsApp messages. In order to create a high-quality corpus, the data labeling process was entirely manual. A human specialist checked the content of each message and determined whether or not it contains misinformation. Since this process is time-consuming, we chose to label only the unique viral messages, resulting in a much smaller subset with 5,284 unique messages. This decision is backed by the work of (Vosoughi et al., 2018), where it is shown that misinformation spreads faster, deeper, and wider in social networks than true information. We argue that in this way we avoid having peer-to-peer conversation data in the corpus, allowing us to create and validate classification models focused on detecting the misinformation that is most widely spread and most harmful. The subset of viral messages contains a variety of types of messages, such as fake news, rumors, hoaxes, true news, political advertising, opinions, satires, jokes, election polls and hate speech. We classified all these messages using the general definition of misinformation adopted in (Su et al., 2020) and considered the labels 1 (contains misinformation) and 0 (does not contain misinformation).

Figure 1: Sample from the collected data before labelling.

Below we summarize our labeling guidelines and offer parts of messages (and their translations) as examples for each situation, with emoticons removed:

1. If the text contains verifiable untrue claims, we annotate it as misinformation. For that purpose, we made extensive use of trusted Brazilian fact-checking platforms, such as Agência Lupa⁵ and Boatos.org⁶.

E.g.: “Bolsa Ditadura se transformou em indústria: VC sabia que 20mil anistiados, entre eles, Chico Buarque, Gilberto Gil, Caetano Veloso, Marieta Severo, Taiguara, Lula, Zé Dirceu, Fernando Henrique Cardoso, recebem o Bolsa Ditadura mensalmente e são isentos de pagar Imposto de Renda? Sendo que dos 20 mil, 10 mil recebem indenizações mensais acima do teto constitucional(R$ 33.763,00) Essa esquerda maldita tira dos cofres públicos mensalmente a bagatela de R$ 365.000.000,00 (Trezentos e sessenta e cinco milhões) pagos por nós, otários!”⁷.

Translation: “Dictatorship Grant turned into an industry: did you know that 20,000 amnesty recipients, including Chico Buarque, Gilberto Gil, Caetano Veloso, Marieta Severo, Taiguara, Lula, Zé Dirceu, Fernando Henrique Cardoso, receive the Dictatorship Grant monthly and are exempt from paying Income Tax? Of the 20 thousand, 10 thousand receive monthly indemnities above the constitutional ceiling (R$ 33,763.00) This cursed left removes from the public coffers monthly the trifle of R$ 365,000,000.00 (Three hundred and sixty-five million) paid by us suckers!”

⁵ http://piaui.folha.uol.com.br/lupa/

⁶ http://www.boatos.org/

⁷ https://www.aosfatos.org/noticias/nao-e-verdade-que-governo-paga-bolsa-ditadura-20-mil-anistiados-politicos/

2. If the text contains claims that cannot be proven and that are imprecise, biased, alarmist or harmful to groups or individuals, we annotate it as misinformation.

E.g.: “O golpe da esquerda é o seguinte: a viagem do Ciro Gomes à Europa foi proposital! Um teatro p/ colocar a seguinte narrativa em prática: ele sai de cena, ou seja, teoricamente não está apoiando Haddad, de repente ele volta (e de fato, de acordo c/ o Estadão ele está chegando hoje) qdo não terá mais nenhuma pesquisa a ser divulgada, para q não se “comprove”, se de fato aconteceria, a escalada q Haddad “terá” de milhões de votos em 2 dias. Enfim, o fato novo, que a imprensa já avisadamente, a todo tempo publicou, que seria a única coisa p/ virada nos votos! Ou seja, tudo articulado, para acontecer exatamente como no 1º turno, onde o apoio do Lula fez o poste crescer em poucos dias 20 ptos percentuais, vão tentar vender a ideia que o apoio do Ciro de última hora, fez reverter ao Haddad todos os votos que ele teve, justificando o golpe nas urnas! Precisamos divulgar isto em massa, numa velocidade recorde, para minar o efeito, antes mesmo de ocorrer! (...)”

Translation: “The coup of the left is as follows: Ciro Gomes' trip to Europe was purposeful! An act to put the following narrative into practice: he leaves the scene, that is, theoretically he is not supporting Haddad, suddenly he comes back (and in fact, according to Estadão he is arriving today) when there will be no more election polls to be released, so that it is not “proven”, if in fact it would happen, the escalation that Haddad “will have” of millions of votes in 2 days. Anyway, the new fact, which the press has already warned, published all the time, which would be the only thing to change the votes! That is, everything articulated, to happen exactly as in the 1st round, where Lula's support made the post grow in a few days by 20 percentage points, they will try to sell the idea that Ciro's last-minute support, made Haddad revert all the votes he had, justifying the coup in the elections! We need to disclose this en masse, at record speed, to undermine the effect, even before it occurs! (...)”.

3. By decision of the Brazilian Superior Electoral Court, informal electoral polls, which do not meet formal requirements and scientific rigor, were banned in the 2018 elections. Thus, we annotate messages containing such polls as misinformation.

E.g.: “Vota aí e repassa!!! Vamos ver se o ibope está certo? https://pt.surveymonkey.com/r/W85R38F”

Translation: “Vote and forward!!! Let's see if the IBOPE is right? https://pt.surveymonkey.com/r/W85R38F”

4. Some of the messages are short texts originally accompanied by media content (image, audio, or video) which is not readily accessible. In those cases, we search the Web for the media content and, if we find it, we assign a label following the previous criteria.

E.g.: “Antes de decidir seu voto ouça o que diz o padre Marcelo Rossi”.⁸

Translation: “Before deciding your vote, listen to what Father Marcelo Rossi says”.

5. If the original media content cannot be found, we look for indications of Item 2 in the text itself.

E.g.: “Olha o que os partidos de esquerda defendem E se votarmos viraremos isso”.

Translation: “Look what the leftist parties defend. And if we vote we will turn into this”.

6. If none of the previous indications is found in the text, we consider it as not containing misinformation. We take particular care when the text is an opinion rather than a claim, or is humorous, assigning a non-misinformation label in both cases.

E.g.: “Relaxando no sofá, barriguinha plusize, 9mm na cintura, sem coldre, no pelo, com saque cruzado, relogio Cassio modelo 1985 no punho e xingando comunistas no insta... Esse é meu Presidente!”.

Translation: “Relaxing on the couch, plus size tummy, 9mm at the waist, no holster, with cross draw, Cassio model 1985 watch on the wrist and cursing communists on Instagram... This is my President!”.

⁸ https://www.boatos.org/religiao/padre-marcelo-rossi-grava-audio-brasil-bolsonaro-comunismo.html

During the labeling process, we observed that the text of some messages could be found on other social media, such as YouTube, Twitter, and Facebook. Out of a total of 5,284 messages, 610 (11.5%) could be found on different media. Out of these, 85 (14%) were found on Twitter, 236 (38.7%) on Facebook, and 240 (39.3%) on YouTube. The remaining 49 (8%) were found on various Web pages, like blogs, news portals, etc. The majority of the messages were exclusive to WhatsApp. Some of these use formatting specific to the platform, e.g., an underscore on both sides of the text to format it as italic, or an asterisk on both sides of the text to make it bold. A high quantity and variety of emoticons were also observed in some messages, reinforcing the evidence that WhatsApp messages have their own particularities.

After the labeling process, the FakeWhatsApp.Br

corpus contains 2,193 unique messages annotated as

misinformation (label 1) and 3,091 unique messages

annotated as non-misinformation (label 0). In Table 2,

we present basic statistics about the corpus, including

some traditional NLP features based on the number

of tokens, types, characters, as well as the average

number of shares, i.e., the frequency of the message

in the original dataset.

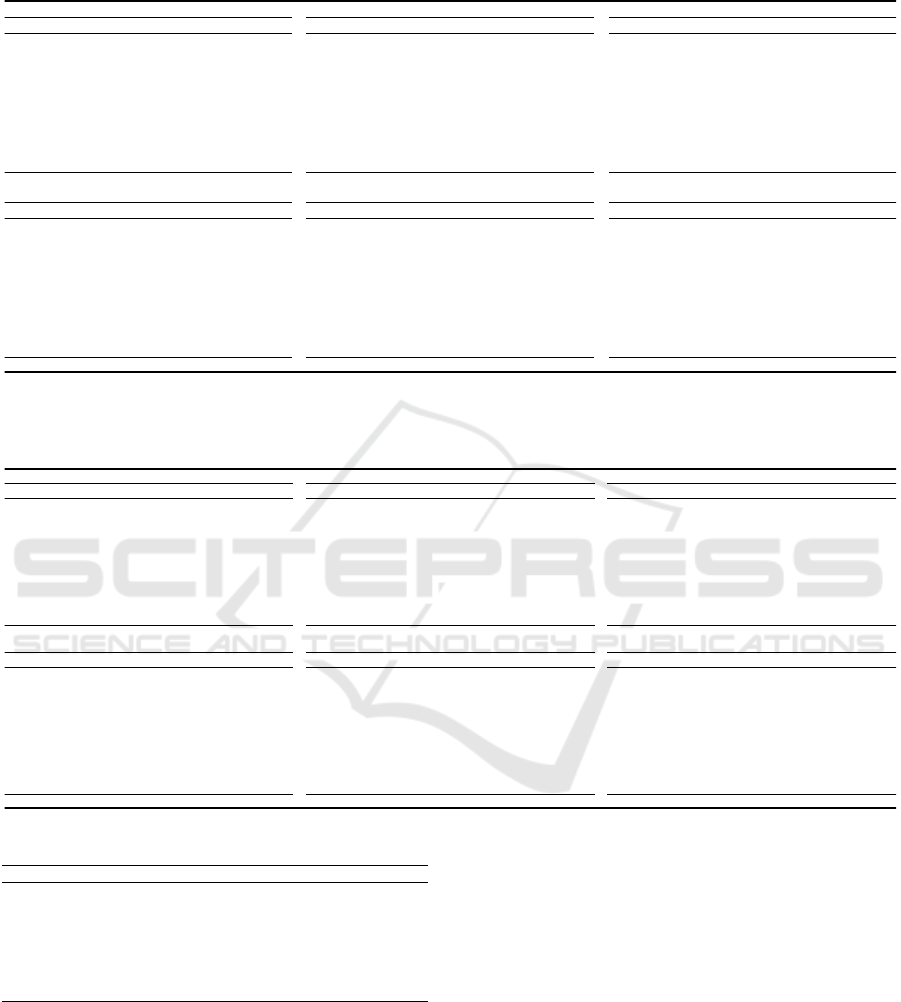

As expected, the messages labeled as misinformation were, on average, shared more often in the groups. We can see in Table 2 that the majority of messages in the corpus are short texts, but the distribution of the number of tokens has a heavy tail, with a minority of very long texts. We also point out that the average number of tokens and types is much higher in the messages with misinformation. This difference in message size can be problematic for machine learning classification algorithms, creating a bias related to text size (Rubin et al., 2015).
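For readers unfamiliar with the token and type statistics reported in Table 2, the sketch below computes them for a single message. It is an illustration only: the regular-expression tokenizer, the function name `message_stats`, and the sample sentence are assumptions, and the paper does not state how the type-token ratio is aggregated across messages.

```python
import re
from statistics import mean


def message_stats(text):
    """Count tokens, types (distinct tokens) and the type-token ratio."""
    # Assumed tokenizer: lowercase, then runs of word characters.
    tokens = re.findall(r"\w+", text.lower())
    types = set(tokens)
    return {
        "tokens": len(tokens),
        "types": len(types),
        "ttr": len(types) / len(tokens) if tokens else 0.0,
        "avg_word_len": mean(len(t) for t in tokens) if tokens else 0.0,
    }


# Hypothetical message with two repeated words ("vote" and "e").
stats = message_stats("Vote e repasse e vote de novo")
```

Here the message has 7 tokens but only 5 types, so its type-token ratio is 5/7. Short messages tend to have ratios close to 1 simply because they leave little room for repetition.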

4 EXPERIMENTAL EVALUATION

To answer the research questions presented in Section 1 and provide a baseline for the misinformation detection problem in WhatsApp messages in Brazilian Portuguese, we carefully designed a set of experiments using the FakeWhatsApp.Br dataset. We explored different combinations of features and classification algorithms. To obtain statistically robust results, we performed our experiments using k-fold cross-validation, with k = 5 folds.
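A 5-fold cross-validation of one feature and classifier combination can be sketched with scikit-learn as follows. This is a minimal example under stated assumptions, not the authors' experimental code: the toy corpus and its labels are invented, and TF-IDF with logistic regression is just one of the combinations the paper evaluates.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

# Hypothetical toy corpus: label 1 = misinformation, 0 = non-misinformation.
texts = [f"urgente repasse agora boato numero {i}" for i in range(10)] + [
    f"bom dia grupo noticia verificada numero {i}" for i in range(10)
]
labels = [1] * 10 + [0] * 10

# The vectorizer sits inside the pipeline so it is refit on each training
# fold and never sees the held-out fold.
model = make_pipeline(TfidfVectorizer(), LogisticRegression())

# With an integer cv and a classifier, scikit-learn uses stratified folds.
scores = cross_val_score(model, texts, labels, cv=5, scoring="f1")
```

`scores` holds one F1 value per fold, with misinformation (label 1) as the positive class; averaging the array gives a single figure for the combination being evaluated.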


Table 2: FakeWhatsApp.Br basic statistics.

| Statistics | Non-misinformation | Misinformation |
|---|---|---|
| Count of unique messages | 3,091 | 2,193 |
| Mean and standard deviation of number of tokens in messages | 51.27 ± 126.28 | 106.55 ± 169.31 |
| Minimum number of tokens | 6 | 6 |
| Median number of tokens | 20 | 34 |
| Maximum number of tokens | 2,664 | 2,203 |
| Mean and standard deviation of number of types in messages | 38.03 ± 66.44 | 73.78 ± 97.79 |
| Average size of words (in characters) | 6.24 | 5.66 |
| Type-token ratio | 0.91 | 0.85 |
| Mean and standard deviation of shares | 3.32 ± 3.90 | 4.83 ± 6.81 |

4.1 Features and Classification Algorithms

Different text feature extraction methods were evaluated. However, we focus our experiments on the traditional Bag-of-Words (BoW) text representation. We chose not to use pre-trained embedding vectors due to the large presence of misspelled words, emoticons and neologisms in the corpus, which would result in many out-of-vocabulary words. In addition, we seek to establish a baseline for the automatic misinformation detection problem in WhatsApp messages in Brazilian Portuguese. BoW features are suitable for this purpose due to their simplicity, processing speed and wide use in text classification problems.

We explored vectors created with binary BoW and with TF-IDF, converting the text to lowercase and using whitespace and punctuation marks as token separators. Emojis are abundant in the texts and are an important part of the dialect used in WhatsApp, so we chose to keep them during tokenization. However, as combinations of emojis can generate many different tokens, we separate all emojis with whitespace, thus creating one token for each emoji. We also normalize URLs, keeping only their domain names. In the same manner, we normalize the Brazilian textual laugh, written as a sequence with a varying number of the letter k, which we convert to a single dummy token “kkkk” (somewhat equivalent to the English “LOL”). Due to the lexical diversity of the corpus, the resulting vectors are high-dimensional and sparse.
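The normalization steps described above can be sketched with the Python standard library. This is an approximation under stated assumptions rather than the authors' implementation: the emoji character ranges are a rough subset of Unicode (multi-codepoint emoji sequences are not handled), the laugh threshold of two or more `k` letters is a guess, and the function name `preprocess` is hypothetical. Splitting on punctuation is left out for brevity.

```python
import re
from urllib.parse import urlparse

# Rough emoji ranges; an assumption, not an exhaustive Unicode emoji list.
EMOJI = re.compile("([\U0001F300-\U0001FAFF\u2600-\u27BF])")
URL = re.compile(r"https?://\S+")
LAUGH = re.compile(r"\bk{2,}\b")  # "kk", "kkkkkk", ... (threshold assumed)


def preprocess(text):
    """Lowercase, normalize URLs and laughs, and isolate each emoji."""
    text = text.lower()
    # Keep only the domain name of each URL.
    text = URL.sub(lambda m: urlparse(m.group(0)).netloc, text)
    # Collapse any standalone run of the letter k into the token "kkkk".
    text = LAUGH.sub("kkkk", text)
    # Surround every emoji with spaces so each becomes its own token.
    text = EMOJI.sub(r" \1 ", text)
    return text


tokens = preprocess(
    "Vota aí KKKKKK 😂😂 https://pt.surveymonkey.com/r/W85R38F"
).split()
```

After this step, two adjacent emojis become two separate tokens, every laugh maps to the same token regardless of its length, and different links to the same site share one domain token.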

Besides using only unigrams as tokens, we also varied the n-gram range, experimenting with combinations of unigrams, bigrams and trigrams. Even if this results in a larger vector space, based on our knowledge of the domain we believe that the combination of bigrams and trigrams can reveal distinguishable patterns that are present in messages with misinformation in our dataset. Lastly, to compare the impact of more advanced pre-processing techniques that reduce the vector space, we include a set of experiments that use lemmatization and stop word removal as pre-processing steps.

Thus, we combine these different vectorization approaches (binary BoW or TF-IDF), the n-gram range (unigrams, bigrams and trigrams) and the use of extra pre-processing steps (lemmatization and stop word removal), creating a total of 12 different feature scenarios.
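The count of 12 feature scenarios follows from 2 vectorizations × 3 n-gram settings × 2 pre-processing options, which can be enumerated as below. The dictionary keys and the reading of the n-gram settings as cumulative ranges (unigrams only, up to bigrams, up to trigrams) are assumptions made for illustration; the paper does not spell out the exact ranges.

```python
from itertools import product

vectorizations = ["binary_bow", "tfidf"]
# Assumed reading: unigrams only, up to bigrams, up to trigrams.
ngram_ranges = [(1, 1), (1, 2), (1, 3)]
# Whether lemmatization and stop word removal are applied.
extra_preprocessing = [False, True]

feature_scenarios = [
    {"vectorization": v, "ngram_range": n, "lemmatize_and_remove_stopwords": p}
    for v, n, p in product(vectorizations, ngram_ranges, extra_preprocessing)
]

n_classifiers = 9  # number of classification algorithms evaluated in the paper
n_experiments = len(feature_scenarios) * n_classifiers
```

Pairing each of the 12 scenarios with the 9 classification algorithms gives the 108 experiments reported in the paper.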

In each of these scenarios, we performed experiments with a selection of 9 machine learning classification algorithms broadly used in text classification tasks (Pranckevičius and Marcinkevičius, 2017): logistic regression (LR), Naive Bayes (NB), in its Bernoulli variant when the features are binary BoW or its Complement variant when the features are TF-IDF (Kim et al., 2006; Rennie et al., 2003), support vector machines with a linear kernel (LSVM), SVM trained with stochastic gradient descent (SGD), SVM with an RBF kernel (SVM) (Prasetijo et al., 2017), k-nearest neighbors (KNN), random forest (RF), gradient boosting (GB) and multilayer perceptron neural network (MLP).

For all algorithms, we used the implementations from the Python library scikit-learn (Pedregosa et al., 2011). The MLP used a batch size of 64 and an early stopping strategy, in which 10% of the training data is set aside for validation and training terminates when the validation score does not improve by at least 0.001 for 5 consecutive epochs. All other hyperparameters, for this and the other models, were left at their default values. It is important to note that the chosen set of algorithms encompasses different families of machine learning algorithms: linear models (LR), generative models (NB), instance-based learning (KNN), support vector machines (LSVM, SVM and SGD), ensemble methods, both bagging (RF) and boosting (GB), and neural networks (MLP). Although we do not perform a systematic selection of hyperparameters for each model, the variety of the tested approaches should indicate which learning strategy is better suited to this problem and establish a baseline.
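The classifier set could be instantiated in scikit-learn roughly as follows. This is a sketch under stated assumptions rather than the authors' code: the function name `build_classifiers` is hypothetical, `LinearSVC` is assumed for LSVM (an `SVC` with a linear kernel would be another reading), and `SGDClassifier` is used with its default hinge loss, which corresponds to a linear SVM trained with SGD. Only the MLP settings stated in the text are set explicitly.

```python
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression, SGDClassifier
from sklearn.naive_bayes import BernoulliNB, ComplementNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC, LinearSVC


def build_classifiers(features: str = "TFIDF"):
    """Return the 9 classifiers, keyed by the abbreviations used in the paper."""
    # Bernoulli NB for binary BoW features, Complement NB for TF-IDF features.
    naive_bayes = BernoulliNB() if features == "BOW" else ComplementNB()
    return {
        "LR": LogisticRegression(),
        "NB": naive_bayes,
        "LSVM": LinearSVC(),            # assumption: linear-kernel SVM via LinearSVC
        "SGD": SGDClassifier(),         # default hinge loss: linear SVM trained with SGD
        "SVM": SVC(kernel="rbf"),
        "KNN": KNeighborsClassifier(),
        "RF": RandomForestClassifier(),
        "GB": GradientBoostingClassifier(),
        "MLP": MLPClassifier(
            batch_size=64,
            early_stopping=True,        # hold out part of the training data
            validation_fraction=0.1,    # 10% used for validation
            tol=0.001,                  # minimum improvement in validation score
            n_iter_no_change=5,         # patience, in epochs
        ),
    }


print(sorted(build_classifiers("BOW")))
```

Combined with 12 feature scenarios, these 9 classifiers give the 12 × 9 = 108 experiments reported in the paper.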

Considering all combinations of features, pre-processing and classification methods (12 feature scenarios × 9 classifiers), we performed a total of 108 experiments, which should give us the information needed to answer Research Question 1 and Research Question 2.

4.2 Performance Metrics

To evaluate the performance of each experiment, we

adapt the metrics used in (Silva et al., 2020) consider-

ing the formulation of our problem and goal. As men-

tioned previously, we tackle the problem as a binary

classification task, where the misinformation repre-

sents the positive class (and also the class of interest)

and the non-misinformation represents the negative

class. Below we list the chosen evaluation metrics:

• False Positive Rate (FPR): the proportion of messages without misinformation that are incorrectly classified as misinformation. The lower, the better.

• Precision (PRE): the proportion of messages classified as misinformation that truly belong to the misinformation class. The higher, the better.

• Recall (REC): the proportion of misinformation messages that are correctly classified. The higher, the better.

• F1-score (F1): the harmonic mean of precision and recall. The higher, the better.
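Written in terms of confusion-matrix counts, the four metrics take the usual form below. The symbols TP, FP, TN and FN (true positives, false positives, true negatives and false negatives, with misinformation as the positive class) are notation introduced here for illustration; they do not appear in the original text.

```latex
\begin{align*}
\mathrm{FPR} &= \frac{FP}{FP + TN}, &
\mathrm{PRE} &= \frac{TP}{TP + FP}, \\
\mathrm{REC} &= \frac{TP}{TP + FN}, &
\mathrm{F1}  &= \frac{2 \cdot \mathrm{PRE} \cdot \mathrm{REC}}{\mathrm{PRE} + \mathrm{REC}}.
\end{align*}
```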

As we use k-fold cross-validation, the mean and standard deviation of each metric are presented. After these experiments, we choose the best classifier and features, retrain it on a randomly separated training set (80% of the total data) and test it on the remaining 20% of the data. To answer Research Question 3, we performed a qualitative analysis of the false positive and false negative results of the best classifier, identifying and categorizing the possible reasons for the errors, and hence the limitations of an NLP-based approach.
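The evaluation protocol can be sketched with scikit-learn as shown below. This is an illustrative sketch, not the authors' code: the helper names, the toy corpus, the use of stratified and shuffled folds, and the fixed random seed are assumptions made here. The value k = 5 follows the 5-fold setting mentioned in the conclusions.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics import confusion_matrix, make_scorer
from sklearn.model_selection import StratifiedKFold, cross_validate, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC


def false_positive_rate(y_true, y_pred):
    """FP / (FP + TN), with label 1 = misinformation (positive class)."""
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return fp / (fp + tn) if (fp + tn) else 0.0


SCORING = {
    "FPR": make_scorer(false_positive_rate),  # reported as-is; lower is better
    "PRE": "precision",
    "REC": "recall",
    "F1": "f1",
}


def cross_validated_scores(model, texts, labels, k=5, seed=0):
    """Return {metric: (mean, std)} over k folds."""
    # Assumption: stratified, shuffled folds; the text only states k-fold.
    folds = StratifiedKFold(n_splits=k, shuffle=True, random_state=seed)
    raw = cross_validate(model, texts, labels, cv=folds, scoring=SCORING)
    return {m: (raw[f"test_{m}"].mean(), raw[f"test_{m}"].std()) for m in SCORING}


def holdout_confusion_matrix(model, texts, labels, seed=0):
    """Retrain on a random 80% split and return the confusion matrix on the other 20%."""
    x_tr, x_te, y_tr, y_te = train_test_split(
        texts, labels, test_size=0.2, random_state=seed
    )
    model.fit(x_tr, y_tr)
    return confusion_matrix(y_te, model.predict(x_te), labels=[0, 1])


# Toy corpus (hypothetical messages), only to show the call pattern.
texts = [f"urgente compartilhe agora mensagem {i}" for i in range(10)] + [
    f"bom dia grupo feliz {i}" for i in range(10)
]
labels = [1] * 10 + [0] * 10
model = make_pipeline(TfidfVectorizer(ngram_range=(1, 3)), LinearSVC())
for metric, (mean, std) in cross_validated_scores(model, texts, labels).items():
    print(f"{metric}: {mean:.3f} ± {std:.3f}")
```

Wrapping the vectorizer and classifier in one pipeline means the vocabulary is refit inside each fold, so no information from the held-out fold leaks into the features.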

5 RESULTS

In order to support future research on this task, as well as the reproducibility of the experiments, the source code and the FakeWhatsApp.Br corpus are publicly available in an online repository⁹.

⁹ https://github.com/cabrau/FakeWhatsApp.Br

The experimental results are summarized in Tables 3 and 4, where we present the results for BoW and TF-IDF features, respectively. In each Table we present the results of each feature scenario, which vary with the n-gram range and with the use of lemmatization and stop word removal. The sub tables in each Table are organized as follows:

a) only unigrams;

b) unigrams and bigrams;

c) unigrams, bigrams and trigrams;

d) only unigrams, stopwords removal and lemmatization;

e) unigrams and bigrams, stopwords removal and lemmatization;

f) unigrams, bigrams and trigrams, stopwords removal and lemmatization.

From Tables 3 and 4 we can note that no classifier was superior in every scenario. However, the MLP, LSVM, SGD and LR methods performed consistently well in all scenarios. On the other hand, the NB, KNN and RF methods had the worst results, considering the F1-score.

Although the difference between the best scores is small (a 3.2% improvement from the lowest to the highest), we can see that the results did improve with the use of bigrams and trigrams. Comparing the scores in sub tables a), b) and c) of both Tables, we see a consistent improvement in the results. As we expected, bigram and trigram tokens contain relevant information in this domain, related to frequent topics in messages with misinformation during the time period in which the data was collected.

Similarly, when we compare sub tables a), b) and c) with e) and f), we see that the use of lemmatization and stop word removal also slightly improved the scores, but only when using bigrams and trigrams. As for the vectorization method, BoW features performed better when using only unigrams (sub tables a) and d)) and were outperformed by TF-IDF features when using bigrams and trigrams.

Table 5 summarizes the 10 best results across all experiments. Considering the F1-score, the results are very close. The table shows that the best results were achieved with TF-IDF, a higher n-gram range, stop word removal and lemmatization, together with the LSVM, MLP and SGD methods.

From the results, we can assess how challenging the problem of detecting misinformation in WhatsApp is, answering Research Question 1, since we did not obtain any F1 score above 0.74. Compared with the results obtained by (Silva et al., 2020) on the Fake.Br corpus, where an F1 score of 0.965 was achieved using a combination of TF-IDF and linguistic features with an ensemble strategy, we see room for improvement. However, it is important to highlight that, even though the problems are similar, the datasets differ in many ways: the Fake.Br corpus is composed only of text in journalistic style collected from websites, while in FakeWhatsApp.Br the texts are predominantly short and stylistically varied, containing not only news, but also rumors, satirical and humorous texts, political propaganda, and others. In the following Subsection we analyze the failures of one of the best models.

Table 3: Results with binary BoW features. MLP, LSVM, SGD and LR methods performed consistently well in most scenarios. In general, the results improved when stopword removal, lemmatization and bigrams and trigrams were used.

a) BOW-1. Features: 23,422
Model  FPR           PRE           REC           F1
LR     0.183 ± 0.00  0.761 ± 0.02  0.677 ± 0.02  0.715 ± 0.00
NB     0.229 ± 0.00  0.753 ± 0.01  0.342 ± 0.03  0.469 ± 0.03
LSVM   0.205 ± 0.01  0.718 ± 0.01  0.674 ± 0.02  0.695 ± 0.01
SGD    0.202 ± 0.01  0.718 ± 0.02  0.704 ± 0.02  0.710 ± 0.01
SVM    0.185 ± 0.01  0.786 ± 0.01  0.593 ± 0.06  0.674 ± 0.04
KNN    0.219 ± 0.00  0.850 ± 0.04  0.291 ± 0.02  0.433 ± 0.02
RF     0.189 ± 0.01  0.813 ± 0.02  0.513 ± 0.06  0.626 ± 0.05
GB     0.197 ± 0.00  0.765 ± 0.01  0.565 ± 0.03  0.649 ± 0.01
MLP    0.192 ± 0.01  0.754 ± 0.03  0.652 ± 0.05  0.696 ± 0.01

b) BOW-1,2. Features: 156,182
Model  FPR           PRE           REC           F1
LR     0.180 ± 0.01  0.775 ± 0.02  0.655 ± 0.03  0.709 ± 0.01
NB     0.222 ± 0.00  0.834 ± 0.02  0.281 ± 0.02  0.420 ± 0.03
LSVM   0.188 ± 0.01  0.757 ± 0.03  0.657 ± 0.02  0.702 ± 0.01
SGD    0.198 ± 0.01  0.728 ± 0.01  0.683 ± 0.02  0.705 ± 0.02
SVM    0.186 ± 0.01  0.819 ± 0.01  0.520 ± 0.07  0.633 ± 0.05
KNN    0.220 ± 0.00  0.871 ± 0.04  0.267 ± 0.04  0.406 ± 0.05
RF     0.193 ± 0.01  0.826 ± 0.01  0.469 ± 0.06  0.595 ± 0.04
GB     0.202 ± 0.00  0.758 ± 0.01  0.551 ± 0.04  0.636 ± 0.02
MLP    0.184 ± 0.00  0.765 ± 0.03  0.664 ± 0.05  0.708 ± 0.02

c) BOW-1,2,3. Features: 384,783
Model  FPR           PRE           REC           F1
LR     0.179 ± 0.01  0.782 ± 0.02  0.641 ± 0.03  0.704 ± 0.01
NB     0.256 ± 0.01  0.637 ± 0.01  0.804 ± 0.02  0.710 ± 0.01
LSVM   0.185 ± 0.01  0.772 ± 0.03  0.633 ± 0.03  0.695 ± 0.01
SGD    0.197 ± 0.01  0.725 ± 0.02  0.710 ± 0.04  0.716 ± 0.02
SVM    0.193 ± 0.01  0.844 ± 0.01  0.445 ± 0.06  0.579 ± 0.05
KNN    0.220 ± 0.00  0.890 ± 0.04  0.255 ± 0.04  0.394 ± 0.05
RF     0.194 ± 0.00  0.850 ± 0.02  0.432 ± 0.05  0.570 ± 0.04
GB     0.202 ± 0.00  0.757 ± 0.01  0.551 ± 0.04  0.637 ± 0.02
MLP    0.188 ± 0.01  0.762 ± 0.03  0.645 ± 0.05  0.696 ± 0.02

d) BOW-1-STOPWORDS-LEMMA. Features: 19,455
Model  FPR           PRE           REC           F1
LR     0.181 ± 0.00  0.764 ± 0.01  0.678 ± 0.02  0.718 ± 0.00
NB     0.228 ± 0.00  0.764 ± 0.02  0.327 ± 0.03  0.457 ± 0.02
LSVM   0.203 ± 0.00  0.716 ± 0.01  0.690 ± 0.02  0.703 ± 0.01
SGD    0.198 ± 0.01  0.731 ± 0.01  0.669 ± 0.01  0.699 ± 0.01
SVM    0.186 ± 0.01  0.784 ± 0.01  0.588 ± 0.06  0.670 ± 0.03
KNN    0.219 ± 0.00  0.829 ± 0.03  0.311 ± 0.03  0.451 ± 0.03
RF     0.182 ± 0.00  0.803 ± 0.01  0.576 ± 0.05  0.669 ± 0.03
GB     0.196 ± 0.00  0.783 ± 0.00  0.529 ± 0.05  0.630 ± 0.03
MLP    0.182 ± 0.00  0.760 ± 0.01  0.684 ± 0.02  0.720 ± 0.00

e) BOW-1,2-STOPWORDS-LEMMA. Features: 129,745
Model  FPR           PRE           REC           F1
LR     0.173 ± 0.00  0.794 ± 0.01  0.648 ± 0.02  0.713 ± 0.01
NB     0.224 ± 0.00  0.879 ± 0.03  0.238 ± 0.01  0.374 ± 0.01
LSVM   0.180 ± 0.00  0.774 ± 0.01  0.655 ± 0.02  0.709 ± 0.01
SGD    0.184 ± 0.01  0.764 ± 0.03  0.663 ± 0.05  0.708 ± 0.02
SVM    0.186 ± 0.00  0.833 ± 0.01  0.497 ± 0.05  0.621 ± 0.04
KNN    0.217 ± 0.00  0.858 ± 0.04  0.297 ± 0.04  0.438 ± 0.04
RF     0.189 ± 0.01  0.848 ± 0.01  0.461 ± 0.05  0.595 ± 0.04
GB     0.198 ± 0.00  0.778 ± 0.00  0.522 ± 0.03  0.624 ± 0.02
MLP    0.173 ± 0.00  0.781 ± 0.00  0.678 ± 0.02  0.726 ± 0.01

f) BOW-1,2,3-STOPWORDS-LEMMA. Features: 261,665
Model  FPR           PRE           REC           F1
LR     0.172 ± 0.00  0.806 ± 0.02  0.625 ± 0.03  0.703 ± 0.02
NB     0.273 ± 0.01  0.620 ± 0.01  0.819 ± 0.01  0.706 ± 0.01
LSVM   0.176 ± 0.01  0.790 ± 0.02  0.640 ± 0.02  0.707 ± 0.02
SGD    0.184 ± 0.01  0.770 ± 0.02  0.642 ± 0.05  0.698 ± 0.02
SVM    0.195 ± 0.00  0.861 ± 0.01  0.415 ± 0.05  0.558 ± 0.04
KNN    0.217 ± 0.00  0.902 ± 0.04  0.259 ± 0.04  0.400 ± 0.05
RF     0.195 ± 0.01  0.853 ± 0.02  0.425 ± 0.05  0.565 ± 0.04
GB     0.197 ± 0.00  0.783 ± 0.00  0.521 ± 0.03  0.625 ± 0.02
MLP    0.171 ± 0.00  0.795 ± 0.02  0.657 ± 0.03  0.718 ± 0.01

Table 4: Results with TF-IDF features. In general, the results improved slightly when compared to binary BoW features, especially when using bigrams and trigrams, stopword removal and lemmatization. The same classification methods stood out: MLP, LSVM, SGD.

a) TFIDF-1. Features: 23,422
Model  FPR           PRE           REC           F1
LR     0.197 ± 0.01  0.745 ± 0.03  0.636 ± 0.04  0.685 ± 0.02
NB     0.229 ± 0.00  0.753 ± 0.01  0.342 ± 0.03  0.469 ± 0.03
LSVM   0.197 ± 0.00  0.726 ± 0.01  0.703 ± 0.02  0.714 ± 0.01
SGD    0.203 ± 0.01  0.715 ± 0.02  0.705 ± 0.02  0.710 ± 0.01
SVM    0.184 ± 0.00  0.773 ± 0.02  0.635 ± 0.04  0.696 ± 0.02
KNN    0.323 ± 0.01  0.569 ± 0.01  0.727 ± 0.01  0.638 ± 0.01
RF     0.196 ± 0.00  0.809 ± 0.03  0.485 ± 0.04  0.605 ± 0.02
GB     0.203 ± 0.00  0.746 ± 0.02  0.581 ± 0.03  0.652 ± 0.01
MLP    0.201 ± 0.01  0.720 ± 0.02  0.701 ± 0.00  0.710 ± 0.01

b) TFIDF-1,2. Features: 156,182
Model  FPR           PRE           REC           F1
LR     0.206 ± 0.01  0.725 ± 0.02  0.633 ± 0.03  0.675 ± 0.01
NB     0.222 ± 0.00  0.834 ± 0.02  0.281 ± 0.02  0.420 ± 0.03
LSVM   0.197 ± 0.01  0.721 ± 0.02  0.729 ± 0.03  0.724 ± 0.02
SGD    0.203 ± 0.01  0.709 ± 0.02  0.731 ± 0.03  0.720 ± 0.01
SVM    0.195 ± 0.01  0.752 ± 0.02  0.623 ± 0.05  0.680 ± 0.02
KNN    0.313 ± 0.02  0.577 ± 0.02  0.715 ± 0.03  0.639 ± 0.02
RF     0.192 ± 0.00  0.835 ± 0.02  0.462 ± 0.04  0.593 ± 0.03
GB     0.201 ± 0.00  0.752 ± 0.02  0.578 ± 0.03  0.652 ± 0.01
MLP    0.197 ± 0.01  0.722 ± 0.03  0.730 ± 0.03  0.725 ± 0.01

c) TFIDF-1,2,3. Features: 384,783
Model  FPR           PRE           REC           F1
LR     0.216 ± 0.01  0.699 ± 0.03  0.676 ± 0.03  0.686 ± 0.02
NB     0.245 ± 0.01  0.651 ± 0.01  0.753 ± 0.03  0.697 ± 0.01
LSVM   0.210 ± 0.01  0.695 ± 0.02  0.767 ± 0.03  0.729 ± 0.01
SGD    0.212 ± 0.02  0.691 ± 0.02  0.776 ± 0.02  0.731 ± 0.02
SVM    0.203 ± 0.01  0.729 ± 0.02  0.648 ± 0.04  0.685 ± 0.02
KNN    0.309 ± 0.02  0.581 ± 0.02  0.722 ± 0.02  0.644 ± 0.02
RF     0.198 ± 0.00  0.829 ± 0.02  0.437 ± 0.04  0.570 ± 0.03
GB     0.207 ± 0.00  0.737 ± 0.03  0.588 ± 0.04  0.651 ± 0.01
MLP    0.212 ± 0.01  0.695 ± 0.02  0.751 ± 0.03  0.721 ± 0.01

d) TFIDF-1-STOPWORDS-LEMMA. Features: 19,455
Model  FPR           PRE           REC           F1
LR     0.188 ± 0.00  0.766 ± 0.01  0.621 ± 0.03  0.685 ± 0.01
NB     0.228 ± 0.00  0.764 ± 0.02  0.327 ± 0.03  0.457 ± 0.02
LSVM   0.196 ± 0.00  0.729 ± 0.01  0.694 ± 0.02  0.711 ± 0.00
SGD    0.199 ± 0.00  0.723 ± 0.01  0.694 ± 0.01  0.708 ± 0.00
SVM    0.181 ± 0.00  0.785 ± 0.01  0.620 ± 0.02  0.692 ± 0.01
KNN    0.241 ± 0.01  0.660 ± 0.02  0.643 ± 0.03  0.651 ± 0.02
RF     0.183 ± 0.00  0.819 ± 0.02  0.541 ± 0.03  0.651 ± 0.02
GB     0.200 ± 0.00  0.769 ± 0.01  0.532 ± 0.03  0.628 ± 0.02
MLP    0.192 ± 0.00  0.740 ± 0.02  0.681 ± 0.02  0.709 ± 0.00

e) TFIDF-1,2-STOPWORDS-LEMMA. Features: 129,745
Model  FPR           PRE           REC           F1
LR     0.193 ± 0.01  0.743 ± 0.02  0.662 ± 0.03  0.699 ± 0.02
NB     0.224 ± 0.00  0.879 ± 0.03  0.238 ± 0.01  0.374 ± 0.01
LSVM   0.197 ± 0.01  0.716 ± 0.02  0.749 ± 0.02  0.732 ± 0.01
SGD    0.204 ± 0.00  0.704 ± 0.01  0.758 ± 0.01  0.730 ± 0.01
SVM    0.187 ± 0.01  0.764 ± 0.02  0.637 ± 0.03  0.694 ± 0.02
KNN    0.253 ± 0.01  0.642 ± 0.01  0.656 ± 0.02  0.649 ± 0.01
RF     0.187 ± 0.01  0.841 ± 0.02  0.482 ± 0.05  0.611 ± 0.04
GB     0.200 ± 0.00  0.767 ± 0.01  0.538 ± 0.03  0.631 ± 0.02
MLP    0.203 ± 0.01  0.709 ± 0.01  0.733 ± 0.01  0.721 ± 0.01

f) TFIDF-1,2,3-STOPWORDS-LEMMA. Features: 261,665
Model  FPR           PRE           REC           F1
LR     0.202 ± 0.01  0.723 ± 0.02  0.675 ± 0.03  0.698 ± 0.02
NB     0.228 ± 0.01  0.672 ± 0.01  0.741 ± 0.02  0.704 ± 0.01
LSVM   0.211 ± 0.01  0.692 ± 0.02  0.778 ± 0.02  0.732 ± 0.01
SGD    0.219 ± 0.01  0.680 ± 0.02  0.787 ± 0.01  0.729 ± 0.01
SVM    0.193 ± 0.01  0.751 ± 0.02  0.639 ± 0.03  0.690 ± 0.02
KNN    0.253 ± 0.01  0.641 ± 0.01  0.656 ± 0.01  0.648 ± 0.01
RF     0.194 ± 0.01  0.832 ± 0.01  0.453 ± 0.05  0.585 ± 0.04
GB     0.201 ± 0.00  0.768 ± 0.01  0.531 ± 0.02  0.628 ± 0.01
MLP    0.203 ± 0.00  0.704 ± 0.01  0.760 ± 0.01  0.731 ± 0.00

Table 5: Best general results.

Placing  Experiment                         Vocabulary  FPR    PRE    REC    F1
1        TFIDF-1,2-STOPWORDS-LEMMA-LSVM     129745      0.197  0.717  0.750  0.733
2        TFIDF-1,2,3-STOPWORDS-LEMMA-LSVM   261665      0.211  0.692  0.778  0.733
3        TFIDF-1,2,3-STOPWORDS-LEMMA-MLP    261665      0.204  0.705  0.761  0.731
4        TFIDF-1,2,3-SGD                    384783      0.212  0.692  0.777  0.731
5        TFIDF-1,2-STOPWORDS-LEMMA-SGD      129745      0.204  0.704  0.759  0.730
6        TFIDF-1,2,3-STOPWORDS-LEMMA-SGD    261665      0.219  0.681  0.787  0.730
7        TFIDF-1,2,3-LSVM                   384783      0.210  0.696  0.768  0.729
8        BOW-1,2-STOPWORDS-LEMMA-MLP        129745      0.173  0.781  0.679  0.726
9        TFIDF-1,2-MLP                      156182      0.197  0.722  0.731  0.726
10       TFIDF-1,2-LSVM                     156182      0.198  0.721  0.729  0.724

5.1 Error Analysis

As described in Subsection 4.2, to take a deeper look at the limitations of our approach, we retrained and evaluated one of the best combinations of features and method using randomly separated training and test sets in an 80%-20% proportion. We used the LSVM method with TF-IDF vectors, unigrams, bigrams and trigrams, stop word removal and lemmatization.

The test set has 1057 instances, of which 618 (58.5%) are negative (non-misinformation) and 439 (41.5%) are positive (misinformation). The resulting confusion matrix of the classification is presented in Table 6. We conducted a qualitative analysis of the 246 errors, comprising 105 false negatives (43% of total errors), that is, misinformation erroneously classified as non-misinformation, and 141 false positives (57% of total errors), that is, non-misinformation erroneously classified as misinformation. We consider the false negatives more critical in this context, since the goal of an automated detection system would be to alert human users, who could then do their own fact-checking and reach a conclusion. Thus, a false positive could later be identified as such, but a false negative might never be considered for fact-checking.

Table 6: Confusion matrix for the test with LSVM.

                Predicted class 0             Predicted class 1             Total
Actual class 0  True Negative: 477 (45.13%)   False Positive: 141 (13.34%)  618
Actual class 1  False Negative: 105 (9.93%)   True Positive: 334 (31.60%)   439
Total           582                           475                           1057
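As a quick consistency check, the counts in Table 6 can be converted into the metrics defined in Subsection 4.2. The helper `binary_metrics` below is a hypothetical name introduced here; the four counts are taken directly from the confusion matrix.

```python
def binary_metrics(tn: int, fp: int, fn: int, tp: int) -> dict:
    """Compute FPR, precision, recall and F1 from confusion-matrix counts."""
    fpr = fp / (fp + tn)          # negatives wrongly flagged as misinformation
    pre = tp / (tp + fp)          # flagged messages that really are misinformation
    rec = tp / (tp + fn)          # misinformation messages that were flagged
    f1 = 2 * pre * rec / (pre + rec)
    return {"FPR": fpr, "PRE": pre, "REC": rec, "F1": f1}


# Counts from Table 6 (hold-out test with LSVM).
for name, value in binary_metrics(tn=477, fp=141, fn=105, tp=334).items():
    print(f"{name}: {value:.3f}")
# prints:
# FPR: 0.228
# PRE: 0.703
# REC: 0.761
# F1: 0.731
```

The hold-out F1 of about 0.731 is in line with the cross-validated scores reported in Table 5.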

We categorized the wrongly classified texts into the following types, with examples and their English translations:

• Short Text with External Information: a short text that is followed by a media file (image, audio or video) or a URL to a Web page. As most of the useful information in the message is not in the text itself, it is difficult for a pure NLP approach to detect a pattern and make a correct classification;

False Negative E.g.: “Escuta a fala sensata e inteligente do Miguel Falabella”¹⁰

Translation: “Listen to Miguel Falabella’s sensible and intelligent speech.”

• Short Text: in the case of a false negative, it is a short claim with a false allegation and no additional source of information. As with the previous type, the classifier may be biased by the size of the text. In the case of a false positive, it is a short text with an opinion or an alert that is not untrue, but whose alarmist wording may be misleading;

False Negative E.g.: “As Operadoras *Tim* a *Claro* e a *Oi* fazem 26 anos hoje. Envie isto para 20 pessoas, em seguida olhe seu saldo no *222/544/805* e você ganha *R$900,00* em créditos válidos por *120* meses. Funciona mesmo acabou de cair no meu”¹¹

Translation: “Cell phone operators Tim, Claro and Oi turn 26 today. Send this to 20 people, then look at your balance at 222/544/805 and you earn R$ 900.00 in credits valid for 120 months. It really works, it just happened to me.”

• Others: this broad category includes long texts of different types. The false negatives can be opinions, satires or rumors, which mix true information with incorrect, inaccurate or extremely biased information. Although this class contains more textual information, the similarity with opinions labeled as non-misinformation may lead the model into error. The false positives may be opinion or humorous texts, prayers or political propaganda, with a linguistic style that resembles misinformation messages.

False Negative E.g.: “Gente Apenas Minha Opinião então Vamos lá. No Dia 06 de Junho TSE Derruba o Voto Impresso de Autoria do deputado Federal Candidato a presidente Jair Bolsonaro. No Dia 06 de Setembro Jair Bolsonaro Sofre um Atentado que Seria pra MATAR. Um Dia Antes da Eleição Dia 06; Coincidência Se Juntar as Datas dar Certos 666. Agora Bolsonaro corre novo Risco. . . A que Interessa Isso ? Nova Ordem Mundial? Marconaria ? iluminati ? Peço que Compartilhem E Faca Chegar ao Bolsonaro Breno Washington MG Juntos somos fortes”

Translation: “Guys, this is just my opinion, so let’s go. On June 6, the TSE overturns the printed ballot proposed by federal deputy and presidential candidate Jair Bolsonaro. On September 6, Jair Bolsonaro suffers an attack that was supposed to kill him. One day before the election, on the 6th. Coincidence? Putting the dates together gives 666. Now Bolsonaro is at risk again. . . Who benefits from this? New world order? Masonry? Illuminati? I ask you to share this and make it reach Bolsonaro. Breno Washington MG. Together we are strong”

¹⁰ https://g1.globo.com/fato-ou-fake/noticia/2018/10/26/e-fake-que-miguel-falabella-gravou-audio-sobre-cenario-pos-eleicao.ghtml

¹¹ https://www.boatos.org/tecnologia/tim-claro-oi-creditos-gratis.html

Table 7: Percentage of each kind of error in false negatives and in false positives.

Type                                  Percentage of false negatives  Percentage of false positives
Short text with external information  71.2%                          59.6%
Short text                            20.2%                          17.0%
Other                                 8.6%                           23.4%

The proportion of each type of text is shown in Table 7. We see from this Table that short texts with external information were the main cause of errors of both kinds, but the effect was more pronounced for false negatives. Short texts were the second most frequent type among the false negatives, while the other type accounted for a minority of them. The opposite happens for the false positives, where the other type of text is in second place and short texts are a minority.

These results indicate that the model is in fact biased by the size of the text, tending to classify long texts as misinformation and short texts as non-misinformation. It is necessary to take into consideration that short texts with external information can be very challenging to classify with a BoW approach alone, since short texts are usually noisier, less topic-focused and do not provide enough word co-occurrence or shared context. Therefore, machine learning methods that rely on word frequency usually fail to achieve the desired accuracy due to data sparseness (Song et al., 2014).

As a last experiment, we select only the messages with 50 or more words, a subset of 1555 messages (29.4% of the original dataset), and repeat the same train and test procedure used in this subsection. As expected, performance increases significantly, achieving an F1 score of 0.87, a recall of 0.95, a precision of 0.80, and a false positive rate of 0.22. However, this changes the original task, since short messages are the majority in the context of WhatsApp. Specific strategies must be developed for that issue.
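The length filter used in this last experiment can be sketched as follows. The function name `keep_long_messages` and the whitespace-based word count are assumptions made here, since the exact counting rule is not stated. The last lines check that the reported precision and recall are consistent with the reported F1 score.

```python
def keep_long_messages(texts, labels, min_words=50):
    """Keep only (text, label) pairs whose text has at least `min_words` words."""
    # Assumption: words are counted by splitting on whitespace.
    kept = [(t, y) for t, y in zip(texts, labels) if len(t.split()) >= min_words]
    long_texts = [t for t, _ in kept]
    long_labels = [y for _, y in kept]
    return long_texts, long_labels


# Toy example (hypothetical messages): 49 words, 50 words and 1 word.
texts = ["palavra " * 49, "palavra " * 50, "curto"]
labels = [1, 0, 1]
long_texts, long_labels = keep_long_messages(texts, labels)
print(len(long_texts), long_labels)  # 1 [0]

# Consistency check of the reported scores: F1 from precision 0.80 and recall 0.95.
precision, recall = 0.80, 0.95
print(round(2 * precision * recall / (precision + recall), 2))  # 0.87
```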

6 CONCLUSIONS

The fast spread of misinformation through WhatsApp messages poses a major social problem. In this work, we presented a large-scale, labelled and public dataset of WhatsApp messages in Brazilian Portuguese. In addition, we performed a wide set of experiments seeking to build a solution to the misinformation detection problem in this specific context. Our findings help us to answer the research questions:

1. How challenging is the task of misinformation detection in WhatsApp messages using NLP-based techniques? We experimented with a varied combination of BoW features and machine learning classification methods, resulting in a total of 108 combinations, and performed 5-fold cross-validation for each combination. Our best results achieved an F1-score of 0.733, which may serve as a baseline for future work. The results show that reliable misinformation detection in WhatsApp messages is still an open problem.

2. Which combination of pre-processing methods, word-level features and classification algorithms is best suited for this task? Our experiments showed that the MLP, LSVM and SGD methods achieved the best results in nearly every feature scenario. For TF-IDF vectors, the results improved when unigrams, bigrams and trigrams were used as tokens together with stop word removal and lemmatization, which was the best scenario.

3. What are the limitations of an NLP-based approach? Finally, the qualitative analysis of the errors of our best results showed that the majority of errors occurred in short texts that refer to a media file or a website, resulting in a lack of information for the model and limiting the performance of a pure NLP-based approach. This analysis also indicates that the model was biased towards classifying long messages as misinformation, due to the difference in average size between the two classes. When we filtered short texts from the dataset and repeated the classification experiment, the performance improved substantially, with an F1-score of 0.87.

In future work, we intend to investigate how the metadata associated with a message (senders, timestamps, groups where it was shared, etc.) can be combined with textual features to improve classification. We also intend to investigate the task of multi-modal misinformation detection, extracting features from text and media files using a Deep Learning approach. Finally, as misinformation varies over time, we intend to investigate semi-automatic methods for building continuously labeled WhatsApp datasets.

ACKNOWLEDGEMENTS

This study was financed in part by the CNPq, Conselho Nacional de Desenvolvimento Científico e Tecnológico - Brasil.

REFERENCES

Faustini, P. and Covões, T. (2019). Fake news detection using one-class classification. In 2019 8th Brazilian Conference on Intelligent Systems (BRACIS), pages 592–597.

Gaglani, J., Gandhi, Y., Gogate, S., and Halbe, A. (2020). Unsupervised WhatsApp fake news detection using semantic search. In 2020 4th International Conference on Intelligent Computing and Control Systems (ICICCS), pages 285–289. IEEE.

Garimella, K. and Tyson, G. (2018). WhatsApp, doc? A first look at WhatsApp public group data. arXiv preprint arXiv:1804.01473.

Granik, M. and Mesyura, V. (2017). Fake news detection using naive Bayes classifier. In 2017 IEEE First Ukraine Conference on Electrical and Computer Engineering (UKRCON), pages 900–903. IEEE.

Guo, B., Ding, Y., Yao, L., Liang, Y., and Yu, Z. (2019). The future of misinformation detection: New perspectives and trends.

Kim, S.-B., Han, K.-S., Rim, H.-C., and Myaeng, S. H. (2006). Some effective techniques for naive Bayes text classification. IEEE Transactions on Knowledge and Data Engineering, 18(11):1457–1466.


Lazer, D. M. J., Baum, M. A., Benkler, Y., Berinsky, A. J., Greenhill, K. M., Menczer, F., Metzger, M. J., Nyhan, B., Pennycook, G., Rothschild, D., Schudson, M., Sloman, S. A., Sunstein, C. R., Thorson, E. A., Watts, D. J., and Zittrain, J. L. (2018). The science of fake news. Science, 359(6380):1094–1096.

Maalej, Z. (2001). Discourse Studies, 3(3):376–378.

Machado, C., Kira, B., Narayanan, V., Kollanyi, B., and Howard, P. (2019). A study of misinformation in WhatsApp groups with a focus on the Brazilian presidential elections. WWW ’19, pages 1013–1019, New York, NY, USA. Association for Computing Machinery.

Monteiro, R. A., Santos, R. L., Pardo, T. A., De Almeida, T. A., Ruiz, E. E., and Vale, O. A. (2018). Contributions to the study of fake news in Portuguese: New corpus and automatic detection results. In International Conference on Computational Processing of the Portuguese Language, pages 324–334. Springer.

Newman, N., Fletcher, R., Schulz, A., Andi, S., and Nielsen, R.-K. (2020). Reuters Institute digital news report 2020. Report of the Reuters Institute for the Study of Journalism.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., and Duchesnay, E. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830.

Pranckevičius, T. and Marcinkevičius, V. (2017). Comparison of naive Bayes, random forest, decision tree, support vector machines, and logistic regression classifiers for text reviews classification. Baltic Journal of Modern Computing, 5(2):221.

Prasetijo, A. B., Isnanto, R. R., Eridani, D., Soetrisno, Y. A. A., Arfan, M., and Sofwan, A. (2017). Hoax detection system on Indonesian news sites based on text classification using SVM and SGD. In 2017 4th International Conference on Information Technology, Computer, and Electrical Engineering (ICITACEE), pages 45–49. IEEE.

Qiu, X., Oliveira, D. F., Shirazi, A. S., Flammini, A., and Menczer, F. (2017). Limited individual attention and online virality of low-quality information. Nature Human Behaviour, 1(7):0132.

Rennie, J. D., Shih, L., Teevan, J., and Karger, D. R. (2003). Tackling the poor assumptions of naive Bayes text classifiers. In Proceedings of the 20th International Conference on Machine Learning (ICML-03), pages 616–623.

Resende, G., Melo, P., Sousa, H., Messias, J., Vasconcelos, M., Almeida, J., and Benevenuto, F. (2019). (Mis)information dissemination in WhatsApp: Gathering, analyzing and countermeasures.

Resende, G., Messias, J., Silva, M., Almeida, J., Vasconcelos, M., and Benevenuto, F. (2018). A system for monitoring public political groups in WhatsApp. In Proceedings of the 24th Brazilian Symposium on Multimedia and the Web, WebMedia ’18, pages 387–390, New York, NY, USA. Association for Computing Machinery.

Rosenfeld, A., Sina, S., Sarne, D., Avidov, O., and Kraus, S. (2018). A study of WhatsApp usage patterns and prediction models without message content. arXiv preprint arXiv:1802.03393.

Rubin, V. L., Chen, Y., and Conroy, N. K. (2015). Deception detection for news: three types of fakes. Proceedings of the Association for Information Science and Technology, 52(1):1–4.

Shu, K., Sliva, A., Wang, S., Tang, J., and Liu, H. (2017). Fake news detection on social media: A data mining perspective.

Silva, R. M., Santos, R. L., Almeida, T. A., and Pardo, T. A. (2020). Towards automatically filtering fake news in Portuguese. Expert Systems with Applications, 146:113199.

Song, G., Ye, Y., Du, X., Huang, X., and Bie, S. (2014). Short text classification: A survey. Journal of Multimedia, 9(5):635.

Su, Q., Wan, M., Liu, X., and Huang, C.-R. (2020). Motivations, methods and metrics of misinformation detection: An NLP perspective. Natural Language Processing Research, 1:1–13.

Vosoughi, S., Roy, D., and Aral, S. (2018). The spread of true and false news online. Science, 359:1146–1151.

Waterloo, S. F., Baumgartner, S. E., Peter, J., and Valkenburg, P. M. (2018). Norms of online expressions of emotion: Comparing Facebook, Twitter, Instagram, and WhatsApp. New Media & Society, 20(5):1813–1831.

Zervopoulos, A., Alvanou, A. G., Bezas, K., Papamichail, A., Maragoudakis, M., and Kermanidis, K. (2020). Hong Kong protests: Using natural language processing for fake news detection on Twitter. In IFIP International Conference on Artificial Intelligence Applications and Innovations, pages 408–419. Springer.

ICEIS 2021 - 23rd International Conference on Enterprise Information Systems
