Faceshield HUD: Extended Usage of Wearable Computing on the COVID-19 Frontline

Mateus C. Silva¹,³,ᵃ, Ricardo A. R. Oliveira¹,ᵇ, Thiago D’Angelo¹, Charles T. B. Garrocho¹,⁴,ᶜ and Vicente J. P. Amorim²,ᵈ

¹ Departamento de Computação, Instituto de Ciências Exatas e Biológicas, Universidade Federal de Ouro Preto, Brazil

² Departamento de Computação e Sistemas, Instituto de Ciências Exatas e Aplicadas, Universidade Federal de Ouro Preto, Brazil

³ Instituto Federal de Educação, Ciência e Tecnologia de Minas Gerais, Campus Avançado Itabirito, Brazil

⁴ Instituto Federal de Educação, Ciência e Tecnologia de Minas Gerais, Campus Ouro Branco, Brazil

Keywords:

Wearable Computing, Edge Computing, Smart Healthcare, IoT, COVID-19.

Abstract:

Wearable Computing brings novel methods and appliances to solve various problems in society’s routine tasks. It also makes it possible to enhance human abilities and perception during specialist activities. Finally, the flexibility and modularity of wearable devices allow the design of multiple appliances. In 2020, the world faced a global threat from the COVID-19 pandemic. Healthcare professionals are directly exposed to contamination and therefore require attention. In this work, we propose a novel wearable appliance to aid healthcare professionals working on the frontline of pandemic control. This new approach aids the professional in daily tasks and monitors their health for early signs of contamination. Our results display the system feasibility and constraints using a prototype and indicate initial restrictions for this appliance. This proposal also works as a benchmark for health monitoring aids in general hazardous situations.

1 INTRODUCTION

With hardware miniaturization and the inclusion of Graphics Processing Units (GPUs) and processors that support Artificial Intelligence (AI) applications in Systems on Chip (SoCs), wearable computers can also be classified as edge computing devices (Chen et al., 2017). This perspective implies better and more flexible electronics and modular Computers-on-Chips. These devices can take on a larger share of local processing tasks (Kim et al., 2017). Also, given their network capabilities, they can feed data with a higher abstraction level to edge-server-based or cloud-based applications (Ren et al., 2017).

A significant factor in wearable computing is context-awareness (Grubert et al., 2016). At first glance, this information relates to detecting changes in the environment’s conditions in pervasive applications (Surve and Ghorpade, 2017). Nonetheless, another essential part of context-awareness is monitoring the user’s conditions (Silva et al., 2019; Amft, 2018), henceforth named user-awareness.

ᵃ https://orcid.org/0000-0003-3717-1906

ᵇ https://orcid.org/0000-0001-5167-1523

ᶜ https://orcid.org/0000-0001-8245-306X

ᵈ https://orcid.org/0000-0001-9043-6489

According to Kliger and Silberzweig (Kliger and Silberzweig, 2020), COVID-19 is a disease caused by a novel coronavirus. The main identified symptoms are fever, cough, myalgia, and fatigue. Prachand et al. (Prachand et al., 2020) state that a compelling factor in the fight against the pandemic is the healthcare professionals’ exposure to contamination risks. Still according to Kliger and Silberzweig (Kliger and Silberzweig, 2020), face masks and face shields are among the recommended personal protective equipment (PPE) adopted by healthcare professionals to avoid contamination.

In this work, we propose the architecture for a novel wearable appliance to help the professionals on the frontline of the fight against COVID-19. The proposed appliance has two main goals. The first one is to gather information from environment signals using a camera as a smart sensor. The other one is to monitor the medical professionals’ health conditions using internal measurement sensors. We also prototyped a version of the proposed architecture to test its feasibility and features.

Silva, M., Oliveira, R., D’Angelo, T., Garrocho, C. and Amorim, V.

Faceshield HUD: Extended Usage of Wearable Computing on the COVID-19 Frontline.

DOI: 10.5220/0010444308930900

In Proceedings of the 23rd International Conference on Enterprise Information Systems (ICEIS 2021) - Volume 1, pages 893-900

ISBN: 978-989-758-509-8

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This device is a Head-Up Display (HUD) adapted to a safety face shield. This perspective follows the usage of an extra protection layer, recommended by the WHO for protection against direct contamination (World Health Organization, 2020). Also, the proposed appliance seeks to provide context-awareness, from both the environmental conditions and the user-awareness perspectives. Thus, the main contribution of this work is:

• An architecture for a HUD based on a face shield

to aid healthcare professionals on the COVID-19

frontline.

The remainder of this text is organized as follows: In Section 2, we discuss the theoretical basis for this work and the articles most closely related to the proposal. Section 3 presents the architecture proposal, with the elements required to build the proposed appliance. In Section 4, we present the prototype developed to validate the ideas, as well as the tests applied to evaluate the proposal. We present the results of these tests in Section 5 and discuss the outcomes of this work in Section 6.

2 THEORETICAL REFERENCES AND RELATED WORK

In this section, we present the theoretical background

and basic concepts applied in this proposal. We also

analyze the literature to understand the most related

recent works and how they contribute and differ from

our proposal.

2.1 Wearable and Edge Computing

The trend of developing novel wearable devices with

networking capabilities and forming an intercon-

nected environment enables creating a wearable Inter-

net of Things (IoT) (Cooney et al., 2018). These sys-

tems are based on edge miniaturized computers that

can retrieve, store, and transmit environmental and

user data (Jia et al., 2018).

Edge-based wearable systems are widely used in activity recognition (Salkic et al., 2019), healthcare (Manogaran et al., 2019), and augmented or assisted cognition (Zhao et al., 2020). These perspectives mean that edge wearable devices explore both environmental perception and user-awareness in the general context-awareness goal. Recent works show a new trend in exploring both aspects of the global context-awareness concept (Silva et al., 2019). Using these concepts, we propose a wearable system architecture to help on the COVID-19 frontline.

2.2 Head-Up Display (HUD)

A HUD is an instrument that presents information on a display superimposed on the user’s view of the environment (Weintraub et al., 1985). HUDs are widely used in automotive (Betancur et al., 2018; Wang et al., 2018) and aviation (Stanton et al., 2018; Blundell et al., 2020) applications. These devices are a way for wearable systems to increase cognition and environmental perception.

From the healthcare perspective, there are some validated devices for healthcare (Kim et al., 2017), especially for surgical applications (Liounakos et al., 2020). Even among augmented reality tools in healthcare, most of the works provide surgical stress simulation for educational purposes (Gerup et al., 2020).

Nevertheless, we found no related work on using a HUD attached to frontline professionals’ face shields. The only similar approach is a face mask HUD created to increase firefighters’ cognition in action (Rumsey and Le Dantec, 2019). Thus, this idea presents a novelty in academic approaches that can improve frontline conditions for workers facing the COVID-19 pandemic.

2.3 Edge Computing Smart Health Monitoring

A final relevant aspect of this perspective is the usage

of edge computing in the smart health monitoring sys-

tem. The advance of the IoT and the hardware minia-

turization enhance AI algorithms’ usage on edge de-

vices (Lin et al., 2019). In the context of wearable

computing, the primary areas previously explored in

smart healthcare include children’s safety, infant and

older adults care, chronic disease management, mili-

tary, sports medicine, and preventive medicine (Cas-

selman et al., 2017).

These same concepts and design principles are widely employed in healthcare applications (Chen et al., 2018). For instance, these systems can monitor patients in healthcare facilities (Vippalapalli and Ananthula, 2016), authenticate users through Electrocardiogram (ECG) signals (Zhang et al., 2018), and detect disease symptoms early (Alhussein and Muhammad, 2019). All these concepts are relevant to the construction of the architecture presented in this work.

ICEIS 2021 - 23rd International Conference on Enterprise Information Systems


3 ARCHITECTURE PROPOSAL

In this section, we apply this knowledge to propose

a novel high-level architecture of the wearable appli-

ance. We chose the protective face shield as a baseline

for this architecture development.

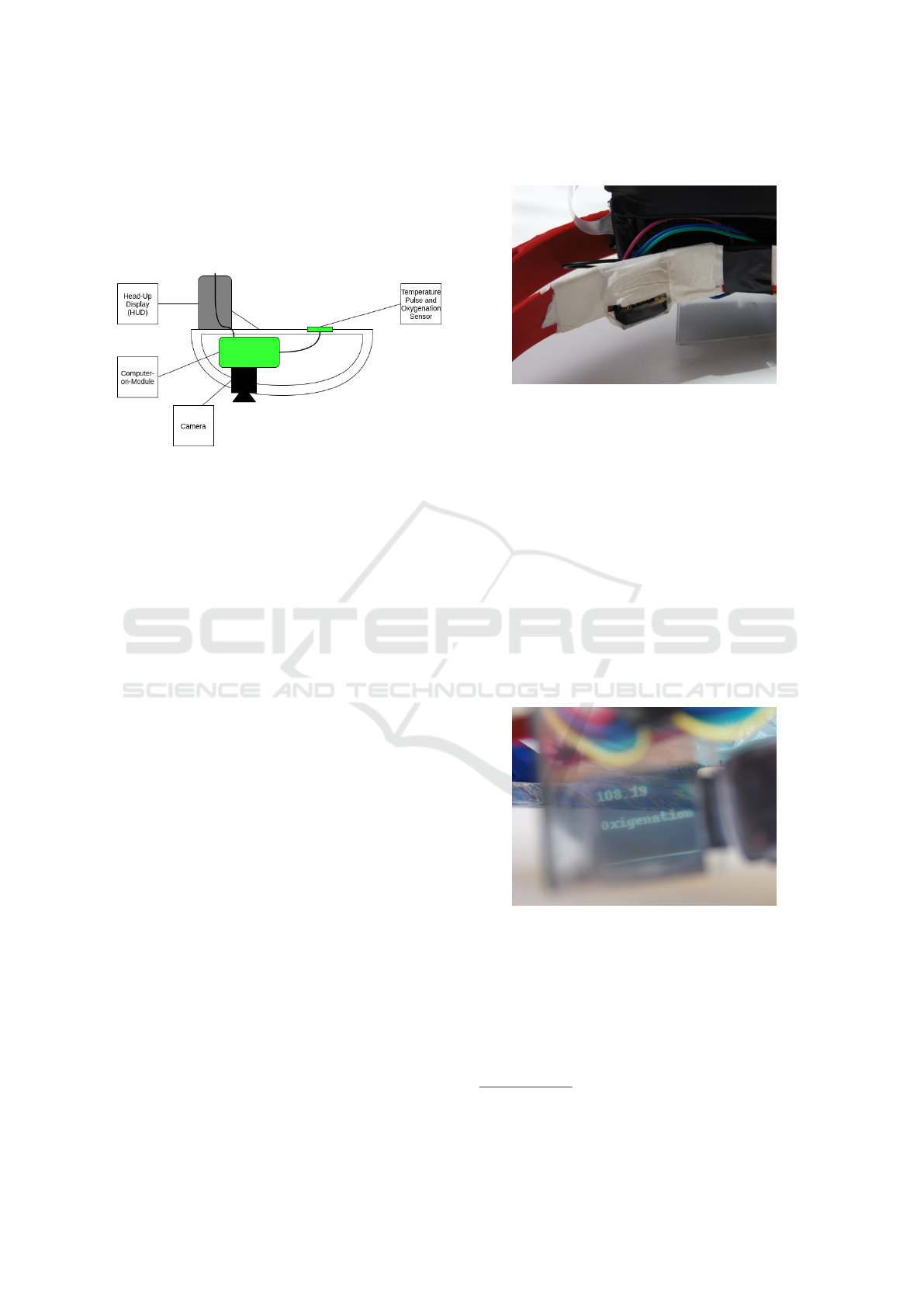

Figure 1: Schematic View of the Proposed Prototype.

The proposed architecture has one element to sense the environment, one sensor to monitor the user’s health conditions, and a HUD interface. All these elements are integrated through an embedded computer-on-module, powered by a battery. Figure 1 displays a schematic view of how the elements are organized.

For sensing the environment, we propose the usage of a camera. Initially, this sensor allows remote access to the patients’ medical records. The internal application recognizes the patient using a QR code and displays the most relevant information on the HUD.

For sensing the user’s health conditions, we propose the usage of a pulse-oximetry and temperature sensor. This module provides information about the user’s temperature, blood oxygenation, and pulse throughout the usage period.

The interface for user awareness is a built-in HUD. It uses a small Organic Light-Emitting Diode (OLED) display with a semi-reflective surface and a lens to produce the desired see-through effect. As the display is small, only a limited set of information can be displayed.

These elements integrate with an ARM-based single-core computer-on-module. This board has wireless networking capabilities to integrate with the local network. This feature allows the transmission of the user’s data and the reception of information about patients stored on the local servers.

4 PROTOTYPING AND VALIDATION TESTS

This section presents the produced prototype to vali-

date this idea and the tests used to evaluate its perfor-

mance.

4.1 Prototype Description

Figure 2: Pulse-Oximeter and Temperature Sensor Placement.

To produce the prototype, we started with a 3D-printed face shield base. This base is the same one volunteers use to create face shield masks. On this base, we mounted all the elements necessary to create the proposed application. The computer-on-module was a Raspberry Pi Zero W. This solution has a single-core ARMv6 (ARM1176JZF-S) processor on a Broadcom BCM2835 chipset. This CPU runs at a clock frequency of up to 1 GHz. It has 512 MB of RAM and a wireless board with an 802.11 b/g/n WLAN connection, Bluetooth 4.1, and BLE protocols. We used a plastic case to hold the computer-on-module and connected the other elements to it using wires. The whole solution weighs circa 200 g, including the battery.

Figure 3: HUD See-through Display.

For sensing the user’s health conditions, we used a MAX30100¹ pulse-oximeter. This sensor provides information to calculate the user’s pulse, blood oxygenation, and temperature. It is powered by a 3.3V output of the computer-on-module and communicates using an I²C/SMBus serial connection. Figure 2 displays the placement of this sensor in the prototype. We used a Raspicam V2 module² for sensing the environment. This camera has an 8-megapixel still resolution, provides 1080p, 720p, and 480p video modes, and is accessible using the V4L2 Linux driver. It has a horizontal field-of-view of 62.2 degrees and a vertical field-of-view of 48.8 degrees. This device connects to the central computer using the MIPI camera serial interface.

¹ https://datasheets.maximintegrated.com/en/ds/MAX30100.pdf

Finally, the user interface is a HUD, which displays the information in front of the user’s right eye. For this purpose, we used a 96x64-pixel OLED display³ in a 3D-printed case. This module communicates using an SPI serial connection. The reflective surface was created using a semi-reflective membrane in front of an acrylic layer. We placed a lens with a focal distance calculated to display the information at the correct distance. Figure 3 shows an example of the information available using this model of the see-through display.
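Because a 96x64-pixel display holds very little text, the information to be shown has to be trimmed before rendering. The sketch below illustrates one way to do that. It assumes a hypothetical fixed-width font with 6x8-pixel cells (16 columns by 8 rows); the font size, the `fit_to_display` helper, and the sample record fields are illustrative assumptions, not part of the prototype described here.

```python
import textwrap

DISPLAY_W, DISPLAY_H = 96, 64  # OLED resolution reported for the prototype
FONT_W, FONT_H = 6, 8          # assumed fixed-width font cell, in pixels


def fit_to_display(fields):
    """Wrap "label: value" pairs into lines that fit the display grid.

    Lines beyond the last visible row are dropped.
    """
    cols = DISPLAY_W // FONT_W  # 16 characters per line
    rows = DISPLAY_H // FONT_H  # 8 lines per screen
    lines = []
    for label, value in fields:
        lines.extend(textwrap.wrap(f"{label}: {value}", width=cols))
    return lines[:rows]


if __name__ == "__main__":
    # Hypothetical record fields, for illustration only.
    record = [("Name", "Jane Doe"), ("Allergies", "penicillin, latex")]
    for line in fit_to_display(record):
        print(line)
```

Dropping the overflow is the simplest policy; a real interface might instead paginate or prioritize fields, which the paper does not specify.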

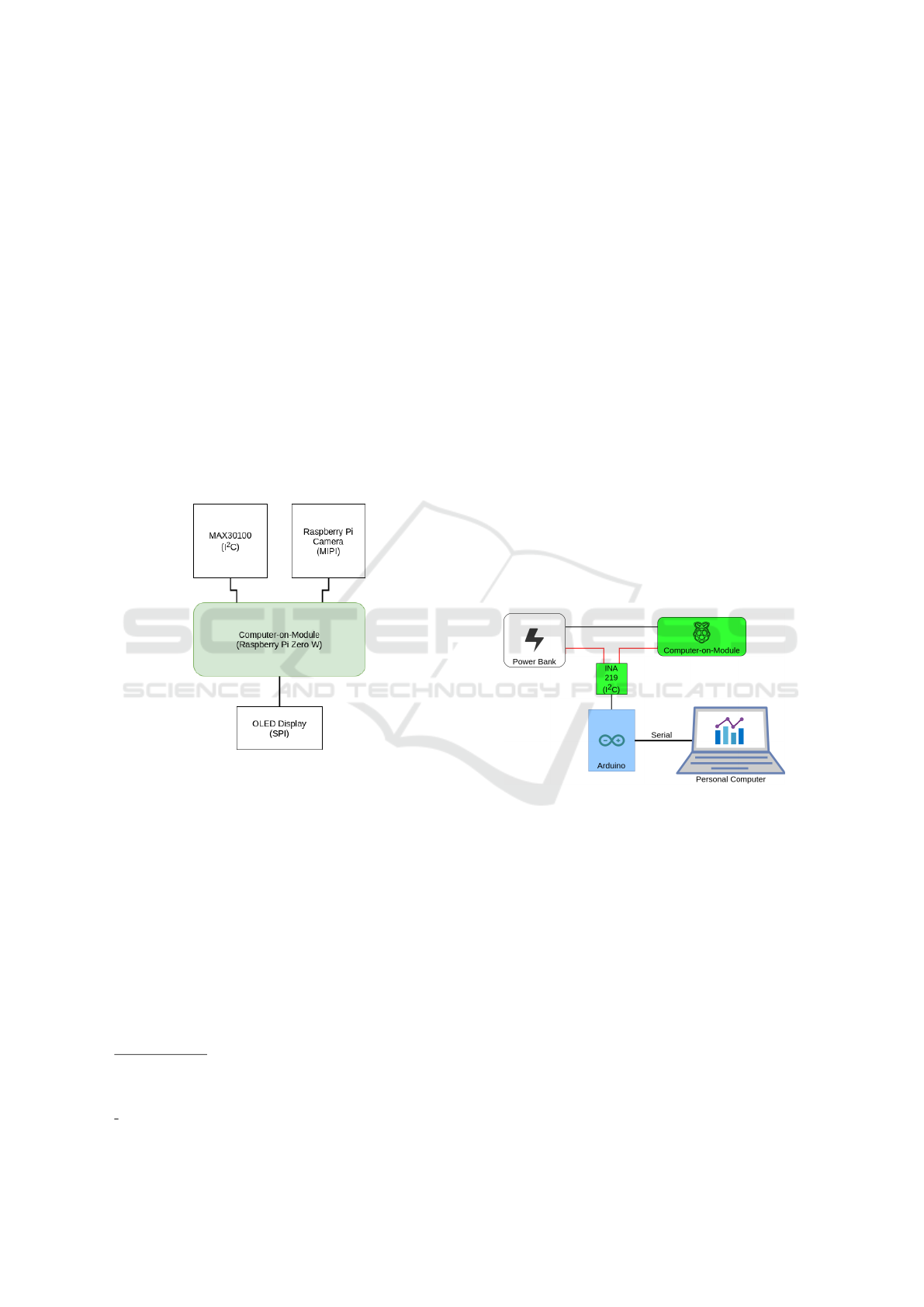

Figure 4: Data Flow for the Proposed Prototype.

The computer-on-module continuously acquires data

from its sensors. Using its wireless capabilities, it

sends this data to a server to store the information

and retrieves some information based on the sensed

data. Finally, it produces the HUD screen’s feedback

frame according to the received answer and the sen-

sors’ data. Figure 4 represents the systems’ data flow

from the information pictured in this section.
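The data flow described above can be sketched as a single acquisition cycle. This is a minimal illustration, not the prototype's code: `SensorSample`, `compose_frame`, `run_cycle`, and the callables passed in are hypothetical names, and the sensor, network, and display back ends are stubbed out.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SensorSample:
    """One reading of the user's health sensors (hypothetical structure)."""
    spo2: float
    pulse_bpm: float
    temperature_c: float


def compose_frame(sample: SensorSample, answer: Dict[str, str]) -> List[str]:
    """Build the text lines of a HUD frame from local data and the server answer."""
    lines = [
        f"SpO2 {sample.spo2:.1f}%",
        f"Pulse {sample.pulse_bpm:.0f} bpm",
        f"Temp {sample.temperature_c:.1f} C",
    ]
    lines.extend(f"{key}: {value}" for key, value in answer.items())
    return lines


def run_cycle(
    read_sensors: Callable[[], SensorSample],
    send_to_server: Callable[[SensorSample], Dict[str, str]],
    render_hud: Callable[[List[str]], None],
) -> List[str]:
    """Acquire data, exchange it with the server, and update the HUD once."""
    sample = read_sensors()          # 1. acquire data from the sensors
    answer = send_to_server(sample)  # 2. store remotely and retrieve information
    frame = compose_frame(sample, answer)
    render_hud(frame)                # 3. produce the HUD feedback frame
    return frame


if __name__ == "__main__":
    # Stub back ends with made-up values, for illustration only.
    run_cycle(
        read_sensors=lambda: SensorSample(97.5, 72.0, 36.6),
        send_to_server=lambda sample: {"patient": "ID 0042"},
        render_hud=lambda frame: print("\n".join(frame)),
    )
```

Passing the back ends in as callables keeps the cycle testable without hardware; in a deployment the loop would simply call `run_cycle` repeatedly.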

4.2 Validation Tests

In this subsection, we present the test set used to validate the proposed solution. We test different aspects of the system. A wearable appliance is an energy-constrained device (Hong et al., 2019; Gia et al., 2018). Also, the processing consumption is a relevant constraint in this context (Sörös et al., 2015). Finally, we also want to validate some functional aspects of the system. Thus, our test set considers:

1. A current consumption profiling test, to observe how the proposed device behaves under processing load;

2. A full battery discharge test, for probing the energy constraint and autonomy;

3. A functional validation test, to observe how the system reads the provided data.

² https://www.raspberrypi.org/documentation/hardware/camera/

³ https://img.filipeflop.com/files/download/Datasheet SSD1331.pdf

For the first two tests, we used a data acquisition sys-

tem to provide real-time information about the con-

sumption using a sensor and a microcontroller. The

sensor was an INA219 current consumption sensor,

and the microcontroller was an Arduino Uno. Figure

5 displays the configuration for this probe.

For each output value, we take the average of 20 samples acquired at approximately 100 samples/s. The resulting rate of averaged output values was approximately 4.5 values/s.
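The averaging step can be sketched as follows. The function names are hypothetical, and the probe itself ran on an Arduino Uno rather than in Python; the code only illustrates the arithmetic. Averaging blocks of 20 samples taken at 100 samples/s would ideally yield 5 values/s, so the reported rate of about 4.5 values/s presumably includes acquisition and transfer overhead (an assumption, since the paper does not break this down).

```python
from statistics import mean


def block_average(readings_ma, block_size=20):
    """Average consecutive, non-overlapping blocks of raw current readings.

    A trailing partial block is discarded.
    """
    last_start = len(readings_ma) - block_size
    return [
        mean(readings_ma[start:start + block_size])
        for start in range(0, last_start + 1, block_size)
    ]


def ideal_output_rate(raw_rate_hz, block_size=20):
    """Output values per second if averaging added no overhead."""
    return raw_rate_hz / block_size


if __name__ == "__main__":
    raw = list(range(40))           # made-up readings, for illustration only
    print(block_average(raw))
    print(ideal_output_rate(100.0))
```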

Figure 5: Current Consumption Probe Configuration.

In the current consumption profiling test, we observe how the system consumes energy in the different stages of its functioning. We performed a 210-second run using a 5V power source. In this experiment, the device goes through the following states in the approximate time intervals:

1. Device off – 0s-10s;

2. Boot – 10s-65s;

3. SSH enabled – Idle – 65s-110s;

4. Run application – 110s-180s;

5. SSH enabled – Idle – 180s-200s;

6. Device off – 200s-210s.
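Given these intervals, per-stage statistics can be derived from timestamped probe readings. The sketch below is illustrative: `stage_index` and `summarize` are hypothetical helpers, the readings in the example are made up, and the spread is computed as a population standard deviation, which is an assumption about how a "±" figure might be obtained.

```python
from statistics import mean, pstdev

# (name, start_s, end_s) for each stage, using the approximate intervals listed above.
STAGES = [
    ("Device off", 0, 10),
    ("Boot", 10, 65),
    ("SSH enabled - Idle", 65, 110),
    ("Run application", 110, 180),
    ("SSH enabled - Idle", 180, 200),
    ("Device off", 200, 210),
]


def stage_index(t_s):
    """Return the 1-based stage number for a timestamp, or None if out of range."""
    for index, (_, start, end) in enumerate(STAGES, start=1):
        if start <= t_s < end:
            return index
    return None


def summarize(samples):
    """Group (time_s, current_mA) pairs by stage and return (min, max, mean, std)."""
    by_stage = {}
    for t_s, current_ma in samples:
        index = stage_index(t_s)
        if index is not None:
            by_stage.setdefault(index, []).append(current_ma)
    return {
        index: (min(values), max(values), mean(values), pstdev(values))
        for index, values in sorted(by_stage.items())
    }


if __name__ == "__main__":
    # Made-up readings, for illustration only.
    print(summarize([(5, 40), (5.5, 50), (120, 800), (121, 860)]))
```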


With this metric, we expect to analyze the current

consumption of the system’s different possible states.

Also, we expect to understand the system’s energetic

constraints better. For broader comprehension, we

also perform the next described test.

In the full battery discharge test, we analyze two different aspects. First, we evaluate the system autonomy given a specific power source. Second, we evaluate the steadiness of the consumption throughout the whole execution time. This evaluation considers quantitative and qualitative traits. A current consumption without massive leaps indicates robust behavior. Also, it is necessary to determine the autonomy of this system, considering the other presented constraints.

Finally, we also perform a functional valida-

tion test. With this, we evaluate the system’s feasi-

bility and additional features through its fundamen-

tal aspects. We analyze both user-awareness and

environment-awareness sensing tools, considering the

information extraction and transmission to an external

edge server appliance. We enable the usage of Edge

AI to evaluate the traits of the presented data within

this appliance.

5 VALIDATION TESTS RESULTS

In this section, we present the results for these tests

and preliminary discussions and conclusions.

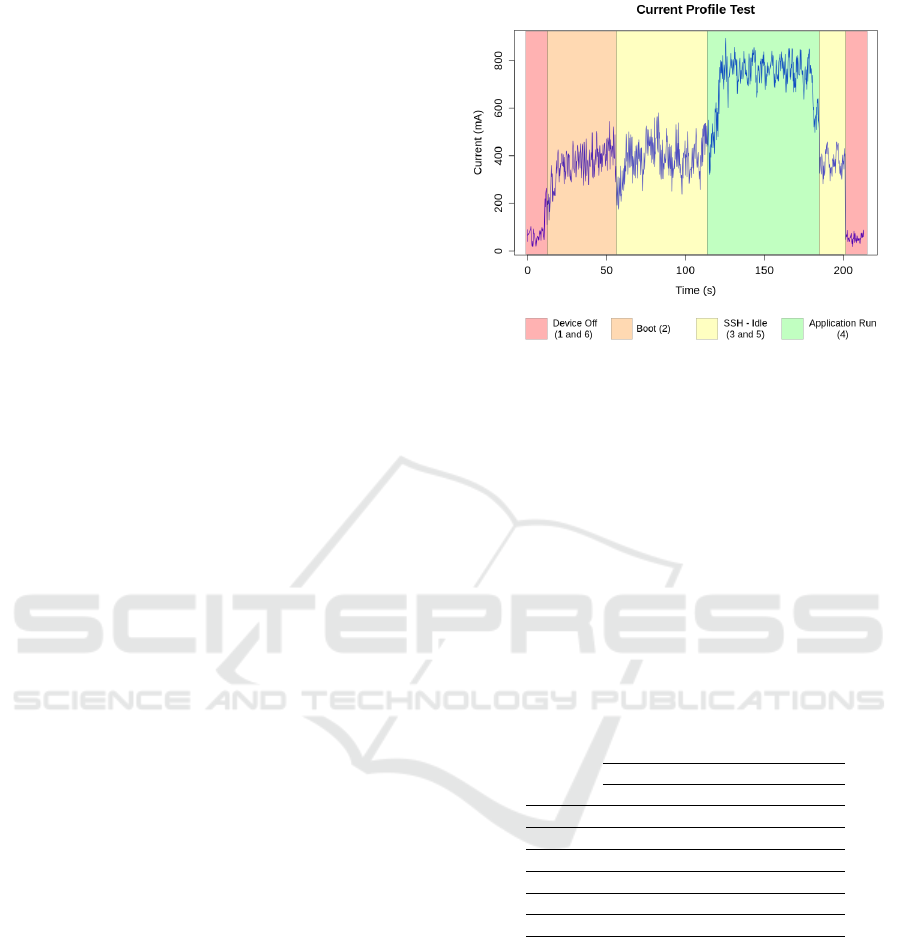

5.1 Current Consumption Profiling Test

The first proposed experiment is the current consump-

tion profiling test. In this scope, we want to de-

scribe the functioning of the system throughout dif-

ferent stages. For this matter, we divided the test time

into six stages: Device off (1), Boot (2), SSH enabled

- Idle (3), Run application (4), SSH enabled - Idle

(5), and Device off (6). This test represents roughly a

“symmetrical” startup, execution, and shutdown from

the prototype. Figure 6 displays the results obtained

from this experiment.

In red, we display the probe readings when the

system was off (Stages 1 and 6). These results display

some noise but roughly represent the “zero-state” of

this system. In orange, we display the current con-

sumption results in the system boot (Stage 2). In this

case, it is possible to see that the reading values in-

crease until reaching a stable state.

In yellow, we display the results for the “SSH enabled - Idle” stages (3 and 5). In these intervals, the system was on, and the SSH connection was established. Nonetheless, the application was not running, which characterizes an idle state. When the connection is established, we observe some instability in the current consumption, which reaches a stable state right after.

Figure 6: Current Consumption Profiling Test Result.

Finally, in green, we display the current consumption result for the application run time (Stage 4). In this stage, the device starts the application, acquiring and transmitting data, so the prototype consumes its full required resources. The data show that the current increases during start-up, reaching a stable state after some seconds. After the application stops, the current decreases along a slope until it reaches a stable level in the idle state that precedes shutdown. Table 1 displays the minimum, maximum, and average current consumption for each stage.

Table 1: Profiling Test Results (current in mA).

| Stage | min. | max. | avg. |
|---|---|---|---|
| Stage 1 | 3 | 86 | 45.53 ± 22.27 |
| Stage 2 | 56 | 529 | 331.4 ± 86.6 |
| Stage 3 | 232 | 565 | 384.4 ± 65.8 |
| Stage 4 | 744 | 927 | 831.4 ± 32.6 |
| Stage 5 | 268 | 444 | 359.5 ± 45.0 |
| Stage 6 | 3 | 73 | 40.63 ± 14.0 |

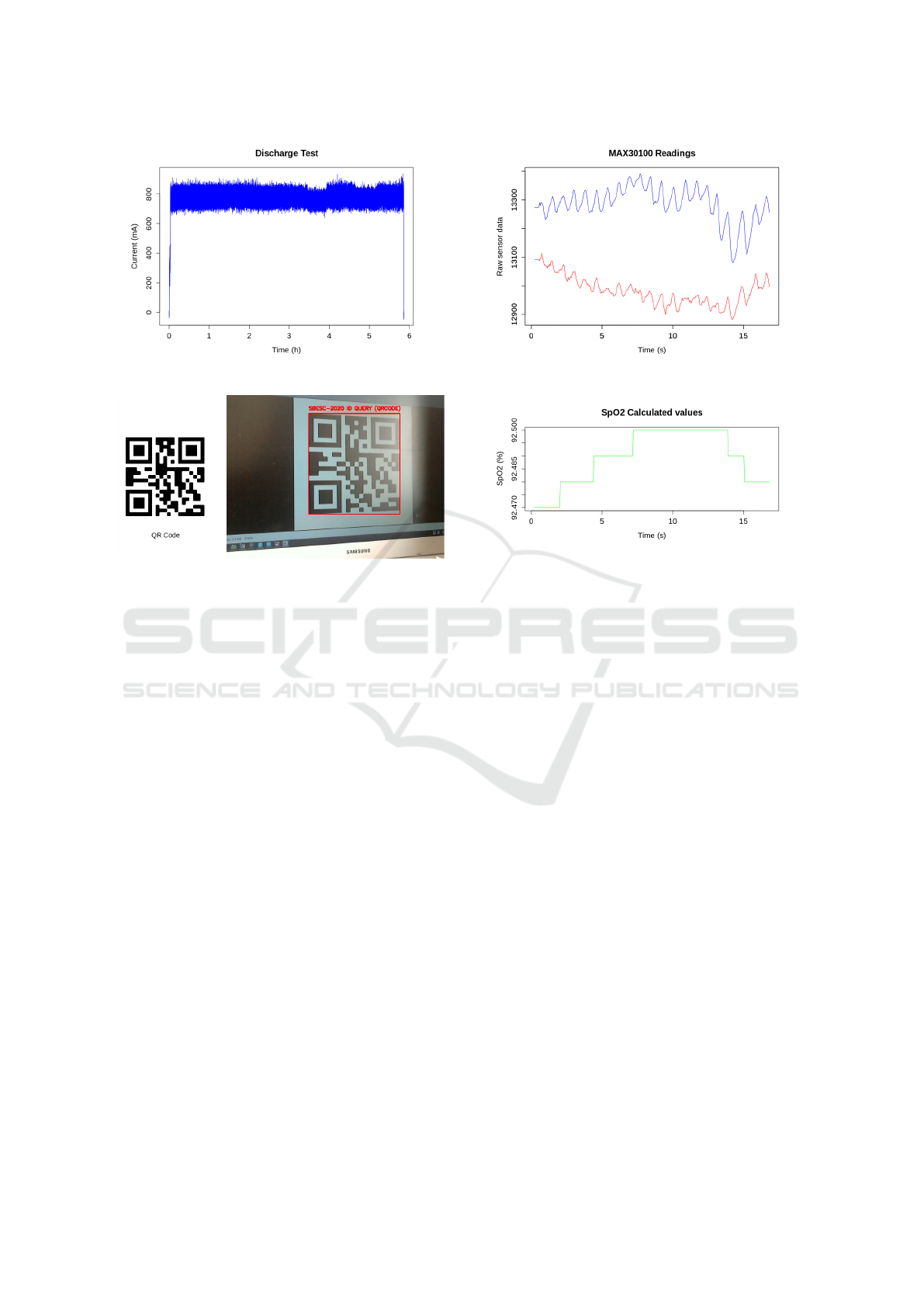

5.2 Full Battery Discharge Test

After profiling the current consumption for each pre-

sented stage, we also performed a discharge test. In

this experiment, we use a small battery as a candidate

for bearing the appliance and measure the autonomy

and average current consumption. Figure 7 displays

the measurement results for the whole test period.

We used a lightweight 4000 mAh power bank as the system battery for this test. The average current was 779.3 ± 58.7 mA during the test, with a registered peak of 939 mA. The prototype presented a steady behavior during the whole test execution, which indicates robustness for this appliance. Also, the autonomy was circa 6 hours of continuous operation. These results corroborate the profiling test results, indicating the solution’s feasibility and predictable behavior. This information also allows the selection of an adequate power source according to healthcare workers’ actual demands.

Figure 7: Discharge Test Result.

Figure 8: QR Code Acquisition Validation.
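As a rough aid for selecting a power source, autonomy can be estimated by dividing the nominal capacity by the average current. The sketch below uses hypothetical helper names, and the 12-hour shift and the margin factor are assumptions chosen only for illustration. The nominal figure for the values reported here (about 5.1 h) is a first-order estimate; the measured autonomy was circa 6 hours, so the actual capacity and conversion behavior of the power bank evidently matter, and a measured discharge curve should take precedence.

```python
def estimated_autonomy_h(capacity_mah, avg_current_ma):
    """First-order autonomy estimate: nominal capacity over average current."""
    return capacity_mah / avg_current_ma


def required_capacity_mah(shift_hours, avg_current_ma, margin=1.0):
    """Nominal capacity needed to cover a shift, with an optional safety margin."""
    return shift_hours * avg_current_ma * margin


if __name__ == "__main__":
    # Capacity and average current as reported in the discharge test.
    print(f"{estimated_autonomy_h(4000, 779.3):.2f} h")
    # Hypothetical 12-hour shift with a 20% margin.
    print(f"{required_capacity_mah(12, 779.3, margin=1.2):.0f} mAh")
```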

5.3 Functional Validation Test

Finally, we performed a functional validation on the

prototype to check its feasibility in a scenario that ap-

proaches the final user’s context. In the proposed ap-

pliance, we expect to extract two different kinds of in-

formation. We expect to recognize information from

the environment using a camera and extract informa-

tion from the user through a built-in sensor.

At first, we verified the feasibility of the external

sensor. Initially, it only works as a detector for a QR

code to retrieve other intelligent sensors’ information.

Thus, our conjecture considers the wearable camera

as an image extracting sensor. The system acquires

and sends frames to an edge computing server for pro-

cessing.

For this matter, we integrated a streamer application into the appliance. Thus, at any moment, the edge computing server can establish a connection with the wearable, acquiring a single frame and processing it to search for the QR code with an identification query. Therefore, we developed a simple application that can retrieve data from the wearable device and scan it for a QR code. Figure 8 displays the final result for this example.

Figure 9: MAX30100 Probe Readings.

Figure 10: SpO₂ Readings Obtained from the Computer-on-Module.
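The server-side steps described above (fetch one frame, look for a QR code, resolve the identification) can be sketched with pluggable callables. All names here are hypothetical, and the paper does not state which streaming or QR-decoding library was used; a decoder such as OpenCV's `QRCodeDetector` could back `decode_qr`, but that is an assumption.

```python
from typing import Callable, Optional


def identify_patient(
    fetch_frame: Callable[[], bytes],
    decode_qr: Callable[[bytes], Optional[str]],
    lookup_record: Callable[[str], Optional[dict]],
) -> Optional[dict]:
    """Fetch a single frame, decode a QR payload, and look up the matching record.

    Returns None when no QR code is found or the payload is unknown.
    """
    frame = fetch_frame()        # single frame pulled from the wearable's streamer
    payload = decode_qr(frame)   # identification string, or None/empty if absent
    if not payload:
        return None
    return lookup_record(payload)


if __name__ == "__main__":
    # Stubs with made-up data, for illustration only.
    records = {"patient-0042": {"name": "Jane Doe"}}
    print(identify_patient(lambda: b"raw-frame", lambda f: "patient-0042", records.get))
```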

After validating the external data acquisition process, we also needed to validate the acquisition of the user’s health data. In this prototype, we used the MAX30100, which provides temperature and pulse-oximetry information. In this application, the wearable calculates the blood oxygen saturation (SpO₂) from measurements of IR and red light LED pulses. The absorption of red light and IR light differs between hemoglobin with and without oxygen. Figure 9 displays the probe sensor readings sampled at a rate of around 23 samples/s for circa 15 seconds. The blue line displays the infrared pulses’ readings, while the red line presents the readings for the red light pulses.

Typically, the data can be interpreted through the AC components normalized by the DC components (Tremper and Barker, 1989). The computer-on-module interprets the readings as SpO₂. Figure 10 shows the acquired data from the computer-on-module. The pulse information can be obtained simply by counting the number of peaks in a specified time interval. The generated data can be evaluated later on the edge computing server appliance with machine learning techniques to analyze the time series.
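The two computations mentioned above, the ratio of normalized AC components and peak counting, can be sketched as follows. This is an illustration under stated assumptions, not the prototype's implementation: the linear mapping `110 - 25 * R` is a generic empirical approximation rather than a calibration for this device, the helper names are hypothetical, and the test signals are synthetic sinusoids rather than sensor data.

```python
import math


def ac_dc(signal):
    """Return (AC, DC): peak-to-peak amplitude and mean level of a signal."""
    dc = sum(signal) / len(signal)
    ac = max(signal) - min(signal)
    return ac, dc


def estimate_spo2(red, ir):
    """Estimate SpO2 (%) from the ratio of normalized AC components.

    Uses the generic approximation SpO2 = 110 - 25 * R (an assumption here);
    a real device needs its own calibration curve.
    """
    ac_red, dc_red = ac_dc(red)
    ac_ir, dc_ir = ac_dc(ir)
    ratio = (ac_red / dc_red) / (ac_ir / dc_ir)
    return max(0.0, min(100.0, 110.0 - 25.0 * ratio))


def pulse_bpm(signal, sample_rate_hz):
    """Count local maxima above the mean level and convert to beats per minute."""
    mean_level = sum(signal) / len(signal)
    peaks = sum(
        1
        for i in range(1, len(signal) - 1)
        if signal[i] > mean_level and signal[i - 1] < signal[i] >= signal[i + 1]
    )
    duration_s = len(signal) / sample_rate_hz
    return 60.0 * peaks / duration_s


def synthetic_channel(dc, ac_fraction, beat_hz=1.2, sample_rate_hz=23, seconds=15):
    """Generate a clean sinusoidal test signal (not real sensor data)."""
    n = int(sample_rate_hz * seconds)
    return [
        dc * (1.0 + ac_fraction * math.sin(2.0 * math.pi * beat_hz * i / sample_rate_hz))
        for i in range(n)
    ]


if __name__ == "__main__":
    red = synthetic_channel(1000.0, 0.01)
    ir = synthetic_channel(1500.0, 0.02)
    print(f"SpO2 ~ {estimate_spo2(red, ir):.1f}%")
    print(f"Pulse ~ {pulse_bpm(ir, 23):.0f} bpm")
```

Real readings are noisy, so a practical version would filter the signal before taking the peak-to-peak amplitude or counting peaks.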


The analyses carried out on the prototype provide functional and non-functional validation of all aspects of the proposed architecture. Through the functional analysis, we validated the main elements necessary for the prototype to integrate into the proposed appliance. In the non-functional analysis, we determined the constraints for a robust operation using the proposed system.

6 CONCLUSIONS AND FUTURE WORKS

In this text, we propose a wearable device architecture

to improve the healthcare professionals’ conditions in

the COVID-19 frontline. This device is based on a

computer-on-module, enabling the external acquisi-

tion of data and further processing using Edge AI. We

also developed a prototype to validate the functional

and non-functional aspects of the proposed solution.

Our proposed device base is a protective face

shield. This device is a part of the protective gear

used by healthcare professionals against contamina-

tion from the COVID-19. The appliance is centered in

a computer-on-module capable of acquiring data from

sensors and providing user feedback using a HUD.

We produced a prototype to validate this architec-

ture, containing the necessary elements to perform

a set of tasks. This approach acquires data from

a camera and transmits it to a web-based applica-

tion. The approach acquires data from a pulse oxime-

ter and calculates oxygen saturation, transmitting the

pre-processed data to create a time-series.

To validate this prototype and appliance, we pro-

posed three tests. At first, we create a constraint

profile from the appliance evaluating the energy con-

sumption in different scenarios. Then, we perform a

full discharge test to evaluate the autonomy and ro-

bustness of this system. Finally, we performed a set

of validation tests of the functional aspects of this sys-

tem.

At first, we evaluated the results for the profiling test. Our experiment displays a predictable behavior in each particular state. When the prototype is turned on and in an idle state, we expect a consumption of circa 360-385 mA (Table 1, Stages 3 and 5) for maintaining the necessary networking and operating system tasks. When the application is fully operational, we expect an increase of circa 450-470 mA, reaching an average of around 830 mA.

Both these constraints were observed in the dis-

charge test. This experiment displayed a stable func-

tioning, with an autonomy of around six hours using

a small battery. Finally, in these conditions, we per-

formed all desired tasks displayed in the functional

validation test.

These results reinforce the feasibility of this device, which can aid in remote healthcare in medical facilities. Future work should evaluate the usage of multiple devices in an integrated network to assess the constraints in an application closer to the real context.

ACKNOWLEDGEMENTS

The authors would like to thank CAPES, CNPq, and the Federal University of Ouro Preto for supporting this work. This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001.

REFERENCES

Alhussein, M. and Muhammad, G. (2019). Automatic voice

pathology monitoring using parallel deep models for

smart healthcare. IEEE Access, 7:46474–46479.

Amft, O. (2018). How wearable computing is shaping dig-

ital health. IEEE Pervasive Computing, 17(1):92–98.

Betancur, J. A., Villa-Espinal, J., Osorio-Gómez, G., Cuéllar, S., and Suárez, D. (2018). Research topics and implementation trends on automotive head-up display systems. International Journal on Interactive Design and Manufacturing (IJIDeM), 12(1):199–214.

Blundell, J., Scott, S., Harris, D., Huddlestone, J., and

Richards, D. (2020). With flying colours: Pilot perfor-

mance with colour-coded head-up flight symbology.

Displays, 61:101932.

Casselman, J., Onopa, N., and Khansa, L. (2017). Wearable

healthcare: Lessons from the past and a peek into the

future. Telematics and Informatics, 34(7):1011–1023.

Chen, M., Li, W., Hao, Y., Qian, Y., and Humar, I. (2018).

Edge cognitive computing based smart healthcare sys-

tem. Future Generation Computer Systems, 86:403–

411.

Chen, Z., Hu, W., Wang, J., Zhao, S., Amos, B., Wu, G., Ha,

K., Elgazzar, K., Pillai, P., Klatzky, R., et al. (2017).

An empirical study of latency in an emerging class

of edge computing applications for wearable cognitive

assistance. In Proceedings of the Second ACM/IEEE

Symposium on Edge Computing, pages 1–14.

Cooney, N. J., Joshi, K. P., and Minhas, A. S. (2018). A

wearable internet of things based system with edge

computing for real-time human activity tracking. In

2018 5th Asia-Pacific World Congress on Computer

Science and Engineering (APWC on CSE), pages 26–

31. IEEE.

Gerup, J., Soerensen, C. B., and Dieckmann, P. (2020).

Augmented reality and mixed reality for healthcare

education beyond surgery: an integrative review. Int J

Med Educ, 11:1–18.


Gia, T. N., Sarker, V. K., Tcarenko, I., Rahmani, A. M.,

Westerlund, T., Liljeberg, P., and Tenhunen, H.

(2018). Energy efficient wearable sensor node for iot-

based fall detection systems. Microprocessors and Mi-

crosystems, 56:34–46.

Grubert, J., Langlotz, T., Zollmann, S., and Regen-

brecht, H. (2016). Towards pervasive augmented re-

ality: Context-awareness in augmented reality. IEEE

transactions on visualization and computer graphics,

23(6):1706–1724.

Hong, S., Gu, Y., Seo, J. K., Wang, J., Liu, P., Meng, Y. S.,

Xu, S., and Chen, R. (2019). Wearable thermoelectrics

for personalized thermoregulation. Science advances,

5(5):eaaw0536.

Jia, G., Han, G., Xie, H., and Du, J. (2018). Hybrid-lru

caching for optimizing data storage and retrieval in

edge computing-based wearable sensors. IEEE Inter-

net of Things Journal, 6(2):1342–1351.

Kim, J., Gutruf, P., Chiarelli, A. M., Heo, S. Y., Cho, K.,

Xie, Z., Banks, A., Han, S., Jang, K.-I., Lee, J. W.,

et al. (2017). Miniaturized battery-free wireless sys-

tems for wearable pulse oximetry. Advanced func-

tional materials, 27(1):1604373.

Kliger, A. S. and Silberzweig, J. (2020). Mitigating risk of

covid-19 in dialysis facilities. Clinical Journal of the

American Society of Nephrology, 15(5):707–709.

Lin, X., Li, J., Wu, J., Liang, H., and Yang, W. (2019). Mak-

ing knowledge tradable in edge-ai enabled iot: A con-

sortium blockchain-based efficient and incentive ap-

proach. IEEE Transactions on Industrial Informatics,

15(12):6367–6378.

Liounakos, J. I., Urakov, T., and Wang, M. Y. (2020).

Head-up display assisted endoscopic lumbar discec-

tomy—a technical note. The International Journal

of Medical Robotics and Computer Assisted Surgery,

16(3):e2089.

Manogaran, G., Shakeel, P. M., Fouad, H., Nam, Y., Baskar,

S., Chilamkurti, N., and Sundarasekar, R. (2019).

Wearable iot smart-log patch: An edge computing-

based bayesian deep learning network system for

multi access physical monitoring system. Sensors,

19(13):3030.

World Health Organization (2020). Advice on the use of masks in the context of covid-19: interim guidance, 6 april 2020. Technical report, World Health Organization.

Prachand, V. N., Milner, R., Angelos, P., Posner, M. C.,

Fung, J. J., Agrawal, N., Jeevanandam, V., and

Matthews, J. B. (2020). Medically-necessary, time-

sensitive procedures: A scoring system to ethically

and efficiently manage resource scarcity and provider

risk during the covid-19 pandemic. Journal of the

American College of Surgeons.

Ren, J., Guo, H., Xu, C., and Zhang, Y. (2017). Serving at

the edge: A scalable iot architecture based on trans-

parent computing. IEEE Network, 31(5):96–105.

Rumsey, A. and Le Dantec, C. A. (2019). Clearing the

smoke: The changing identities and work in firefight-

ers. In Proceedings of the 2019 on Designing Interac-

tive Systems Conference, pages 581–592.

Salkic, S., Ustundag, B. C., Uzunovic, T., and Golubovic,

E. (2019). Edge computing framework for wearable

sensor-based human activity recognition. In Interna-

tional Symposium on Innovative and Interdisciplinary

Applications of Advanced Technologies, pages 376–

387. Springer.

Silva, M. C., Amorim, V. J., Ribeiro, S. P., and Oliveira,

R. A. (2019). Field research cooperative wearable sys-

tems: Challenges in requirements, design and valida-

tion. Sensors, 19(20):4417.

Sörös, G., Semmler, S., Humair, L., and Hilliges, O. (2015). Fast blur removal for wearable qr code scanners. In Proceedings of the 2015 ACM International Symposium on Wearable Computers, pages 117–124.

Stanton, N. A., Roberts, A. P., Plant, K. L., Allison, C. K.,

and Harvey, C. (2018). Head-up displays assist heli-

copter pilots landing in degraded visual environments.

Theoretical issues in ergonomics science, 19(5):513–

529.

Surve, A. R. and Ghorpade, V. R. (2017). Pervasive context-

aware computing survey of context-aware ubiquitous

middleware systems. International Journal of Engi-

neering10. 1.

Tremper, K. K. and Barker, S. J. (1989). Pulse oximetry.

Anesthesiology: The Journal of the American Society

of Anesthesiologists, 70(1):98–108.

Vippalapalli, V. and Ananthula, S. (2016). Internet of things

(iot) based smart health care system. In 2016 Interna-

tional Conference on Signal Processing, Communica-

tion, Power and Embedded System (SCOPES), pages

1229–1233. IEEE.

Wang, W., Zhu, X., Chan, K., and Tsang, P. (2018). Digi-

tal holographic system for automotive augmented re-

ality head-up-display. In 2018 IEEE 27th Interna-

tional Symposium on Industrial Electronics (ISIE),

pages 1327–1330. IEEE.

Weintraub, D. J., Haines, R. F., and Randle, R. J. (1985).

Head-up display (hud) utility, ii: Runway to hud tran-

sitions monitoring eye focus and decision times. In

Proceedings of the Human Factors Society Annual

Meeting, volume 29, pages 615–619. SAGE Publica-

tions Sage CA: Los Angeles, CA.

Zhang, Y., Gravina, R., Lu, H., Villari, M., and Fortino,

G. (2018). Pea: Parallel electrocardiogram-based au-

thentication for smart healthcare systems. Journal of

Network and Computer Applications, 117:10–16.

Zhao, S., Wang, J., Leng, H., Liu, Y., Liu, H., Siewiorek,

D. P., Satyanarayanan, M., and Klatzky, R. L. (2020).

Edge-based wearable systems for cognitive assis-

tance: Design challenges, solution framework, and

application to emergency healthcare.
