The Perception Modification Concept to Free the Path of An Automated Vehicle Remotely

Johannes Feiler and Frank Diermeyer

Institute of Automotive Technology, Technical University of Munich, Boltzmannstr. 15, Garching b., München, Germany

Keywords: Teleoperation, Remote Assistance, Indirect Control, Perception Correction, Object Detection, Manipulation.

Abstract: In inner-city traffic, automated vehicles will face situations in which they leave their operational design domain. Such an event may lead to an undesired vehicle standstill, which prevents the vehicle from continuing independently to its desired destination. The undesired standstill can be caused by uncertainties in object detection, by environmental circumstances such as weather, by infrastructural changes or by complex scenarios in general. Teleoperation is one approach to support the vehicle in such situations. However, it is not always clear which teleoperation concept is appropriate. In this paper, a teleoperation concept and its implementation to free the path of an automated vehicle are presented. The situation to be resolved is that a detection prevents the automated vehicle from proceeding, although the detection is either a false positive or an indeterminate object that can be ignored. The teleoperator corrects the object list and the occupancy grid map and thereby enables the automated vehicle to continue on its path. Preliminary tests show that the teleoperation concept enables teleoperators to resolve the respective scenarios appropriately.

1 INTRODUCTION

SAE J3016 (J3016, 2018) subdivides vehicle automation into six levels of driving automation, ranging from level 0 ‘No Driving Automation’ to level 5 ‘Full Driving Automation’. In level 5 automation, no driver needs to be present, either for supervision or as a fallback system. Furthermore, the automation system is not limited to an operational design domain. In contrast, level 4 systems are limited to a specific operational design domain, which can be restricted environmentally, geographically or by time of day, among others (J3016, 2018). Therefore, level 4 systems are expected to be launched before level 5 systems. Whenever a level 4 automation system leaves its operational design domain, it has to reach a safe state, which for inner-city vehicles in many cases means a standstill. As a consequence, passengers would be stranded. This should be avoided, which motivates enabling the automated vehicle (AV) to continue driving.

Teleoperation is a possible solution. The vehicle requests help from a control center via the mobile network (Feiler et al., 2020). A teleoperator connects to the vehicle and resolves the problem. This technology comes with several challenges.

First, the teleoperator has to be aware of the situation that led to the help request. Camera streams and further vehicle sensor information are visualized for the teleoperator. Progress has been made in improving video streaming (Gnatzig et al., 2013; Liu et al., 2016; Kang et al., 2018; Tang et al., 2013) and in increasing teleoperator immersion (Bout et al., 2017; Georg and Diermeyer, 2019; Tang Chen, 2014; Hosseini, 2018).

Second, the teleoperator has to resolve the situation reliably and safely. Different methods of interacting with the vehicle are possible, ranging from low-level control commands to high-level teleoperation concepts. A low-level control concept is direct control, in which the teleoperator sets the desired velocity, the desired steering wheel angle and the desired gear. Direct control has been implemented several times in research (Gnatzig et al., 2013; Liu et al., 2016; Ross et al., 2008). Progress has been made in analyzing and reducing system latency (Ross et al., 2008; Blissing et al., 2016; Georg et al., 2020) and in designing teleoperation assistance systems (Hosseini, 2018; Chucholowski, 2015). However, teleoperators are subjected to a high workload (Georg et al., 2018; Liu et al., 2016). As soon as a teleoperator steers a vehicle with low-level control commands, the teleoperator is responsible for avoiding accidents during the vehicle’s motion. Because of the remote location and the system latency, the imposed mental workload can overwhelm the teleoperator.

Feiler, J. and Diermeyer, F.

The Perception Modification Concept to Free the Path of An Automated Vehicle Remotely.

DOI: 10.5220/0010433304050412

In Proceedings of the 7th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS 2021), pages 405-412

ISBN: 978-989-758-513-5

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Therefore, the motivation is to decrease the teleoperator’s mental workload. In general, there are two approaches to achieve this: (1) improving the data representation (better videos and visualizations, head-mounted displays, sensory feedback) and (2) simplifying the control tasks. Because several improvements regarding (1) have already been made, this article addresses the simplification of control.

In summary, the motivation is that an AV may be stranded and that existing teleoperation solutions place a high workload on teleoperators. The objective of this paper is to develop a teleoperation concept that enables the AV to proceed and that places a lower workload on teleoperators than direct control. Section 2 gives an overview of high-level teleoperation concepts, their application and related architectural considerations. Section 3 states the identified research gap and the requirements to be met by the concept. Section 4 outlines the concept and Section 5 its exemplary implementation. Section 6 lists the conducted tests and Section 7 discusses the concept and its implementation.

2 RELATED WORK

This section gives an overview of high-level teleoperation concepts for road vehicles, the state of the art of a related perception modification implementation, the common AV architecture and some of its challenges.

2.1 High-level Teleoperation Concepts for Road Vehicles

High-level teleoperation concepts are control concepts that transfer abstract control actions or signals to the vehicle instead of direct control signals. In other publications, they are called indirect control concepts (Gnatzig et al., 2013; Tikanmäki et al., 2017).

An example of a high-level teleoperation concept is the trajectory control of Gnatzig (2015, p. 53). The control signal takes the form of trajectories, so the control interface is at the guidance level according to Donges (1982). The teleoperator sets trajectories and supervises the vehicle’s motion. Study results showed that, under a video streaming latency of 600 milliseconds, teleoperators steered the vehicle more stably than with direct control. However, the teleoperators’ workload was not measured. Furthermore, the trajectory control concept used only the vehicle’s control algorithms, not its perception or planning capabilities. It is therefore suggested that the teleoperator’s workload may still be high.

A similar teleoperation concept was patented by Biehler et al. (2017). Biehler et al. suggest that a teleoperator proposes actions in the form of paths or trajectories. The trajectory is transmitted to the vehicle, which follows it either under supervision or autonomously. However, it is questionable why the vehicle should be able to proceed autonomously after the teleoperator’s actions when it could not perform the task autonomously just a moment earlier. Trajectory planners usually search the complete free space for a drivable path. If the algorithm finds no drivable path although one exists, the problem probably lies in perception, not in planning. Therefore, the concept of teleoperator-provided actions combined with onboard responsibility is questionable.

A high-level teleoperation concept could also let the teleoperator confirm, modify or reject behavior suggestions of the vehicle. Similar ideas for safely controlling an AV remotely have been patented, notably teleoperator confirmations, modifications or rejections of AV behavior suggestions (Fairfield et al., 2019; Lockwood et al., 2019) and the marking of non-passable corridors (Levinson et al., 2017). However, no references to implementations or evaluations could be found.

Ideas and concepts for high-level teleoperation therefore already exist. They have the potential to enable an AV to proceed while keeping the teleoperator’s workload low. However, no implementation or evaluation of workload can be found. Nevertheless, it is assumed that high-level teleoperation concepts can decrease the teleoperators’ workload by simplifying the control tasks. To make statements about the feasibility and the workload of high-level teleoperation concepts, they have to be implemented, compared and evaluated.

2.2 Object Connotation Modification in Shared Autonomy

Pitzer et al. designed and tested a shared autonomy concept between a handling robot and a human operator (Pitzer et al., 2011). The robot’s task is to pick up and relocate selected objects. The human operator can support the robot’s perception if the robot cannot find a certain object. The operator selects the desired object on a monitor, and the robot then uses this information to pick up the specified object without further user help. Like this paper, Pitzer et al. pursued a concept in which the robot’s perception is assisted. However, the context and the implementation differ significantly.

An Uber Technologies patent (Kroop et al., 2019) considers an idea similar to the one developed in this paper. According to the patent, the self-driving vehicle identifies indeterminate objects, sends the encoded sensor data to a back end and receives the information required to resolve the current situation. However, no publication containing an implementation can be found. Furthermore, the patent says little about technical challenges. For example, perception is commonly represented in different ways, but the handling of representations other than objects is not covered.

2.3 Software Architecture of Automated Vehicles

Perception modification requires an interface to the perception output, which has to be provided by the automation architecture. For example, the sense-plan-act architecture allows such an interface, whereas an end-to-end architecture generally does not. The common architectures are therefore introduced to establish the correct context.

Currently, two approaches are widely used to define the software architecture for automated driving: first, end-to-end automation (Xu et al., 2016; Xiao et al., 2019; Bojarski et al., 2016; Pan et al., 2017; Zhang, 2019), and second, the modular sense-plan-act architecture (Pendleton et al., 2017). Judging by the number of publications, the modular sense-plan-act architecture is more common in research than end-to-end automation. The sense-plan-act architecture is divided into submodules, each of which is currently a research topic in its own right. One of the challenging parts is the perception module. The goal of perception is to provide a contextual representation of the vehicle’s surroundings. This is usually done by locating relevant objects and understanding their semantic meaning. It should also be mentioned that ETSI has already published a technical report on the Collective Perception Service, which addresses perception messages for V2X communication (ETSI TR 103 562, 2019).

2.4 Preventing False Negatives Leads to False Positives

False positives remain a challenge for perception, even though there are algorithms that reduce their occurrence. For example, Gies et al. (2018) track and fuse objects from different detection algorithms while considering physical, module and digital map constraints in order to reduce false positives and uncertain states. Furthermore, Durand et al. (2019) introduced a confidence score based on the number of sensors covering a detection, the detection’s lifetime and the respective sensor failure history. Moreover, Kamann et al. (2018) developed a model of radar wave propagation in order to remove reflections from the raw sensor output that would lead to false positive detections. These algorithms, as well as many others, reduce the number of false positives (Jo et al., 2017; Bauer et al., 2019; Mita et al., 2019). However, some false positive detections persist (Jo et al., 2017; Bauer et al., 2019).

In general, one premise for AVs is that relevant objects must not be overlooked. An overlooked object is called a false negative. However, preventing false negatives increases the occurrence of false positives, i.e. detections without corresponding real objects. At present, the dilemma in object detection is that the numbers of false negatives and false positives cannot be minimized simultaneously. The reason is that a confidence score threshold has to be set, which results in the following trade-off: the lower the threshold, the fewer objects are overlooked, but the more false positives can occur. Conversely, the higher the threshold, the fewer false positives occur, but the more objects are overlooked. Since overlooked objects might lead to a crash, false negatives are unacceptable for an AV.
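The threshold trade-off can be made concrete with a minimal C++ sketch. C++ is used for all examples here because the implementation in Section 5 is written in C++. The candidate scores and ground-truth flags are hypothetical inputs, and `Candidate`, `ErrorCounts` and `countErrors` are illustrative names that do not belong to any real detector. The function counts real objects discarded below the threshold (false negatives) and non-objects reported at or above it (false positives). It assumes scores normalized to [0, 1].

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One scored detection candidate (hypothetical layout for illustration).
struct Candidate {
    double score;       // confidence score assigned by the detector, assumed in [0, 1]
    bool isRealObject;  // ground truth: does a physical object exist here?
};

struct ErrorCounts {
    std::size_t falseNegatives = 0;  // real objects that are not reported
    std::size_t falsePositives = 0;  // reported detections without a real object
};

// Counts both error types if every candidate with score >= threshold is reported.
ErrorCounts countErrors(const std::vector<Candidate>& candidates, double threshold) {
    assert(threshold >= 0.0 && threshold <= 1.0);
    ErrorCounts counts;
    for (const Candidate& c : candidates) {
        const bool reported = c.score >= threshold;
        if (c.isRealObject && !reported) {
            ++counts.falseNegatives;
        } else if (!c.isRealObject && reported) {
            ++counts.falsePositives;
        }
    }
    return counts;
}
```

Take four candidates: two real objects scored 0.9 and 0.4, and two non-objects scored 0.6 and 0.2. A threshold of 0.3 yields no false negative but one false positive. A threshold of 0.7 yields one false negative and no false positive.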

Therefore, false positive detections will continue to occur, and they can prevent the AV from reaching its destination.

2.5 Indeterminate Objects

Indeterminate objects are detections that are insufficiently interpreted or incorrectly classified by the detection algorithm. Such objects might be unknown to the detection algorithm when the AV is launched. Possible reasons are missing labeled data during training or vehicle operation in an unforeseen domain. The detection algorithm therefore interprets such detections inappropriately. Examples could be debris, lost cargo, packaging, branchlets or steaming gully covers. Furthermore, misinterpreted lane markings or road signs might be an issue. Some of these detections would not hinder a human driver, so in such cases the AV could proceed as well.

3 OBJECTIVE AND REQUIREMENTS

The following research gap has been identified from the related work: it has not yet been investigated whether a teleoperator can correct the AV perception so that the AV can continue to drive. It is suggested that such a teleoperation concept places less workload on teleoperators than direct control. Therefore, the aim of this article is to present the implementation of a high-level teleoperation concept that enables the teleoperator to correct the AV perception, and to demonstrate its feasibility. The high-level teleoperation concept should meet the following requirements.

First, it has to solve a specific scenario: the AV trajectory planner does not find a drivable path due to a mistaken obstructive detection. The mistaken obstructive detection can be a false positive or an indeterminate object, as described in Sections 2.4 and 2.5.

The second requirement is that the teleoperator should intervene in the system only minimally. The underlying assumption is that the workload is low if the teleoperator has little to do.

The third requirement is that the concept has to be safe to use. The teleoperator has to be able to distinguish between scenarios in which the concept can be applied and those in which it cannot. Moreover, the teleoperator has to comprehend the consequences of the perception correction. Finally, the teleoperator has to be able to withdraw the perception correction if something unexpected happens.

4 THE PROPOSED CONCEPT

In consideration of these requirements, a concept is proposed that enables the teleoperator to mark areas as drivable, so that the AV is able to continue its drive.

4.1 Integration into an Automated Vehicle System Architecture

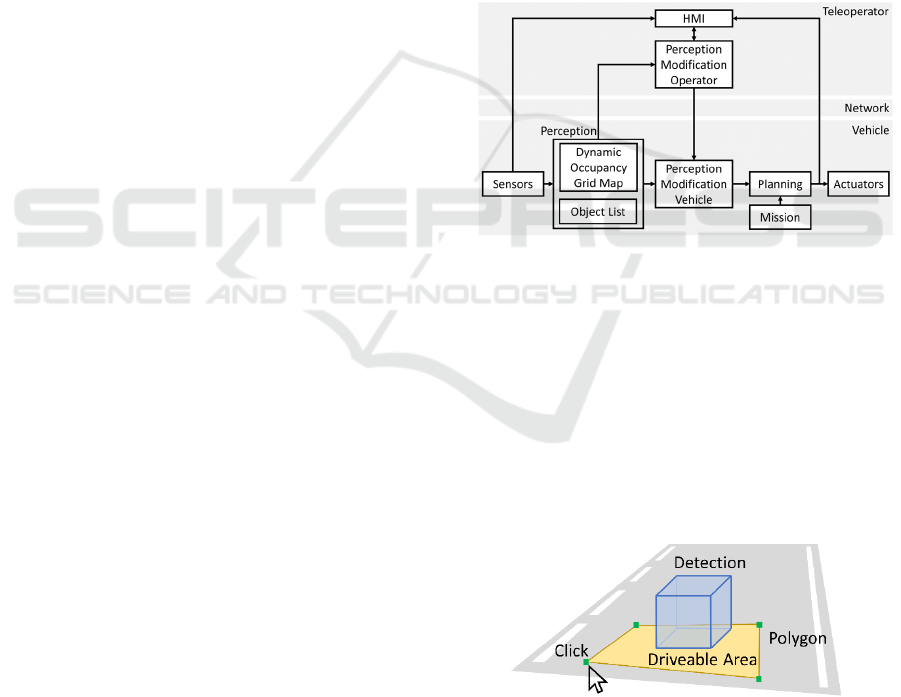

The goal of the presented method is to support the AV in situations where the vehicle’s trajectory planner cannot find a feasible trajectory due to a mistaken obstructing detection. The automation architecture at hand is the sense-plan-act architecture depicted at the bottom of Figure 1. The interface is placed between the output of the perception module and the input of the planning module. The module at this interface is called perception modification, as visualized in Figure 1.

On the vehicle, the data routing in the sense-plan-act architecture is changed. Under normal vehicle operation, the output of perception is the direct input for planning. In perception modification mode, however, the perception modification vehicle module is placed between the perception and planning modules. Consequently, the input to the planning module is the possibly modified output of the perception modification module. As is common, the perception is represented by a dynamic occupancy grid map and an object list (Pendleton et al., 2017; Gies et al., 2018).

Figure 1: Architecture of the perception modification concept.
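The changed data routing described above can be sketched as a switch in front of the planning module. This is a minimal C++ sketch under assumptions. `DetectedObject`, `PerceptionOutput`, `RoutingMode` and `routeToPlanning` are hypothetical names. The plain structs stand in for the ROS message types of the actual implementation, which the paper does not describe.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical, simplified perception output: object list plus occupancy grid.
struct DetectedObject {
    double x = 0.0;        // position in the vehicle frame [m]
    double y = 0.0;
    bool ignored = false;  // set by the perception modification
};

struct PerceptionOutput {
    std::vector<DetectedObject> objects;
    std::vector<bool> occupiedCells;  // row-major grid, true = occupied
};

enum class RoutingMode { Normal, PerceptionModification };

using ModificationStep = std::function<PerceptionOutput(const PerceptionOutput&)>;

// Returns the input for the planning module.
// Normal operation: the perception output is passed on unchanged.
// Perception modification mode: the output of the modification step is passed on.
PerceptionOutput routeToPlanning(const PerceptionOutput& perception,
                                 RoutingMode mode,
                                 const ModificationStep& modify) {
    if (mode == RoutingMode::PerceptionModification) {
        assert(modify && "perception modification mode needs a modification step");
        return modify(perception);
    }
    return perception;
}
```

The modification step is passed in as a callable, so the planner cannot tell whether its input was modified. This mirrors the concept, in which planning and acting remain entirely on the vehicle side.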

4.2 The Perception Modification Module

The teleoperator interacts with a human-machine interface (HMI). The HMI has two essential functions. First, it provides the teleoperator with the required information about the current situation. Second, it accepts the teleoperator’s input regarding the drivable area. Figure 2 depicts these functions.

Figure 2: Schematic depiction of the teleoperator’s HMI. Detections are illustrated as 3D objects with their actual dimensions. The drivable area can be marked.

The required information about the current situation is:

- Video streams
- Objects
- Dynamic occupancy grid map
- Mission or desired behavior
- Current trajectory

The perception modification module must have at least these functions:

- Take the drivable area as an input
- Recognize detections within that area
- Tag those detections as ‘ignored’
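The three functions can be sketched for both perception representations. In this minimal C++ sketch, the drivable area is a polygon on the ground plane. An object counts as inside when its center lies in the polygon, and a grid cell counts as inside when its cell center does. These inclusion rules, the even-odd point-in-polygon test and all type and function names are assumptions for illustration. Objects inside the area are tagged as ignored, and grid cells inside it are set to free.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point2D {
    double x;
    double y;
};

// Hypothetical object-list entry.
struct TrackedObject {
    Point2D center{0.0, 0.0};  // object center on the ground plane [m]
    bool ignored = false;      // true = not passed on as an obstacle
};

// Hypothetical occupancy grid; cell (row, col) starts at
// (originX + col * resolution, originY + row * resolution).
struct OccupancyGrid {
    double originX = 0.0;
    double originY = 0.0;
    double resolution = 1.0;  // cell edge length [m]
    std::size_t rows = 0;
    std::size_t cols = 0;
    std::vector<bool> occupied;  // row-major, size rows * cols
};

// Even-odd (ray casting) point-in-polygon test.
bool insidePolygon(const Point2D& p, const std::vector<Point2D>& polygon) {
    bool inside = false;
    const std::size_t n = polygon.size();
    for (std::size_t i = 0, j = n - 1; i < n; j = i++) {
        const Point2D& a = polygon[i];
        const Point2D& b = polygon[j];
        // Does edge (a, b) cross the horizontal ray starting at p towards +x?
        if ((a.y > p.y) != (b.y > p.y) &&
            p.x < (b.x - a.x) * (p.y - a.y) / (b.y - a.y) + a.x) {
            inside = !inside;
        }
    }
    return inside;
}

// Tags objects inside the drivable area as ignored and sets grid cells inside
// it to free. Returns the number of newly tagged objects.
std::size_t applyDrivableArea(const std::vector<Point2D>& area,
                              std::vector<TrackedObject>& objects,
                              OccupancyGrid& grid) {
    assert(area.size() >= 3 && "a drivable area needs at least three vertices");
    assert(grid.occupied.size() == grid.rows * grid.cols);
    std::size_t tagged = 0;
    for (TrackedObject& obj : objects) {
        if (!obj.ignored && insidePolygon(obj.center, area)) {
            obj.ignored = true;
            ++tagged;
        }
    }
    for (std::size_t r = 0; r < grid.rows; ++r) {
        for (std::size_t c = 0; c < grid.cols; ++c) {
            const Point2D cellCenter{
                grid.originX + (static_cast<double>(c) + 0.5) * grid.resolution,
                grid.originY + (static_cast<double>(r) + 0.5) * grid.resolution};
            if (insidePolygon(cellCenter, area)) {
                grid.occupied[r * grid.cols + c] = false;
            }
        }
    }
    return tagged;
}
```

Testing only the object center is the simplest choice. A more conservative variant could require the whole object footprint to lie inside the marked area.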

The teleoperator is responsible for supervising the marked area. As soon as the marked area is no longer drivable, the teleoperator has to withdraw it. To give the teleoperator enough time to do so, the maximum speed of the AV should be restricted while it drives based on the modified perception data.

The teleoperator has to comprehend the consequences of the perception modification. Therefore, two modes within the perception modification are proposed: planning mode and driving mode. In planning mode, the consequences of the perception correction are only visualized, not executed. The visualization includes the affected detections and the vehicle’s planned trajectory. In driving mode, the vehicle acts according to the modified perception.
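The two modes and the speed restriction can be combined as sketched below. The paper does not specify how the maximum speed is chosen. As an assumption, the cap here is the largest speed v for which two distances together fit into the marked length d. The first is the distance travelled during a total delay t (teleoperator reaction plus network latency). The second is the braking distance at a constant deceleration a. The condition is v·t + v²/(2a) ≤ d. Holding the vehicle at rest in planning mode is likewise a simplification, and all names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

enum class ModificationMode { Planning, Driving };

// Largest speed v [m/s] with v * t + v^2 / (2 * a) <= d (assumed criterion):
// after a total delay t the vehicle still stops within distance d when
// braking with constant deceleration a.
double speedCap(double distance, double delay, double deceleration) {
    assert(distance >= 0.0 && delay >= 0.0 && deceleration > 0.0);
    const double a = deceleration;
    // Positive root of v^2 + 2 * a * t * v - 2 * a * d = 0.
    return a * (-delay + std::sqrt(delay * delay + 2.0 * distance / a));
}

// Speed commanded while the perception modification is active.
// Planning mode: the new trajectory is only visualized, the vehicle stays at rest.
// Driving mode: the planned speed is followed but limited to the cap.
double commandedSpeed(ModificationMode mode, double plannedSpeed, double cap) {
    if (mode == ModificationMode::Planning) {
        return 0.0;
    }
    return std::min(plannedSpeed, cap);
}
```

For example, a 10 m marked area, a total delay of 1 s and a deceleration of 2 m/s² give a cap of about 4.6 m/s.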

4.3 Shared Responsibility

The teleoperator is responsible only for the marked area. The remaining area is not affected by the perception modification and is still handled by the vehicle. Furthermore, the plan and act parts of the sense-plan-act architecture are carried out by the vehicle completely independently. The teleoperator does not intervene in any module other than the perception modification module.

An additional responsibility of the teleoperator is to identify situations that cannot be resolved with the perception modification concept. Examples are objects that must not be ignored, and occlusions or blind spots that obstruct the teleoperator’s view.

5 IMPLEMENTATION

The following section describes the experimental implementation of the perception modification concept in a simulation environment.

5.1 System Setup

The experimental setup aims to resemble an AV and its behavior in a situation where a false positive blocks all possible trajectories of the vehicle. A simulator provides sensor data, ego vehicle motion and the environment. The LGSVL simulator was used for this purpose (Rong et al., 2020). The software modules under consideration were implemented in C++ using the ROS Melodic framework. Both a grid map representation and an object list were implemented and presented to demonstrate compatibility with the system architecture. The implemented setup is based on the architecture depicted in Figure 1.

5.2 The Algorithms

The HMI module and the perception modification module are the central modules under consideration here.

Figure 3 depicts the HMI, which is mainly based on Georg and Diermeyer (2019). It visualizes the vehicle’s position on a 3D plane and projects the camera feeds onto a sphere that moves along with the vehicle.

Figure 3: The teleoperator’s view in the HMI module. Detected objects (blue), lidar reflections (red points), the occupancy grid map (red rectangles) and the current trajectory (white lines) are visualized as 3D objects.

The HMI was extended for the perception modification. Whenever the teleoperator clicks within the visualization, the 3D position of the click is processed by the perception modification module. Keyboard presses are treated similarly. With these features, the teleoperator creates polygons on the ground, which the perception modification module interprets as the drivable area. Keyboard presses switch the status of the perception modification module from ‘planning’ to ‘driving’ and back. In planning mode, the teleoperator creates polygons and sees their potential effect on the vehicle behavior. The vehicle’s newly planned path is visualized but not executed. The vehicle drives along the planned path only after the teleoperator confirms it by switching into ‘driving’ mode.
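The HMI-side handling of clicks and key presses can be sketched as a small state machine. This minimal C++ sketch is not the authors’ implementation. Clicks add polygon vertices in planning mode, one key toggles between ‘planning’ and ‘driving’, and a second key withdraws the marked area. Two rules are assumptions: clicks are ignored while driving, and at least three vertices are required before switching to driving mode. `DrivableAreaEditor` and its methods are hypothetical names.

```cpp
#include <cassert>
#include <vector>

struct GroundPoint {
    double x;  // click position projected onto the ground plane [m]
    double y;
};

enum class Mode { Planning, Driving };

// Hypothetical HMI-side state of the perception modification.
class DrivableAreaEditor {
public:
    // A click on the ground adds a polygon vertex (assumed: planning mode only,
    // so the area cannot change while the vehicle drives on it).
    void onClick(const GroundPoint& p) {
        if (mode_ == Mode::Planning) {
            vertices_.push_back(p);
        }
    }

    // Toggle key: planning -> driving (only with at least three vertices),
    // driving -> planning.
    void onToggleModeKey() {
        if (mode_ == Mode::Driving) {
            mode_ = Mode::Planning;
        } else if (vertices_.size() >= 3) {
            mode_ = Mode::Driving;
        }
    }

    // Withdraw key: discard the marked area and stop using the modified perception.
    void onWithdrawKey() {
        vertices_.clear();
        mode_ = Mode::Planning;
    }

    Mode mode() const { return mode_; }
    const std::vector<GroundPoint>& polygon() const { return vertices_; }

private:
    Mode mode_ = Mode::Planning;
    std::vector<GroundPoint> vertices_;
};
```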

The grid map module on the vehicle side aims to resemble a dynamic occupancy grid map similar to published grid maps (Nuss et al., 2016). For the sake of simplicity, the grid map at hand is constructed from single-layer lidar information and uses the ANYbotics grid map library (Fankhauser and Hutter, 2016).
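In its simplest form, a grid map built from single-layer lidar data marks each cell that contains a reflection as occupied. The C++ sketch below does only that. It uses a plain `std::vector<bool>` instead of the ANYbotics grid map library and omits free-space ray casting and cell dynamics. The scan format (range and bearing per beam), the vehicle-centered grid layout and all names are assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One beam of a single-layer lidar scan in the vehicle frame (hypothetical format).
struct LidarReturn {
    double range;    // distance to the reflection [m]
    double bearing;  // angle relative to the vehicle's x axis [rad]
};

// Square grid centered on the vehicle; cells are stored row-major.
struct SimpleGrid {
    double resolution;  // cell edge length [m]
    std::size_t size;   // number of cells per side
    std::vector<bool> occupied;

    SimpleGrid(double res, std::size_t n) : resolution(res), size(n), occupied(n * n, false) {
        assert(res > 0.0 && n > 0);
    }

    // Maps a point in the vehicle frame to a cell index; returns false if outside.
    bool toIndex(double x, double y, std::size_t& index) const {
        const double half = 0.5 * resolution * static_cast<double>(size);
        const double col = std::floor((x + half) / resolution);
        const double row = std::floor((y + half) / resolution);
        const double n = static_cast<double>(size);
        if (col < 0.0 || row < 0.0 || col >= n || row >= n) {
            return false;
        }
        index = static_cast<std::size_t>(row) * size + static_cast<std::size_t>(col);
        return true;
    }
};

// Marks every cell that contains a lidar reflection as occupied.
void insertScan(SimpleGrid& grid, const std::vector<LidarReturn>& scan) {
    for (const LidarReturn& beam : scan) {
        const double x = beam.range * std::cos(beam.bearing);
        const double y = beam.range * std::sin(beam.bearing);
        std::size_t index = 0;
        if (grid.toIndex(x, y, index)) {
            grid.occupied[index] = true;
        }
    }
}
```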

The detection module is also based on the reflections provided by the single-layer lidar sensor. It provides 3D objects described by position, dimensions and velocity.

The planning module depicted in Figure 1 is kept comparatively elementary. A module creates a straight path from the current position with a fixed maximum velocity. A collision detection module reacts to occupied cells or hindering objects by reducing the velocities of the straight path’s velocity profile. Therefore, the vehicle reacts to hindering objects by coming to a standstill.
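The elementary planner can be sketched as a straight path with a constant velocity profile. The collision check sets that profile to zero shortly before the first blocked path point. This minimal C++ sketch rests on several assumptions: the sampling step, the stop margin and the abrupt (rather than smooth) velocity reduction. The predicate `isBlocked` is also an assumption; it stands in for the check against occupied cells and non-ignored objects.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct PathPoint {
    double x;         // position in the vehicle frame [m]
    double y;
    double velocity;  // target velocity [m/s]
};

// Straight path along the vehicle's x axis with a fixed maximum velocity.
std::vector<PathPoint> straightPath(double length, double step, double maxVelocity) {
    assert(length > 0.0 && step > 0.0);
    const std::size_t count = static_cast<std::size_t>(length / step) + 1;
    std::vector<PathPoint> path;
    path.reserve(count);
    for (std::size_t i = 0; i < count; ++i) {
        path.push_back({static_cast<double>(i) * step, 0.0, maxVelocity});
    }
    return path;
}

// Collision check: from `stopMargin` metres before the first blocked path point
// onwards, the velocity is set to zero, so the vehicle stops in front of it.
void applyCollisionCheck(std::vector<PathPoint>& path,
                         const std::function<bool(double, double)>& isBlocked,
                         double stopMargin) {
    for (std::size_t i = 0; i < path.size(); ++i) {
        if (isBlocked(path[i].x, path[i].y)) {
            const double stopAt = path[i].x - stopMargin;
            for (PathPoint& q : path) {
                if (q.x >= stopAt) {
                    q.velocity = 0.0;
                }
            }
            return;
        }
    }
}
```

Once the teleoperator has marked the area as drivable, the ignored objects and freed cells no longer satisfy the blocking predicate. The same check then leaves the maximum velocity in place.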

5.3 Simulating Non-existing Hindering Objects

The grid map module and the object detection module are extended to create synthetic false positives. This feature reproduces the situations described in Section 3. For a false positive in the object list, an object is created without a physical counterpart in the form of lidar reflections. Figure 4 shows such a false positive detection in front of the ego vehicle. Similarly, synthetic false positives can be created in the grid map.
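Injecting a synthetic false positive amounts to adding an object, or setting grid cells to occupied, without any supporting lidar reflections. The following minimal C++ sketch illustrates this with hypothetical types and function names. It does not reproduce the LGSVL or ROS interfaces used in the implementation. The axis-aligned footprint and the grid layout starting at the origin are simplifying assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Axis-aligned footprint of a (synthetic) object in the vehicle frame.
struct Box {
    double x;       // center [m]
    double y;
    double length;  // extent along x [m]
    double width;   // extent along y [m]
};

// Hypothetical simulated perception output; the grid starts at (0, 0).
struct SimulatedPerception {
    std::vector<Box> objects;    // object list
    double resolution = 0.5;     // cell edge length [m]
    std::size_t rows = 0;
    std::size_t cols = 0;
    std::vector<bool> occupied;  // row-major, size rows * cols
};

// Adds a detection that has no lidar reflections behind it.
void injectFalsePositiveObject(SimulatedPerception& p, const Box& ghost) {
    p.objects.push_back(ghost);
}

// Marks every cell whose center lies inside the ghost footprint as occupied.
void injectFalsePositiveCells(SimulatedPerception& p, const Box& ghost) {
    assert(p.occupied.size() == p.rows * p.cols);
    for (std::size_t r = 0; r < p.rows; ++r) {
        for (std::size_t c = 0; c < p.cols; ++c) {
            const double cx = (static_cast<double>(c) + 0.5) * p.resolution;
            const double cy = (static_cast<double>(r) + 0.5) * p.resolution;
            if (std::fabs(cx - ghost.x) <= 0.5 * ghost.length &&
                std::fabs(cy - ghost.y) <= 0.5 * ghost.width) {
                p.occupied[r * p.cols + c] = true;
            }
        }
    }
}
```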

Figure 4: False positive detected object (red) in front of the ego vehicle, enclosed by a clicked polygon (green points, orange area).

6 RESULTS

The following tests were conducted to show the feasibility of the perception modification concept and to draw conclusions for further improvements and open questions. The test cases are:

- False positive in the object list and no wrong detection in the grid map.
- False positive in the grid map and no wrong detection in the object list.
- False positive in the grid map and in the object list.
- False positive in the grid map 1 m in front of a real object and no wrong detection in the object list.
- Correct detection.

The test cases therefore cover the range of applications of the perception modification concept. In the scenarios representing an unnecessary standstill, the perception modification concept enabled the teleoperator to correct the perception and the vehicle to continue driving. Situations in which the standstill was correct were recognized as such.

7 DISCUSSION

The conducted tests show the feasibility of the perception modification concept. However, the following further considerations are needed to enable implementation on a real vehicle.

Additional latency arises because the object list and the grid map representation are transmitted over the mobile network twice. This is not yet considered in the system setup, but its influence can be estimated. Mean ping times are estimated to be around 45 to 59 milliseconds (Neumeier et al., 2019). A ping covers one round trip, and the transmitted perception data are larger than a ping packet. The additional latency would therefore be equal to or higher than these values. Only the teleoperator has to compensate for this latency during the supervision task. The vehicle-internal automation pipeline is not affected by it.

Finally, maps are not considered at the moment. They are usually part of an AV and can also contain mistaken obstructing objects. In that case, the current interface could be extended to modify map data.

8 CONCLUSIONS

A perception modification concept to free the path of an AV has been developed and demonstrated in this paper. The results of the simulation test cases show that the teleoperation concept enables teleoperators to resolve the respective situations appropriately. The situation addressed is a mistaken hindering detection that causes a standstill of the AV. The detection is either a false positive or an indeterminate object that can be ignored. The teleoperator marks the drivable area and thereby enables the AV to continue its drive.

It is therefore expected that teleoperators are subjected to less workload than with direct teleoperation concepts. This has to be shown in future studies.

ACKNOWLEDGEMENTS

Johannes Feiler initiated the idea of the paper and implemented the perception modification concept. The teleoperation system at hand is a collaborative achievement of the institute’s teleoperation team, which consists of Simon Hoffmann, Andreas Schimpe, Domagoj Majstorovic and Jean-Michael Georg. Dr. Frank Diermeyer contributed to the essential concept of the research project. He revised the manuscript critically for important intellectual content, gave final approval of the version to be published and agrees with all aspects of the work. Matthias Vogelmeier contributed ideas on the object list manipulation in this work in his prior master’s thesis. This research was accomplished within the “UNICARagil” project. We acknowledge the financial support for the project by the Federal Ministry of Education and Research of Germany (BMBF).

REFERENCES

Bauer, Daniel; Kuhnert, Lars; Eckstein, Lutz (2019): Deep, spatially coherent Inverse Sensor Models with Uncertainty Incorporation using the evidential Framework. In: 2019 IEEE Intelligent Vehicles Symposium (IV).

Biehler, Martin; Geigenfeind, Mario; Peters, Bardo (2017): Verfahren und System zum Fernsteuern eines Fahrzeugs. Publication no.: WO 2019/024963 A1, WIPO PCT.

Blissing, Björn; Bruzelius, Fredrik; Eriksson, Olle (2016): Effects of Visual Latency on Vehicle Driving Behavior. In: ACM Trans. Appl. Percept., Vol. 14, pp. 1–12. Association for Computing Machinery.

Bojarski, Mariusz; Testa, Davide Del; Dworakowski, Daniel; Firner, Bernhard; Flepp, Beat; Goyal, Prasoon et al. (2016): End to End Learning for Self-Driving Cars. In: CoRR.

Bout, Martijn; Brenden, Anna Pernestål; Klingegård, Maria; Habibovic, Azra; Böckle, Marc-Philipp (2017): A Head-Mounted Display to Support Teleoperations of Shared Automated Vehicles, pp. 62–66. DOI: 10.1145/3131726.3131758.

Chucholowski, Frederic Emanuel (2015): Eine vorausschauende Anzeige zur Teleoperation von Straßenfahrzeugen. Beseitigung von Zeitverzögerungseffekten im Fahrer-Fahrzeug-Regelkreis. Dissertation. TUM.

Donges, Edmund (1982): Aspekte der Aktiven Sicherheit bei der Führung von Personenkraftwagen. In: Automobil-Industrie 27 (02), pp. 183–190.

Durand, Sonia; Benmokhtar, Rachid; Perrotton, Xavier (2019): 360° Multisensor Object Fusion and Sensor-based Erroneous Data Management for Autonomous Vehicles. In: IEEE Sensors Applications Symposium.

Fairfield, Nathaniel; Herbach, Joshua Seth; Furman, Vadim (2019): Remote assistance for autonomous vehicles in predetermined situations. Publication no.: US 10,241,508 B2.

Fankhauser, Péter; Hutter, Marco (2016): A Universal Grid Map Library: Implementation and Use Case for Rough Terrain Navigation. In: A. Koubaa (Ed.): Robot Operating System (ROS) – The Complete Reference (Volume 1). Springer.

Feiler, Johannes; Hoffmann, Simon; Diermeyer, Frank (2020): Concept of a Control Center for an Automated Vehicle Fleet. In: IEEE International Conference on Intelligent Transportation Systems.

Georg, Jean-Michael; Diermeyer, Frank (2019): An Adaptable and Immersive Real Time Interface for Resolving System Limitations of Automated Vehicles with Teleoperation. In: 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC). Bari, Italy, pp. 2659–2664.

Georg, Jean-Michael; Feiler, Johannes; Diermeyer, Frank; Lienkamp, Markus (2018): Teleoperated Driving, a Key Technology for Automated Driving? Comparison of Actual Test Drives with a Head Mounted Display and Conventional Monitors. In: International Conference on Intelligent Transportation Systems.

Georg, Jean-Michael; Feiler, Johannes; Hoffmann, Simon; Diermeyer, Frank (2020): Sensor and Actuator Latency during Teleoperation of Automated Vehicles. In: IEEE Intelligent Vehicles Symposium 2020.

Gies, Fabian; Danzer, Andreas; Dietmayer, Klaus (2018): Environment Perception Framework Fusing Multi-Object Tracking, Dynamic Occupancy Grid Maps and Digital Maps. In: International Conference on Intelligent Transportation Systems.

Gnatzig, S.; Chucholowski, Frederic; Tang, Tito; Lienkamp, M. (2013): A System Design for Teleoperated Road Vehicles.

Gnatzig, Sebastian (2015): Trajektorienbasierte Teleoperation von Straßenfahrzeugen auf Basis eines Shared-Control-Ansatzes. Technische Universität München, München. Lehrstuhl für Fahrzeugtechnik.

Hosseini, Amin (2018): Conception of Advanced Driver Assistance Systems for Precise and Safe Control of Teleoperated Road Vehicles in Urban Environments. Dissertation, München.

Jo, Jun; Tsunoda, Yukito; Stantic, Bela; Liew, Alan Wee-Chung (2017): A Likelihood-Based Data Fusion Model for the Integration of Multiple Sensor Data: A Case Study with Vision and Lidar Sensors. In: Robot Intelligence Technology and Applications 4, pp. 489–500.

Kamann, Alexander; Held, Patrick; Perras, Florian; Zaumseil, Patrick; Brandmeier, Thomas; Schwarz, Ulrich T. (2018): Automotive Radar Multipath Propagation in Uncertain Environments. In: International Conf. on Intelligent Transportation Systems.

Kang, Lei; Zhao, Wei; Qi, Bozhao; Banerjee, Suman

(2018): Augmenting Self-Driving with Remote Control:

Challenges and Directions, pp. 19–24. DOI:

10.1145/3177102.3177104.

Kroop, Benjamin; Ross, William; Heine, Andrew (2019):

Teleassistance Data Encoding for Self-Driving

Vehicles. Applicant: Uber Technologies Inc.

Publication no.: US 10,202,126 B2.

Levinson, Jesse; Kentley, Timothy; Ley, Gabriel; Gamara,

Rachad; Rege, Ashutosh (2017): Teleoperation system

and method for trajectory modification of autonomous

vehicles. Publication no.: WO 2017/079219 A1.

Liu, Ruilin; Kwak, Daehan; Devarakonda, Srinivas; Bekris,

Kostas; Iftode, Liviu (2016): Investigating Remote

Driving over the LTE Network. In: ACM International

Conf. on Automotive User Interfaces and Interactive

Vehicular Applications 2016, pp. 264–269.

Lockwood, Amanda; Gogna, Ravi; Linscott, Gary;

Caldwell, Timothy; Kobilarov, Marin; Orecchio, Paul

et al. (2019): Interactions between vehicle and

teleoperations system. Publication no.:

US 2019/0011912 A1.

Mita, Seiichi; Yuquan, Xu; Ishimaru, Kazuhisa; Nishino,

Sakiko (2019): Robust 3D Perception for any

Environment and any Weather Condition using

Thermal Stereo. In: 2019 IEEE Intelligent Vehicles

Symposium (IV).

Neumeier, Stefan; Walelgne, Ermias Andargie; Bajpai,

Vaibhav; Ott, Jörg; Facchi, Christian (2019):

Measuring the Feasibility of Teleoperated Driving in

Mobile Networks. In: 2019 Network Traffic

Measurement and Analysis Conf. DOI:

10.23919/TMA.2019.8784466.

Nuss, Dominik; Reuter, Stephan; Thom, Markus; Yuan,

Ting; Krehl, Gunther; Maile, Michael et al. (2016): A

Random Finite Set Approach for Dynamic Occupancy

Grid Maps with Real-Time Application. Ulm

University, Ulm. Institute of Measurement, Control and

Microtechnology. Available online at

http://arxiv.org/pdf/1605.02406v2.

Pan, Yunpeng; Cheng, Ching-An; Saigol, Kamil; Lee,

Keuntaek; Yan, Xinyan; Theodorou, Evangelos A.;

Boots, Byron (2017): Agile Off-Road Autonomous

Driving Using End-to-End Deep Imitation Learning. In:

CoRR abs/1709.07174.

Pendleton, Scott; Andersen, Hans; Du, Xinxin; Shen,

Xiaotong; Meghjani, Malika; Eng, You et al. (2017):

Perception, Planning, Control, and Coordination for

Autonomous Vehicles. In: MDPI Machines, Vol. 5,

pp. 1–54.

Pitzer, Benjamin; Styer, Michael; Bersch, Christian;

DuHadway, Charles; Becker, Jan (2011): Towards

perceptual shared autonomy for robotic mobile

manipulation. In: 2011 IEEE International Conf. on

Robotics and Automation (ICRA). Shanghai, China,

9–13 May 2011.

Rong, Guodong; Shin, Byung Hyun; Tabatabaee, Hadi; Lu,

Qiang; Lemke, Steve; Možeiko, Mārtiņš et al. (2020):

LGSVL Simulator: A High Fidelity Simulator for

Autonomous Driving. Available online at

http://arxiv.org/pdf/2005.03778v3.

Ross, Bill; Bares, John; Stager, David; Jackel, Larry;

Perschbacher, Mike (2008): An Advanced

Teleoperation Testbed. In: Field and Service Robotics.

Tang, Tito; Chucholowski, Frederic; Yan, Min; Lienkamp,

Markus (2013): A Novel Study on Data Rate by the

Video Transmission for Teleoperated Road Vehicles.

In: International Conf. on Intelligent Unmanned

Systems. 9th ed.

Tang Chen, Tito Lu (2014): Methods for improving the

control of teleoperated vehicles. Dissertation.

Technische Universität München, München. Lehrstuhl

für Fahrzeugtechnik.

J3016, June, 2018: Taxonomy and Definitions for Terms

Related to Driving Automation Systems for On-Road

Motor Vehicles.

ETSI TR 103 562, 2019-12: Technical Report 103 562 -

V2.1.1.

Tikanmäki, Antti; Bedrník, Tomáš; Raveendran, Rajesh;

Röning, Juha (2017): The remote operation and

environment reconstruction of outdoor mobile robots

using virtual reality. In: Proceedings of 2017 IEEE

International Conf. on Mechatronics and Automation.

Xiao, Yi; Codevilla, Felipe; Gurram, Akhil; Urfalioglu,

Onay; López, Antonio M. (2019): Multimodal End-to-

End Autonomous Driving. In: Computer Vision and

Pattern Recognition. Available online at

http://arxiv.org/pdf/1906.03199v1.

Xu, Huazhe; Gao, Yang; Yu, Fisher; Darrell, Trevor

(2016): End-to-end Learning of Driving Models from

Large-scale Video Datasets. In: CoRR.

Zhang, Jiakai (2019): End-to-End Learning for

Autonomous Driving. New York University, New York.

Department of Computer Science.

VEHITS 2021 - 7th International Conference on Vehicle Technology and Intelligent Transport Systems
