To What Scale Are Conversational Agents Used by Top-funded Companies Offering Digital Mental Health Services for Depression?

Aishah Alattas (1, a), Gisbert W. Teepe (2, b), Konstantin Leidenberger (3, c), Elgar Fleisch (1, 2, 3, d), Lorraine Tudor Car (4, 5, e), Alicia Salamanca-Sanabria (1, f) and Tobias Kowatsch (1, 2, 3, g)

1 Future Health Technologies, Singapore-ETH Centre, Campus for Research Excellence and Technological Enterprise (CREATE), Singapore

2 Department of Management, Technology, and Economics (D-MTEC), ETH Zurich, Zurich, Switzerland

3 Institute of Technology Management (ITEM), University of St. Gallen, St. Gallen, Switzerland

4 Family Medicine and Primary Care, Lee Kong Chian School of Medicine, Nanyang Technological University Singapore, Singapore

5 Department of Primary Care and Public Health, School of Public Health, Imperial College London, London, U.K.

lorraine.tudor.car@ntu.edu.sg, alicia.salamanca@sec.ethz.ch, tkowatsch@ethz.ch

Keywords: Conversational Agent, Depression, Mental Health, Venture Capital, Top-funded.

Abstract: There is strong support in the literature for the use of conversational agents (CAs) in digital mental healthcare, along with a recent increase in funding within digital mental health, indicating the fast growth of the industry. However, it is unknown to what extent CAs are leveraged in these digital interventions for depression. The aim of this study is therefore to explore the scale of CA use in top-funded digital mental health companies targeting depression and to describe the purposes for which CAs are used. Companies were identified by searching venture capital databases and screened for the presence and purpose of use of CAs in their interventions for depression. Only 7 of the 29 top-funded companies used a CA in their intervention. The most common purpose of CA use was education, followed by assistance, training and onboarding. None of the interventions used CAs for elderly assistance, diagnosis or prevention. These results indicate that the industry uptake of CAs in digital interventions for depression within top-funded companies is low. Future work could explore using CAs in areas where this analysis found they are not currently used, such as tailoring interventions to different target populations and preventing depression.

1 INTRODUCTION

Conversational agents (CAs), also known as chatbots, are computer programs that use techniques from artificial intelligence and machine learning to mimic humanlike verbal, written and visual behaviours in order to converse and interact with human users (Vaidyam et al., 2019). The first well-established CA, ELIZA, was designed in 1966 and programmed to emulate a Rogerian psychotherapist via typed text (Weizenbaum, 1966). Over fifty years later, CAs are widespread in healthcare and have been used at multiple points in the continuum of care, such as prevention through the modification of lifestyle behaviours, diagnosis and detection, and treatment and monitoring for a multitude of health conditions (Tudor Car et al., 2020; Schachner et al., 2020; Bérubé et al., 2020).

a https://orcid.org/0000-0003-4777-900X

b https://orcid.org/0000-0002-2264-9797

c https://orcid.org/0000-0003-3304-6673

d https://orcid.org/0000-0002-4842-1117

e https://orcid.org/0000-0001-8414-7664

f https://orcid.org/0000-0002-2756-5592

g https://orcid.org/0000-0001-5939-4145

Since the days of ELIZA, interest in CAs has fluctuated; however, rapid technological advancement, together with an increasing focus on mental health over the past twenty years, has driven a significant amount of research into the use of CAs, particularly for mental health conditions (Gaffney et al., 2019). This is owing to the potential of CAs to bridge a perceived trade-off: standard face-to-face therapies for mental illness face barriers such as waiting lists, costs, geography and stigma, whereas self-guided digital mental health interventions are often static and do not respond dynamically to individual needs and preferences (Miner et al., 2016). In this way, CAs have the potential to create a therapeutic alliance with the user without the involvement of a human therapist; the therapeutic alliance is a key contributor to the effectiveness of therapy on patient outcomes such as symptom severity, treatment compliance and patient satisfaction (Lopez et al., 2019).

Alattas, A., Teepe, G., Leidenberger, K., Fleisch, E., Car, L., Salamanca-Sanabria, A. and Kowatsch, T.

To What Scale Are Conversational Agents Used by Top-funded Companies Offering Digital Mental Health Services for Depression?

DOI: 10.5220/0010413308010808

In Proceedings of the 14th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2021) - Volume 5: HEALTHINF, pages 801-808

ISBN: 978-989-758-490-9

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In a recent review in the mental health setting, CAs were used for a variety of purposes, including therapy, training, screening, self-management, counselling, education and diagnosis (Abd-alrazaq et al., 2019). Another review of the effectiveness of CAs in mental health applications found that they are effective in reducing levels of depression, anxiety and perceived stress, as well as in increasing levels of self-esteem and psychological wellbeing (Tudor Car et al., 2020). In terms of user experience, ratings of engagement, satisfaction, acceptance and helpfulness favoured the use of CAs in these mental health applications (Tudor Car et al., 2020). Moreover, the risk of harm from the use of CAs in mental health has been found to be extremely low, with only 1 of 759 participants reported to have had a related adverse event (Vaidyam et al., 2019). This particular incident involved a patient with schizophrenia who developed paranoia and, after a few days, refused to continue using a CA intended to promote antipsychotic medication adherence, which highlights the caution that may be needed when using CAs with people with psychotic presentations (Bickmore et al., 2010).

While mental health disorders in general account for a large proportion of the global disease burden, depressive disorders are a particular area of concern, as they are the third leading cause of all-age years lived with disability, after low back pain and headache disorders (James et al., 2018). Depression is estimated to affect over 300 million people worldwide and can greatly impair functioning and quality of life, both through the direct effects of depressive symptoms and through its association with poor physical health outcomes, resulting in increased morbidity and mortality (Liu et al., 2020). Furthermore, the latest figures report an almost 50% increase in the global incident cases of major depressive disorder over the past thirty years, calling for urgent action to intervene and control its occurrence and exacerbation (Liu et al., 2020).

The manifestation and expression of depression are complex, with a large amount of heterogeneity both between and within individuals, depending on the different contexts and challenges faced at different times. Longitudinal studies have shown that people experiencing depression may spend up to nearly 40% of their time asymptomatic, and that even when symptomatic, patients had a mean of one fluctuation in symptom severity level per year, with more fluctuations associated with poorer function and quality of life (Vergunst et al., 2013).

Currently, most digital health interventions targeting depression are designed to manage acute symptoms but do not consider these important time- and context-specific aspects, which can alter the experience of depression and therefore the intervention required (Kornfield et al., 2020). This mismatch has been cited as a possible reason for the high dropout and non-adherence rates of digital interventions for depression. It also presents an opportunity for adaptive CAs to accommodate these temporal dynamics, in an effort to increase the retention and, consequently, the effectiveness of such tools.

Although the evidence base for CAs in mental health has grown steadily over the past two decades, with promising results on their ability to provide effective, engaging and scalable digital mental health interventions, little is known about their actual uptake in industry. In 2019 alone, venture capital firms invested a record 637 million US dollars in over 60 mental health companies, a notable proportion of which went specifically into digital mental health (Shah and Berry, 2020). In fact, the latest reports show that a new record in deal volume for digital mental health companies was reached, with 68 deals in the third quarter of 2020 alone (CB Insights, 2020). This raises the question of the extent to which these new, highly funded companies are leveraging CAs in their digital mental health interventions.

This paper aims to explore the scale at which CAs are used by top-funded digital mental health intervention companies and to describe the purposes for which they are used. To gain deeper insight, the scope of the analysis is narrowed down to top-funded companies with a main focus on depression, given its high prevalence and burden and the potential for CA technology to enhance therapeutic approaches in this area. In addition, the literature indicates that depression is the disorder most commonly targeted by CAs in mental health (Gaffney et al., 2019; Abd-alrazaq et al., 2019).

Scale-IT-up 2021 - Workshop on Scaling-Up Healthcare with Conversational Agents

2 METHOD

2.1 Companies

Companies were searched using two venture capital databases, Crunchbase Pro and PitchBook, which have been identified as among the most comprehensive and accurate of the venture capital databases commonly used in academic reports and articles, as well as by investors (Retterath and Braun, 2020). The search terms (Depression OR Mental Health) AND (Health care OR Apps OR Digital health OR Big Data OR Artificial Intelligence) were entered into Crunchbase Pro, and (Depression OR Mental Health) AND (Digital Health OR Application Software OR Big Data OR Artificial Intelligence & Machine Learning OR Mobile) into PitchBook, to identify companies relevant to the field of digital mental health up to October 24, 2020. The differences in search terms between the two databases reflect the specific industry verticals available in their search options. A filter to include only companies that had received more than one million US dollars in funding was also applied in both databases, in order to limit the number of returned companies that would ultimately not be included in our list of top-funded digital mental health companies.
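The search step above combines two OR-groups with an AND and then applies a funding threshold. A minimal Python sketch of that logic follows, assuming each company is available as a hypothetical record with free-text tags and a total funding figure. The record shape and the tag matching are illustrative assumptions; they do not reproduce how Crunchbase Pro or PitchBook evaluate queries internally, and only the Crunchbase Pro term groups are encoded.

```python
from dataclasses import dataclass, field


# Hypothetical record shape for illustration only; this is not the export
# format or query engine of Crunchbase Pro or PitchBook.
@dataclass
class CompanyRecord:
    name: str
    tags: set[str] = field(default_factory=set)
    total_funding_usd: float = 0.0


# Term groups of the Crunchbase Pro query:
# (Depression OR Mental Health) AND
# (Health care OR Apps OR Digital health OR Big Data OR Artificial Intelligence)
CONDITION_TERMS = {"depression", "mental health"}
TECHNOLOGY_TERMS = {"health care", "apps", "digital health",
                    "big data", "artificial intelligence"}
MIN_FUNDING_USD = 1_000_000  # keep only companies with more than USD 1 million


def matches_query(record: CompanyRecord) -> bool:
    """True if the record has at least one tag from each OR-group (the AND)."""
    tags = {tag.lower() for tag in record.tags}
    return bool(tags & CONDITION_TERMS) and bool(tags & TECHNOLOGY_TERMS)


def search(records: list[CompanyRecord]) -> list[CompanyRecord]:
    """Apply the Boolean query and the funding filter together."""
    return [r for r in records
            if matches_query(r) and r.total_funding_usd > MIN_FUNDING_USD]


if __name__ == "__main__":
    toy = [
        CompanyRecord("Example A", {"Mental Health", "Apps"}, 5_000_000),
        CompanyRecord("Example B", {"Depression"}, 9_000_000),  # no technology tag
        CompanyRecord("Example C", {"Depression", "Digital Health"}, 800_000),  # below filter
    ]
    print([r.name for r in search(toy)])  # ['Example A']
```

The PitchBook query would only change the contents of `TECHNOLOGY_TERMS`; the AND-of-ORs structure stays the same.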

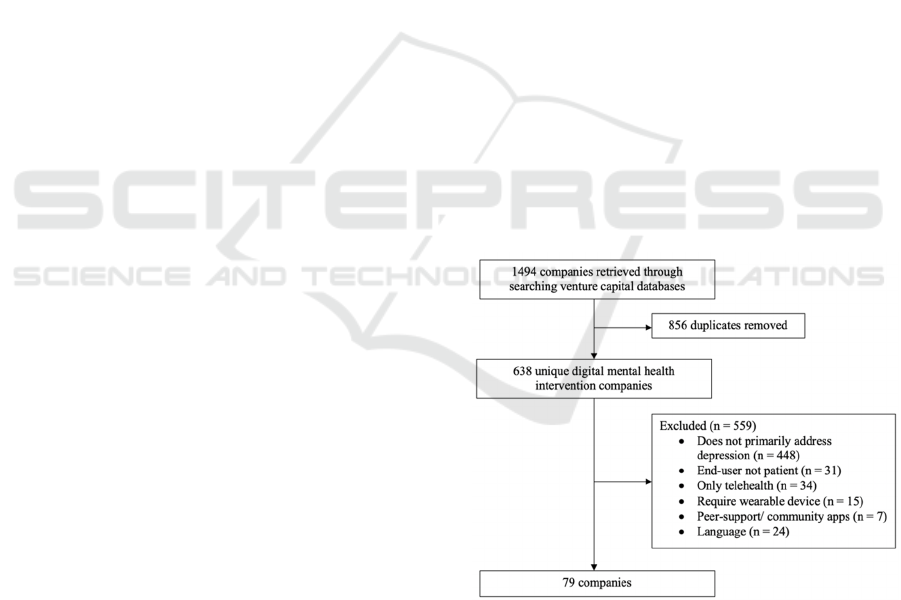

Figure 1 shows the company selection process, based on inclusion and exclusion criteria defined a priori. We excluded companies that did not primarily address depression, for example, companies offering general health and wellness interventions in terms of diet and exercise that make unsubstantiated claims to improve mood and help with depression. We also excluded companies whose end-user is not the "patient", defined as the individual who will receive the intervention for depressive symptoms. Hence, digital mental health solutions targeted at healthcare providers for managing their caseload remotely were excluded, as this study focuses on the use of CAs in therapeutic engagement with individuals with depression.

For similar reasons, companies that only offered telehealth services, such as triaging and matching between service users and service providers, without any additional active components were excluded. Here, we take the definition of "active components" from the multiphase optimisation strategy, under which a component is "active" if there is empirical evidence of its therapeutic effectiveness via measures including, but not limited to, statistical significance or effect size (Collins et al., 2007). We also excluded companies that required the use of a wearable device, as this technology has been cited as a barrier to the use of digital health interventions due to factors such as comfort, design, battery life and connectivity issues (Loncar-Turukalo et al., 2019); companies in which a wearable device was an optional add-on feature remained included. Companies that were purely peer support or forum platforms, and those not available in English, were also excluded.
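The exclusion criteria above amount to a checklist applied to each candidate company. The sketch below encodes them as boolean fields on a hypothetical `ScreeningRecord`; the field names are assumptions for illustration, and in the study the judgement behind each field was made by the coders, not by code. Returning every matching reason, rather than stopping at the first, keeps an audit trail for the counts in a selection flow chart such as Figure 1.

```python
from dataclasses import dataclass


# Hypothetical screening record; field names are illustrative, not the
# authors' actual coding sheet.
@dataclass
class ScreeningRecord:
    name: str
    primarily_addresses_depression: bool
    end_user_is_patient: bool
    telehealth_only: bool       # triage/matching with no additional active components
    wearable_required: bool     # an optional wearable add-on does NOT count as required
    peer_support_only: bool     # purely a peer support or forum platform
    available_in_english: bool


def exclusion_reasons(r: ScreeningRecord) -> list[str]:
    """Return every exclusion criterion the company meets (empty list = include)."""
    reasons = []
    if not r.primarily_addresses_depression:
        reasons.append("does not primarily address depression")
    if not r.end_user_is_patient:
        reasons.append("end-user is not the patient")
    if r.telehealth_only:
        reasons.append("telehealth only, no additional active components")
    if r.wearable_required:
        reasons.append("requires a wearable device")
    if r.peer_support_only:
        reasons.append("purely peer support or forum platform")
    if not r.available_in_english:
        reasons.append("not available in English")
    return reasons


def is_included(r: ScreeningRecord) -> bool:
    """A company is included only if it meets no exclusion criterion."""
    return not exclusion_reasons(r)
```

For example, a hypothetical provider-facing caseload dashboard would be recorded with `end_user_is_patient=False` and excluded with that reason.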

The final list of companies was validated for credibility, potential utility and validity as an intervention for depression by two experts with extensive industry and academic experience in the field of digital mental health. This was done to ensure that the major players in the digital mental health field were not overlooked, while remaining focused on the specific area of depression investigated in this study.

Figure 1: Flow chart of the company selection process.

2.2 Conversational Agent (CA)

A CA was coded as present in the digital mental health intervention if there was evidence of the use of a dialogue system that processes and automatically responds to human language via text, speech or visual stimuli. For interventions that could be accessed, the presence or absence of a CA was established through direct use of the platform; for interventions that could not be accessed, it was established by reviewing the information provided by the intervention company on its website and in peer-reviewed literature.
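As a rough illustration of the coding definition above, the hypothetical sketch below is about the simplest program that processes a typed message and automatically generates a reply. The keyword rules are invented for illustration, in the spirit of ELIZA's pattern matching, and are not drawn from any intervention in this study; the CAs actually coded were far more elaborate.

```python
import re

# Hypothetical keyword rules in the spirit of ELIZA's pattern matching;
# illustrative only, not taken from any intervention reviewed here.
RULES = [
    # Reflect "I feel ..." statements back as a question (trailing punctuation dropped).
    (re.compile(r"\bI feel (.+?)[.!?]*$", re.IGNORECASE), "Why do you feel {0}?"),
    # Respond to a few low-mood keywords anywhere in the message.
    (re.compile(r"\b(sad|down|low)\b", re.IGNORECASE),
     "I'm sorry you're feeling {0}. What has been happening?"),
]
DEFAULT_REPLY = "Tell me more about that."


def respond(user_text: str) -> str:
    """Process one user message and return an automatic reply (first matching rule wins)."""
    text = user_text.strip()
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*match.groups())
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I feel tired."))       # Why do you feel tired?
    print(respond("I am so down today"))  # I'm sorry you're feeling down. What has been happening?
```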

To determine the purposes for which the CAs are used, we coded our findings based on an adapted version of a taxonomy of CAs in health proposed by Montenegro et al. (2019). Table 1 provides a description of the coded CA uses.

Table 1: Coding framework used to classify the purpose of use of CAs present in digital mental health interventions.

| Purpose of use of CA | Description |
|---|---|
| Assistance | The CA provides assistance to increase or improve a behaviour |
| Training | The CA trains the user in a health-related behaviour |
| Elderly assistance | The CA improves a health condition in the elderly population |
| Diagnosis | The CA gives a mental health diagnosis |
| Education | The CA provides information to increase mental health literacy |
| Prevention | The CA aims to prevent the onset, a deterioration or a relapse of a mental health condition |
| Onboarding | The CA is used to facilitate the onboarding process |
| Questions and services | The CA is used for a support purpose such as technical support or answering frequently asked questions |

2.3 Analysis

The list of digital mental health companies to be included in our analysis, the presence or absence of a CA and the purposes that the CAs serve in their respective interventions were evaluated independently by two authors (AA and KL) based on predefined criteria. Any disagreements were resolved through discussion and eventual consensus between the two authors or, where necessary, referred to a third author (GWT) for adjudication.
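The dual-coding procedure can be sketched as follows, assuming each coder's output is a mapping from company name to a set of Table 1 purposes. The function names and the order of resolution (agreement, then consensus between the two coders, then adjudication by the third author) are an illustrative reading of the procedure above, not the authors' tooling. The paper does not report an agreement statistic, so none is computed here.

```python
# Each coder maps a company name to the set of Table 1 purposes they coded.
Codes = dict[str, frozenset[str]]


def disagreements(coder_a: Codes, coder_b: Codes) -> list[str]:
    """Companies for which the two coders' purpose sets differ."""
    companies = sorted(set(coder_a) | set(coder_b))
    return [c for c in companies
            if coder_a.get(c, frozenset()) != coder_b.get(c, frozenset())]


def resolve(coder_a: Codes, coder_b: Codes,
            consensus: Codes, adjudication: Codes) -> Codes:
    """Agreed codes stand; otherwise use the consensus, then the third coder's ruling."""
    final: Codes = {}
    for company in sorted(set(coder_a) | set(coder_b)):
        a = coder_a.get(company, frozenset())
        b = coder_b.get(company, frozenset())
        if a == b:
            final[company] = a
        elif company in consensus:
            final[company] = consensus[company]
        elif company in adjudication:
            final[company] = adjudication[company]
        else:
            raise ValueError(f"unresolved disagreement for {company!r}")
    return final
```

Raising on an unresolved case, rather than silently picking one coder, mirrors the requirement that every disagreement ends in consensus or adjudication.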

3 RESULTS

Of the 79 companies extracted from the venture capital databases that met our inclusion and exclusion criteria, we analysed the 30 top-funded companies, as they accounted for 97.6% of the total funding across all 79 companies and therefore give a representative and comprehensive picture of the features common in these interventions. During our analysis, one of these companies temporarily halted the availability of its intervention on the market until further notice and was excluded. This resulted in a final list of 29 top-funded digital mental health companies.
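The selection of top-funded companies rests on the share of total funding they hold. The sketch below, using made-up toy funding figures, shows how that share could be computed; the paper reports that the top 30 of 79 companies held 97.6% of funding but does not state that a threshold rule was used to pick 30, so `smallest_n_for_share` is a hypothetical alternative cut-off rule, not the authors' method.

```python
def top_funded(funding: dict[str, float], n: int) -> list[str]:
    """Names of the n companies with the highest total funding."""
    return sorted(funding, key=funding.__getitem__, reverse=True)[:n]


def funding_share(funding: dict[str, float], n: int) -> float:
    """Fraction of all funding held by the top-n companies."""
    total = sum(funding.values())
    return sum(funding[c] for c in top_funded(funding, n)) / total


def smallest_n_for_share(funding: dict[str, float], threshold: float) -> int:
    """Hypothetical cut-off rule: smallest n whose top-n hold >= threshold of funding."""
    for n in range(1, len(funding) + 1):
        if funding_share(funding, n) >= threshold:
            return n
    return len(funding)


if __name__ == "__main__":
    # Toy figures in millions of US dollars; not data from this study.
    toy = {"A": 50.0, "B": 30.0, "C": 15.0, "D": 5.0}
    print(top_funded(toy, 2), funding_share(toy, 2))  # ['A', 'B'] 0.8
    print(smallest_n_for_share(toy, 0.9))             # 3
```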

Seven of the 29 interventions (24%) used a CA, with the most common purpose of use being education, which was seen in 6 of the 7 (86%) interventions that used a CA. This was closely followed by assistance, training and onboarding, which were each seen in 5 of the 7 (71%) interventions that used a CA. Only 1 (14%) intervention used a CA for questions and services. None of the interventions used CAs for elderly assistance, diagnosis or prevention. Apart from the one intervention that used a CA solely for onboarding, the other 6 of the 7 interventions used a CA for multiple purposes. The combination of assistance, training and education was present in 5 of the 6 interventions that used a CA for multiple purposes. Table 2 lists the 7 digital mental health intervention companies that use a CA, a description of the intervention they offer and how the CA is used.
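The counts and percentages above can be re-derived from the purpose codes in Table 2. The sketch below encodes the eight Table 1 purposes as an `Enum` and the Table 2 codes as sets, then tallies them; percentages are rounded to whole numbers as in the text. The data structures are an illustrative choice, not the authors' analysis code.

```python
from collections import Counter
from enum import Enum


class Purpose(Enum):
    """The eight purpose codes of Table 1."""
    ASSISTANCE = "Assistance"
    TRAINING = "Training"
    ELDERLY_ASSISTANCE = "Elderly assistance"
    DIAGNOSIS = "Diagnosis"
    EDUCATION = "Education"
    PREVENTION = "Prevention"
    ONBOARDING = "Onboarding"
    QUESTIONS_AND_SERVICES = "Questions and services"


A, T, E = Purpose.ASSISTANCE, Purpose.TRAINING, Purpose.EDUCATION
O, Q = Purpose.ONBOARDING, Purpose.QUESTIONS_AND_SERVICES

# Purpose codes of the 7 companies that use a CA, as listed in Table 2.
CA_PURPOSES = {
    "Happify": {A, T, E},
    "Woebot": {A, T, E, O},
    "Unmind": {E, O, Q},
    "Shine": {A, T, E},
    "My Online Therapy": {O},
    "Wysa": {A, T, E, O},
    "Youper": {A, T, E, O},
}
TOTAL_COMPANIES = 29  # top-funded companies analysed


def purpose_counts(codes: dict[str, set[Purpose]]) -> Counter:
    """Number of CA-using interventions coded with each purpose."""
    return Counter(p for purposes in codes.values() for p in purposes)


def summarise(codes: dict[str, set[Purpose]], total: int) -> list[str]:
    """Counts and rounded percentages in the style of the Results section."""
    n_ca = len(codes)
    counts = purpose_counts(codes)
    lines = [f"CA used: {n_ca}/{total} ({round(100 * n_ca / total)}%)"]
    for purpose in Purpose:
        k = counts[purpose]  # Counter returns 0 for purposes never coded
        lines.append(f"{purpose.value}: {k}/{n_ca} ({round(100 * k / n_ca)}%)")
    multi = [c for c, p in codes.items() if len(p) > 1]
    core = [c for c in multi if {A, T, E} <= codes[c]]
    lines.append(f"Multiple purposes: {len(multi)}; "
                 f"with assistance, training and education: {len(core)}")
    return lines


if __name__ == "__main__":
    for line in summarise(CA_PURPOSES, TOTAL_COMPANIES):
        print(line)
```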

4 DISCUSSION

4.1 Principal Findings

The results of this analysis indicate that the scale at which conversational agent and chatbot technology is used by the 29 top-funded digital mental health intervention companies is low, at 24%. Some parallels can be drawn with previous reviews of the features of CAs in mental health: training was reported to be a common use of CAs in digital mental health interventions, and mental health diagnosis was reported to be among the rarer purposes of CA use or, in this exploratory analysis, not a purpose at all (Abd-alrazaq et al., 2019). Differences were also seen. For example, while education was the most common use of CAs in this analysis, another review reports education to be among the least common uses of CAs in mental health (Abd-alrazaq et al., 2019). It should be noted, however, that this difference is striking only with regard to ranking; in absolute terms it is much less stark, with this study reporting 6 interventions that use CAs for education and the other review reporting 4 (Abd-alrazaq et al., 2019).


Table 2: Description of intervention and CA use of the 7 out of 29 top-funded digital mental health intervention companies for depression.

| Top-funded companies | Description | Purpose of CA |
|---|---|---|
| Happify | The Happify platform provides engaging activities and games that target overcoming negative thoughts, stress and life's challenges for better overall mental health. | Assistance, Training, Education |
| Woebot | Woebot is an artificial intelligence-based chat tool that is used as a delivery mechanism for a suite of clinically validated therapy programs, including psychoeducation, mood monitoring and in-the-moment guidance, that target behavioural health domains such as sleep, grief and financial worry as well as clinical domains like depression, anxiety and substance abuse. | Assistance, Training, Education, Onboarding |
| Unmind | Unmind is a workplace mental health platform that aims to empower employees to measure, understand and improve their mental wellbeing through self-guided programs and in-the-moment exercises. | Education, Onboarding, Questions and Services |
| Shine | Shine describes itself as a daily self-care app rooted in Acceptance and Commitment Therapy, which provides daily meditations, journaling, mood tracking and the option to connect to an online community of users. | Assistance, Training, Education |
| My Online Therapy | The My Online Therapy platform offers matching services with a clinical psychologist relevant to users' specific needs, symptoms and circumstances, video or chat therapy with the matched therapist and evidence-based self-care tools. | Onboarding |
| Wysa | Wysa is an artificial intelligence-based chat tool that "will listen and ask the right questions to help you figure things out". The platform also includes self-care tool packs that users can choose based on specific issues they are facing, such as mood, anxiety, trauma, body image, sleep and loneliness. | Assistance, Training, Education, Onboarding |
| Youper | Youper is an artificial intelligence-based therapy platform that supports users' mental health anytime and anywhere through "talking to Youper like texting a therapist or caring friend". Users start by completing a Mental Health Checkup to personalize Youper to their needs. There are options to set daily check-ins and to track and monitor symptom reduction. | Assistance, Training, Education, Onboarding |

4.2 Target Population

Interestingly, the finding that none of the chatbots were used for elderly assistance mirrors the broader lack of interventions for depression targeted towards geriatric populations, despite the high prevalence, poor prognosis and unique contributing factors of depression in this demographic, such as age-related diseases and frailty, loss of freedom and social isolation (Parkar, 2015). This raises the issue of target populations: with the exception of one company using a CA, which targets employees, the other 6 companies do not target any specific demographic.

It is well established that the factors influencing the aetiology, expression and prognosis of depression differ across populations, for example in women (Albert, 2015) and adolescents (Cheung et al., 2018), and across cultural backgrounds (Haroz et al., 2017). Individually tailored internet-based or computerised cognitive behavioural therapy for depression, in which users navigate by selecting among predefined response options that determine the subsequent content, has been shown to be effective in reducing depressive symptoms in naturalistic community settings (Twomey et al., 2017). CAs could build upon this evidence as a tool for addressing the differences that exist between populations, delivering tailored interventions for depression that meet individuals' unique contexts and needs while also offering the benefits of communicating digitally in a human-like manner.
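To make the tailoring mechanism concrete, the following hypothetical sketch shows a flow in which a user selects among predefined response options and each choice determines the next content. The prompts and branches are invented for illustration and are not clinical content from Twomey et al. (2017) or from any reviewed intervention; a CA could layer free-text understanding on top of such a branching structure.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    prompt: str
    # Predefined response options: option label -> id of the next node.
    options: dict[str, str] = field(default_factory=dict)


# Hypothetical, non-clinical example flow.
FLOW = {
    "start": Node("What would you like to work on today?",
                  {"Low mood": "mood", "Sleep": "sleep"}),
    "mood": Node("Would you like to try an activity-planning exercise?",
                 {"Yes": "activity", "Not now": "end"}),
    "sleep": Node("Let's look at your evening routine.", {"Continue": "end"}),
    "activity": Node("Pick one small, enjoyable activity for tomorrow.", {"Done": "end"}),
    "end": Node("Thanks for checking in."),
}


def run(flow: dict[str, Node], choices: list[str], start: str = "start") -> list[str]:
    """Return the prompts a user sees for a given sequence of selected options."""
    node_id = start
    shown = [flow[node_id].prompt]
    for choice in choices:
        node = flow[node_id]
        if choice not in node.options:
            raise ValueError(f"{choice!r} is not an option at node {node_id!r}")
        node_id = node.options[choice]
        shown.append(flow[node_id].prompt)
    return shown
```

Two users choosing "Low mood" and "Sleep" at the first prompt receive different follow-up content, which is the sense in which subsequent content is tailored.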

4.3 Therapeutic Application

The combination of assistance, training and education that emerged as a core set of purposes of the CAs in this study can also be thought of as key elements of popular, evidence-based psychotherapies for depression in face-to-face and remote virtual settings, such as cognitive behavioural therapy and mindfulness. These psychotherapeutic paradigms largely focus on educating individuals about the consequences of certain habitual patterns of thoughts, feelings and behaviours (psychoeducation), training them to notice these patterns and assisting them in implementing techniques to change maladaptive patterns into more adaptive ones that can help alleviate symptoms of depression and improve functioning (Beck, 2011; Segal and Teasdale, 2018). Indeed, reviews have reported that chatbots in mental health are commonly used to deliver therapeutic components rooted in cognitive behavioural therapy, mindfulness and other therapies, which aligns with our findings (Tudor Car et al., 2020; Gaffney et al., 2019).

The finding that none of the CAs were used for prevention is also in line with the current landscape, in which the prevention of depression has been largely neglected in comparison to detection and treatment (Ormel et al., 2019). Reports have attributed this lack of focus on prevention to a failure to target proximal determinants of depression, such as negative affectivity, low self-control and poor social and problem-solving skills, and to the prevention of depression not being structurally and socially embedded (Ormel et al., 2019). CAs could potentially help to overcome these barriers, for example by targeting proximal determinants that exist in the general population, by structurally embedding prevention strategies at national levels through the ease and cost-effectiveness of their scalability, and by socially embedding prevention through the relatability of CA communication styles.

In relation to communication styles, although the mimicking of human-like conversation is widely reported as a benefit of CAs, some evidence suggests otherwise, especially when sensitive information is disclosed, as it would be in the context of mental health and depression. Specifically, not only were face-to-face interviews reported to garner less accurate responses, higher in social desirability, than the same questions presented via a CA, but CAs that gave more relevant and tailored responses to user input also increased socially desirable response bias compared with CAs that gave generic responses regardless of user input (Schuetzler et al., 2018). Findings more directly relevant to mental health also support this notion: participants who were told that they were talking to a computer displayed more intense expressions of sadness than those who were told that the CA was controlled by a human (Lucas et al., 2014).

These insights should be taken into consideration when CAs are used for therapeutic applications in depression, as compromising the accuracy of user input in the interest of tailoring may not serve users' wellbeing and therapeutic outcomes. The key in this instance would be to determine the minimum amount of tailoring required for users to develop a therapeutic alliance with the CA, without the CA reaching conversational capability levels so high that they elicit less accurate, socially desirable responses (Schuetzler et al., 2018). Miner et al. (2017) concisely describe these considerations as the need to better understand users' "imagined audience" when discussing mental health with CAs, which is highly unclear, as opposed to a traditional in-person consultation, where there is a concrete, visible audience to which service users' impressions and expectations are attached.

4.4 Limitations

The findings of this investigation into the extent of use of CAs in top-funded digital mental health companies with interventions targeting depression should be interpreted with caution, as there is a high level of variability in how the terms used to classify the purposes of CAs are operationalised. For example, Abd-alrazaq et al. (2019) distinguish between CAs that provide therapy and those that provide training, based on whether the CA's function is rooted in an established therapeutic paradigm (therapy) or in general skills training, such as social skills or job interview skills (training). A similar distinction was made in a review of embodied CAs in clinical psychology by Provoost et al. (2017), where, in addition to social skills training, the specific framework of cognitive behavioural therapy formed a category of its own. A reasonable explanation for such differences could be that these two reviews included interventions for autism, many of which focus specifically on social skills training, whereas depression does not necessitate such a distinction.

Additionally, this observational investigation focuses only on the purpose of use of CAs in digital mental health interventions targeting depression. It does not account for the many other aspects of CAs that work together and can influence the effect of their integration on clinical and user experience outcomes. These include design characteristics such as whether or not the CA is embodied, the duration of interaction with the CA, its speech and/or textual output, its personality and aesthetic features like resemblance to human figures versus non-human characters (Stal et al., 2020; Scholten et al., 2017). Existing randomised controlled trials tend to look at each of these features in isolation, which may not capture the full picture of what makes a CA effective for a certain person with a certain condition at a certain time. Optimisation approaches such as factorial trials, micro-randomised trials or system identification experiments can be useful in answering such questions, thereby maximising the effectiveness and reach of the unique benefits that CAs can bring to digital mental health interventions (Hekler et al., 2020).
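As an illustration of two of the optimisation designs mentioned above, the sketch below enumerates the conditions of a full factorial experiment over three CA design characteristics and generates a micro-randomised treatment sequence. The factors, their two levels and the randomisation probability are illustrative assumptions, not a protocol from any cited trial; a real design would also specify outcomes, decision points and analysis.

```python
import itertools
import random
from typing import Optional

# Illustrative two-level factors based on design characteristics named above;
# factors and levels are assumptions, not taken from any cited trial.
FACTORS = {
    "embodiment": ["embodied", "not embodied"],
    "output": ["speech", "text"],
    "appearance": ["human-like", "non-human"],
}


def full_factorial(factors: dict[str, list[str]]) -> list[dict[str, str]]:
    """Every combination of factor levels (2 x 2 x 2 = 8 conditions here)."""
    names = list(factors)
    return [dict(zip(names, levels))
            for levels in itertools.product(*(factors[n] for n in names))]


def micro_randomise(n_decision_points: int, p_treat: float,
                    seed: Optional[int] = None) -> list[bool]:
    """At each decision point, independently randomise whether a CA prompt is delivered."""
    rng = random.Random(seed)
    return [rng.random() < p_treat for _ in range(n_decision_points)]


if __name__ == "__main__":
    for condition in full_factorial(FACTORS):
        print(condition)
    print(micro_randomise(10, 0.5, seed=1))
```

A full factorial design grows as the product of the numbers of levels, which is why fractional factorial designs are often preferred once more factors are added.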

4.5 Conclusions

Although numerous reviews have provided compelling support for the added benefits that integrating a CA into digital mental health interventions for depression can bring, industry uptake, at least at the level of top-funded companies, seems to be relatively low. Nevertheless, given the nascency and rapidly evolving nature of both digital therapeutics for depression and CA technology, the scale at which CA tools are implemented could be expected to increase over the coming years, especially given trends such as the increasing number of people who use only technology, without any in-person visits, to address mental health concerns (Miner et al., 2017). Areas identified where CAs could be of particular benefit include using tailoring to address both the differing intervention needs of different demographic groups and intra-individual fluctuations, and preventing depression by targeting its proximal determinants and embedding prevention structurally and socially through ease of dissemination.

Future research should work towards establishing a taxonomy for categorising the purposes of CAs for depression, in order to facilitate communication and comparison. Another important direction is to integrate different CA design features into evaluations, so that the efficacy of CAs in digital interventions can be determined more comprehensively. Emerging digital interventions for depression would benefit from a collaborative approach between research and industry, using best practices from each sector to leverage the potential of CAs in digital mental health therapeutics. In this regard, the rigour of research settings, combined with industry business models that allow rapid and wide dissemination of products, lends itself well to iterative design processes to investigate, conceptualise and ultimately create solutions that meet the needs of underserved populations.

REFERENCES

Abd-Alrazaq, A. A., Alajlani, M., Alalwan, A. A., Bewick,

B. M., Gardner, P., & Househ, M. (2019). An overview

of the features of chatbots in mental health: A scoping

review. International Journal of Medical Informatics,

132, 103978.

Albert, P. (2015). Why is depression more prevalent in

women? Journal of Psychiatry & Neuroscience, 40(4),

219–221.

Beck, J. (2011). Cognitive behavior therapy: basics and

beyond. The Guilford Press.

Bickmore, T. W., Puskar, K., Schlenk, E. A., Pfeifer, L. M., & Sereika, S. M. (2010). Maintaining reality: Relational agents for antipsychotic medication adherence. Interacting with Computers, 22(4), 276–288.

Bérubé, C., Schachner, T., Keller, R., Fleisch, E., Wangenheim, F. V., Barata, F., & Kowatsch, T. (2020). Voice-based Conversational Agents for the Prevention and Management of Chronic and Mental Conditions: A Systematic Literature Review (Preprint).

CB Insights. (2020). State of Healthcare Q3’20 Report.

Cheung, A. H., Zuckerbrot, R. A., Jensen, P. S., Laraque, D., Stein, R. E., & GLAD-PC Steering Group. (2018). Guidelines for Adolescent Depression in Primary Care (GLAD-PC): Part II. Treatment and Ongoing Management. Pediatrics, 141(3).

Collins, L. M., Murphy, S. A., & Strecher, V. (2007). The Multiphase Optimization Strategy (MOST) and the Sequential Multiple Assignment Randomized Trial (SMART). American Journal of Preventive Medicine, 32(5).

Gaffney, H., Mansell, W., & Tai, S. (2019). Conversational Agents in the Treatment of Mental Health Problems: Mixed-Method Systematic Review. JMIR Mental Health, 6(10).

Haroz, E., Ritchey, M., Bass, J., Kohrt, B., Augustinavicius, J., Michalopoulos, L., … Bolton, P. (2017). How is depression experienced around the world? A systematic review of qualitative literature. Social Science & Medicine, 183, 151–162.

Hekler, E., Tiro, J. A., Hunter, C. M., & Nebeker, C. (2020). Precision Health: The Role of the Social and Behavioral Sciences in Advancing the Vision. Annals of Behavioral Medicine, 54(11), 805–826.

James, S. L., Abate, D., Abate, K. H., Abay, S. M., Abbafati, C., Abbasi, N., … Murray, C. J. L. (2018). Global, regional, and national incidence, prevalence, and years lived with disability for 354 diseases and injuries for 195 countries and territories, 1990–2017: a systematic analysis for the Global Burden of Disease Study 2017. The Lancet, 392(10159), 1789–1858.

Kornfield, R., Zhang, R., Nicholas, J., Schueller, S. M., Cambo, S. A., Mohr, D. C., & Reddy, M. (2020). "Energy is a Finite Resource": Designing Technology to Support Individuals across Fluctuating Symptoms of Depression. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems.

Liu, Q., He, H., Yang, J., Feng, X., Zhao, F., & Lyu, J. (2020). Changes in the global burden of depression from 1990 to 2017: Findings from the Global Burden of Disease study. Journal of Psychiatric Research, 126, 134–140.

Loncar-Turukalo, T., Zdravevski, E., Silva, J. M. D., Chouvarda, I., & Trajkovik, V. (2019). Literature on Wearable Technology for Connected Health: Scoping Review of Research Trends, Advances, and Barriers. Journal of Medical Internet Research, 21(9).

Lopez, A., Schwenk, S., Schneck, C. D., Griffin, R. J., & Mishkind, M. C. (2019). Technology-Based Mental Health Treatment and the Impact on the Therapeutic Alliance. Current Psychiatry Reports, 21(8).

Lucas, G. M., Gratch, J., King, A., & Morency, L.-P. (2014). It’s only a computer: Virtual humans increase willingness to disclose. Computers in Human Behavior, 37, 94–100.

Miner, A. S., Milstein, A., & Hancock, J. T. (2017). Talking to Machines About Personal Mental Health Problems. JAMA, 318(13), 1217.

Miner, A., Chow, A., Adler, S., Zaitsev, I., Tero, P., Darcy, A., & Paepcke, A. (2016). Conversational Agents and Mental Health. Proceedings of the Fourth International Conference on Human Agent Interaction - HAI '16.

Montenegro, J. L. Z., Costa, C. A. D., & Righi, R. D. R. (2019). Survey of conversational agents in health. Expert Systems with Applications, 129, 56–67.

Ormel, J., Cuijpers, P., Jorm, A. F., & Schoevers, R. (2019). Prevention of depression will only succeed when it is structurally embedded and targets big determinants. World Psychiatry, 18(1), 111–112.

Parkar, S. (2015). Elderly Mental Health: Needs. Mens Sana Monographs, 13(1), 91.

Provoost, S., Lau, H. M., Ruwaard, J., & Riper, H. (2017). Embodied Conversational Agents in Clinical Psychology: A Scoping Review. Journal of Medical Internet Research, 19(5).

Retterath, A., & Braun, R. (2020). Benchmarking Venture Capital Databases. Available at SSRN.

Schachner, T., Keller, R., & Wangenheim, F. v. (2020). Artificial Intelligence-Based Conversational Agents for Chronic Conditions: Systematic Literature Review. Journal of Medical Internet Research, 22(9).

Scholten, M. R., Kelders, S. M., & Gemert-Pijnen, J. E. V. (2017). Self-Guided Web-Based Interventions: Scoping Review on User Needs and the Potential of Embodied Conversational Agents to Address Them. Journal of Medical Internet Research, 19(11).

Schuetzler, R. M., Giboney, J. S., Grimes, G. M., & Nunamaker, J. F. (2018). The influence of conversational agent embodiment and conversational relevance on socially desirable responding. Decision Support Systems, 114, 94–102.

Segal, Z. V., Williams, J. M. G., & Teasdale, J. D. (2018). Mindfulness-based cognitive therapy for depression. The Guilford Press.

Shah, R. N., & Berry, O. O. (2020). The Rise of Venture Capital Investing in Mental Health. JAMA Psychiatry.

Stal, S. T., Kramer, L. L., Tabak, M., Akker, H. O. D., & Hermens, H. (2020). Design Features of Embodied Conversational Agents in eHealth: a Literature Review. International Journal of Human-Computer Studies, 138, 102409.

Tudor Car, L., Dhinagaran, D. A., Kyaw, B. M., Kowatsch, T., Joty, S., Theng, Y.-L., & Atun, R. (2020). Conversational Agents in Health Care: Scoping Review and Conceptual Analysis. Journal of Medical Internet Research, 22(8).

Twomey, C., O’Reilly, G., & Meyer, B. (2017). Effectiveness of an individually-tailored computerised CBT programme (Deprexis) for depression: A meta-analysis. Psychiatry Research, 256, 371–377.

Vaidyam, A. N., Wisniewski, H., Halamka, J. D., Keshavan, M. S., & Torous, J. B. (2019). Chatbots and Conversational Agents in Mental Health: A Review of the Psychiatric Landscape. The Canadian Journal of Psychiatry, 070674371982897.

Vergunst, F. K., Fekadu, A., Wooderson, S. C., Tunnard, C. S., Rane, L. J., Markopoulou, K., & Cleare, A. J. (2013). Longitudinal course of symptom severity and fluctuation in patients with treatment-resistant unipolar and bipolar depression. Psychiatry Research, 207(3), 143–149.

Weizenbaum, J. (1966). ELIZA—a computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45.

Scale-IT-up 2021 - Workshop on Scaling-Up Healthcare with Conversational Agents
