Greedy Scheduling: A Neural Network Method to Reduce Task Failure in Software Crowdsourcing

Jordan Urbaczek¹, Razieh Saremi¹ᵃ, Mostaan Lotfalian Saremi¹ᵇ and Julian Togelius²ᶜ

¹ School of Systems and Enterprises, Stevens Institute of Technology, Hoboken, NJ, U.S.A.

² Tandon School of Engineering, New York University, NYC, NY, U.S.A.

Keywords:

Crowdsourcing, Task Scheduling, Task Similarity, Task Failure, Neural Network, TopCoder.

Abstract:

Highly dynamic and competitive crowdsourcing software development (CSD) marketplaces may experience

task failure due to unforeseen reasons, such as increased competition over shared supplier resources, or un-

certainty associated with a dynamic worker supply. Existing analysis reveals an average task failure ratio of

15.7% in software crowdsourcing markets. These factors lead to an increasing need for scheduling support for CSD

managers to improve the efficiency and predictability of crowdsourcing processes and outcomes. To that end,

this research proposes a task scheduling method based on neural networks, and develops a system that can

predict and analyze task failure probability upon arrival. More specifically, the model uses a range of input

variables, including the number of open tasks in the platform, the average task similarity between arriving

tasks and open tasks, the winner’s monetary prize, and task duration, to predict the probability of task failure

on the planned arrival date and two surplus days. This prediction will offer the recommended day associated

with lowest task failure probability to post the task. The model on average provided 4% lower failure probabil-

ity per project. The proposed model empowers crowdsourcing managers to explore potential crowdsourcing

outcomes with respect to different task arrival strategies.

1 INTRODUCTION

Crowdsourced Software Development (CSD) has

been used increasingly to develop software applications (Stol and Fitzgerald, 2014). Crowdsourcing mini software development

tasks leads to accelerated development (Saremi

et al., 2017). In order for a CSD platform to func-

tion efficiently, it must address both the needs of task

providers as demands and crowd workers as suppli-

ers. Any kind of skew in addressing these needs

leads to task failure in the CSD platform. Generally,

planning for CSD tasks that are complex, indepen-

dent, and require a significant amount of time, effort,

and expertise (Stol and Fitzgerald, 2014) is challeng-

ing. For the task provider, requesting a crowdsourc-

ing service is even more challenging due to the uncer-

tainty of the similarity among available tasks in the

platform and the arrival of new tasks (Difallah et al.,

2016)(Saremi, 2018)(Saremi et al., 2019). The availability of crowd workers’ skill sets and consistency of performance history is also uncertain (Karim et al., 2016)(Zaharia et al., 2010). These factors raise the issue of receiving qualified submissions, since crowd workers may be interested in multiple tasks from different task providers based on their individual utility factors (Faradani et al., 2011).

a https://orcid.org/0000-0002-7607-6453

b https://orcid.org/0000-0001-8657-7748

c https://orcid.org/0000-0003-3128-4598

It has been reported that crowd workers are

more interested in working on tasks with similar

concepts, monetary prize, technologies, complexi-

ties, priorities, and duration (Gordon, 1961)(Faradani

et al., 2011)(Yang and Saremi, 2015)(Difallah et al.,

2016)(Mejorado et al., 2020). However, attracting

workers to a large group of similar tasks may cause

zero registration, zero submissions, or unqualified

submissions for some tasks due to lack of availability from workers (Khanfor et al., 2017)(Khazankin et al., 2011). Moreover, a lower level of task similarity in the platform leads to a higher chance of task success and workers’ elasticity (Saremi et al., 2020).

For example, in Topcoder, a well-known crowdsourcing software platform, an average of 13 tasks arrive daily and are added to an average list of 200 existing tasks. There is an average of 137 active workers to take the tasks at that period, which leads to an average of 25 failed tasks each day. According to this example, there will be a long queue of tasks waiting to be taken. Considering the fixed submission date, such a waiting line may result in failed tasks. These challenges have traditionally been addressed with task scheduling methods.

Urbaczek, J., Saremi, R., Saremi, M. and Togelius, J.

Greedy Scheduling: A Neural Network Method to Reduce Task Failure in Software Crowdsourcing.

DOI: 10.5220/0010407604100419

In Proceedings of the 23rd International Conference on Enterprise Information Systems (ICEIS 2021) - Volume 1, pages 410-419

ISBN: 978-989-758-509-8

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The objective of this study is to provide a task

schedule recommendation framework for a software

crowdsourcing platform in order to improve the suc-

cess and efficiency of software crowdsourcing. In this

study, we first present a motivational example to ex-

plain the current task status in a software crowdsourc-

ing platform. Then we propose a task scheduling ar-

chitecture using a neural network strategy to reduce

probability of task failure in the platform.

More specifically, the system uses a range of input

variables, including the number of open tasks in the

platform, the average task similarity between arriving

tasks and open tasks, the winner’s monetary prize, and

task duration, to predict the probability of task failure

on the planned arrival date and two surplus days. This

prediction will offer the recommended day associated with the lowest task failure probability to post the

task. The proposed system represents a task schedul-

ing method for competitive crowdsourcing platforms

based on the workflow of Topcoder, one of the pri-

mary software crowdsourcing platforms. The evalua-

tion results provided on average 4% lower task failure

probability.

The remainder of this paper is structured as follows. Section 2 introduces a motivating example that inspires this study. Section 3 presents background and a review of available works. Section 4 outlines our research design and methodology. Section 5 presents the case study and model evaluation, and Section 6 presents the conclusion and outlines a number of directions for future work.

2 MOTIVATING EXAMPLE

The motivating example illustrates a real crowdsourcing software development (CSD) project on the TopCoder platform. It comprised 41 tasks with a total project duration of 207 days, with an average of 8 days per task. The project experienced a 47% task failure ratio, which means 19 of the 41 tasks failed. 6 tasks failed due to client requests (i.e., 14% failure) and 7 tasks failed due to failed requirements (17% failure). The remaining 8 tasks (i.e., 14% failure) failed due to zero submissions.

Figure 1: Overview of Tasks’ Status and Similarity Level in the Platform.

Figure 2: Number of Open Tasks in Platform upon Task Arrival.

If we ignore the tasks that failed due to client requests and failed requirements, 28 tasks remain (see Figure 1). Deeper analysis reveals that most of the

failed tasks entered the task pool with a similarity

above 80% when compared with the available tasks.

Also, as Figure 2 illustrates, each task competes with

an average of 145 similar open tasks upon arrival. The

number of open tasks can directly impact the ability of

a task to attract suitable workers and lead to task fail-

ure. It is reported that the degree of task similarity in

the pool of tasks directly impacts the task competition

level to attract registrants and task success (Saremi

et al., 2020).

It seems task failure is a result of task competi-

tion level in the platform. This observation motivates

us to investigate more and provide a task scheduling

recommendation model which helps reduce the prob-

ability of task failure in the platform.

3 RELATED WORK

3.1 Task Scheduling in Crowdsourcing

Different characteristics of machine and human be-

havior create delays in product release(Ruhe and


Saliu, 2005). This phenomenon leads to a lack of

systematic processes to balance the delivery of fea-

tures with the available resources (Ruhe and Saliu,

2005). Therefore, improper scheduling would result

in task starvation (Faradani et al., 2011). Parallelism

in scheduling is a great method to create the chance

of utilizing a greater pool of workers (Ngo-The and

Ruhe, 2008; Saremi and Yang, 2015). Parallelism en-

courages workers to specialize and complete tasks in

a shorter period. The method also promotes solutions

that benefit the requester and can help researchers to

clearly understand how workers decide to compete on

a task and analyze the crowd workers performance

(Faradani et al., 2011). Shorter schedule planning can

be one of the most notable advantages of using CSD

for managers (Lakhani et al., 2010).

Batching tasks in similar groups is another effective method to reduce the complexity of tasks, and it can dramatically reduce costs (Marcus et al., 2011). Batching crowdsourcing tasks would lead to a faster result than approaches which keep workers separate (Bernstein et al., 2011). There is a theoretical minimum batch size for every project according

to the principles of product development flow (Rein-

ertsen, 2009). To some extent, the success of soft-

ware crowdsourcing is associated with reduced batch

size in small tasks. Besides, the delay scheduling

method (Zaharia et al., 2010) was specially designed

for crowdsourced projects to maximize the probabil-

ity that a worker receives tasks from the same batch of

tasks they were performing. An extension of this idea introduced a new method called “fair sharing schedule” (Ghodsi et al., 2011). In this method, various resources would be shared among all tasks with different demands to ensure that all tasks would receive the same amount of resources. For example, this method was used in Hadoop YARN. Later, Weighted Fair Sharing (WFS) (Difallah et al., 2016) was presented as a

method to schedule batches based on their priority.

Tasks with higher priority are to be introduced first.

Another proposed crowd scheduling method is

based on the quality of service (QOS) (Khazankin

et al., 2011). This is a skill-based scheduling method

with the purpose of minimizing scheduling while

maximizing quality by assigning the task to the most

available qualified worker. This scheme was created

by extending standards of Web Service Level Agreement (WSLA) (Ludwig et al., 2003). The third available method is HIT-Bundle (Difallah et al., 2016). HIT-Bundle is a batch container which schedules heterogeneous tasks into the platform from different batches. This method makes for a higher positive outcome by applying different scheduling strategies at the same time. The method was most recently applied in helping crowdsourcing-based service providers meet completion time SLAs (Hirth et al., 2019). The system works by recording the oldest task waiting time and running a simulative evaluation to recommend the best scheduling strategy for reducing the task failure ratio.

3.2 Task Similarity in Crowdsourcing

Generally, workers tend to optimize their personal

utility factor when registering for a task (Faradani

et al., 2011). It is reported that workers are more in-

terested in working on similar tasks in terms of mone-

tary prize (Yang and Saremi, 2015), context and tech-

nology (Difallah et al., 2016), and complexity level.

Context switch generates a reduction in workers’ effi-

ciency (Difallah et al., 2016). However, workers usu-

ally try to register for a greater number of tasks than

they can complete (Yang et al., 2016). It is reported

that a high task similarity level negatively impacts

task competition level and team elasticity (Saremi

et al., 2020). A combination of these observations led

to task failure due to: 1) receiving zero registrations

for a task based on a low degree of similar tasks and

a lack of available skillful workers (Yang and Saremi,

2015), and 2) receiving non-qualified submissions or

zero submissions based on a lack of time to work on

all the tasks registered by the worker (Archak, 2010).

3.3 Challenges in Crowdsourcing

Considering the highest rate for task completion and

submission acceptance, software managers will be

more concerned about the risks of adopting crowd-

sourcing. Therefore, there is a need for a better

decision-making system to analyze and control the

risks of insufficient competition and poor submissions

due to the attraction of untrustworthy workers. A tra-

ditional method of addressing this problem in the soft-

ware industry is task scheduling. Scheduling is help-

ful in prioritizing access to resources. It can help man-

agers optimize task execution in the platform to attract

the most reliable and trustworthy workers. Normally,

in traditional methods, task requirements and phases

are fixed, while cost and time are flexible. In a time-

boxed system, time and cost are fixed, while task re-

quirements and phases are flexible (Cooper and Som-

mer, 2016). However, in CSD all three variables are

flexible. This characteristic creates a huge advantage

in crowdsourcing software projects.

Generally, improper scheduling could lead to task

starvation (Faradani et al., 2011), since workers with

greater abilities tend to compete with low skilled

workers (Archak, 2010). In this case, users are more

ICEIS 2021 - 23rd International Conference on Enterprise Information Systems

412

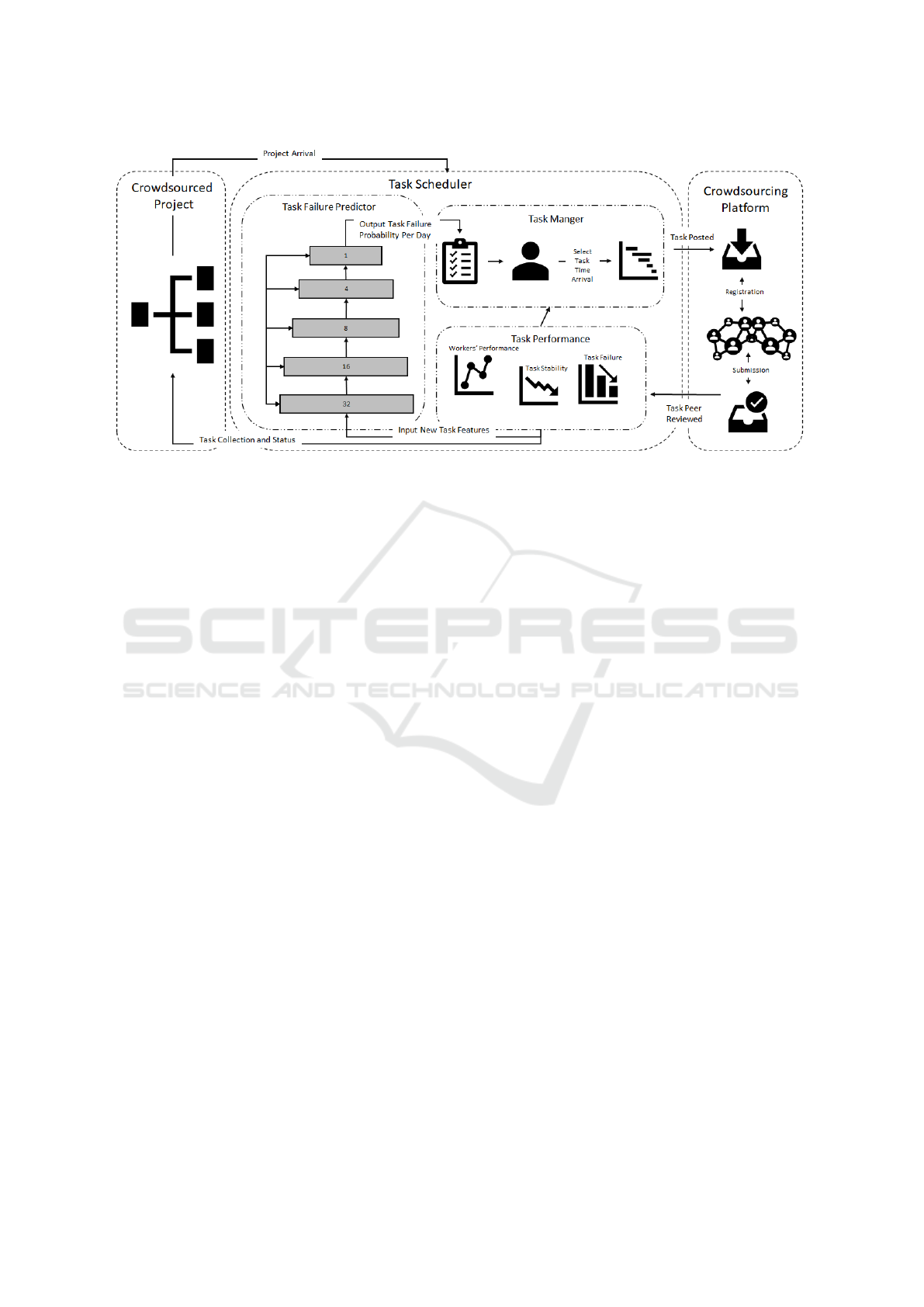

Figure 3: Overview of Scheduling Architecture.

likely to choose tasks with fewer competitors (Yang

et al., 2008). Also, workers who intentionally choose

to participate in less popular tasks could potentially

enhance winning probabilities, even if workers share

similar expertise. This creates severe problems for crowd workers’ trust in continuing to work on a task and causes many dropped and non-completed tasks. Moreover, tasks with relatively

lower monetary prizes have a high probability of reg-

istration and completion, which results in only 30%

of problems in the platform being solved (Rapoport

and Chammah, 1966). Lower priced tasks may attract higher numbers of workers to compete and consequently increase the chance of starvation for more expensive tasks and project failure.

The above issues indicate the importance of task

scheduling in the platform in order to attract the right

amount of trustworthy workers and expertise that will

result in a shortened task release time.

4 RESEARCH DESIGN AND

METHODOLOGY

To solve the scheduling problem, we designed a model to predict the probability of task failure and recommend an arrival date by comparing predicted task failure probabilities. We utilized a neural network model to predict the probability of task failure per day. Then we added a search-based optimizer to recommend the arrival day with the lowest failure probability. This architecture can be operated on any crowdsourcing platform; however, we focused on TopCoder

as the target platform. In this method, task arrival date

is suggested based on the degree of task similarity in

the platform and the reliability of available workers

to make a valid submission. Figure 3 presents the

overview of the task scheduling architecture. Each

task is uploaded to the task scheduler. The task failure predictor analyzes the probability of failure of an arriving task in the platform based on the number of similar tasks available that day, average similarity, task duration, and associated monetary prize. Then the model reports the probability of task failure for the assigned date and the two surplus days. In the next step, the task manager selects the most suitable arrival

date among the three recommended days and sched-

ules the task to be posted. The result of task perfor-

mance in the platform is to be collected and reported

to the client along with the input used to recommend

the posting date.
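The selection step of this architecture can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `predict_failure` is a hypothetical stand-in for the trained task failure predictor, and the function name, parameter names, and toy probabilities are invented for the example.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical stand-in for the trained predictor: maps a day offset
# (0 = planned arrival day, 1 and 2 = surplus days) to a failure probability.
FailurePredictor = Callable[[int], float]


def recommend_arrival_day(
    predict_failure: FailurePredictor,
    candidate_offsets: Sequence[int] = (0, 1, 2),
) -> Tuple[int, float]:
    """Return the day offset with the lowest predicted failure probability.

    Ties are resolved in favor of the earliest day, so a task is never
    delayed without a predicted benefit (an assumption of this sketch).
    """
    best_offset = candidate_offsets[0]
    best_prob = predict_failure(best_offset)
    for offset in candidate_offsets[1:]:
        prob = predict_failure(offset)
        if prob < best_prob:  # strict comparison keeps the earliest day on ties
            best_offset, best_prob = offset, prob
    return best_offset, best_prob


# Toy usage with made-up probabilities for the three candidate days.
toy_predictions = {0: 0.86, 1: 0.81, 2: 0.83}
day, prob = recommend_arrival_day(lambda offset: toy_predictions[offset])
```

With these toy values the sketch recommends posting one day after the planned date, because that day carries the smallest predicted failure probability.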

4.1 Dataset

The gathered data set contains 403 individual projects

including 4,908 component development tasks and

8,108 workers from Jan 2014 to Feb 2015, extracted

from the Topcoder website. Tasks are uploaded as competitions in the platform, and crowd software workers register for and complete the challenges. On average, most of the tasks have a life cycle of 14 days from the first day of registration to the submission’s deadline. When a worker submits their final files, their submission is reviewed by experts and labeled as a valid or invalid submission. Table 1 summarizes the task features available in the data set.


Table 1: Summary of Metrics Definition.

| Type | Metrics | Definition |
|---|---|---|
| Tasks attributes | Task registration start date (TR) | The first day of task arrival in the platform and when workers can start registering for it. Range: (0, ∞) |
| | Task submission end date (TS) | Deadline by which all workers who registered for task have to submit their final results. Range: (0, ∞) |
| | Task registration end date (TRE) | The last day that a task is available to be registered for. Range: (0, ∞) |
| | Monetary Prize (P) | Monetary prize (USD) offered for completing the task and is found in task description. Range: (0, ∞) |
| | Technology (Tech) | Required programming language to perform the task. Range: (0, #Tech) |
| | Platforms (PLT) | Number of platforms used in task. Range: (0, ∞) |
| | Task Status | Completed or failed tasks |
| Tasks performance | # Registration (R) | Number of registrants that sign up to compete in completing a task before registration deadline. Range: (0, ∞) |
| | # Submissions (S) | Number of submissions that a task receives before submission deadline. Range: (0, # registrants] |
| | # Valid Submissions (VS) | Number of submissions that a task receives by its submission deadline that have passed the peer review. Range: (0, # registrants] |

4.2 Input to the Task Scheduler

It is reported that task monetary prize and task

duration (Faradani et al., 2011)(Yang and Saremi,

2015)(Lotfalian Saremi et al., 2020) are the most im-

portant factors in raising competition level for a task.

In this research, we add the variables considered in our observations (i.e., number of open tasks and average task similarity) to the reported list of important factors as inputs of the presented model. To help in understanding the task scheduling tool, the input variables of the model, including average task similarity, task duration, task monetary prize, and number of open tasks, are defined below. A

definition for the probability of task failure in the plat-

form, used as the reward function to train the neural

network model, can also be found below.

First we need to understand the degree of task

similarity among a set of simultaneously open tasks

in the platform.

Def.1: Task Similarity ($Sim_{i,j}$) between two tasks $T_i$ and $T_j$ is defined as the weighted sum of all local similarities across the features listed in Table 2:

$$Sim_{i,j} = W_1 \cdot Dist_1(T_i, T_j) + \dots + W_n \cdot Dist_n(T_i, T_j)$$

Def.2: Task Duration ($D_i$) is the total available time from task $i$'s registration start date ($TR_i$) to its submission end date ($TS_i$):

$$D_i = \sum_{i=0}^{n} TS_i - TR_i$$

Def.3: Actual Prize ($P_i$) is the summation of the prizes that the winner ($PW_i$) and runner-up ($PR_i$) will receive after passing peer review.

$$P_i = \sum_{i=0}^{n} PW_i + PR_i$$

Def.4: Number of Open Tasks per day ($NOT_d$) is the number of tasks ($T_j$) that are open for registration when a new task ($T_i$) arrives on the platform.

$$NOT_d = \sum_{j=0}^{n} T_j, \quad \text{where } TRE_j \geq TR_i$$

Def.5: Average Task Similarity per day ($ATS_d$) is the average similarity score ($Sim_{i,j}$) between the new arriving task ($T_i$) and currently open tasks ($T_j$) on the platform.

$$ATS_d = \frac{\sum_{i,j=0}^{n} Sim_{i,j}}{NOT_d}, \quad \text{where } TRE_j \geq TR_i$$

Def.6: Task Failure Rate per day ($TF_d$) is the probability that a new arriving task ($T_i$) does not receive a valid submission and fails given its arrival date.

$$TF_d = 1 - \frac{\sum_{i=0}^{n} VS_i}{NOT_i}, \quad \text{where } TRE_j \geq TR_i$$
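As an illustration of Def.4 and Def.5, the following sketch counts the open tasks on the arrival day of a new task and averages a similarity score over them. It assumes a hypothetical `Task` record and a caller-supplied similarity function; the field names, dates, and toy similarity scores are invented for the example and are not taken from the data set.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, List


@dataclass
class Task:
    """Hypothetical task record holding only the dates used below."""
    name: str
    tr: date   # registration start date (TR)
    tre: date  # registration end date (TRE)


def open_tasks_on_arrival(new_task: Task, pool: List[Task]) -> List[Task]:
    """Tasks T_j with TRE_j >= TR_i, the condition stated in Def.4."""
    return [t for t in pool if t.tre >= new_task.tr]


def average_task_similarity(
    new_task: Task,
    pool: List[Task],
    sim: Callable[[Task, Task], float],
) -> float:
    """ATS_d: mean Sim(i, j) over the open tasks (Def.5).

    Returns 0.0 when no task is open, which is an assumption of this sketch.
    """
    open_tasks = open_tasks_on_arrival(new_task, pool)
    if not open_tasks:
        return 0.0
    return sum(sim(new_task, t) for t in open_tasks) / len(open_tasks)


# Toy usage: one task already closed for registration, two still open.
pool = [
    Task("closed", date(2014, 3, 1), date(2014, 3, 9)),
    Task("open_a", date(2014, 3, 5), date(2014, 3, 10)),
    Task("open_b", date(2014, 3, 8), date(2014, 3, 15)),
]
new_task = Task("new", date(2014, 3, 10), date(2014, 3, 12))
toy_sim = {"open_a": 0.5, "open_b": 0.75}  # made-up similarity scores

not_d = len(open_tasks_on_arrival(new_task, pool))
ats_d = average_task_similarity(new_task, pool, lambda a, b: toy_sim[b.name])
```

Here `not_d` is 2 and `ats_d` is the mean of the two toy scores. The filter follows the stated condition literally and does not check whether a task in the pool has already started registration.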


Table 2: Features used to measure task distance.

| Feature | Description of distance measure $Dist_i$ |
|---|---|
| Task Monetary Prize (P) | $(Prize_i - Prize_j) / Prize_{Max}$ |
| Task registration start date (TR) | $(TR_i - TR_j) / DiffTR_{Max}$ |
| Task submission end date (TS) | $(TS_i - TS_j) / DiffTS_{Max}$ |
| Task Type | $(Type_i == Type_j)\ ?\ 1 : 0$ |
| Technology (Tech) | $Match(Tech_i : Tech_j) / NumberOfTechs_{Max}$ |
| Platform (PL) | $(PL_i == PL_j)\ ?\ 1 : 0$ |
| Detailed Requirement | $(Req_i \cdot Req_j) / (\lvert Req_i \rvert \cdot \lvert Req_j \rvert)$ |
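The weighted sum in Def.1 can be sketched for the match-based and requirement-based rows of Table 2 as follows. This is a hedged illustration: the weights are hypothetical (the paper does not list them), the requirement vectors are assumed to be numeric term vectors, and the date and prize rows are left out of the sketch.

```python
import math
from typing import Dict, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two requirement vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def exact_match(a: object, b: object) -> float:
    """The (x_i == x_j) ? 1 : 0 measure used for task type and platform."""
    return 1.0 if a == b else 0.0


def weighted_similarity(
    local_scores: Dict[str, float],
    weights: Dict[str, float],
) -> float:
    """Sim_{i,j} = sum over features k of W_k * Dist_k(T_i, T_j)."""
    return sum(weights[name] * local_scores[name] for name in weights)


# Toy usage with hypothetical weights and two made-up tasks.
weights = {"type": 0.25, "platform": 0.25, "requirement": 0.5}
local_scores = {
    "type": exact_match("Code", "Code"),
    "platform": exact_match("Web", "Mobile"),
    "requirement": cosine([1.0, 0.0, 1.0], [1.0, 0.0, 1.0]),
}
sim_ij = weighted_similarity(local_scores, weights)
```

In this toy case the task types match, the platforms differ, and the requirement vectors are identical, so the weighted score is close to 0.75.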

4.3 Output of the Task Scheduler

The goal of the proposed model is not only to predict the probability of failure for a new arriving task given the arrival date, but also to recommend the most suitable posting day, within a surplus of two days, to decrease the task failure rate. To determine the optimal arrival date, we run the model and evaluate the result for the arrival day, one day after, and two days after. To predict the probability

of task failure in future days, we need to determine

the number of expected arriving tasks and associated

task similarity scores compared to the open tasks in

the future.

Def.7: Rate of Task Arrival per day ($TA_d$): Considering that the registration duration (difference between opening and closing dates) for each task is known at any given point in time, the rate of task arrival per day is defined as the ratio of the number of open tasks per day $NOT_d$ over the total duration of open tasks per day $D_d$.

$$TA_d = \frac{NOT_d}{\sum_{j=0}^{n} D_j}$$

By knowing the rate of task arrival per day, the num-

ber of open tasks for future days can be determined.

Def.8: Number of Open Tasks in the Future ($OT_{fut}$) is the number of tasks that are still open given a future date ($NOT_{fut}$), in addition to the rate of task arrival per day ($TA_d$) multiplied by the number of days into the future ($\Delta T_{days}$).

$$OT_{fut} = NOT_{fut} + TA_d \cdot \Delta T_{days}$$
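Def.7 and Def.8 can be worked through numerically with the short sketch below. The function names and the toy durations are assumptions made for illustration; the arithmetic follows the two formulas as stated.

```python
from typing import Sequence


def task_arrival_rate(open_task_durations: Sequence[float]) -> float:
    """TA_d = NOT_d / sum_j D_j, with NOT_d taken as the number of open tasks."""
    total_duration = sum(open_task_durations)
    if total_duration <= 0:
        raise ValueError("total duration of open tasks must be positive")
    return len(open_task_durations) / total_duration


def open_tasks_in_future(
    still_open: int,
    arrival_rate: float,
    days_ahead: int,
) -> float:
    """OT_fut = NOT_fut + TA_d * dT_days."""
    return still_open + arrival_rate * days_ahead


# Toy usage: four open tasks with made-up durations of 4, 4, 8, and 16 days.
ta_d = task_arrival_rate([4, 4, 8, 16])      # 4 tasks / 32 days
ot_fut = open_tasks_in_future(3, ta_d, 2)    # 3 tasks still open in two days
```

With these toy numbers the arrival rate is 0.125 tasks per day, so two days ahead the expected number of open tasks is 3 + 0.125 * 2 = 3.25.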

Also there is a need to know the average task similar-

ity in future days.

Def.9: Average Task Similarity in the Future ($ATS_{fut}$) is defined as the number of tasks that are still open given a future date ($NOT_{fut}$) multiplied by the average task similarity of this group of tasks ($ATS_{fut}$), plus the average task similarity of the current day ($ATS_d$) multiplied by the rate of task arrival per day ($TA_d$) and the number of days into the future ($\Delta T_{days}$).

$$ATS_{fut} = NOT_{fut} \cdot ATS_{fut} + ATS_d \cdot TA_d \cdot \Delta T_{days}$$

4.4 Task Failure Predictor

A fully connected feed forward neural network was

trained to predict task failure probability based on the

four features described above. The network is con-

figured with five layers of size 32, 16, 8, 4, 2, and

1. Training was implemented in batch sizes of 8

for 50 epochs and the mean-squared error loss func-

tion was used. Before running the model, the data

set was split into a train/test group and validation

group. The train/test group was 80% of the data set

and the validation group was 20%. We applied a K-

fold (K=10) cross-validation method on the train/test

group to train the prediction of task failure probability

in the neural network model. For each fold, the 10%

testing portion of the train/test group was cycled and

the remaining 90% was used as train data. We used

early stopping to avoid overfitting. The trained model

provided a loss equal to 0.04 with standard deviation

of 0.002.
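The data partitioning described above (an 80/20 split followed by 10-fold cross-validation on the train/test group) can be sketched with index lists alone. This is an illustrative sketch only: the shuffling, the random seed, and the way folds are formed are assumptions, since the paper does not specify them, and the network training itself is omitted.

```python
import random
from typing import Iterator, List, Tuple


def holdout_split(
    n_samples: int,
    holdout_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[int], List[int]]:
    """Shuffle sample indices and split them into train/test and validation groups."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seed is an assumption of this sketch
    n_holdout = int(round(n_samples * holdout_fraction))
    return indices[n_holdout:], indices[:n_holdout]


def k_fold(indices: List[int], k: int = 10) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists; each fold serves as the test portion once."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, test_idx


# Usage with the data set size reported in Section 4.1 (4,908 tasks).
train_test_idx, validation_idx = holdout_split(4908)
folds = list(k_fold(train_test_idx, k=10))
```

Each of the ten folds holds roughly 10% of the train/test group, and the remaining 90% is used for training in that round.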

The task manager then uses the output of the task

failure predictor to recommend the task arrival day

with the minimum failure probability for the schedule

plan. Applying the presented model to the full data set introduced in Section 4.1, i.e., all 4,908 tasks, yielded a reduction of 8 percentage points (i.e., from 75% to 67%) in the probability of task failure in the platform.

5 CASE STUDY AND MODEL

EVALUATION

To study the applicability of the proposed method in

the real world, we used the system to reschedule the

project from the motivating example. (This example

is a small part of the full data set; results on the full

data set are presented above.) The reschedule result is

discussed below:


5.1 Result of the Task Failure Predictor

After testing, the data from the motivation example

was provided to the system for evaluation with the

goal of figuring out the arrival day with the lowest

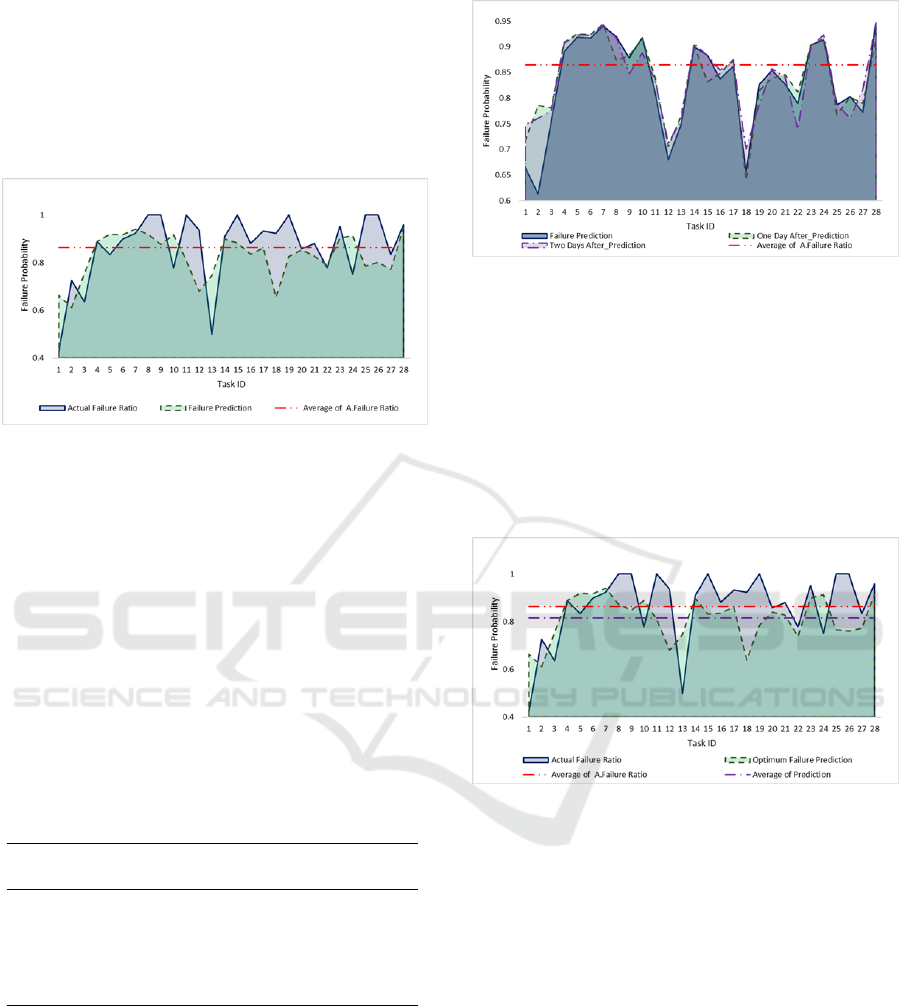

failure probability per task. Figure 4 presents the ini-

tial results of the model.

Figure 4: Comparison of initial Task Failure Prediction and

Actual Failure.

As shown in Figure 4, the presented model’s arrival date recommendations for the tasks in the sample provide an average failure prediction of 0.83, which is 0.03 lower than the actual scheduling failure. The initial failure probability prediction by the model is closer to the mean of actual failure, with a standard deviation of 0.09. The duration of the project extended by an extra day under the new scheduling recommendation, while the probability of task failure was reduced by almost 4%. Table 3 summarizes the statistics of actual failure and predicted failure for the project.

Table 3: Summary of actual and predicted failure probabilities of project.

| Statistics | Actual P(failure) | Predicted P(failure) |
|---|---|---|
| Min | 0.42 | 0.61 |
| Max | 1 | 0.94 |
| Mean | 0.86 | 0.83 |
| Median | 0.90 | 0.84 |
| Std | 0.15 | 0.09 |

In the next step, the model provides predictions of task failure probability for one and two days after the actual arrival date of the task. This result is used by the task manager to determine if the task should be posted in the future instead of today. Figure 5 illustrates the result of the failure prediction for all three dates.

Tasks 8, 15, 18, 20, 25, and 28 received the lowest prediction of failure probability on the second day with an average prediction of 0.81. Tasks 9, 10, 15, 22, 23, and 26 received the lowest prediction of failure probability on day 3 with an average prediction of 0.8. The rest of the tasks received the lowest prediction of failure probability on the first day with an average of 0.81. However, not only was the average of all the three predictions lower than the actual failure prediction, but also most of the prediction points in all three days were lower than the average of actual failure.

Figure 5: Details of Failure Prediction per Task for all level of predictions.

Figure 6: Comparison of Probability of Task Failure Predic-

tion for final Schedule and Actual Schedule.

With access to the 3-day outlook of task failure predictions from the model and the evaluation in Figure

5, the task manager can more effectively schedule

tasks by choosing the minimum task failure proba-

bility from the model results for each task. Figure 6

presents the failure probability for the project follow-

ing the lowest failure prediction per task in compar-

ison with the actual task failure. It is clear that the

model’s recommended schedule provides a lower and

more stationary probability of task failure with an av-

erage of 0.81, while the average probability of task

failure for the actual task schedule is 0.86. The rec-

ommended task scheduling plan provides a minimum

failure prediction of 0.61 and maximum of 0.94 with

standard deviation of 0.09. The resulting accuracy of

the model is 0.896.

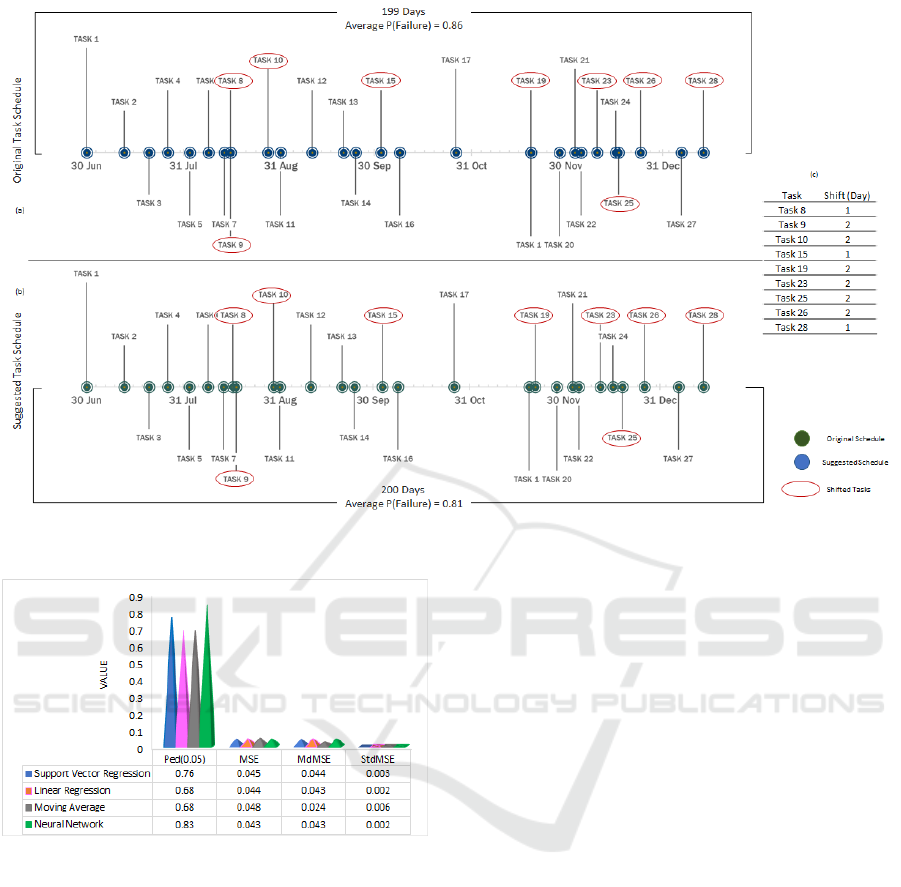

Figure 8 illustrates the summary of original task

ICEIS 2021 - 23rd International Conference on Enterprise Information Systems

416

Figure 7: MSE for Probability of Task Failure per Task.

timeline v.s. final task time line. 8(a) presents

the original project timeline, and 8(b) shows the

suggested project timeline by the presented model.

8(c)represents the duration of each task when shifted

to achieve lowest failure probability.

To evaluate the model performance, we applied

the Mean Square Error (MSE) metric to estimate the

difference between the actual failure probability and

the predicted failure probability of the same arrival

day according to available data. Figure 7 presents the

MSE for failure prediction per task. The average MSE

is 0.09 with a minimum of 0.001 for task 3 and a max-

imum of 0.23 for task 1, with a standard deviation of

0.06.

5.2 Model Evaluation

To compare the performance of the proposed model,

we applied a K-fold (K=10) cross-validation on the

data set to predict the probability of task failure based

on four different prediction approaches. The esti-

mated probabilities of task failure are used to compute

four popular performance measures that are widely

used in current prediction systems for software devel-

opment: 1- Mean Square Error (MSE), 2- Median of

Mean Square Error (MdMSE), 3- Standard Deviation

of mean Square Error (StdMSE), 4- Percentage of the

estimates with Mean Square Error less than or equal

to N% (Pred(N)).
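The four measures can be computed from paired actual and predicted failure probabilities as in the sketch below. It is a minimal illustration with assumptions stated in the comments: the population standard deviation is used, Pred(N) is returned as a fraction rather than a percentage, and the toy values are invented.

```python
import statistics
from typing import Dict, List, Sequence


def squared_errors(actual: Sequence[float], predicted: Sequence[float]) -> List[float]:
    """Per-task squared error between actual and predicted failure probability."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return [(a - p) ** 2 for a, p in zip(actual, predicted)]


def evaluation_summary(
    actual: Sequence[float],
    predicted: Sequence[float],
    threshold: float = 0.05,
) -> Dict[str, float]:
    """MSE, MdMSE, StdMSE, and Pred(N) over the per-task squared errors."""
    errors = squared_errors(actual, predicted)
    return {
        "MSE": statistics.mean(errors),
        "MdMSE": statistics.median(errors),
        # Population standard deviation; an assumption of this sketch.
        "StdMSE": statistics.pstdev(errors),
        # Fraction (not percentage) of tasks with squared error <= threshold.
        "Pred": sum(1 for e in errors if e <= threshold) / len(errors),
    }


# Toy usage with made-up probabilities for four tasks.
summary = evaluation_summary(
    actual=[1.0, 0.0, 1.0, 0.5],
    predicted=[0.5, 0.0, 0.5, 0.5],
)
```

In the toy input two of the four tasks are predicted exactly and two are off by 0.5, so half of the estimates fall within the 0.05 threshold.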

The primary result of this analysis is shown in Figure 9. It is clear that the neural network has better predictive performance according to Pred(0.05) and also has almost the lowest error rate, with an MSE of 0.043. The SVR model is the runner-up in terms of performance with an MSE of 0.045. Interestingly, the moving average and linear regression provided the same level of performance based on Pred(0.05), while linear regression provides the lower MSE of 0.044.

5.3 Threats to Validity

First, the study only focuses on competitive CSD

tasks on the TopCoder platform. Many more plat-

forms do exist, and even though the results achieved

are based on a comprehensive set of about 5,000 de-

velopment tasks, the results cannot be claimed to be

externally valid. There is no guarantee that the results would remain the same in other CSD platforms.

Second, there are many different factors that may

influence task similarity, task success, and task com-

pletion. Our similarity algorithm and task failure

probability-focused approach are based on known

task attributes in TopCoder. Different similarity

algorithms and task failure probability-focused approaches may lead us to different but comparable results.

Third, the result is based on tasks only. Workers’

network and communication capabilities are not con-

sidered in this research. In the future, we need to add

this level of research to the existing one.

6 CONCLUSION AND FUTURE

WORK

CSD provides software organizations access to an in-

finite, online worker resource supply. Assigning tasks

to a pool of unknown workers from all over the globe

is challenging. A traditional approach to solving this

challenge is task scheduling. Improper task schedul-

ing in CSD may cause zero task registrations, zero

task submissions or low qualified submissions due

to uncertain worker behavior, and consequentially,

task failure. This research presents a new scheduling method based on a neural network. The method reduces the probability of task failure in CSD platforms. The experimental results show a reduction in

project failure probability of up to 4% while main-

taining the same project duration.
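The greedy recommendation step summarized above (score the planned arrival day and two surplus days, then post on the day with the lowest predicted failure probability) can be sketched as follows. This is an illustrative sketch only: the trained neural network is stood in for by a hypothetical callable `failure_probability`, and the function name `recommend_posting_day` is likewise an assumption.

```python
from typing import Callable, Tuple


def recommend_posting_day(
    failure_probability: Callable[[int], float],
    surplus_days: int = 2,
) -> Tuple[int, float]:
    """Return (day_offset, probability) for the candidate day with the
    lowest predicted failure probability.

    `failure_probability(offset)` is a hypothetical stand-in for the
    trained model: it maps a day offset from the planned arrival date
    (0 = planned day) to a predicted probability of task failure.
    """
    if surplus_days < 0:
        raise ValueError("surplus_days must be non-negative")
    # Score the planned day and each surplus day exactly once.
    scored = [(failure_probability(d), d) for d in range(surplus_days + 1)]
    # Tuples compare by probability first, then by offset, so ties are
    # resolved in favor of the earlier day.
    best_prob, best_day = min(scored)
    return best_day, best_prob
```

For example, with predicted probabilities of 0.18, 0.12, and 0.12 for offsets 0, 1, and 2, the sketch recommends offset 1, since ties go to the earlier day.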

In future research, we will focus on expanding the model into a richer framework that incorporates available worker similarity and considers the impact of workers’ competition performance on task success, to further improve the efficiency of the scheduling model. Moreover, the presented neural network model will be used as a fitness function in an evolutionary-algorithm-based scheduling method.

Figure 8: Project Timeline.

Figure 9: Performance of Task Failure Probability by Each Approach.

REFERENCES

Archak, N. (2010). Money, glory and cheap talk: analyzing strategic behavior of contestants in simultaneous crowdsourcing contests on topcoder.com. In Proceedings of the 19th international conference on World wide web, pages 21–30.

Bernstein, M. S., Brandt, J., Miller, R. C., and Karger, D. R.

(2011). Crowds in two seconds: Enabling realtime

crowd-powered interfaces. In Proceedings of the 24th

annual ACM symposium on User interface software

and technology, pages 33–42.

Cooper, R. G. and Sommer, A. F. (2016). The agile–stage-

gate hybrid model: A promising new approach and a

new research opportunity. Journal of Product Innova-

tion Management, 33(5):513–526.

Difallah, D. E., Demartini, G., and Cudré-Mauroux, P. (2016). Scheduling human intelligence tasks in multi-tenant crowd-powered systems. In Proceedings of the 25th international conference on World Wide Web, pages 855–865.

Faradani, S., Hartmann, B., and Ipeirotis, P. G. (2011).

What’s the right price? pricing tasks for finishing on

time. In Workshops at the Twenty-Fifth AAAI Confer-

ence on Artificial Intelligence.

Ghodsi, A., Zaharia, M., Hindman, B., Konwinski, A., Shenker, S., and Stoica, I. (2011). Dominant resource fairness: Fair allocation of multiple resource types. In NSDI, volume 11, pages 24–24.

Gordon, G. (1961). A general purpose systems simula-

tion program. In Proceedings of the December 12-14,

1961, eastern joint computer conference: computers-

key to total systems control, pages 87–104.

Hirth, M., Steurer, F., Borchert, K., and Dubiner, D. (2019).

Task scheduling on crowdsourcing platforms for en-

abling completion time slas. In 2019 31st Interna-

tional Teletraffic Congress (ITC 31), pages 117–118.

IEEE.

Karim, M. R., Messinger, D., Yang, Y., and Ruhe, G.

(2016). Decision support for increasing the effi-

ciency of crowdsourced software development. arXiv

preprint arXiv:1610.04142.

Khanfor, A., Yang, Y., Vesonder, G., Ruhe, G., and

Messinger, D. (2017). Failure prediction in crowd-

sourced software development. In 2017 24th Asia-

Pacific Software Engineering Conference (APSEC),

pages 495–504. IEEE.

Khazankin, R., Psaier, H., Schall, D., and Dustdar,

S. (2011). Qos-based task scheduling in crowd-

sourcing environments. In International Confer-

ence on Service-Oriented Computing, pages 297–311.

Springer.

Lakhani, K. R., Garvin, D. A., and Lonstein, E. (2010).

Topcoder (a): Developing software through crowd-

sourcing. Harvard Business School General Manage-

ment Unit Case.

Lotfalian Saremi, M., Saremi, R., and Martinez-Mejorado,

D. (2020). How much should i pay? an empirical

analysis on monetary prize in topcoder. In Interna-

tional Conference on Human-Computer Interaction,

pages 202–208. Springer.

Ludwig, H., Keller, A., Dan, A., King, R. P., and Franck, R.

(2003). Web service level agreement (wsla) language

specification. Ibm corporation, pages 815–824.

Marcus, A., Wu, E., Karger, D., Madden, S., and Miller,

R. (2011). Human-powered sorts and joins. arXiv

preprint arXiv:1109.6881.

Mejorado, D. M., Saremi, R., Yang, Y., and Ramirez-

Marquez, J. E. (2020). Study on patterns and effect of

task diversity in software crowdsourcing. In Proceed-

ings of the 14th ACM/IEEE International Symposium

on Empirical Software Engineering and Measurement

(ESEM), pages 1–10.

Ngo-The, A. and Ruhe, G. (2008). Optimized resource al-

location for software release planning. IEEE Transac-

tions on Software Engineering, 35(1):109–123.

Rapoport, A. and Chammah, A. M. (1966). The game of

chicken. American Behavioral Scientist, 10(3):10–28.

Reinertsen, D. (2009). The principles of product development flow: second generation lean product development (vol. 62). Celeritas, Redondo Beach.

Ruhe, G. and Saliu, M. O. (2005). The art and science of

software release planning. IEEE software, 22(6):47–

53.

Saremi, R. (2018). A hybrid simulation model for crowd-

sourced software development. In Proceedings of

the 5th International Workshop on Crowd Sourcing in

Software Engineering, pages 28–29.

Saremi, R., Lotfalian Saremi, M., Desai, P., and Anzalone,

R. (2020). Is this the right time to post my task? an

empirical analysis on a task similarity arrival in top-

coder. In Yamamoto, S. and Mori, H., editors, Hu-

man Interface and the Management of Information.

Interacting with Information, pages 96–110, Cham.

Springer International Publishing.

Saremi, R., Yang, Y., and Khanfor, A. (2019). Ant

colony optimization to reduce schedule acceleration

in crowdsourcing software development. In Interna-

tional Conference on Human-Computer Interaction,

pages 286–300. Springer.

Saremi, R. L. and Yang, Y. (2015). Empirical analysis on

parallel tasks in crowdsourcing software development.

In 2015 30th IEEE/ACM International Conference on

Automated Software Engineering Workshop (ASEW),

pages 28–34. IEEE.

Saremi, R. L., Yang, Y., Ruhe, G., and Messinger, D.

(2017). Leveraging crowdsourcing for team elastic-

ity: an empirical evaluation at topcoder. In 2017

IEEE/ACM 39th International Conference on Soft-

ware Engineering: Software Engineering in Practice

Track (ICSE-SEIP), pages 103–112. IEEE.

Stol, K.-J. and Fitzgerald, B. (2014). Two’s company,

three’s a crowd: a case study of crowdsourcing soft-

ware development. In Proceedings of the 36th Inter-

national Conference on Software Engineering, pages

187–198.

Yang, J., Adamic, L. A., and Ackerman, M. S. (2008).

Crowdsourcing and knowledge sharing: strategic user

behavior on taskcn. In Proceedings of the 9th ACM

conference on Electronic commerce, pages 246–255.

Yang, Y., Karim, M. R., Saremi, R., and Ruhe, G. (2016).

Who should take this task? dynamic decision sup-

port for crowd workers. In Proceedings of the 10th

ACM/IEEE International Symposium on Empirical

Software Engineering and Measurement, pages 1–10.

Yang, Y. and Saremi, R. (2015). Award vs. worker

behaviors in competitive crowdsourcing tasks. In

2015 ACM/IEEE International Symposium on Empir-

ical Software Engineering and Measurement (ESEM),

pages 1–10. IEEE.

Zaharia, M., Borthakur, D., Sen Sarma, J., Elmeleegy, K.,

Shenker, S., and Stoica, I. (2010). Delay scheduling:

a simple technique for achieving locality and fairness

in cluster scheduling. In Proceedings of the 5th Eu-

ropean conference on Computer systems, pages 265–

278.
