Measuring Inflation within Virtual Economies using Deep Reinforcement Learning

Conor Stephens (1,2) and Chris Exton (1,2)

(1) Computer Systems and Information Science, University of Limerick, Ireland

(2) Lero, Science Foundation Ireland Centre for Software Research, Ireland

Keywords: Reinforcement Learning, Games Design, Economies, Multiplayer, Games.

Abstract: This paper proposes a framework for assessing economies within online multiplayer games without the need for extensive player testing and data collection. Players have identified numerous exploits in modern online games to further their collection of resources and items. A recent example of a game-economy exploit is Animal Crossing: New Horizons, a multiplayer game released in 2020 that featured bugs allowing users to generate infinite money (Sudario, 2020); this impacted the player experience in multiple negative ways, such as causing hyperinflation within the economy and scarcity of resources within the particular confines of the game. The framework proposed by this paper can aid game developers and designers when testing their game systems for potential exploits that could lead to issues within the larger game economy. Game systems are assessed by leveraging reinforcement learning agents to model player behaviour; this is demonstrated and evaluated in a sample multiplayer game. This research is intended to show game designers and developers how multi-agent reinforcement learning can help balance game economies. The project source code is open source and available at: https://github.com/Taikatou/economy research.

1 INTRODUCTION

Video game economies suffer from a major internal problem when compared to real-world economies: the creation of in-game currency is tied to player mechanics, for example when players receive money for defeating a monster. Any system or mechanic that creates economic value is effectively printing money. This core issue has caused rampant inflation within Massively Multiplayer Online games (MMOs) (Achterbosch et al., 2008). An early example was Asheron's Call, where the in-game currency became so inflated that shards were used instead of money. Similarly, the developers of Gaia Online offered to donate $250 to charity if players discarded 15 trillion Gold (Gold being the in-game currency used for transactions) (Haufe, 2014). Game designers therefore create counterweights to protect the game's internal balance. Sinkholes, mechanics that remove currency from the game's economy, are key tools for designers; they are sometimes referred to as "Drains" within Machinations diagrams (Adams and Dormans, 2012). Sinkholes have taken the form of auction fees and consumable items only available from NPCs (Non-Player Characters). However, players will endeavour to avoid these taxes and attempt to amass vast amounts of currency and items as quickly as possible.

Game systems within MMO titles have been shown to implode due to unintended player behaviour. Ultima Online, an incredibly successful early MMO released in 1997, was an early example of the issues players could present to game designers. Its creator, Richard Garriott, explained in an interview how players destroyed the virtual ecology within seconds by over-hunting (Hutchinson, 2018). The spawning of new monsters was regulated to a specific frequency; when the game went live, problems started to surface: resources were depleted at an alarming rate, destroying the game's ecosystem as players over-hunted herbivores for fun. To solve this problem, later games did not restrict the rate at which enemies spawn; MMO games such as World of Warcraft and Star Wars: The Old Republic instead use sinkholes to manage the resulting imbalance within their economies.

Stephens, C. and Exton, C.

Measuring Inflation within Virtual Economies using Deep Reinforcement Learning.

DOI: 10.5220/0010392804440453

In Proceedings of the 13th International Conference on Agents and Artificial Intelligence (ICAART 2021) - Volume 2, pages 444-453

ISBN: 978-989-758-484-8

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The framework that we propose assesses inflation within an in-game economy by measuring the price of resources within the game over time. In this framework, the economy is simulated by reinforcement learning agents playing as both the supplier and the consumer within a simplified game economy, both aiming to gain more wealth through the game systems. This research aims to show the power of reinforcement learning both for game balance (Beyer et al., 2016), ensuring the game is challenging and fair for different types of players, and for assessing the game's economy for potential inflation. This allows key stakeholders to better understand how changes within the virtual economy can influence player interactions such as trade, without releasing the game or conducting extensive user testing. This paper is built upon two clear objectives:

1. Measure the upper limits of inflation within a multiplayer game economy;

2. Provide a tool for simulating different changes to a game's economy for analysis.
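As an illustration of the first objective, the sketch below turns a log of recorded sale prices into period-over-period inflation figures. It is a minimal sketch, assuming sales are stored as hypothetical `(time, price)` pairs and that inflation is measured as the change in mean sale price between consecutive non-empty time windows; the framework itself may aggregate prices differently.

```python
from statistics import mean


def inflation_rates(sales, window):
    """Fractional change in mean sale price between consecutive periods.

    sales:  iterable of (time, price) pairs (a hypothetical log format)
    window: length of one period in simulation time units
    Periods with no sales are skipped, so each rate compares two
    consecutive non-empty periods.
    """
    buckets = {}
    for t, price in sales:
        buckets.setdefault(int(t // window), []).append(price)
    means = [mean(buckets[p]) for p in sorted(buckets)]
    return [(later - earlier) / earlier for earlier, later in zip(means, means[1:])]


sales = [(0.5, 10), (1.2, 12), (1.8, 12), (2.4, 15), (3.1, 15)]
rates = inflation_rates(sales, window=1.0)
print(rates)       # [0.2, 0.25, 0.0]
print(max(rates))  # 0.25, the largest per-period inflation in this log
```

Taking the maximum rate over a simulation run gives one rough reading of an "upper limit" of inflation; other price indices (for example weighting by sale volume) would be equally reasonable choices.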

2 RELATED WORKS

This work builds upon many different fields of game design, such as automated game design, game balance and game theory, as well as concepts from artificial intelligence and simulation such as deep reinforcement learning and Monte Carlo simulations. The history of these fields is briefly described below with relevance to this paper.

2.1 Automated Game Design

Game Generation systems perform automated, intelligent design of games (Nelson and Mateas, 2007). Whilst sharing many similarities with procedural content generation, Game Generation is a sub-discipline of Automated Game Design, the latter being a larger collection of tools, techniques and ambitions, such as collaborative design tools and testing frameworks for game designers. Rather than creating content and assets for pre-existing game systems, which comprise goals, resources and mechanics (Sellers, 2017), Automated Game Design (AGD) strives to create these systems themselves, allowing unique experiences and interactions to be generated.

2.1.1 History of Game Design Systems

AGD was created to develop rulesets for games that share a common structure, such as chess and strategy board games. The first example of such a system was Metagame (Pell, 1992). This was partly due to breakthroughs in Artificial Intelligence (AI), and partly due to the extensive history of these games and the recognition they received in academia. That recognition highlighted the importance of the genre, encouraged further exploration of the design possibilities within its well-established framework, and brought public acknowledgement that further creation and exploration within this space was a worthwhile pursuit.

After the mid-2000s, the study, development and research of modern video games ballooned in correlation with their growing popularity and the financial success of the wider games industry. During the 2000s, proceduralism was becoming successful in games such as The Marriage and Braid (Treanor et al., 2011). Games with proceduralism as a core mechanism of the narrative are process-intensive: in these games, expression is found primarily in the player's experience as it results from interaction with the game mechanics and dynamics (Hunicke et al., 2004).

This marked the period of an explosion of AGD systems emerging from UC Santa Cruz. The first was Untitled System, which is capable of creating mini-games from natural text input. This system was the first AGD system to change the visuals of the game based on the input, by having access to an image database. Game-O-Matic was the second AGD system to create a buzz in short succession (Treanor et al., 2012). Game-O-Matic is a videogame authoring tool and generator that creates games that represent ideas. Through a simple concept-map input system, Game-O-Matic is able to assemble simple arcade-style game mechanics into videogames that represent the ideas in the concept map. Game-O-Matic provided a higher-quality experience, with a more interactive and engaging process when seeding ideas (a seed usually refers to a number or vector used to initialise a random number generator; within Game-O-Matic, the seed refers to the terms used to describe the desired game).

2.2 Automated Game Testing

Developers and researchers have been experimenting with machine learning to test their games. This testing has many different use cases and contexts depending on the department spearheading the initiative. Quality assurance departments may consider employing trained bots or learning agents to test game levels and stories to detect bugs, for example missing collision within the level geometry, or holes in the narrative events that can hard-lock or soft-lock players from progressing; modl.ai is an excellent example of a company that offers this service to game developers and publishers. DevOps and the monetisation groups of a game company could employ A/B testing to assess the quality of tutorials, in-game currency and menu systems on different user groups. Game designers may also use artificial intelligence and simulations to assess quality metrics such as fairness (of level design) or session length of the game they are developing. Such efforts are made easier by modern tools such as Unity Simulation, a cloud-based service provided by Unity for the Unity 3D game engine, which is an excellent resource for testing game balance automatically with different parameters.

2.2.1 Play Testing

Play testing is an integral component of user testing games to ensure a fun and engaging experience for players; it is designed to review design decisions within interactive media such as games and to measure the aesthetic experience of the game compared to the designer's intentions (Fullerton, 2008). Play testing is a lengthy process in terms of complexity, cost and time. Several approaches have been presented over the past few years to automate some steps of the process (Stahlke and Mirza-Babaei, 2018). These solutions aim to allow faster, more accurate iterations of designs and greater efficiency during the professional game development cycle. An example of this in practice is King's automated play testing of their match-3 "Crush Saga" games, where they trained agents to mimic human behaviour; by using these agents within simulations, designers can test new level designs (Gudmundsson et al., 2018).

2.3 Game Balance

Game designers rely on a variety of practices to ensure fair and fun experiences for players by analysing mechanics and systems. To create these experiences, the designer assesses the fairness, challenge, meaningful choices and randomness of the different events and challenges within the game. Several terms have emerged over the years to define and explain aesthetics within common genres. One such term is 'Game Feel' (Swink, 2009), the practice of making the mechanics, i.e. the "moving parts" of a game, more impactful by adding effects such as:

• Screenshake;

• Particle Effects;

• Post-processing.

An oversimplified definition of game balance is "do the players feel the game is fair?" (Becker and Görlich, 2019). To successfully balance a game, a variety of considerations and tolerances that depend on the game, genre and audience must be taken into account.

2.3.1 Cost Curve Analysis

Game balance was traditionally achieved using analytical methods such as cost-curve analysis, spreadsheets and Excel macros. These tools have evolved into Machinations diagrams, a more visual and interactive framework for testing internal game balance (Adams and Dormans, 2012). Each of these methods follows a reductive process that allows designers to see the impact of changing values within the game's internal systems. Cost-curve analysis, for example, associates every mechanic, system and item within the game with a benefit and a cost (Carpenter, 2003). Traditionally, the cost increases in line with the benefit of a game mechanic or resource, and this relationship between items forms the shape of the curve. During the balancing process, a designer can see whether any individual mechanic, character or item deviates too much from the curve. Small imbalances are allowed, as choosing the most efficient or effective item creates an interesting decision for the player. However, game mechanics that sit obviously too high on the cost curve are traditionally identified for a redesign or a small nerf. This approach works well with traditional arcade games and role-playing games. However, it is less effective in multiplayer games that depend heavily on intransitive relationships, which can be described as Rock-Paper-Scissors mechanics.

2.3.2 Balancing Collectable Card Game Decks

New research into balancing decks of cards within the collectable card game Hearthstone (de Mesentier Silva et al., 2019) showed how genetic algorithms combined with simulated play can reveal how many viable options there are for players, and can identify critical compositions and the decks that would be used if a card within a deck changed. This research shows how fair games can be created with genetic algorithms by changing the mechanics available to both players within a complicated problem domain (over 2000 cards to evaluate). It also opens up several possibilities, such as testing the probability of victory for any two players based on their deck contents and identifying key cards that decks rely on. The key contribution identified from this paper is how to evaluate the health of a game's META game (which describes how the game is played strategically by players at any given time) by measuring the entropy of the viable decks used by Artificial Intelligence agents.
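One common way to quantify META diversity is the Shannon entropy of how often each deck is chosen. The sketch below assumes exactly that definition over a hypothetical list of deck names; the cited work may compute its entropy measure differently.

```python
import math
from collections import Counter


def meta_entropy(decks_played):
    """Shannon entropy (in bits) of deck usage frequencies.

    0.0 means a single deck is played every time; log2(k) means k decks
    are played equally often, i.e. a more diverse META.
    """
    counts = Counter(decks_played)
    total = sum(counts.values())
    return sum((c / total) * math.log2(total / c) for c in counts.values())


print(meta_entropy(["aggro", "control", "midrange", "combo"]))  # 2.0
print(meta_entropy(["aggro"] * 4))                               # 0.0
```

Writing each term as `p * log2(1 / p)` keeps the result non-negative and avoids printing `-0.0` for a fully dominated META.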


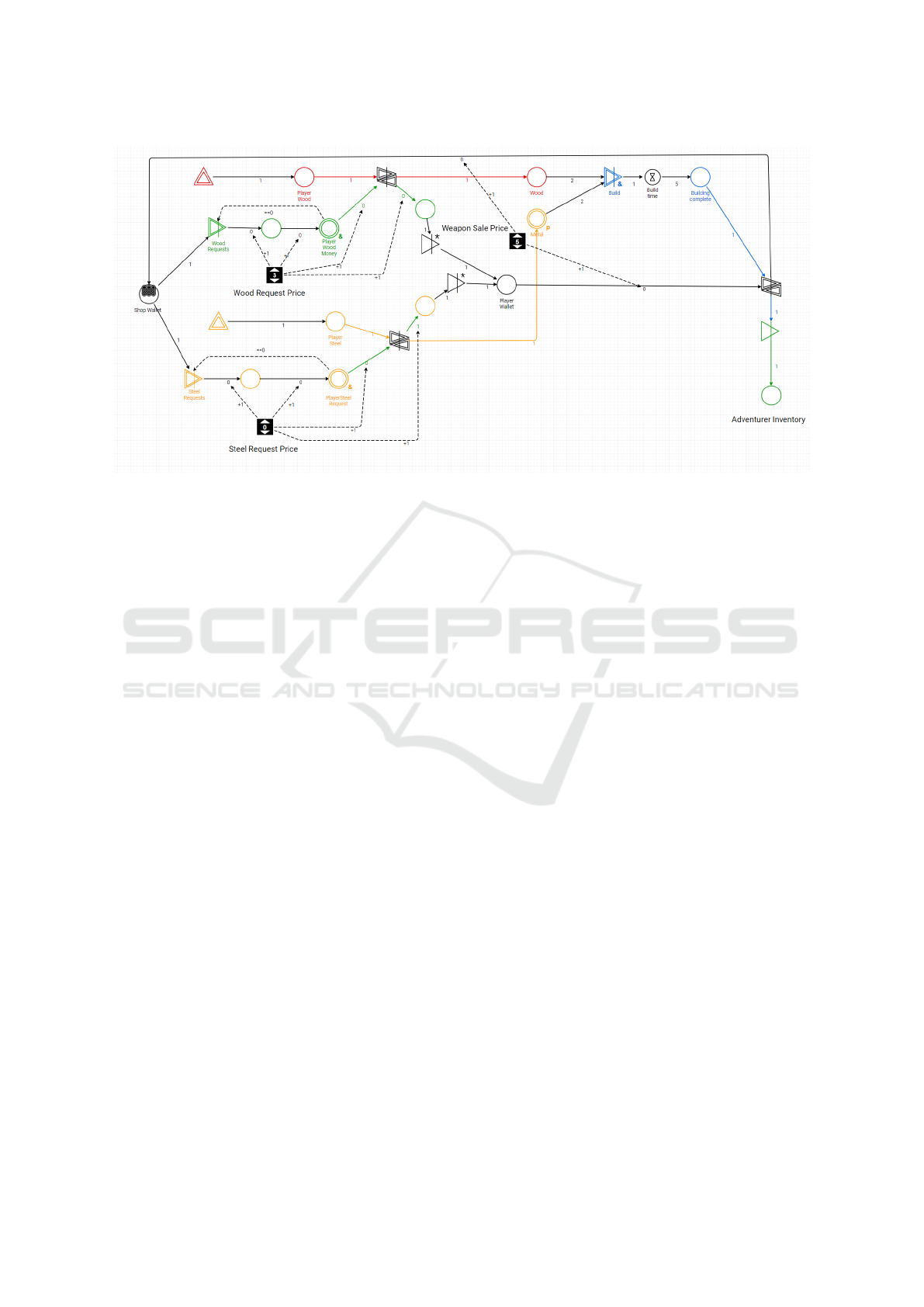

Figure 1: Crafting Machinations Diagram.

3 HISTORY OF ECONOMIES IN GAMES

MMOs feature some of the most impressive economic interactions between agents found in video games, and a great deal of research has explored virtual economies (Castronova, 2002). This research identified some mechanics that are necessary to allow fluctuations in the value of virtual currencies. These mechanics, which include the creation of the currency through in-game mechanics, can be summarised as: 'Be directly connected to real-world currency'. An early example of this tie between real-world and game currency can be found in Entropia Universe, a free-to-play MMO released in 2003, where 1 USD is tied to 100 PED (Project Entropia Dollars) at a fixed exchange rate (Kieger, 2010). There are ethical concerns with this mechanic regarding how the currency is stored and how it can be used for gambling as a key game mechanic.

Game economies span an extensive range of possibilities, from Mana in Magic: The Gathering to Gil in Final Fantasy. When designers talk about balancing game economies, they generally mean balancing mechanics that feature conversion between two resources. In game economies, game designers control and carefully balance the economy, defining how players obtain the currency and how much of each currency key resources cost in the game. This paper discusses game economies that are significantly affected by supply and demand between multiple players. To facilitate such an economy, the first requirement is allowing players to sell items within the world; the second is having different requirements for different types of players, incentivising trading and cooperation.

MMO markets and currencies feature inflation because players influence both the supply and demand of items. This is very different from traditional single-player games, where designers set shop prices as a key balance consideration. Having agents in the world can have severe design implications for player progression and the difficulty of achieving particular quests and challenges. Within single-player games, the prices of items can be set to provide sufficient challenge without giving the player an insurmountable hurdle to cross, whereas in multiplayer games supply and demand can affect the players' ability to progress within the game world. Another technique found in 'free to play' games is illiquidity (players have to convert one currency into another before they can make certain purchases).

Due to the infinite nature of enemies and gameplay found within MMO games, scarcity is hard to predict for any specific item unless it is made available only to the smallest groups of players. Many games create prestige and scarcity by allowing players to own physical space, for example land and property in Second Life, or spaceships and space stations in EVE Online. This economy differs from the gameplay-based economy because property is innately a finite resource that can be traded endlessly. Property also has different types of value based on location and amenities.

Larger Massively Multiplayer Online (MMO) games such as RuneScape and World of Warcraft (WoW) have tackled the issue of hyperinflation throughout their lifetimes. MMOs have experimented with integrating real-world financial policies to curb inflation. These solutions include creating a reserve currency to put a minimum value on the in-game currency. It is also worth implementing illiquidity in the transactional mechanics within the games' auctions and trading systems. These design considerations are slow to develop, and it is difficult to measure their effects accurately before the game's release.

4 TESTING ECONOMIES

Artificial Intelligence techniques have provided some means for testing and evaluating economic policy and fiscal design. Salesforce showed how different tax policies can be tested and assessed on specific economies using a learning environment (Zheng et al., 2020). The AI Economist uses reward signals from the learning environment, such as the positive affirmation of earning money and the punishment of being taxed, to create incentives for the agents both to relax and enjoy their day and to work and contribute within their society. This work highlights the great potential for finding optimal tax rates and policies that generate the most income for the government without creating disincentives for agents to work when they could be resting.

Game developers have traditionally used modelling techniques to test economies within games (Adams and Dormans, 2012). Machinations is a graph-based programming language created by Joris Dormans, with a syntax that supports the flow of resources between players. A modern equivalent is the Machinations.io web app, which modernises the earlier Machinations toolset. Machinations allows game designers to model the creation, flow and destruction of resources within the economy. It also allows resources to be exchanged and converted within a visual, interactive simulation.
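The core Machinations vocabulary (pools, sources that create resources, drains that destroy them, and converters that exchange one resource for another) can be mimicked in a tiny step-based model. This is a minimal sketch with hypothetical names and values; it does not reproduce the Machinations language or any Machinations.io API.

```python
from dataclasses import dataclass, field


@dataclass
class Economy:
    """Named resource pools updated by sources, drains and converters."""
    pools: dict = field(default_factory=dict)

    def source(self, pool, amount):
        # A source creates new resources out of nothing (e.g. monster drops).
        self.pools[pool] = self.pools.get(pool, 0) + amount

    def drain(self, pool, amount):
        # A drain destroys resources (e.g. an auction fee); a pool never goes negative.
        taken = min(amount, self.pools.get(pool, 0))
        self.pools[pool] = self.pools.get(pool, 0) - taken
        return taken

    def convert(self, src, src_amount, dst, dst_amount):
        # A converter consumes one resource to produce another, if enough input exists.
        if self.pools.get(src, 0) < src_amount:
            return False
        self.pools[src] -= src_amount
        self.source(dst, dst_amount)
        return True


eco = Economy()
for step in range(10):
    eco.source("gold", 10)                # gold dropped by monsters
    eco.drain("gold", 3)                  # auction fee sink
    eco.convert("gold", 20, "potion", 1)  # consumable bought from an NPC (also a sink)
print(eco.pools)  # {'gold': 10, 'potion': 3}
```

Comparing total gold created by sources with total gold removed by drains and NPC purchases over a run is the kind of check a designer would otherwise do visually in a diagram.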

5 METHODS

This research aims to measure inflation within a game economy. This is achieved by using a game economy as a learning environment for reinforcement learning agents and tracking their trades within an accelerated simulation of the game world. Industry-standard tools are used for the game engine and sample code to make this work more accessible to game development professionals. The process can be broken into three steps:

1. Implement a simple MMO economy using the Unity game engine;

2. Train learning agents to play the game, allowing them to create, sell and win resources from the game world;

3. Assess the game economy by deploying the trained learning agents within the game world and recording the price of transactions.

This framework assesses sample virtual economies within a simulated MMO game, using learning agents to simulate the needs and desires of different players. The sample economy is a two-way economy between players who focus on crafting and selling items and players who concentrate on adventuring and consuming items. Both sets of agents within this learning environment depend on cooperation and bartering with each other to progress in the game. Both crafting and adventuring agents are rewarded with the money they make. The agents' policies were implemented using ML-Agents, an open-source plugin for Unity (Juliani et al., 2018) that allows for the development of reinforcement learning agents within Unity.

5.1 Economy Design

Traditional MMO economies are driven by supply and demand provided by the game's players. Demand within the in-game economy is achieved by having players need different types of weapons and resources to achieve their short-term objectives within the game. For example, Warriors and Mages in World of Warcraft desire different equipment, allowing both to sell unwanted or useless equipment to each other. This example project features a similar dynamic between Adventurer and Craftsman agents. Craftsman agents spend money to receive resources to make equipment, which they sell for a profit. Adventurer agents, in the opposite approach, use the equipment to collect resources that they sell to Craftsman agents. Both agents have to cooperate to prosper within this economy, allowing a wide variety of interactions and influences. Supply is achieved by having mechanics within the game that create and change different resources; these include loot drops from battles, and crafting resources into more valuable weapons that sell at a higher price to buyers.

The game's internal economy was tested as a Machinations diagram, as shown in Figure 1. The economy is based on Craftsman and Adventurer agents agreeing on the price of resources and items to facilitate trade. This agreement can be seen in the two types of Registers within the Machinations diagram. The first register type belongs to the Craftsman agent and allows it to set the price of a request. It is also an interactive pool that Adventurer agents can use to agree on the price; this interaction locks negotiations for both parties. The second type of register within the diagram is the price of the crafted weapon. This register allows the Craftsman to change the price of a weapon even when it has no stock of that weapon. When an Adventurer agent interacts with the Trade node, the item in the shop is exchanged for the Adventurer agent's money. The values chosen for the different outcomes were based on play testing the economy design with different parameters, to ensure that neither the Adventurer nor the Craftsman runs out of money, which could bring the simulation and economy to a standstill. This was possible by using Monte Carlo techniques (Raychaudhuri, 2008) to simulate players within Machinations.io. The economy shown in Figure 1 was simulated over 1000 time-steps within the environment, over 20 iterations for each configuration of the items' specific statistics. An example of the balancing process can be seen in Figure 5, which shows the steady upward direction of the economy and the constant progression and purchasing throughout the simulation. Monte Carlo search is a popular strategy in game design for exploring the search space of strategies and game states (Zook et al., 2019) (Zook and Riedl, 2019).
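The Monte Carlo check described above (1000 time-steps, 20 iterations per configuration, testing that nobody runs out of money) can be sketched outside Machinations.io as follows. This is a minimal sketch of a single hypothetical Adventurer/Craftsman pair; every price, probability and the two-resources-per-weapon recipe are illustrative assumptions, not the values used in the paper.

```python
import random


def run_once(rng, steps=1000, start_gold=100, request_reward=5,
             weapon_price=12, resources_per_weapon=2,
             p_request=0.5, p_purchase=0.3):
    """Simulate one hypothetical Adventurer/Craftsman pair.

    Returns True if neither wallet reached zero during `steps` time-steps.
    """
    adventurer = craftsman = start_gold
    resources = 0  # resources held by the Craftsman
    for _ in range(steps):
        # Adventurer completes a resource request and is paid by the Craftsman.
        if rng.random() < p_request and craftsman >= request_reward:
            craftsman -= request_reward
            adventurer += request_reward
            resources += 1
        # Craftsman crafts a weapon and the Adventurer buys it.
        if (resources >= resources_per_weapon and adventurer >= weapon_price
                and rng.random() < p_purchase):
            resources -= resources_per_weapon
            adventurer -= weapon_price
            craftsman += weapon_price
        if adventurer <= 0 or craftsman <= 0:
            return False  # the economy stalled
    return True


def survival_rate(iterations=20, seed=0, **params):
    """Fraction of Monte Carlo runs in which the economy never stalled."""
    rng = random.Random(seed)
    return sum(run_once(rng, **params) for _ in range(iterations)) / iterations


# Compare two candidate weapon prices over 20 runs of 1000 steps each.
for price in (8, 12):
    print(price, survival_rate(weapon_price=price))
```

In this toy model gold only moves between the two agents, so there is no source or drain; a fuller model would add loot-driven sources and sinks such as those in the previous sketch.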

5.2 Adventurer Agents

Each Adventurer agent can access three unique game systems through which it can interact with other agents. The first game system is the request system, similar to a guild in World of Warcraft: Adventurers can see all active resource requests made by Crafting agents and the reward for completing each request. Adventurer agents can choose up to five individual requests to fulfil. The second is the adventuring ability: agents are able to travel within the virtual world. There are four different environments, Forest, Mountain, Sea and Volcano, each with different types of enemies to encounter. When the agent enters an area, a random battle occurs, designed similarly to Pokémon battles; the authors used Brackeys' turn-based combat framework to implement this system. Players can choose to Attack, Heal or Flee during combat. If either the player's or the enemy's health points drop to zero, the battle is over. If the player was victorious, a loot drop takes place with items and probabilities based on the enemy that the agent defeated. Specific loot values for the different enemy types can be found in Table 1, and the user interface for this battle mode can be found in Figure 2. The main challenge of the battle system is to manage the player's health points between battles and to battle enemies that have a sufficient chance of dropping the resources needed for each agent's accepted requests.

Figure 2: Battle Interface.
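The battle loop can be sketched as a simple turn-based exchange. This is a minimal sketch, not the Brackeys framework used by the authors: the turn order (player first, then enemy), the heal amount, the hero's starting health and the loot probabilities are assumptions, while the Owl's health and damage come from Table 1 and the weapon damage from the Beginner row of Table 2.

```python
import random
from dataclasses import dataclass


@dataclass
class Fighter:
    health: int
    damage: int


def battle(player, enemy, choose_action, heal_amount=5):
    """Turn-based battle: the player acts first, then the enemy attacks.

    choose_action(player, enemy) returns "attack", "heal" or "flee".
    Returns "win", "loss" or "fled".
    """
    while True:
        action = choose_action(player, enemy)
        if action == "flee":
            return "fled"
        if action == "attack":
            enemy.health -= player.damage
        elif action == "heal":
            player.health += heal_amount
        if enemy.health <= 0:
            return "win"
        player.health -= enemy.damage
        if player.health <= 0:
            return "loss"


def roll_loot(drop_chances, rng):
    """Each item drops independently with its (hypothetical) probability."""
    return [item for item, p in drop_chances.items() if rng.random() < p]


def scripted_policy(player, enemy):
    # Stand-in for the learned policy: heal when one more hit would be fatal.
    return "heal" if player.health <= enemy.damage else "attack"


hero = Fighter(health=20, damage=7)  # 7 = Beginner weapon damage (Table 2)
owl = Fighter(health=10, damage=2)   # Owl stats (Table 1)
result = battle(hero, owl, scripted_policy)
print(result, hero.health)  # win 18
if result == "win":
    print(roll_loot({"Wood": 0.8}, random.Random(1)))
```

In the trained system the `choose_action` callback is replaced by the agent's policy, which must learn when healing or fleeing is worth more than attacking.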

The third ability of the Adventurer agent is shopping. All Crafting agents have shops, and Adventurer agents can purchase items from them. Each purchased weapon is placed within the Adventurer's inventory, and a weapon is equipped if it is the strongest weapon in the agent's inventory. Better equipment allows Adventurers to encounter stronger enemies and receive more loot. If the agent's health drops to zero, it loses the battle and 5 units of gold are removed from the Adventurer agent's wallet.
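The shopping rules above (equip the strongest weapon owned, lose 5 gold on defeat) can be sketched as follows. This is a minimal sketch: weapon damage values are taken from Table 2, while the class layout, the 100-gold default and the decision to stop the wallet at zero are assumptions.

```python
from dataclasses import dataclass, field
from typing import Optional

# Weapon damage values from Table 2.
WEAPON_DAMAGE = {"Beginner": 7, "Intermediate": 9, "Advanced": 10,
                 "Epic": 12, "Master": 13, "Ultimate": 15}
DEFEAT_PENALTY = 5  # gold lost when the Adventurer's health reaches zero


@dataclass
class Adventurer:
    gold: int = 100
    inventory: list = field(default_factory=list)
    equipped: Optional[str] = None

    def buy(self, weapon, price):
        """Buy a weapon if affordable, then equip the strongest one owned."""
        if self.gold < price:
            return False
        self.gold -= price
        self.inventory.append(weapon)
        self.equipped = max(self.inventory, key=WEAPON_DAMAGE.__getitem__)
        return True

    def lose_battle(self):
        # Assumption: the wallet is not allowed to go negative.
        self.gold = max(0, self.gold - DEFEAT_PENALTY)


a = Adventurer()
a.buy("Beginner", 20)
a.buy("Epic", 40)
a.buy("Intermediate", 25)
a.lose_battle()
print(a.equipped, a.gold)  # Epic 10
```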

5.3 Crafting Agents

Each Crafting agent has three different abilities. The first is the ability to post requests for materials, which include Wood, Metal, Gems and Dragon Scales. Within the request system, each agent can change the quantity of each requested resource and the price it is willing to pay for it. This system is similar to the guild requests found in many traditional MMOs. From this marketplace, Adventurer agents take requests, which they can fulfil through battling and exploration. The second ability each Crafting agent has is crafting: each agent can craft a variety of weapons using the resources it has received.

The third and final ability of the Crafting agent is managing its shopfront. Agents can put items in their shopfronts to replenish stock, and they can increase and decrease the price of each item. There are six unique weapons that a Craftsman can create, each with different crafting requirements, as shown in Table 2.

Table 1: Enemy Loot Drops.

Area Name Health Damage Wood Metal Gem Scale
Forest Owl 10 2 1
Forest Buffalo 10 5 2 1
Mountain Bear 15 5 2 2
Mountain Gorilla 20 5 2 2 1
Sea Narwhale 15 6 1 1 2
Sea Crocodile 22 4 2 2
Volcano Snake 20 5 2 1
Volcano Dragon 25 6 2 1 2
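Crafting and the shopfront price actions can be sketched with `collections.Counter` as the inventory. This is a minimal sketch: the recipe quantities below are hypothetical placeholders (the real requirements are in Table 2), and `craft` and `adjust_price` are illustrative names, not part of the project's code.

```python
from collections import Counter

# Hypothetical recipes for illustration only; see Table 2 for the real values.
RECIPES = {
    "Beginner": Counter({"Wood": 3, "Metal": 1}),
    "Advanced": Counter({"Wood": 2, "Metal": 2, "Gem": 1}),
}


def craft(inventory, weapon, shop):
    """Consume the recipe from `inventory` and add one weapon to `shop`.

    Returns False (and changes nothing) if any requirement is unmet.
    """
    recipe = RECIPES[weapon]
    if any(inventory[res] < qty for res, qty in recipe.items()):
        return False
    inventory.subtract(recipe)
    shop[weapon] += 1
    return True


def adjust_price(prices, weapon, delta, floor=1):
    """Discrete shopfront action: raise or lower a price, never below `floor`."""
    prices[weapon] = max(floor, prices[weapon] + delta)
    return prices[weapon]


inventory = Counter({"Wood": 4, "Metal": 2})
shop = Counter()
print(craft(inventory, "Beginner", shop), craft(inventory, "Beginner", shop))  # True False
print(shop["Beginner"], adjust_price({"Beginner": 10}, "Beginner", -15))     # 1 1
```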

5.4 Agent Sensors

Each agent is trained with a Soft Actor-Critic policy using multi-agent reinforcement learning; this is possible thanks to Unity's ML-Agents package (Juliani et al., 2018), which allows developers and designers to train learning agents within Unity game environments. The agents learn using Self-Play within the environment (Silver et al., 2017). Self-Play improves the learning process by having some agents within the environment use previous versions of the agent's policy, which stabilises learning and allows a positively trending reward within the learning environment. This was implemented by having half of the Adventurer and Craftsman agents infer their decisions from previous versions of the policy. Both Crafting and Adventurer learning agents switch between active abilities and receive observations from the game's statistics and environment. These include the price of items within the shop, health and damage data during battles, and the contents of the agents' inventories. Agents have discrete actions whose meaning depends on the agent's currently enabled ability.
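The self-play arrangement, where half of the agents act with a past version of the policy, can be sketched as a snapshot pool. This is a minimal sketch under stated assumptions: `SelfPlayPool`, the snapshot limit and the dictionary stand-in for network weights are hypothetical, and ML-Agents manages self-play through its own trainer configuration rather than code like this.

```python
import copy
import random


class SelfPlayPool:
    """Stores frozen snapshots of a policy and lets half of the agents act
    with a past snapshot while the rest use the current policy."""

    def __init__(self, max_snapshots=10, seed=0):
        self.snapshots = []
        self.max_snapshots = max_snapshots
        self.rng = random.Random(seed)

    def save(self, policy):
        # Deep-copy so later training updates do not alter the snapshot.
        self.snapshots.append(copy.deepcopy(policy))
        if len(self.snapshots) > self.max_snapshots:
            self.snapshots.pop(0)  # drop the oldest snapshot

    def assign(self, agent_ids, current_policy):
        """Map each agent id to the policy it should use this episode."""
        half = len(agent_ids) // 2
        return {
            agent: (self.rng.choice(self.snapshots)
                    if i < half and self.snapshots else current_policy)
            for i, agent in enumerate(agent_ids)
        }


pool = SelfPlayPool()
policy = {"version": 1}  # stand-in for network weights
pool.save(policy)
policy = {"version": 2}  # the policy keeps training
assignment = pool.assign(["a0", "a1", "a2", "a3"], policy)
print({agent: p["version"] for agent, p in assignment.items()})
# {'a0': 1, 'a1': 1, 'a2': 2, 'a3': 2}
```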

5.5 Economy Sinks

The adventuring and crafting systems and the virtual environment were designed to sustain a healthy supply-demand curve that regulates the prices of the different weapons and resources. However, the base environment has a traditional feature of online game economies that has caused previous instances of hyperinflation: the infinite supply of monsters within the adventuring system, which could cause the cost of resources to plummet for the Crafting agents. The game is designed to prevent this by removing excess rewards from the in-game economy. This is achieved by having each Adventurer agent discard items for which it does not have a current request. This mechanic is similar to how Monster Hunter's request system works, issuing unique requests early in the game and thereby preventing the player from collecting all potential early-game items on their first journey into the wild area.
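The discard rule acts as an item sink: loot is kept only for items with an active request, and the rest leaves the economy. This is a minimal sketch assuming loot and inventories are `Counter` objects and that active requests are a set of item names; quantity limits per request are not modelled.

```python
from collections import Counter


def collect_loot(inventory, loot, requested_items):
    """Keep loot only for items with an active request; everything else is
    discarded, i.e. removed from the economy. Returns the discarded items."""
    discarded = Counter()
    for item, qty in loot.items():
        if item in requested_items:
            inventory[item] += qty
        else:
            discarded[item] += qty
    return discarded


inventory = Counter()
sunk = collect_loot(inventory, Counter({"Wood": 2, "Gem": 1}), {"Wood"})
print(dict(inventory), dict(sunk))  # {'Wood': 2} {'Gem': 1}
```

Summing the returned `discarded` counters over a simulation run gives a direct measure of how much supply the sink removes.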

5.6 Training

The reward signals for both Adventurer and Craftsman agents were designed to incentivise the agents to coordinate with each other. The Craftsman agent is rewarded for selling items, with the reward signal being the percentage profit made during a sale. Adventurer agents have a similar reward signal: every time they earn money, which happens when they complete a resource request, they receive a proportional reward ranging between 0 and 0.8 based on the amount of money gained. The Adventurer agent is also penalised with a reward of -0.1 to punish failure. Both agents' policies are updated using Soft Actor-Critic, a reinforcement learning algorithm (Haarnoja et al., 2018). Soft Actor-Critic adds an entropy term to its objective, encouraging a more stochastic action distribution and therefore further exploration.
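The reward shaping described above can be written as two small functions. This is a minimal sketch: measuring percentage profit relative to the crafting cost and the `full_reward_gold` normalisation constant (the amount of gold that earns the maximum 0.8) are assumptions, since the exact scaling is not given here.

```python
FAILURE_PENALTY = -0.1  # Adventurer penalty for failure, as described above


def craftsman_reward(sale_price, crafting_cost):
    """Percentage profit of a sale expressed as a fraction
    (0.5 means a 50% profit). Assumes a positive crafting cost."""
    return (sale_price - crafting_cost) / crafting_cost


def adventurer_reward(gold_earned, full_reward_gold=100.0, max_reward=0.8):
    """Reward proportional to money earned, clipped to [0, max_reward].
    `full_reward_gold` is a hypothetical normalisation constant."""
    fraction = min(max(gold_earned / full_reward_gold, 0.0), 1.0)
    return max_reward * fraction


print(craftsman_reward(15, 10))  # 0.5
print(adventurer_reward(50))     # 0.4
print(adventurer_reward(500))    # 0.8
```

Clipping keeps a single lucky sale from dominating the return, which tends to make value estimates in actor-critic methods such as SAC easier to learn.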

Each episode during training is started by reset-

ting every agent’s inventory and wallet, giving them

the starting value of 100 gold units and no weapons.

When the Ultimate Sword is both crafted by the

Craftsman agent and sold to an Adventurer agent, the

episode is determined to be over, as there is no fu-

ture content that agents have yet to experience. Each

agent’s neural network has 128 units in each of its

two hidden layers between input and output neurons.

We also experimented with intrinsic rewards during training, supplying a small curiosity reward to encourage exploration of the possible scenarios within the learning environment. However, this produced no significant increase in performance within the learning environment, while significantly increasing the computational cost of training each agent's policy.

ICAART 2021 - 13th International Conference on Agents and Artificial Intelligence

Table 2: Weapon Stats & Crafting Requirements.

Item Name      Damage  Durability  Wood Req.  Metal Req.  Gem Req.  Scale Req.
Beginner       7       5           2          2           -         -
Intermediate   9       6           3          3           -         -
Advanced       10      7           2          2           1         -
Epic           12      7           2          2           2         -
Master         13      8           3          3           3         -
Ultimate       15      10          3          3           3         2
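The episode lifecycle described above (reset to 100 gold and no weapons, termination once the Ultimate Sword is crafted and sold to an Adventurer) might be structured as in the following sketch. The `Agent` and `EconomyEpisode` classes and their `on_craft`/`on_sale` hooks are hypothetical names chosen for illustration; the authors' environment will differ in structure.

```python
# Hypothetical sketch of the training-episode lifecycle; class and method
# names are illustrative, not taken from the authors' code.
from dataclasses import dataclass, field
from typing import Dict, List

STARTING_GOLD = 100  # starting wallet value stated in the paper


@dataclass
class Agent:
    name: str
    role: str  # "Adventurer" or "Craftsman"
    wallet: int = STARTING_GOLD
    inventory: Dict[str, int] = field(default_factory=dict)  # item -> count

    def reset(self) -> None:
        """Restore the starting wallet and remove all items (no weapons)."""
        self.wallet = STARTING_GOLD
        self.inventory.clear()


class EconomyEpisode:
    def __init__(self, agents: List[Agent]) -> None:
        self.agents = agents
        self.ultimate_crafted = False
        self.ultimate_sold = False

    def reset(self) -> None:
        """Called at the start of every episode."""
        for agent in self.agents:
            agent.reset()
        self.ultimate_crafted = False
        self.ultimate_sold = False

    def on_craft(self, item: str, crafter: Agent) -> None:
        if item == "Ultimate" and crafter.role == "Craftsman":
            self.ultimate_crafted = True

    def on_sale(self, item: str, buyer: Agent) -> None:
        if item == "Ultimate" and buyer.role == "Adventurer":
            self.ultimate_sold = True

    @property
    def done(self) -> bool:
        # No further content remains once the Ultimate Sword is crafted and sold.
        return self.ultimate_crafted and self.ultimate_sold


if __name__ == "__main__":
    adventurer = Agent("adventurer-1", "Adventurer")
    craftsman = Agent("craftsman-1", "Craftsman")
    episode = EconomyEpisode([adventurer, craftsman])
    episode.reset()
    episode.on_craft("Ultimate", craftsman)
    print(episode.done)  # False: crafted but not yet sold
    episode.on_sale("Ultimate", adventurer)
    print(episode.done)  # True
```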

5.7 Data Collection

After the Craftsman and Adventurer agents' policies had been trained, the trained models were deployed within the learning environment to generate data. We recorded the sale price of every weapon sold within the simulation, together with the simulation time at which the exchange took place, as comma-separated values (CSV).
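A sketch of this logging step using Python's standard `csv` module follows. The column names (`sim_time_hours`, `item`, `price`) and the `SaleLogger` class are assumptions, since the paper does not give the CSV schema, and the prices in the usage example are made-up values rather than results.

```python
# Hypothetical sketch: log each weapon sale as a CSV row with its simulation time.
import csv
import io

FIELDNAMES = ["sim_time_hours", "item", "price"]  # assumed column names


class SaleLogger:
    def __init__(self, stream) -> None:
        self.writer = csv.DictWriter(stream, fieldnames=FIELDNAMES)
        self.writer.writeheader()

    def record(self, sim_time_hours: float, item: str, price: float) -> None:
        self.writer.writerow(
            {"sim_time_hours": sim_time_hours, "item": item, "price": price}
        )


def load_sales(stream, item: str):
    """Return (time, price) pairs for one weapon type, e.g. for plotting."""
    reader = csv.DictReader(stream)
    return [
        (float(row["sim_time_hours"]), float(row["price"]))
        for row in reader
        if row["item"] == item
    ]


if __name__ == "__main__":
    # StringIO stands in for a real file opened with open(path, "w", newline="").
    buffer = io.StringIO()
    logger = SaleLogger(buffer)
    logger.record(0.5, "Beginner", 12)
    logger.record(1.0, "Advanced", 30)
    logger.record(1.5, "Beginner", 13)
    buffer.seek(0)
    print(load_sales(buffer, "Beginner"))  # [(0.5, 12.0), (1.5, 13.0)]
```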

6 RESULTS

The sale prices of Beginner and Advanced swords are shown in Figure 3 and Figure 4 respectively. The trend lines in both figures show a clear upward trajectory within the virtual economy, but their shapes provide a more interesting picture of this game's economy. Between the 5th and 10th hours of simulation, the prices of both weapons increase. This could indicate a higher level of demand for these items from the Adventurer agents. The rise is quickly followed by a decrease in price, representing a small dip within the game's economy; this potentially relieves the burden on the Adventurer agents, while the more competitive prices shift the burden onto the Shop agents' reward signal.
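The paper does not state how the trend lines in Figures 3 and 4 were fitted. As one possible approach, assuming (time, price) pairs like those recorded in Section 5.7, an ordinary least-squares line gives the average price change per simulated hour, fitting over a window such as hours 5-10 isolates local rises and dips, and the percentage change in mean price between two windows is a simple inflation measure. The function names are hypothetical and the sample values are invented for illustration.

```python
# Hypothetical sketch: quantify price trends and inflation from logged sales.
from typing import List, Tuple

Sale = Tuple[float, float]  # (simulation time in hours, sale price)


def linear_trend(points: List[Sale]) -> Tuple[float, float]:
    """Return (slope, intercept) of the least-squares line price = slope * t + intercept."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two sales to fit a trend")
    mean_t = sum(t for t, _ in points) / n
    mean_p = sum(p for _, p in points) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in points)
    if var_t == 0:
        raise ValueError("all sales occurred at the same time")
    cov = sum((t - mean_t) * (p - mean_p) for t, p in points)
    slope = cov / var_t
    return slope, mean_p - slope * mean_t


def window_trend(points: List[Sale], start: float, end: float) -> float:
    """Slope (gold per hour) using only sales in [start, end), e.g. hours 5-10."""
    return linear_trend([(t, p) for t, p in points if start <= t < end])[0]


def mean_price(points: List[Sale], start: float, end: float) -> float:
    prices = [p for t, p in points if start <= t < end]
    if not prices:
        raise ValueError(f"no sales in [{start}, {end})")
    return sum(prices) / len(prices)


def period_inflation(points: List[Sale], base: Tuple[float, float],
                     later: Tuple[float, float]) -> float:
    """Percentage change in mean sale price from the base window to the later one."""
    base_mean = mean_price(points, *base)
    return 100.0 * (mean_price(points, *later) - base_mean) / base_mean


if __name__ == "__main__":
    # Invented example values, not data from the paper.
    sales = [(0.0, 10.0), (5.0, 12.0), (7.5, 16.0), (10.0, 13.0), (15.0, 15.0)]
    print(f"overall trend: {linear_trend(sales)[0]:+.2f} gold/hour")
    print(f"hours 5-10 trend: {window_trend(sales, 5, 10):+.2f} gold/hour")
    print(f"inflation, hours 0-5 vs 10-15: {period_inflation(sales, (0, 5), (10, 15)):+.1f}%")
```

A positive overall slope combined with a negative slope in a later window would correspond to the rise-then-dip shape described above.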

7 DISCUSSION & FUTURE WORK

Economies within games are set to grow in both importance and scale (Toyama et al., 2019; Tomić, 2017), which makes robust testing of these systems before releasing them to the public a vital precaution to ensure the game remains consistently profitable throughout its life cycle. Testing virtual economies could allow companies to protect their assets while earning higher and more consistent revenue during the product's life cycle. Other techniques for testing game economies, such as Machinations and the analysis of player data, allow developers to assess the health of a game's economy. However, these approaches are only possible during prototyping or after the game's release. Our approach offers a middle ground that allows key stakeholders to test the actual economic systems of the game. Moreover, it allows player motivation to be modelled through the reward signal of our learning environment.

Figure 3: Sale value of Beginner Swords.

Figure 4: Sale value of Advanced Swords.

Future work should explore the effectiveness of this approach in different types of economies with other starting parameters. Comparing different economies would make it possible to evaluate the effect of inflation within economies containing positive or negative feedback loops. Monopoly, which has a very high level of positive feedback, features runaway inflation and competition as a core premise. Comparing its economy to that of a more serious game with a negative feedback loop, such as This War of Mine, could highlight the exact difference in flow between these two very different experiences.

The authors propose applying this framework to a larger online game with multiple mechanics for gaining money and resources. This could allow game designers to assess the impact of different play styles and systems on the game's internal economy. This research could also be extended by training a separate neural network that observes transactions between agents in order to predict inflation within the economy during the simulation. Such a network could be used within the game to prevent fraud or hyperinflation, in a similar way to how credit card companies prevent fraud in their systems.

ACKNOWLEDGEMENTS

We would like to thank Lero, the Science Foundation Ireland Centre for Software Research, for their continued help and support.

REFERENCES

Achterbosch, L., Pierce, R., and Simmons, G. (2008). Massively multiplayer online role-playing games: The past, present, and future. Comput. Entertain., 5(4).

Adams, E. and Dormans, J. (2012). Game Mechanics: Advanced Game Design. New Riders Publishing, USA, 1st edition.

Becker, A. and Görlich, D. (2019). Game balancing – a semantical analysis.

Beyer, M., Agureikin, A., Anokhin, A., Laenger, C., Nolte, F., Winterberg, J., Renka, M., Rieger, M., Pflanzl, N., Preuss, M., and Volz, V. (2016). An integrated process for game balancing.

Carpenter, A. (2003). Applying risk analysis to play-balance RPGs.

Castronova, E. (2002). On Virtual Economies. Game Studies, 3.

de Mesentier Silva, F., Canaan, R., Lee, S., Fontaine, M. C., Togelius, J., and Hoover, A. K. (2019). Evolving the Hearthstone meta. CoRR, abs/1907.01623.

Fullerton, T. (2008). Game Design Workshop. A Playcentric Approach to Creating Innovative Games. pages 248–276.

Gudmundsson, S., Eisen, P., Poromaa, E., Nodet, A., Purmonen, S., Kozakowski, B., Meurling, R., and Cao, L. (2018). Human-like playtesting with deep learning. pages 1–8.

Haarnoja, T., Zhou, A., Abbeel, P., and Levine, S. (2018). Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. volume 80 of Proceedings of Machine Learning Research, pages 1861–1870, Stockholmsmässan, Stockholm, Sweden. PMLR.

Haufe, V. (2014). Hyperinflation in Gaia Online | Know Your Meme. https://knowyourmeme.com/memes/events/hyperinflation-in-gaia-online. (Accessed on 08/05/2020).

Hunicke, R., Leblanc, M., and Zubek, R. (2004). MDA: A formal approach to game design and game research. AAAI Workshop - Technical Report, 1.

Hutchinson, L. (2018). War Stories: Lord British created an ecology for Ultima Online but no one saw it.

Juliani, A., Berges, V., Vckay, E., Gao, Y., Henry, H., Mattar, M., and Lange, D. (2018). Unity: A general platform for intelligent agents. CoRR, abs/1809.02627.

Kieger, S. (2010). An exploration of entrepreneurship in massively multiplayer online role-playing games: Second Life and Entropia Universe. Journal For Virtual Worlds Research, 2.

Nelson, M. and Mateas, M. (2007). Towards automated game design. pages 626–637.

Pell, B. (1992). Metagame in Symmetric Chess-like Games. Technical report (University of Cambridge. Computer Laboratory). University of Cambridge Computer Laboratory.

Raychaudhuri, S. (2008). Introduction to Monte Carlo simulation. In 2008 Winter Simulation Conference, pages 91–100.

Sellers, M. (2017). Advanced Game Design: A Systems Approach. Game Design. Pearson Education.

Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., Lanctot, M., Sifre, L., Kumaran, D., Graepel, T., Lillicrap, T. P., Simonyan, K., and Hassabis, D. (2017). Mastering chess and shogi by self-play with a general reinforcement learning algorithm. CoRR, abs/1712.01815.

Stahlke, S. N. and Mirza-Babaei, P. (2018). Usertesting Without the User: Opportunities and Challenges of an AI-Driven Approach in Games User Research. Comput. Entertain., 16(2).

Sudario, E. (2020). The broken economy of Animal Crossing: New Horizons | cutiejea. https://www.cutiejea.com/real-life/acnh-economy/. (Accessed on 12/30/2020).

Swink, S. (2009). Game Feel: A Game Designer's Guide to Virtual Sensation. Morgan Kaufmann Game Design Books. Taylor & Francis.

Tomić, N. (2017). Effects of micro transactions on video games industry. Megatrend revija, 14:239–257.

Toyama, M., Côrtes, M., and Ferratti, G. (2019). Analysis of the microtransaction in the game market: A field approach to the digital games industry.

Treanor, M., Blackford, B., Mateas, M., and Bogost, I. (2012). Game-o-matic: Generating videogames that represent ideas. In Proceedings of the Third Workshop on Procedural Content Generation in Games, PCG'12, pages 1–8, New York, NY, USA. Association for Computing Machinery.

Treanor, M., Schweizer, B., Bogost, I., and Mateas, M. (2011). Proceduralist readings: How to find meaning in games with graphical logics.

Zheng, S., Trott, A., Srinivasa, S., Naik, N., Gruesbeck, M., Parkes, D. C., and Socher, R. (2020). The AI economist: Improving equality and productivity with AI-driven tax policies.

Zook, A., Harrison, B., and Riedl, M. O. (2019). Monte-Carlo tree search for simulation-based strategy analysis.

Zook, A. and Riedl, M. O. (2019). Learning how design choices impact gameplay behavior. IEEE Transactions on Games, 11(1):25–35.

APPENDIX

Figure 5: Monte Carlo testing of Economy Design.

Figure 6: Monte Carlo Item Purchase Frequency.
