Towards a Model of Empathic Pedagogical Agent for Educating Children and Teenagers on Good Practices in the Use of Social Networks

Joaquín Taverner^a, Emilio Vivancos^b and Vicente Botti^c

Valencian Research Institute for Artificial Intelligence (VRAIN). Universitat Politècnica de València, Valencia, Spain

Keywords: Intelligent Agent, Affective Agent, Privacy Risk, Social Network.

Abstract:

Social networks have been a revolution for our society. The rapid expansion of these networks means that more and more children and teenagers use them regularly. However, although most social networks have privacy control systems, most young people do not use these controls because they are usually unaware of the privacy risks that exist on the Internet. This inadequate use of social media can lead to different social problems such as cyberbullying, grooming, or sexting. The best way to prevent these risks is through education. In this paper we propose an empathic pedagogical agent model for education on good practices in the use of social networks. The agent interacts empathically with users through the social network. The user's facial expression is captured by a camera and processed in real time to recognize the user's emotion. The agent analyzes the recognized emotion and the user's actions and looks for the best strategy to advise and educate the teenager in the correct use of the social network.

1 INTRODUCTION

Today's society is increasingly moving towards virtual environments. Video calls, instant messaging, and social networks have transformed the way we communicate and relate to others. The incorporation of these new technologies into our daily lives has been accelerated this year by the COVID-19 pandemic. These technologies offer several advantages, such as instant communication, reconnecting with old friends, better prospects of finding a job, and communication in times of lockdown. The democratisation of Internet use means that more and more children and teenagers are using these technologies. In fact, young people are today the biggest consumers of technology and social networks (García-Peñalvo and Kearney, 2016). However, young people are often not aware of the privacy risks they are exposed to when using the Web (Alemany et al., 2019). An inappropriate use of social networks can have negative personal, professional, and emotional consequences (Machimbarrena et al., 2018). These consequences are aggravated in children and teenagers, as they do not have sufficient emotional capacity to deal with certain situations that may arise from the inappropriate use of social networks. Therefore, for a social transition towards virtualised environments to be effective, it is necessary to design educational models focused on teaching the correct use of the Internet. An appropriate use of the Web can turn it into a pedagogical tool that enables a wide dissemination of knowledge (Karal et al., 2017). Artificial intelligence can help in this educational task through intelligent agents acting as tutors in the use of social networks. Intelligent agents have already been used in education with good results (Obaid et al., 2018; Serholt and Barendregt, 2016). In particular, agents with empathic abilities, capable of recognising and showing emotions, have been successfully used in educational tasks, increasing the sociability and participation of young people (Rodrigues et al., 2015). Furthermore, children and teenagers perceive empathic intelligent agents as much more reliable than traditional agents, which makes empathic agents more suitable for tutoring (Paiva et al., 2017). In this paper we propose a model of an empathic pedagogical agent to be used as a tutor to train young people in the correct use of a social network.

a https://orcid.org/0000-0002-5163-5335
b https://orcid.org/0000-0002-0213-0234
c https://orcid.org/0000-0002-6507-2756

The rest of this paper is organized as follows. In Section 2, we discuss the problem of privacy risk on the Internet and the most important proposals regarding pedagogy with agents and education in the use of social networks. Section 3 introduces an empathic pedagogical agent model for education on social networks. The agent will interact with students in an affective way by recognizing and expressing emotions. Finally, the main conclusions and future work are presented in Section 4.

Taverner, J., Vivancos, E. and Botti, V.
Towards a Model of Empathic Pedagogical Agent for Educating Children and Teenagers on Good Practices in the Use of Social Networks.
DOI: 10.5220/0010345504390445
In Proceedings of the 13th International Conference on Agents and Artificial Intelligence (ICAART 2021) - Volume 1, pages 439-445
ISBN: 978-989-758-484-8
Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

Social networks have revolutionised various aspects of society. They have not only transformed the way we communicate and relate to others, but have also affected other aspects such as politics, business, culture, or the way we perceive reality. Social networks also allow us to meet new people and share our ideas. However, social networks do not only bring advantages. They have become a perfect medium for the dissemination of fake news and disinformation campaigns. Fake news stories try to give a biased view of events in order to manipulate people's opinions.

Given the high level of social exposure to which users of social networks are subjected, there is a risk of losing privacy. In addition, an inappropriate comment or an unfortunate photo can harm our personal image and damage us socially and emotionally. In fact, privacy risk has become one of the main concerns of users of this type of network (Alemany et al., 2019). Currently, most social networks have privacy management systems that allow users to control the audience of their publications. However, users often ignore these systems, both because they are not aware of the potential risks of exposing a specific publication and because they are not concerned about privacy. This problem is further aggravated when young people are involved, since they do not have sufficient social and emotional skills to deal with certain situations that may arise when using social networks (Micheli, 2016).

Nowadays, young people are becoming the main users of social networks. The European General Data Protection Regulation (GDPR) (Jasmontaite and De Hert, 2015) allows young people over 16 to consent to the processing of their personal data by social network providers without the consent or authorization of the holder of parental responsibility. In addition, this regulation allows EU Member States to lower this age to 13 years. Meanwhile, children and teenagers spend several hours a day using their smartphones. During this time, they receive hundreds of advertisements and messages that are frequently hoaxes and fake news. This misinformation is often spread to create a negative view of everything that is different: religious beliefs, political ideas, sexual orientation, immigrants, people with disabilities, and so on. This misinterpretation and manipulation of real events can affect the emotional development of teenagers and children, because they have less developed critical thinking and fewer tools to analyse fake news and protect themselves from it. Moreover, during the pandemic confinement, young people have spent many hours connected to the Internet, often without parental supervision (Gómez-Galán et al., 2020). How can they filter all the messages they receive? Ballesteros and Picazo (Ballesteros and Picazo, 2018) estimated that only 22% of teenagers between 14 and 16 years old have received training on how to filter the information they find on the Internet.

On the other hand, many young people use social networks as a social showcase in which they look for group acceptance: the greater the number of followers and friends, the better their perception of their status on the social network. However, most young people do not know how to handle their privacy in social network environments (Silva et al., 2017). Therefore, when making a publication, they do not consider the risk that sharing this information may entail, because they lack the skills needed to determine what kind of information is risky to disseminate. This level of public exposure can have negative consequences. Recent studies show that the abusive and inappropriate use of social networks can lead to negative effects such as addiction, cyberbullying, grooming, sexting, and, paradoxically, social isolation (Machimbarrena et al., 2018; Primack et al., 2019).

2.1 Pesedia: A Pedagogical Social Network

In recent years, several proposals for assessing privacy on social networks have been made (Acquisti et al., 2017; Alemany et al., 2020; Botti-Cebriá et al., 2020). Most of these proposals involve the definition of metrics and mechanisms to improve trust and reduce privacy vulnerabilities in social networks (Ruiz-Dolz et al., 2019; Taverner et al., 2018b). One of these proposals is presented in (Argente et al., 2017): Pesedia, a social network designed specifically to educate in the correct use of social networks. Pesedia offers an interface that is very similar to those of current social networks such as Facebook. Young people have their own wall where they can create and share publications. They can also send private messages or create groups. The network also allows users to establish friendship relationships and trust levels in order to teach appropriate privacy measures by providing the appropriate level of privacy for each publication. Pesedia allows the addition of plugins to increase its functionality. For example, in (Alemany et al., 2019) two mechanisms were included to assist users in selecting the privacy level of a publication. The aim of these mechanisms was to alert users about the visibility of publications in order to make them aware of the privacy risk involved when posting. These mechanisms were based on the model of soft-paternalism, in which specific interventions are made in the actions performed by the student without affecting his/her freedom of choice.

2.2 Pedagogical Intelligent Agents

While the establishment of metrics and mechanisms has proven effective in educating about good practices in social networks, these approaches lack the interactive experience that an intelligent agent can offer. Intelligent agents are capable of reasoning, acting proactively, and reacting to perceived changes in the environment. Therefore, they are able to interact with the user by reacting to his/her actions. Intelligent agents have been used in recent years to perform tutoring tasks in educational environments, improving the motivation, socialization, understanding, and attitudes of students (Rodrigues et al., 2015). For example, a pedagogical agent model for tutoring children on the autism spectrum is proposed in (Grawemeyer et al., 2012). Similarly, in (Barrón-Estrada et al., 2012) a pedagogical agent model for helping with homework is presented. That agent was developed to operate in a social network in which students do their homework while participating in a common social experience.

Many proposals in the field of affective computing focus on the study of empathy as a mechanism to improve human-machine interaction (Paiva et al., 2017). Empathy is a construct used in different domains, such as ethology, philosophy, psychology, or neuroscience, to explain different social behaviours. These behaviours generally concern the ability to understand and share the emotions, moods, and mental states of others, as well as the behaviours derived from this understanding, such as prosocial behaviour or altruism (Cuff et al., 2016; Stueber, 2013). Empathy encourages the development of emotional bonds, allowing human beings to constitute themselves as social and moral beings (Kauppinen, 2014). In fact, recent neuroscientific studies relate some social disorders, such as autism spectrum disorder or psychopathy, to a deficit in the brain areas involved in empathic processes (Blair, 2008). One of the most frequently cited definitions of empathy is the one proposed by M. L. Hoffman (Hoffman, 2001), in which empathy is defined as a psychological process that makes a person have feelings more similar or congruent with the other person's situation than with his/her own situation. Therefore, empathic responses need not be identical or close to the mood of the other: according to Hoffman, any emotional reaction compatible with the mood of the other can be considered empathy. Following Hoffman's definition, to simulate the processes inherent in empathy, software agents must be able to perceive and recognize emotions or moods and react accordingly by simulating a behaviour appropriate to the perceived emotion or mood.

The field of artificial intelligence has evolved by developing models of agents with different affective and empathic abilities (Alfonso et al., 2015; Alfonso et al., 2017; Taverner et al., 2018a; Yalçın, 2020). Most of these models use a dimensional representation for emotions and mood (Taverner et al., 2020). One of the most widely used dimensional representations is the Circumplex Model of Affect, which uses two dimensions to represent emotions: pleasure and arousal (Russell, 1980). The use of this dimensional model allows the representation and simulation of affective processes such as emotion elicitation, emotional contagion, or empathy.
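To make the dimensional representation concrete, the following minimal Python sketch places emotions as points in the pleasure-arousal plane and labels a point with its nearest prototype. The prototype coordinates and the nearest-neighbour rule are illustrative assumptions, not values taken from Russell (1980) or from the models cited above.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Affect:
    """A point in the pleasure-arousal plane; both axes assumed to lie in [-1, 1]."""
    pleasure: float
    arousal: float

    def distance(self, other: "Affect") -> float:
        """Euclidean distance between two affective states."""
        return math.hypot(self.pleasure - other.pleasure, self.arousal - other.arousal)


# Illustrative prototypes, one per quadrant plus the origin (not calibrated data).
PROTOTYPES = {
    "happy": Affect(0.8, 0.5),      # pleasant, activated
    "relaxed": Affect(0.7, -0.5),   # pleasant, deactivated
    "sad": Affect(-0.7, -0.4),      # unpleasant, deactivated
    "angry": Affect(-0.6, 0.7),     # unpleasant, activated
    "neutral": Affect(0.0, 0.0),
}


def nearest_label(point: Affect) -> str:
    """Label a point with the closest prototype emotion."""
    return min(PROTOTYPES, key=lambda name: point.distance(PROTOTYPES[name]))


print(nearest_label(Affect(0.6, 0.4)))  # closest prototype is "happy"
```

Because emotions are plain coordinates, processes such as contagion or empathy can be expressed as geometric operations (for example, moving one point towards another) rather than as rules over discrete labels.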

Empathic agents have been used in different contexts and have proved to be more reliable and more credible, thus reducing the stress and frustration of human users and improving human-machine interaction (Paiva et al., 2017). The development of agents with empathy has also been useful in the educational field. For example, the research performed in (Rodrigues et al., 2015) used empathic agents to prevent bullying. In this experiment, agents with empathic skills were found to increase the sociability and involvement of young users. The participants played a game in which the agents recognized and showed emotions. During the game, a series of social situations were presented in which an agent was bullied by other agents, with the intention of guiding the students' behaviour through empathy. Empathic agents have also been used for tutoring in educational environments. For example, in (Obaid et al., 2018) a robot tutor with empathic skills was proposed. The study showed that the empathic agent improved the predisposition of young people to participate in learning experiences. Similarly, in (Serholt and Barendregt, 2016) a model of a robot tutor with empathy is presented. This model is able to recognize children's emotions and interact with them through a touch screen. The results of the experiments show that the empathic robot tutor was able to elicit and maintain the social engagement of the students.

Considering the promising results of previous

works, where was shown that empathy increases trust

Towards a Model of Empathic Pedagogical Agent for Educating Children and Teenagers on Good Practices in the Use of Social Networks

441

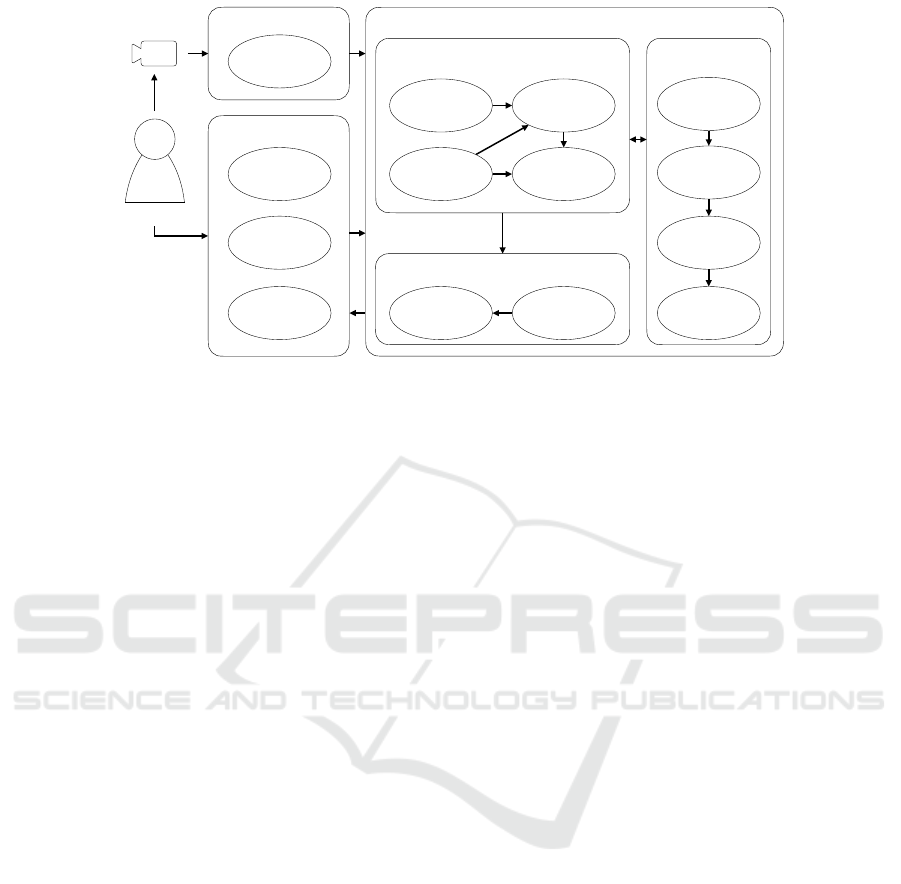

Emotion recognition

Empathic agent architecture

Af fective processes Rational processes

Communication

Social network

Emotion

representation

Face emotion

recognition

Privacy risk

estimator

Behavior

control

User

Belief

revision

Options

Filter

Plan

selection

Event

appraisal

Empathic

modulation

Af fective

options

Emotion

expression

Execute

action

Agent avatar

manager

Figure 1: The proposed model for a empathic pedagogical agent in a social network.

and engagement, we propose to employ an empathic

agent to increase the efficiency of the learning process

of young people in the correct use of social networks.

3 PROPOSAL

As mentioned in the previous sections, irresponsible use of social networks can compromise the user's privacy. Therefore, it is necessary to develop tools that allow young users to understand the risks to which they are exposed and to learn good practices in the use of social networks so that they can maintain their privacy. In this paper we present a pedagogical agent model with empathic abilities. Our agent interacts with the user through the social network. The agent monitors the user's actions on the network to analyse his/her behaviour. When the user prepares a publication, the agent analyses the privacy risk associated with the publication and recommends the best strategy for avoiding privacy risks. Following a soft-paternalism strategy, the user remains free to decide how to carry out the publication. Once the publication has been made, the agent reacts according to whether or not the user has followed its advice.

To improve the interaction with the user, the agent must have certain social and emotional capacities to react with appropriate behaviour. One of the most promising approaches to generating this social behaviour is the use of agents that simulate empathy. Our proposed agent uses empathy as a tool to build trust with the user, which supports its educational task. Through empathy, our agent is able to react appropriately according to the emotion or mood of its young interlocutor. Following the definition of empathy proposed by Hoffman, our agent is able to recognize the teenager's emotion and to produce a behaviour consistent with that emotion. The model that we propose in this paper uses a real-time facial emotion recognition system. Once the emotion is recognised, the agent represents it internally using a multidimensional representation model (Taverner et al., 2020) and generates an empathic response in accordance with the perceived emotion. In addition, the agent exhibits proactive emotional behaviour depending on the actions performed by the user in the social network.

Figure 1 shows the model for the interaction between the young user and the agent in the social network. The framework proposed in this work consists of three main components: the social network based on Pesedia, the emotion recognition system, and the empathic intelligent agent.

3.1 Pesedia

Our proposed framework uses three plugins of the Pesedia social network: the privacy risk estimator, the behavior control, and the agent avatar manager.

On the one hand, the privacy risk estimator is responsible for estimating the potential audience of a publication. This estimation is obtained by measuring the number of potential users that can access the publication according to the degree of privacy that the user has selected (Alemany et al., 2018). On the other hand, the behavior control plugin captures the interactions that the user makes within the social network, for example, pressing a “like” button or posting an item. Finally, the agent avatar manager displays the agent's facial expressions and messages in the social network window. Figure 2 shows a screenshot of Pesedia with the agent avatar interface on the right. When the young user writes a comment, the privacy risk estimator warns the user of the risk involved in publishing it. In the example in Figure 2, the user has provided a location that could lead to a high privacy risk. The agent warns the user that the publication has a high risk and recommends modifying the audience. The final decision belongs to the user: the teenager can continue with the publication or modify the audience. Depending on his/her decision, the agent adapts its behaviour by using different messages and emotional expressions.
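The idea of estimating the potential audience for each privacy setting can be sketched as follows. This is a simplified stand-in under stated assumptions: the friendship graph, the setting names (`friends`, `friends_of_friends`, `all`) and the 0.5 risk threshold are hypothetical, and Alemany et al. (2018) estimate risk with centrality metrics rather than this plain reachability count.

```python
from collections import deque

# Hypothetical privacy settings mapped to the maximum friendship distance.
MAX_DISTANCE = {"friends": 1, "friends_of_friends": 2}


def potential_audience(graph, author, level):
    """Count the users, other than the author, who could see a post."""
    if level == "all":
        return len(graph) - 1  # every other registered user
    max_depth = MAX_DISTANCE[level]
    seen = {author}
    queue = deque([(author, 0)])
    while queue:  # breadth-first search bounded by max_depth
        user, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand beyond the allowed distance
        for friend in graph.get(user, ()):
            if friend not in seen:
                seen.add(friend)
                queue.append((friend, depth + 1))
    return len(seen) - 1


def risk_label(graph, author, level, threshold=0.5):
    """Return "high" if the post reaches more than `threshold` of the other users."""
    others = len(graph) - 1
    share = potential_audience(graph, author, level) / others if others else 0.0
    return "high" if share > threshold else "low"


# Hypothetical network: each user maps to the set of his/her friends.
GRAPH = {
    "alice": {"bob", "carol"},
    "bob": {"alice", "dave"},
    "carol": {"alice"},
    "dave": {"bob", "erin"},
    "erin": {"dave"},
    "frank": set(),
}

for level in ("friends", "friends_of_friends", "all"):
    print(level, potential_audience(GRAPH, "alice", level), risk_label(GRAPH, "alice", level))
```

With this toy graph, Alice's post reaches 2, 3 and 5 of the 5 other users, so only the friends-only setting stays below the assumed threshold, which matches the kind of advice the agent gives in Figure 2.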

3.2 The Emotion Recognition System

The emotion recognition system consists of a video camera and a classifier based on convolutional neural networks that is installed locally on the device used by the teenager. This system captures images in real time and uses a cascade classifier (Sharifara et al., 2014) to obtain the position of the user's face. The face image is then sent to the convolutional neural network, which obtains the most likely emotion according to the user's facial expression (Fuentes et al., 2020). To avoid reacting to micro-expressions, the system performs a controlled analysis over multiple frames. It is important to note that the empathic agent can only be as good as the accuracy of the recognised emotion.
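One way to implement a multiple-frame analysis that ignores brief micro-expressions is a sliding-window vote over per-frame predictions. The sketch below assumes the CNN already returns one emotion label per frame; the class name, the window size and the vote threshold are hypothetical choices, not parameters of the system described here.

```python
from collections import Counter, deque


class FrameSmoother:
    """Stabilise per-frame emotion labels with a sliding-window vote.

    `window` and `min_votes` are assumed values for illustration only.
    """

    def __init__(self, window=5, min_votes=4, initial="neutral"):
        self.frames = deque(maxlen=window)  # most recent per-frame labels
        self.min_votes = min_votes
        self.stable = initial

    def update(self, frame_label):
        """Add one per-frame prediction and return the current stable emotion."""
        self.frames.append(frame_label)
        label, votes = Counter(self.frames).most_common(1)[0]
        if votes >= self.min_votes:
            self.stable = label  # enough evidence in the window to switch
        return self.stable


smoother = FrameSmoother()
frames = ["neutral"] * 4 + ["angry"] + ["neutral"] * 2 + ["happy"] * 4
print([smoother.update(label) for label in frames])
```

In this run the single "angry" frame never changes the reported emotion, while the sustained "happy" expression is reported once it fills four of the last five frames. The trade-off is latency: a larger window filters more noise but delays the agent's reaction.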

3.3 Empathic Intelligent Agent

Experts recommend improving the critical thinking of young people. This process involves detecting and analysing all the messages and information that the teenager receives, but young people frequently navigate the Internet without the supervision of any adult. In such scenarios, an intelligent agent supervising all the teenager's activity on the Internet can provide the advice and emotional support that absent adults cannot give. Our proposed architecture for this intelligent agent is composed of three modules: the rational processes module, the affective processes module, and the communication module.

Intelligent agents must be able to reason and establish plans. In our proposal, the rational processes module is based on the BDI (belief-desire-intention) architecture model. A BDI agent is composed of a set of beliefs, desires, and intentions. The beliefs represent the information that the agent has about the state of the environment in which it is located, in this case the social network. The desires are objectives that the agent wants to achieve. In our model, these objectives are a set of pedagogical actions, such as persuading the user to change the privacy of a message. Finally, the intentions are the objectives that the agent selects for execution.
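A BDI deliberation cycle following the stages shown in Figure 1 (belief revision, options, filter, plan selection) might look like the toy Python sketch below. The belief keys, desire names and plan library are hypothetical examples chosen for illustration; a real BDI platform would supply its own reasoning engine and plan language.

```python
from dataclasses import dataclass, field

# Hypothetical plan library: each intention maps to a sequence of actions.
PLANS = {
    "persuade_change_audience": ["warn_about_risk", "suggest_friends_only"],
    "praise_user": ["show_positive_message"],
}


@dataclass
class PedagogicalBDIAgent:
    beliefs: dict = field(default_factory=dict)
    intentions: list = field(default_factory=list)

    def revise_beliefs(self, percept):
        """Belief revision: overwrite beliefs with the latest perceived facts."""
        self.beliefs.update(percept)

    def generate_options(self):
        """Options: desires that the current beliefs make relevant."""
        desires = []
        if self.beliefs.get("risk") == "high":
            desires.append("persuade_change_audience")
        if self.beliefs.get("followed_advice"):
            desires.append("praise_user")
        return desires

    def filter_desires(self, desires):
        """Filter: commit to at most one pedagogical goal per cycle."""
        return desires[:1]

    def step(self, percept):
        """Run one cycle and return the actions of the selected plans."""
        self.revise_beliefs(percept)
        self.intentions = self.filter_desires(self.generate_options())
        # Plan selection: look up a plan for each intention.
        return [action for goal in self.intentions for action in PLANS.get(goal, [])]


agent = PedagogicalBDIAgent()
print(agent.step({"risk": "high"}))
print(agent.step({"risk": "low", "followed_advice": True}))
```

The first call yields the warning plan for a high-risk post; the second, after the user has lowered the risk and followed the advice, yields the positive-feedback plan.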

The affective processes module is composed of four processes: the appraisal process, the emotion representation process, the empathic modulation process, and the selection of affective options process. The appraisal process evaluates each action of the young user and determines the resulting emotion. Appraisal theories hold that when a stimulus is perceived, a set of processes is triggered to evaluate that stimulus (Taverner et al., 2020). As a result of these processes, an emotion is generated. In our model, when the agent perceives an action of the user in the social network, the agent evaluates this action according to its beliefs and desires and, as a result, an emotion is triggered. The emotion representation process represents this emotion in a two-dimensional space based on the dimensions of pleasure and arousal. Then, the empathic modulation process uses the dimensional representation to bring the emotion elicited by the appraisal process closer to the emotion recognized in the user's face. As a result of this process, an empathic emotion that is more in line with the user's emotional state is generated. Finally, the selection of affective options process uses this empathic emotion to identify the applicable plans.
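The empathic modulation step can be illustrated with a simple rule: move the agent's appraised emotion part of the way towards the user's recognised emotion in the pleasure-arousal plane. Linear interpolation with a fixed `weight` is an assumption made for this sketch; the paper does not specify the modulation function, and the example coordinates are made up.

```python
def clamp(value, low=-1.0, high=1.0):
    """Keep a coordinate inside the assumed [-1, 1] range."""
    return max(low, min(high, value))


def empathic_modulation(agent_emotion, user_emotion, weight=0.6):
    """Move the agent's (pleasure, arousal) point towards the user's.

    weight=0 keeps the agent's appraised emotion; weight=1 copies the user's.
    The default of 0.6 is an arbitrary illustrative value.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    return tuple(clamp(a + weight * (u - a)) for a, u in zip(agent_emotion, user_emotion))


# The agent appraises a risky post as unpleasant and activating, while the
# camera suggests the user looks sad (both points are hypothetical).
print(empathic_modulation((-0.4, 0.6), (-0.6, -0.4), weight=0.5))
```

With `weight=0.5` the result is roughly (-0.5, 0.1): the agent stays displeased with the risky post but lowers its arousal towards the user's subdued state, which is consistent with Hoffman's view that an empathic response need only be congruent with, not identical to, the other's emotion.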

The last component is the communication module. This component is responsible for transmitting the actions that must be performed to the agent event manager. It is composed of two processes: the execute action process and the emotion expression process. The first is in charge of executing the plans selected by the plan selection process from the plans generated in the affective options and filter processes. These plans may contain educational actions, privacy recommendations, or affective expressions. Finally, the emotion expression process selects the facial expression that the agent must show and sends it to the agent avatar manager (see Figure 2).
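As a final illustration, the emotion expression process could map the agent's empathic pleasure-arousal point to one of a few avatar expressions by quadrant. The expression names, the neutral radius and the dictionary payload sent to the avatar manager are hypothetical choices for this sketch.

```python
import math


def select_expression(pleasure, arousal, neutral_radius=0.2):
    """Choose a hypothetical avatar expression from a pleasure-arousal point."""
    if math.hypot(pleasure, arousal) < neutral_radius:
        return "neutral"  # weak affect: keep a neutral face
    if pleasure >= 0:
        return "joyful" if arousal >= 0 else "calm"
    return "worried" if arousal >= 0 else "sad"


def avatar_update(message, pleasure, arousal):
    """Bundle a message and an expression for the avatar manager (assumed format)."""
    return {"message": message, "expression": select_expression(pleasure, arousal)}


print(avatar_update("The risk to your privacy is high.", -0.5, 0.1))
```

A quadrant rule keeps the avatar's repertoire small and predictable; a richer avatar could instead blend expressions according to the exact position of the point.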

4 CONCLUSIONS AND FUTURE WORK

Today, social networks are an essential part of many young people's lives. However, using social networks without the necessary skills represents a privacy risk. The best tool to fight the privacy risk is education. In this work we have proposed a model of an empathic pedagogical agent for education in the use of social networks. The agent interacts with young users through the social network, warning them about privacy risks when a publication is made. To make this interaction as natural as possible, we have proposed the use of empathy, which has previously been used in teaching tasks with good results.

Figure 2: Screenshot of the Pesedia social network with the agent avatar interface on the right side. In the screenshot, the user Alice drafts the post “I'm staying home today” with the audience set to “All”; a privacy risk alert states that the message contains information about her location and offers the options “Continue posting” and “Modify audience”, while the agent avatar says: “The risk to your privacy is high, I think you should only share it with your group of friends.”

Our empathic pedagogical agent model will be tested on the Pesedia social network to determine whether it improves young people's perception of privacy risk. Further experiments will be performed to test whether the agent is able to provide young people with the necessary skills to manage privacy risk in social networks.

ACKNOWLEDGEMENTS

This work is partially supported by the Spanish Government project TIN2017-89156-R; Generalitat Valenciana and European Social Fund by the FPI grant ACIF/2017/085; GVA-CEICE project PROMETEO/2018/002; and TAILOR, a project funded by the EU Horizon 2020 research and innovation programme under GA No 952215.

REFERENCES

Acquisti, A., Adjerid, I., Balebako, R., Brandimarte, L., Cranor, L. F., Komanduri, S., Leon, P. G., Sadeh, N., Schaub, F., Sleeper, M., et al. (2017). Nudges for privacy and security: Understanding and assisting users' choices online. ACM Computing Surveys (CSUR), 50(3):1–41.

Alemany, J., del Val, E., Alberola, J., and García-Fornes, A. (2018). Estimation of privacy risk through centrality metrics. Future Generation Computer Systems, 82:63–76.

Alemany, J., del Val, E., Alberola, J., and García-Fornes, A. (2019). Enhancing the privacy risk awareness of teenagers in online social networks through soft-paternalism mechanisms. International Journal of Human-Computer Studies, 129:27–40.

Alemany, J., del Val, E., and García-Fornes, A. (2020). Assisting users on the privacy decision-making process in an OSN for educational purposes. In International Conference on Practical Applications of Agents and Multi-Agent Systems, pages 379–383. Springer.

Alfonso, B., Vivancos, E., and Botti, V. (2017). Toward formal modeling of affective agents in a BDI architecture. ACM Transactions on Internet Technology (TOIT), 17(1):5.

Alfonso, B., Vivancos, E., Botti, V., and Hernández, P. (2015). Building emotional agents for strategic decision making. In Proceedings of the International Conference on Agents and Artificial Intelligence, volume 2, pages 390–397. SCITEPRESS.

Argente, E., Vivancos, E., Alemany, J., and García-Fornes, A. (2017). Educating in privacy in the use of social networks. Education in the Knowledge Society, 18(2):107–126.

Ballesteros, J. C. and Picazo, L. (2018). ICTs and their influence on adolescent socialization. (In Spanish).

Barrón-Estrada, M. L., Zatarain-Cabada, R., Pérez, Y. H., et al. (2012). An intelligent and affective tutoring system within a social network for learning mathematics. In Ibero-American Conference on Artificial Intelligence, pages 651–661. Springer.

Blair, R. J. R. (2008). Fine cuts of empathy and the amygdala: dissociable deficits in psychopathy and autism. Quarterly Journal of Experimental Psychology, 61(1):157–170.

Botti-Cebriá, V., del Val, E., and García-Fornes, A. (2020). Automatic detection of sensitive information in educative social networks. In Conference on Complex, Intelligent, and Software Intensive Systems, pages 184–194. Springer.

Cuff, B. M., Brown, S. J., Taylor, L., and Howat, D. J. (2016). Empathy: A review of the concept. Emotion Review, 8(2):144–153.

Fuentes, J. M., Taverner, J., Rincon, J. A., and Botti, V. (2020). Towards a classifier to recognize emotions using voice to improve recommendations. In International Conference on Practical Applications of Agents and Multi-Agent Systems, pages 218–225. Springer.

García-Peñalvo, F. J. and Kearney, N. A. (2016). Networked youth research for empowerment in digital society: The WYRED project. In Proceedings of the Fourth International Conference on Technological Ecosystems for Enhancing Multiculturality, page 39. Association for Computing Machinery.

Gómez-Galán, J., Martínez-López, J. Á., Lázaro-Pérez, C., and Sarasola Sánchez-Serrano, J. L. (2020). Social networks consumption and addiction in college students during the COVID-19 pandemic: Educational approach to responsible use. Sustainability, 12(18):7737.

Grawemeyer, B., Johnson, H., Brosnan, M., Ashwin, E., and Benton, L. (2012). Developing an embodied pedagogical agent with and for young people with autism spectrum disorder. In International Conference on Intelligent Tutoring Systems, pages 262–267. Springer.

Hoffman, M. L. (2001). Empathy and moral development: Implications for caring and justice. Cambridge University Press.

Jasmontaite, L. and De Hert, P. (2015). The EU, children under 13 years, and parental consent: a human rights analysis of a new, age-based bright-line for the protection of children on the Internet. International Data Privacy Law, 5(1):20–33.

Karal, H., Kokoc, M., and Cakir, O. (2017). Impact of the educational use of Facebook group on the high school students' proper usage of language. Education and Information Technologies, 22(2):677–695.

Kauppinen, A. (2014). Empathy, emotion regulation, and moral judgment. Oxford University Press.

Machimbarrena, J. M., Calvete, E., Fernández-González, L., Álvarez-Bardón, A., Álvarez-Fernández, L., and González-Cabrera, J. (2018). Internet risks: An overview of victimization in cyberbullying, cyber dating abuse, sexting, online grooming and problematic internet use. International Journal of Environmental Research and Public Health, 15(11):2471.

Micheli, M. (2016). Social networking sites and low-income teenagers: between opportunity and inequality. Information, Communication & Society, 19(5):565–581.

Obaid, M., Aylett, R., Barendregt, W., Basedow, C., Corrigan, L. J., Hall, L., Jones, A., Kappas, A., Küster, D., Paiva, A., et al. (2018). Endowing a robotic tutor with empathic qualities: Design and pilot evaluation. International Journal of Humanoid Robotics, 15(06):1850025.

Paiva, A., Leite, I., Boukricha, H., and Wachsmuth, I. (2017). Empathy in virtual agents and robots: a survey. ACM Transactions on Interactive Intelligent Systems (TiiS), 7(3):1–40.

Primack, B. A., Karim, S. A., Shensa, A., Bowman, N., Knight, J., and Sidani, J. E. (2019). Positive and negative experiences on social media and perceived social isolation. American Journal of Health Promotion, 33(6):859–868.

Rodrigues, S. H., Mascarenhas, S., Dias, J., and Paiva, A. (2015). A process model of empathy for virtual agents. Interacting with Computers, 27(4):371–391.

Ruiz-Dolz, R., Heras, S., Alemany, J., and García-Fornes, A. (2019). Towards an argumentation system for assisting users with privacy management in online social networks. In CMNA@PERSUASIVE, pages 17–28.

Russell, J. A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39(6):1161–1178.

Serholt, S. and Barendregt, W. (2016). Robots tutoring children: Longitudinal evaluation of social engagement in child-robot interaction. In Proceedings of the 9th Nordic Conference on Human-Computer Interaction, pages 1–10.

Sharifara, A., Rahim, M. S. M., and Anisi, Y. (2014). A general review of human face detection including a study of neural networks and Haar feature-based cascade classifier in face detection. In 2014 International Symposium on Biometrics and Security Technologies (ISBAST), pages 73–78. IEEE.

Silva, C. S., Barbosa, G. A., Silva, I. S., Silva, T. S., Mourão, F., and Coutinho, F. (2017). Privacy for children and teenagers on social networks from a usability perspective: a case study on Facebook. In Proceedings of the 2017 ACM on Web Science Conference, pages 63–71.

Stueber, K. (2013). Empathy. In E. N. Zalta (Ed.), The Stanford Encyclopedia of Philosophy (http://plato.stanford.edu/entries/empathy/). Stanford: The Metaphysics Research Lab, Stanford University.

Taverner, J., Alfonso, B., Vivancos, E., and Botti, V. J. (2018a). Modeling personality in the affective agent architecture GenIA3. In International Conference on Agents and Artificial Intelligence, pages 236–243.

Taverner, J., Ruiz, R., del Val, E., Diez, C., and Alemany, J. (2018b). Image analysis for privacy assessment in social networks. In International Symposium on Distributed Computing and Artificial Intelligence, pages 1–4. Springer.

Taverner, J., Vivancos, E., and Botti, V. (2020). A fuzzy appraisal model for affective agents adapted to cultural environments using the pleasure and arousal dimensions. Information Sciences.

Taverner, J., Vivancos, E., and Botti, V. (2020). A multidimensional culturally adapted representation of emotions for affective computational simulation and recognition. IEEE Transactions on Affective Computing, pages 1–1.

Yalçın, Ö. N. (2020). Empathy framework for embodied conversational agents. Cognitive Systems Research, 59:123–132.