Heterogeneous Preferences and Patterns of Contribution in

Cybersecurity as a Public Good

Mazaher Kianpour

a

Department of Information Security and Communication Technology, Norwegian University of Science and Technology,

Teknologivegen 22, 2815 Gjøvik, Norway

Keywords:

Cybersecurity Economics, Social Preferences, Public Goods, Agent-based Modeling.

Abstract:

This paper presents an agent-based model of contribution to cybersecurity as a participatory public good.

Ineffective cybersecurity measures pose serious threats and risks to the development and stability of infor-

mation societies in the world. Hence, different doctrines and thesis have been suggested to explore how this

domain should be treated by the public and private stakeholders. Cybersecurity as a public good is one of

these doctrines that accordingly, cybersecurity is non-rivalrous and non-excludable. In this paper, we present a

model of social preferences reflecting the concepts of altruism, individualism, aggressiveness, and reciprocity.

It describes an agent-based model simulating a repeated public goods game among a set of defenders that

are in an uncertain environment with incomplete and imperfect information. In the model, defenders have a

probability to choose contribution or being a free-rider, depending on their own preferences and facing with

revealed preferences of other defenders. This model implements a utility maximization that applies to each

individual, modeling the existence of free-riders, punishments, and interdependency of decisions on the social

context. The results of this simulation show that, over time, defenders update their preferences in reaction to

the behavior of other defenders and the experience of cyber-attacks. Moreover, they indicate a high level of

contribution to the provision of cybersecurity as a public good and the effectiveness of punishment on increas-

ing the contributions. This paper demonstrated how agent-based models can be used to examine this doctrine

and investigate whether this doctrine complies with the unique characteristics of cybersecurity.

1 INTRODUCTION

Behavioral economics has discovered systematic hu-

man behavior in many domains including cybersecu-

rity. These behaviors, such as nonlinear probability

weighting, conditional cooperation, and loss aversion,

show that human behavior is inherently noisy and het-

erogeneous. Recent research gives a nuanced view of

the results from experimental games

1

in which the no-

tion that humans are purely self-regarded is rejected.

Although this is not a new discussion among exper-

imental economists, we believe that this notion has

not been studied in the context of cybersecurity eco-

nomics. The results from (Kianpour et al., 2019)

show that social preferences in cybersecurity decision

making is not insignificant and have a moderating ef-

fect on the behavior of actors.

a

https://orcid.org/0000-0003-2804-4630

1

The ultimatum game, the gift exchange game, trust

game and the public goods game are among the widely

replicated experimental games to build this evidence.

Cybersecurity covers a vast domain that includes de-

signing and development of robust systems against

attacks, deployment of methods to detect anomalies

and guarantee the system’s resilience, and defining

response and recovery mechanisms to attacks. All

these are essential requirements of societies that rely

on digital infrastructure. Moreover, it is estimated that

more than $5trillion assets will be at risk in the next

five years due to the rapid digitalization. Hence, in-

vestment in cybersecurity and how this domain should

be treated by the public and private sectors has been a

hot topic among the business leaders.

In 2011, Mulligan and Schneider proposes to

frame and manage cybersecurity as a public good

(Mulligan and Schneider, 2011). While Mulligan

doctrine demonstrates rational, defensible and legit-

imate arguments, it has not gone beyond an acknowl-

edgment that the benefits of cybersecurity are to some

degree non-rivalrous and non-excludable, and has not

explored the aspects of both cybersecurity and pub-

lic goods that contribute on efficiency and effective-

414

Kianpour, M.

Heterogeneous Preferences and Patterns of Contribution in Cybersecurity as a Public Good.

DOI: 10.5220/0010336204140421

In Proceedings of the 13th International Conference on Agents and Artificial Intelligence (ICAART 2021) - Volume 1, pages 414-421

ISBN: 978-989-758-484-8

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

ness of cybersecurity provision. Accordingly, we are

not trying to provide normative justification for gov-

ernments to invest more heavily in cybersecurity as

a public good. Conversely, we aim to investigate

whether this idea matches the existing theories and

how this doctrine affects the resilience of such dy-

namic and uncertain environments like digital ecosys-

tems. Therefore, the aim of this study is to construct

an agent-based model that captures the main elements

of public goods theory (i.e. free-riders problem, ef-

fectiveness of punishment, and network effects) and

investigate whether it complies with the unique char-

acteristics of cybersecurity (i.e. dynamic and uncer-

tain environment with incomplete and imperfect in-

formation, and difficulty in assessing the cybersecu-

rity value and cyber risks). In this study, we look at

how agent-based modeling (ABM) can contribute to

exploring macro outcomes of collective contributions

of agents to provide cybersecurity as a public good

considering the heterogeneous social preferences of

agents. Introduction of social preferences into stan-

dard models in economic theory provides us with a

better understanding of different phenomena.

This paper proceeds first by reviewing cybersecu-

rity as a public good. Therefore, in Section 2, we re-

view the previous research on cybersecurity as a pub-

lic good. We present our ABM in Section 3. Section

4 demonstrates the results of the simulation and sen-

sitivity analysis. Finally, this paper is concluded in

Section 5 with suggestions for future work.

2 CYBERSECURITY AS A

PUBLIC GOOD

Samuelsen defines public goods as non-rival and non-

excludable goods in consumption (Samuelson, 1954).

The former implies that once the good is produced,

it can be consumed by other consumers at no addi-

tional cost. The latter, however, is sometimes added

and specifies that consumers cannot be excluded from

consumption of the good once produced. Goods with

these characteristics are often produced with some

form of public assistance (e.g. tax). Accurate pro-

duction and provision of these goods compared to

the level that would be best for society is the main

challenge of policy makers. Some public goods are

best created by direct government provisioning, while

other may be best created by the all beneficiaries as a

participatory public good. Participatory public goods

are created best by changing individuals and organi-

zations’ incentives through different policies and reg-

ulations.

Consumption of a public good by an end-user does

not necessarily have to be free of charge, however, it

is essential that its costs do not become a discriminat-

ing factor, and consequently, determining access and

use of it. From an economic point of view, cybersecu-

rity has been treated as a club good, in that it is non-

rivalrous (i.e. it is not exhausted by its use), but ex-

cludable (i.e. its access is regulated by its cost). The

failure of the market to produce adequate investments

in cybersecurity is documented in (Rowe and Galla-

her, 2006; Etzioni, 2011) and addresses the challenges

of managing cybersecurity as a club good.

Taddeo argues that considering cybersecurity as a

public good will be a step in the right direction to

support policy and governance approaches that will

foster robust, open, pluralistic, and stable information

societies (Taddeo, 2019). She elaborates managing

cybersecurity as a public good brings the advantages

of systemic approaches to security, shared responsi-

bilities among different stakeholders; and facilitation

of collaboration. Asllani et al. also explores the

role of establishing an appropriate legal, social, and

ethical framework to enhance cybersecurity (Asllani

et al., 2013). The authors compare the cybersecurity

with safety and conclude that financing of cybersecu-

rity by taxes justifies the significant role of govern-

ments in enhancing cybersecurity. Comparison of cy-

bersecurity with other public goods is not limited to

public safety and other researchers also compared it

with public health. Sedenberg and Mulligan evaluated

different cybersecurity information sharing proposals

leaning on the analogous public good-oriented field of

public health, and proposed some recommendations

to orient cybersecurity policies adopt the doctrine of

public cybersecurity (Sedenberg and Mulligan, 2015).

Reviewing the literature shows that there are dif-

ferent arguments favoring treating cybersecurity as a

public good and the public goods theory plays a rel-

atively minor role in both cybersecurity policy and

practices. Although appraisal of these arguments are

beyond the scope of this research, we attempt to quan-

titatively analyze whether the context of cybersecurity

complies with this theory and employing this theory

maintains the robustness and resilience of the such dy-

namic and stochastic environment in presence of var-

ious externalities.

3 MODEL

In the model, there are N ≥2 organization which each

of them has an initial resource R ≥ 0, expressed in

monetary units. The organizations simultaneously de-

cide on their respective contributions c

i

≥ 0 to invest

on security measures (SM). The total contributions to-

Heterogeneous Preferences and Patterns of Contribution in Cybersecurity as a Public Good

415

wards the cybersecurity provision using these mea-

sures is given by C =

∑

n

i=1

c

i

. The monetary gain of

organization i ∈ N is given by

g

i

=

R −c

i

+ (ROSI ×Cost

SM

) W /O punishment

R −c

i

+ (ROSI ×Cost

SM

) −Cost

p

∑

j6=i

p

i j

−

∑

j6=i

p

ji

W / punishment

(1)

where Cost

SM

is the annual cost of deployment and

maintenance of the security measure (SM), and ROSI

is the return on security investment by the all organi-

zations arising from implementation of security mea-

sures. In the public goods theory literature, this pri-

vate benefit is called the marginal per capita return

(MPCR). In our model, we calculate this variable as

follows

ROSI =

ALE −(ALE ×(1 −RM)) −AC

SM

AC

SM

(2)

where ALE, RM and AC are the annual loss ex-

pectancy, mitigated risk by implementation of the se-

curity measure, and annual cost of it, respectively. On

the other side, the return on the conducted attack for

the attackers will be calculated by

ROA =

EMG −(EMG ×RM) −Cost

att

Cost

att

(3)

where EMG and Cost

att

are the expected monetary

gain and cost of the conducted attack, respectively.

An experimental game played in western countries,

where the rule of law and the norm of civic co-

operation are high, shows that punishment of non-

contributors by contributors is common (Herrmann

et al., 2008). Hence, in Equation 1, we added inves-

tigate two situations; with and without punishment.

Punishment incurs expenses on both sides. There-

fore, it is likely that contributors ignore punishment

considering the cost of punishment (Cost

p

) and their

social preferences. For example, altruistic defenders

are willing less, in compare to aggressive defenders,

to punish the non-contributor.

Considering our discussion in introduction, so-

cial preferences models with risk aversion may break

down into two main elements of self-regarding and

other-regarding preferences. With this in mind, we

express our utility function as below:

π

i

(g

i

,g

j

) = g

i

−α

i

max[g

j

−g

i

,0] −β

i

max[g

i

−g

j

,0]

(4)

where α

i

and β

i

are constant elasticity of substitution

in this function to exhibit the elasticity of the ratio

of altruism and individualism, respectively. Figure 1

depicts these two elements. In our model −100 ≤

α

i

≤ 100 and 0 ≤β

i

≤ 100.

cooperative

competitive

¼

other

¼

self

0

altruistic

agressive

individualistic

(0,100)

(-)

( )+

+

(-)

Figure 1: The value orientation ring with radius r = 100. The 24 possible choices are represented

by the 24 points on the ring, separated from each other by a

π

12

angle.

unknown subject during each treatment.

The two treatments

The basic game is the following. For each alternative of the ring test, we add two new steps.

Accordingly, the game is composed of 24 sets of 3 steps:

1. The first step consists of an initial ring test alternative (see sections 2.2 and 2.3). Two

allocations are proposed to the dictator who chooses one of the form (π

i

, π

j

).

2. In the second step, the dictator has the possibility to make additionally a transfer t to

the other player, the only constraint being that t ≥ 0.

3. The third step defines the way the transfer occurs and so, the treatment.

• For the basic treatment (treatment 0), the transfer occurs so that players are

endowed with (π

i

− t, π

j

+ t).

• For the second treatment (treatment 1), the transfer is completed and matched by

the experimenters so that the final allocation vector is (π

i

− t, π

j

+ 2 t).

• Other treatments using uncertainty about the transfer are in discussion and could

be used in alternative experimental settings.

Specific features of the transfer game

Payoffs are expressed in points. The conversion rate from point to money is given at the

beginning of the experiment. The summary of initial endowments, transfers, final endow-

ments and the sum is only displayed at the end of the completed transfer game, i.e. after

the completion of both treatments.

5

Figure 1: Social Orientation Value Ring.

The static equilibrium of this game, when all the

quantities have unchanging values and organizations

are self-regarded, is zero contributions (∀i ∈ N : c

i

=

0). Furthermore, (Isaac et al., 1982) shows that the

social optimum will be achieved under ∀i ∈ N : c

i

=

R. However, in presence of externalities and other-

regarding preferences which are highly feasible in our

study and the context of cybersecurity, these funda-

mental theorems need not hold. Assuming that the

preferences of all the agents are separable, Dufwen-

berg proposed a general equilibrium for the con-

ditions that other-regarding preferences exist in the

market, particularly if it is competitive (Dufwenberg

et al., 2011). Presence of externalities also does not

necessarily result in a socially desirable distribution

of resources. Therefore, to investigate all these pos-

sible conditions, we implement an agent-based model

to investigate the feasible development of outcomes.

3.1 Agent-based Model

The ABM presented in this paper models the impor-

tance of an agent’s social preferences on the decision

to cooperate or not cooperate in providing cyberse-

curity in the environment as a participatory public

good (i.e. requires the beneficiaries to participate in

creation of the good). In this model, defenders play

a standard public good game where in each period,

each defender makes the decision to contribute to im-

plement the security measures with specific cost and

applications. In case of attack, if the measure is im-

plemented adequately, the attack fails and at the end

of period, the calculated ROSI shares equally among

all the defenders. Otherwise, the impact of conducted

attack will be reduces of the attack target’s resource

and adds to the attacker resource.

Five cyber attacks with different levels of impact

occur in each period. These attacks and their corre-

sponding impacts and costs are extracted from (Bis-

ICAART 2021 - 13th International Conference on Agents and Artificial Intelligence

416

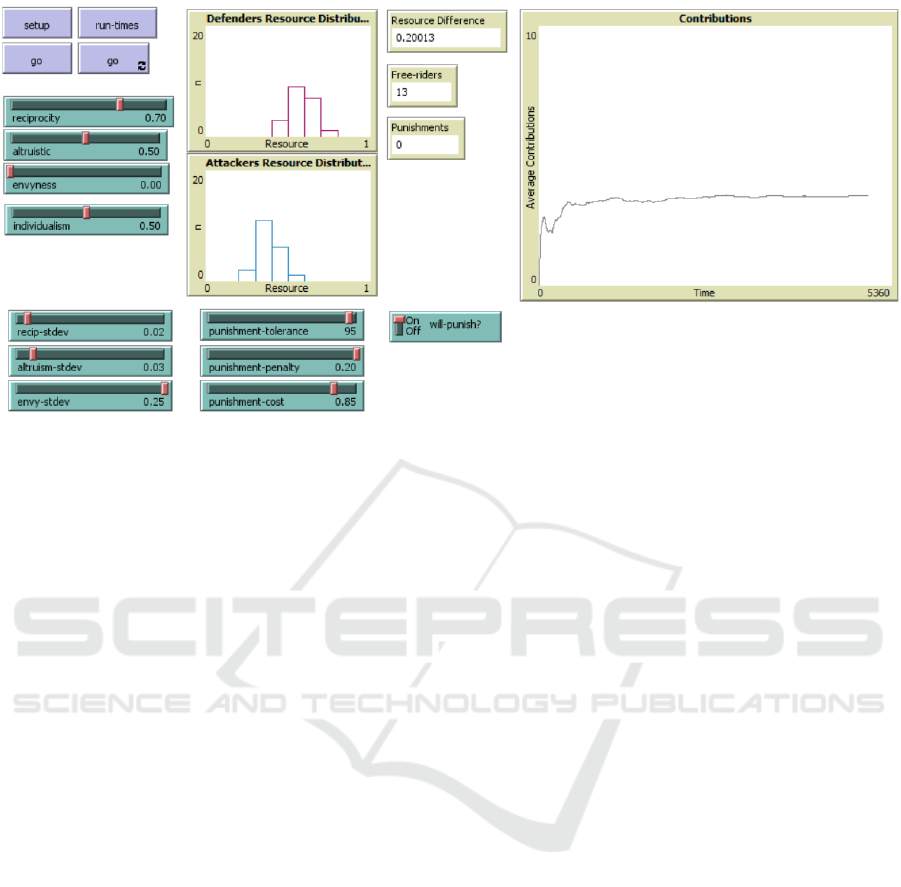

Figure 2: A screenshot of the implemented model using NetLogo 6.1.1.

sell et al., 2019). The attackers have no information

regarding the implemented measures and defenders.

However, defenders have the information regarding

the contributions of other defenders. Accordingly, to

store this information and introduce the reciprocity

behavior into the model, all the defenders have their

own memory which stores the attacks that have oc-

curred to them, the defenders that they have punished

and the defenders that were punished by.

The model is written in NetLogo (see Figure 2)

and each step of the simulation represents one day and

the simulation period is 365 steps, equivalent with one

year. The probability of cooperation of each defender

in each period is based on personal motivation, level

of resource, and experience. The defenders do not

know the contribution probability of the other defend-

ers and the attack likelihood, however, the are able to

observe if any contribution is made or any attack has

occurred. Thus, the game is implemented with incom-

plete and imperfect information among the agents.

4 RESULTS

This section discusses the results of the implemen-

tation of our ABM. The results show that the model

replicates the general features of public goods theory

and presents the outcomes of the players decision in

the game focusing on their social preferences. Firstly,

we look at pure social preferences (Reciprocity Ratio

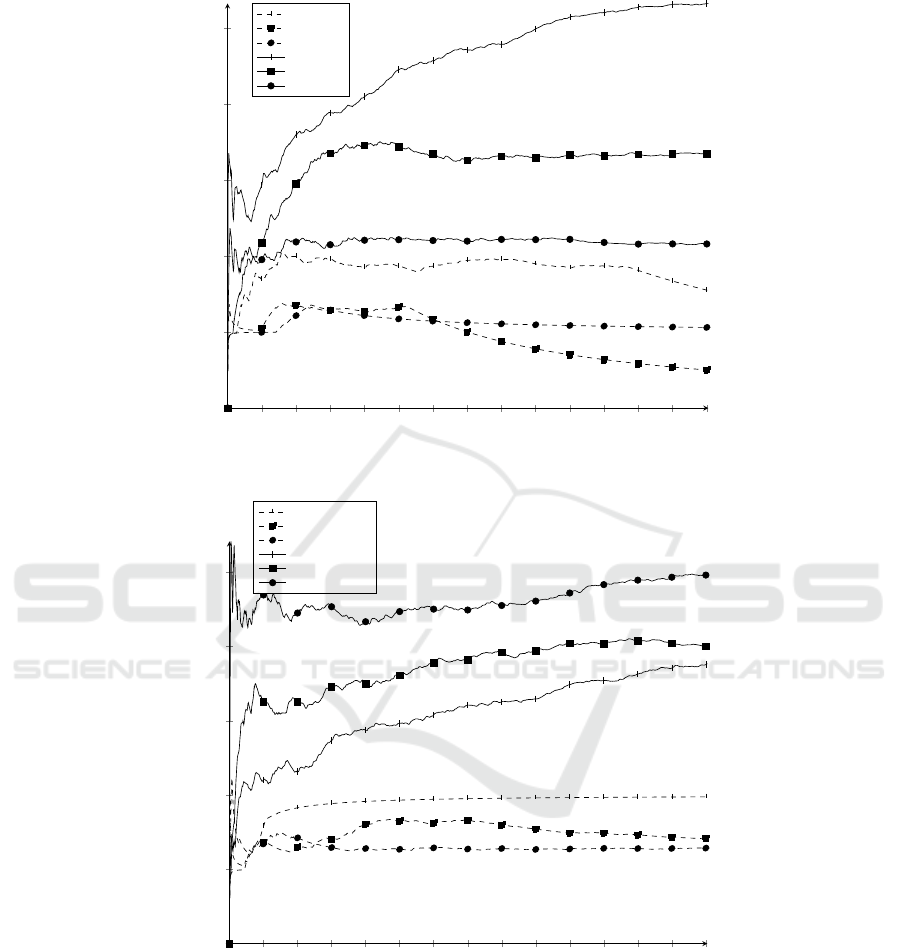

= 0) with and without punishment. Figure 3 shows the

average contributions made by the defenders to pro-

tect their environment and maintain their robustness

in 15 years. The figure shows that punishment dra-

matically promotes contribution. It also shows that

altruistic preferences increases over time whereas the

individual and and aggressive preferences reach to a

constant level of contribution after first five periods of

the simulation.

Reciprocity affects the choice of those who choose

later. Figures 5 and 4 show the results of simula-

tion run in cooperative and competitive modes, re-

spectively, with different reciprocity ratio. As we

observe, the possibility of punishment alters the re-

sults in both modes. In cooperative mode with pun-

ishment, increase in reciprocal behavior increases the

average contribution. In contrast, without punish-

ment, increase in reciprocal behavior decreases the

contributions among the defenders. The reason of this

phenomenon is inequity aversion which is described

in (Fehr and Schmidt, 1999; Bolton and Ockenfels,

2000). Inequity aversion is the preference for fairness

and resistance to incidental inequalities. With higher

reciprocity ratio, defenders care more about interper-

sonal comparisons of their own payoff and the payoffs

of others. Therefore, increase in contribution moti-

vates them more to contribute and vice-versa. More-

over, these results show that despite the heteroge-

neous preferences among the agents, they gradually

change to a homogeneous behavior to contribute in

provision of cybersecurity and maintain the resiliency

of the environment. To put it more generally, we ob-

serve that in a dynamic and stochastic environment,

logic at the level of the system can not be easily in-

ferred from logic at the level of the agents.

Heterogeneous Preferences and Patterns of Contribution in Cybersecurity as a Public Good

417

6 No Author Given

2

3

4

5

6

7

8

9

10

11

12

13

14

15

1

2

3

4

5

Time

Average Contribution

Social Preferences

Alt - OFF

Ind - OFF

Agg - OFF

Alt - ON

Ind - ON

Agg - ON

Fig. 2. Social Preferences with punishment (ON) and without punishment (OFF)

probability of cooperation of each defender in each period is based on personal

motivation, level of resource, and experience. The defenders do not know the

contribution probability of the other defenders and the attack likelihood, how-

ever, the are able to observe if any contribution is made or any attack has

occurred. Thus, the game is implemented with incomplete and imperfect infor-

mation among the agents.

4 Results

This section discusses the results of the implementation of our ABM. The results

show that the model replicates the general features of public goods theory and

presents the outcomes of the players decision in the game focusing on their social

preferences. Firstly, we look at pure social preferences (Reciprocity Ratio = 0)

with and without punishment. Figure 2 shows the average contributions made by

the defenders to protect their environment and maintain their robustness in 15

years. The figure shows that punishment dramatically promotes contribution. It

also shows that altruistic preferences increases over time whereas the individual

Figure 3: Social Preferences with punishment (ON) and without punishment (OFF).

Title Suppressed Due to Excessive Length 7

2

3

4

5

6

7

8

9

10

11

12

13

14

15

1

2

3

4

5

Time

Average Contribution

Cooperation

Recip 0 - OFF

Recip 0.5 - OFF

Recip 1 - OFF

Recip 0 - ON

Recip 0.5 - ON

Recip 1 - ON

Fig. 3. Cooperation with punishment (ON) and without punishment (OFF)

and and aggressive preferences reach to a constant level of contribution after

first five periods of the simulation.

Reciprocity affects the choice of those who choose later. Figures 4 and 3 show

the results of simulation run in cooperative and competitive modes, respectively,

with different reciprocity ratio. As we observe, the possibility of punishment al-

ters the results in both modes. In cooperative mode with punishment, increase

in reciprocal behavior increases the average contribution. In contrast, without

punishment, increase in reciprocal behavior decreases the contributions among

the defenders. The reason of this phenomenon is inequity aversion which is de-

scribed in [8, 4]. Inequity aversion is the preference for fairness and resistance to

incidental inequalities. With higher reciprocity ratio, defenders care more about

interpersonal comparisons of their own payoff and the payoffs of others. There-

fore, increase in contribution motivates them more to contribute and vice-versa.

Moreover, these results show that despite the heterogeneous preferences among

the agents, they gradually change to a homogeneous behavior to contribute in

provision of cybersecurity and maintain the resiliency of the environment. To

put it more generally, we observe that in a dynamic and stochastic environment,

Figure 4: Cooperation with punishment (ON) and without punishment (OFF).

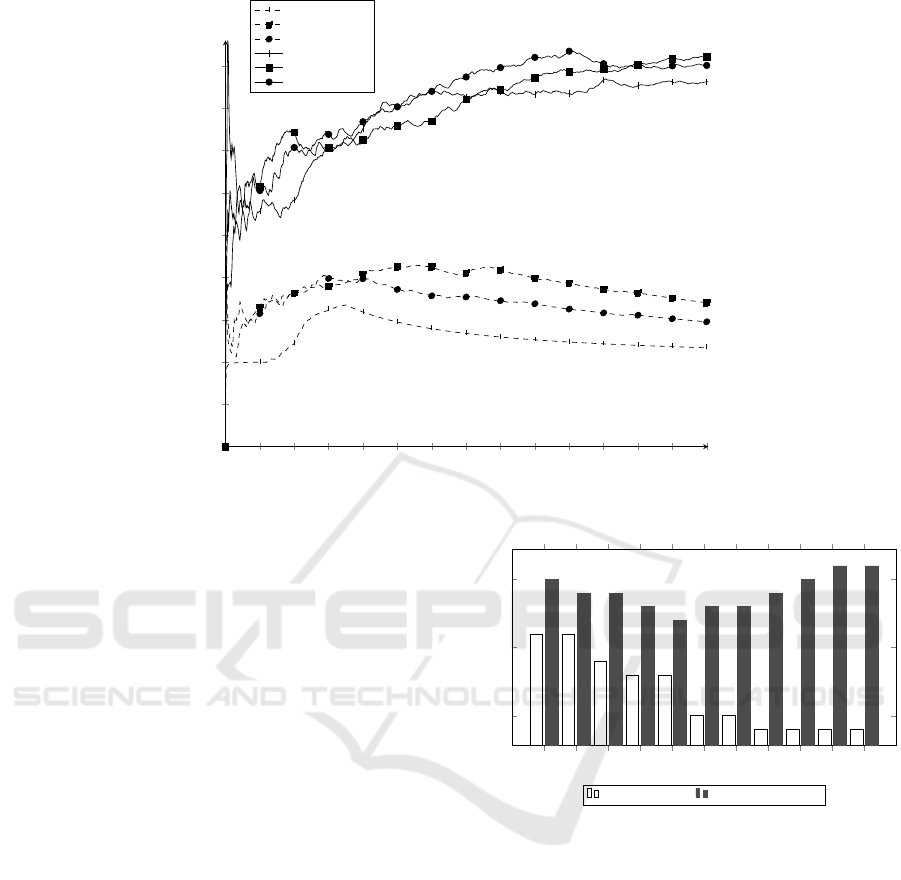

4.1 Sensitivity Analysis

Sensitivity analysis (parameter variability) technique

consists of changing the values of the inputs and pa-

rameters of a model to determine the effect upon the

model’s behavior or output. We used the quantita-

tive approach to investigate both direction and mag-

nitudes of the outputs. The outputs that we examined

in this study are the number of free-riders, resource

difference between defenders and attackers, and the

spending on punishments by contributors. Figure 6

show the result of our analysis on the number of free-

riders in cooperative mode, with and without punish-

ment. As this figure shows, the number of free-riders

increases with the increase in reciprocity ratio if con-

tributors do not punish the non-contributors. We ob-

ICAART 2021 - 13th International Conference on Agents and Artificial Intelligence

418

8 No Author Given

2

3

4

5

6

7

8

9

10

11

12

13

14

15

0.5

1

1.5

2

2.5

3

3.5

4

4.5

Time

Average Contribution

Competition

Recip 0 - OFF

Recip 0.5 - OFF

Recip 1 - OFF

Recip 0 - ON

Recip 0.5 - ON

Recip 1 - ON

Fig. 4. Competition with punishment (ON) and without punishment (OFF)

logic at the level of the system can not be easily inferred from logic at the level

of the agents.

4.1 Sensitivity Analysis

Sensitivity analysis (parameter variability) technique consists of changing the

values of the inputs and parameters of a model to determine the effect upon the

model’s behavior or output. We used the quantitative approach to investigate

both direction and magnitudes of the outputs. The outputs that we examined in

this study are the number of free-riders, resource difference between defenders

and attackers, and the spending on punishments by contributors. Figure 5 show

the result of our analysis on the number of free-riders in cooperative mode, with

and without punishment. As this figure shows, the number of free-riders increases

with the increase in reciprocity ratio if contributors do not punish the non-

contributors. We observed the same trend in competition mode. As we pointed

out earlier, this shows the change of preferences in this highly interdependent

and dynamic environment.

Figure 5: Competition with punishment (ON) and without punishment (OFF).

served the same trend in competition mode. As we

pointed out earlier, this shows the change of prefer-

ences in this highly interdependent and dynamic en-

vironment.

In our model, resource difference between defend-

ers and attackers shows the resilience of the environ-

ment against the cyber attacks, in that positive value

means that defenders were able to maintain the ro-

bustness of their systems by taking advantage of the

implemented security measures, and negative value

means attackers were successful in breaching into the

defenders’ systems. Our sensitivity analysis shows

that cooperation mode with medium reciprocity ra-

tio has the best results to maintain the environment

resilient. Furthermore, punishments arise out of dis-

satisfaction towards the contribution of other defend-

ers and imposes cost to both sides (See equation 1).

Therefore, defenders can choose whether they are

willing, and they are able to punish those who under

contribute less in each period. Spending on the pun-

ishments in aggressive mode with lowest reciprocity

behavior has the highest value in our repeated games.

These results show the importance of reciprocal be-

havior in interactions among defenders and change of

preferences based on the experience and behavior of

other peers.

Title Suppressed Due to Excessive Length 9

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

5

10

15

11 11

9

8 8

5 5

4 4 4 4

15

14 14

13

12

13 13

14

15

16 16

Reciprocity Ratio

#Free-riders

With Punishment Without Punishment

Fig. 5. Impact of reciprocal behavior on the number of free-rider in cooperative mode

(N = 20)

In our model, resource difference between defenders and attackers shows the

resilience of the environment against the cyber attacks, in that positive value

means that defenders were able to maintain the robustness of their systems

by taking advantage of the implemented security measures, and negative value

means attackers were successful in breaching into the defenders’ systems. Our

sensitivity analysis shows that cooperation mode with medium reciprocity ratio

has the best results to maintain the environment resilient. Furthermore, pun-

ishments arise out of dissatisfaction towards the contribution of other defenders

and imposes cost to both sides (See equation 1). Therefore, defenders can choose

whether they are willing, and they are able to punish those who under contribute

less in each period. Spending on the punishments in aggressive mode with lowest

reciprocity behavior has the highest value in our repeated games. These results

show the importance of reciprocal behavior in interactions among defenders and

change of preferences based on the experience and behavior of other peers.

4.2 Validation

The model validation is a process of assessing the degree to which the model is a

reasonable representation of the real world from the perspective of the model’s

intended applications. A clear understanding of the phenomena to be described

by the model and the testing the simplest behavior rules are the key to reliable

ABM validation [15]. Validation has a rigorous-relevance issue. The most rigorous

validation is data based, however, in order to conduct a rigorous validation for

such a complex problem, we require collection of data for many years. Therefore,

we employ other methods of validation in this study. Sargent proposed different

Figure 6: Impact of reciprocal behavior on the number of

free-rider in cooperative mode (N = 20).

4.2 Validation

The model validation is a process of assessing the

degree to which the model is a reasonable represen-

tation of the real world from the perspective of the

model’s intended applications. A clear understand-

ing of the phenomena to be described by the model

and the testing the simplest behavior rules are the key

to reliable ABM validation (Ormerod and Rosewell,

2006). Validation has a rigorous-relevance issue. The

most rigorous validation is data based, however, in

order to conduct a rigorous validation for such a com-

plex problem, we require collection of data for many

years. Therefore, we employ other methods of vali-

dation in this study. Sargent proposed different meth-

ods of validity for simulation models (Sargent, 2013).

Heterogeneous Preferences and Patterns of Contribution in Cybersecurity as a Public Good

419

This paper mainly studies the result of framing and

managing cybersecurity as a public good, rather than

specifically predicting the agents behavior in the envi-

ronment. Therefore, we only test replicative validity

(i.e. comparison to other models and determining the

internal stochastic variability in the model).

There are four levels of model performance for

replication validity (Axtell and Epstein, 1994). Since,

it would not be realistic to achieve the highest level

(i.e. the model behavior is in quantitative agreement

with empirical micro-structures, actual human behav-

ior) due to inherent uncertainty in human behavior

and the random events in reality, we satisfy the crite-

ria of the third level which is quantitative agreement

with empirical macro-structures. The results of this

simulation model are compared with empirical data

from previous studies (Falk and Fischbacher, 2001;

Fischbacher and Gachter, 2010; Lacomba and L

´

opez-

P

´

erez, 2015). The evidence shows that the agents be-

havior in this model under all the conditions (i.e. with

punishment, without punishment and reciprocity) is

in line with the empirical data.

5 CONCLUSION

This study shows that agent-based modeling of com-

plex socio-technical systems can be valuable for test-

ing fundamental theories which are difficult to in-

spect mathematically and experimentally. We pre-

sented a model to explore the interdependence of in-

dividual decisions in a repeated public goods game

that treats cybersecurity as a public good. This model

maps agents’ preferences to choices of contribution

and punishment. Repeated interactions among the de-

fenders that are able to remember their experience of

cyber attacks, punishments and contributions by oth-

ers lead to a convergence of individual preferences

and emergence of a cooperative behavior as a result.

Heterogeneity of agents is represented by heteroge-

neous social preferences with different reciprocal be-

havior, various level of resources and different source

of incentives. All these parameters affect on the prob-

ability of the contribution and punishment of non-

contributors.

We acknowledge that numerous externalities in

the context of cybersecurity and difficulty in assess-

ing the cybersecurity value and cyber risks cause mis-

aligned incentives and information asymmetry which

all contribute to poor cybersecurity investment and

management. However, this study suggests that the

theory of public goods should play a more significant

role in how we treat cybersecurity in the fast devel-

oping societies to maintain robust and resilient digital

ecosystems. Moreover, it shows that the maintaining

the resilience of the systems promotes the collective

actions among the defenders to combat the future at-

tacks. This highlights the importance of experience

and strongly interdependent decisions that changes

the status of the environment radically.

This is the first implementation of a public goods

game in the context of cybersecurity to investigate

whether the theory of public goods complies with this

domain. This study is meant as a starting point for

research in quantitatively analysis of the doctrine of

public cybersecurity. In future, we investigate the dif-

ferent types of economic efficiencies in this domain

and explore the factors that define the efficient situa-

tions in this context.

REFERENCES

Asllani, A., White, C. S., and Ettkin, L. (2013). Viewing

cybersecurity as a public good: The role of govern-

ments, businesses, and individuals. Journal of Legal,

Ethical and Regulatory Issues, 16(1):7.

Axtell, R. and Epstein, J. (1994). Agent-based modeling:

Understanding our creations. The Bulletin of the Santa

Fe Institute, 9(4):28–32.

Bissell, K., LaSalle, R., and Cin, P. (2019). Ninth annual

cost of cybercrime study. Ponemon Institute: Dublin,

Ireland, 6.

Bolton, G. E. and Ockenfels, A. (2000). Erc: A theory of

equity, reciprocity, and competition. American eco-

nomic review, 90(1):166–193.

Dufwenberg, M., Heidhues, P., Kirchsteiger, G., Riedel, F.,

and Sobel, J. (2011). Other-regarding preferences in

general equilibrium. The Review of Economic Studies,

78(2):613–639.

Etzioni, A. (2011). Cybersecurity in the private sector. Is-

sues in Science and Technology, 28(1):58–62.

Falk, A. and Fischbacher, U. (2001). A theory of reci-

procity.

Fehr, E. and Schmidt, K. M. (1999). A theory of fairness,

competition, and cooperation. The quarterly journal

of economics, 114(3):817–868.

Fischbacher, U. and Gachter, S. (2010). Social prefer-

ences, beliefs, and the dynamics of free riding in pub-

lic goods experiments. American economic review,

100(1):541–56.

Herrmann, B., Th

¨

oni, C., and G

¨

achter, S. (2008).

Antisocial punishment across societies. Science,

319(5868):1362–1367.

Isaac, R. M., McCue, K. F., and Plott, C. R. (1982). Public

goods provision in an experimental environment.

Kianpour, M., Øverby, H., Kowalski, S. J., and Frantz, C.

(2019). Social preferences in decision making under

cybersecurity risks and uncertainties. In International

Conference on Human-Computer Interaction, pages

149–163. Springer.

ICAART 2021 - 13th International Conference on Agents and Artificial Intelligence

420

Lacomba, J. A. and L

´

opez-P

´

erez, R. (2015). Cooperation.

In Experimental Economics, pages 105–123. Springer.

Mulligan, D. K. and Schneider, F. B. (2011). Doctrine for

cybersecurity. Daedalus, 140(4):70–92.

Ormerod, P. and Rosewell, B. (2006). Validation and verifi-

cation of agent-based models in the social sciences. In

International Workshop on Epistemological Aspects

of Computer Simulation in the Social Sciences, pages

130–140. Springer.

Rowe, B. R. and Gallaher, M. P. (2006). Private sector cyber

security investment strategies: An empirical analysis.

In The fifth workshop on the economics of information

security (WEIS06).

Samuelson, P. A. (1954). The pure theory of public expen-

diture. The review of economics and statistics, pages

387–389.

Sargent, R. G. (2013). Verification and validation of simu-

lation models. Journal of simulation, 7(1):12–24.

Sedenberg, E. M. and Mulligan, D. K. (2015). Public

health as a model for cybersecurity information shar-

ing. Berkeley Technology Law Journal, 30(3):1687–

1740.

Taddeo, M. (2019). Is cybersecurity a public good?

Heterogeneous Preferences and Patterns of Contribution in Cybersecurity as a Public Good

421