Towards Collaborative Analysis of Computational Fluid Dynamics using Mixed Reality

Thomas Schweiß^a, Deepak Nagaraj^b, Simon Bender and Dirk Werth

August-Wilhelm Scheer Institut, Uni Campus Nord D 5.1, Saarbrücken, Germany

Keywords: Computational Fluid Dynamics, Mixed Reality, Collaborative Virtual Environments, Artificial Intelligence, Machine Learning.

Abstract: Computational fluid dynamics (CFD) is an important subfield of fluid mechanics. Its workflow includes post processing of simulation data, which Mixed Reality can enhance by providing a more intuitive and realistic three-dimensional visualization. In this paper we present a cloud-based proof-of-concept Mixed Reality system for collaborative post processing and analysis of CFD simulation data. The system comprises an automated data processing pipeline with an ML-based 3D mesh simplification approach and a collaborative environment for current head mounted Mixed Reality displays. In future work we will evaluate and extend the system to demonstrate its effectiveness and to support the workflow of engineers in the field of fluid mechanics.

1 INTRODUCTION

In the field of fluid mechanics, computational fluid dynamics (CFD) is an important subfield that helps to optimize product design workflows, reduce the need for costly prototypes and eliminate rework. By simulating a liquid or gas passing through or around an object, engineers can analyse the flow's impact on that object. CFD simulations are therefore used in several fields of application, such as aerodynamics and aerospace analysis, industrial system design and analysis, biological engineering, and engine and combustion analysis. The process of CFD simulation and analysis consists of three main steps: pre-processing, which includes identifying the fluid domain of interest and setting up the geometry of the object to be analysed; solving, in which the physical equations governing the fluid flow are solved; and post processing, in which appropriate visual representations of the results are generated for analysis. Post processors visualize the resulting solutions as contour and vector plots or as 3D models. This data is mostly analysed on PC monitors, which restricts the graphical representations to two dimensions and thus prevents an intuitive and realistic data analysis. Additionally, post processors and CFD simulation software often rely on proprietary data formats, so incompatibilities between different systems can occur, especially when distributed and interdisciplinary teams collaborate.

a https://orcid.org/0000-0003-0052-937X

b https://orcid.org/0000-0003-1102-1619

We suppose that current Mixed Reality (MR) systems can support and enable collaborative CFD post processing by moving this process completely into mixed reality. The results of a CFD solver are uploaded to our system in an open file format and processed further in three dimensions. Users are not completely separated from reality and can manipulate data intuitively with hand gestures, eye gaze and speech control.

Current systems used for CFD post processing lack collaborative features, especially for multidisciplinary and distributed teams. It is not uncommon for third-party systems such as video conferencing tools to be used: users share their desktop screens or presentation slides to present their results. The integration of new ideas and the communication of feedback are then often limited, because the actual three-dimensional data is abstracted to static images or animations based on pre-defined camera angles. As a result, individual inspection of components is restricted.


Schweiß, T., Nagaraj, D., Bender, S. and Werth, D.

Towards Collaborative Analysis of Computational Fluid Dynamics using Mixed Reality.

DOI: 10.5220/0010321602840291

In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021) - Volume 1: GRAPP, pages 284-291

ISBN: 978-989-758-488-6

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

With the help of cloud computing, AI technology and current MR hardware, a distributed MR environment can be implemented for collaborative and interdisciplinary post processing and analysis of CFD data. The workflow of engineers in the field of fluid mechanics can thus be actively supported by shortening analysis times and reducing travel costs. Such an environment also improves coordination and collaboration between team members by combining natural exploration of multidimensional data with enhanced social interaction through digital avatars and collaboration features such as object annotations or digital whiteboards. Accordingly, we assume that this will result in better product and system design.

In this paper we present a proof-of-concept system to manipulate and analyse the results of CFD simulations in a collaborative and distributed MR environment. The goal is to integrate this system into the daily workflow of engineers and scientists. This requires a cloud infrastructure for automated data computation based on an open data format, so that users can upload their simulation results and view them on an MR head mounted display (HMD). Rendering on the HMD can be computationally very intensive for complex CAD models because of the large number of polygons in their meshes. Accordingly, an artificial intelligence (AI) model that implicitly learns the shape and simplifies the meshes in a goal-oriented manner needs to be developed, so that the HMDs can also handle complex models. In the collaborative MR environment, users will be able to control the post processing through intuitive interactions via gestures or speech: they can set filters to redefine visualization results and move or scale an object freely. The cloud infrastructure also enables the distributed collaboration with virtual avatars mentioned above. Using the sensor data of current HMDs, those avatars can be equipped with eye gaze and hand visualization, as proposed in (Piumsomboon et al. 2017), and with spatial sound to enhance communication.

The remainder of this paper is structured as follows: Section 2 presents related work on collaborative MR systems in the field of CFD and on AI based mesh simplification. Section 3 describes the overall system design, including the automated data computation (Section 3.1), the AI based model optimization approach (Section 3.2) and the capabilities of the collaborative MR environment (Section 3.3). Section 4 concludes and presents future work.

2 RELATED WORK

CFD simulations and analysis are widely used in the field of fluid mechanics, and the visualisation of CFD results with MR technology has been proposed in several projects. (Moreland et al. 2013), for example, developed an MR representation of simulated fluid flow within a power plant for training purposes. Vectors, streamlines and colour gradients were used to visualize CFD data. (Zhu et al. 2020) created a system for early building-design processes that covers building information modelling, mesh generation and CFD simulation in order to visualize animated thermal activities in a full-scale room using MR. Both studies rely on precomputed data, which must be changed manually on a desktop PC before the MR projections can be visualized. Functionality to update models dynamically was proposed in (Malkawi and Srinivasan 2005), where wireless temperature sensors were used to update the CFD simulation and visualize projections. These projects provide visualizations of simulation data but lack adequate user interfaces (UIs), and therefore an intuitive user experience, for performing CFD post processing in MR in order to create new visualisations for HMDs.

As described in (Cheng et al. 2020), there is a lack of MR systems based on cloud storage and cloud computing, both of which are current trends for MR systems. The authors also mention that current systems are limited in the number of gestures and the stability of voice recognition, and that collaborative multiuser systems should be promoted more strongly in the industry. For collaborative and distributed CFD analysis, cloud computing is also essential for efficient data manipulation, computation and visualisation. (García et al. 2015) presented a cloud-based system to monitor and alter CFD simulations for collaborative solution analysis. It covers pre-processing, solving and post-processing of data within a server environment. The post-processing stage lets the user set basic filters such as stream tracers or colormaps to manipulate the simulations. Simulation results can be displayed in 3D environments but are visualised on 2D screens, which, in contrast to MR systems, reduces work with the output data to unintuitive WIMP (windows, icons, menus, pointer) interactions. Furthermore, our literature research indicates a lack of MR environments for collaborative analysis of CFD simulation data by distributed teams, which agrees with the statements of (Cheng et al. 2020).

Polygonal meshes effectively represent 3D shapes

by capturing both surfaces and topology, and leverage

non-uniform elements to represent large flat regions

as well as sharp, intricate features. However, naive

Towards Collaborative Analysis of Computational Fluid Dynamics using Mixed Reality

285

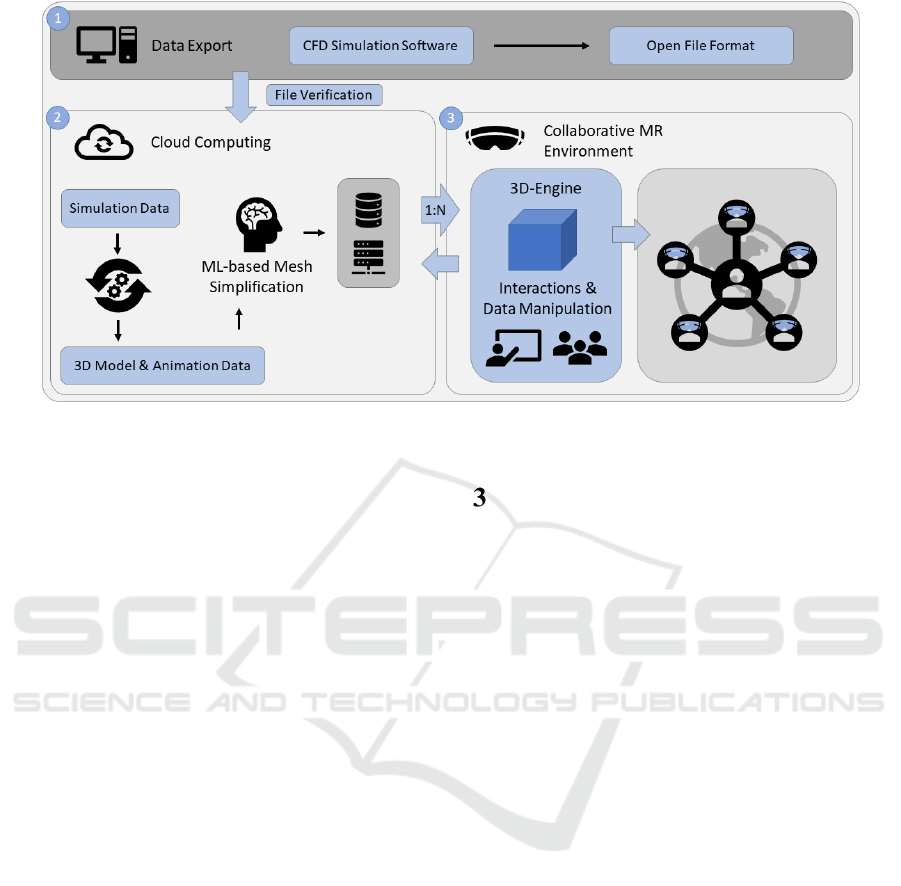

Figure 1: Architecture.

application of MR for CFD data visualization to

create an immersive design environment, for example

for automobile shape designing, requires huge

memory demand and accordingly it would be difficult

to render those graphics in real-time. Numerous

algorithms have been proposed for mesh

simplification (aka polygonal simplification). Most of

such conventional algorithms can be grouped under

Manifold-only simplification and Nonmanifold

simplification algorithms (D. P. Luebke 2001; P.

Cignoni et al. 1998). Manifold-only algorithms are

limited in their application, as they are not capable of

handling nonmanifold meshes, which are typical for

CAD models created manually (D. P. Luebke 2001).

The most commonly used approach in simplifying

nonmanifold meshes is to use a quadric error metric

algorithm (QEM) (Garland und Heckbert 1997). Over

the years, many variations to the original QEM

algorithm (Garland und Heckbert 1997) were

developed. Each of these variations are suitable for

different and specialized applications, but produce

undesirable results when used for other types of

meshes (e.g., no boundaries, lots of boundaries,

textured, etc.) (Bahirat et al. 2018). However,

machine learning (ML) based techniques which

implicitly learn how to retain or collapse edges,

depending on the overall task being undertaken, are

still in nascent stage. In the study (Hanocka et al.

2019), a novel convolutional neural network (CNN)

called MeshCNN has been introduced. They have

demonstrated the ability of MeshCNN in task-driven

pooling to collapse redundant edges and expand

important features on various 3D meshes. However,

their approach works only on triangular meshes.
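To make the quadric error metric more concrete, the following minimal Python sketch evaluates the cost of collapsing an edge to a given target position. It follows the general idea of (Garland and Heckbert 1997), in which each vertex accumulates the quadrics of its adjacent face planes, but it is an illustration only: the function names are hypothetical, the target position is supplied by the caller instead of being optimized, and degenerate triangles are not handled.

```python
def plane_from_triangle(p0, p1, p2):
    """Return (a, b, c, d) with a unit normal so that a*x + b*y + c*z + d = 0."""
    ux, uy, uz = (p1[i] - p0[i] for i in range(3))
    vx, vy, vz = (p2[i] - p0[i] for i in range(3))
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = (nx * nx + ny * ny + nz * nz) ** 0.5  # assumes a non-degenerate triangle
    a, b, c = nx / length, ny / length, nz / length
    d = -(a * p0[0] + b * p0[1] + c * p0[2])
    return (a, b, c, d)


def plane_quadric(plane):
    """Fundamental error quadric K_p = p p^T as a 4x4 nested list."""
    return [[plane[i] * plane[j] for j in range(4)] for i in range(4)]


def add_quadrics(q1, q2):
    """Element-wise sum of two 4x4 quadrics."""
    return [[q1[i][j] + q2[i][j] for j in range(4)] for i in range(4)]


def vertex_quadric(planes):
    """Sum the quadrics of all face planes adjacent to one vertex."""
    q = [[0.0] * 4 for _ in range(4)]
    for plane in planes:
        q = add_quadrics(q, plane_quadric(plane))
    return q


def quadric_error(q, point):
    """Evaluate v^T Q v for the homogeneous point v = (x, y, z, 1)."""
    v = (point[0], point[1], point[2], 1.0)
    return sum(v[i] * q[i][j] * v[j] for i in range(4) for j in range(4))


def collapse_cost(q_a, q_b, target):
    """Cost of merging two vertices with quadrics q_a and q_b into target."""
    return quadric_error(add_quadrics(q_a, q_b), target)
```

The cost is the sum of squared distances from the target position to the planes of the faces around both vertices, so collapsing an edge inside a flat region is cheap, while collapsing one across a sharp feature is expensive. A simplifier would repeatedly collapse the cheapest edge until the polygon budget of the HMD is met.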

3 SYSTEM DESIGN

After considering the limitations of past and current systems and the future trends for MR in section 2, we propose in this section a system that has the potential to overcome these limitations through a cloud based approach that makes dynamic CFD post processing based on user input possible. With this system, users can change the data and its visualization through a post processor running in the cloud and share all information and 3D components within an immersive, collaborative and distributed MR environment. By including three-dimensional avatars and the ability to, for example, create annotations in the environment, we expect to improve the efficiency of remote collaboration in MR.

The overall system consists of three main components, visualised in Figure 1. First, the resulting CFD simulation data is exported from the simulation software to an open file format to ensure data compatibility. These files are then uploaded to the cloud and verified to minimize computation errors. The second component comprises the cloud based automated data computation, the interfaces for handling MR control commands and the ML based mesh simplification. The computed data is then sent to the last component, a collaborative and distributed MR system, which includes UIs and interaction techniques for communication between users as well as for data manipulation.

GRAPP 2021 - 16th International Conference on Computer Graphics Theory and Applications


3.1 Automated Data Computation

As described in section 1, the process of generating and analysing CFD simulations is divided into three basic steps: pre-processing, solving and post processing. Our system is part of the post processing stage, in which simulation data is converted into 3D data for computation in MR. The data must therefore be exported from the CFD simulation software into an open file format. Proprietary data formats are difficult to handle when partners or team members use different software applications, so an open file format that can be handled by different CFD applications such as STAR-CCM+, ANSYS or OpenFOAM is required. The CFD General Notation System (CGNS) has established itself as such an open file format and data model for storing CFD simulation results. It can store several types of auxiliary data, such as generic, discrete or integral data, dimensional units and exponents, or nondimensionalization information (Poirier et al. 1998). This also includes the mesh data of CAD models.

To compute and visualise CGNS data models, post processing software is needed. This software must be able to convert CGNS into a data format that 3D engines can read, so that the data can be sent to the HMD. Additionally, it must provide all tools and commands that CFD engineers require in their post processing workflow. The post processing software should be part of the cloud infrastructure, so that it is directly connected to the MR system and can benefit from cloud storage as well as cloud computing power in terms of CPUs, GPUs and RAM. A tool that meets these requirements is ParaView (Ahrens et al. 2005). As open source post processing software built on the Visualization Toolkit, it provides a variety of algorithms to process CFD simulation data, such as isosurfacing, cutting, clipping and streamlines. Because its filter pipeline is accessible through a Python API, ParaView can be run in a Docker container, and the data can then easily be processed on the server side via HTTP requests.
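As an illustration of how an HTTP request body could drive the ParaView filter pipeline on the server, the sketch below validates a JSON filter command and applies a plane slice through the `paraview.simple` module. The message schema, the function names `parse_filter_request` and `run_slice`, and the set of accepted filter names are assumptions made for this example and are not taken from the described system. The export step additionally assumes that the installed ParaView build provides a scene exporter for the chosen file extension.

```python
import json

# Filter names accepted by this sketch (hypothetical identifiers).
ALLOWED_FILTERS = {"slice", "clip", "contour", "stream_tracer"}


def parse_filter_request(body: bytes) -> dict:
    """Validate a JSON filter command sent by the MR client (assumed schema)."""
    request = json.loads(body)
    name = request.get("filter")
    if name not in ALLOWED_FILTERS:
        raise ValueError(f"unsupported filter: {name!r}")
    origin = [float(x) for x in request.get("origin", [0.0, 0.0, 0.0])]
    normal = [float(x) for x in request.get("normal", [1.0, 0.0, 0.0])]
    if len(origin) != 3 or len(normal) != 3:
        raise ValueError("origin and normal need three components")
    return {"filter": name, "origin": origin, "normal": normal}


def run_slice(cgns_path: str, out_path: str, command: dict) -> None:
    """Apply a plane slice in ParaView and export the rendered scene."""
    # Imported lazily so the parsing code also runs without ParaView installed.
    from paraview import simple as pv

    reader = pv.OpenDataFile(cgns_path)
    slice_filter = pv.Slice(Input=reader)
    slice_filter.SliceType = "Plane"
    slice_filter.SliceType.Origin = command["origin"]
    slice_filter.SliceType.Normal = command["normal"]

    view = pv.GetActiveViewOrCreate("RenderView")
    pv.Show(slice_filter, view)
    pv.Render(view)
    # The exporter is selected from the file extension of out_path.
    pv.ExportView(out_path, view=view)
```

Validating the command before it reaches ParaView keeps malformed client input out of the pipeline. A complete service would dispatch the other filter names to corresponding functions in the same way.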

For further processing in the 3D engine and for computation on the MR device, different export formats are available. Currently, the Wavefront OBJ format is used to send data from ParaView to the MR devices. However, this data format cannot directly carry additional visualization data such as surface textures. The GL Transmission Format (glTF) is an open data format that provides efficient transmission of 3D scenes and models between applications. It can handle large datasets, as needed for CFD data processing, and is supported by ParaView. It can also include binary files such as images and shaders (Schilling et al. 2016), which makes it suitable for our approach.
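The limitation of OBJ can be seen from its structure. The sketch below, with the hypothetical helpers `mesh_to_obj` and `obj_to_mesh`, writes and reads the two basic record types of an OBJ file: `v` lines for vertex positions and `f` lines for faces. These geometry records have no slot for per-vertex simulation values, and colour or texture information has to travel in separate material and image files.

```python
def mesh_to_obj(vertices, faces):
    """Serialize a mesh as Wavefront OBJ text.

    vertices: list of (x, y, z) tuples
    faces: list of tuples with 0-based vertex indices
    """
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    # OBJ face indices are 1-based.
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


def obj_to_mesh(text):
    """Parse the 'v' and 'f' records of OBJ text back into Python lists."""
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(p) for p in parts[1:4]))
        elif parts[0] == "f":
            # Keep only the vertex index of each "v/vt/vn" reference.
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return vertices, faces
```

A single triangle, for example, is written as three `v` lines followed by `f 1 2 3`.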

To visualize the resulting data from ParaView, the Microsoft HoloLens 2 MR HMD is used. With hand and eye tracking, voice recognition and spatial audio in a stand-alone device, it provides a variety of visualization and interaction possibilities for the user. Although it has some limitations, as mentioned in (Cheng et al. 2020), it is still the most advanced standalone MR device, with improved performance, wearing comfort and, in particular, a renewed interaction design compared to its predecessor.

To connect the MR device to the cloud, the game engine Unity 3D is used. Its modular structure, its open accessibility through .NET scripting with either C# or JavaScript, and the Mixed Reality Toolkit, which provides a variety of predefined interaction scripts and connection services, make the engine a good choice for this system.

The 3D engine establishes a connection to the cloud as soon as the user logs into the system. After login, the user's uploaded and optimized models are fetched and loaded automatically: a network manager receives the optimized meshes from the cloud. These meshes are then used in the following interaction sequence.

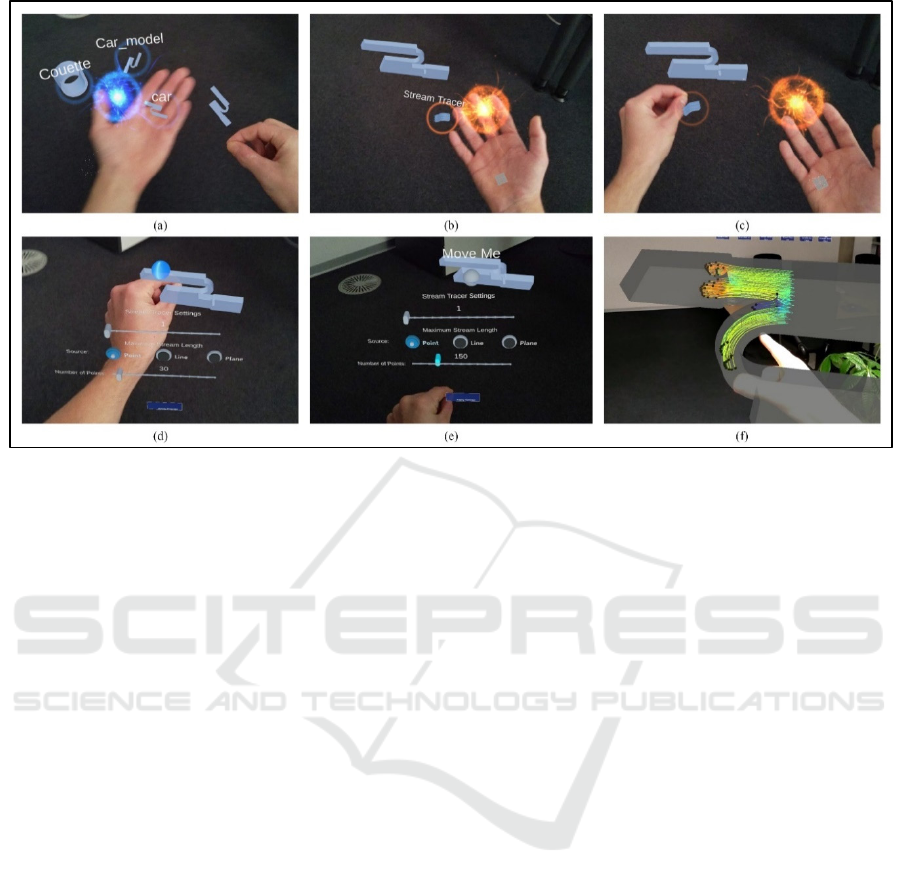

Figure 2: Hand menus.

To facilitate user-centred object interaction and provide good ease of use, we have designed two hand menus (see Figure 2). The object menu on the left hand presents the processed meshes as 3D objects arranged around a sphere. The second menu, on the right hand, lets the user access the currently implemented ParaView filters. This is a modular approach to which more filters can be added in future versions. The interaction sequence is visualized in Figure 3, pictures (a) to (f). To apply one or more filters to an object, the user first places the object in the room via drag and drop with hand gestures (a). The scale of an object can be changed by grabbing it with both hands (thumb and forefinger) and moving the hands away from each other. After the object is placed, a filter can be selected from the filter menu (b) and applied to the object, again via drag and drop (c). Once the filter settings have been adjusted in a menu near the currently selected object (d, e), a new mesh containing the resulting data is generated by ParaView and Unity (f). To remove an object, the user drags it back into the object menu and releases it. Additionally, filters such as the stream tracer can be animated to visualize a local stream flow (f). Besides the stream tracer, the clip, slice and contour (isosurface) filters as well as colour gradients are implemented in a simple form.

Figure 3: MR interaction sequence to apply filters.

3.2 AI for Model Optimization

With reference to section 2, another edge collapsing algorithm, which works effectively and efficiently on big CAD files, is NSA (Silva 2007). Unlike QEM based methods, which minimize the error associated with each new vertex, the NSA algorithm follows a geometric criterion that requires the region around the collapsing edge to be nearly coplanar. An edge is only collapsed if the variation of the face normals around the target edge is within a given tolerance. This allows NSA to reach a good compromise between shape preservation, time performance and mesh quality (Silva 2007).
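The coplanarity criterion can be illustrated with a short sketch. It is not the NSA implementation of (Silva 2007); it only shows one plausible way to test whether the face normals around an edge vary by less than a tolerance. The function names, the pairwise comparison of normals and the default tolerance of 5 degrees are assumptions made for this example.

```python
import math


def face_normal(p0, p1, p2):
    """Unit normal of a triangle (assumes the triangle is not degenerate)."""
    u = [p1[i] - p0[i] for i in range(3)]
    v = [p2[i] - p0[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)


def max_normal_deviation_deg(normals):
    """Largest angle in degrees between any two of the given unit normals."""
    worst = 0.0
    for i in range(len(normals)):
        for j in range(i + 1, len(normals)):
            dot = sum(a * b for a, b in zip(normals[i], normals[j]))
            dot = max(-1.0, min(1.0, dot))  # guard against rounding errors
            worst = max(worst, math.degrees(math.acos(dot)))
    return worst


def may_collapse(faces_around_edge, tolerance_deg=5.0):
    """Allow the collapse only if the surrounding faces are nearly coplanar."""
    normals = [face_normal(*face) for face in faces_around_edge]
    return max_normal_deviation_deg(normals) <= tolerance_deg
```

With such a test, edges in flat regions of a CAD model are collapsed freely, while edges along creases and corners are kept, which is what preserves the shape.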

Another interesting approach to mesh simplification is to use neural mesh autoencoders, which have recently been applied to many 3D tasks (Ranjan et al. 2018). In this approach, the autoencoder implicitly learns the mesh structure: its encoder compresses the input mesh into a latent space representation, and its decoder then reconstructs a mesh from the latent representation, omitting redundant elements of the original mesh. (Ranjan et al. 2018), for example, proposed such a convolutional mesh autoencoder called CoMA, which uses spectral convolution layers together with quadric-based up- and down-sampling methods to achieve promising results on aligned data of 3D human faces. However, such learning-based methods also require additional heuristics (or operators) to work with a varying number of neighbouring elements while maintaining the weight sharing property of CNNs. In this direction, (Zhou et al. 2020) proposed a template-free fully convolutional autoencoder with novel convolution and (un)pooling operators, which works for arbitrary registered meshes such as tetrahedral and non-manifold meshes. The spatially varying convolution kernel is especially interesting for our application, as every vertex has its own convolution kernel, which accounts for irregular sampling and connectivity in the dataset. Their method of jointly learning a global kernel weight basis and a low-dimensional sampling function for each individual kernel greatly reduces the number of parameters and is accordingly less computationally expensive (Zhou et al. 2020). Although our task here is restricted to mesh simplification with this autoencoder based approach, the capability of obtaining semantically meaningful localized latent codes would further assist semantic manipulation of the original 3D mesh, if desired by the designer. Autoencoder based algorithms are attractive because they allow goal-oriented mesh simplification, but their main drawback is that they require a sufficiently large dataset for each kind of mesh and long training times to be effective. However, research in this direction is ongoing, and with recent advances, particularly in hardware technology, learning based algorithms have great potential for this application.
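The parameter saving from a shared weight basis can be estimated with a rough count. The sketch below compares a layer in which every vertex-neighbour pair owns a full kernel with a layer in which each kernel is a weighted combination of a small global basis. This is a simplified reading of the idea; the exact parameterization in (Zhou et al. 2020) may differ, and the function names and example sizes are hypothetical.

```python
def per_vertex_kernel_params(n_vertices, n_neighbours, c_in, c_out):
    """Every vertex-neighbour pair stores its own c_in x c_out weight matrix."""
    return n_vertices * n_neighbours * c_in * c_out


def shared_basis_params(n_vertices, n_neighbours, c_in, c_out, n_basis):
    """Kernels are combinations of n_basis shared c_in x c_out matrices.

    Only the basis and one coefficient vector of length n_basis per
    vertex-neighbour pair have to be learned.
    """
    basis = n_basis * c_in * c_out
    coefficients = n_vertices * n_neighbours * n_basis
    return basis + coefficients


# Hypothetical layer: 10,000 vertices, 7 neighbours, 32 -> 64 channels, 16 basis kernels.
full = per_vertex_kernel_params(10_000, 7, 32, 64)
shared = shared_basis_params(10_000, 7, 32, 64, 16)
print(full, shared)  # 143360000 1152768
```

In this example the shared basis needs roughly 1.2 million parameters instead of about 143 million, because the cost per vertex drops from a full weight matrix to a short coefficient vector.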

3.3 Distributed and Collaborative XR Environment

Creating collaborative and distributed MR environments is a challenging task because of the complex characteristics of the human body, the human perception of physical quantities such as the behaviour of light, and the social interaction between multiple users. Such environments have to provide several key aspects, such as telepresence via co-present active communication and immersion achieved through interactivity, and they have to include tacit knowledge such as cultural and contextual cues to enhance distributed collaboration (Raybourn et al. 2019). Although collaborative MR environments have been developed and studied in different fields of application, such as architectural design (Ahn et al. 2019) or the analysis of geo-spatial data (Mahmood et al. 2019), there is currently a lack of such systems for collaborative CFD analysis, as discussed in section 2. To close this gap and extend the MR system, we propose a cross reality (XR) system for collaborative CFD post processing and data analysis that provides additional features such as virtual whiteboards to enhance collaboration.

The system will also be able to include other devices, such as Virtual Reality (VR) headsets, to make it accessible to team members who prefer to view the simulation data in a fully immersive environment. Advanced Mixed Reality hardware like the HoloLens 2 is currently quite expensive (3500€) but, compared to VR systems, offers more functionality, such as hand and eye tracking in a standalone device and advanced communication aspects based on see-through displays. Nevertheless, VR headsets can also provide adequate and immersive environments for CFD analysis. To carry out such an analysis, the user must be able to upload the CGNS data for further computation, as described in section 3. This is done through a web application that includes a user management system. The user signs up to the system, which generates a unique user ID and allocates a certain amount of cloud storage for the simulation data. The files can then be uploaded and are verified, for example to ensure that the data format is correct, which minimizes subsequent computation errors. After the upload is completed, an automated mesh generation process is started in which ParaView creates the basic meshes for the 3D objects in the object menu. Further processing is described in section 3.1.
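A minimal sketch of the sign-up and verification steps might look as follows. It assumes that an uploaded CGNS file is stored either in an HDF5 container or in the older ADF container and that checking the first bytes of the file is an acceptable first format test. The function names, the `.cgns` extension check and the ADF header prefix are assumptions of this example, and a production check would also have to open the file and inspect its CGNS structure.

```python
import uuid

# First bytes of an HDF5 file with the superblock at offset 0.
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"
# Assumed start of the header string of an ADF file.
ADF_PREFIX = b"@(#)ADF Database"


def new_user_id() -> str:
    """Generate a unique user ID at sign-up (random UUID, hex encoded)."""
    return uuid.uuid4().hex


def detect_cgns_container(head: bytes):
    """Guess the container format from the first bytes of an upload."""
    if head.startswith(HDF5_SIGNATURE):
        return "hdf5"
    if head.startswith(ADF_PREFIX):
        return "adf"
    return None


def verify_upload(filename: str, head: bytes) -> str:
    """Reject uploads that cannot be CGNS files before any computation starts."""
    if not filename.lower().endswith(".cgns"):
        raise ValueError("expected a .cgns file")
    container = detect_cgns_container(head)
    if container is None:
        raise ValueError("file is neither HDF5-based nor ADF-based")
    return container
```

Rejecting a file at this stage is cheap compared with a failed ParaView run on the server, which is the reason for verifying before the automated mesh generation starts.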

To enhance user collaboration and provide telepresence, automatically generated 3D avatars of each connected user will be included. Based on the sensor technology of current MR headsets, these avatars and the MR environment can be equipped with different features to enrich collaboration and communication between distributed users. Hand recognition and eye tracking can be used to create realistic hand and finger movements as well as eye gaze visualizations, which enhance multi-user collaboration (Piumsomboon et al. 2017) and create a significantly stronger sense of co-presence (Bai et al. 2020). With spatial audio, finding points in three dimensions that are located outside the user's field of view (FoV) is less time consuming (Hoppenstedt et al. 2019). This can help users find the avatars of currently speaking team members who are not in the FoV at that moment, and thereby improve communication.
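Whether a speaking avatar lies outside the FoV can be decided from the head pose alone. The sketch below approximates the FoV as a cone around the viewing direction, which simplifies the rectangular FoV of a real HMD. The function names and the default half angle of 20 degrees are placeholders, not device specifications.

```python
import math


def angle_to_target_deg(head_pos, head_forward, target_pos):
    """Angle in degrees between the viewing direction and the target."""
    to_target = [t - h for t, h in zip(target_pos, head_pos)]
    dist = math.sqrt(sum(c * c for c in to_target))
    fwd_len = math.sqrt(sum(c * c for c in head_forward))
    if dist == 0.0 or fwd_len == 0.0:
        return 0.0  # target at the head position or undefined direction
    cos_angle = sum(a * b for a, b in zip(to_target, head_forward)) / (dist * fwd_len)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def needs_audio_cue(head_pos, head_forward, speaker_pos, half_fov_deg=20.0):
    """True if the speaker is outside the assumed viewing cone."""
    return angle_to_target_deg(head_pos, head_forward, speaker_pos) > half_fov_deg
```

For a user at the origin looking along the z axis, a speaker straight ahead needs no cue, whereas a speaker directly to the side at 90 degrees does.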

To further enhance virtual collaboration, users can add annotations to the virtual scene. In CFD analysis and engineering workflows, it is important to show other team members where a simulation is defective or where enhancements can be applied. Interdisciplinary teams in particular can benefit from annotations, as collaborative CFD analysis between experts and non-experts can be accomplished faster (Gauglitz et al. 2014). Therefore, an annotation system with simple annotations will be implemented to highlight important or critical parts of objects. Users will be able to mark objects partially or completely in an annotation mode, using their hands in MR or controllers in VR systems.
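For annotations to be shared between distributed users, they have to be exchanged as messages. One possible representation is sketched below. The field names and the JSON encoding are assumptions made for this example and do not describe the implemented system.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Annotation:
    object_id: str   # mesh the annotation is attached to
    author_id: str   # user who created the annotation
    text: str
    position: tuple  # anchor point in the object's local coordinates
    whole_object: bool = False  # True if the complete object is marked


def encode(annotation: Annotation) -> str:
    """Serialize an annotation for transmission to the other clients."""
    return json.dumps(asdict(annotation), sort_keys=True)


def decode(payload: str) -> Annotation:
    """Rebuild an annotation from a received message."""
    data = json.loads(payload)
    data["position"] = tuple(data["position"])  # JSON has no tuple type
    return Annotation(**data)
```

Storing the anchor in the local coordinates of the object keeps an annotation attached to the same spot when a user moves or scales the object in their own environment.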

4 CONCLUSION AND FUTURE WORK

This paper proposes a proof of concept for collaborative post processing and analysis of computational fluid dynamics using Mixed Reality. It recommends a cloud based, automated data computation pipeline with a user management system that allows CFD engineers to upload simulation results in the open data format CGNS. With CFD post processing software located in the backend and an MR application for visualization, the resulting 3D fluid flow models can be analysed. The system is partially implemented in the ongoing project.

In future work, we plan to implement a first working prototype and to evaluate the collaboration aspects and the effectiveness of the proposed system in different user tests. This includes implementing the ML algorithms for mesh simplification as well as the collaboration aspects, such as avatars and the annotation system. Additionally, we plan to examine whether glTF, as an internal format for 3D data exchange, is an appropriate alternative to simple data formats such as Wavefront OBJ.

ACKNOWLEDGEMENTS

This work is based on HoloSim, a project partly funded by the German Federal Ministry of Education and Research (BMBF) as part of the “KMU-innovativ: Informations- und Kommunikationstechnologien” program, reference number 01IS18020D. The authors are responsible for the content of this publication.

REFERENCES

Ahn, Kiljae; Ko, Dae-Sik; Gim, Sang-Hoon (2019): A Study on the Architecture of Mixed Reality Application for Architectural Design Collaboration. In: Roger Lee (ed.): Applied Computing and Information Technology, vol. 788. Cham: Springer International Publishing (Studies in Computational Intelligence, 788), pp. 48–61.

Ahrens, James; Geveci, Berk; Law, Charles (2005): Paraview: An end-user tool for large data visualization. Available online at https://www.researchgate.net/profile/berk_geveci/publication/247111133_paraview_an_end-user_tool_for_large_data_visualization/links/53fb414d0cf2e3cbf566193d/paraview-an-end-user-tool-for-large-data-visualization.pdf, last checked on 26.10.2020.

Bahirat, Kanchan; Lai, Chengyuan; McMahan, Ryan; Prabhakaran, Balakrishnan (2018): Designing and Evaluating a Mesh Simplification Algorithm for Virtual Reality. In: ACM Transactions on Multimedia Computing, Communications, and Applications 14, pp. 1–26. DOI: 10.1145/3209661.

Bai, Huidong; Sasikumar, Prasanth; Yang, Jing; Billinghurst, Mark (2020): A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing. In: Regina Bernhaupt, Florian 'Floyd' Mueller, David Verweij, Josh Andres, Joanna McGrenere, Andy Cockburn et al. (eds.): Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20). Honolulu, HI, USA, 25.04.2020 - 30.04.2020. Association for Computing Machinery, pp. 1–13.

Cheng, Jack C. P.; Chen, Keyu; Chen, Weiwei (2020): State-of-the-Art Review on Mixed Reality Applications in the AECO Industry. In: J. Constr. Eng. Manage. 146 (2), p. 3119009. DOI: 10.1061/(asce)co.1943-7862.0001749.

Luebke, D. P. (2001): A developer’s survey of polygonal simplification algorithms. In: IEEE Computer Graphics and Applications 21 (3), pp. 24–35. DOI: 10.1109/38.920624.

García, Manuel; Duque, Juan; Boulanger, Pierre; Figueroa, Pablo (2015): Computational steering of CFD simulations using a grid computing environment. In: Int J Interact Des Manuf 9 (3), pp. 235–245. DOI: 10.1007/s12008-014-0236-1.

Garland, Michael; Heckbert, Paul S. (1997): Surface Simplification Using Quadric Error Metrics. In: Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques. USA: ACM Press/Addison-Wesley Publishing Co (SIGGRAPH ’97), pp. 209–216.

Gauglitz, Steffen; Nuernberger, Benjamin; Turk, Matthew; Höllerer, Tobias (2014): World-stabilized annotations and virtual scene navigation for remote collaboration. In: Hrvoje Benko, Mira Dontcheva and Daniel Wigdor (eds.): Proceedings of the 27th annual ACM symposium on User interface software and technology - UIST '14. Honolulu, Hawaii, USA, 05.10.2014 - 08.10.2014. New York, New York, USA: ACM Press, pp. 449–459.

Hanocka, Rana; Hertz, Amir; Fish, Noa; Giryes, Raja; Fleishman, Shachar; Cohen-Or, Daniel (2019): MeshCNN: A Network with an Edge. In: ACM Trans. Graph. 38 (4). DOI: 10.1145/3306346.3322959.

Hoppenstedt, Burkhard; Probst, Thomas; Reichert, Manfred; Schlee, Winfried; Kammerer, Klaus; Spiliopoulou, Myra et al. (2019): Applicability of Immersive Analytics in Mixed Reality: Usability Study. In: IEEE Access 7, pp. 71921–71932. DOI: 10.1109/ACCESS.2019.2919162.

Moreland, J.; Wang, Jichao; Liu, Yanghe; Li, Fan; Shen, Litao; Wu, B.; Zhou, C. (2013): Integration of Augmented Reality with Computational Fluid Dynamics for Power Plant Training. Available online at https://www.semanticscholar.org/paper/Integration-of-Augmented-Reality-with-Computational-Moreland-Wang/a072acf7f2081dc34a58b2e8d22b102459adaf58.

Mahmood, Tahir; Fulmer, Willis; Mungoli, Neelesh; Huang, Jian; Lu, Aidong (2019): Improving Information Sharing and Collaborative Analysis for Remote GeoSpatial Visualization Using Mixed Reality. In: 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2019). Beijing, China, 14-18 October 2019. Piscataway, NJ: IEEE, pp. 236–247.


Malkawi, Ali M.; Srinivasan, Ravi S. (2005): A new paradigm for Human-Building Interaction: the use of CFD and Augmented Reality. In: Automation in Construction 14 (1), pp. 71–84. DOI: 10.1016/j.autcon.2004.08.001.

Cignoni, P.; Montani, C.; Scopigno, R. (1998): A comparison of mesh simplification algorithms. In: Computers & Graphics 22 (1), pp. 37–54. DOI: 10.1016/S0097-8493(97)00082-4.

Piumsomboon, Thammathip; Day, Arindam; Ens, Barrett; Lee, Youngho; Lee, Gun; Billinghurst, Mark (2017): Exploring enhancements for remote mixed reality collaboration. In: Mark Billinghurst and Witawat Rungjiratananon (eds.): SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications. Bangkok, Thailand, 11/27/2017 - 11/30/2017. Association for Computing Machinery-Digital Library; ACM Special Interest Group on Computer Graphics and Interactive Techniques. New York, NY: ACM, pp. 1–5.

Poirier, Diane; Allmaras, Steven; McCarthy, Douglas; Smith, Matthew; Enomoto, Francis (1998): The CGNS system. In: 29th AIAA, Fluid Dynamics Conference. Albuquerque, NM, U.S.A., 15 June 1998 - 18 June 1998. Reston, Virginia: American Institute of Aeronautics and Astronautics.

Ranjan, Anurag; Bolkart, Timo; Sanyal, Soubhik; Black, Michael (2018): Generating 3D faces using Convolutional Mesh Autoencoders.

Raybourn, Elaine M.; Stubblefield, William A.; Trumbo, Michael; Jones, Aaron; Whetzel, Jon; Fabian, Nathan (2019): Information Design for XR Immersive Environments: Challenges and Opportunities. In: Jessie Y.C. Chen and Gino Fragomeni (eds.): Virtual, Augmented and Mixed Reality. Multimodal Interaction. 11th International Conference, VAMR 2019, held as part of the 21st HCI International Conference, HCII 2019, Orlando, FL, USA, July 26-31, 2019, Proceedings, vol. 11574. Cham: Springer International Publishing (LNCS Sublibrary, 11574-11575), pp. 153–164.

Schilling, Arne; Bolling, Jannes; Nagel, Claus (2016): Using glTF for streaming CityGML 3D city models. In: Proceedings of the 21st International Conference on Web3D Technology. Anaheim, California, 7/22/2016 - 7/24/2016. New York, NY: ACM, pp. 109–116.

Silva, Frutuoso (2007): NSA simplification algorithm: Geometrical vs. visual quality. In:, pp. 515–523.

Zhou, Yi; Wu, Chenglei; Li, Zimo; Cao, Chen; Ye, Yuting; Saragih, Jason et al. (2020): Fully Convolutional Mesh Autoencoder using Efficient Spatially Varying Kernels.

Zhu, Yuehan; Fukuda, Tomohiro; Yabuki, Nobuyoshi (2020): Integrating Animated Computational Fluid Dynamics into Mixed Reality for Building-Renovation Design. In: Technologies 8 (1), p. 4. DOI: 10.3390/technologies8010004.
