SCAN: Sequence-character Aware Network for Text Recognition

Heba Hassan¹, Marwan Torki² and Mohamed E. Hussein²,³

¹Dept. of Computer Science and Engineering, Egypt-Japan University of Science and Technology, Egypt

²Dept. of Computer and Systems Engineering, Alexandria University, Egypt

³Information Sciences Institute, University of Southern California, U.S.A.

Keywords:

Text Recognition, Multi-task Learning.

Abstract:

Text recognition continues to be a challenging problem in the context of reading text in natural scenes. Given the sequential nature of text, the problem is usually posed as a sequence prediction problem from a whole-word image. Alternatively, it can be posed as a character prediction problem. The latter approach is typically more robust to challenging word shapes. Seeking the sweet spot that attains the best of the two approaches, we propose the Sequence-Character Aware Network (SCAN). SCAN starts by locating and recognizing the characters, and then generates the word using a sequence-based approach. It comprises two modules: a semantic-segmentation-based character prediction module and an encoder-decoder network for word generation. Training is done in two stages. In the first stage, we adopt a multi-task training technique with both character-level and word-level losses and trainable loss weighting. In the second stage, the character-level loss is removed, enabling the use of data with only word-level annotations. Experiments on several datasets of both regular and irregular text show state-of-the-art performance of the proposed approach. They also show that the proposed approach is robust against noisy word detection.

1 INTRODUCTION

Treating text recognition as a sequence recognition problem has the advantage of leveraging the sequential nature of text. However, this approach falls short on text with challenging shapes. Several methods have been proposed to deal with such text. In most recent works, the text image is first rectified (Shi et al., 2018; Luo et al., 2019; Yang et al., 2019), and sequence recognition is then applied to the rectified image. Another group of methods handles irregular text shapes by starting with character prediction; the predicted characters are then sorted to recognize the word (Lyu et al., 2018; Liao et al., 2019). In addition to handling irregular shapes, this approach has the advantage of being more robust against noisy text localization. In the proposed model, we adopt character prediction to handle irregular text shapes without the need for complex preprocessing, such as rectification, while maintaining robustness against text localization errors.

Mere ordering of detected characters could lead

to missing crucial sequential information in text. To

address this issue, our proposed model uses a two-

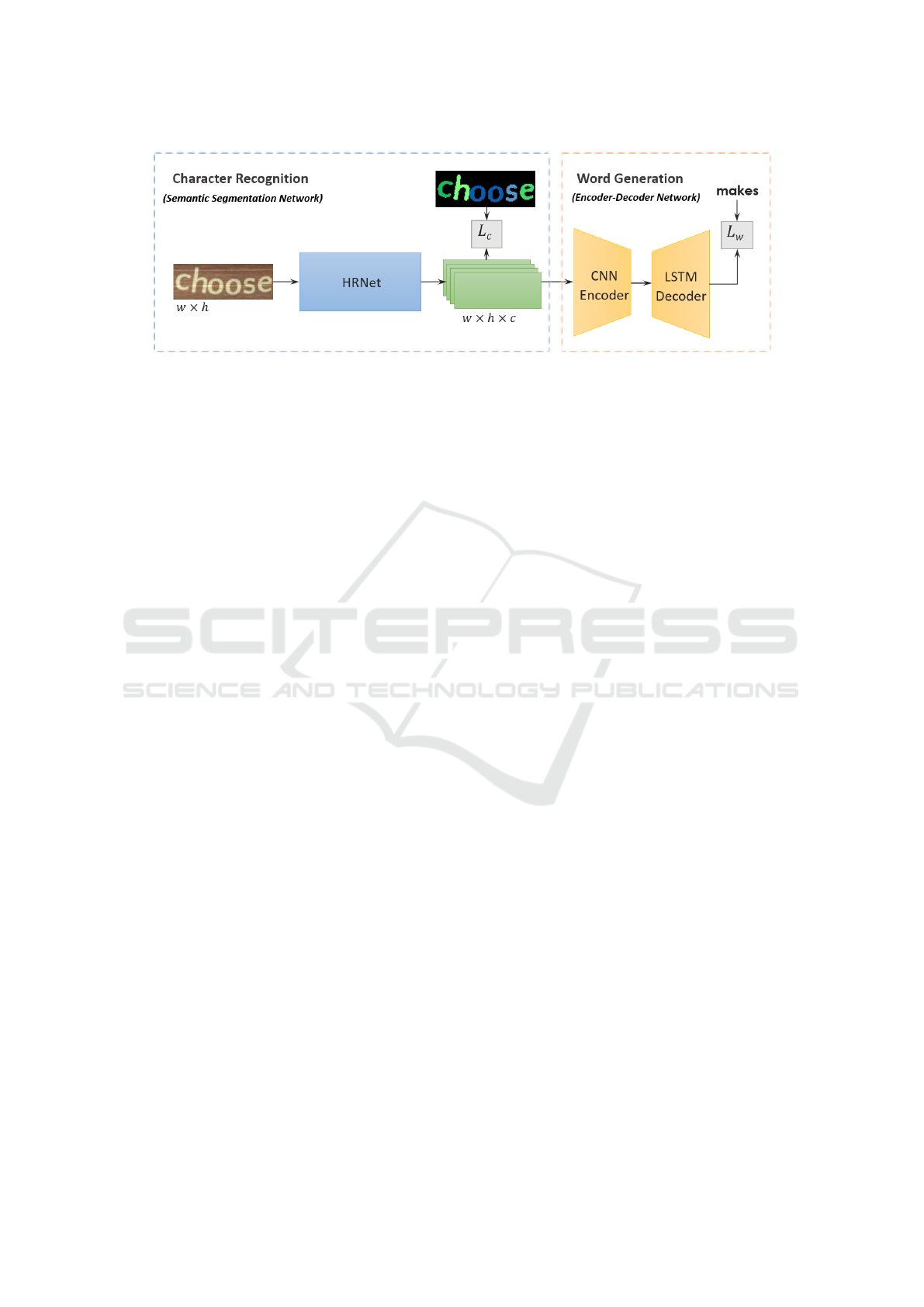

stage model. The first stage is a semantic segmen-

tation network that is responsible for character pre-

diction. It produces a pixel-level map with the loca-

tions and classes of the characters. The second stage

Figure 1: Examples taken from irregular text datasets,

which include rotated, curved, distorted, and multi-font

text. Our model first outputs a character map, then the map

is used to generate a word.

is an encoder-decoder network that processes the map

produced by the first stage and generates the final

word, as shown in Figure 2. The character segmenta-

tion stage is a high-resolution network (HRNet) (Sun

et al., 2019) for semantic segmentation. HRNet has

proved efficiency in many recent semantic segmenta-

tion work as it maintains a high resolution represen-

tation of the image through the network. The word

generation stage receives a map with the same size as

the input image with the number of channels equals to

the number of character classes plus the background

class. A set of convolutional layers are used to encode

the image into a sequence and then an LSTM decoder

is used to produce the final result.


Hassan, H., Torki, M. and Hussein, M.
SCAN: Sequence-character Aware Network for Text Recognition.
DOI: 10.5220/0010321106020609
In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021) - Volume 5: VISAPP, pages 602-609
ISBN: 978-989-758-488-6
Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Figure 2: The proposed model architecture: a character map is first produced by a semantic segmentation network (HRNet (Sun et al., 2019), in particular) with a character-level loss $L_c$, and then the map is used to generate a word using an encoder-decoder network with a word-level loss $L_w$.

Our training procedure is carried out in two stages. The first is a multi-task training stage, in which the character segmentation and word generation parts are trained together, with both losses contributing to the final loss of the network. We use the adaptive weighting technique proposed in (Kendall et al., 2018) to balance the two losses during training. The second is a word-level stage, in which only the word-level loss is used, so training can be done with data that has only word-level annotations. To compensate for the absence of accurate ground truth for character segmentation, a pseudo ground truth is generated. This generated ground truth is used to train the character segmentation network, which is further supervised by the word generation network.

The main contributions of this paper can be summarized as follows:

• We propose the Sequence-Character Aware Network (SCAN) for text recognition, which combines character and sequence awareness and proves robust for both regular and irregular text recognition. Our approach, while simple and intuitive, outperforms other, more complex, recognition techniques.

• We deploy a state-of-the-art semantic segmentation network (HRNet), which yields lightweight and efficient semantic segmentation for character prediction.

• We deploy a multi-task training setting with adaptive loss weighting. Our experimental evaluation shows the effectiveness of this training procedure.

• Finally, we propose a new technique for pseudo ground truth generation, which proves more effective than using only the character bounding boxes as ground truth.

2 RELATED WORK

The techniques for text recognition can be divided into two main streams, namely character-based and sequence-based. Early techniques in text recognition used characters as the main building block. In such techniques, conventional features are used to detect the characters, which are then sorted to form a word. For example, in (Wang et al., 2011; Wang and Belongie, 2010), characters are detected using a multi-scale sliding-window search along with a classifier that classifies the character windows; the positively classified windows are later grouped into words. In other works, connected-component techniques, such as Maximally Stable Extremal Regions (MSER) (Neumann and Matas, 2010) or the Stroke Width Transform (SWT) (Epshtein et al., 2010), are used to detect the characters, relying on common texture and shape characteristics of text.

With the success of deep learning techniques, Jaderberg et al. (2016) treated word recognition as a classification problem, using a Convolutional Neural Network (CNN) with a dictionary of 90k words as the classes. Later, many approaches addressed the problem as a sequence recognition problem, considering the cropped word image to be the input sequence and the desired word to be the output sequence (Su and Lu, 2014; Shi et al., 2016a; He et al., 2016b). Adding attention further boosted the performance of sequence-based techniques, as illustrated in (Lee and Osindero, 2016; Cheng et al., 2017). To make the network more robust to irregularly warped words (e.g., oriented, curved, or skewed), rectification is employed to invert the warping applied to a word before recognizing it.

In contrast to sequence-based approaches, character-based approaches rely on detecting characters and then sorting them to form words. Such techniques offer natural handling of challenging text shapes. In (Lyu et al., 2018; Liao et al., 2019), Fully Convolutional Networks (FCNs) are used for semantic segmentation to detect the character locations and general orientation. The detected characters are then sorted from left to right to construct a word.

Each of the sequence-based and character-based approaches has its strengths. Sequence-based approaches exploit the sequential nature of text, adding a language sense to the model and hence leading to better word recognition. Character-based approaches, on the other hand, are better at handling background clutter, noisy text localization, and irregular text shapes. In this paper, we attempt to get the best of both streams. Our method uses character segmentation as a first stage, which can also be seen as a type of supervised spatial attention that focuses the sequence-based stage on the right character locations despite possible irregularity in the text shape. The word is then obtained via a sequence-based stage, which adds a sense of language to the model and hence improves word recognition accuracy.

3 PROPOSED MODEL

As shown in Figure 2, the model has two modules: the first is the character segmentation module, and the second is the word generation module. In this section, we describe the two components of SCAN and the different stages of the training process.

3.1 Semantic Segmentation for Character Prediction

This part of the network is a semantic segmentation network that outputs a map of size h × w × c, where c is the number of classes. We adopt the HRNet-V1 architecture, which, as explained in (Sun et al., 2019), maintains a high-resolution representation by fusing high-resolution features with low-resolution features at each stage of the network. The network has four blocks. In each block, the low-resolution features are up-sampled and added to the high-resolution features, while the high-resolution features are down-sampled and added to the low-resolution ones, in a fully connected manner. Down-sampling is performed via stride-2 convolutions, while up-sampling is performed using bilinear interpolation. The last layer has a Sigmoid activation, with the number of channels equal to the number of classes. The network is trained with a cross-entropy loss, which we denote as $L_c$ for character-level loss; it is calculated per pixel between the ground-truth and the predicted semantic segmentation maps and normalized by the total number of pixels.

Table 1: Architecture of the Encoder-Decoder Network ([k × k, n]: kernel size and number of filters; s: stride, height × width).

| Layer | Output size | Configuration |
|---|---|---|
| Input | 64 × 256 × 38 | - |
| Conv | 32 × 256 × 64 | [3 × 3, 64], s: 2 × 1 |
| Conv | 16 × 128 × 64 | [3 × 3, 64], s: 2 × 2 |
| Conv | 8 × 128 × 128 | [3 × 3, 128], s: 2 × 1 |
| Conv | 4 × 64 × 128 | [3 × 3, 128], s: 2 × 2 |
| Conv | 2 × 64 × 256 | [3 × 3, 256], s: 2 × 1 |
| Conv | 1 × 32 × 512 | [3 × 3, 512], s: 2 × 2 |
| Bi-LSTM | 32 × 512 | 512 units |
| LSTM | 32 × 512 | 512 units |
| FC | 32 × 38 | 38 |
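As a rough illustration of how $L_c$ can be computed, the sketch below assumes the cross entropy is applied per channel (binary cross entropy, matching the Sigmoid output layer) against one-hot targets, summed over the c channels and divided by the h × w pixels. The text does not state which cross-entropy variant is used, so treat this as one plausible reading; NumPy stands in for the training framework, and `character_loss` is a hypothetical name.

```python
import numpy as np


def character_loss(logits, target, eps=1e-7):
    """Per-pixel cross entropy for the character map, normalized by pixel count.

    logits: float array of shape (h, w, c), raw scores of the last HRNet layer.
    target: int array of shape (h, w) holding the ground-truth class of each pixel.
    """
    h, w, c = logits.shape
    probs = 1.0 / (1.0 + np.exp(-logits))   # Sigmoid activation
    probs = np.clip(probs, eps, 1.0 - eps)  # avoid log(0)
    one_hot = np.eye(c)[target]             # (h, w, c) one-hot targets
    # Binary cross entropy for every pixel and every channel.
    bce = -(one_hot * np.log(probs) + (1.0 - one_hot) * np.log(1.0 - probs))
    return float(bce.sum() / (h * w))       # divide by the number of pixels
```

With all logits at zero every probability is 0.5, so each of the c channels contributes log 2 per pixel and the loss is c · log 2 regardless of the image size, which is the point of normalizing by the pixel count.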

3.2 Encoder-decoder Network for Word Generation

The word generation stage of the model takes the output of the character segmentation stage as its input: a map with the same spatial size as the input image, of dimensions h × w × c, where c is the number of classes. The encoder is a set of convolutional layers that reshapes the map into a 1D sequence. The decoder is a bidirectional LSTM layer followed by an LSTM layer with self-attention, which produces the final output. The final layer has a Softmax activation with a cross-entropy loss, which we denote as $L_w$ for word-level loss. The configuration of the encoder and the decoder is shown in Table 1.
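The output sizes in Table 1 follow from the strides alone. The sketch below recomputes them with the standard convolution output-size formula, assuming 3 × 3 kernels with padding 1 (the padding is not stated in the text) and ignoring normalization and activation layers; the names are hypothetical. The final 1 × 32 × 512 map is read as a sequence of 32 feature vectors of size 512, which matches the 32 × 512 input of the Bi-LSTM.

```python
# Encoder rows of Table 1 as (out_channels, stride_h, stride_w); all kernels are 3 x 3.
ENCODER = [(64, 2, 1), (64, 2, 2), (128, 2, 1), (128, 2, 2), (256, 2, 1), (512, 2, 2)]


def conv_out(size, stride, kernel=3, padding=1):
    """Standard convolution output size; padding=1 is an assumption of this sketch."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_shapes(h=64, w=256, c=38):
    """Return the (height, width, channels) after the input and after every conv layer."""
    shapes = [(h, w, c)]
    for out_c, stride_h, stride_w in ENCODER:
        h, w = conv_out(h, stride_h), conv_out(w, stride_w)
        shapes.append((h, w, out_c))
    return shapes
```

The 2 × 1 strides halve only the height, so the width (the future sequence axis) shrinks more slowly, from 256 to 32, while the height collapses from 64 to 1.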

3.3 Model Training

3.3.1 Ground Truth Generation

The ground truth mask for the characters is generated from the bounding boxes provided in the SynthText (Gupta et al., 2016) dataset, as shown in Figure 3, using the following steps. First, a rectangular slice containing one character is taken from the image, guided by the ground truth bounding box. For each slice, we perform simple thresholding using Otsu's method (Otsu, 1979). We then combine the thresholded characters into one binary image. This image is multiplied by another image formed from the ground truth boxes, which eliminates some of the noise outside the characters and gives each character its label. As a final verification step, we calculate the intersection over union (IoU) between the generated binary mask and the box ground truth, and eliminate the image if the IoU is less than 30%. Some examples of images eliminated by this procedure can be seen in Figure 4. The eliminated images amount to about 1% of the images extracted from the dataset.

VISAPP 2021 - 16th International Conference on Computer Vision Theory and Applications

Figure 3: Ground truth generation: (a) image from SynthText; (b) bounding-box ground truth; (c) binary image after character thresholding; (d) final ground truth used for training, which is the output of multiplying (b) and (c); (e) rotated-rectangle ground truth.

Figure 4: Examples of masks with IoU less than 30% with the bounding-box mask.
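The steps above can be sketched as follows. This is a simplified illustration, not the authors' code: it assumes a grayscale uint8 word image and axis-aligned character boxes given as (y0, x0, y1, x1) (SynthText actually provides rotated quadrilaterals), it treats whichever side of the Otsu threshold covers fewer pixels as the character (the text does not say how foreground polarity is chosen), and the function names are hypothetical. OpenCV users could replace `otsu_threshold` with `cv2.threshold` and the `cv2.THRESH_OTSU` flag.

```python
import numpy as np


def otsu_threshold(gray):
    """Otsu (1979): threshold that maximizes the between-class variance of a uint8 patch."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    levels = np.arange(256)
    total = hist.sum()
    best_t, best_var = 0, -1.0
    for t in range(1, 256):
        w0 = hist[:t].sum()  # pixels below the threshold
        w1 = total - w0      # pixels at or above it
        if w0 == 0 or w1 == 0:
            continue
        mu0 = (hist[:t] * levels[:t]).sum() / w0
        mu1 = (hist[t:] * levels[t:]).sum() / w1
        var = w0 * w1 * (mu0 - mu1) ** 2
        if var > best_var:
            best_t, best_var = t, var
    return best_t


def pseudo_ground_truth(gray, boxes, labels, min_iou=0.3):
    """Build a labeled character mask, or return None if it fails the IoU check.

    gray:   (H, W) uint8 word image.
    boxes:  axis-aligned character boxes (y0, x0, y1, x1), an assumption of this sketch.
    labels: class index (> 0) of each box.
    """
    binary = np.zeros(gray.shape, dtype=bool)     # thresholded characters
    box_labels = np.zeros(gray.shape, dtype=int)  # box mask carrying class labels
    for (y0, x0, y1, x1), label in zip(boxes, labels):
        patch = gray[y0:y1, x0:x1]
        fg = patch >= otsu_threshold(patch)
        if fg.mean() > 0.5:  # assume the character is the smaller side of the split
            fg = ~fg
        binary[y0:y1, x0:x1] |= fg
        box_labels[y0:y1, x0:x1] = label
    # Keep thresholded pixels only where a box label exists, and give them that label.
    mask = binary * box_labels
    inside = mask > 0
    in_box = box_labels > 0
    union = max(np.logical_or(inside, in_box).sum(), 1)
    iou = np.logical_and(inside, in_box).sum() / union
    return mask if iou >= min_iou else None
```

Because the thresholded pixels always lie inside the boxes, the IoU here reduces to the fraction of box area covered by character pixels, so the 30% check rejects slices where thresholding produced little more than specks.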

The obtained ground truth is considered a pseudo ground truth that is further guided by the second part of the network in the second stage of training, as explained below. An accurate segmentation of each character is not the main goal of our network. However, a more expressive ground truth helps both the semantic segmentation and the encoder-decoder networks perform better, as shown in Section 4. We also experimented with another way of generating ground truth: simply labeling each character with a rotated rectangle whose size is 50% of the rotated rectangle surrounding its bounding box, as shown in Figure 3(e). However, our approach performs better with the segmented-character ground truth.

3.3.2 Multi-task Training Stage

One of the most important factors that affect the training of a network with multiple losses is the set of weights assigned to the losses, since the loss function is the weighted sum of the losses in the system. Due to the variation in the range of each loss and the uncertainty of each task, these weights must be carefully tuned. We started by investigating several weight assignments for the two losses and observed the large effect they can have on the network's training behavior and performance.

A smarter way is to have the weights learned by the network. In (Kendall et al., 2018), the use of learnable loss weights is investigated based on the homoscedastic uncertainty of each task in the network. The loss $L$ of each task is mapped to another loss $L_n$, formulated as:

$$L_n = \frac{1}{2\sigma^2} L + \log \sigma \tag{1}$$

where σ is the task uncertainty to be learned by the network, and the second term works as a regularizer. According to (Kendall et al., 2018), this is more stable than learning a linear weight multiplied by the loss. Our model has two losses, the character-level loss $L_c$ and the word-level loss $L_w$, as shown in Figure 5, so with task uncertainties $\sigma_c$ and $\sigma_w$ the total loss $L_t$ is formulated as:

$$L_t = \frac{1}{2\sigma_c^2} L_c + \frac{1}{2\sigma_w^2} L_w + \log \sigma_c + \log \sigma_w \tag{2}$$

Figure 5: Training stages: (a) multi-task training with two losses $L_c$ and $L_w$; (b) word-level training with one loss $L_w$.
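Eq. (2) translates directly into code. The minimal sketch below follows the parameterization Kendall et al. (2018) suggest for numerical stability, learning s = log σ² instead of σ; the names are hypothetical, and in actual training s_c and s_w would be trainable scalars updated by the optimizer together with the network weights rather than plain floats. Setting the derivative of L/(2σ²) + log σ to zero gives σ² = L, so a task whose loss stays large ends up with a smaller effective weight 1/(2σ²), which is how the scheme balances losses of different ranges.

```python
import math


def uncertainty_weighted_loss(losses, log_vars):
    """Total loss of Eq. (2), with each sigma_i parameterized as s_i = log(sigma_i^2).

    Each task contributes L_i / (2 sigma_i^2) + log(sigma_i)
                        = 0.5 * exp(-s_i) * L_i + 0.5 * s_i.
    """
    if len(losses) != len(log_vars):
        raise ValueError("expected one log-variance per loss")
    return sum(0.5 * math.exp(-s) * loss + 0.5 * s for loss, s in zip(losses, log_vars))


def best_log_var(loss):
    """For a fixed loss L > 0, the per-task term is minimized at sigma^2 = L (s = log L)."""
    return math.log(loss)


# Example: L_c and L_w with both uncertainties initialized at sigma = 1 (s = 0),
# which reduces Eq. (2) to 0.5 * (L_c + L_w).
total = uncertainty_weighted_loss([0.2, 1.5], [0.0, 0.0])
```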

3.3.3 Word-level Training Stage

The need for ground truth of character locations, which is typically available only for synthetic data, is one of the biggest limitations of character-based text recognition. In this stage of training, the network has only one output and one loss, $L_w$, as shown in Figure 5, so we can use data that has only word-level annotations. Here, the semantic segmentation output is supervised only through the final word output, which lets the output of the character segmentation stage take a form that increases the accuracy of the sequence network.

4 EXPERIMENTS

In this section, we provide a brief description of the

datasets used for training and evaluation. Next, the

implementation and training details are presented. We

also describe the evaluation criteria used to evaluate

the model. We then present the results obtained on

different datasets and ablation studies conducted to

demonstrate the contribution of the proposed model’s

components.


Table 2: Word recognition accuracy (%) on public datasets, both regular and irregular. "50" means a lexicon of size 50, "1k" means a lexicon of size one thousand, and "None" means no lexicon. GT1 refers to the use of rotated-rectangle ground truth. GT2 refers to the use of segmented-character ground truth. En is an ensemble of the models trained with the two types of ground truth. Rot refers to using a rotation strategy at test time. Regular datasets: IIIT5k, SVT, IC13; irregular datasets: IC15, SVT-P, CUTE80.

| Method | IIIT5k 50 | IIIT5k 1k | IIIT5k None | SVT 50 | SVT None | IC13 None | IC15 None | SVT-P None | CUTE80 None |
|---|---|---|---|---|---|---|---|---|---|
| (Wang et al., 2011) | - | - | - | 57.0 | - | - | - | - | - |
| (Mishra et al., 2012) | 64.1 | 57.5 | - | 73.2 | - | - | - | - | - |
| (Wang et al., 2012) | - | - | - | 70.0 | - | - | - | - | - |
| (Yao et al., 2014) | 80.2 | 69.3 | - | 75.9 | - | - | - | - | - |
| (Jaderberg et al., 2016) | 97.1 | 92.7 | - | 95.4 | 80.7 | 90.8 | - | - | - |
| (He et al., 2016a) | 94.0 | 91.5 | - | 93.5 | - | - | - | - | - |
| (Lee and Osindero, 2016) | 96.8 | 94.4 | 78.4 | 96.3 | 80.7 | 90.0 | - | - | - |
| (Shi et al., 2016b) | 96.2 | 93.8 | 81.9 | 95.5 | 81.9 | 88.6 | - | 71.8 | 59.2 |
| (Shi et al., 2016a) | 97.8 | 95.0 | 81.2 | 97.5 | 82.7 | 89.6 | - | 66.8 | 54.9 |
| (Yang et al., 2017) | 97.8 | 96.1 | - | 95.2 | - | - | - | 75.8 | 69.3 |
| (Cheng et al., 2017) | 99.3 | 97.5 | 87.4 | 97.1 | 85.1 | 93.3 | 70.6 | 71.5 | 63.9 |
| (Liu et al., 2018) | - | - | 92.0 | - | 85.5 | 91.1 | 74.2 | 78.9 | - |
| (Liao et al., 2019) | 99.8 | 98.8 | 92.0 | 98.8 | 86.4 | 91.5 | - | - | 79.9 |
| (Cheng et al., 2018) | 99.6 | 98.1 | 87.0 | 96.0 | 82.8 | - | 68.2 | 73.0 | 76.8 |
| (Shi et al., 2018) | 99.6 | 98.8 | 93.4 | 99.2 | 93.6 | 91.8 | 76.1 | 78.5 | 79.5 |
| (Luo et al., 2019) | 97.9 | 96.2 | 91.2 | 96.6 | 88.3 | 92.4 | 68.8 | 76.1 | 77.4 |
| (Huang et al., 2019) | 99.6 | 98.8 | 94.5 | 97.1 | 90.0 | 93.9 | 75.3 | 79.8 | 84.7 |
| (Yang et al., 2019) | 99.5 | 98.8 | 94.4 | 97.2 | 88.9 | 94.2 | 78.7 | 80.8 | 87.5 |
| (Qiao et al., 2020) | - | - | 93.8 | - | 89.6 | 92.8 | 80.0 | 80.8 | 83.6 |
| (Wang et al., 2020) | - | - | 94.3 | - | 89.2 | 94.2 | 80.0 | 74.5 | 84.4 |
| SCAN (GT1) | 99.5 | 98.7 | 91.4 | 99.0 | 87.1 | 92.2 | 74.2 | 77.5 | 85.0 |
| SCAN (GT2) | 99.5 | 98.6 | 92.6 | 99.2 | 89.3 | 93.3 | 78.5 | 81.0 | 85.7 |
| SCAN (En) | 99.6 | 98.6 | 93.2 | 99.1 | 90.0 | 94.2 | 77.8 | 81.3 | 86.8 |
| SCAN (En+Rot) | 99.6 | 98.7 | 93.7 | 99.2 | 90.7 | 94.2 | 80.0 | 81.7 | 87.8 |

4.1 Datasets

There are several datasets used in text recognition:

two large synthetic datasets, and several real datasets,

which contain both regular and irregular text images.

• SynthText: A large synthetically generated dataset introduced in (Gupta et al., 2016). It provides ground truth boxes for both words and characters. We used the word bounding boxes to crop word images, and the character boxes were used for training in the multi-task stage.

• Synth90K: A synthetic dataset of word images generated for the text recognition task (Jaderberg et al., 2016). It contains about 9 million images generated from a dictionary of 90k words.

• COCO-Text: COCO-Text (Veit et al., 2016) is the largest text dataset containing real images, with word bounding boxes, extracted from the original COCO dataset. It has 42618 images for training, 9896 images for validation, and 9837 images for testing.

• ICDAR2013: This dataset has 848 images for training and 1015 images for testing (Karatzas et al., 2013).

• IIIT5k-Words: This dataset (Mishra et al., 2012) contains frontal, perspective, and curved text, with 3000 images for testing and 2000 for training. The images are associated with a 50-word lexicon and a 1000-word lexicon.

• Street View Text (SVT): This dataset (Wang et al., 2011) contains 647 images cropped from Google Street View images. Each image is associated with a 50-word lexicon.

• CUTE: This dataset mainly contains curved text (Risnumawan et al., 2014). It contains 288 images with no associated lexicon.

• ICDAR2015: This dataset consists of mostly irregular text (Karatzas et al., 2015). It contains 4468 images for training and 2077 images for testing.

• Street View Text Perspective (SVTP): Images in the SVTP dataset (Quy Phan et al., 2013) were cropped from Google Street View images with perspective views.

4.2 Implementation and Training

The configuration and implementation details of HRNet follow the original paper (Sun et al., 2019). For the word generation part, the configuration of the convolutional encoder and the LSTM decoder is shown in Table 1. All images are resized to 64 × 256. We use 38 classes in total: 36 for letters and digits, one for special characters, and one for the background. Training starts with multi-task training on approximately 6 million images extracted from the SynthText dataset. After two epochs, training is switched to word-level training with around 500k images from SynthText, 500k images from Synth90K, and around 49k real images from the training sets of COCO-Text, IIIT5K, ICDAR13, and ICDAR15. The initial learning rate is set to $10^{-3}$ and is reduced on plateau by a factor of 0.8 until it reaches $10^{-5}$.
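One way to lay out the 38 output channels is sketched below. Only the counts come from the text; the index assignment (background at 0, special characters at 37) and the folding of letters to lower case, which is what makes 26 letters plus 10 digits fit in 36 classes, are assumptions, and all names are hypothetical.

```python
import string

# Hypothetical channel layout (assumption): 0 = background,
# 1..36 = digits and lower-case letters, 37 = any special character.
BACKGROUND, SPECIAL = 0, 37
ALPHANUMERIC = string.digits + string.ascii_lowercase  # 36 symbols

CHAR_TO_CLASS = {ch: i + 1 for i, ch in enumerate(ALPHANUMERIC)}
NUM_CLASSES = len(ALPHANUMERIC) + 2  # + special + background = 38


def encode_word(word):
    """Map a ground-truth word to class indices, folding letters to lower case."""
    return [CHAR_TO_CLASS.get(ch, SPECIAL) for ch in word.lower()]
```

For example, `encode_word("Ab1!")` yields one class index per character, with the exclamation mark falling into the shared special-character class.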

4.3 Evaluation Criteria

We use word accuracy as the main evaluation metric for both the character segmentation and the word recognition modules. To read a word from the character segmentation output after word-level training, we follow a simple technique: connected component analysis is applied to the character pixels, each blob is considered a character and is given the label of the majority of its pixels, and the characters are then sorted from left to right to form a word. The output of the word generation stage, in contrast, is a character sequence that directly forms a word.
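The character-output decoding described above can be sketched as follows. It is a plain-Python illustration under stated assumptions: 4-connectivity, background at class index 0, and "left to right" taken as the mean column of each blob; the text specifies none of these, and `decode_character_map` is a hypothetical name. In practice `scipy.ndimage.label` could replace the hand-written flood fill.

```python
from collections import Counter, deque

import numpy as np

BACKGROUND = 0  # assumed index of the background class


def decode_character_map(class_map, alphabet):
    """Read a word from an (h, w) map of per-pixel class indices.

    class_map: e.g. the argmax over the c channels of the segmentation output.
    alphabet:  dict from class index to character.
    """
    h, w = class_map.shape
    visited = np.zeros((h, w), dtype=bool)
    blobs = []  # (mean column of the blob, majority label)
    for y in range(h):
        for x in range(w):
            if visited[y, x] or class_map[y, x] == BACKGROUND:
                continue
            # Flood-fill one 4-connected blob of character (non-background) pixels.
            queue = deque([(y, x)])
            visited[y, x] = True
            pixels = []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    inside = 0 <= ny < h and 0 <= nx < w
                    if inside and not visited[ny, nx] and class_map[ny, nx] != BACKGROUND:
                        visited[ny, nx] = True
                        queue.append((ny, nx))
            # The blob takes the label held by most of its pixels.
            votes = Counter(int(class_map[py, px]) for py, px in pixels)
            label = votes.most_common(1)[0][0]
            mean_x = sum(px for _, px in pixels) / len(pixels)
            blobs.append((mean_x, label))
    blobs.sort(key=lambda blob: blob[0])  # left-to-right order
    return "".join(alphabet[label] for _, label in blobs)
```

Sorting by horizontal position alone is exactly what makes this decoder fragile on curved or rotated words, which is the weakness the sequence output is meant to address.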

4.4 Experimental Results and Comparison with Other Methods

The proposed SCAN network is evaluated on six public datasets that contain regular and irregular text. Compared to the most recent prior methods, the network achieves better results on most of the datasets. As shown in Table 2, our network performs well on both regular and irregular datasets, achieving the best or tied-best results among the listed methods on IC13, SVTP, and CUTE80, as well as on SVT with a 50-word lexicon. The effect of the proposed ground truth generation method can be observed by comparing the SCAN (GT1) and SCAN (GT2) rows: the ground truth with segmented character shapes outperforms the rotated-rectangle ground truth on every dataset evaluated without a lexicon. An ensemble of the two models performs even better on most datasets, as shown in the SCAN (En) row. The ensemble output is obtained by summing the outputs of the two models. We also evaluate the test datasets with a rotation strategy, as shown in the SCAN (En+Rot) row. In this strategy, we rotate the image from -50 to 50 degrees in steps of 10 degrees. For each rotation angle, we estimate the confidence of the prediction as the sum, over all pixels not classified as background, of the probability of the most likely character. We then choose the prediction with the highest confidence.
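A sketch of this test-time rotation strategy follows. The image rotation and the model call are passed in as hypothetical callables, the background channel is assumed to be index 0, and the text does not say whether the confidence is computed from one model's map or from the ensemble's, so the sketch simply scores whichever probability map it is given. The 10-degree steps from -50 to 50 degrees give 11 candidate angles.

```python
import numpy as np

ANGLES = list(range(-50, 51, 10))  # -50, -40, ..., 50 degrees
BACKGROUND = 0  # assumed index of the background channel


def map_confidence(prob_map):
    """Sum of the most-likely-class probability over non-background pixels.

    prob_map: (h, w, c) per-pixel class probabilities from the segmentation stage.
    """
    best_class = prob_map.argmax(axis=-1)
    best_prob = prob_map.max(axis=-1)
    return float(best_prob[best_class != BACKGROUND].sum())


def predict_with_rotation(image, rotate, run_model):
    """Try every angle and keep the prediction whose character map is most confident.

    rotate(image, angle) and run_model(image) -> (prob_map, word) are hypothetical
    callables standing in for an image-rotation routine and the trained model.
    Returns (confidence, angle, word) for the best angle.
    """
    best = None
    for angle in ANGLES:
        prob_map, word = run_model(rotate(image, angle))
        confidence = map_confidence(prob_map)
        if best is None or confidence > best[0]:
            best = (confidence, angle, word)
    return best
```

Because the score is a sum rather than a mean, it also grows with the number of confidently detected character pixels, so an angle that reveals more of the word tends to win.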

4.5 Effect of Word-level Training on Character Prediction

In the word-level training stage, the character segmentation loss is removed and the network learns using only the final word-level loss. The effect on accuracy can be seen in Table 3: word-level training improves accuracy on all six datasets, for example from 81.8% to 89.3% on SVT and from 69.4% to 78.5% on IC15. It is also interesting to inspect the effect of applying only the word-level loss on the segmentation map. After the word-level training stage, the characters in the semantic segmentation map take a more compact form that represents the character's silhouette rather than its exact shape, and the characters appear more separated, as shown in Figure 6. Both properties appear to make the segmentation results more suitable for word generation.

Table 3: Word accuracy (%) after the multi-task training stage and after the word-level training stage.

| Training stage | IIIT5k | SVT | IC13 | IC15 | SVTP | CUTE80 |
|---|---|---|---|---|---|---|
| Multi-task | 90.6 | 81.8 | 90.1 | 69.4 | 70.4 | 78.8 |
| Word-level | 92.6 | 89.3 | 93.3 | 78.5 | 81.0 | 85.7 |

Figure 6: (a) Original image; (b) character segmentation after the multi-task training stage; (c) character segmentation after the word-level training stage.


Table 4: Word accuracy (%) of the outputs of the two stages of the network.

| Output | IIIT5k | SVT | IC13 | IC15 | SVTP | CUTE80 |
|---|---|---|---|---|---|---|
| Sequence | 93.7 | 90.7 | 94.2 | 80.0 | 81.7 | 87.8 |
| Character | 92.6 | 85.7 | 92.0 | 74.0 | 73.3 | 82.2 |

Figure 7: Images from the IIIT5K dataset with predicted words: words wrongly predicted by character sorting are shown in red, and the words corrected by sequence prediction in green.

4.6 Effect of Encoder-decoder Network

We argued earlier that adding the encoder-decoder network to the model leverages the sequential characteristics of text. Here, we evaluate the word accuracy of the outputs of both modules: character segmentation (character output) and word generation (sequence output). Table 4 shows the effect of the sequence network on accuracy: the sequence output is more accurate on all six datasets, with the largest gains on SVTP (73.3% to 81.7%) and IC15 (74.0% to 80.0%). This shows the added value of incorporating sequence information into the model. In Figure 7, we present some cases where the sequence network corrected the output obtained from the semantic segmentation network.

4.7 Effect of Noisy Text Localization

One of the main strengths of the proposed model is its ability to deal with noise in text detection. We conducted an experiment on the CUTE80 dataset to evaluate this characteristic. The dataset has 80 images for text detection, from which the 288 word images for recognition are extracted. We used the detection dataset and cropped the words with a larger background by expanding each side of the cropped rectangle by a random percentage of the side length, as shown in Figure 8. We refer to this new set of images with noisily localized words as the Noisy CUTE dataset. Figure 8 shows that the model still detects the characters and generates the correct word despite the added noise.
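The construction of Noisy CUTE can be sketched as below. The text only says each side is expanded by a random percentage of the side length; the uniform distribution, the 30% cap (`max_frac`), the use of box width for the left and right sides and box height for the top and bottom, axis-aligned boxes, and clipping to the image border are all assumptions of this sketch, and `noisy_crop_box` is a hypothetical name.

```python
import math
import random


def noisy_crop_box(box, image_w, image_h, max_frac=0.3, rng=random):
    """Enlarge an axis-aligned word box (x0, y0, x1, y1) to simulate noisy localization.

    Each side moves outward by an independent random fraction of the box width
    (left/right) or height (top/bottom), drawn uniformly from [0, max_frac];
    the result is rounded outward and clipped to the image.
    """
    x0, y0, x1, y1 = box
    bw, bh = x1 - x0, y1 - y0
    nx0 = x0 - rng.uniform(0, max_frac) * bw
    nx1 = x1 + rng.uniform(0, max_frac) * bw
    ny0 = y0 - rng.uniform(0, max_frac) * bh
    ny1 = y1 + rng.uniform(0, max_frac) * bh
    return (max(0, math.floor(nx0)), max(0, math.floor(ny0)),
            min(image_w, math.ceil(nx1)), min(image_h, math.ceil(ny1)))
```

Rounding outward guarantees the noisy crop always contains the original word box, so the experiment adds background clutter without cutting off characters.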

The accuracy of our model is 87.8% on the CUTE dataset and 85.4% on the Noisy CUTE dataset. This small drop of 2.4 percentage points indicates that the model is robust against localization noise and background distraction.

Figure 8: Character segmentation for images from CUTE

dataset (top) and the Noisy CUTE dataset (bottom).

5 CONCLUSION

In this paper, we introduced a novel Sequence-Character Aware Network (SCAN) for text recognition that proved effective for both regular and irregular text. Our method has the simplicity of character-based methods while benefiting from the additional information provided by sequence-based word generation, without the need for a complicated rectification process. The model has also shown robustness against noisy text localization. Our future work will focus on developing our method into an end-to-end text detection and recognition system, as well as applying it to other languages.

REFERENCES

Cheng, Z., Bai, F., Xu, Y., Zheng, G., Pu, S., and Zhou, S. (2017). Focusing attention: Towards accurate text recognition in natural images. In Proceedings of the IEEE International Conference on Computer Vision, pages 5076–5084.

Cheng, Z., Xu, Y., Bai, F., Niu, Y., Pu, S., and Zhou, S. (2018). AON: Towards arbitrarily-oriented text recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5571–5579.

Epshtein, B., Ofek, E., and Wexler, Y. (2010). Detecting text in natural scenes with stroke width transform. In 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 2963–2970. IEEE.

Gupta, A., Vedaldi, A., and Zisserman, A. (2016). Synthetic data for text localisation in natural images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2315–2324.

He, K., Zhang, X., Ren, S., and Sun, J. (2016a). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 770–778.

He, P., Huang, W., Qiao, Y., Loy, C. C., and Tang, X. (2016b). Reading scene text in deep convolutional sequences. In Thirtieth AAAI Conference on Artificial Intelligence.

Huang, Y., Luo, C., Jin, L., Lin, Q., and Zhou, W. (2019). Attention after attention: Reading text in the wild with cross attention. In 2019 International Conference on Document Analysis and Recognition (ICDAR), pages 274–280. IEEE.

Jaderberg, M., Simonyan, K., Vedaldi, A., and Zisserman, A. (2016). Reading text in the wild with convolutional neural networks. International Journal of Computer Vision, 116(1):1–20.

Karatzas, D., Gomez-Bigorda, L., Nicolaou, A., Ghosh, S., Bagdanov, A., Iwamura, M., Matas, J., Neumann, L., Chandrasekhar, V. R., Lu, S., et al. (2015). ICDAR 2015 competition on robust reading. In 2015 13th International Conference on Document Analysis and Recognition (ICDAR), pages 1156–1160. IEEE.

Karatzas, D., Shafait, F., Uchida, S., Iwamura, M., i Bigorda, L. G., Mestre, S. R., Mas, J., Mota, D. F., Almazan, J. A., and De Las Heras, L. P. (2013). ICDAR 2013 robust reading competition. In 2013 12th International Conference on Document Analysis and Recognition, pages 1484–1493. IEEE.

Kendall, A., Gal, Y., and Cipolla, R. (2018). Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7482–7491.

Lee, C.-Y. and Osindero, S. (2016). Recursive recurrent nets with attention modeling for OCR in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2231–2239.

Liao, M., Zhang, J., Wan, Z., Xie, F., Liang, J., Lyu, P., Yao, C., and Bai, X. (2019). Scene text recognition from two-dimensional perspective. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pages 8714–8721.

Liu, W., Chen, C., and Wong, K.-Y. K. (2018). Char-Net: A character-aware neural network for distorted scene text recognition. In Thirty-Second AAAI Conference on Artificial Intelligence.

Luo, C., Jin, L., and Sun, Z. (2019). MORAN: A multi-object rectified attention network for scene text recognition. Pattern Recognition, 90:109–118.

Lyu, P., Liao, M., Yao, C., Wu, W., and Bai, X. (2018). Mask TextSpotter: An end-to-end trainable neural network for spotting text with arbitrary shapes. In Proceedings of the European Conference on Computer Vision (ECCV), pages 67–83.

Mishra, A., Alahari, K., and Jawahar, C. (2012). Scene text recognition using higher order language priors.

Neumann, L. and Matas, J. (2010). A method for text localization and recognition in real-world images. In Asian Conference on Computer Vision, pages 770–783. Springer.

Otsu, N. (1979). A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics, 9(1):62–66.

Qiao, Z., Zhou, Y., Yang, D., Zhou, Y., and Wang, W. (2020). SEED: Semantics enhanced encoder-decoder framework for scene text recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 13528–13537.

Quy Phan, T., Shivakumara, P., Tian, S., and Lim Tan, C. (2013). Recognizing text with perspective distortion in natural scenes. In Proceedings of the IEEE International Conference on Computer Vision, pages 569–576.

Risnumawan, A., Shivakumara, P., Chan, C. S., and Tan, C. L. (2014). A robust arbitrary text detection system for natural scene images. Expert Systems with Applications, 41(18):8027–8048.

Shi, B., Bai, X., and Yao, C. (2016a). An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(11):2298–2304.

Shi, B., Wang, X., Lyu, P., Yao, C., and Bai, X. (2016b). Robust scene text recognition with automatic rectification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4168–4176.

Shi, B., Yang, M., Wang, X., Lyu, P., Yao, C., and Bai, X. (2018). ASTER: An attentional scene text recognizer with flexible rectification. IEEE Transactions on Pattern Analysis and Machine Intelligence.

Su, B. and Lu, S. (2014). Accurate scene text recognition based on recurrent neural network. In Asian Conference on Computer Vision, pages 35–48. Springer.

Sun, K., Zhao, Y., Jiang, B., Cheng, T., Xiao, B., Liu, D., Mu, Y., Wang, X., Liu, W., and Wang, J. (2019). High-resolution representations for labeling pixels and regions. arXiv preprint arXiv:1904.04514.

Veit, A., Matera, T., Neumann, L., Matas, J., and Belongie, S. (2016). COCO-Text: Dataset and benchmark for text detection and recognition in natural images. arXiv preprint arXiv:1601.07140.

Wang, K., Babenko, B., and Belongie, S. (2011). End-to-end scene text recognition. In 2011 International Conference on Computer Vision, pages 1457–1464. IEEE.

Wang, K. and Belongie, S. (2010). Word spotting in the wild. In European Conference on Computer Vision, pages 591–604. Springer.

Wang, T., Wu, D. J., Coates, A., and Ng, A. Y. (2012). End-to-end text recognition with convolutional neural networks. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), pages 3304–3308. IEEE.

Wang, T., Zhu, Y., Jin, L., Luo, C., Chen, X., Wu, Y., Wang, Q., and Cai, M. (2020). Decoupled attention network for text recognition. In AAAI, pages 12216–12224.

Yang, M., Guan, Y., Liao, M., He, X., Bian, K., Bai, S., Yao, C., and Bai, X. (2019). Symmetry-constrained rectification network for scene text recognition. In Proceedings of the IEEE International Conference on Computer Vision, pages 9147–9156.

Yang, X., He, D., Zhou, Z., Kifer, D., and Giles, C. L. (2017). Learning to read irregular text with attention mechanisms. In IJCAI, volume 1, page 3.

Yao, C., Bai, X., and Liu, W. (2014). A unified framework for multioriented text detection and recognition. IEEE Transactions on Image Processing, 23(11):4737–4749.
