Self Representation and Interaction in Immersive Virtual Reality

Eros Viola (a), Fabio Solari (b) and Manuela Chessa (c)

Dept. of Informatics, Bioengineering, Robotics, and Systems Engineering, University of Genoa, Italy

Keywords:

Virtual Reality, Self Representation, Intel RealSense D435, Interaction, Leap Motion, Manus Prime Haptic Gloves, Alignment, Rigid Transformation, Live Correction.

Abstract:

Inserting a self-representation in Virtual Reality is an open problem with several implications for both the sense of presence and the interaction in virtual environments. To address the problem with low-cost devices, we devise a framework to align the measurements of different acquisition devices used while wearing a tracked VR head-mounted display (HMD). Specifically, we use the skeletal tracking features of an RGB-D sensor (Intel RealSense D435) to build the user's avatar, and we compare different interaction technologies: a Leap Motion, the Manus Prime haptic gloves, and the Oculus controllers. The effectiveness of the proposed systems is assessed through an experimental session in which an assembly task is performed with the three different interaction media, with and without the self-representation. Users reported their experience by answering the User Experience Questionnaire and the Igroup Presence Questionnaire, and we analyze the total time to completion and the error rate.

1 INTRODUCTION

In current Virtual Reality (VR) applications, the visual feedback of the user's body is often missing, despite the abundance of enabling technologies that could be used to implement it. The main drawback of these technologies is their high cost; in fact, they are rarely used in practice except by large companies.

This paper considers the problem of constructing a self-representation in VR and of finding a stable, reliable and affordable solution that can improve the users' sense of presence in the virtual environment. The aim of our work is to devise a general framework to set up different VR systems, which combine the most commonly used VR headsets (e.g. the Oculus Rift and the HTC Vive), devices to capture the full body (e.g. off-the-shelf RGB-D devices, like the Intel RealSense D435), and technologies to represent the hands and the fine movements of the fingers, such as the Oculus controllers, the Leap Motion and the Manus Prime haptic gloves.

The importance of the user's avatar is studied by comparing the proposed systems with and without the self-representation of the user (i.e., the avatar).

(a) https://orcid.org/0000-0001-7447-7918
(b) https://orcid.org/0000-0002-8111-0409
(c) https://orcid.org/0000-0003-3098-5894

The focus is to develop a framework that is compatible with the most common head-mounted displays (HMDs), and that can also be extended to other tracking devices, both for body tracking and for hand and finger tracking.

This would be extremely helpful in many applications, such as training or the simulation of specific situations (e.g. medical, first-aid, rescue), where avatars of the users would make the scenario much more immersive, thus increasing the realism of the application.

To address self-representation in VR, we propose to fuse the data acquired by an RGB-D camera and by an HMD with a hand-tracking device, to reconstruct an accurate avatar of the user. Such an avatar not only moves coherently with the user, but also has a good representation of the hands and of the fine movements of the fingers, which allows interaction inside the virtual environment. Some authors have already addressed the problem of fusing information acquired by an RGB-D camera and the Leap Motion: e.g. in (Chessa et al., 2016) the authors set up an affordable virtual reality system, which combines the Oculus Rift HMD, a Microsoft Kinect v1, and a Leap Motion, to recreate inside the virtual environment a first-person avatar that replicates the movements of the user's full body and the fine movements of his/her fingers and hands. Here, we generalize that idea by developing a framework that allows us to combine different off-the-shelf devices to obtain immersive VR systems with a self-representation of the user who is experiencing the VR environment.

Viola, E., Solari, F. and Chessa, M.
Self Representation and Interaction in Immersive Virtual Reality.
DOI: 10.5220/0010320402370244
In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021) - Volume 2: HUCAPP, pages 237-244
ISBN: 978-989-758-488-6
Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORKS

In the current market, there are several solutions that allow us to build an avatar of the user. The first one uses Inverse Kinematics (IK) to dynamically reproduce natural body movements from six tracked points: the headset, which gives the position of the head; the two controllers, which give the positions of the hands; and three trackers, two positioned on the feet and one on the pelvis (Roth et al., 2016; Caserman et al., 2019). IK generates an accurately proportioned virtual human skeleton, and seamlessly re-targets and synchronizes the user's motion to the bones of the chosen avatar. Other approaches use motion capture suits and systems (Rahul, 2018; Takayasu et al., 2019), such as those used for animation in films and games (Bao et al., 2019). Neither of these solutions is easily accessible to everyone; thus, we aim to build a cheaper system.

The literature has extensively investigated the role and the impact of self-representation in VR. Some studies focus on the process of embodiment of a self-avatar. When embodied in a self-avatar, the user in some ways treats it as his/her own body, resulting in the so-called “body-ownership illusion”. This shows that the virtual body has an impact on how the person reacts to virtual stimuli (Yuan and Steed, 2010). For example, there are studies investigating how people judge distances (Ries et al., 2008) and how they walk (Canessa et al., 2019; Reinhard et al., 2020), or how embodiment affects presence (Steed et al., 2016). Another important aspect is that self-avatars can have psychological effects on user arousal, attitudes, and behaviours in virtual environments. Some of these behaviours and attitudes may extend beyond the interaction in the virtual environment, influencing judgments or behaviours in the real world (Biocca, 2014). Also, the presence of a self-avatar improves the interaction with the surrounding virtual environment and simplifies motor-related tasks (Slater et al., 1995). Furthermore, in a shared virtual environment (SVE), a self-representation allows users to communicate with other users through their own body, as in reality (Pan and Steed, 2017). Moreover, the use of a personalized avatar (i.e. a mesh that better represents the human figure) significantly increases body ownership and agency, as well as the feeling of presence, as explained in (Waltemate et al., 2018). A consequence of all these effects is an increased sense of presence inside the virtual environment.

3 MATERIALS AND METHODS

3.1 Sensor Fusion and Reference Frame Alignments

In this paper, to align the reference frames of the devices used to build the self-representation of the user, we follow the method proposed by (Chessa et al., 2016). Before the alignment phase, the data acquired by the different sensors are expressed in each sensor's own reference frame; thus, the resulting components of the avatar are not perceived by the user from a first-person perspective, as shown in Figure 1(a). It is worth noting that we use a simple avatar, i.e. a skeleton, since we are mainly interested in the alignment of the reference frames, and thus in the related effectiveness of the interaction in VR, rather than in the graphical representation of the body.

To align the data of all sensors, the alignment phase is divided into two steps:
• Rigid transformation, computed only once from points common to the sensors.
• Live correction, to overcome the residual offset between the avatar body and the hands module.

3.1.1 Rigid Transformation

This first step allows us to align the data coming from the different sensors. We use least-squares rigid motion via the Singular Value Decomposition (SVD) (Sorkine, 2009) to compute the rigid transformation between two sets of points (Eq. 1).

$$(\hat{R}, \hat{t}) = \underset{R,\,t}{\arg\min} \sum_{i=1}^{n} \left\| (R\,p_i + t) - q_i \right\|^2 \tag{1}$$

where:
• $R$ is the rotation matrix between the two point sets $P$ and $Q$, and $\hat{R}$ is its computed estimate.
• $t$ is the translation vector that, after the rotation, brings the centroid of $P$ onto the centroid of $Q$, and $\hat{t}$ is its computed estimate (at the optimum, $\hat{t} = \bar{q} - \hat{R}\,\bar{p}$, where $\bar{p}$ and $\bar{q}$ are the centroids of $P$ and $Q$).
• $P = \{p_1, p_2, \dots, p_n\}$ are the VR system samples, acquired by the HMD and the hand detection device.
• $Q = \{q_1, q_2, \dots, q_n\}$ are the RGB-D camera samples.
• $n$ is the number of samples.

HUCAPP 2021 - 5th International Conference on Human Computer Interaction Theory and Applications

Figure 1: Reference frame alignment. (a) The body and the hand representations are in their own reference frames (which also differ from the first-person view). (b) The head, body and hand reference frames are aligned. (c) The head, body and hand reference frames are aligned and the residual errors are corrected.
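Eq. (1) has a closed-form solution based on the SVD of the cross-covariance matrix of the centred point sets (Sorkine, 2009). The sketch below illustrates that solution in Python/NumPy; the authors' implementation is in C# with Math.NET Numerics, so the function name `rigid_transform_svd` and the (n, 3) array layout are our own assumptions, not the paper's code.

```python
import numpy as np


def rigid_transform_svd(P, Q):
    """Least-squares rigid motion (Sorkine, 2009) for Eq. (1).

    P, Q: arrays of shape (n, 3); row i of P corresponds to row i of Q.
    Returns (R_hat, t_hat) such that R_hat @ p_i + t_hat ~= q_i.
    """
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)
    if P.shape != Q.shape or P.ndim != 2 or P.shape[1] != 3:
        raise ValueError("P and Q must both have shape (n, 3)")

    # Centroids of the two point sets.
    p_bar = P.mean(axis=0)
    q_bar = Q.mean(axis=0)

    # 3x3 cross-covariance of the centred points: H = sum_i x_i y_i^T.
    H = (P - p_bar).T @ (Q - q_bar)

    # With H = U S V^T, the optimal rotation is V diag(1, 1, d) U^T,
    # where d = sign(det(V U^T)) turns a reflection into a proper rotation.
    U, _, Vt = np.linalg.svd(H)
    V = Vt.T
    d = np.sign(np.linalg.det(V @ U.T))
    R_hat = V @ np.diag([1.0, 1.0, d]) @ U.T

    # The translation moves the rotated centroid of P onto the centroid of Q.
    t_hat = q_bar - R_hat @ p_bar
    return R_hat, t_hat
```

With noise-free correspondences the function recovers the true rotation and translation up to floating-point error; with real sensor data, what remains is the residual of Eq. (1).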

To properly align the different reference frames of the sensors (i.e. “to move” P towards Q), several correspondences between them are required. In our case, some joints are tracked both by the RGB-D camera and by the HMD together with one of the hand and finger tracking technologies: the head, the palms and the wrists. Nevertheless, a rigid transformation computed from a single set of common points (i.e. one sample) often leads to a visually incorrect alignment, meaning that the user does not perceive the avatar as superimposed on his/her body. This can be due to multiple factors, in particular:
• co-planar points among the selected common joints,
• noise on the points detected by the sensors.

To overcome this problem, we take more samples over time, as explained in (Chessa et al., 2016): we consider 5 common points per frame and 500 samples over time. Furthermore, during this step, the user has to move his/her arms up and down, making sure that both arms are tracked by the sensors, to increase the robustness of the estimate. After this step, the result is a partial alignment of the reference frames (i.e. of the avatar with the real body of the user), as shown in Figure 1(b).
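Collecting samples over time turns Eq. (1) into an over-determined problem with n = 5 × 500 = 2500 correspondences, which averages out sensor noise. The Python/NumPy sketch below illustrates this accumulation with a hypothetical `CorrespondenceBuffer` class that receives the five common joints (head, palms, wrists) from both sides once per frame; the paper does not show its own code, so names and structure here are assumptions. The `spread` helper is one possible way to monitor whether the collected samples span 3D space or are close to co-planar.

```python
import numpy as np

N_JOINTS = 5    # head, two palms, two wrists (the common joints in the text)
N_FRAMES = 500  # samples collected over time, as in the text


def kabsch(P, Q):
    """Least-squares rigid motion via SVD (Sorkine, 2009): R @ p + t ~= q."""
    p_bar, q_bar = P.mean(axis=0), Q.mean(axis=0)
    U, _, Vt = np.linalg.svd((P - p_bar).T @ (Q - q_bar))
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # -1 would mean a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, q_bar - R @ p_bar


class CorrespondenceBuffer:
    """Hypothetical helper that collects joint pairs frame by frame."""

    def __init__(self, n_frames=N_FRAMES, n_joints=N_JOINTS):
        self.n_frames = n_frames
        self.n_joints = n_joints
        self.vr = []    # per-frame joints from HMD + hand tracker (set P)
        self.rgbd = []  # the same joints from the RGB-D skeleton (set Q)

    def full(self):
        return len(self.vr) >= self.n_frames

    def add_frame(self, vr_joints, rgbd_joints):
        vr = np.asarray(vr_joints, dtype=float)
        rgbd = np.asarray(rgbd_joints, dtype=float)
        expected = (self.n_joints, 3)
        if vr.shape != expected or rgbd.shape != expected:
            raise ValueError(f"each frame needs two arrays of shape {expected}")
        if not self.full():  # frames beyond n_frames are ignored
            self.vr.append(vr)
            self.rgbd.append(rgbd)

    def spread(self):
        """Singular values of the centred VR samples; a last value close to
        zero means the collected points are (nearly) co-planar."""
        P = np.vstack(self.vr)
        return np.linalg.svd(P - P.mean(axis=0), compute_uv=False)

    def solve(self):
        if not self.full():
            raise RuntimeError("not enough frames collected yet")
        P = np.vstack(self.vr)    # shape (n_frames * n_joints, 3)
        Q = np.vstack(self.rgbd)
        return kabsch(P, Q)
```

Asking the user to move the arms up and down during this step serves the same purpose as the `spread` check: it keeps the sampled joints from lying on (or near) a single plane.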

3.1.2 Live Correction

The second step to obtain a fine alignment between the user and the avatar is a run-time correction. To obtain a single, continuous body structure (i.e. a natural body structure) between the hands tracked by the controllers or the Leap Motion and the rest of the body tracked by the RGB-D camera, the data coming from the different sensors have to be fused more tightly. In particular, we use the wrist positions acquired by the two hand-tracking technologies, since they are much more precise than the wrist positions detected by the RGB-D camera. This allows a better alignment of the forearm with the hand. The result of this step is shown in Figure 1(c).
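A minimal per-frame sketch of this correction in Python/NumPy, assuming that the RGB-D joints have already been mapped into the common frame by the rigid transformation and are stored in a dict with hypothetical keys such as `wrist_left`. The fallback to the RGB-D wrist when a hand is not tracked is our own assumption, not a detail given in the paper.

```python
import numpy as np


def wrist_offsets(aligned_joints, tracker_wrists):
    """Residual distance between the RGB-D wrist and the hand-tracker wrist.

    aligned_joints: dict joint name -> 3D position, already in the common
        frame produced by the rigid transformation of Sec. 3.1.1.
    tracker_wrists: dict side ("left"/"right") -> wrist position from the
        hand tracker, or None if that hand is not tracked in this frame.
    """
    offsets = {}
    for side, wrist in tracker_wrists.items():
        if wrist is None:
            continue
        rgbd_wrist = np.asarray(aligned_joints[f"wrist_{side}"], dtype=float)
        offsets[side] = float(np.linalg.norm(np.asarray(wrist, dtype=float) - rgbd_wrist))
    return offsets


def live_correct(aligned_joints, tracker_wrists):
    """Return a copy of the avatar joints with the wrists taken from the hand tracker."""
    corrected = {name: np.asarray(p, dtype=float).copy() for name, p in aligned_joints.items()}
    for side, wrist in tracker_wrists.items():
        if wrist is None:
            # Hand lost (e.g. outside the Leap Motion field of view):
            # keep the RGB-D estimate for this frame (assumed behaviour).
            continue
        # Snap the avatar wrist onto the more precise hand-tracker wrist, so
        # the forearm bone (elbow -> wrist) ends where the hand model starts.
        corrected[f"wrist_{side}"] = np.asarray(wrist, dtype=float)
    return corrected
```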

3.2 Hardware Components

The device used for Virtual Reality is the Oculus Rift CV1¹: it has an estimated field of view of 110 degrees, a resolution of 2160 × 1200 pixels, and a refresh rate of about 90 Hz. It contains several sensors, such as the accelerometer, the gyroscope and the magnetometer, which are used, together with the infrared constellation, to track the user's position and movements.

To manage the data flow from the different sensors simultaneously, we used a PC with the following specifications: an NVIDIA GeForce GTX 1080 graphics card, an Intel(R) Core(TM) i9-8950 @ 2.90 GHz processor, 32 GB of RAM, and Windows 10 Home 64-bit as operating system.

The user's body is detected and tracked by an RGB-D camera specifically developed for tracking, the Intel RealSense D435². As skeletal tracking SDK, we used Nuitrack³, a 3D tracking middleware developed by 3DiVi Inc. This software provides information about 19 joints of the user's body. Even though this SDK is not free, we decided to use it because of the wide range of supported RGB-D cameras, such as the Kinect v1, the Asus Xtion, the Intel RealSense, and other devices on the market.

¹ https://www.oculus.com/rift/
² https://www.intelrealsense.com/
³ https://www.nuitrack.com/

For the detection and tracking of the hands and of the fine movements of the fingers, we used three devices:
• The standard controllers that come with the chosen HMD. Their tracking technology is the same as that of the headset, i.e. the infrared constellation of the Oculus Rift controllers.
• The Leap Motion⁴, a device that captures each hand with two 640 × 240-pixel near-infrared cameras and fits the data with a model of the hand. Based on the stereo image pairs, it computes the 3D position of each finger and of the center of the hand. It has good accuracy and a field of view of 140° × 120°, but since it is based on image processing, its measurements are affected by occlusions and by noise due to illumination, so it cannot be used in every environmental condition. In our setup, we attached the Leap Motion device to the headset.
• The Manus Prime haptic gloves⁵, which have three main components. The first is the haptic feedback, transmitted by linear resonant actuators on the fingertips, whose signals can be adjusted to different strengths for specific application scenarios. The other two, most important for the system presented here, are hand tracking, achieved by an HTC Vive tracker through the base stations of the Vive system, and finger tracking, obtained by bending sensors fused with high-performance inertial measurement units. In principle, this would ensure consistently high-quality movement measurement, with a latency of 10 ms. However, in this paper we discuss some compatibility problems that emerged in our sensor fusion approach.
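The Leap Motion item above mentions that 3D positions are computed from stereo image pairs. The device's internal pipeline is proprietary; the Python sketch below only illustrates the textbook relation for a rectified stereo pair, Z = f·B/d (focal length f in pixels, baseline B, disparity d). The focal length and baseline used in the example are hypothetical values, not the device's actual calibration; only the 640 × 240 image size comes from the text.

```python
def triangulate_rectified(u_left, u_right, v, f_px, baseline_m, cx, cy):
    """Back-project an image point seen by a rectified stereo pair.

    u_left, u_right: column of the point in the left/right image (pixels).
    v: row of the point (identical in both images after rectification).
    f_px: focal length in pixels; baseline_m: distance between the cameras.
    cx, cy: principal point of the left camera (pixels).
    Returns (X, Y, Z) in metres, in the left camera frame.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("a point in front of the cameras needs positive disparity")
    Z = f_px * baseline_m / disparity  # depth shrinks as disparity grows
    X = (u_left - cx) * Z / f_px
    Y = (v - cy) * Z / f_px
    return X, Y, Z


# Hypothetical example: 640 x 240 images, principal point at the image centre.
X, Y, Z = triangulate_rectified(360.0, 320.0, 120.0, f_px=400.0, baseline_m=0.04, cx=320.0, cy=120.0)
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters more for distant hands than for close ones, which is one reason why such sensors work best at short range.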

3.3 Software Components

The platform used to develop our solutions was Unity 2019.3.0f6, with Visual Studio 2019 as IDE to code in C#. The main plugins used are: SteamVR, so that our software is compatible with all the supported HMDs; Math.NET Numerics, to compute the SVD in the alignment phase; and VRTK, the Leap Motion Unity module, or the Manus plugins for Unity, to implement the interaction with the virtual objects depending on the chosen technology.

To use the Oculus Rift HMD together with the Manus Prime haptic gloves, a further calibration is needed to align the reference frame of the Vive trackers, which are used by the Manus Prime haptic gloves, with the Oculus reference frame. To this aim, we use OpenVR Space Calibrator⁶, a software tool available on GitHub, which allows us to obtain the correct calibration.

⁴ https://www.ultraleap.com/datasheets/Leap Motion Controller Datasheet.pdf
⁵ https://manus-vr.com/.com/
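Conceptually, such a calibration yields one fixed rigid transform between the Vive and the Oculus tracking spaces, and every tracker pose is then re-expressed by composing 4×4 homogeneous matrices. The Python/NumPy sketch below illustrates only this composition; `T_oculus_from_vive` is a hypothetical calibration result, and the code does not reproduce how OpenVR Space Calibrator estimates it.

```python
import numpy as np


def make_pose(R, t):
    """Pack a 3x3 rotation and a translation into a 4x4 homogeneous matrix."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def invert_pose(T):
    """Inverse of a rigid transform: [R t; 0 1]^-1 = [R^T -R^T t; 0 1]."""
    R, t = T[:3, :3], T[:3, 3]
    return make_pose(R.T, -R.T @ t)


def to_oculus_space(T_oculus_from_vive, T_vive_from_tracker):
    """Re-express a tracker pose, given in Vive tracking space, in Oculus space."""
    return T_oculus_from_vive @ T_vive_from_tracker
```

Naming each matrix `T_a_from_b` makes the composition order explicit: the inner frame names must match (`vive` here), which helps catch the common mistake of applying a calibration in the wrong direction.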

4 EXPERIMENT

Participants. To validate the proposed method and the three developed systems, we performed an experimental session and collected data from 6 subjects (4 males, 2 females). The participants were aged from 20 to 55 (38.5 ± 16.7) and had normal or corrected-to-normal vision. All the subjects were new to VR. Each subject performed all the experimental conditions in a randomized order to counterbalance learning and habituation effects.

Procedure. The experiment is performed as follows. Before starting, the experimenter shows how to properly wear the HMD and how to wear/use the given hand tracking device, and then starts the simulation. The user's body inside the virtual scene is represented by small cubes for the joints, parallelepipeds for the bones, and a 3D hand model for the hands.

The user has to perform the alignment phase, as explained in the previous sections, and then complete the assembly task, which consists of grabbing and interacting with pieces of the Iron Man suit to assemble it.

When the assembly task starts, several pieces of the superhero suit lie scattered on a table. The user has to grasp the pieces and put them in a highlighted area, in an arbitrary order. The pieces have to be correctly oriented before being attached to the suit. The task ends when all the pieces are correctly positioned.

At the end of the assembly task, the user removes the HMD and is asked to fill in the questionnaires.

Measurements. During the task, the total time to completion (TTC) and, as error rate, the number of times a piece of the suit falls from the user's hands are recorded. When the user finishes, he/she fills in the User Experience Questionnaire (UEQ) (Schrepp et al., 2014) and the Igroup Presence Questionnaire (IPQ) (Regenbrecht and Schubert, 2002). UEQ is a recent, fast and reliable questionnaire, which provides a comprehensive impression of the user experience by considering both classical usability aspects (efficiency, perspicuity, dependability) and user experience aspects (originality, stimulation). IPQ is a scale for measuring the sense of presence experienced in a virtual environment.

⁶ https://github.com/pushrax/OpenVR-SpaceCalibrator/

Figure 2: Arrangement of the sensors for the three hand and finger detection systems. (a) Oculus Rift controllers. (b) Leap Motion attached to the headset. (c) Manus Prime haptic gloves, which need the HTC Vive base stations.
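The paper does not describe how the two objective measures were logged. As a minimal sketch, assuming a hypothetical `TaskRecorder` class (the actual logging was part of the authors' Unity/C# application), one trial could be recorded as follows; detecting that a piece has fallen (e.g. a released piece touching the floor) is left to the application.

```python
import time


class TaskRecorder:
    """Hypothetical per-trial logger for the two objective measures.

    ttc: seconds between start() and finish() (total time to completion).
    drops: how many times a suit piece fell from the user's hands, which
        the paper uses as error rate.
    """

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable, e.g. for testing
        self._start = None
        self._end = None
        self.drops = 0

    def start(self):
        self._start, self._end, self.drops = self._clock(), None, 0

    def piece_dropped(self):
        # Only count drops while the trial is running.
        if self._start is not None and self._end is None:
            self.drops += 1

    def finish(self):
        if self._start is None:
            raise RuntimeError("finish() called before start()")
        self._end = self._clock()

    @property
    def ttc(self):
        if self._start is None or self._end is None:
            raise RuntimeError("trial not completed")
        return self._end - self._start
```

A monotonic clock is used by default because, unlike wall-clock time, it cannot jump backwards during a trial.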

Conditions. We implemented three different setups, depending on the interaction device used: controllers, Leap Motion, and Manus gloves. For each interaction device, we considered two conditions: one in which the full avatar is displayed, and one in which only the hands are displayed. A compatibility problem emerged while using the Manus gloves with the avatar systems: the gloves are not stable when used together with an RGB-D camera based on infrared light. The problem is caused by the IR light of the RGB-D camera, which interferes with the Vive trackers. Consequently, the calibration leads to a bad alignment of the avatar, and the task is hard to complete. Thus, we decided not to consider this condition, and to use the gloves only without the RGB-D camera, i.e. without the avatar. Figure 2 shows the arrangement of the sensors in the environment for the three setups. The user, wearing the HMD, stands in front of the RGB-D camera at a distance of about 1 m, and is free to move over an area of about 1.5 m² to interact with the 3D objects in the virtual environment.

In total, we tested 5 conditions: ControllersAvatar (Fig. 3(a)), ControllersNoAvatar, LeapAvatar (Fig. 3(b)), LeapNoAvatar, and ManusNoAvatar (Fig. 3(c)).

5 RESULTS

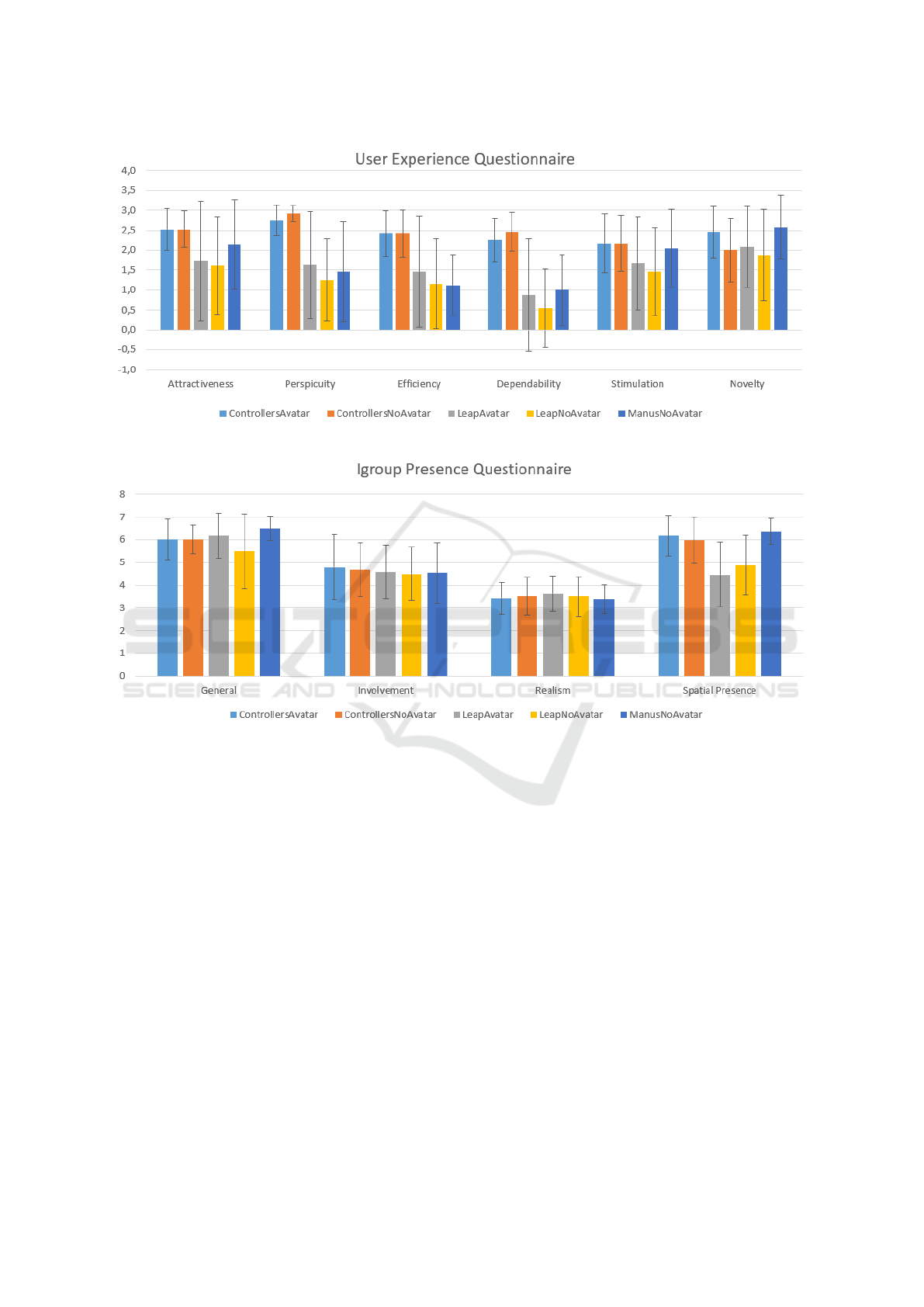

Figure 4 shows the results of the UEQ. The average value of each scale (range between -3 and 3) and the associated standard deviations are shown for the 5 experimental conditions. To understand the results, we need to analyze each scale separately:

• Attractiveness: due to the ease of interaction, the subjects seem to prefer the controllers (mean score 2.53 both with and without the avatar), followed by the Manus Prime haptic gloves (mean score 2.14) and then by the Leap Motion (mean score 1.72 with the avatar, 1.61 without).
• Perspicuity: as expected, the controllers are easier to learn (mean score 2.75 with the avatar, 2.92 without) than the Leap Motion, which also shows a high standard deviation (1.63 ± 1.35), and the Manus Prime haptic gloves (1.46 ± 1.26).
• Efficiency: due to the different types of interaction the three technologies provide, the task is solved more easily with the controllers than with the Leap Motion (again with a high standard deviation, 1.46 ± 1.39) and the Manus Prime haptic gloves.
• Dependability: the subjects seem to feel in control of the interaction when using the controllers, whereas with the Leap Motion or the Manus Prime haptic gloves the score is lower than expected (mean scores 0.88 and 0.54 for the Leap Motion with and without the avatar, 1.00 for the Manus gloves).
• Stimulation: the subjects give similar scores in this category; the five solutions seem to excite and motivate the subjects equally.
• Novelty: the highest value in this category is obtained by the Manus Prime haptic gloves (2.58 ± 0.80). The controllers and the Leap Motion obtain higher values with the avatar than without it.

Figure 3: Snapshots of 3 (out of 5) experimental conditions: ControllersAvatar (a), LeapAvatar (b) and ManusNoAvatar (c). In each panel, the first-person view of the VR scene is on the left and the external view of the user in the real environment is on the right.

Figure 4: Results of the UEQ: mean values and standard deviations on a scale from -3 to 3.

Figure 5: Results of the IPQ: mean values and standard deviations on a scale from 1 to 7.
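Figure 4 reports, for each UEQ scale, a mean and a standard deviation on the -3..+3 range. One common way to obtain such values is sketched below in Python (standard library only): each 1..7 answer is shifted to -3..+3, the item scores are averaged per scale and participant, and the resulting scale scores are then summarized across participants. The item-to-scale mapping and the re-orientation of reversed items follow the official UEQ material and are not reproduced here; the function names and the use of the sample standard deviation are our assumptions, not details stated in the paper.

```python
from statistics import mean, stdev


def ueq_item_score(raw):
    """Map a 1..7 UEQ answer to -3..+3 (the answer is assumed to be already
    re-oriented so that 7 is the positive pole)."""
    if not 1 <= raw <= 7:
        raise ValueError("UEQ answers range from 1 to 7")
    return raw - 4


def scale_score(raw_answers):
    """Score of one scale for one participant: mean of its item scores."""
    return mean(ueq_item_score(r) for r in raw_answers)


def summarize_scale(answers_per_participant):
    """Mean and sample standard deviation of one scale across participants."""
    scores = [scale_score(a) for a in answers_per_participant]
    return mean(scores), stdev(scores)
```

With only six participants per condition, as in this study, the standard deviations are large relative to the differences between conditions, which is consistent with reading the results as preliminary.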

Figure 5 shows the results of the IPQ: the mean values of each category (range between 1 and 7) and the associated standard deviations are reported for the 5 experimental conditions.

The answers of the subjects show that the Manus Prime haptic gloves allow us to achieve a higher General sense of “being there” (6.50 ± 0.55) and Spatial Presence (6.37 ± 0.57) compared, in particular, with the Leap Motion cases, in which the poorer interaction with complex objects reduces these two aspects (6.17 ± 0.98 and 4.47 ± 1.43, respectively, with the avatar). The values for Involvement and Realism, instead, are very close across conditions. As for the comparison between the avatar and no-avatar solutions with the same technology, the results show that the use of the avatar is slightly better in all the IPQ categories, whereas there are no appreciable differences for the UEQ.

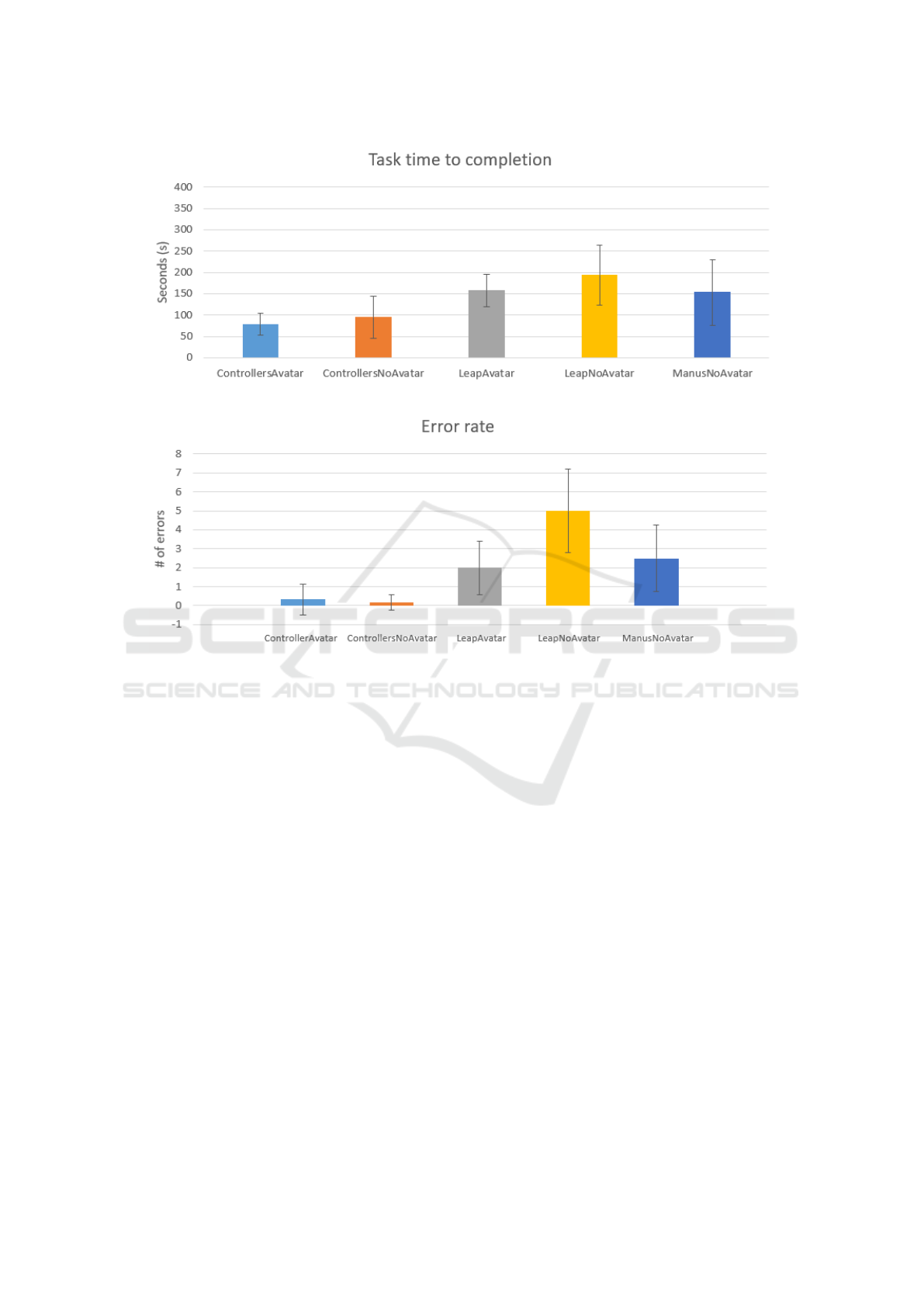

Figures 6 and 7 show bar graphs of the mean TTC and of the mean error rate (with the related standard deviations) for the 5 experimental conditions. The results show that the controllers are the easiest interaction device (mean TTC with the avatar 77.93 ± 25.57 seconds and 0.33 ± 0.82 errors), while the Leap Motion is the hardest (mean TTC without the avatar 193.87 ± 70.24 seconds and 5.00 ± 2.19 errors), and the Manus Prime haptic gloves are the middle ground (mean TTC without the avatar 152.65 ± 77.39 seconds and 2.50 ± 1.76 errors).

Figure 6: Total time to completion (TTC) for the five experimental conditions.

Figure 7: Error rate for the five experimental conditions.

6 CONCLUSIONS

In this paper, we have presented a framework based on sensor fusion to build a self-representation inside VR (i.e. an avatar of the user). The main goal of the proposed approach is to use off-the-shelf, low-cost devices and to be easily extensible to other tracking and interaction devices.

Starting from the developed approach, we have implemented three different setups that use the Oculus Rift as VR device, together with three different interaction technologies that allow us to represent the hands and the fine movements of the fingers: the controllers of the headset, the Leap Motion, and the Manus Prime haptic gloves. Furthermore, we have used an RGB-D camera, the Intel RealSense D435, to track the user's body, and thus to create the avatar and replicate the movements of the user. To further analyze the role of the self-representation, we have devised an assembly experiment and tested it in 5 conditions: the 3 interaction devices, with or without the entire user avatar (we had to remove the ManusAvatar condition because of compatibility issues).

In this paper, we have presented the results of a preliminary evaluation, conducted with a small number of participants, which nevertheless allows us to draw some preliminary conclusions. As far as interaction is concerned, the results of our experiment suggest that the controllers are the preferred solution due to the simplicity of the interaction, even if the model of the hands is not precise and the movement of the fingers depends on their position on the controllers and not on their real position. Conversely, the Leap Motion is the hardest technology to deal with: the user always needs to keep the hands in front of the sensor, and complex objects negatively affect the interaction. Finally, the Manus Prime haptic gloves are the middle-ground solution, which gives a precise model of the hands and a detailed movement of the fingers. Furthermore, the haptic module adds the sensation of touch through vibrations on the fingers. The role of haptic feedback in interaction should be further analyzed. The main drawbacks of our solution are caused by the RGB-D camera, which interferes with the HTC Vive tracking system, thus hampering its use with the Manus gloves.

Based on these findings, our future work will investigate adding a mesh to the avatar and solving the issues we faced during the development of our systems, which are mainly caused by the RGB-D camera. First of all, we will try to re-implement the Manus Prime haptic gloves with the avatar by exploiting the skeleton provided by standard RGB cameras, so that the gloves are no longer disturbed by the IR light. Then, it would be interesting to overcome the occlusion problem by using one more RGB-D camera (or multiple RGB cameras) placed behind the user, and to fuse the data coming from the two RGB-D cameras before the alignment phase, to obtain a much more stable system that could better mimic other solutions for avatar reconstruction, such as motion capture suits or Inverse Kinematics.

ACKNOWLEDGEMENTS

This work has been partially supported by the Interreg Alcotra projects PRO-SOL We-Pro (n. 4298) and CLIP E-Santé (n. 4793).

REFERENCES

Bao, D., Zhao, L., Wang, C., Zhu, G., Chang, Z., and Yuan, J. (2019). Short animation production using game engine and motion capture. In 2019 International Conference on Virtual Reality and Visualization (ICVRV), pages 296–297. IEEE.

Biocca, F. (2014). Connected to my avatar. In International Conference on Social Computing and Social Media, pages 421–429. Springer.

Canessa, A., Casu, P., Solari, F., and Chessa, M. (2019). Comparing real walking in immersive virtual reality and in physical world using gait analysis. In VISIGRAPP (2: HUCAPP), pages 121–128.

Caserman, P., Achenbach, P., and Göbel, S. (2019). Analysis of inverse kinematics solutions for full-body reconstruction in virtual reality. In 2019 IEEE 7th International Conference on Serious Games and Applications for Health (SeGAH), pages 1–8. IEEE.

Chessa, M., Caroggio, L., Huang, H., and Solari, F. (2016). Insert your own body in the Oculus Rift to improve proprioception. In VISIGRAPP (4: VISAPP), pages 755–762.

Pan, Y. and Steed, A. (2017). The impact of self-avatars on trust and collaboration in shared virtual environments. PLoS ONE, 12(12):e0189078.

Rahul, M. (2018). Review on motion capture technology. Global Journal of Computer Science and Technology.

Regenbrecht, H. and Schubert, T. (2002). Real and illusory interactions enhance presence in virtual environments. Presence: Teleoperators & Virtual Environments, 11(4):425–434.

Reinhard, R., Shah, K. G., Faust-Christmann, C. A., and Lachmann, T. (2020). Acting your avatar's age: effects of virtual reality avatar embodiment on real life walking speed. Media Psychology, 23(2):293–315.

Ries, B., Interrante, V., Kaeding, M., and Anderson, L. (2008). The effect of self-embodiment on distance perception in immersive virtual environments. In Proceedings of the 2008 ACM Symposium on Virtual Reality Software and Technology, pages 167–170.

Roth, D., Lugrin, J.-L., Büser, J., Bente, G., Fuhrmann, A., and Latoschik, M. E. (2016). A simplified inverse kinematic approach for embodied VR applications. In 2016 IEEE Virtual Reality (VR), pages 275–276. IEEE.

Schrepp, M., Hinderks, A., and Thomaschewski, J. (2014). Applying the User Experience Questionnaire (UEQ) in different evaluation scenarios. In International Conference of Design, User Experience, and Usability, pages 383–392. Springer.

Slater, M., Usoh, M., and Steed, A. (1995). Taking steps: the influence of a walking technique on presence in virtual reality. ACM Transactions on Computer-Human Interaction (TOCHI), 2(3):201–219.

Sorkine, O. (2009). Least-squares rigid motion using SVD. Technical notes, 120(3):52.

Steed, A., Friston, S., Lopez, M. M., Drummond, J., Pan, Y., and Swapp, D. (2016). An ‘in the wild’ experiment on presence and embodiment using consumer virtual reality equipment. IEEE Transactions on Visualization and Computer Graphics, 22(4):1406–1414.

Takayasu, K., Yoshida, K., Mishima, T., Watanabe, M., Matsuda, T., and Kinoshita, H. (2019). Upper body position analysis of different experience level surgeons during laparoscopic suturing maneuvers using optical motion capture. The American Journal of Surgery, 217(1):12–16.

Waltemate, T., Gall, D., Roth, D., Botsch, M., and Latoschik, M. E. (2018). The impact of avatar personalization and immersion on virtual body ownership, presence, and emotional response. IEEE Transactions on Visualization and Computer Graphics, 24(4):1643–1652.

Yuan, Y. and Steed, A. (2010). Is the rubber hand illusion induced by immersive virtual reality? In 2010 IEEE Virtual Reality Conference (VR), pages 95–102. IEEE.
