Shared Information-Based Late Fusion for Four Mammogram Views Retrieval using Data-driven Distance Selection

Amira Jouirou (1, a), Abir Baâzaoui (1, b) and Walid Barhoumi (1, 2, c)

1 Université de Tunis El Manar, Institut Supérieur d’Informatique, Research Team on Intelligent Systems in Imaging and Artificial Vision (SIIVA), LR16ES06 Laboratoire de Recherche en Informatique, Modélisation et Traitement de l’Information et de la Connaissance (LIMTIC), 2 Rue Abou Raihane Bayrouni, 2080 Ariana, Tunisia

2 Université de Carthage, Ecole Nationale d’Ingénieurs de Carthage, 45 Rue des Entrepreneurs, 2035 Tunis-Carthage, Tunisia

Keywords: Content-based Mammogram Retrieval, Data-driven Distance Selection, Four Mammogram Views, Random Forest, Shared Information, Late Fusion.

Abstract:

Content-Based Mammogram Retrieval (CBMR) is one of the most effective aids for breast cancer diagnosis, especially when it relies on the fusion of different mammogram views. In this work, an efficient four-view CBMR method is proposed in order to further improve mammogram retrieval performance. The proposed method combines the retrieval results of the four views provided by screening mammography, namely the Medio-Lateral Oblique (MLO) and the Cranio-Caudal (CC) views of the Left (LMLO and LCC) and the Right (RMLO and RCC) breasts. In order to personalize the handling of each query view, a classified mammogram dataset is used to retrieve the mammograms relevant to the query. Indeed, the proposed method takes as input four query views corresponding to the four different views (LMLO, LCC, RMLO and RCC) and displays the mammogram cases most similar to each breast view, using a dynamic data-driven distance selection and the shared information. In particular, we explore the use of random forest machine learning to predict the most appropriate similarity measure for each query view, as well as the late fusion of the four views at the result level, through the shared information concept, for the final retrieval. Based on their clinical cases, the retrieved mammograms can be analyzed to help radiologists make the right decision regarding the four-view mammogram query. Experimental results on the challenging Digital Database for Screening Mammography (DDSM) dataset demonstrate the effectiveness of the proposed four-view CBMR method.

1 INTRODUCTION

Breast cancer is the most common form of cancer among women worldwide, with a high mortality rate. In fact, it remains one of the leading causes of cancer death among women. Nevertheless, thanks to early detection, the number of breast cancer related deaths has been reduced in the last decade (Boudraa et al., 2020). The best tool for early breast cancer detection is mammography, in which typical signatures such as masses and microcalcifications can help in the early diagnosis of this dangerous cancer (Sapate et al., 2020). However, the manual diagnosis of mammographic images is a very difficult task that radiologists frequently perform subjectively. Consequently, the Computer-Aided Diagnosis (CADx) concept was introduced in order to aid radiologists in mammogram interpretation (Arora et al., 2020). Because of the high misclassification rates and the mistrust of experts, the most effective alternative is Content-Based Mammogram Retrieval (CBMR), which has proven its efficiency in identifying mammogram clinical cases (Singh et al., 2018). Indeed, based on visual content, the CBMR approach consists of retrieving, from a given dataset of past cases, the mammograms most similar to a query mammogram (Kiruthika et al., 2019). Since the retrieved images are provided with proven diagnostics, CBMR systems effectively assist clinicians in making an accurate decision regarding the query image. Moreover, in order to improve retrieval performance, modern CBMR systems have evolved from Single View (SV)-CBMR, which uses only one view of the mammogram query (Rayen and Subhashini, 2020), to Multi View (MV)-CBMR, which uses several views of the mammogram query (Liu et al., 2020b).

a https://orcid.org/0000-0001-7931-2389
b https://orcid.org/0000-0001-7206-3842
c https://orcid.org/0000-0003-2123-4992

Jouirou, A., Baâzaoui, A. and Barhoumi, W.
Shared Information-Based Late Fusion for Four Mammogram Views Retrieval using Data-driven Distance Selection.
DOI: 10.5220/0010261701440155
In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021) - Volume 4: VISAPP, pages 144-155
ISBN: 978-989-758-488-6
Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In the related literature, to the best of our knowledge, all proposed MV-CBMR systems have been based on the use of two views, namely the Medio-Lateral Oblique (MLO) and Cranio-Caudal (CC) views of only one breast. However, screening mammography provides two different views for each breast: the LMLO and LCC views, which correspond to the left breast, and the RMLO and RCC views, which are taken from the right breast (Khan et al., 2019). Thus, the fusion of the different mammogram views can significantly improve the performance of CBMR systems. Indeed, two types of fusion can be applied within the framework of MV-CBMR systems: early fusion and late fusion. The main difference between these two classes of fusion methods resides in the position of the fusion procedure within the global retrieval process. For multi-view early fusion methods (Narvaez et al., 2011), the fusion procedure is the first step of the online retrieval phase: it consists of merging the features of the different views into one vector, which is then used to retrieve the relevant mammograms. For multi-view late fusion methods (Dhahbi et al., 2015b; Liu et al., 2011), the fusion procedure is the last step of the online retrieval phase: it consists of merging the results obtained for each view of the breast into the final retrieval. According to reported experimental results, multi-view late fusion methods have shown their superiority over multi-view early fusion ones. In fact, the results of many studies confirm that fusing the respective high-level decisions or results is consistently better than fusing low-level features (Jouirou et al., 2019).
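To make the distinction between the two fusion families concrete, the following Python sketch contrasts them on toy two-view signatures. It is an illustration under stated assumptions, not the method of any cited work: the Euclidean distance, the dictionary layout mapping a case identifier to its (CC, MLO) signatures, and the rank-sum merge rule used for late fusion are all hypothetical choices.

```python
import math

def euclidean(a, b):
    # Plain Euclidean distance between two equal-length signatures.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def early_fusion_rank(query_cc, query_mlo, dataset, k):
    """Early fusion: concatenate the CC and MLO signatures, then rank once."""
    fused_query = query_cc + query_mlo
    scored = sorted(
        (euclidean(fused_query, cc + mlo), case_id)
        for case_id, (cc, mlo) in dataset.items()
    )
    return [case_id for _, case_id in scored[:k]]

def late_fusion_rank(query_cc, query_mlo, dataset, k):
    """Late fusion: rank each view on its own, then merge the two result lists."""
    cc_rank = sorted(dataset, key=lambda c: (euclidean(query_cc, dataset[c][0]), c))
    mlo_rank = sorted(dataset, key=lambda c: (euclidean(query_mlo, dataset[c][1]), c))
    # Hypothetical merge rule: sum of a case's positions in the two per-view rankings.
    position = {c: cc_rank.index(c) + mlo_rank.index(c) for c in dataset}
    return sorted(dataset, key=lambda c: (position[c], c))[:k]

# Toy dataset: case id -> (CC signature, MLO signature).
dataset = {
    "A": ([0.0, 0.0], [0.0, 0.0]),
    "B": ([1.0, 0.0], [5.0, 5.0]),
    "C": ([3.0, 3.0], [1.0, 0.0]),
}
print(early_fusion_rank([0.0, 0.0], [0.0, 0.0], dataset, k=2))  # ['A', 'C']
print(late_fusion_rank([0.0, 0.0], [0.0, 0.0], dataset, k=2))   # ['A', 'B']
```

On this toy data the two strategies disagree on the second result: the concatenated vector favours case C, while merging the per-view rankings favours case B.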

Furthermore, with the intention of improving the final retrieval results, we have demonstrated, in a previous work (Baâzaoui et al., 2020), the effectiveness of using a meta-learner that dynamically defines the most suitable query-dependent similarity measure for each treated mammogram. This amounts to adopting a machine learning model in order to predict the most adequate distance metric for each query image. Compared with traditional medical image retrieval based on static distances (Gao et al., 2020; Rasheed et al., 2020; Wu et al., 2020), this dynamic distance ensures top precision in addition to preserving both semantic and visual similarities (Soudani and Barhoumi, 2019). As mentioned in (Baâzaoui et al., 2020), there are two types of Distance Metric Learning (DML) within the framework of CBMR: unsupervised DML-CBMR methods (Gu et al., 2017) and supervised DML-CBMR methods (Yang et al., 2010; Wei et al., 2017). Unsupervised DML-CBMR methods construct a low-dimensional manifold while preserving the geometric relationships between data points. Supervised DML-CBMR methods exploit class label information in order to identify the dimensions that are most informative for the classes of examples.

In this paper, we propose a new MV-CBMR method based on late fusion. The main contribution of the proposed method resides in the use of the four mammogram views for effective retrieval. In fact, the proposed four-view CBMR method takes as input the four views of a mammogram query and displays the mammograms relevant to each view, along with their clinical cases, in order to assist radiologists in taking the right decision. The relevant mammograms are extracted from a classified mammogram dataset. Indeed, the used dataset is partitioned according to view (LMLO, LCC, RMLO, RCC) and global clinical case (normal, abnormal), in order to subsequently determine the severity class (benign vs. malignant) of each abnormal query mammogram. Therefore, each view of the mammogram query is compared to the sub-dataset that contains mammograms with the same view and the same general clinical case as the query view. Similarly to (Baâzaoui et al., 2020), a dynamic similarity assessment has been adopted in order to retrieve the most similar mammograms for each query mammogram. This dynamic selection of the most appropriate distance, according to the input query view, aims to ensure that the proposed method retrieves the most clinically similar images for each view. Indeed, we explore distance learning via random forest machine learning in order to learn how to predict the adequate distance for each input query according to its visual content. In addition, in order to improve the accuracy rate, an effective late fusion module is introduced. This module is mainly based on the shared information concept, which effectively guides the selection of the mammograms relevant to both views of the same breast, while adopting the same dynamic query-dependent assessment of similarity between two mammograms. Then, according to the retrieved mammograms and their clinical cases, the final decision can be made effectively. Experiments on the challenging DDSM (Digital Database for Screening Mammography) dataset show that the implemented four-view CBMR method can be a valuable aid in breast cancer diagnosis.
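The partitioning of the classified dataset can be sketched in Python as follows. This is a hedged illustration, assuming (hypothetically) that each mammogram is stored as a record (case_id, view, general_case, signature) and that the general clinical case is the string "normal" or "abnormal"; the helper names `partition` and `candidates_for` are illustrative only.

```python
from collections import defaultdict

VIEWS = ("LCC", "LMLO", "RCC", "RMLO")
GENERAL_CASES = ("normal", "abnormal")

def partition(records):
    """Group records (case_id, view, general_case, signature) into sub-datasets
    keyed by (view, general clinical case)."""
    sub_datasets = defaultdict(list)
    for case_id, view, general_case, signature in records:
        if view not in VIEWS or general_case not in GENERAL_CASES:
            raise ValueError(f"unexpected label: {view}/{general_case}")
        sub_datasets[(view, general_case)].append((case_id, signature))
    return sub_datasets

def candidates_for(query_view, query_case, sub_datasets):
    """Only the sub-dataset sharing the query's view and general case is searched."""
    return sub_datasets.get((query_view, query_case), [])

records = [
    ("p1", "LCC", "abnormal", [0.1, 0.4]),
    ("p2", "LCC", "normal", [0.2, 0.3]),
    ("p3", "RMLO", "abnormal", [0.5, 0.1]),
]
subs = partition(records)
print([case_id for case_id, _ in candidates_for("LCC", "abnormal", subs)])  # ['p1']
```

Restricting each query view to a single sub-dataset is also what keeps the search from scanning the whole collection.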

The remainder of this paper is organized as follows. Section 2 describes the proposed method. Experimental results are presented in Section 3, and a summary discussion is provided in Section 4. Finally, a conclusion and some ideas for future work are given in Section 5.

2 PROPOSED METHOD

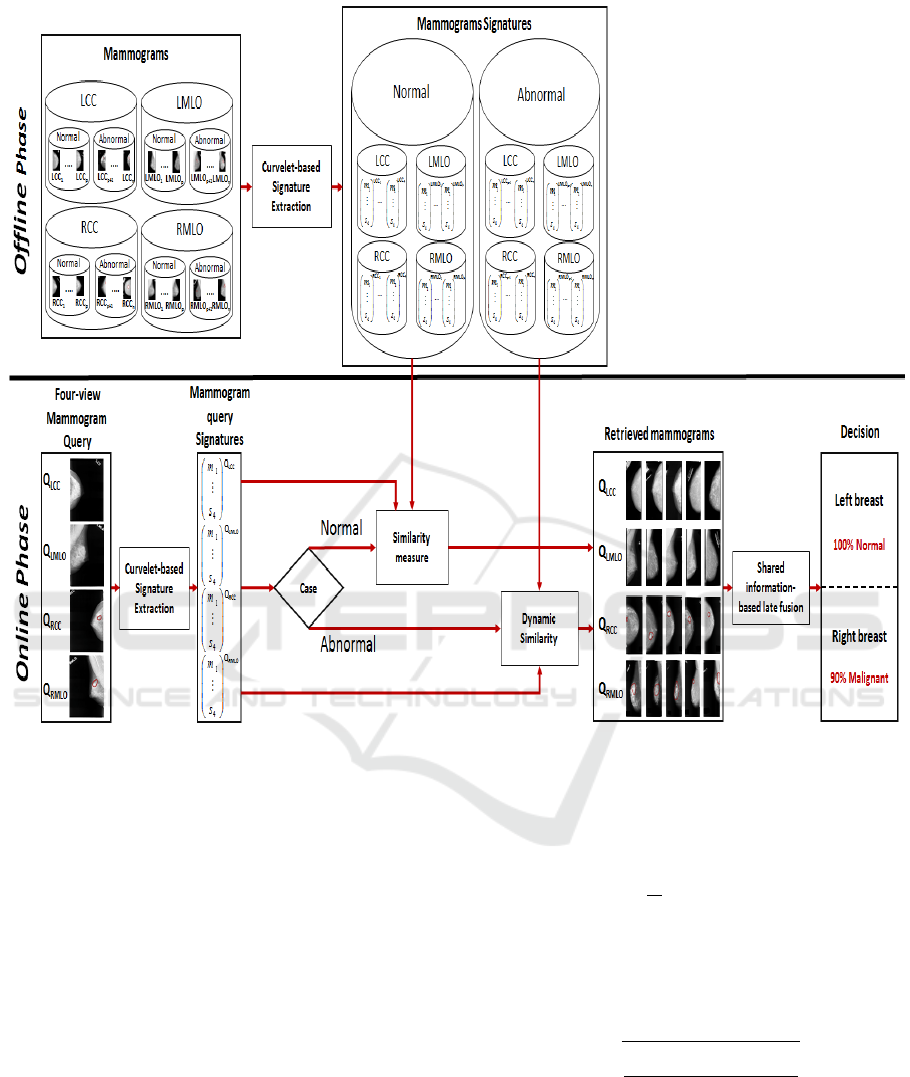

The proposed four-view CBMR method is composed of an offline stage and four online stages, namely curvelet-based signature extraction, similarity measurement, data-driven distance selection and shared information-based late fusion (Figure 1). The offline stage mainly consists of extracting the signatures of the normal and abnormal LCC, LMLO, RCC and RMLO mammograms within the investigated sub-datasets using the curvelet transform and moment theory. This choice is mainly due to the fact that the curvelet transform is one of the most suitable descriptors for extracting discriminative mammogram features. It provides an efficient representation of smooth objects with discontinuities along curves, without the need for a segmentation step (Jouirou et al., 2015). Moreover, curvelet moments are used in order to reduce the size of the signatures without losing information from the original space (Dhahbi et al., 2015a). As in the offline stage, the online signature extraction of the submitted four-view query is performed using curvelet moments. In fact, the online retrieval process is triggered by the user, who submits four mammograms as a query rather than a single query mammogram. These four mammograms represent the four views provided by screening mammography for the same patient. Each query view is annotated with its general clinical case: normal (mammogram without lesion) or abnormal (mammogram with lesion). For the normal case, the Chi-squared distance is applied in order to retrieve the relevant mammograms, whereas for the abnormal case, a dynamic distance is adopted in order to return the relevant mammograms. The most appropriate distance for the studied query mammogram is predicted through random forest machine learning. After retrieving the mammograms most similar to each view of the query, a late fusion process is performed in order to make the final decision. This fusion is based on the shared information between the outputs (retrieved mammograms of the CC and MLO views) and the query views of each breast. The main objective of this step is to provide, for each breast, the relevant mammograms and the final decision on its clinical case. It is worth mentioning that, for abnormal cases, the proposed method suggests the final decision for each breast by providing the percentages of malignancy and benignity.
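The per-view routing described above can be sketched as follows. This is a hedged Python illustration, not the authors' MATLAB implementation: `retrieve_view`, the `distances` mapping and the `predict_distance` callable (a stand-in for the trained random forest) are hypothetical names, and signatures are plain lists of floats. The Chi-squared function follows Eq. (4) of Section 2.1, with non-positive denominators skipped as an added assumption.

```python
import math

def chi_squared(q, r):
    # Eq. (4); terms whose denominator is not positive are skipped
    # (an assumption made here to keep the square root well defined).
    return math.sqrt(sum((a - b) ** 2 / (a + b) for a, b in zip(q, r) if a + b > 0))

def retrieve_view(query_sig, query_case, sub_dataset, distances, predict_distance, k=10):
    """Retrieve the k mammograms closest to one query view.

    sub_dataset: list of (case_id, signature) sharing the query's view and case.
    distances: mapping from distance name to a callable d(q, r).
    predict_distance: hypothetical callable returning a distance name for a signature.
    """
    if query_case == "normal":
        name = "Chi-squared"  # fixed distance for normal query views
    else:
        name = predict_distance(query_sig)  # data-driven choice for abnormal views
    dist = distances[name]
    ranked = sorted(sub_dataset, key=lambda item: dist(query_sig, item[1]))
    return name, [case_id for case_id, _ in ranked[:k]]

distances = {
    "Chi-squared": chi_squared,
    "Manhattan": lambda q, r: sum(abs(a - b) for a, b in zip(q, r)),
}
subset = [("x1", [1.0, 1.0]), ("x2", [2.0, 4.0]), ("x3", [1.0, 2.0])]
always_manhattan = lambda sig: "Manhattan"  # stand-in for the trained predictor
print(retrieve_view([1.0, 1.5], "abnormal", subset, distances, always_manhattan, k=2))
# ('Manhattan', ['x1', 'x3'])
```

Because Python's `sorted` is stable, candidates at equal distance keep their sub-dataset order, which makes the output reproducible.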

2.1 Data-driven Distance Selection

Since no single similarity measure provides the best results for all queries, using the appropriate similarity measure for each query is very promising (Baâzaoui et al., 2020; Soudani and Barhoumi, 2019). Likewise, for the same query, the appropriate measure is not necessarily the same for its different views. Thereby, applying the most adequate similarity measure to each query view improves retrieval performance. The first step toward determining the query-dependent distance metric is to consider various similarity measures. The used measures can be grouped into standard similarity measures and statistical similarity measures. More precisely, the investigated standard similarity measures are the Euclidean distance (1), the Manhattan distance (2) and the Canberra distance (3), whereas the studied statistical similarity measures are the Chi-squared distance (4) and the Pearson Correlation Coefficient distance (5).

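$$\mathrm{Euc}(q,r) = \sqrt{\sum_{i=1}^{16} \left(S_i(q) - S_i(r)\right)^2}, \tag{1}$$

$$\mathrm{Man}(q,r) = \sum_{i=1}^{16} \left|S_i(q) - S_i(r)\right|, \tag{2}$$

$$\mathrm{Canb}(q,r) = \sum_{i=1}^{16} \frac{\left|S_i(q) - S_i(r)\right|}{\left|S_i(q)\right| + \left|S_i(r)\right|}, \tag{3}$$

$$\chi^2(q,r) = \sqrt{\sum_{i=1}^{16} \frac{\left(S_i(q) - S_i(r)\right)^2}{S_i(q) + S_i(r)}}, \tag{4}$$

$$\mathrm{PCC}(q,r) = \frac{\mathrm{COV}\left(S(q), S(r)\right)}{\sigma\left(S(q)\right)\,\sigma\left(S(r)\right)}, \tag{5}$$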

where COV is the covariance of the signatures of the two compared images q and r (S(q) being the signature of the query mammogram and S(r) that of a mammogram belonging to the corresponding sub-dataset), each signature being composed of 16 attributes $S_i$, and σ is the standard deviation.
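For readers who want to experiment, Equations (1) to (5) translate directly into Python. This is a minimal sketch on plain lists (the functions accept signatures of any length, not only 16 attributes). Two points are assumptions not stated in the text: zero or non-positive denominators in Eqs. (3) and (4) are skipped, and since Eq. (5) is a correlation (larger means more similar), a hypothetical `pcc_distance = 1 - PCC` is used when a dissimilarity is needed for ranking.

```python
import math
from statistics import mean, pstdev

def euclidean(q, r):          # Eq. (1)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(q, r)))

def manhattan(q, r):          # Eq. (2)
    return sum(abs(a - b) for a, b in zip(q, r))

def canberra(q, r):           # Eq. (3); attributes with |a| + |b| == 0 are skipped
    return sum(abs(a - b) / (abs(a) + abs(b)) for a, b in zip(q, r) if abs(a) + abs(b) > 0)

def chi_squared(q, r):        # Eq. (4); attributes with a + b <= 0 are skipped
    return math.sqrt(sum((a - b) ** 2 / (a + b) for a, b in zip(q, r) if a + b > 0))

def pcc(q, r):                # Eq. (5): covariance over the product of standard deviations
    mq, mr = mean(q), mean(r)
    cov = mean((a - mq) * (b - mr) for a, b in zip(q, r))
    return cov / (pstdev(q) * pstdev(r))  # undefined for constant signatures

def pcc_distance(q, r):       # hypothetical dissimilarity derived from Eq. (5)
    return 1.0 - pcc(q, r)

q, r = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
print(manhattan(q, r), round(canberra(q, r), 6), round(chi_squared(q, r) ** 2, 6))  # 6.0 1.0 2.0
```

Population covariance and population standard deviations are used together, so their normalization factors cancel and `pcc` equals the usual sample Pearson coefficient.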

The query-dependent distance is predicted through random forest machine learning. Thus, for each view of the query, the most appropriate similarity measure is predicted in order to retrieve the relevant mammograms.

VISAPP 2021 - 16th International Conference on Computer Vision Theory and Applications


Figure 1: Flowchart of the proposed four-view CBMR method.

The choice of the random forest as a machine learning tool is mainly due to the fact that it has been successfully used for dynamic distance selection in the mammogram case (Baâzaoui et al., 2020). Moreover, it is characterised by its simplicity and its multi-class nature. It has also proven its superiority over other supervised classifiers, especially for multi-class classification problems. The used random forest algorithm contains two main phases, namely the learning phase and the prediction phase. In the learning phase, a set of features $F = \{S(1), \dots, S(m)\}$ corresponding to the signatures of the m training mammograms is processed as input along with their classes $C = \{c(1), \dots, c(m)\}$, which correspond to the adequate distance for each signature; after selecting the number N of trees, each classification or regression tree $T_n$ is trained on $(F_n, C_n)$, $n \in \{1, \dots, N\}$. The appropriate class (i.e. the best-performing distance) for the query signature S(q) is thereafter predicted by averaging the predictions of all the individual regression trees on S(q) (6), or by taking the majority vote in the case of classification trees.

$$T' = \frac{1}{N} \sum_{n=1}^{N} T_n\left(S(q)\right). \tag{6}$$

In addition, to estimate the uncertainty of the prediction, the standard deviation of the predictions of all the individual regression trees on S(q) is computed (7).

$$\sigma = \sqrt{\frac{\sum_{n=1}^{N} \left(T_n\left(S(q)\right) - T'\right)^2}{N - 1}}. \tag{7}$$

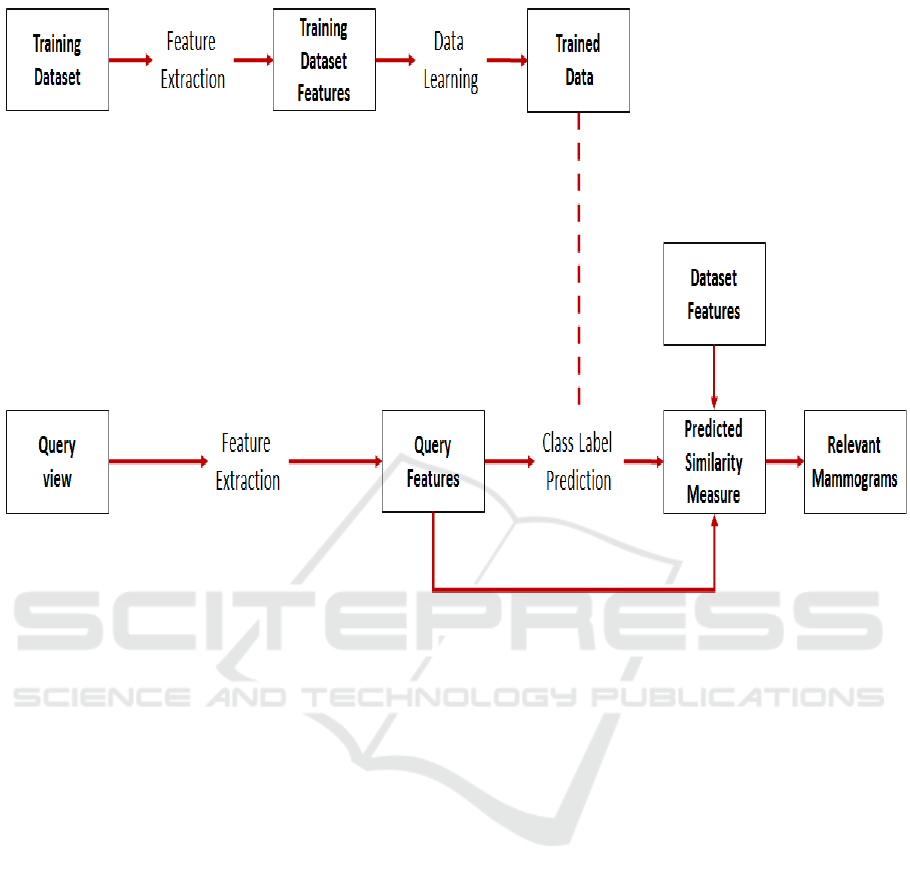

An overview of the proposed dynamic similarity model for each input mammogram query view is given in Figure 2.


Figure 2: Overview of the proposed dynamic similarity model for each mammogram query view, including offline data learning and online similarity measure prediction.

2.2 Shared Information-based Late Fusion

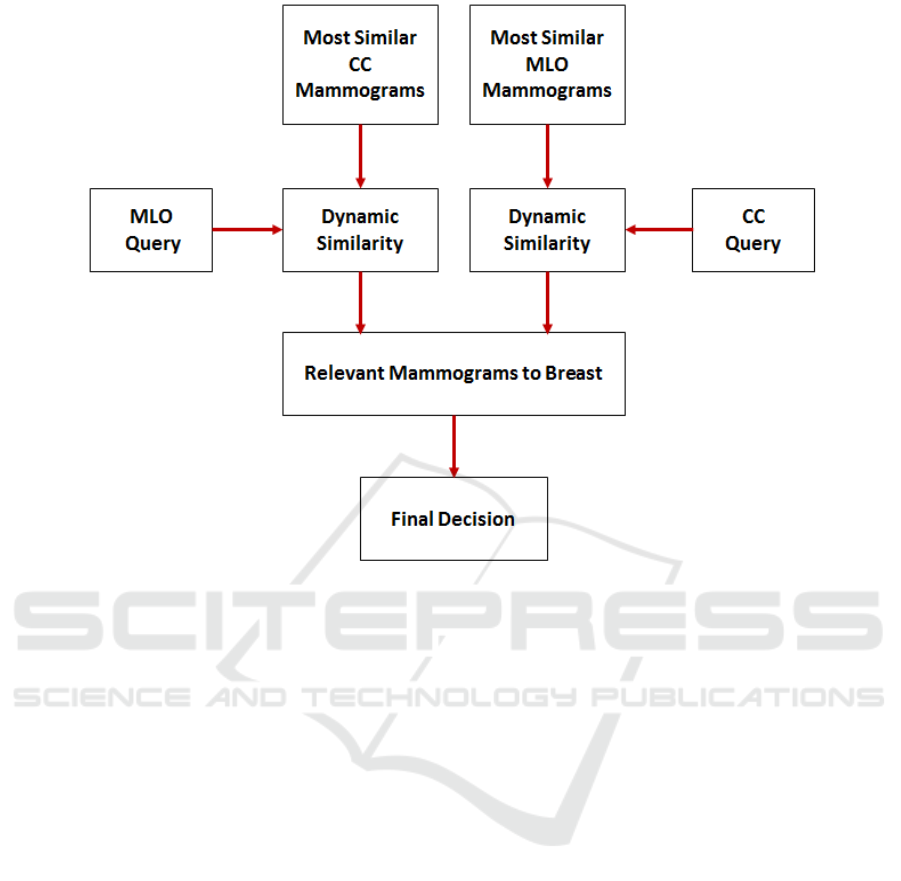

In order to improve the final results, a late fusion

module is adopted herein (Figure 3). The proposed

module is based on the shared information between

the both views (CC and MLO) of the same breast.

In practice, the shared information concept has been

investigated in various medical applications, such as

the follow-up, the outcome analysis, the planning

and the decision-making. Within the medical image

analysis field, the concept of shared information has

been successfully investigated for the image segmen-

tation (Sbei et al., 2020), the image feature extraction

(Martens et al., 2020) as well as for the image feature

selection (Hao et al., 2020). In this study, we explore

this concept of shared information (Krishnasamy and

Paramesran, 2019) for making the final decision of the

clinical case of each studied mammogram. In fact, the

choice of the shared information concept in the pro-

posed late fusion module is mainly motivated by the

complementarity of the both views of the breast, what

confirms the relevance of the results of the one view

to the other one, since the two views illustrate the

same breast. Moreover, the shared information con-

cept has proved its efficiency in other medical image

analysis works (Liu et al., 2020a), although they have

not been investigated within the multi-view mammo-

gram framework, as best as we know. In our case,

after retrieving, for each breast, the sets Ξ

MLO

and

Ξ

CC

of the k most similar mammograms for the MLO

and the CC query views, respectively, we explore the

shared information in order to keep, among the mam-

mograms within the set Ξ

MLO

(resp. Ξ

CC

), only the

set Ξ

0

MLO

(resp. Ξ

0

CC

) of the k/2 ones that are strongly

similar to the complementary CC (resp. MLO) view.

This is performed while adopting the already identi-

fied dynamic distances for the MLO and the CC query

views. Then, the final decision on the clinical case of

the input query breast is defined according to those of

the mammograms composing the two sets Ξ

0

MLO

and

Ξ

0

CC

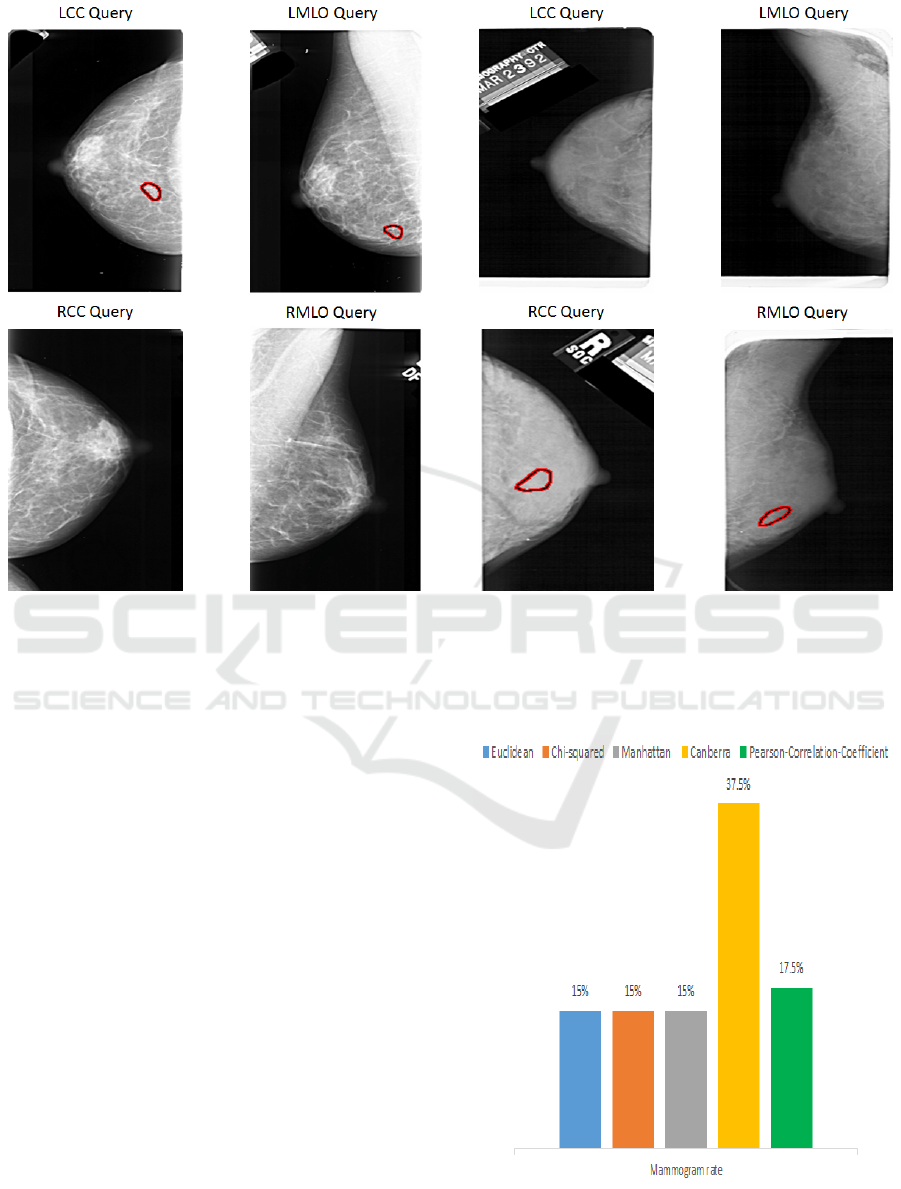

. Figure 4 illustrates an example of the retrieved

mammograms, relatively to a malignant right breast

query, which compose the sets Ξ

CC

and Ξ

MLO

before

the shared information-based late fusion, as well as

the sets Ξ

0

CC

and Ξ

0

MLO

after the late fusion process.

It is clear that the shared information allows to select

the more relevant mammograms, in terms of clinical

cases, for each view of the query.
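The filtering and decision steps of the late fusion module can be sketched in Python. This is a hedged illustration under explicit assumptions: each retrieved item is a hypothetical tuple (case_id, clinical_case, signature); "strongly similar to the complementary view" is interpreted as being closest to the complementary query view's signature, measured with that view's already predicted distance; and the final percentages are taken as the share of each clinical case among the k kept mammograms. With k = 10, five mammograms are kept per view, so the percentages move in 10% steps, as in Figures 6 to 8.

```python
from collections import Counter

def shared_information_filter(retrieved, complementary_query, distance, keep):
    """Keep the `keep` retrieved mammograms closest to the complementary query view."""
    ranked = sorted(retrieved, key=lambda item: distance(complementary_query, item[2]))
    return ranked[:keep]

def late_fusion_decision(xi_mlo, xi_cc, query_mlo, query_cc, dist_mlo, dist_cc, k=10):
    # Xi'_MLO: MLO results re-ranked against the CC query (assumed: with the CC distance).
    kept_mlo = shared_information_filter(xi_mlo, query_cc, dist_cc, k // 2)
    # Xi'_CC: CC results re-ranked against the MLO query (assumed: with the MLO distance).
    kept_cc = shared_information_filter(xi_cc, query_mlo, dist_mlo, k // 2)
    kept = kept_mlo + kept_cc
    counts = Counter(case for _, case, _ in kept)
    percentages = {case: 100.0 * n / len(kept) for case, n in counts.items()}
    return kept, percentages

manhattan = lambda a, b: sum(abs(x - y) for x, y in zip(a, b))
xi_mlo = [("m1", "malignant", [0.5]), ("m2", "benign", [3.0]),
          ("m3", "malignant", [1.0]), ("m4", "benign", [9.0])]
xi_cc = [("c1", "malignant", [10.0]), ("c2", "benign", [11.0]),
         ("c3", "benign", [2.0]), ("c4", "benign", [12.5])]
kept, pct = late_fusion_decision(xi_mlo, xi_cc, [10.0], [0.0], manhattan, manhattan, k=4)
print([item[0] for item in kept], pct)
# ['m1', 'm3', 'c1', 'c2'] {'malignant': 75.0, 'benign': 25.0}
```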


Figure 3: Overview of the proposed late fusion module, which is applied to each breast separately.

3 EXPERIMENTAL RESULTS

In order to validate the effectiveness of the proposed four-view CBMR method, extensive experiments have been conducted on the challenging classified DDSM dataset. In the following, we start with the description of the used mammogram dataset and the validation protocol. Then, we detail the implementation of the proposed four-view CBMR method. Finally, we discuss the qualitative and quantitative evaluations of the proposed method, while comparing it against the most relevant state-of-the-art methods.

3.1 Data and Validation Protocol

The experiments have been performed on mammo-

grams from the DDSM dataset. This dataset, which

is composed of 9,852 different mammograms, is

the largest publicly available resource for mammo-

graphic image analysis research (Ba

ˆ

azaoui et al.,

2020). The construction of this challenging dataset

was performed within the framework of the DDSM

project (Heath et al., 1998), which comprised the

Massachusetts General Hospital, the University of

South Florida and the Sandia National Laboratories.

Mammograms belonging to the used dataset have

been classified according to their views into four sub-

datasets, which are LCC, LMLO, RCC and RMLO

datasets. Each of these sub-datasets contains 2,463

mammograms. Moreover, the produced mammo-

grams are classified according to their clinical cases

into normal mammograms and abnormal ones. The

total number of abnormal mammograms is 3,664,

whereas the total number of normal mammograms is

6,188. Besides, abnormal mammograms are classi-

fied in their turn into malignant mammograms and

benign ones. Indeed, there are 1,768 benign mam-

mograms and 1,896 malignant mammograms. It is

annotated that the numbers of normal, benign and ma-

lignant mammograms are not the same in the four

sub-datasets. In fact, the numbers of normal mam-

mograms are 1,504, 1,481, 1,623 and 1,580 in LCC,

LMLO, RCC and RMLO datasets, respectively. The

numbers of benign mammograms are 472, 480, 398

and 418 in LCC, LMLO, RCC and RMLO datasets,

respectively. The numbers of malignant mammo-

grams are 487, 502, 442 and 465 in LCC, LMLO,

RCC and RMLO datasets, respectively. An example

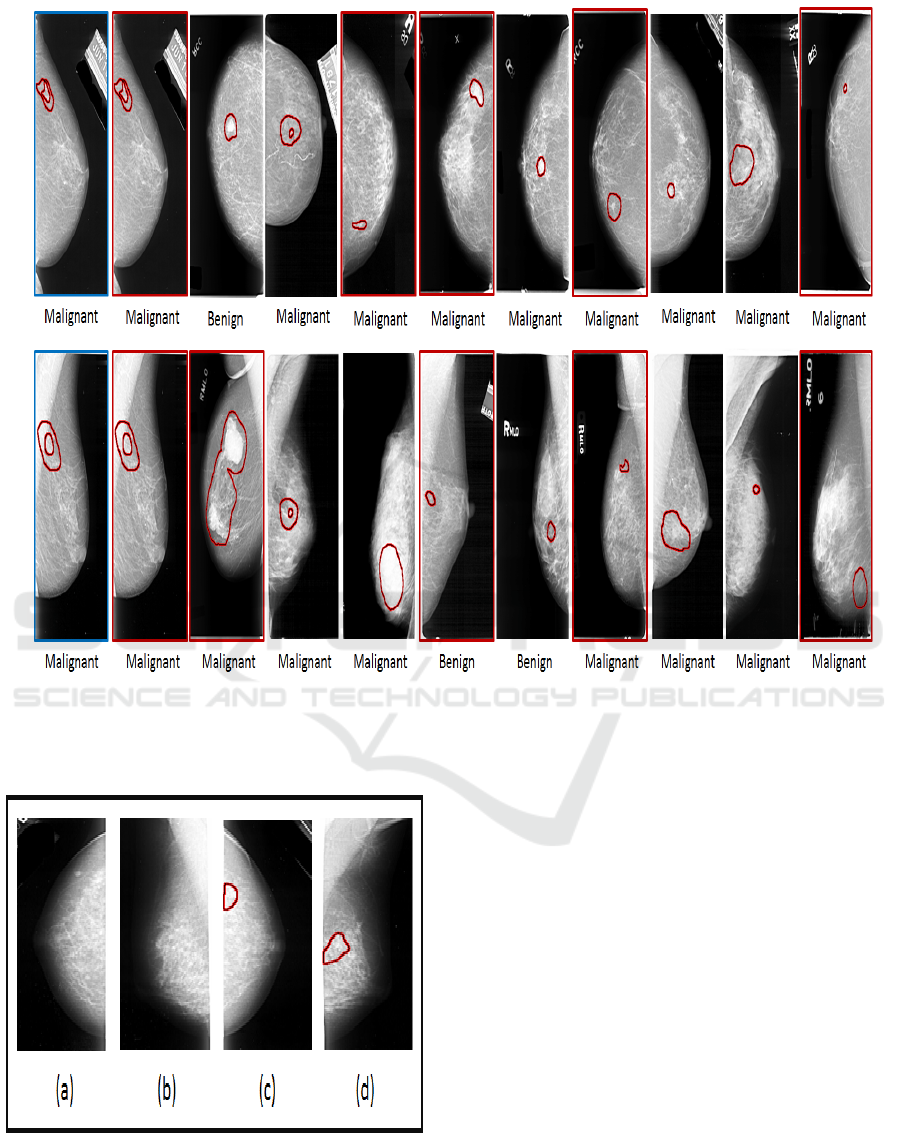

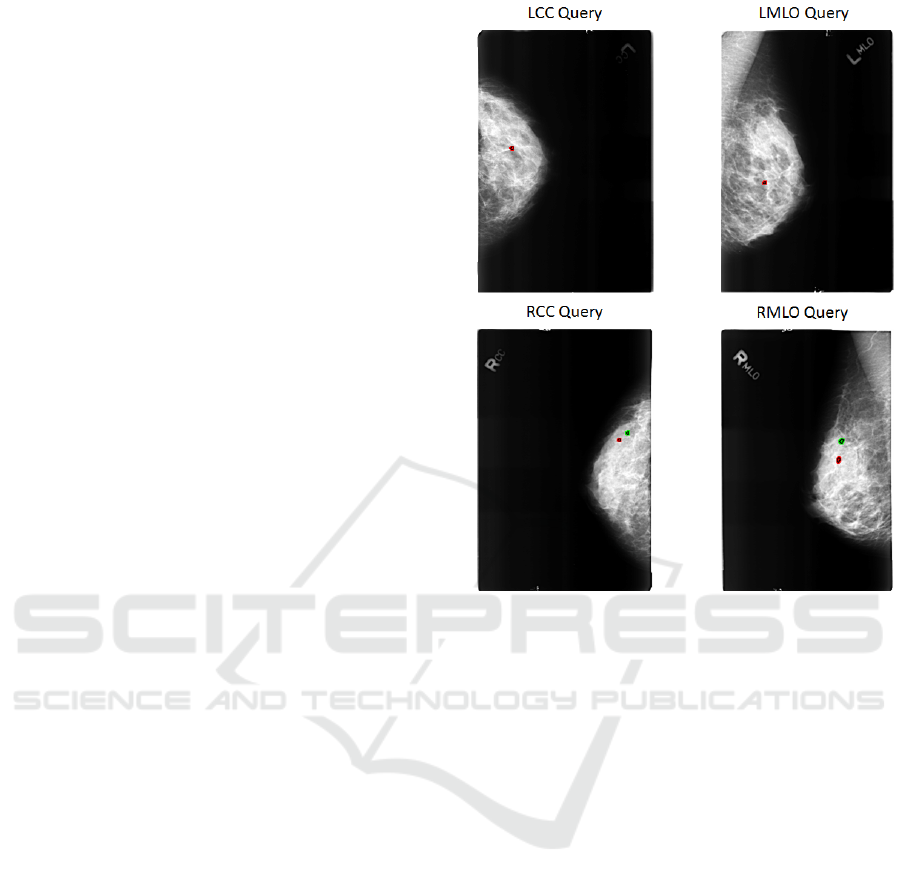

of four-view mammograms is illustrated in Figure 5.

Figure 4: Example of the shared information-based late fusion for a malignant right breast. The first row illustrates the RCC query (framed in blue) with its 10 (i.e. k = 10) most similar RCC mammograms ($\Xi_{CC}$). The second row shows the RMLO query (framed in blue) with its 10 most similar RMLO mammograms ($\Xi_{MLO}$). The kept mammograms ($\Xi'_{CC}$ and $\Xi'_{MLO}$) are framed in red in both cases.

Figure 5: Example of four-view mammograms used in the experiments: normal LCC mammogram (a), normal LMLO mammogram (b), malignant RCC mammogram (c), malignant RMLO mammogram (d).

Furthermore, in order to quantitatively evaluate the proposed four-view CBMR method, the most relevant evaluation metrics have been considered in this work. The accuracy, FP and FN rates were specifically chosen because they are the most widely used metrics within the CBMR context, given their ability to effectively measure the precision of CBMR systems. It is worth mentioning that the proposed four-view CBMR method has been implemented using MATLAB R2013a, and all the tests were performed on a Dell PC running a 64-bit Windows operating system, with an Intel(R) Core(TM) i7-6500U CPU @ 2.50 GHz (2.60 GHz) processor and 8 GB of RAM.
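As a quick consistency check of the dataset statistics reported in Section 3.1, the following Python snippet sums the per-view counts. It uses only the numbers given in the text; the dictionary layout (normal, benign, malignant) is an illustrative choice.

```python
# Per-view counts reported for the DDSM sub-datasets: (normal, benign, malignant).
COUNTS = {
    "LCC": (1504, 472, 487),
    "LMLO": (1481, 480, 502),
    "RCC": (1623, 398, 442),
    "RMLO": (1580, 418, 465),
}

per_view_totals = {view: sum(c) for view, c in COUNTS.items()}
normal = sum(c[0] for c in COUNTS.values())
benign = sum(c[1] for c in COUNTS.values())
malignant = sum(c[2] for c in COUNTS.values())

print(per_view_totals)  # {'LCC': 2463, 'LMLO': 2463, 'RCC': 2463, 'RMLO': 2463}
print(normal, benign, malignant, benign + malignant, normal + benign + malignant)
# 6188 1768 1896 3664 9852
```

The per-view totals match the 2,463 mammograms per sub-dataset, and the class totals match the 6,188 normal, 3,664 abnormal and 9,852 overall mammograms.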

3.2 Qualitative Evaluation

To qualitatively evaluate the implemented method, various tests have been performed. Examples of four-view mammogram queries with their final clinical case decisions are shown in Figure 6 and Figure 7. The four-view mammogram query presented in Figure 6 has two abnormal breasts, and the clinical case of both breasts is benign. The results reported for this query are very encouraging: the accuracy rate is 90% for the left breast and 100% for the right one. Besides, the mammogram query of Figure 7 has one abnormal breast and one normal breast. The left breast contains a malignant lesion, while the right breast has no lesion. The final decision for this query is correct: the suggested results are 90% malignant for the left breast and 100% normal for the right one. On the other hand, the worst of the tested queries is shown in Figure 8, where the clinical case of the presented four-view mammogram query is normal for the left breast and benign for the right one. The accuracy rate provided for the left breast is 100%, while that provided for the right breast is only 60%. This low rate can be explained by the irrelevant results separately retrieved for the RMLO and RCC views of the query. We also observe that, without fusion, the accuracy rate of the right breast drops to 40%. This is mainly due to the RCC view of the query, which provides an accuracy rate of only 20%. We thus conclude that, although the accuracy rate of the right breast is weak, it has been improved significantly, from 40% to 60%, through the proposed shared information-based late fusion.
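The per-breast accuracy figures can be reproduced with the short Python sketch below. It rests on an interpretation that the text does not state explicitly: the accuracy of a breast is assumed to be the share of the k = 10 kept mammograms whose clinical case matches the ground truth, so that the 60% benign / 40% malignant decision of Figure 8 is read as 6 benign and 4 malignant mammograms. The function name `breast_accuracy` is hypothetical.

```python
def breast_accuracy(kept_cases, true_case):
    """Percentage of kept mammograms whose clinical case matches the ground truth."""
    if not kept_cases:
        raise ValueError("no retrieved mammograms")
    hits = sum(1 for case in kept_cases if case == true_case)
    return 100.0 * hits / len(kept_cases)

# Figure 8, right breast (assumed reading): 6 benign and 4 malignant kept mammograms,
# ground truth benign.
right_breast = ["benign"] * 6 + ["malignant"] * 4
print(breast_accuracy(right_breast, "benign"))  # 60.0
```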

3.3 Quantitative Evaluation

In order to quantitatively evaluate the suggested four-view CBMR method, we first describe the experimental process before discussing the retrieval performance of the method. Then, we compare the proposed method with some relevant state-of-the-art methods.

3.3.1 Experimental Process

To perform the learning step, a subset of mammograms from the DDSM dataset was tested using five different distances (Euclidean, Chi-squared, Manhattan, Canberra and Pearson Correlation Coefficient). Table 1 shows a sample of the ground truth used for distance metric learning. The resulting distribution of the training mammograms into five classes, corresponding to the used distance metrics, is detailed in Figure 9. The number k of retrieved images is set to 10.
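The text does not detail how the adequate distance of each training mammogram was decided. One plausible labeling rule, given here purely as a hypothetical Python sketch, is to keep the distance whose top-k results contain the most mammograms of the query's clinical case, breaking ties by the order in which the distances are listed. The record layout (case_id, clinical_case, signature) and the function name are assumptions.

```python
import math

def label_best_distance(query_sig, query_case, sub_dataset, distances, k=10):
    """Return the name of the distance whose top-k results contain the most
    mammograms with the same clinical case as the query (ties: first listed)."""
    best_name, best_hits = None, -1
    for name, dist in distances.items():
        ranked = sorted(sub_dataset, key=lambda item: dist(query_sig, item[2]))
        hits = sum(1 for _, case, _ in ranked[:k] if case == query_case)
        if hits > best_hits:
            best_name, best_hits = name, hits
    return best_name

distances = {
    "Euclidean": lambda a, b: math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b))),
    "Manhattan": lambda a, b: sum(abs(x - y) for x, y in zip(a, b)),
}
toy = [("A", "benign", [1.5, 1.5]), ("B", "malignant", [2.8, 0.0])]
print(label_best_distance([0.0, 0.0], "malignant", toy, distances, k=1))  # Manhattan
```

Here Euclidean ranks the benign case A first (2.12 vs 2.80), while Manhattan ranks the malignant case B first (2.8 vs 3.0), so a malignant query is labeled with Manhattan.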

Figure 6: Example of a benign four-view mammogram query with its final decision. The first row shows the complementary views of the left breast, with a final decision of 90% benign and 10% malignant. The second row illustrates the complementary views of the right breast, with a final decision of 100% benign and 0% malignant.

3.3.2 Retrieval Performance

In order to assess the proposed method for CBMR, we have computed the False Positive (FP), the False Negative (FN) and the accuracy rates in all cases (normal, abnormal and overall). The results reported in Table 2 demonstrate that the FP and FN rates obtained by the proposed four-view CBMR method in these cases are quite low and that its accuracy rate is quite high, which confirms the efficiency of the suggested method. Moreover, in order to show the effectiveness of the proposed late fusion module, the performance of the proposed four-view CBMR method has been compared with and without the late fusion module. Table 3 and Table 4 clearly show that the proposed late fusion module improves the final results. In fact, the reported results show that the accuracy increases markedly, whereas the FP and FN rates decrease considerably in all cases (overall, abnormal, benign and malignant). This is ensured by the shared information of the complementary views of the breast, which minimizes the error rate by eliminating the mammograms of the studied dataset that are furthest from the query mammogram.

Figure 7: Example of a malignant four-view mammogram query with its final decision. The first row shows the complementary views of the left breast, with a final decision of 10% benign and 90% malignant. The second row illustrates the complementary views of the right breast, with a final decision of 100% normal.

3.3.3 Comparison with Relevant State-of-the-Art Methods

In order to confirm the effectiveness of the proposed four-view CBMR method, we compare it with some relevant MV-CBMR methods. In fact, one multi-view early fusion method and one multi-view late fusion method have been investigated. The early fusion method is based on merging the features of both views of the breast into one vector that is thereafter used for the retrieval. The score-based late fusion method is based on the fusion of the results of the CC view and the MLO view of the same breast. According to Table 5, the proposed late fusion method outperforms the compared state-of-the-art methods (Narvaez et al., 2011; Dhahbi et al., 2015b). The results show that the highest accuracy rate (89%) and the lowest FP (12.86%) and FN (10%) rates have been obtained by the proposed CBMR method based on late fusion. This improvement can be explained by three main insights: (1) the compared MV-CBMR methods are based on two views, unlike the proposed method, which is based on four views, (2) the use of the adequate similarity distance for each view of the query, which considerably increases the retrieval performance, and (3) the adoption of the shared information of complementary mammogram views in the late fusion module, which further enhances the final results.

Figure 8: The worst four-view mammogram query with its final decision. The first row shows the complementary views of the left breast, with a final decision of 100% normal. The second row illustrates the complementary views of the right breast, with a final decision of 60% benign and 40% malignant.

Figure 9: Distribution of the training mammograms according to the used distance metrics.

Table 1: A sample of the proposed ground truth that has been used for distance metric learning over the DDSM dataset.

| View | Clinical case | Adequate distance |
|---|---|---|
| LCC | Benign | Canberra |
| LCC | Benign | Manhattan |
| LCC | Malignant | Pearson-Correlation-Coefficient |
| LCC | Malignant | Manhattan |
| LMLO | Benign | Canberra |
| LMLO | Benign | Chi-squared |
| LMLO | Malignant | Euclidean |
| LMLO | Malignant | Pearson-Correlation-Coefficient |
| RCC | Benign | Canberra |
| RCC | Benign | Pearson-Correlation-Coefficient |
| RCC | Malignant | Euclidean |
| RCC | Malignant | Chi-squared |
| RMLO | Benign | Pearson-Correlation-Coefficient |
| RMLO | Benign | Chi-squared |
| RMLO | Malignant | Pearson-Correlation-Coefficient |
| RMLO | Malignant | Canberra |

Table 2: Quantitative evaluation of the proposed four-view CBMR method over the DDSM dataset.

| Metric | Overall | Normal | Abnormal |
|---|---|---|---|
| Accuracy | 92% | 100% | 89% |
| FP | 6.92% | 0% | 12.86% |
| FN | 6% | 0% | 10% |

4 DISCUSSION

Because relying on a single view within the CBMR framework does not always lead to correct decisions, it does not meet radiologists' needs for supervision and interpretation; integrating the multi-view concept has therefore become a necessity in recent years. In this paper, we have proposed an effective MV-CBMR method based on the four mammogram views. Indeed, the MLO and CC views of both breasts (left and right) of one patient are used as input to the proposed method, and the relevant mammograms are displayed, for each of the four views, together with their clinical cases in order to support the right decision. The main contributions of the proposed four-view CBMR method are: (1) the use of the classified dataset, which allows each view of the query to be personalized, (2) the adoption of a dynamic distance, which improves the accuracy of the retrieved mammograms for each view of the query, and (3) the proposed late fusion module, which increases the decision precision rate. In fact, the classified dataset makes it possible to search for relevant mammograms for each view of the query over a sub-dataset that contains mammograms with the same view and general clinical case as the query view. This avoids searching the whole dataset, which reduces the time cost. Moreover, the dynamic data-driven distance selection guarantees that the most adequate similarity measure, namely the one providing the highest precision for each view of the query, is adopted. The similarity distance is predicted by random forest machine learning, which has proven its efficiency in the context of mammogram classification. Finally, the proposed late fusion module, which is based on the shared information, represents the most substantial contribution of the proposed MV-CBMR method. In fact, the shared information between the complementary views of the same breast is exploited to select the mammograms most similar to each breast from those retrieved for the CC view and the MLO view of that breast. Experimental results on the challenging DDSM dataset show that combining the random forest algorithm, to predict the most appropriate distance for each view of the query, with the shared information, to fuse the results of both views of the same breast, remarkably improves the accuracy of the retrieved mammograms, which reaches 92% in the overall case. Likewise, the FP and FN rates drop to 6.92% and 6%, respectively. Besides, the proposed four-view late fusion method outperforms existing multi-view late fusion methods, with gains of approximately 26%, 34% and 15% in the accuracy, the FP rate and the FN rate, respectively.
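The exact formulation of the shared information is not given in this section. The sketch below is a minimal illustration under stated assumptions: each retrieval hit is a hypothetical `(case_id, clinical_case)` pair, so that CC and MLO images of the same DDSM case can be matched. It keeps the cases retrieved for both views of the same breast, orders them by summed rank, and takes a majority vote over their clinical cases as the suggested decision. The summed-rank ordering, the majority vote, and the fallback to the concatenated lists when no case is shared are all assumptions, not the authors' rules.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

# Hypothetical retrieval hit: (case_id, clinical_case), best match first.
Hit = Tuple[str, str]


def shared_cases(cc_hits: List[Hit], mlo_hits: List[Hit]) -> List[Hit]:
    """Cases retrieved for BOTH views of one breast, ordered by summed rank."""
    cc_rank: Dict[str, int] = {}
    for rank, (case_id, _) in enumerate(cc_hits):
        cc_rank.setdefault(case_id, rank)  # keep the best (first) CC rank
    fused: List[Tuple[int, str, str]] = []
    seen = set()
    for rank, (case_id, label) in enumerate(mlo_hits):
        if case_id in cc_rank and case_id not in seen:
            seen.add(case_id)
            fused.append((cc_rank[case_id] + rank, case_id, label))
    fused.sort()  # smaller summed rank = more similar in both views
    return [(case_id, label) for _, case_id, label in fused]


def breast_decision(cc_hits: List[Hit], mlo_hits: List[Hit]) -> Optional[str]:
    """Majority clinical case among the shared cases (assumed decision rule)."""
    fused = shared_cases(cc_hits, mlo_hits) or cc_hits + mlo_hits  # assumed fallback
    if not fused:
        return None
    # Counter.most_common breaks ties by first occurrence, i.e. by fused rank.
    return Counter(label for _, label in fused).most_common(1)[0][0]
```

In a four-view setting, `breast_decision` would be called twice: once with the LCC and LMLO hit lists and once with the RCC and RMLO hit lists, giving one suggested clinical case per breast.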


Table 3: Comparison of the performances of the proposed four-view CBMR method with and without the use of the late fusion over the DDSM dataset.

| Metric | Overall (without) | Overall (with) | Normal (without) | Normal (with) | Abnormal (without) | Abnormal (with) |
|---|---|---|---|---|---|---|
| Accuracy | 88% | 92% | 100% | 100% | 83% | 89% |
| FP | 11.23% | 6.92% | 0% | 0% | 20.86% | 12.86% |
| FN | 8.07% | 6% | 0% | 0% | 13.45% | 10% |

Table 4: Comparison of the performances of the proposed four-view CBMR method with and without the use of the late fusion in the benign and malignant cases over the DDSM dataset.

| Metric | Benign (without) | Benign (with) | Malignant (without) | Malignant (with) |
|---|---|---|---|---|
| Accuracy | 79.14% | 87.14% | 86.55% | 90% |

Table 5: Comparison of the performance of the proposed method against two relevant MV-CBMR methods from the state-of-the-art.

| Abnormal case (benign vs. malignant) | Early fusion method (Narvaez et al., 2011) | Score-based late fusion method (Dhahbi et al., 2015b) | Proposed shared information-based late fusion method |
|---|---|---|---|
| Accuracy | 56.4% | 63.2% | 89% |
| FP | 48.4% | 46.33% | 12.86% |
| FN | 38.4% | 25.6% | 10% |

5 CONCLUSION

In this paper, we proposed a four-view CBMR method based on the use of a classified dataset. Indeed, the proposed method takes a four-view query mammogram as input and jointly retrieves the most similar mammograms for each query view in order to support radiologists in making the right decision. The retrieved mammograms for each view of the query are selected from its relevant sub-dataset, which contains mammograms with the same view and general clinical case. Moreover, dynamic similarity assessment was effectively integrated within the proposed four-view CBMR method. This data-driven distance selection is mainly based on random forest machine learning, which dynamically predicts the similarity measure that should be applied to each view of the query. The appropriate similarity can be either a standard similarity measure or a statistical similarity measure. Furthermore, in order to increase the rate of correct decisions, a late fusion module has been proposed. This module is principally based on the shared information between both views of the same breast. It consists of retrieving the most similar mammograms to each breast with their clinical case (normal, malignant or benign) and suggesting the final decision. Results obtained on the classified DDSM dataset show the relevance of the proposed four-view CBMR method. Likewise, the effectiveness of the proposed late fusion module is confirmed by the increase of the accuracy rate from 88% to 92% in the overall case and from 83% to 89% in the abnormal case. As future work, we aim to propose a late fusion approach, based on the Dempster-Shafer theory, adapted to the bilateral analysis of mammograms.
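The random-forest-based distance prediction summarized above could be trained on samples such as those of Table 1, with query-view features as input and the adequate distance as label. scikit-learn's `RandomForestClassifier` would be the usual tool; to stay self-contained, the sketch below instead implements a small random forest with the standard library only: bootstrap samples, a random subset of features at each split, depth-limited Gini trees, and a majority vote. The features describing a query view and all hyperparameters (`n_trees`, `max_depth`, the square-root rule for the feature subset) are assumptions, not the authors' configuration.

```python
import math
import random
from collections import Counter
from typing import List, Optional, Sequence


def gini(labels: Sequence[str]) -> float:
    """Gini impurity of a non-empty list of labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


class Node:
    """Tree node; `feature is None` marks a leaf that returns `label`."""

    def __init__(self, label: str, feature: Optional[int] = None, threshold: float = 0.0,
                 left: Optional["Node"] = None, right: Optional["Node"] = None) -> None:
        self.label, self.feature, self.threshold = label, feature, threshold
        self.left, self.right = left, right


def build_tree(X: List[List[float]], y: List[str], depth: int, max_depth: int,
               n_sub: int, rng: random.Random) -> Node:
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return Node(majority)
    best = None  # (weighted Gini, feature index, threshold)
    # Random forest ingredient 1: only a random subset of features per split.
    for f in rng.sample(range(len(X[0])), n_sub):
        # Candidate thresholds exclude the largest value so both sides are non-empty.
        for t in sorted({row[f] for row in X})[:-1]:
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # the sampled features are constant here: stop splitting
        return Node(majority)
    _, f, t = best
    li = [i for i, row in enumerate(X) if row[f] <= t]
    ri = [i for i, row in enumerate(X) if row[f] > t]
    return Node(majority, f, t,
                build_tree([X[i] for i in li], [y[i] for i in li], depth + 1, max_depth, n_sub, rng),
                build_tree([X[i] for i in ri], [y[i] for i in ri], depth + 1, max_depth, n_sub, rng))


def predict_tree(node: Node, x: Sequence[float]) -> str:
    while node.feature is not None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.label


class RandomForest:
    """Tiny random forest mapping query-view features to a distance name."""

    def __init__(self, n_trees: int = 25, max_depth: int = 4, seed: int = 0) -> None:
        self.n_trees, self.max_depth, self.seed = n_trees, max_depth, seed
        self.trees: List[Node] = []

    def fit(self, X: List[List[float]], y: List[str]) -> "RandomForest":
        rng = random.Random(self.seed)
        n_sub = max(1, int(math.sqrt(len(X[0]))))  # common sqrt(n_features) heuristic
        self.trees = []
        for _ in range(self.n_trees):
            # Random forest ingredient 2: each tree is grown on a bootstrap sample.
            idx = [rng.randrange(len(X)) for _ in range(len(X))]
            self.trees.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                         0, self.max_depth, n_sub, rng))
        return self

    def predict(self, x: Sequence[float]) -> str:
        # Majority vote over the trees.
        return Counter(predict_tree(t, x) for t in self.trees).most_common(1)[0][0]
```

Once fitted, `RandomForest.predict` returns a distance name (for example "Canberra"), which would then select the function used to rank the query view's sub-dataset.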

REFERENCES

Arora, R., Rai, P. K., and Raman, B. (2020). Deep feature–based automatic classification of mammograms. Medical and Biological Engineering and Computing, 58:1199–1211.

Baâzaoui, A., Abderrahim, M., and Barhoumi, W. (2020). Dynamic distance learning for joint assessment of visual and semantic similarities within the framework of medical image retrieval. Computers in Biology and Medicine, 122:103833.

Boudraa, S., Melouah, A., and Merouani, H. F. (2020). Improving mass discrimination in mammogram-CAD system using texture information and super-resolution reconstruction. Evolving Systems, 11:697–706.

Dhahbi, S., Barhoumi, W., and Zagrouba, E. (2015a). Breast cancer diagnosis in digitized mammograms using curvelet moments. Computers in Biology and Medicine, 64:79–90.

Dhahbi, S., Barhoumi, W., and Zagrouba, E. (2015b). Multi-view score fusion for content-based mammogram retrieval. In Proceedings of SPIE 9875, Eighth International Conference on Machine Vision, page 987515.

VISAPP 2021 - 16th International Conference on Computer Vision Theory and Applications

Gao, X., Mu, T., Goulermas, J. Y., Thiyagalingam, J., and Wang, M. (2020). An interpretable deep architecture for similarity learning built upon hierarchical concepts. IEEE Transactions On Image Processing, 29:3911–3926.

Gu, Y., Vyas, K., Yang, J., and Yang, G.-Z. (2017). Unsupervised feature learning for endomicroscopy image retrieval. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 64–71.

Hao, X., Bao, Y., Guo, Y., Yu, M., Zhang, D., Risacher, S. L., Saykin, A. J., Yao, X., Shen, L., and for the Alzheimer's Disease Neuroimaging Initiative (2020). Multi-modal neuroimaging feature selection with consistent metric constraint for diagnosis of Alzheimer's disease. Medical Image Analysis, 60:101625.

Heath, M., Bowyer, K., Kopans, D., Jr, P. K., Moore, R., Chang, K., and Munishkumaran, S. (1998). Current status of the digital database for screening mammography. Digital Mammography Computational Imaging and Vision, 13:457–460.

Jouirou, A., Baâzaoui, A., and Barhoumi, W. (2019). Multi-view information fusion in mammograms: A comprehensive overview. Information Fusion, 52:308–321.

Jouirou, A., Baâzaoui, A., Barhoumi, W., and Zagrouba, E. (2015). Curvelet-based locality sensitive hashing for mammogram retrieval in large-scale datasets. In 12th ACS/IEEE International Conference on Computer Systems and Applications (AICCSA), pages 1–8. IEEE.

Khan, H. N., Shahid, A. R., Raza, B., Dar, A. H., and Alquhayz, H. (2019). Multi-view feature fusion based four views model for mammogram classification using convolutional neural network. IEEE Access, 7:165724–165733.

Kiruthika, K., Vijayan, D., and R, L. (2019). Retrieval driven classification for mammographic masses. In International Conference on Communication and Signal Processing, page 18619401. IEEE.

Krishnasamy, G. and Paramesran, R. (2019). Multiview Laplacian semisupervised feature selection by leveraging shared knowledge among multiple tasks. Signal Processing: Image Communication, 70:68–78.

Liu, Q., Dou, Q., Yu, L., and Heng, P. A. (2020a). MS-Net: Multi-site network for improving prostate segmentation with heterogeneous MRI data. IEEE Transactions on Medical Imaging, 39(9):2713–2724.

Liu, W., ran Wei, Y., and qian Liu, C. (2020b). Mammographic mass retrieval using multi-view information and Laplacian score feature selection. In Proceedings of the 2nd International Conference on Intelligent Medicine and Image Processing, pages 75–79.

Liu, W., Xu, W., Li, L., Li, S., Zhao, H., and Zhang, J. (2011). Mammographic mass retrieval based on multi-view information. In 5th International Conference on Bioinformatics and Biomedical Engineering (iCBBE), page 12072452.

Martens, R. M., Koopman, T., Lavini, C., Ali, M., Peeters, C. F. W., Noij, D. P., Zwezerijnen, G., Marcus, J. T., Vergeer, M. R., Leemans, C. R., de Bree, R., de Graaf, P., Boellaard, R., and Castelijns, J. A. (2020). Multiparametric functional MRI and 18F-FDG-PET for survival prediction in patients with head and neck squamous cell carcinoma treated with (chemo)radiation. European Radiology, https://doi.org/10.1007/s00330-020-07163-3.

Narvaez, F., Diaz, G., and Romero, E. (2011). Multi-view information fusion for automatic BI-RADS description of mammographic masses. In Proceedings of SPIE 7963, Medical Imaging 2011: Computer-Aided Diagnosis, pages 84–89.

Rasheed, A. S., Zabihzadeh, D., and Al-Obaidi, S. A. R. (2020). Large-scale multi-modal distance metric learning with application to content-based information retrieval and image classification. International Journal of Pattern Recognition and Artificial Intelligence, https://doi.org/10.1142/S0218001420500342.

Rayen, S. and Subhashini, R. (2020). An efficient mammogram image retrieval system using an optimized classifier. Neural Processing Letters, https://doi.org/10.1007/s11063-020-10254-3.

Sapate, S., Talbar, S., Mahajan, A., Sable, N., Desai, S., and Thakur, M. (2020). Breast cancer diagnosis using abnormalities on ipsilateral views of digital mammograms. Biocybernetics and Biomedical Engineering, 40(1):290–305.

Sbei, A., ElBedoui, K., Barhoumi, W., and Maktouf, C. (2020). Gradient-based generation of intermediate images for heterogeneous tumor segmentation within hybrid PET/MRI scans. Computers in Biology and Medicine, 119:103669.

Singh, V. P., Srivastava, S., and Srivastava, R. (2018). Automated and effective content-based image retrieval for digital mammography. Journal of X-Ray Science and Technology, 26(1):29–49.

Soudani, A. and Barhoumi, W. (2019). An image-based segmentation recommender using crowdsourcing and transfer learning for skin lesion extraction. Expert Systems with Applications, 118:400–410.

Wei, G., Cao, H., Ma, H., Qi, S., Qian, W., and Ma, Z. (2017). Content-based image retrieval for lung nodule classification using texture features and learned distance metric. Journal of Medical Systems, 42(13):1–7.

Wu, W., Tao, D., Li, H., Yang, Z., and Cheng, J. (2020). Deep features for person re-identification on metric learning. Pattern Recognition, 110:107424.

Yang, L., Jin, R., Mummert, L., Sukthankar, R., Goode, A., Zheng, B., Hoi, S. C., and Satyanarayanan, M. (2010). A boosting framework for visuality-preserving distance metric learning and its application to medical image retrieval. IEEE Transactions On Pattern Analysis And Machine Intelligence, 32(1):30–44.
