Real-time Web-based Remote Interaction with Active HPC Applications

Tim Dykes¹,⁴, Ugo Varetto², Claudio Gheller³ and Mel Krokos⁴

¹ HPE HPC/AI EMEA Research Lab, Bristol, U.K.

² Pawsey Supercomputing Centre, Kensington, Perth, WA, Australia

³ Institute of Radioastronomy, INAF. Via P. Gobetti, 101 40129 Bologna, Italy

⁴ School of Creative Technologies, University of Portsmouth, Eldon Building, Winston Churchill Avenue, Portsmouth, U.K.

Keywords: Web-based Scientific Visualization, Remote Interaction, High Performance Computing.

Abstract: Real-time interaction is a necessary part of the modern high performance computing (HPC) environment, used for tasks such as development, debugging, visualization, and experimentation. However, HPC systems are remote by nature, and current solutions for remote user interaction generally rely on remote desktop software or bespoke client-server implementations combined with an existing user interface. This can be an inhibiting factor for a domain scientist looking to incorporate simple remote interaction into their research software. Furthermore, there are very few solutions that allow the user to interact via the web, which is fast becoming a crucial platform for accessible scientific HPC software. To address this, we present a framework to support remote interaction with HPC software through web-based technologies. This lightweight framework is intended to allow HPC developers to expose remote procedure calls and data streaming to application users through a web browser, and allow real-time interaction with the application while it executes on a HPC system. We present a classification scheme for remote applications, detail our framework, and present an example use case within a HPC visualization application and real world performance for remote interaction with a HPC system over a Wide Area Network.

1 INTRODUCTION

In the modern Internet era, much of the information we need throughout our working day is available on the web. Over the past few decades communications infrastructure, user hardware, web browsers, and associated tools and libraries have evolved to such an extent that many user applications that once would have been installed locally are now run entirely remotely. This new, remote, web-based paradigm means that a modern Internet user can do much more than type emails and view static pages in their browser, as was the case in the early days of the Internet. They can now type documents through online word processors, edit photos with image manipulation tools, compete in online 3D games via real-time 3D rendering and streaming, design engineering solutions with web CAD applications, and more, all from the comfort of a laptop web browser. This paradigm has led to a user expectation of powerful web services, exploiting cloud computing (Patidar et al., 2012), that are more accessible, portable, and simpler to use than traditional applications. Web browsers typically do not require expensive high powered hardware, and are pre-installed on most personal computing devices. Users can access services built and hosted on the other side of the world to communicate, run applications, and store and retrieve data in real-time, without prior installation or configuration on their local machine. With the rise of low cost, on-demand, cloud-based web infrastructure, for example Amazon Web Services (AWS) and Microsoft Azure, and fast Internet streaming speeds, it is becoming commonplace for fully featured Software as a Service (SaaS) applications to be available in-browser (e.g. Miller, 2009).

Conversely, High Performance Computing (HPC) applications are typically executed remotely by exploiting computing clusters accessible by terminal or remote desktop protocol (e.g. Virtual Network Computing (VNC) client or X Forwarding protocol). User applications are run via job requests submitted to a resource management system, which are queued and executed when the required resources become available. This approach is effective for many traditional HPC applications (e.g. modelling and simulation); however, due to the paradigm of web and cloud computing, the modern day research scientist (a typical HPC user) has access to a much broader array of tools that may benefit from, or even require, high performance computing, from interactive computing with Python-based tools to workflow software for coupling and/or chaining multiple large scale parallel applications.

Dykes, T., Varetto, U., Gheller, C. and Krokos, M. Real-time Web-based Remote Interaction with Active HPC Applications. DOI: 10.5220/0010260300880100. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021) - Volume 3: IVAPP, pages 88-100. ISBN: 978-989-758-488-6. Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

Notably, there are a variety of HPC applications that necessitate a Human-In-The-Loop (HITL) (Nunes et al., 2015) with real-time user interaction; for example, visualization software, monitoring and inspection utilities, debugging tools, and computational steering software. This type of application requires some form of user interface, which is typically achieved through a client-server remote software approach where a local user application communicates with a remote HPC application; for example, a local graphical debugging client connected to a debug server running on a HPC system. However, these approaches are often tailored to a specific application and require a significant amount of setup (detailed further in Section 2). This class of application could benefit from the accessibility, portability, and simplicity of cloud-like web-based software.

There are existing efforts to provide cloud-like services for HPC, from creating HPC-like environments or running traditional HPC applications on cloud-computing infrastructures (Canon et al., 2010; Church et al., 2015; Younge et al., 2017), to building cloud-like infrastructures on HPC systems (Mauch et al., 2013). This extends to HPC applications exposed to users through the web via workflow management tools (Goecks et al., 2010; Brown et al., 2015) and Science Gateways (Gesing et al., 2015), and public web platforms supported by HPC infrastructures such as the Theoretical Astrophysical Observatory (TAO) (Bernyk et al., 2016) and similar efforts in other scientific communities. However, for developers to build HPC applications requiring real-time interaction, interoperability between web and HPC technologies is a necessity, particularly in terms of auxiliary software libraries. This is especially challenging due to the significantly disparate environments, both software and hardware, of web and HPC. A domain scientist developing high performance research software is unlikely to also be an expert in web development or even familiar with the languages and tools common in the field, and vice versa. This difficulty is compounded when there is a requirement for real-time interaction, necessitating high performance implementations on both sides. There are only a few examples of HPC applications and web applications interacting in real-time (see Section 3); whilst this is partially due to the infancy of a HPC-Web convergence, the problem is exacerbated by a lack of general purpose tools to facilitate interoperability.

This challenge of interoperability has been the focus of existing efforts in the HPC community, for example with REST APIs for web-based interaction with resource management systems (Cholia et al., 2010; Cruz and Martinasso, 2019), environments such as EnginFrame¹ and Bridges (Nystrom et al., 2015) that are designed to support web portals and non-traditional HPC workloads, and containerized solutions packaging HPC software for general portable usage such as those available on NVIDIA's GPU-Cloud². It is clear there is an emerging paradigm shift in HPC from the traditional command line batch scheduled job execution to a more accessible and user-friendly experience motivated by web, cloud, and interactive technologies. However, there is still a long way to go, particularly for applications requiring real-time user interaction.

In this paper, to help bridge the gap between HPC and Web applications and address the lack of general purpose interoperability tools, we introduce WSRTI: a WebSocket (Fette and Melnikov, 2011) based framework for fast data streaming and simple, real-time, user interaction with HPC applications. The core principle of this framework is to allow HPC specialists and research scientists to quickly and easily create web interfaces to monitor and interact with active HPC applications in real-time. We provide a lightweight mechanism for a headless HPC application to expose a Remote Procedure Call (RPC) interface automatically linked to a web-based graphical user interface. This is complemented by data streaming support to allow the user to transport data to and from the application independently of the RPC mechanism.

The remainder of the paper is structured as follows: Section 2 presents a novel classification scheme to identify and differentiate remote applications. With reference to this scheme we discuss related work in Section 3, before presenting the WSRTI framework in Section 4. Section 5 gives a practical explanation of the requirements for a HPC application to utilize WSRTI, and Section 6 presents a specific example use case within a high performance visualization application. We briefly discuss performance in Section 7, and Section 8 concludes the work with a summary and future directions.

¹ https://www.nice-software.com/products/enginframe

² https://www.nvidia.com/en-us/gpu-cloud/


2 A CLASSIFICATION SCHEME FOR REMOTE APPLICATIONS

The inherently remote nature of working on a HPC system can discourage use of interactive applications. HPC systems are traditionally hosted in dedicated computing centers and on university campuses, and access typically requires a local workstation and terminal connection via the Secure Shell (SSH) protocol. Once connected, the user can submit jobs through a resource management system such as SLURM (Yoo et al., 2003) or the Portable Batch System (PBS)³ to request computing resources in either a batch or interactive manner. Batch submission inserts the job into a queue and schedules it to run when resources are available to be allocated, which allows efficient job management by the scheduler and maximum resource utilization. However, the job must be defined ahead of time, and a failure will often relinquish the allocation and require a new job to be created. Interactive submission indicates the resources are required immediately, providing the user with a shell where they can interactively launch applications for the duration of the allocation. While it is standard practice to support an interactive queue for applications that require it, interactive applications typically have a broader set of requirements, e.g. supporting user interaction.

An important characteristic of an interactive HPC application is the means by which user interaction is supported. This can be complicated by one or more layers of indirection that typically exist between the user and the compute nodes on which their software executes, as illustrated in Figure 1. This indirection is necessary for the security of the system; however, it can introduce difficulties for the end user, who is routinely also outside of a gateway firewall. Furthermore, compute nodes typically have a stripped down operating system with only high performance components, and as such may not include software for traditional desktop environments (e.g. an X11 server). Interaction with software running on the compute nodes must somehow address these layers of indirection between the user and the application. The most common approach is for the remote application to act as a server, and a local application on the user's machine to act as a client, coupled via a communication schema and forwarding mechanism such as SSH tunnelling. However, there are a variety of different approaches for this client-server scenario, from remote-desktop software to bespoke application frameworks.

To contextualise the work presented in this pa-

per, we first critically review and classify the existing

3

e.g. http://www.pbspro.org/

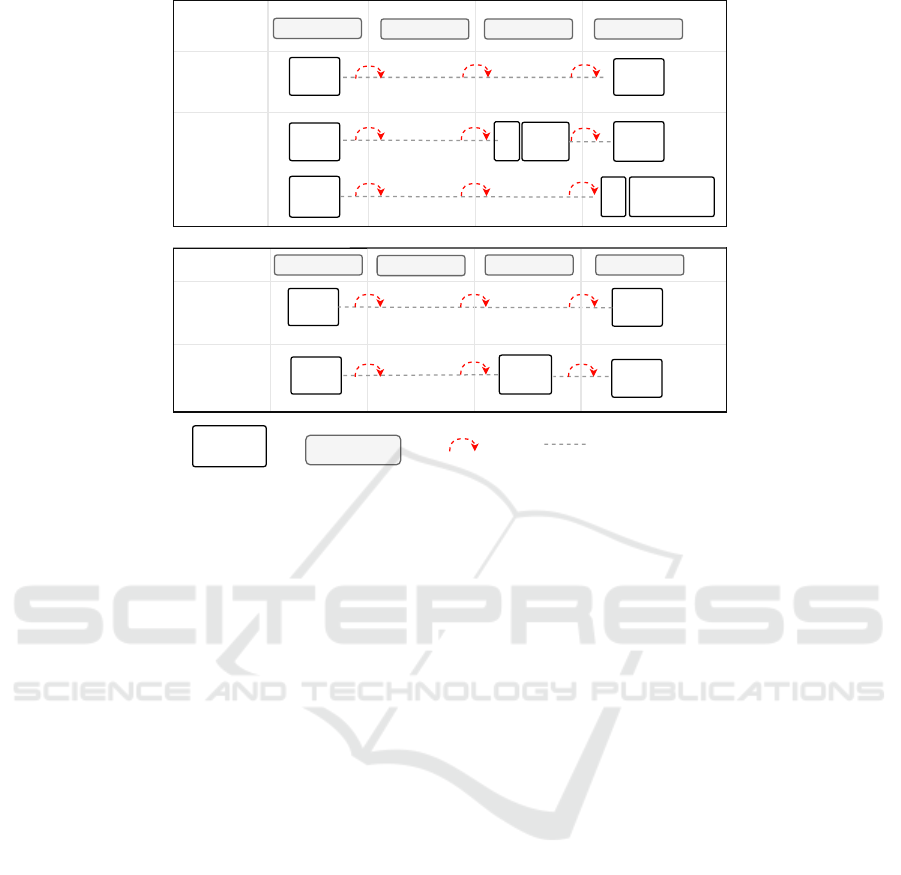

Gateway Node Login Node Compute NodesUser Machine

WAN LAN

Figure 1: The typical layers of indirection between a user

machine and the compute nodes of a HPC system.

approaches for remote interaction with high perfor-

mance applications, in terms of direct vs. indirect ap-

proaches, and web vs. native approaches. This classi-

fication scheme is outlined in Figure 2, in the context

of a typical HPC setup. In this diagram, the high per-

formance application consists of Interface and Com-

pute components, which are exposed to the user via

five different approaches with varying layers of in-

direction. Indirection of an approach refers to the

use of auxiliary software (Aux) to support the remote

access. Applications are connected via an Applica-

tion Layer Protocol (ALP) (Zimmermann, 1980), and

each hop refers to a jump between network layers that

may need facilitating (e.g. by port forwarding).

Direct Remote Native (DRN): This classification refers to native applications that implement the full end-to-end client-server model, i.e. the application interface is installed on a user's local machine, and the application server runs on the compute nodes of a HPC system. The connection is typically a custom messaging schema sent over a common application layer protocol (e.g. ZeroMQ⁴, TCP sockets), and each hop is often enabled via SSH tunneling. This approach can perform well due to developer-optimised messaging schemas tailored for the specific application; however, it relies on the availability and installation of a client for the specific user machine. The increased performance particularly benefits interactive applications, especially those requiring real-time interaction. Examples of this class of application are the high performance visualization tools ParaView (Ahrens et al., 2005) and VisIt (Childs et al., 2011), and remote debugging software such as NVIDIA NSight Eclipse edition (NVIDIA Corporation, 2018a).

Indirect Remote Native (IRN): In this case native applications implement part of the client-server model; however, they may rely on auxiliary software to extend fully to the user machine, or they may not directly connect to the remote application. There are various indirect approaches, and the location of the interface or auxiliary software does not always match exactly the two example scenarios shown in Figure 2. A typical example of the first scenario is a client application which runs on the login node of the HPC system in order to interact with the resource management system; the client is then accessed locally via a remote desktop approach such as TurboVNC or X Forwarding. The second scenario requires the interface and auxiliary software to run directly on the compute nodes; this can be more difficult to achieve due to the lack of common desktop environment software on compute nodes. Both approaches can provide more portability when using auxiliary software. For example, a Linux client can run on the HPC system whilst the user connects through remote desktop software on a Microsoft Windows-based PC. However, performance can degrade as auxiliary software is typically not able to optimise data transfers as effectively as custom approaches in a Direct Remote Application, and may rely on slow transfer mechanisms such as a shared filesystem. Examples of this type of application are remote profiling tools such as Intel VTune Amplifier (Intel Corporation, 2018) or the NVIDIA Visual Profiler (NVIDIA Corporation, 2018b). This approach is also commonly used to allow installing a variety of user software, which may in fact be direct remote applications, on a HPC system, such that the users of the system only need to install a remote desktop solution on their own machine.

[Figure 2 diagram: the App Interface, App Compute, Aux, Browser, and Web Server components of the DRN, IRN (two scenarios), DRW, and IRW approaches, placed across the User Machine, Gateway Node, Login Node, Internal Node, and Compute Nodes, with forwarding between locations. Legend: Hop, Application layer protocol, Forwarding, Application, Location]

Figure 2: A classification scheme for remote applications in the context of HPC systems. Top: Native applications (DRN, IRN), Bottom: Web applications (DRW, IRW).

⁴ http://zeromq.org/

IVAPP 2021 - 12th International Conference on Information Visualization Theory and Applications

Direct Remote Web (DRW): A web application running in the user's browser is directly connected to the application server running on compute nodes. The direct approach requires exposing a public web server that also has internal access to the compute nodes, and directly connecting the user web application to the server via a web-capable ALP such as WebSockets (the direct connection may include intervening router software). This approach is the most flexible and convenient for the user; however, it introduces significant security concerns if the web server is to be made public. A simple work-around for an internal application is to run the web server on an internal node, and use SSH tunneling to forward ports from the user machine to the internal node, thus securing the user connection via SSH. Some examples of these approaches are the ParaView Web Interface and JupyterHub (Jupyter Hub Development Team, 2018), detailed further in Section 3.

Indirect Remote Web (IRW): A web application running in the user's browser is connected via a web server to auxiliary software (beyond simple routing software), which in turn is connected to the application server. This approach allows the auxiliary software to provide a layer of security between the public web server and the application; however, it can introduce difficulties for interactivity. For example, common techniques are to use a shared filesystem or database polling as an intermediary step to pass requests from the user to the server, which is sufficient for data access but introduces additional latency and constraints not amenable to real-time interaction with the application. This approach is typical for public-facing web portals supported by HPC resources, for example TAO (Bernyk et al., 2016) and the Cosmological Web Portal (Ragagnin et al., 2016).


This four-part classification scheme describes the ways in which applications are run remotely on HPC systems. Some applications support more than one mode of execution, and so can fit into multiple categories of this scheme; for instance, the extensive visualization software ParaView can be used with various types of interface that can be classified in each of these categories.

3 RELATED WORK

With reference to the classification scheme of Section 2, there are many remote applications that can be placed into one or more of these categories. The scope of the work presented in this paper is limited to those that fit into the Direct or Indirect Remote Web categories and can support real-time interactivity. The related tools we have identified that can be classified as DRW or IRW are listed below.

ParaView (Ahrens et al., 2005) is a large scale parallel visualization software, designed for effective exploitation of HPC systems. A web-enabled version, ParaViewWeb⁵, can act as a Direct Remote Web Application by allowing the user to remotely connect via web browser to a ParaView server running on a HPC system. The connection is enabled via the custom library wslink⁶, which connects JavaScript web clients to a Python web server using WebSockets as the ALP. Furthermore, the in-situ library ParaView Catalyst (Ayachit et al., 2015) allows users to instrument their application for in-situ analysis, visualization, and computational steering purposes.

The Cactus computational framework (Goodale et al., 2003) supports a web browser interface for in-situ visualization and steering tasks. The user can instrument existing HPC applications with the Cactus API, and perform interactive steering tasks and view visualization outputs through a web browser. The standard implementation utilises a HTTPD⁷ web server, and forwards ports to the user for remote access.

The Jupyter Notebook⁸ is a web application allowing users to interactively write and execute code, and transform, analyse, and visualize data using a variety of languages. The Notebook can be set up manually on a HPC system, exposing a web interface via the built-in Notebook Python web server that can be accessed by browser from a user machine via port forwarding. Furthermore, it is possible to deploy JupyterHub as a multi-user hub accessed over HTTP, an approach also viable on HPC systems (Milligan, 2017).

⁵ https://kitware.github.io/paraviewweb

⁶ https://github.com/kitware/wslink

⁷ https://httpd.apache.org/

⁸ http://jupyter.org/

The WebVis framework (Zhou et al., 2013) is a multi-user, client-server, visualization system with a web-based client that can interact with a cluster-based visualization server. The client is connected to the server via a back-end service built using the Google Web Toolkit and a Java web server, which communicate with an institutional web service that forwards events and images to and from the internal render cluster (i.e. an IRW approach). Client GUI interactions are forwarded to the server, and images returned, through an EventBus using the HTTP server push paradigm.

The commercial remote desktop software FastX⁹ enables users to use existing desktop interfaces via the web, with a WebAssembly module providing an efficient, high performance remote desktop service in the browser. This option is an effective solution for enabling access via the web for applications with existing interfaces, but does require a FastX license. A similar approach can be taken with other remote desktop protocols, such as VNC through e.g. TightVNC¹⁰. However, compute nodes of HPC systems commonly have a stripped down version of Linux that does not support GUI applications, so in most cases the application must already support remote execution from a login node. This software can enable Direct and Indirect Remote Applications to be used as Indirect Web Applications.

Remote frameworks that support the web, such as FastX, are seeing some success; for example, the web visualization portal at the Texas Advanced Computing Center supports web-based usage of ParaView through a web-based VNC session. However, such approaches do require the user to already have a direct/indirect remote application. Other solutions are built upon bespoke frameworks which are effective for a single application but less useful for the general case of a HPC application requiring remote interaction or monitoring. The development of WSRTI, described in the next section, is intended to address this lack of general purpose tools for interoperability, in order to support the development of more applications using the DRW and IRW approaches for remote interactivity.


⁹ https://www.starnet.com/fastx/

¹⁰ https://www.tightvnc.com/


[Figure 3 diagram: Client Side JS Library, comprising the Client Template, Interface (datGUI, other GUI), Data Handler (Binary, Image, Other), RPC, and Event modules, with CLI and GUI inputs]

Figure 3: Structure of the client-side JavaScript library.

4 A FRAMEWORK FOR CONNECTING REAL TIME HPC APPLICATIONS TO THE WEB

The WSRTI library¹¹ is built to facilitate interoperability of interactive web and HPC technologies. We aim to reduce as much as possible the burden on a research scientist to understand web technologies, and allow them to link their application to a web interface automatically from application-side specifications. The key features we support are data streaming, RPC and event forwarding, and dynamic interface generation. The following subsections discuss the technical details of the library and illustrate how each of the key features is supported.

4.1 Overview

WSRTI is conceptually split into two components: a client-side web library, and a set of application-side C++ utilities to assist HPC developers in exposing RPC functionality and application data. The two components are connected via a WebSocket communication scheme discussed in Section 4.2.

The client-side JavaScript library is structured as illustrated in Figure 3. The Data Handler, Event, RPC, and Interface modules are described in the following sections. They are integrated into an included client template, a web client capable of receiving and displaying images, with a console input and debug log.

Figure 4 illustrates the server-side utilities, consisting of modules for binary serialization, generation of JSON interface objects, RPC, and asynchronous queues, along with convenience wrappers for image compression and WebSocket servers. These modules can be used by the HPC application developer to export data and interface descriptions, while sending and receiving RPCs and events. All modules of the library are documented with Doxygen¹², and contain unit tests that also act as example usage.

[Figure 4 diagram: Server Side C++ Utilities, comprising Serializer, TJPP, WebSocketPlus, Interface, SyncQueue, and RPC]

Figure 4: The collection of C++ utilities for HPC applications.

[Figure 5 diagram: between the HPC Application and the User Browser, the Data WebSocket carries the Data Stream and Interaction Events, and the Auxiliary WebSocket carries RPC and Generic Events; messages are marked as Binary or Text]

Figure 5: The data flow between a HPC application and WSRTI. Data is split across two sockets, one for dedicated binary streaming and a second for binary event and text RPC messages. Variation of image also presented in (Dykes et al., 2018) (Figure 5).

¹¹ https://github.com/RemoteRTI/WSRTI

4.2 Communication: Data and Event Streaming

Client-side and application-side utilities enable the user to package and asynchronously stream data back and forth between the active HPC application and web client; this includes generic binary data, formatted binary messages, and strings. In order to facilitate fast and interactive web communication, WSRTI exploits the WebSocket protocol. The full-duplex nature of a WebSocket connection is ideal for interactivity; messages can be sent and responses received without polling, allowing streaming services to be built in a simple and efficient manner. Two sockets are created to connect to the application: a dedicated Data Stream WebSocket for streaming binary application data and user interaction events (mouse and keyboard input), and an Auxiliary WebSocket for string-based RPC commands and generic application events, as illustrated in Figure 5.

¹² http://www.stack.nl/~dimitri/doxygen/

The user can exploit the dedicated Data Stream to forward application data to the client. The client data handler module consists of a set of data receivers, one of which monitors the Data Stream at any one time. Data receivers are simply implemented, and typically assume the message they receive is of an expected format. The client and application should both agree on the type of data expected on the Data Stream (this can be agreed, for example, via an event on the Auxiliary stream). Messages are received in the form of a JavaScript Blob and forwarded to the current active data processing module, which is by default an image processor. Keyboard and mouse events can also be automatically streamed from the client to the application on the Data Stream.
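The single-active-receiver idea described above can be sketched as follows. This is a minimal illustration written in Python for brevity, not the WSRTI JavaScript client; the class `DataHandler`, its methods, and the two receivers are hypothetical names introduced only for this example.

```python
from typing import Callable, Dict, Optional


class DataHandler:
    """Forwards every Data Stream message to the one currently active receiver."""

    def __init__(self) -> None:
        self._receivers: Dict[str, Callable[[bytes], object]] = {}
        self._active: Optional[str] = None

    def register(self, name: str, receiver: Callable[[bytes], object]) -> None:
        # The first registered receiver becomes the default one.
        self._receivers[name] = receiver
        if self._active is None:
            self._active = name

    def set_active(self, name: str) -> None:
        # In practice this switch would follow an agreement with the
        # application, for example an event on the auxiliary stream.
        if name not in self._receivers:
            raise KeyError(name)
        self._active = name

    def on_message(self, payload: bytes) -> object:
        if self._active is None:
            raise RuntimeError("no data receiver registered")
        # No per-message type tag is inspected: the receiver assumes the format.
        return self._receivers[self._active](payload)


def image_receiver(payload: bytes):
    # Assumes every message is a JPEG; only the start-of-image marker is checked.
    return ("image", payload[:2] == b"\xff\xd8", len(payload))


def raw_receiver(payload: bytes):
    return ("raw", len(payload))


handler = DataHandler()
handler.register("image", image_receiver)  # default, as in the image use case
handler.register("raw", raw_receiver)
first = handler.on_message(b"\xff\xd8rest")
handler.set_active("raw")
second = handler.on_message(b"abc")
```

Because the active receiver is chosen out of band, the per-message path stays free of format checks, which is the property the text relies on for high-rate streams.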

The user can exploit the dedicated Auxiliary Stream via the client event module to send and receive formatted binary and string messages (distinguishable in the WebSockets layer). Formatted binary events are stored as JavaScript TypedArrays with a preceding integer identifier, using a schema agreed upon by both client and application. This identifier is inspected and the event is forwarded to the appropriate event handler; for example, this may be a non-standard data message (e.g. a one-time downloadable file) or metadata for analysis. String messages are inspected for valid JSON-RPC formatting and forwarded to the RPC module (Section 4.3).
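As a rough sketch of this routing, the Python example below prefixes a binary payload with an integer identifier and separates binary events from JSON-RPC strings. The identifier width (unsigned 32-bit, little-endian), the event identifiers, and all function names are assumptions made for illustration; the actual WSRTI schema is whatever the client and application agree upon.

```python
import json
import struct

# Hypothetical event identifiers; a real schema is agreed by both sides.
EVENT_FILE = 1
EVENT_METADATA = 2


def pack_event(event_id: int, payload: bytes) -> bytes:
    """Prefix the payload with a little-endian unsigned 32-bit identifier."""
    return struct.pack("<I", event_id) + payload


def unpack_event(message: bytes):
    """Split a binary message into its identifier and the remaining payload."""
    (event_id,) = struct.unpack_from("<I", message, 0)
    return event_id, message[4:]


def is_json_rpc(text: str) -> bool:
    """Loose check for a JSON-RPC 2.0 request or response object."""
    try:
        obj = json.loads(text)
    except json.JSONDecodeError:
        return False
    if not isinstance(obj, dict) or obj.get("jsonrpc") != "2.0":
        return False
    return "method" in obj or "result" in obj or "error" in obj


def route(message, handlers):
    """Text goes to the RPC path; binary goes to the handler for its identifier."""
    if isinstance(message, str):
        if is_json_rpc(message):
            return ("rpc", json.loads(message))
        return ("ignored", message)
    event_id, payload = unpack_event(message)
    handler = handlers.get(event_id)
    if handler is None:
        return ("unknown", event_id)
    return ("event", handler(payload))


handlers = {EVENT_METADATA: len}
binary_result = route(pack_event(EVENT_METADATA, b"abc"), handlers)
rpc_result = route('{"jsonrpc": "2.0", "method": "reset", "id": 1}', handlers)
```

The text/binary distinction comes for free from the WebSocket layer, so only binary messages need the identifier prefix.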

The use of two dedicated sockets allows WSRTI to make assumptions about the type of message received and optimize client-side de-serialization. This approach is used for data streaming from application to client, which may potentially trigger messages at high frequency and volume. For example, during image streaming the client data handler can choose to interpret all messages received as JPEG compressed images and directly update the display at a high frame rate. This avoids the need to de-serialize and check a message identifier, which can have a noticeable impact on performance in the JavaScript data handling module.

The application-side serializer utility is a C++ header-only library to assist with serializing C++ objects and structures to binary messages, for example to create events or serialize application data. The tjpp convenience library assists with compressing images to JPEG via libjpeg-turbo¹³. These can then be passed to a WebSockets server (WebSocketPlus utility) via a synchronized queue (SyncQueue utility) to be asynchronously sent to the client.
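The hand-off through a synchronized queue can be illustrated with a small producer/consumer sketch. It uses Python's standard `queue` and `threading` modules rather than the C++ SyncQueue and WebSocketPlus utilities, and `start_sender` and the `send` callback are hypothetical stand-ins for the real socket layer.

```python
import queue
import threading


def start_sender(outbox: queue.Queue, send, stop_token=None) -> threading.Thread:
    """Start a thread that drains the queue and hands each item to `send`."""

    def run() -> None:
        while True:
            item = outbox.get()  # blocks until the application enqueues data
            if item is stop_token:
                break
            send(item)

    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread


sent = []                        # stands in for the socket's outgoing messages
outbox = queue.Queue(maxsize=8)  # bounded, so a slow client applies back-pressure
worker = start_sender(outbox, sent.append)

# The compute loop only enqueues serialized frames and continues working.
for frame_number in range(3):
    outbox.put(b"frame-%d" % frame_number)

outbox.put(None)  # ask the sender to stop once the queue is drained
worker.join()
```

Decoupling the two sides this way keeps network latency out of the application's main loop; the bounded queue size here is an arbitrary choice for the example.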

4.3 Bi-directional RPC

¹³ https://libjpeg-turbo.org/

The client and the application can exchange RPC requests in both directions. To avoid the user implementing application-specific RPC handling in both the client and application, the application developer can specify a function name and list of arguments as part of the interface generation process (see Section 4.4). Interface elements such as a button or input field on the client can be bound to a procedure call on the server at application run-time. This allows the application RPC interface to be extended or updated without changing the client code, and avoids the need for specific RPC functions and arguments to be known in advance.

Internally, the RPC module can send and receive

JSON-RPC

14

formatted requests. The JSON-RPC

format is a simple specification that covers the nec-

essary feature set for RPC in this context. JSON

(JavaScript Object Notation) is essentially a subset of

JavaScript, and natively supported by the language.

Bi-directional RPC allows the server to also trigger

client-side operations if supported, such as handling a

dynamic interface descriptor or accepting a file down-

load. This model of RPC allows more advanced

clients to be implemented, for example facilitating a

peer-to-peer model where a client can trigger an ac-

tion that will be forwarded to multiple other clients.

This feature would support an advanced implementor

in creating a collaborative environment through indi-

rect messaging between clients.
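To make the message format concrete, the sketch below builds a JSON-RPC 2.0 request as a string using only the C++ standard library. The function names json_escape and make_rpc_request are hypothetical helpers written for this illustration and are not part of the WSRTI RPC header. The use of positional string parameters is also an assumption, since the text does not state whether positional or named parameters are used. A real application would more likely rely on a JSON library than on manual string assembly.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>
#include <vector>

// Escape characters that must not appear raw inside a JSON string.
std::string json_escape(const std::string& in) {
    std::string out;
    for (unsigned char c : in) {
        switch (c) {
            case '"':  out += "\\\""; break;
            case '\\': out += "\\\\"; break;
            case '\n': out += "\\n";  break;
            case '\r': out += "\\r";  break;
            case '\t': out += "\\t";  break;
            default:
                if (c < 0x20) {
                    // Remaining control characters use the \u00XX form.
                    char buf[7];
                    std::snprintf(buf, sizeof buf, "\\u%04x",
                                  static_cast<unsigned>(c));
                    out += buf;
                } else {
                    out += static_cast<char>(c);
                }
        }
    }
    return out;
}

// Build a JSON-RPC 2.0 request with positional string parameters.
// Hypothetical helper: the parameter layout is an assumption.
std::string make_rpc_request(const std::string& method,
                             const std::vector<std::string>& params,
                             int id) {
    assert(!method.empty());
    std::string msg = "{\"jsonrpc\":\"2.0\",\"method\":\"" +
                      json_escape(method) + "\",\"params\":[";
    for (std::size_t i = 0; i < params.size(); ++i) {
        if (i > 0) msg += ',';
        msg += '"' + json_escape(params[i]) + '"';
    }
    msg += "],\"id\":" + std::to_string(id) + "}";
    return msg;
}
```

For example, calling make_rpc_request("cmd_load", {"snap_001.bin"}, 1) yields {"jsonrpc":"2.0","method":"cmd_load","params":["snap_001.bin"],"id":1}, where cmd_load is the function name used in Figure 6 and the file name is invented for the example.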

4.4 Dynamic Interface Generation

The library follows an application-centric design,

meaning that the majority of the client functionality is

defined by the application through dynamic interface

generation and RPC linkage. This allows the devel-

oper to focus on their HPC application and the data

they want to expose, rather than on building a web

GUI. The HPC application at runtime can define and

modify the look and feel of the web client user in-

terface, for example adding or removing buttons and

sliders and specifying RPC calls and arguments that

should be linked to client actions.

The interface generation mechanism depends on

the construction of an interface descriptor, typically

on the application side, which is forwarded to the

client on connection. This can then be updated via

client-targeted or broadcast RPC messages from the

application, or upon request by the client. The de-

scriptor is a hierarchical structure, reflecting the typ-

ical conceptual hierarchy of a user interface, that de-

scribes a series of buttons, sliders, input fields and dis-

play fields.

Figure 6 demonstrates the application-side process to create an interface descriptor. Groups of menu elements are of type Group, while elements have types such as Button or TextInput which are used by the client to generate web interface elements. The args object is used to specify arguments to the RPC call, which are linked to data objects contained in the interface descriptor and can be generated via menu interactions or manually. The JSON descriptor is generated using a language-specific JSON library, such as RapidJSON [15] for C++. A C++ Interface header included with the framework provides utility functions to generate JSON menu elements such as text input boxes, numerical sliders, and buttons, whilst an RPC header supports creation of RPC messages.

[14] http://www.jsonrpc.org/specification

IVAPP 2021 - 12th International Conference on Information Visualization Theory and Applications

The client interface module (Figure 3) is split between a CLI component and a GUI component. In the template example, the CLI component is linked to an input box on the web page, and parses the input string for internal commands (such as a request to print the help message to the log), distinguished by a preceding '.' character, or commands to the server, which are forwarded as RPC calls. This is helpful for simple text interfaces and debugging.
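The dispatch rule described for the CLI component (a leading '.' marks an internal command, anything else is forwarded to the server) can be sketched as follows. The WSRTI client itself is written in JavaScript, so this C++ version is purely illustrative and is kept in C++ for consistency with the other listings. The names CommandKind, ParsedCommand, and classify_input are hypothetical, and the trimming of leading whitespace is an added assumption.

```cpp
#include <cstddef>
#include <string>

// Hypothetical classification of a line typed into the CLI input box.
enum class CommandKind { Empty, Internal, RemoteRpc };

struct ParsedCommand {
    CommandKind kind;
    std::string text;  // command text; the leading '.' is stripped for internal commands
};

ParsedCommand classify_input(const std::string& input) {
    // Skip leading spaces and tabs (an assumption, not stated in the text).
    const std::size_t start = input.find_first_not_of(" \t");
    if (start == std::string::npos) {
        return {CommandKind::Empty, ""};
    }
    // A preceding '.' distinguishes internal client commands such as help.
    if (input[start] == '.') {
        return {CommandKind::Internal, input.substr(start + 1)};
    }
    // Everything else would be forwarded to the server as an RPC call.
    return {CommandKind::RemoteRpc, input.substr(start)};
}
```

With this rule, an input such as ".help" is handled locally by the client, whereas an input such as "cmd_load snap_001.bin" would be wrapped in an RPC request and sent to the application.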

The GUI component generates an interface based on the interface descriptor. By default, the interface is generated via the lightweight graphical interface library dat.GUI [16]; however, this can be replaced by a different interface library (e.g. React, jQuery) by overriding a series of interface generation functions. This allows users with web development experience to exploit more extensive interface libraries to add widgets and other advanced interface elements. Whilst the client typically parses the interface descriptor from the application and dynamically generates a user interface, it is also possible to construct the interface manually on the client side if preferred.

5 EXPOSING A REMOTE

APPLICATION FOR

INTERACTION VIA WSRTI

In order to interact with an active remote application, there is a set of requirements the application should satisfy. As interaction is typically a necessary part of computational steering, the following requirements take inspiration from those for steering libraries (Brooke et al., 2003). However, WSRTI is intended to apply more generally to remote interaction with applications, as opposed to steering of numerical simulations; as such, the requirements are generalised to represent the minimal requirements for user interaction. At least one of these requirements must be met in order to enable remote interaction: (1) expose a representation of application state, (2) expose a representation of application data, (3) expose an RPC interface, (4) accept input from standard Human Interface Devices (HIDs).

[15] http://rapidjson.org/

[16] https://github.com/dataarts/dat.gui

    // JSON Value objects: a top-level descriptor, and a group
    // representing a subfolder and its contents.
    Value dsc(kObjectType), user_grp(kObjectType),
          user_content(kObjectType);
    string current_input_file = "";
    // A text box for an input file name and a 'Load' button
    user_content.AddMember("Input File",
                           ui_text_input(current_input_file));
    // Describe the argument to the 'Load' function: 1 string,
    // the 'Input File' text box content
    vector<string> args = { "User Settings/Input File" };
    user_content.AddMember("Load", ui_button("cmd_load", args));
    // Add the contents to the 'User Settings' group
    user_grp.AddMember("type", "Group");
    user_grp.AddMember("contents", user_content);
    // Add 'User Settings' group to the interface descriptor
    dsc.AddMember("User Settings", user_grp);

Figure 6: Creating an interface descriptor with one menu called "User Settings", containing a text input box and a button to load an input file by name. RapidJSON custom memory allocator arguments are removed for brevity.

Supporting (1) is a minimal requirement allowing a web interface to display the current application state. This could be, for example, whether the application is still running and some indicator of algorithmic progress, e.g. the current time in a computational simulation. (2) enables an interface to display a representation of the application's working data. This could be a subset of simulation data for analysis, or pre-generated analyses such as streaming 3D visualization or graph plots. (3) enables linking web interactions to an RPC interface to trigger application mechanisms such as modifying variables or changing state. (4) relates to input from HIDs, for example linking keyboard and mouse interactions to actions within the application such as controlling a virtual camera during visualization or stepping through program execution in a debugging tool.

In order to support one or more of these requirements, the application must contain a control loop or checkpoint, at which point actions (1) and/or (2) can be performed, or input from the remote client can be accepted and handled (Figure 7, left). A typical case of a control loop is iterating over the time domain during a simulation (or time stepping), in which case WSRTI could be used for computational steering. These requirements may be satisfied by instrumenting user code with a set of additional functions. Figure 7, right, demonstrates the minimum necessities to add interactivity to a generic application for WSRTI. setup() is responsible for initialising the WebSocket servers and providing callbacks for event handling, along with constructing the JSON interface descriptor which is sent to the remote client. update() receives events and RPC requests from the client and processes them accordingly, potentially modifying the user code parameters based on the events received. send_state_and_data() outputs application state and data for the client to process, either in the form of interface updates or streaming data.

[Figure 7 pseudocode. Left (original application): initialise(); while(true) { process_data(); write_output(); check_exit_condition() }; finalise(). Right (instrumented application): the same structure with the calls setup(), update(), and send_state_and_data() added.]

Figure 7: The generic case for instrumenting a high performance application for remote observation or interaction.
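The instrumentation pattern described for Figure 7 can be sketched as a small, self-contained C++ program skeleton. Everything here is a stand-in: RemoteLinkSketch replaces the WebSocket transport with in-memory containers, the function bodies are placeholders, and the exact placement of setup(), update(), and send_state_and_data() relative to the existing steps is an assumption rather than a reproduction of the figure. The write_output() step is omitted for brevity.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <vector>

// Hypothetical stand-in for the transport layer. In a real application these
// containers would be filled and drained by WebSocket server threads.
struct RemoteLinkSketch {
    std::queue<std::string> incoming;   // events / RPC requests from the client
    std::vector<std::string> outgoing;  // state and data messages for the client
};

struct AppState {
    int step = 0;
    int max_steps = 0;
    bool stop_requested = false;
};

// setup(): would start the servers, register callbacks, and build the
// interface descriptor. Here it only queues a placeholder descriptor.
void setup(RemoteLinkSketch& link) {
    link.outgoing.push_back("descriptor");
}

// update(): drain pending client requests and apply them to the parameters.
void update(RemoteLinkSketch& link, AppState& state) {
    while (!link.incoming.empty()) {
        if (link.incoming.front() == "stop") state.stop_requested = true;
        link.incoming.pop();
    }
}

// Placeholder for the application's own work in each iteration.
void process_data(AppState& state) { ++state.step; }

// send_state_and_data(): publish progress for the client to display.
void send_state_and_data(RemoteLinkSketch& link, const AppState& state) {
    link.outgoing.push_back("step " + std::to_string(state.step));
}

bool check_exit_condition(const AppState& state) {
    return state.stop_requested || state.step >= state.max_steps;
}

// Instrumented control loop. Returns the number of steps executed.
int run(RemoteLinkSketch& link, int max_steps) {
    assert(max_steps >= 1);
    AppState state;
    state.max_steps = max_steps;
    setup(link);
    while (true) {
        update(link, state);
        process_data(state);
        send_state_and_data(link, state);
        if (check_exit_condition(state)) break;
    }
    return state.step;
}
```

Because the exit condition is checked at the end of each iteration, a stop request that arrives before the first iteration still allows one step to complete in this sketch. An application that needs to react immediately could check the flag directly after update().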

To demonstrate the applicability of WSRTI to real-time applications, we use as an example case a high performance batch visualization code. This HPC application is instrumented to support user input, RPC, and image streaming, becoming a web-accessible, real-time, large-scale visualization server for HPC systems.

6 USE CASE: SCIENTIFIC

VISUALIZATION

Splotch (Dolag et al., 2008) is a scientific visualiza-

tion package designed to run on HPC systems and

process large scientific datasets. Splotch is open

source, written entirely in C++, with minimal depen-

dencies beyond those for parallel models and spe-

cific file I/O. Splotch can exploit OpenMP for shared

memory parallelism, MPI for distributed memory par-

allelism, and is also able to exploit heterogeneous

machines with computational accelerators (Jin et al.,

2010; Rivi et al., 2014; Dykes et al., 2017).

Initially designed to run in batch processing mode,

Splotch reads a file on start-up describing input data

and visualization parameters. After loading the input

data and setting up the visualization scene, an itera-

tive render loop performs the visualization, creating

and writing a series of one or more images along a

pre-defined camera path. We detail the steps taken to

instrument our code to communicate with the WSRTI

library and fulfil the requirements in Section 5, ex-

tending Splotch from a batch visualization software

to a visualization server capable of real-time interac-

tion through a web interface.

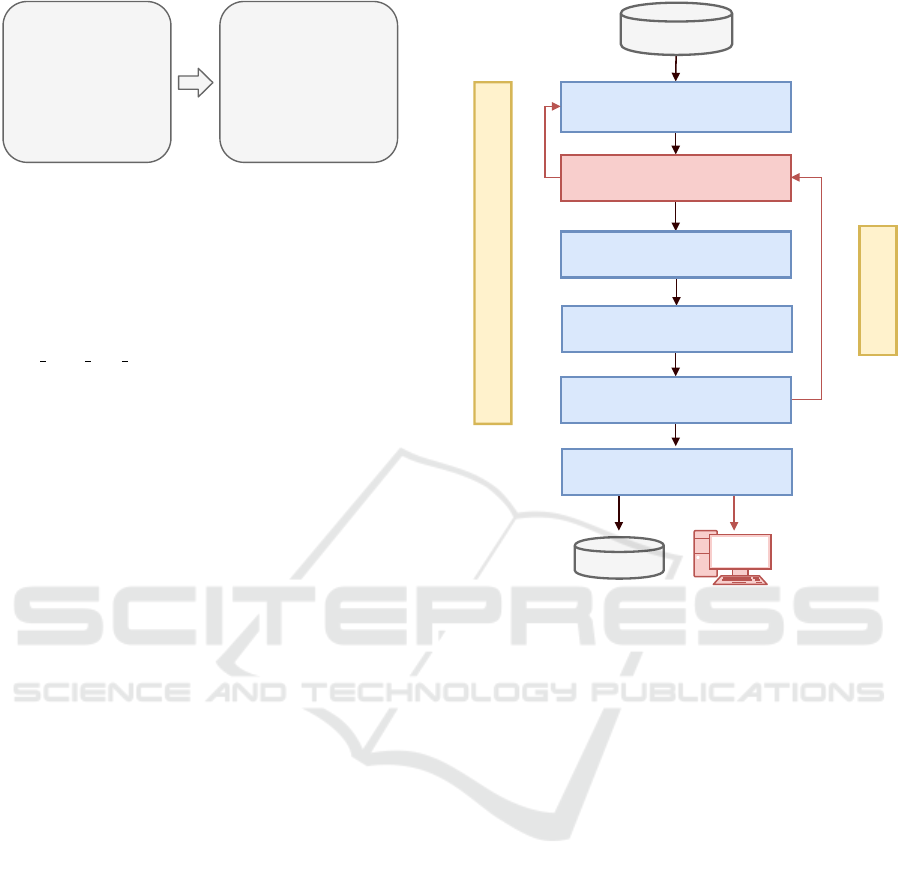

The Splotch algorithm is presented in Figure 8, with additions for WSRTI marked in red. The key algorithmic change involved the addition of an update function with a loop-back mechanism to move from algorithm completion back to the update, followed by a series of additional functions to perform tasks as detailed below.

[Figure 8 flowchart. Input: binary data file(s). Steps: load parameter file and data file; set up server components and build interface descriptor; handle events, update parameters, and update interface descriptor; 3D transformation, perspective projection, and coloring; solving the radiative transfer equation along lines of sight; parallel image reduction; save to disk or compress and send to client. Stage labels: INPUT, UPDATE LOOP, PROJECT/COLOR, RENDER, COMPOSITION, OUTPUT. Parallel models: MPI, CUDA / OpenMP / MIC. Output: image file.]

Figure 8: The Splotch algorithm, with changes for WSRTI interactivity highlighted in red. Image also presented in (Dykes et al., 2018) (Figure 4).

Initialisation. After file input, we initialise the WSRTI server-side components and create an interface descriptor as demonstrated in Figure 6, adding text inputs and numerical sliders with RPC linkage for various modifiable visualization parameters.

Update. At the beginning of the control loop the

update function performs various tasks. Event han-

dling processes both events and RPCs received since

the last update. Local parameters are updated, and

updates are reflected in the interface descriptor. In

the case of Splotch, we support HID events to control

the visualization camera (mouse for rotation, keys for

movement), and RPC messages to update visualiza-

tion parameters such as the active dataset, color map,

and graphical variables.

Output Data. Our output data, an uncompressed TGA formatted image, is passed through a JPEG image compressor and added to the message queue (using the WSRTI SyncQueue utility), from which it is asynchronously extracted and passed to the WebSocketPlus utility for sending to the client. In Splotch, the limiting factor on frame rate is the data size; for large datasets (hundreds of gigabytes and more), it is expected to run at a reduced frame rate (e.g. 10 fps) compared to other real-time rendering. For this reason we use only frame-by-frame compression, rather than video compression, which would increase the latency for user interaction at low frame rates.

Figure 9: Splotch running on a HPC system visualizing an astronomical light-cone in real-time, streaming to a web browser via the WSRTI framework. The dynamic interface is seen on the right-hand side using the default dat.GUI interface generator. Image also presented in (Dykes et al., 2018) (Figure 11); see reference for further details.

Various application-specific optimisations were

implemented to achieve a fast frame rate for real-time

visualization, outside of the scope of WSRTI. These

focused predominantly on reduced memory allocation

and reuse of data buffers, and may or may not be nec-

essary for a more general research application.

The initial instrumentation for interactivity is lightweight, and so a researcher looking for low-impact monitoring can be up and running quickly; however, further work is required to create a fully featured interactive application. The interactive build of Splotch exploiting WSRTI is illustrated in Figure 9, and for more extensive detail on the feature set and usage of interactive Splotch for astronomical visualization, the reader is referred to (Dykes et al., 2018).

Instrumentation via WSRTI also allows a deeper

integration with web-based tools for scientific analy-

sis, beyond the simple client shown in Figure 9. To

demonstrate this, in Figure 10 we embed our web

client within a Jupyter notebook, and connect it to the

Splotch visualisation server running on a high perfor-

mance computing system (test system as detailed in

Section 7).

In this case, we are visualising a set of snapshots of a large, state-of-the-art galaxy formation simulation, GigaERIS (Tamfal, Mayer, et al. in prep.), a higher resolution follow-up to the successful Eris simulation suite (Guedes et al., 2011). The full evolution of this simulation produces hundreds of snapshots, each of which consists of 1.1 billion particles, with 10-12 data fields per particle, resulting in approximately 50 GB of disk space required per snapshot. There are three types of particle: dark matter, stars, and gas. The visualisation consists of just the stars and gas (between 500 and 600 million particles for a single snapshot), with gas coloured by temperature and stars coloured by age. A volume rendering of such data is typically beyond the capabilities of a web browser, due to the size (too large to download and process locally), location (source data is often stored on the parallel file system of a supercomputer), and complexity (can require domain-specific tools and high performance resources to visualize).

Figure 10: A Jupyter Notebook showing interactive visualization via the Splotch code running on a remote HPC system, visualizing the results of the state-of-the-art galaxy formation simulation GigaERIS.

Whilst this example is simple and demonstra-

tory, a full integration could benefit from Python

to JavaScript bindings to allow full control of the

Splotch server through the Notebook. For example,

interesting subsets of data may be identified and ex-

tracted via the visual interface, and statistical analy-

sis may then be applied using common Python-based

analysis tools, either via downloading to the local ma-

chine, or via a hosted Jupyter Notebook instance on

the HPC system.

7 REAL WORLD

PERFORMANCE

HPC systems are typically accessed remotely, from

elsewhere in the same building to continents on the

other side of the world. For this reason, we run a num-

ber of tests spanning a wide physical area to demon-

strate the extent of support for event and data stream-

ing through WebSockets for WSRTI.


For many network-based tools, performance depends significantly on the performance of the network; as such, the most significant factor in this case is the variable Internet connection between the user and the HPC system. Real-world performance for WSRTI is dependent on the user and their environment as well as the typical load on the network at any one time. This section describes the performance for one such environment, which represents a typical user scenario. A user laptop is set up as a client in a U.K. university laboratory, whilst a server application is executed on a HPC system at a remote computing facility abroad.

The test system is Swan, a Cray XC50 located at the Cray computing facility in Wisconsin, USA. Each utilized node consists of 2 Broadwell 22-core Xeon CPUs clocked at 2.2 GHz. The web client runs on a MacBook Pro (early 2013 model) with a 2.7 GHz Intel Core i7 and Mozilla Firefox 61.0.1, at the University of Portsmouth in the UK. The test environment on the HPC system is a C++ application (included with the library) that generates data buffers of varying size and streams them over WebSockets using the SyncQueue and WebSocketPlus utilities. It can either send without expecting a reply, mimicking a data stream, or wait for replies and measure send and receive latencies, mimicking events or RPC calls. On the user laptop we run the template web client, in which we have implemented an additional generic client DataHandler (see Section 4.2) that can act as a binary data stream receiver or as a ping-pong type application that returns each received message. The test system network is organised similarly to Figure 1, without the gateway node. A port is forwarded for each WebSocket via an SSH tunnel, and the client web page is hosted locally for the user. For a series of tests with varying packet size, we stream packets to the client and measure latency and bandwidth.

Figure 11 shows a series of bandwidth results for the described test setup. In this environment we reach a maximum sustained bandwidth for data streaming of ~9 MB/s, which is reached at packets of 2 MB, with the best bandwidth obtained for packet sizes from 128 KB to 2 MB. As previously mentioned, test results are dependent on the current network load, and so may vary with time. Figure 12 shows data latency: for packets under 8 KB we have a latency of under 100 ms, which is typically acceptable for real-time interaction in a typical remote application. For packets up to 500 KB latency remains in the 100-200 ms range, steadily rising as packets increase in size beyond this.

Figures 11 and 12 show WSRTI performance

when streaming from a HPC system roughly 4000

miles away, and typical users of HPC centers in their

own country, or even their own institution, may ob-

0

1

2

3

4

5

6

7

8

9

10

8

16

32

64

128

256

512

1024

2048

4096

8192

16384

32768

65536

131072

262144

524288

1048576

2097152

4194304

8388608

16777216

33554432

67108864

134217728

Bandwidth (MB/s)

Data Packet Size (Bytes)

Bandwidth v.s. Packet Size: Wisconsin, USA -

Portsmouth, UK.

Figure 11: Bandwidth for data streaming from a WSRTI-

enabled synthetic remote application.

10

100

1000

10000

8

16

32

64

128

256

512

1024

2048

4096

8192

16384

32768

65536

131072

262144

524288

1048576

2097152

4194304

8388608

Latency (ms)

Data Packet Size (Bytes)

Latency v.s. Packet Size: Wisconsin, USA -

Portsmouth, UK.

Figure 12: Packet latency for data streaming from a

WSRTI-enabled synthetic remote application.

tain higher bandwidths. Conversely, users in remote

locations may indeed obtain much lower bandwidths.

This can affect both the number of data packets that

we can receive per second, and the latency at which

we receive them, as illustrated by inconsistent band-

width measurements for larger data objects in Figure

11. In our Splotch use case, JPEG compressed im-

ages are streamed from the application to the client

and user interaction events (mouse and keyboard) and

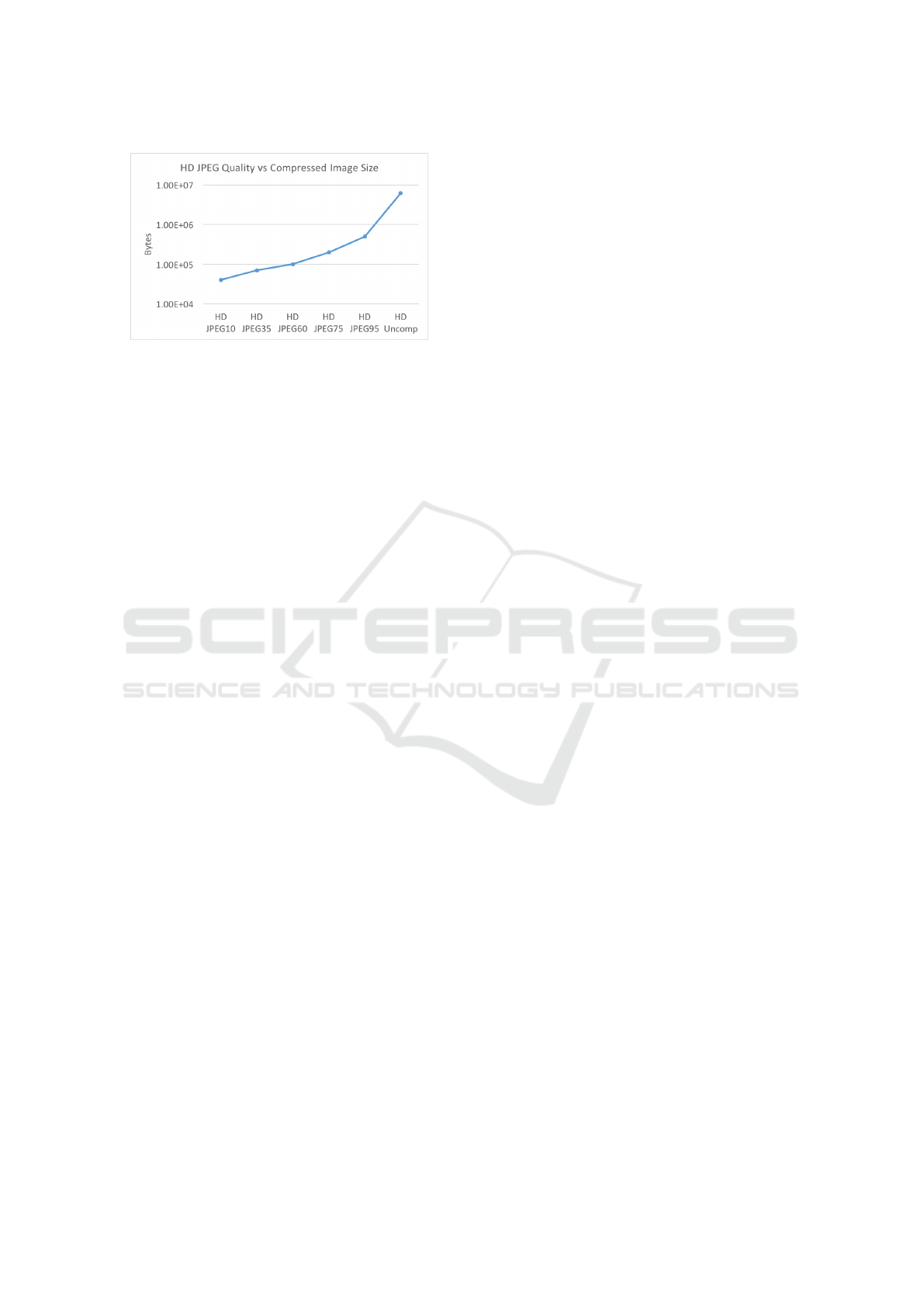

RPC messages are returned. Figure 13 illustrates the

compressed binary size of a typical 1920x1080 pixel

High Definition (HD) Splotch image at varying com-

pression qualities. Referring to Figure 11, we are able

to achieve maximum bandwidth in the mid to high

quality ranges (JPEG 60-95 range of Figure 13), and

>10 FPS on all compressed data sizes.
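The relationship between sustained bandwidth, frame size, and achievable frame rate discussed in this section can be made explicit with a small calculation. The sketch below is an idealized upper bound only: it ignores latency, protocol overhead, and rendering and compression time, and it assumes 1 MB = 10^6 bytes and 3 bytes per pixel for an uncompressed frame. The function names are hypothetical and are not part of WSRTI.

```cpp
#include <cassert>
#include <cstdint>

// Idealized upper bound on frames per second when each frame is sent as one
// message over a link with the given sustained bandwidth (bytes per second).
double max_fps(double bandwidth_bytes_per_s, std::uint64_t frame_bytes) {
    assert(frame_bytes > 0);
    return bandwidth_bytes_per_s / static_cast<double>(frame_bytes);
}

// Size of an uncompressed frame, assuming 3 bytes (24-bit RGB) per pixel.
std::uint64_t raw_frame_bytes(std::uint64_t width, std::uint64_t height) {
    return width * height * 3;
}

// Largest frame size (bytes) that still sustains a target frame rate.
double frame_budget_bytes(double bandwidth_bytes_per_s, double target_fps) {
    assert(target_fps > 0.0);
    return bandwidth_bytes_per_s / target_fps;
}
```

Under these assumptions, an uncompressed 1920x1080 frame occupies 6,220,800 bytes, so a 9 MB/s link could deliver at most about 1.4 such frames per second, whereas sustaining 10 frames per second corresponds to a budget of about 0.9 MB per frame. This illustrates why per-frame JPEG compression is needed to reach interactive frame rates over a wide area network.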

The size of binary events and RPC calls typically ranges from 32 to 128 bytes, potentially extending to a few kilobytes and above if a data packet is attached to an RPC call. As demonstrated by the data in Figures 11 and 12, WSRTI performs sufficiently well to stream user interaction events and RPC calls between the web client and a HPC application for real-time interaction. Whilst bandwidth may be low for small packets, the low latency means interaction is effective and responsive.

Figure 13: The relationship of JPEG compression quality factor and image byte-size for a typical High Definition (1920x1080 pixel) Splotch visualization image. Uncomp indicates the size of an uncompressed output.

For real-time data streaming, there are a few lim-

itations on the web-client. Particularly, the web

browser is less capable of processing large data (e.g.

in the range of multiple Gigabytes). In our tests, web

browsers typically have upper limits on single Web-

Socket messages starting around 256 MB, and will

struggle computationally with buffers of multiple gi-

gabytes. For larger data, it is recommended to per-

form computationally-intensive analysis on the HPC

system and send small analysis datasets along with

application status/interface and analysis results such

as graph plots and visualization images to the client.

8 SUMMARY AND

CONCLUSIONS

In this paper we introduce the WSRTI library, which

aims to support integration between traditional HPC

applications in languages such as C or C++, and the

modern day Web environment. In particular, we aim

to provide a lightweight solution to interact via the

web with HPC applications in real-time.

We summarise and classify the techniques available for remote connections to HPC systems, explaining the process and benefits of each. We summarise existing tools supporting remote interaction via the web, highlighting the lack of general purpose tools to assist HPC application developers in remotely interacting with their applications. We then detail the features of WSRTI and how they can be used to support such activities, demonstrating usage by instrumenting a batch scientific visualisation code for real-time interaction. We expect future work to include streamlining our utilities to reduce the burden on HPC developers hoping to extend their HPC application for web connectivity, particularly reducing the necessity for boilerplate code. One of the avenues we are exploring is the use of a wrapper API to allow WSRTI to be used in a similar manner to popular in-situ visualization libraries such as ParaView Catalyst and VisIt Libsim. We also intend to further optimise the communication routines, especially for data streaming, considering features such as automatic compression factor adjustment and tiled compression for multiplexed image streams. This should be combined with an investigation into the optimal approach to set up a WebSocket between HPC applications and Web clients from a security perspective, whilst retaining interactivity. Finally, we are also considering interfaces for other languages in use in HPC, such as Fortran.

ACKNOWLEDGMENT

We gratefully acknowledge Swinburne Centre for As-

trophysics and Supercomputing for hosting author

Tim Dykes while part of this work was completed.

We thank Lucio Mayer, University of Zurich, for pro-

viding the GigaERIS data for visualization. Thanks to

HPE & Cray UK for providing HPC resources. Mel

Krokos acknowledges support by NEANIAS, funded

by the EC Horizon 2020 research and innovation pro-

gramme under grant agreement No. 863448.

REFERENCES

Ahrens, J., Geveci, B., and Law, C. (2005). 36 - par-

aview: An end-user tool for large-data visualization.

In Hansen, C. D. and Johnson, C. R., editors, Visu-

alization Handbook, pages 717 – 731. Butterworth-

Heinemann, Burlington.

Ayachit, U., Bauer, A., Geveci, B., O’Leary, P., Moreland,

K., Fabian, N., and Mauldin, J. (2015). Paraview cat-

alyst: Enabling in situ data analysis and visualization.

In Proceedings of the First Workshop on In Situ Infras-

tructures for Enabling Extreme-Scale Analysis and Vi-

sualization, ISAV2015, pages 25–29, New York, NY,

USA. ACM.

Bernyk, M., Croton, D. J., Tonini, C., Hodkinson, L., Has-

san, A. H., Garel, T., Duffy, A. R., Mutch, S. J., Poole,

G. B., and Hegarty, S. (2016). The Theoretical As-

trophysical Observatory: Cloud-based Mock Galaxy

Catalogs. The Astrophysical Journal Supplement Se-

ries, 223:9.

Brooke, J., Coveney, P., Harting, J., Jha, S., Pickles, S.,

Pinning, R., and Porter, A. (2003). Computational

steering in realitygrid. In Cox, S., editor, Proceed-

ings of the UK e-Science All Hands Meeting 2003, 2-4

September, Nottingham, UK, pages 885–1/4. EPSRC.

Brown, D. K., Penkler, D. L., Musyoka, T. M., and Bishop, O. T. (2015). Jms: An open source workflow management system and web-based cluster front-end for high performance computing. PLOS ONE, 10(8):e0134273.

Canon, S., Wright, N. J., Muriki, K., Ramakrishnan, L.,

Shalf, J., Wasserman, H. J., Cholia, S., and Jackson,

K. R. (2010). Performance analysis of high perfor-

mance computing applications on the amazon web

services cloud. In 2010 IEEE Second International

Conference on Cloud Computing Technology and Sci-

ence(CLOUDCOM), volume 00, pages 159–168.

Childs, H. et al. (2011). Visit: An end-user tool for visualizing and analyzing very large data. In Proceedings of SciDAC.

Cholia, S., Skinner, D., and Boverhof, J. (2010). Newt: A

restful service for building high performance comput-

ing web applications. In 2010 Gateway Computing

Environments Workshop (GCE), pages 1–11.

Church, P., Goscinski, A., and Lefèvre, C. (2015). Exposing hpc and sequential applications as services through the development and deployment of a saas cloud. Future Generation Computer Systems, 43-44:24 – 37.

Cruz, F. A. and Martinasso, M. (2019). Firecrest: Restful

api on cray xc systems. In Proceedings of the Cray

User Group.

Dolag, K., Reinecke, M., Gheller, C., and Imboden, S.

(2008). Splotch: visualizing cosmological simula-

tions. New Journal of Physics, 10(12):125006.

Dykes, T., Gheller, C., Rivi, M., and Krokos, M. (2017).

Splotch: porting and optimizing for the xeon phi. The

International Journal of High Performance Comput-

ing Applications, 31(6):550–563.

Dykes, T., Hassan, A., Gheller, C., Croton, D., and Krokos,

M. (2018). Interactive 3D visualization for theoretical

virtual observatories. Monthly Notices of the Royal

Astronomical Society, 477:1495–1511.

Fette, I. and Melnikov, A. (2011). The websocket protocol.

RFC 6455, RFC Editor. http://www.rfc-editor.org/rfc/

rfc6455.txt.

Gesing, S., Dooley, R., Pierce, M., Krüger, J., Grunzke, R., Herres-Pawlis, S., and Hoffmann, A. (2015). Science gateways - leveraging modeling and simulations in hpc infrastructures via increased usability. In 2015 International Conference on High Performance Computing Simulation (HPCS), pages 19–26.

Goecks, J., Nekrutenko, A., Taylor, J., et al. (2010). Galaxy:

a comprehensive approach for supporting accessible,

reproducible, and transparent computational research

in the life sciences. Genome Biology, 11(8):R86.

Goodale, T., Allen, G., Lanfermann, G., Massó, J., Radke, T., Seidel, E., and Shalf, J. (2003). The Cactus framework and toolkit: Design and applications. In Vector and Parallel Processing – VECPAR'2002, 5th International Conference, Lecture Notes in Computer Science, Berlin. Springer.

Guedes, J., Callegari, S., Madau, P., and Mayer, L. (2011).

Forming Realistic Late-type Spirals in a ΛCDM Uni-

verse: The Eris Simulation. ApJ, 742(2):76.

Intel Corporation (2018). Intel vtune amplifier 2018 user’s

guide.

Jin, Z., Krokos, M., Rivi, M., Gheller, C., Dolag, K., and

Reinecke, M. (2010). High-performance astrophysical

visualization using Splotch. ArXiv e-prints.

Jupyter Hub Development Team (2018). Jupyterhub —

jupyterhub 0.9.1 documentation.

Mauch, V., Kunze, M., and Hillenbrand, M. (2013). High

performance cloud computing. Future Generation

Computer Systems, 29(6):1408 – 1416.

Miller, M. (2009). Cloud computing. Que Publishing.

Milligan, M. (2017). Interactive hpc gateways with jupyter

and jupyterhub. In Proceedings of the Practice and

Experience in Advanced Research Computing 2017 on

Sustainability, Success and Impact, PEARC17, pages

63:1–63:4, New York, NY, USA. ACM.

Nunes, D. S., Zhang, P., and Silva, J. S. (2015). A survey

on human-in-the-loop applications towards an inter-

net of all. IEEE Communications Surveys Tutorials,

17(2):944–965.

NVIDIA Corporation (2018a). Nsight eclipse edition cuda

toolkit documentation.

NVIDIA Corporation (2018b). Nvidia cuda toolkit profiler

user’s guide.

Nystrom, N. A., Levine, M. J., Roskies, R. Z., and Scott,

J. R. (2015). Bridges: A uniquely flexible hpc re-

source for new communities and data analytics. In

Proceedings of the 2015 XSEDE Conference: Sci-

entific Advancements Enabled by Enhanced Cyber-

infrastructure, XSEDE ’15, pages 30:1–30:8, New

York, NY, USA. ACM.

Patidar, S., Rane, D., and Jain, P. (2012). A survey paper

on cloud computing. In 2012 Second International

Conference on Advanced Computing Communication

Technologies, pages 394–398.

Ragagnin, A., Dolag, K., Biffi, V., Cadolle Bel, M., Ham-

mer, N. J., Krukau, A., Petkova, M., and Steinborn,

D. (2016). An online theoretical virtual observatory

for hydrodynamical, cosmological simulations. ArXiv

e-prints.

Rivi, M., Gheller, C., Dykes, T., Krokos, M., and Dolag, K. (2014). Gpu accelerated particle visualisation with splotch. Astronomy and Computing, 5:9–18.

Yoo, A. B., Jette, M. A., and Grondona, M. (2003). Slurm:

Simple linux utility for resource management. In Fei-

telson, D., Rudolph, L., and Schwiegelshohn, U., ed-

itors, Job Scheduling Strategies for Parallel Process-

ing, pages 44–60, Berlin, Heidelberg. Springer Berlin

Heidelberg.

Younge, A. J., Pedretti, K., Grant, R. E., and Brightwell,

R. (2017). A tale of two systems: Using contain-

ers to deploy hpc applications on supercomputers and

clouds. In 2017 IEEE International Conference on

Cloud Computing Technology and Science (Cloud-

Com), pages 74–81.

Zhou, Y., Weiss, R. M., McArthur, E., Sanchez, D., Yao,

X., Yuen, D., Knox, M. R., and Czech, W. W. (2013).

WebViz: A Web-Based Collaborative Interactive Vi-

sualization System for Large-Scale Data Sets, pages

587–606. Springer Berlin Heidelberg, Berlin, Heidel-

berg.

Zimmermann, H. (1980). Osi reference model - the

iso model of architecture for open systems intercon-

nection. IEEE Transactions on Communications,

28(4):425–432.
