Assessing COVID-19 Impacts on College Students via Automated

Processing of Free-form Text

Ravi Sharma

1

, Sri Divya Pagadala

2

, Pratool Bharti

2

, Sriram Chellappan

1

,

Trine Schmidt

3

and Raj Goyal

3

1

Department of Computer Science and Engineering, University of South Florida, Tampa, FL, U.S.A.

2

Department of Computer Science, Northern Illinois University, DeKalb, IL, U.S.A.

3

Ajivar LLC, Tarpon Springs, FL, U.S.A.

Keywords:

COVID-19, Emotion, Mental Health, Natural Language Processing, Semantic Search.

Abstract:

In this paper, we report experimental results on assessing the impact of COVID-19 on college students by

processing free-form texts generated by them. By free-form texts, we mean textual entries posted by college

students (enrolled in a four year US college) via an app specifically designed to assess and improve their mental

health. Using a dataset comprising of more than 9000 textual entries from 1451 students collected over four

months (split between pre and post COVID-19), and established NLP techniques, a) we assess how topics of

most interest to student change between pre and post COVID-19, and b) we assess the sentiments that students

exhibit in each topic between pre and post COVID-19. Our analysis reveals that topics like Education became

noticeably less important to students post COVID-19, while Health became much more trending. We also

found that across all topics, negative sentiment among students post COVID-19 was much higher compared

to pre-COVID-19. We expect our study to have an impact on policy-makers in higher education across several

spectra, including college administrators, teachers, parents, and mental health counselors.

1 INTRODUCTION

The COVID-19 pandemic has impacted people across

the globe irrespective of where they live, how old they

are, and what they do. From a physical health per-

spective, COVID-19 has affected senior citizens the

most. However, from a mental health perspective,

younger people (especially college students) have

been severely impacted due to many factors includ-

ing transitioning to new modes of instruction, loss

of friend circles, cancellation of classes and even en-

tire semesters, financial hardships, shrinking job mar-

kets, failing relationships and much more. Also, since

mental health services are harder to access now (due

to social distancing necessities), students are suffering

even more from this pandemic.

On a related note, various stakeholders in higher

education are also impacted because there is a need to

manage new forms of online instruction, understand

and react to the financial hardships of students, ensure

classrooms are socially isolated, generate facilities for

COVID-19 testing, and more. In overcoming these

challenges, there needs to be a mechanism to under-

stand student needs and expectations in real-time, as

students are the most important, while also being vul-

nerable entities in the higher education ecosystem.

One approach to get student perceptions in such

times is to send periodic surveys to them. But these

can be sent only a limited number of times to prevent

students from simply choosing not to respond. Sur-

vey responses also suffer from well-known recency

biases (Chiesi and Primi, 2009). Furthermore, sur-

veys cannot proactively capture student perceptions

and are sensitive more to the mindset of those creat-

ing the surveys, rather than students themselves.

The next viable option to glean student percep-

tions is mining social media. This is an area rich in

exploration today, and there are many studies on min-

ing social media content to understand student mental

health needs (Bagroy et al., 2017) (Tsakalidis et al.,

2018), relationship tendencies (Sin et al., 2019), aca-

demic performance (Paul et al., 2012), political par-

ticipation (Yang and Lee, 2020), (Kushin and Ya-

mamoto, 2010) and much more. But again, the core

issue with mining social media data in the context of

our problem in this paper stems from a) social media

data being noisy, meaning that there is so much ir-

relevant information out there, which makes it much

Sharma, R., Pagadala, S., Bharti, P., Chellappan, S., Schmidt, T. and Goyal, R.

Assessing COVID-19 Impacts on College Students via Automated Processing of Free-form Text.

DOI: 10.5220/0010249404590466

In Proceedings of the 14th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2021) - Volume 5: HEALTHINF, pages 459-466

ISBN: 978-989-758-490-9

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

459

harder to filter out and extract only ones relevant to

any particular scenario; b) the fact that there are so

many social media sites that are popular today, and so

it becomes increasingly harder to make conclusions

by processing data from only one or few of them. Ad-

ditionally, these sites are very hard for developers to

crawl massive data due to privacy concerns.

In this paper, we report the results of an experi-

ment we conducted at a four-year college in the US

to assess topics and sentiments of students before the

COVID-19 pandemic began and after, via processing

free-form texts collected via a smartphone app specif-

ically designed for students to express their moods,

feelings, opinions via the app. With this dataset, we

wanted to answer, broadly speaking, two questions

presented below:

1. Before COVID-19, what are the topics that stu-

dents most posted about, and how did the topics

change post-COVID-19?

2. Across these topics and timelines, how did the

sentiment of students change between positive,

neutral, and negative?

To answer the above questions, we process our dataset

using several standard and well-established tech-

niques in Natural Language Processing. Our analysis

that compares the post-COVID-19 timeframe with the

pre-COVID-19 timeframe revealed Health became a

highly trending topic for our subjects which increased

sharply post COVID-19 timeframe while Housing,

Finance, and Relationships experiencing a marginal

increase. Also, Education decreased significantly dur-

ing the post-COVID-19 timeframe. From the perspec-

tive of emotion, universally across each topic, there

was a significant increase in negative sentiment in the

post-COVID-19 timeframe.

To the best of our knowledge, this study is unique

in processing free-form text generated via a custom

made app (that was designed to glean mental health

insights of college students) to infer trending topics

and sentiments in the COVID-19 timeframe. Towards

the end of the paper, we present how our work enables

policy-makers in higher education to cater to student

mental health and other needs in these unique times.

Additionally, the data will also help the app devel-

opers to optimize the AI to deliver individualized re-

sources that help the specific student in their time of

need based on their sentiment, etc.

The rest of the paper is organized as follows. Sec-

tion 2 presents related work in the literature. Section 3

explains our dataset. Section 4 details our underlying

research methodology. Section 5 presents our results.

Finally, Section 6 presents a related discussion, con-

clusions, and future work.

2 RELATED WORK

Since December 2019, when the COVID-19 pan-

demic began, there has been an urgent interest across

the globe to understand its impact from many per-

spectives. In particular, the genetic aspects of the dis-

ease, the epidemiological aspects, the impact of con-

tact tracing, the impact on economies, and political

aspects across the globe are all actively being investi-

gated today. In this section though, we highlight cur-

rent research in the realm of COVID-19 that is most

relevant to our work in this paper, namely the pro-

cessing of texts over the Internet concerning the pan-

demic.

In (Jelodar et al., 2020), researchers applied topic

modeling on COVID-19–related Reddit comments

to extract meaningful categories from public opin-

ions. They then designed an LSTM recurrent neu-

ral network (Hochreiter and Schmidhuber, 1997) for

polarity and sentiment classification. They used

sentiStrength (Thelwall et al., 2012) for sentiment

detection and average out the sentiment of COVID-

19 related comments obtained from 10 subreddits fo-

rums. They labeled the data as per sentiStrength

scores and achieved an 81.15% classification accu-

racy. In (Oyebode et al., 2020), comment data

obtained from social media is processed and then

context-aware themes are extracted. For each theme,

the sentiment is extracted using VADER lexicon-

based algorithm (Gilbert and Hutto, 2014) and only

themes having positive or negative scores are kept,

neutral themes are discarded. Later, these themes are

categorized into 34 negative and 20 positive broader

themes using human reviewers. These themes span

different areas related to health, psychological, and

social issues and showed the impact of COVID-19

negatively. The authors also recommend possible so-

lutions for negative themes. In another paper (Kabir

and Madria, 2020), the authors also mine Twitter to

use collected tweets as a repository to analyze infor-

mation shared by the people to visualize topics of in-

terest, and the emotions of common citizens in the

USA as the pandemic is unfolding.

Our work in this paper broadly falls in the above

categories. However, there are compelling differ-

ences. First, in existing research on processing social

media data, the trend is to ignore the demographics of

subjects, since demographic information is very hard

to find from social media data, and even if a person

does post demographic information, there is little ev-

idence to check if the posted information is correct.

So, if the goal is to understand the topic and emo-

tions of a particular demographic only, then mining

social media data can be problematic. In this paper,

HEALTHINF 2021 - 14th International Conference on Health Informatics

460

our goal is to only look at college students as they

face the pandemic, and hence we did not want to mine

social media data. Instead, we mine free-form texts

that students post using an app, that is specifically de-

signed to monitor and enhance student mental health.

As such, the reliability of our data, as it pertains to

our demographic is significantly high. Secondly, with

social media data, there is a massive scale of irrele-

vant information, and during pre-processing, there is

always a danger of discarding contextually relevant

information and/or incorporating contextually irrele-

vant information. Both of these things are avoided in

our data since again, the context of the app is pro-

moting the mental health of students. Hence we be-

lieve that our dataset and corresponding results in this

paper, and unique and contextually very relevant, in

terms of gleaning insights on how college students (in

the USA) are responding to the COVID-19 pandemic.

3 DATA DESCRIPTION AND

CLEANING

3.1 Data Description

Data (i.e., free-form text) for this study was collected

in partnership with Ajivar LLC. (Ajivar, 2019), a

company that designs smartphone technologies to as-

sess and enhance the Emotional Intelligence (EI) of

students, so that they are more resilient to life’s chal-

lenges. At the core of Ajivar’s capabilities is an en-

gaging smartphone app that students can download,

which enables them to compose free-form texts in En-

glish to the app, either on their own or in response to

a request via the app. During interaction with Ajivar,

students share anything from their day to day life ex-

periences, problems, happy/sad moments, situations

revolving around their academic and personal lives,

and so on. These texts are then processed by Ajivar’s

AI, in an automated manner, so that real-time empa-

thetic responses are given to students to improve their

EI. This service while being important at any time to

college students becomes even more important during

this pandemic time. As of today, more than 9000 stu-

dents, at a four-year US college are using Ajivar’s app

that is available on both iOS and Android.

For the purposes of this study, 9090 free-form

texts (all in English) were collected from 1451 stu-

dents between February 1, 2020 and April 30, 2020.

This timeframe is ideal for this study because March

15 was about the time, the COVID-19 pandemic was

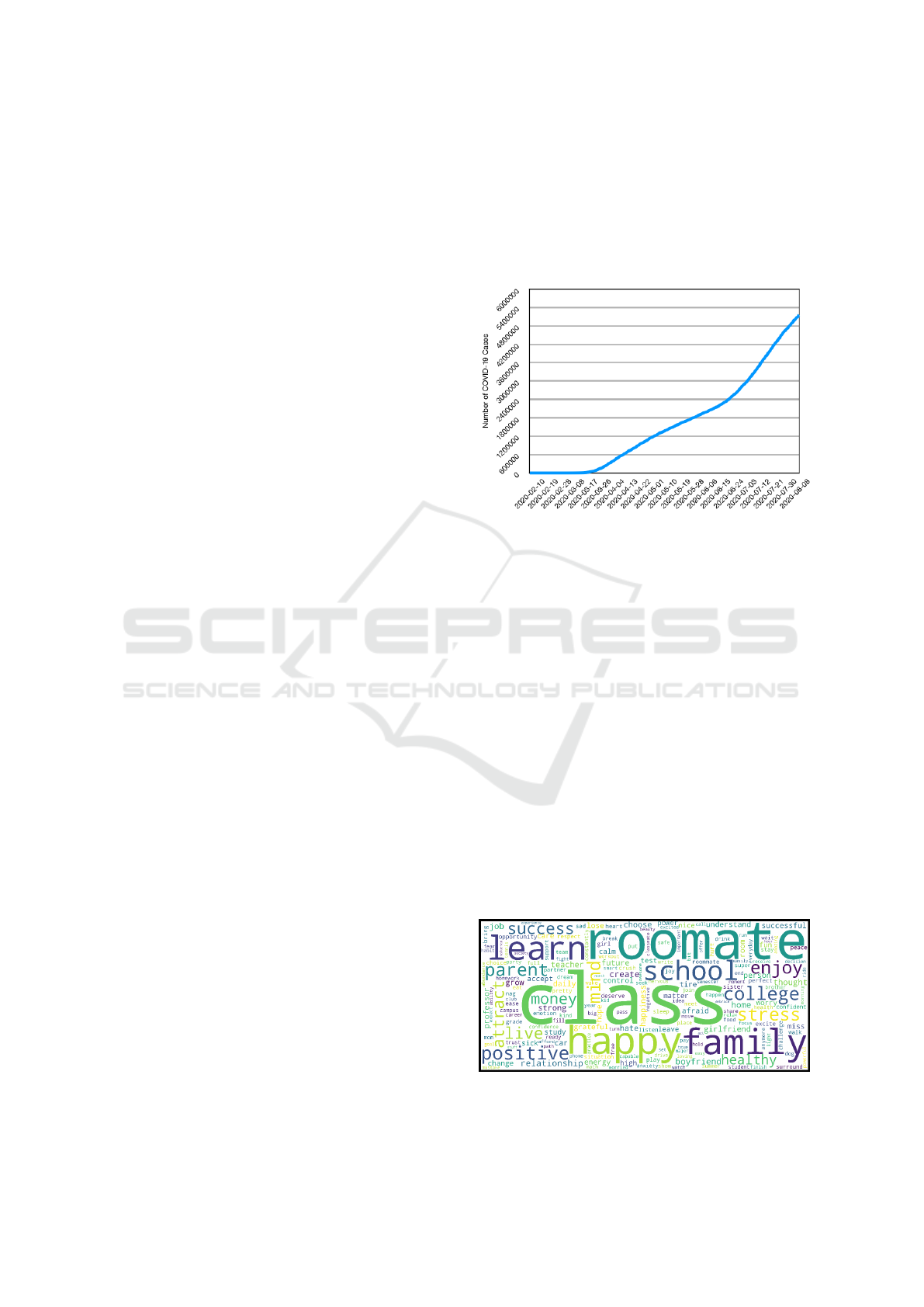

considered serious enough in the USA (as seen in Fig.

1), that necessitated significant changes in the lives of

many in the US (and especially college students). We

had an equal number of texts before and after March

15 in our dataset to keep it balanced pre and post

COVID-19. Some examples of texts in our dataset

are presented in Table 1 that we used in this study.

All personal identifiers were removed manually be-

fore processing the texts.

Figure 1: COVID-19 spread in US in 2020.

3.2 Data Cleaning

Due to the free-form nature of data, it can be noisy

and requires cleansing before further processing. To

do so, we processed texts in our dataset (both pre and

post-COVID-19) to remove sources of noise includ-

ing stopwords and generic terms using the popular

Natural Language Toolkit (NLTK) (Loper and Bird,

2002). Due to the nature of free-form texts, we ex-

pected some un-intelligible words in our dataset like

‘gjhgdjfg’, ‘jshdfkj’, etc. However, we did not en-

counter any such words. Typos were corrected using

publicly available spellchecker software.

We then performed lemmatization with part of

speech (POS) tags to convert the remaining (and rele-

vant) words to their root form for standardization dur-

ing processing. For instance, Lemmatization step will

convert “booking” to book (since booking is a verb)

but will convert “string” to “string” again (since string

is a noun).

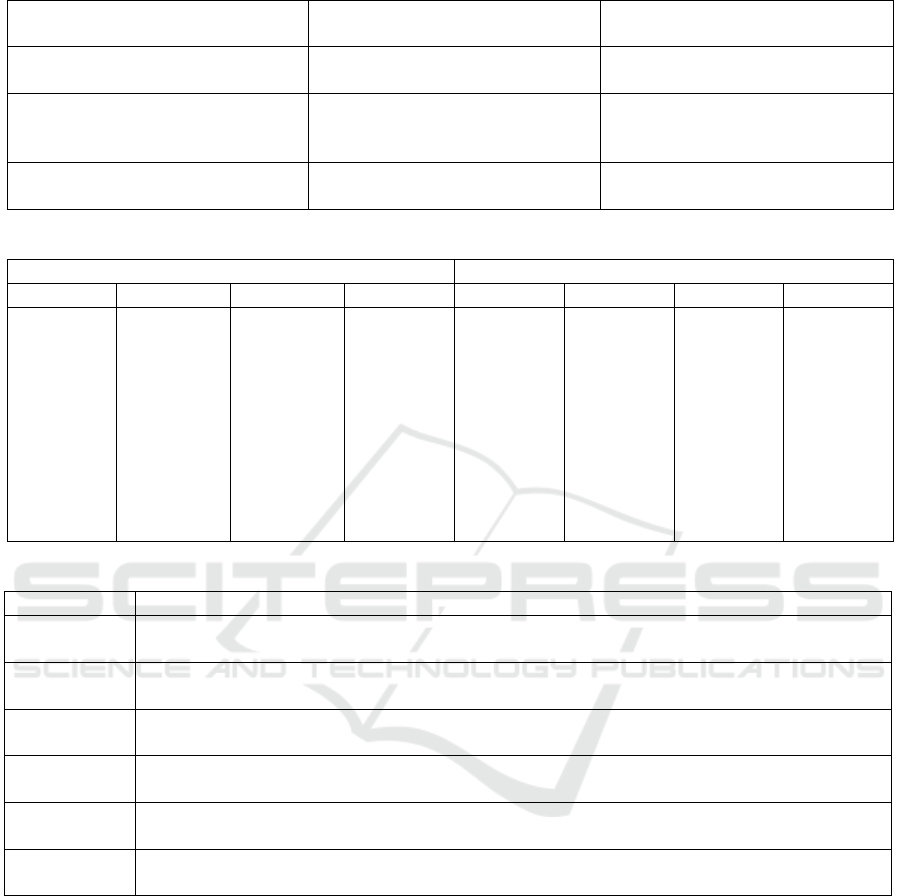

Figure 2: Word-Cloud for pre-COVID-19 Data.

Finally, for illustration purposes, table 1 shows the

Assessing COVID-19 Impacts on College Students via Automated Processing of Free-form Text

461

Figure 3: Word-Cloud for post-COVID-19 Data.

result of the data cleaning process. In Fig. 2 and

Fig. 3, we show word clouds for the cleaned data

in both pre and post COVID-19. We can clearly see

how words like “class”, “roommate”, “learn”, “enjoy”

and “family” were dominant in the pre-COVID-19

phase, compared to words like “quarantine”, “covid”,

“sick”, “hope” and “pandemic” that were dominant

post-COVID-19. Presenting these results in a more

formalized context from the perspective of identify-

ing the most critical topics for students and their as-

sociated sentiments as a result of the pandemic is our

end goal and is presented next in detail.

4 OUR METHODOLOGY FOR

TOPIC CATEGORIZATION

AND SENTIMENT ANALYSIS

In this section, we present the overall methodology

for automating the topic categorization and sentiment

determination from the perspective of COVID-19 and

college students. Recall the two major questions we

want to answer in this study: 1) Before COVID-19,

what are the topics that students most posted about,

and how did these topics change post-COVID-19? 2)

Across these topics, how did the sentiment of students

change between positive, neutral, and negative?

4.1 Determining Topics of Interest via

Contextual and Semantic

Categorization

To answer the first question, we perform two criti-

cal steps - Contextual Categorization and Semantic

Categorization. Step 1 is to use our intuition, do-

main expertise of counselors at Ajivar, and the fac-

ulty/student authors of this paper to categorize the

broad topics of interest to college students as it per-

tains to this pandemic. However, instead of merely

relying on our intuition, we also delved deeper into

the output of the Data Cleanup process (presented ear-

lier), namely the root words of students’ free-form

texts. Table 2 presents the top 20 root words in both

the pre and post COVID-19 phases after lemmati-

zation. Combining our intuitions, categories men-

tioned in related works (AlKandari, 2020) (Doygun

and Gulec, 2012)(Brooker et al., 2017)(Head and

Eisenberg, 2011) and coupled with the results gleaned

from student posts in Table 2, we identified a total of

six topics along with their corresponding vocabulary

presented in Table 3 that we believe are most relevant

to college students. This completes Step 1 - the iden-

tification of topics of interest to students.

The next step towards answering Question 1 in-

volves building an AI model to semantically map a

student’s post to a topic. To do this, we need to cap-

ture the semantic textual similarity between the stu-

dents’ texts and topics. Transformers (Vaswani et al.,

2017) based models have been a popular choice for

performing various NLP tasks including semantic tex-

tual similarity in recent years due to their improved

performance over existing methods.

This had lead to the development of pre-trained

systems like Bidirectional Encoder Representations

from Transformers (BERT) (Devlin et al., 2018),

where pre-trained representations capture a large

spectrum of contexts and generate embeddings of

each word based on the underlying context in the

sentence. RoBERTa (Liu et al., 2019) is an im-

provement to the BERT model using larger training

data with longer sequences, and dynamic masking.

It has achieved achieve state-of-the-art performance

on Multi-Genre Natural Language Inference Corpus

(MNLI) (Williams et al., 2017), Question-answering

NLI (QNLI) (Wang et al., 2018), Semantic Textual

Similarity Benchmark (STS-B) (Cer et al., 2017),

ReAding Comprehension dataset from Examination

(RACE) (Lai et al., 2017) and GLUE (Wang et al.,

2018).

Though both BERT and RoBERTa are recent and

state of the art, the sentences to be checked for

similarity before feeding into the network resulting

in computational overhead. This can lead to scal-

ing issues later on. To leverage state-of-the-art per-

formance by BERT/RoBERTa but avoiding compu-

tational overhead, we leverage an extremely robust

and more recent neural network architecture called

Sentence-BERT (SBERT) (Reimers and Gurevych,

2019).

The core idea of SBERT is the notion of Sentence-

level Embedding via pre-trained Transformers-based

models (e.g. BERT and RoBERTa). The notion

of Sentence-level embedding is to embed sentences

into vector representations that retain the context of

each word within the sentence (instead of the actual

word), and have been a popular choice for seman-

HEALTHINF 2021 - 14th International Conference on Health Informatics

462

Table 1: Data Cleaning Process.

Students’ Texts

Removal of Stopwords and

Generic Terms

Final Text After

Lemmatization

Freaking out my grades ever since

online learning.

[‘Freaking’, ‘grades’, ‘online’,

‘learning’]

[‘freak’, ‘grade’, ‘online’,

‘learning’]

Im constantly thinking about

domestic abuse victims who have

to stay in their homes now

[‘constantly’, ‘thinking’,

‘domestic’, ‘abuse’, ‘victims’,

‘stay’, ‘homes’]

[‘constantly’, ‘think’, ‘domestic’,

‘abuse’, ‘victim’, ‘stay’, ‘home’]

My sleep schedule is off track

because im inside all day.

[‘sleep’, ‘schedule’, ‘track’,

‘inside’]

[‘sleep’, ‘schedule’, ‘track’,

‘inside’]

Table 2: Top 20 Frequent Words for Pre and Post COVID-19.

Pre-COVID-19 Post-COVID-19

Word Frequency Word Frequency Word Frequency Word Frequency

class 90 roomate 71 quarantine 277 covid 210

happy 70 family 69 hope 147 home 145

learn 62 school 56 family 145 pandemic 128

positive 53 college 52 miss 126 class 125

mind 50 stress 49 school 116 virus 105

parent 49 enjoy 48 happy 100 worry 99

live 41 attract 41 sick 99 parent 98

success 40 money 40 sad 96 stay 92

relationship 39 healthy 39 house 84 learn 81

create 37 boyfriend 37 understand 80 job 78

Table 3: Topics and Vocabulary for categorization and Semantic Similarity.

Topics Vocabulary

Education

grade, lecture, professor, exam, attendance, homework, quiz, assignment, syllabus, gpa,

marks, college, school, study,

Finance

tuition, job, money, rent, debt, grocery, fees, wage, dollars, shopping, car, budget,

scholarship, cash, salary, bills, economy, poor, rich, income

Health

health, disease, illness, nutrition, vaccine, medicine, diagnosis, sickness, eat, food,

disability, hospital, exercise, gym, covid, pandemic, quarantine, lockdown, virus, corona

Family

mom, dad, grandma, brother, sister, grandparents, aunt, uncle, sibling, son, daughter, kid,

wife, relative, family, cousin, stepfather, stepmother, husband, home, homesick, fiance

Relationships

boyfriend, girlfriend, bf, gf, crush, friend, buddy, dude, ex, stranger, people, partner,

associate, pal, mate

Housing

hostel, apartment, roommate, house, dorm, cloths, room, bed, window, bathroom, fan,

sound

tic textual similarity over recent years. For instance,

robust, semantic embeddings for two sentences like

“We are very happy now.” and “Our group is enjoy-

ing presently.” will be very similar, even though they

contain a completely different set of words. Need-

less to say, such, robust embedding is critical for our

problem, since two free-form texts from students may

have words completely different from each other, but

still could refer to the same topic, for example, sen-

tences like “Have a homework to submit tonight.” and

“Working on an assignment due later today”, despite

having no common words must have similar semantic

embeddings since both refer to the topic of Education.

How to do this effectively is our challenge.

For this challenge, we use SBERT that improves

upon BERT and RoBERTa, by optimizing seman-

tic textual similarity using sentence-level embedding

from siamese and triplet network structure (Schroff

et al., 2015). This allows the model to derive fixed-

size vectors for input sentences, and are much more

efficient to scale. In our study, posts corresponding

to pre and post COVID-19, along with the vocabu-

lary for each Topic identified in Table 3 was fed into

the SBERT network to derive sentence-embeddings.

Once we derive semantic vector embeddings, we

compare pre and post text embeddings with embed-

Assessing COVID-19 Impacts on College Students via Automated Processing of Free-form Text

463

dings generated from words for each Topic in Table

3 using the notion of Cosine similarity (Li and Han,

2013). The output of this step essentially gives a no-

tion of similarity between the two embeddings com-

pared. For every text, the topic which it belongs to

was identified as the one for whose embedding, the

similarity with the embedding of the corresponding

text was identified as the highest.

4.2 Sentiment Analysis of Topics Pre

and Post COVID-19

We now are ready to detail our method to glean the

sentiment of a post both pre and post COVID-19.

For a comprehensive analysis, multiple states of art

NLP models were used for validity analysis, namely,

VADER and TextBlob:

4.2.1 VADER

Valence Aware Dictionary and sEntiment Reasoner

(VADER) (Gilbert and Hutto, 2014) is a lexicon and

rule-based sentiment analysis tool. It does not re-

quire any training data and is constructed from a gen-

eralized, valence-based human-curated gold standard

sentiment. It is fast and gives positive, negative, neu-

tral and an overall sentiment score. It is especially

effective for more casual posts (as in free-form text)

and is especially useful for mining sentiments of so-

cial media posts, YouTube comments, etc.

4.2.2 TextBlob

TextBlob (Loria, 2018) is a Python-based open-

source library and returns polarity and subjectivity

of a text input. The polarity is in the float range

[−1, +1] with -1 being highly negative and +1 be-

ing highly positive sentiment. Subjectivity lies in the

range [0, 1] where 0 being objective and 1 being sub-

jective. The way it works is that TextBlob goes along

finding words and phrases it can assign polarity and

subjectivity to based on predefined rules, and it aver-

ages them all together for longer text. It is especially

useful for more formal texts.

5 RESULTS

In this section, we discuss the results of topic distri-

bution identification and sentiment analysis in the pre

vs post-COVID-19 dataset.

5.1 Topic Categorization and

Distribution

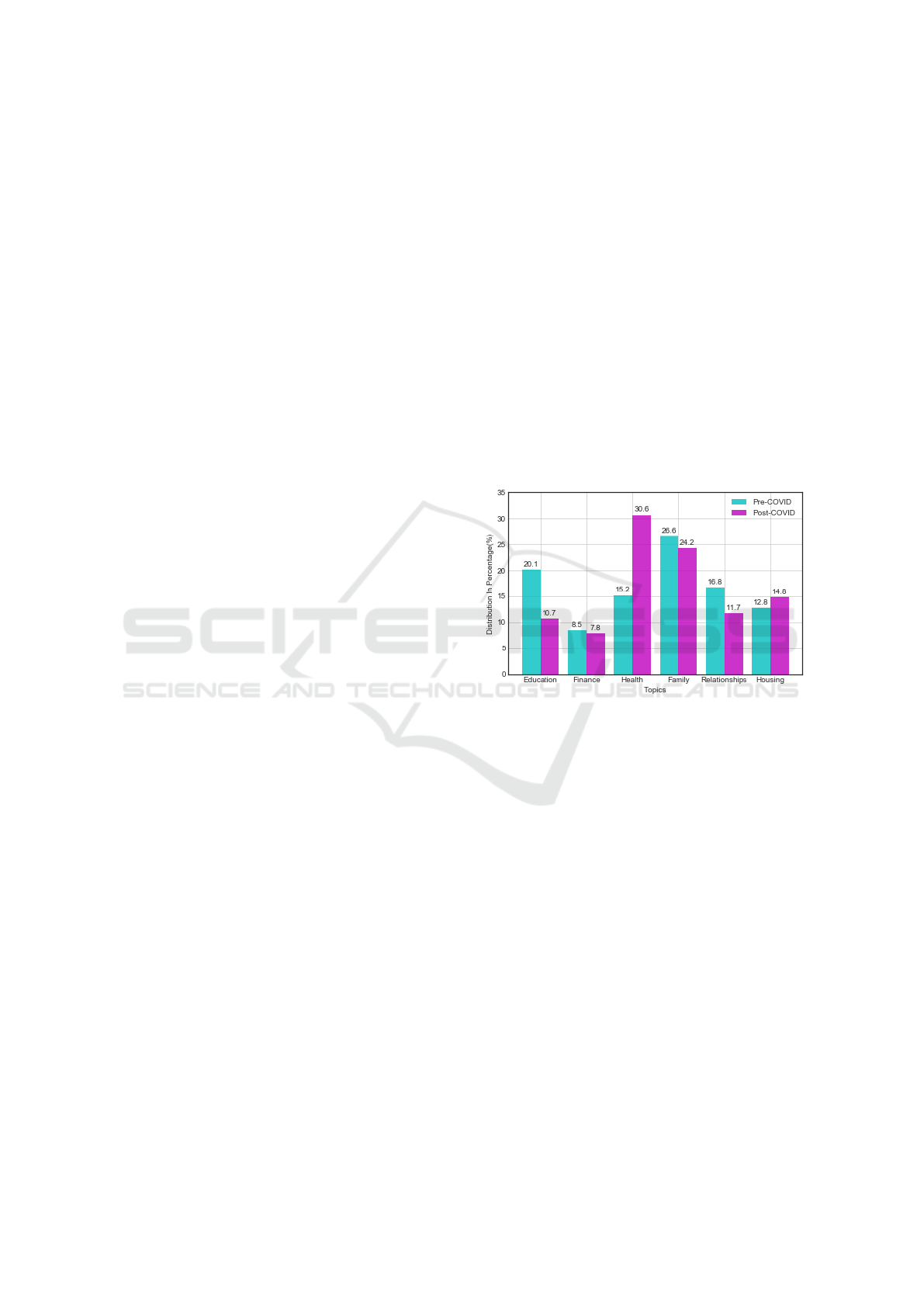

Fig. 4 presents our results of topics of importance to

students before and after COVID-19. We see from

the figure that there is a significant shift in the im-

portance of these topics before and after COVID-

19. We see that “Education” had a steep drop as a

topic in students’ posts after COVID-19 compared

to before COVID-19. After COVID-19, it had the

second-lowest importance (after Finance) among stu-

dents. The “Health” topic has the biggest jump among

student concerns in post-COVID-19 timeframe com-

pared to the pre-COVID-19 timeframe. There was not

much change in the “Family” topic, but we do see an

increase in “Housing” and a decrease in “Relation-

ship” topics in the post-COVID-19 timeframe com-

pared to the pre-COVID-19 timeframe.

Figure 4: Topic Distribution Comparison Between Pre and

Post COVID-19.

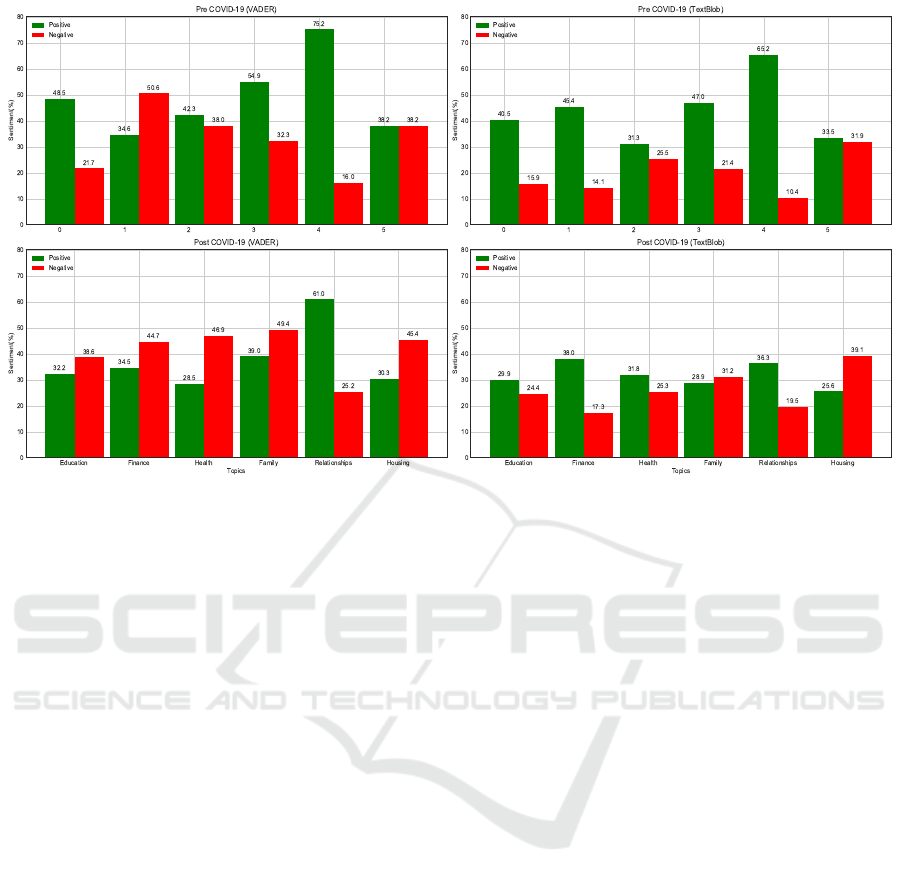

5.2 Sentiment Analysis among Topics

While the topics of interest to students are impor-

tant for decision-makers in academic environments,

it is important to also glean what is the sentiment

expressed by students on these topics, and how they

change over time. For each of the two sentiment anal-

ysis models we utilized, the corresponding sentiments

for the topics are presented in Fig. 5. The results from

left to right are for VADER and TextBlob. At the top,

is the pre-COVID-19 timeframe, and at the bottom

is the post-COVID-19 timeframe. In general, overall

sentiment values saw a decrease in positive sentiment

and an increase in negative sentiment.

6 DISCUSSION & CONCLUSIONS

COVID-19 is still adversely affecting the lives of col-

lege students, in the form of numerous uncertainties

HEALTHINF 2021 - 14th International Conference on Health Informatics

464

Figure 5: Sentiment across Topics Pre and Post COVID-19.

from the perspective of health, finance, career, re-

lationships, and more, that has taken a toll on their

mental health. It is important to understand their is-

sues at the micro and macro levels to provide an ef-

fective and scalable solution. Our paper makes im-

portant early contributions to this space in terms of

gleaning insights on student needs from a custom app,

designed and deployed to detect and address student

mental health concerns. For instance, our finding that

“Education” is not a topic of critical interest to stu-

dents posts COVID-19 should raise concerns on ad-

ministrators to ensure that students are motivated to

learn and that the quality of instruction must be given

priority. Our findings related to how topics of inter-

est changed post-pandemic are also important for ad-

ministrators to know, to channel their resources in the

appropriate directions.

Also, the majority of declination of categories

from pre to post-covid-19 such as “Education”, “Re-

lationships”, and “Family” is due to the sharp increase

in the “Health” category which further emphasis the

fact that medical concerns and other related topics

were affecting the conversations of the students. Al-

though our findings are critical to understanding the

college students’ areas of concern, it is important to

mention the limitations and underline assumptions of

our study.

Firstly, all the participants in the study belong to

the same university and hence can have similarities

in their context to some degree. This also limits the

study to issues related to geographical demographics

of one type. Secondly, the free-texts provided by the

participant were not categorized by the age groups

and were merged, which may overlook the issues spe-

cific to graduate vs undergraduate students. Next,

the population considered in our study were over-

lapped for the two-time frames meaning pre and post-

COVID-19 data can be from the same or different stu-

dents. This restricts us from conducting any statistical

studies on the samples as the style and structure of the

texts can vary from person to person and for a gener-

alized distribution for categories and sentiments, clear

separation between users is needed. We would like to

mitigate these issues by collecting more data across

universities and demographics.

In conclusion, authorities in universities have out-

reach programs, counselors, and other traditional

measures to ease out the mental distress among stu-

dents. While these are adaptable for the academic

persona in general, it can be ineffective with scaling

student population, lack of counselors, hesitant target

subjects, etc. An effective measure to merge with the

traditional methods is to curb these problems and au-

tomate the process. Methods to provide students with

comfortable sharing and categorize them in defined

classes for easy management can not only solve scal-

ing issues of growing student mental health cases but

also tackles the lack of counselors’ problem. Though

our study is not exhaustive to all students and should

not be taken as a systematic assessment, we believe

that can be a preliminary step towards providing in-

sight to policymakers, stakeholders, and administra-

tive figures to develop strategies for students’ needs

during the pandemic.

Assessing COVID-19 Impacts on College Students via Automated Processing of Free-form Text

465

REFERENCES

Ajivar (2019). Life coach powered by artificial intelligence,

https://ajivar.com.

AlKandari, N. Y. (2020). Students anxiety experiences in

higher education institutions. In Anxiety Disorders.

IntechOpen.

Bagroy, S., Kumaraguru, P., and De Choudhury, M. (2017).

A social media based index of mental well-being in

college campuses. In Proceedings of the 2017 CHI

Conference on Human factors in Computing Systems,

pages 1634–1646.

Brooker, A., Brooker, S., and Lawrence, J. (2017). First

year students’ perceptions of their difficulties. Student

Success, 8(1):49–63.

Cer, D., Diab, M., Agirre, E., Lopez-Gazpio, I., and Spe-

cia, L. (2017). Semeval-2017 task 1: Semantic tex-

tual similarity-multilingual and cross-lingual focused

evaluation. arXiv preprint arXiv:1708.00055.

Chiesi, F. and Primi, C. (2009). Recency effects in primary-

age children and college students. International Elec-

tronic Journal of Mathematics Education, 4(3):259–

279.

Devlin, J., Chang, M.-W., Lee, K., and Toutanova, K.

(2018). Bert: Pre-training of deep bidirectional trans-

formers for language understanding. arXiv preprint

arXiv:1810.04805.

Doygun, O. and Gulec, S. (2012). The problems faced

by university students and proposals for solution.

Procedia-Social and Behavioral Sciences, 47:1115–

1123.

Gilbert, C. and Hutto, E. (2014). Vader: A parsimo-

nious rule-based model for sentiment analysis of so-

cial media text. In Eighth International Confer-

ence on Weblogs and Social Media (ICWSM-14).

Available at (20/04/16) http://comp. social. gatech.

edu/papers/icwsm14. vader. hutto. pdf, volume 81,

page 82.

Head, A. and Eisenberg, M. (2011). How college students

use the web to conduct everyday life research. First

Monday, 16(4).

Hochreiter, S. and Schmidhuber, J. (1997). Long short-term

memory. Neural computation, 9(8):1735–1780.

Jelodar, H., Wang, Y., Orji, R., and Huang, H. (2020). Deep

sentiment classification and topic discovery on novel

coronavirus or covid-19 online discussions: Nlp us-

ing lstm recurrent neural network approach. arXiv

preprint arXiv:2004.11695.

Kabir, M. and Madria, S. (2020). Coronavis: A

real-time covid-19 tweets analyzer. arXiv preprint

arXiv:2004.13932.

Kushin, M. J. and Yamamoto, M. (2010). Did social me-

dia really matter? college students’ use of online me-

dia and political decision making in the 2008 election.

Mass Communication and Society, 13(5):608–630.

Lai, G., Xie, Q., Liu, H., Yang, Y., and Hovy, E. (2017).

Race: Large-scale reading comprehension dataset

from examinations. arXiv preprint arXiv:1704.04683.

Li, B. and Han, L. (2013). Distance weighted cosine simi-

larity measure for text classification. In International

Conference on Intelligent Data Engineering and Au-

tomated Learning, pages 611–618. Springer.

Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D.,

Levy, O., Lewis, M., Zettlemoyer, L., and Stoyanov,

V. (2019). Roberta: A robustly optimized bert pre-

training approach. arXiv preprint arXiv:1907.11692.

Loper, E. and Bird, S. (2002). Nltk: the natural language

toolkit. arXiv preprint cs/0205028.

Loria, S. (2018). textblob documentation. Release 0.15, 2.

Oyebode, O., Ndulue, C., Adib, A., Mulchandani, D., Su-

ruliraj, B., Orji, F. A., Chambers, C., Meier, S., and

Orji, R. (2020). Health, psychosocial, and social is-

sues emanating from covid-19 pandemic based on so-

cial media comments using natural language process-

ing. arXiv preprint arXiv:2007.12144.

Paul, J. A., Baker, H. M., and Cochran, J. D. (2012).

Effect of online social networking on student aca-

demic performance. Computers in Human Behavior,

28(6):2117–2127.

Reimers, N. and Gurevych, I. (2019). Sentence-bert: Sen-

tence embeddings using siamese bert-networks. arXiv

preprint arXiv:1908.10084.

Schroff, F., Kalenichenko, D., and Philbin, J. (2015).

Facenet: A unified embedding for face recognition

and clustering. In Proceedings of the IEEE conference

on computer vision and pattern recognition, pages

815–823.

Sin, M., Pyeon, H., Kim, H., and Moon, J. (2019). Inter-

personal relationship, body image, academic achieve-

ment according to sns use time of college students.

The Journal of the Convergence on Culture Technol-

ogy, 5(1):257–264.

Thelwall, M., Buckley, K., and Paltoglou, G. (2012). Sen-

timent strength detection for the social web. Journal

of the American Society for Information Science and

Technology, 63(1):163–173.

Tsakalidis, A., Liakata, M., Damoulas, T., and Cristea, A. I.

(2018). Can we assess mental health through social

media and smart devices? addressing bias in method-

ology and evaluation. In Joint European Conference

on Machine Learning and Knowledge Discovery in

Databases, pages 407–423. Springer.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones,

L., Gomez, A. N., Kaiser, Ł., and Polosukhin, I.

(2017). Attention is all you need. In Advances in

neural information processing systems, pages 5998–

6008.

Wang, A., Singh, A., Michael, J., Hill, F., Levy, O., and

Bowman, S. R. (2018). Glue: A multi-task bench-

mark and analysis platform for natural language un-

derstanding. arXiv preprint arXiv:1804.07461.

Williams, A., Nangia, N., and Bowman, S. R. (2017).

A broad-coverage challenge corpus for sentence

understanding through inference. arXiv preprint

arXiv:1704.05426.

Yang, C.-c. and Lee, Y. (2020). Interactants and activities

on facebook, instagram, and twitter: Associations be-

tween social media use and social adjustment to col-

lege. Applied Developmental Science, 24(1):62–78.

HEALTHINF 2021 - 14th International Conference on Health Informatics

466