A Probabilistic Theory of Abductive Reasoning

Nicolas A. Espinosa Dice

1 a

, Megan L. Kaye

1 b

, Hana Ahmed

1,2 c

and George D. Monta

˜

nez

1 d

1

AMISTAD Lab, Department of Computer Science, Harvey Mudd College, Claremont, CA, U.S.A.

2

Scripps College, Claremont, CA, U.S.A.

Keywords:

Abductive Logic, Machine Learning, Creative Abduction, Creativity, Graphical Model, Bayesian Network,

Probabilistic Abduction.

Abstract:

We present an abductive search strategy that integrates creative abduction and probabilistic reasoning to pro-

duce plausible explanations for unexplained observations. Using a graphical model representation of abduc-

tive search, we introduce a heuristic approach to hypothesis generation, comparison, and selection. To identify

creative and plausible explanations, we propose 1) applying novel structural similarity metrics to a search for

simple explanations, and 2) optimizing for the probability of a hypothesis’ occurrence given known observa-

tions.

1 INTRODUCTION

Imagine that one morning you step outside to find that

the grass is wet, ruining your new shoes. Could rain

have caused the wet grass? However, you cannot re-

call whether yesterday was cloudy. How likely is it to

have rained last night if there were no clouds?

Now imagine an alternative scenario, in which you

are a medical student studying the causes and symp-

toms of tuberculosis. You learn that if a patient has

an abnormal x-ray, there are several possible factors,

including lung cancer and tuberculosis. How can you

determine which diagnosis to give in light of the x-

ray results? What relevant information is available to

help you decide a best explanation?

For a final example, imagine arriving at work to

find that information on your company’s database has

been corrupted. Your boss is responsible for fixing the

deficiency that allowed this data corruption to occur.

Overwhelmed by the vast number of possible expla-

nations for the data corruption, your boss tasks you,

a database engineer, with identifying plausible causes

of the issue.

These tasks require abductive inference: creating

and identifying hypotheses (causes) that are the most

a

https://orcid.org/0000-0001-7802-6196

b

https://orcid.org/0000-0001-5422-8244

c

https://orcid.org/0000-0003-4532-0334

d

https://orcid.org/0000-0002-1333-4611

promising explanations for the observed effects. You

can make use of background information, prior oc-

currences similar in nature to this one, where the ef-

fects and causes were successfully identified. How-

ever, you also acknowledge the possibility of unfa-

miliar causes, which are beyond the scope of prior

information and your current knowledge.

For example, in our database problem, corrupted

data is the unexplained observed effect. Your primary

task reflects that of abductive inference, which is a

strategy for discovering hypotheses that are worthy of

further investigation, which Schurz refers to as the

strategical function of abductive inference (Schurz,

2008). What constitutes further investigation de-

pends on the application of abductive inference; it

can be broadly defined as any work that provides

more information about the causes or effects. Addi-

tionally, the search space—the space of all available

hypotheses—can be significantly large, necessitating

an effective search strategy for finding promising hy-

potheses within reasonable time and computational

costs.

Despite significant advances in machine learning

research over the last three decades, traditional super-

vised learning models are ill-equipped to handle the

aforementioned example problems. Supervised learn-

ing models emulate inductive inference, in which hy-

potheses are causal rules that best fit the known data.

Unlike abduction, the primary function of inductive

inference is justificational, specifically the justifica-

562

Espinosa Dice, N., Kaye, M., Ahmed, H. and Montañez, G.

A Probabilistic Theory of Abductive Reasoning.

DOI: 10.5220/0010195405620571

In Proceedings of the 13th International Conference on Agents and Artificial Intelligence (ICAART 2021) - Volume 2, pages 562-571

ISBN: 978-989-758-484-8

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

tion of the conjectured conclusion. Induction serves

little strategical function because the range of possi-

ble conclusions is restricted by the methods of gener-

alizing prior observed cases.

In this paper, we present a novel abductive search

method capable of handling the examples previously

described. Our model first uses abductive search for

hypothesis identification. To limit redundancy in the

abductive search results, we introduce two distinct

similarity metrics that compare causal structures of

variables. Additionally, to account for possible un-

familiar causes, we implement hypothesis generation

in our model as a method of generating novel ex-

planations—hypothesized causes that are not neces-

sarily observed in the background information. Fi-

nally, while abductive “confirmation” does not in-

dicate whether an abduced hypothesis logically pre-

cedes the observed effects, our model utilizes a hy-

pothesis comparison method to compare hypotheses

based on the likelihood of the explanation.

Cox et al. used abduction with surface deduction

to generate novel hypotheses from Horn-clauses, and

suggested extending this method’s application to ab-

duction from directed graphs (Cox et al., 1992). Our

abductive search model relies on Reichenbach’s Com-

mon Cause Principle rather than surface deduction

for hypothesis generation, and uses edit-distance and

Jaccard-based reasoning to distinguish redundant hy-

potheses. This combination of creative and proba-

bilistic abduction with similarity-based reasoning for

abductive search is distinct from the approach of Cox

et al. (Cox et al., 1992). The use of Reichenbach’s

Common Cause Principle is inspired by Schurz’s

theory on common cause abduction (Schurz, 2008).

While Schurz seems to deny the potential usefulness

of integrating common cause and Bayesian reasoning,

we introduce a form of Bayesian confirmation that

provides probabilistic explanations for the hypotheses

discovered through common cause abduction. Like-

wise, while our abductive model checks consistency

and simplicity similarly to Reiter’s heuristic diagno-

sis model (Reiter, 1987), we rely on Bayesian condi-

tioning during hypothesis generation and comparison,

which strengthens the plausibility of our model’s con-

jectured hypotheses.

2 GRAPHICALLY MODELING

ABDUCTION

Graphical models are tools for integrating logical and

probabilistic reasoning in order to represent rational

processes and causal relationships. Developed by

Pearl, they comprehensively account for complexity

and uncertainty within a dataset (Pearl, 1998). A

probabilistic graphical model is composed of nodes

representing random variables, and edges connecting

the nodes to indicate conditional independence or de-

pendence.

An abductive search problem can be represented

in a directed acyclic graph (DAG), in which a di-

rected edge from one node (the “parent”) to another

(the “child”) represents a causal relationship between

them. For edges of a DAG that are weighted with the

conditional probability P(child | parent) of the child

variable given the parent, the weight speaks to the

causal relationship’s influential strength.

2.1 Bayesian Networks

We adapt the definition of Bayesian network from

(Feldbacher-Escamilla and Gebharter, 2019), and

make use of conventional notation: Sets of objects, in-

cluding sets of sets, are represented by boldfaced up-

percase letters (e.g., S). Variables are represented by

upper-case letters (e.g., X ), and their respective real-

izations are represented by corresponding lower-case

letters (e.g., x). Additionally, a directed edge between

two variables is represented by an arrow, →, where

the parent node is at the arrow’s tail and the child node

is at the tip (e.g., X

i

→ X

j

).

Following the definitions in (Feldbacher-

Escamilla and Gebharter, 2019), BhV

V

V ,E

E

E,Pi is a

Bayesian network such that V

V

V is a set of random

variables, E

E

E is a set of directed edges, and P is a

probability distribution over V

V

V .

For all X

i

∈ V

V

V , P

P

Pa

a

ar

r

r(X

i

) is the set of X

i

’s parents:

P

P

Pa

a

ar

r

r(X

i

) = {X

j

∈ V

V

V | X

j

→ X

i

}. (1)

The set of X

i

’s children is defined as

C

C

Ch

h

h(X

i

) = {X

j

∈ V

V

V | X

i

→ X

j

}. (2)

We define the set of X

i

’s descendants to be

D

D

De

e

es

s

s(X

i

) = {X

j

∈ V

V

V | X

i

→ ... → X

j

}, (3)

and the set of X

i

’s ancestors to be

A

A

An

n

nc

c

c(X

i

) = {X

j

∈ V

V

V | X

j

→ ... → X

i

}. (4)

Within the context of this paper, all variables in V

V

V

are discrete. To properly incorporate continuous vari-

ables into the model, the discretization approach pre-

sented in (Chen et al., 2017) can be used with a dis-

cretization runtime of O(r ·n

2

), where r is the number

of class variable instantiations. Furthermore, Freid-

man et al. present a method of discretizing continuous

variables while learning the structure of the Bayesian

network using background information, that is, data

denoting the values of previous instantiations of vari-

ables in V

V

V (Friedman et al., 1996).

A Probabilistic Theory of Abductive Reasoning

563

Additionally, we define a set of observed nodes

O

O

O = O

O

O

E

E

E

∪ O

O

O

O

O

O

, (5)

where

O

O

O

E

E

E

= {O

E

1

,...,O

E

l

} (6)

is the set of nodes representing observed effects that

require explanation. Subsequently,

O

O

O

O

O

O

= {O

O

1

,...,O

O

j

} (7)

is the set of nodes representing observations that do

not require explanations. Sets O

O

O

E

E

E

and O

O

O

O

O

O

are disjoint,

namely, O

O

O

E

E

E

∩ O

O

O

O

O

O

=

/

0. Furthermore, U

U

U is the set of

unobserved nodes, such that

U

U

U = V

V

V − O

O

O. (8)

A hypothesis H

H

H will take the form

H

H

H = {h

1

,...,h

m

}, (9)

where H

H

H ⊆ U

U

U. An explanation refers to hypotheses

that are causally related to a given set of observed ef-

fects.

2.1.1 Node Marginal Probability Distribution

We wish to calculate probabilities of n proposed

nodes v

i

∈ {v

1

,...,v

n

} given a set of known

nodes O

O

O = {x

1

,...,x

m

} and unknown nodes U

U

U =

{U

1

,...,U

`

}. Because there are unknown nodes—

random variables with unknown values—we need to

account for all potential outcomes. Thus, we calculate

the marginal probability distribution:

P(v

1

,...,v

n

| x

1

,...,x

m

)

=

P(v

1

,...,v

n

,x

1

,...,x

m

)

P(x

1

,...,x

m

)

(10)

To calculate the marginal probability distribution that

accounts for all potential outcomes of U

1

,...,U

`

, we

take a sum over all possible values. Thus, the numer-

ator of the full equation is:

P(v

1

,...,v

n

,x

1

,...,x

m

) =

∑

U

1

∈{u

1

,¬u

1

}

...

∑

U

`

∈{u

`

,¬u

`

}

P(v

1

,...,v

n

,x

1

,...,x

m

,U

1

,...,U

`

) (11)

To complete the equation, the denominator follows

the same computation.

3 ABDUCTION AS A SEARCH

STRATEGY

We will demonstrate how to use abductive reasoning

in a best-first search for explanations. Schurz defines

a best-first explanation within the search space of an

abductive model as one that meets the following cri-

teria (Schurz, 2008):

1. The hypothesis is the most justifiable out of all

candidate hypotheses.

2. The children/successors are the most plausible of

all the hypothesis’ successors.

We expand on Schurz’s definition by adding the fol-

lowing third criterion:

3. The hypothesis is a common cause or distant com-

mon cause (an ancestor node) of all given ob-

served effects.

Addition of this third criterion for potential hypothe-

ses is based on Reichenbach’s Common Cause Prin-

ciple (CCP). The CCP is cited by Schurz as the jus-

tification basis for creative abduction (Schurz, 2008),

and it is defined as follows:

Definition 3.1 (Reichenbach’s Common Cause Prin-

ciple). For two properties A and B that are 1) corre-

lated, and 2) unrelated by a conditional relationship,

there must exist some common cause C such that A

and B are both causal effects of C.

Our method relies on this principle during hypoth-

esis selection. Given observed effects, we target a

common cause (a hypothesis) that is the most promis-

ing explanation of the observed effects.

3.1 Problem Definition

Using abduction as a search strategy, we model a cre-

ative abductive solution for the following search prob-

lem adapted from (Feldbacher-Escamilla and Gebhar-

ter, 2019).

Given:

• A set of observed effects O

O

O

E

E

E

.

• A set of known or background data O

O

O

O

O

O

.

Find:

A solution with the following elements (Prendinger

and Ishizuka, 2005):

• A candidate hypothesis H

H

H

C

C

C

that is causally related

to all O

O

i

∈ O

O

O

O

O

O

and all O

E

i

∈ O

O

O

E

E

E

.

• A causal rule denoting that H

H

H

C

C

C

is a potential set

of causes for O

O

O

E

E

E

.

• The necessary condition that H

H

H

C

C

C

∩O

O

O is consistent

for all O

O

i

,O

E

i

∈ O

O

O.

ICAART 2021 - 13th International Conference on Agents and Artificial Intelligence

564

4 HYPOTHESIS

IDENTIFICATION,

GENERATION, AND

COMPARISON

Due to the vast search space of possible causes, the

model’s first component, hypothesis identification,

must be completed using an abductive search strategy.

Hypothesis identification serves a strategical function

in the model by identifying possible common causes

of the observed effects. Treating possible causes as

hypotheses, we use the term abductive search because

we optimize our search for P(O

O

O

E

E

E

| H

H

H), the probabil-

ity of the observed effects occurring given the hy-

pothesis. P(O

O

O

E

E

E

| H

H

H) measures the fit of a hypoth-

esis, or the degree to which hypothesis H

H

H explains

observed effects O

O

O

E

E

E

. Abductive inference, by defini-

tion, is agnostic towards how probable a hypothesis

is, and instead optimizes for how well they explain

the observed effects. From a probabilistic reasoning

perspective, this is akin to optimizing for P(O

O

O

E

E

E

| H

H

H).

Thus, by optimizing our search for how well hypothe-

ses explain the observed effects, we are modeling

abduction. Additionally, optimizing the search for

P(O

O

O

E

E

E

| H

H

H) allows for the consideration of surprising

(unlikely) hypotheses that if true, sufficiently explain

the observed effects.

Having identified the set of possible common

causes, each cause is treated as a candidate hypoth-

esis and evaluated by a comparison function that opti-

mizes for P(H

H

H | O

O

O

E

E

E

), the probability that the hypoth-

esis is true given the observed effects occurring. By

Bayes’ Theorem, we see

P(H

H

H | O

O

O

E

E

E

) ∝ P(O

O

O

E

E

E

| H

H

H)P(H

H

H). (12)

Thus, by optimizing for P(H

H

H | O

O

O

E

E

E

), the probability

of the hypothesis is taken into account. Through hy-

pothesis comparison, our model incorporates a justifi-

cational function. The best performing candidate hy-

potheses are added to the set of promising hypotheses,

denoted H

H

H

P

P

P

.

4.1 Hypothesis Identification and

Abductive Search for Possible

Common Causes

This section discusses the search for possible com-

mon causes of a set of observed effects. For clarity,

in probability functions, we treat possible common

causes as possible hypotheses.

The abductive search attempts to model the fol-

lowing

argmax

H

H

H⊆U

U

U

P(O

O

O

E

E

E

| H

H

H), (13)

in cases where O

O

O

E

E

E

is given. Because edges in

a Bayesian network are weighted with conditional

probabilities, the hypotheses that optimize P(O

O

O

E

E

E

| H

H

H)

will generally be connected to more of the observed

effects by an edge or directed path. So, rather than

computing P(O

O

O

E

E

E

| H

H

H) for all of the possible hypothe-

ses, we can instead search for possible hypotheses that

maximize the number of observed effects to which

they are connected.

To begin the search for such possible hypothe-

ses, we can apply CCP to the observed effects if and

only if all variables O

E

i

∈ O

O

O

E

E

E

satisfy the conditions

of CCP. Specifically, for all O

E

i

,O

E

j

∈ O

O

O

E

E

E

such that

O

E

i

6= O

E

j

, O

E

i

and O

E

j

must be correlated and unre-

lated by a conditional relationship. For now, we as-

sume that O

O

O

E

E

E

satisfies the criteria for CCP, and we

will later demonstrate how to handle the two possible

cases in which CCP criteria are not satisfied.

By CCP, there exists some common cause C

such that O

E

i

and O

E

j

are both effects of C, for all

O

E

i

,O

E

j

∈ O

O

O

E

E

E

where O

E

i

6= O

E

j

. This means there

exists some directed path from C to O

i

and O

j

.

Definition 4.1 (Directed Path). A directed path from

X

i

to X

j

, where X

i

,X

j

∈ V

V

V , is a set of edges E

E

E

X

i

,X

j

such that either (X

i

,X

α

1

),...,(X

α

k

,X

j

) ∈ E

E

E

X

i

,X

j

where

α

1

,...,α

k

represent arbitrary node indices of the

graph for some k ∈ N, or (X

i

,X

j

) ∈ E

E

E

X

i

,X

j

.

Therefore, the set of common causes of the ob-

served effects, C

C

C(O

O

O

E

E

E

), must be a subset of the set of

variables with a directed path to O

O

O

E

E

E

.

Definition 4.2 (Singleton Complete Explanations).

A singleton complete explanation is a variable in

A

A

An

n

nc

c

c(O

O

O

E

E

E

) with a directed path to every variable in O

O

O

E

E

E

.

The set of singleton complete explanations is given by

C

C

C

P

P

P

(O

O

O

E

E

E

) :=

\

O

E

i

∈O

O

O

E

E

E

A

A

An

n

nc

c

c(O

E

i

). (14)

We refer to the singleton explanations in C

C

C

P

P

P

(O

O

O

E

E

E

) as

possible common causes because P(O

O

O

E

E

E

| H

H

H)—where

H

H

H is the cause—has not yet been computed. Calcu-

lating this marginal probability is the only method of

verifying a variable or set of variables as an actual

common cause. Each possible cause in this set is con-

sidered a complete explanation because there exists a

directed path from the nodes composing the explana-

tion to each observed effect.

However, we must also consider cases where O

O

O

E

E

E

does not satisfy the CCP criteria. Specifically, there

are two possible cases in which the CCP criteria is

not satisfied by O

O

O

E

E

E

.

Case 1. Suppose that there exists some distinct pairs

of variables O

E

i

,O

E

j

∈ O

O

O

E

E

E

such that O

E

i

and O

E

j

are

A Probabilistic Theory of Abductive Reasoning

565

conditionally related. In such a case, there must be

a directed path between O

E

i

and O

E

j

. Consequently,

since the Bayesian network is acyclic, then without

a loss of generality, O

E

i

∈ D

D

De

e

es

s

s(O

E

j

). Therefore, it

is possible that O

E

j

explains O

E

i

, meaning that O

E

j

causes O

E

i

. So, O

E

i

can be removed from O

O

O

E

E

E

and

added to O

O

O

O

O

O

. Thus, we would maintain the condition

that all pairs of distinct variables in O

O

O

E

E

E

are unrelated

by an edge or directed path. However, if we are not

certain that O

E

j

explains O

E

i

, then we can leave O

E

i

,

O

E

j

in O

O

O

E

E

E

.

Case 2. Suppose there exists some distinct pairs of

variables O

E

i

,O

E

j

∈ O

O

O

E

E

E

such that O

E

i

and O

E

j

are

uncorrelated. In this case, because O

E

i

and O

E

j

are

observed effects, it may be impossible to find a single

common cause explaining both nodes. Therefore, we

must consider cases where the best explanation is a

hypothesis containing multiple variables.

There may exist three distinct types of possible

common causes:

1. Multivariate subsets of A

A

An

n

nc

c

c(O

O

O

E

E

E

) that are com-

plete explanations.

2. Multivariate subsets of A

A

An

n

nc

c

c(O

O

O

E

E

E

) that are partial

explanations.

3. Multivariate subsets of A

A

An

n

nc

c

c(O

O

O

E

E

E

) that are novel

explanations.

Note that the possible common causes are now multi-

variate sets rather than singleton sets.

When the CCP criteria is not satisfied, there may

not exist a single common cause of all the observed

effects. In such cases, we must instead consider ex-

planations that incorporate multiple variables.

Definition 4.3 (Multivariate Complete Explanations).

A multivariate complete explanation consists of mul-

tiple nodes whose joint set of descendants contains

the set of observed effects as a subset. The set of mul-

tivariate complete explanations is given by

{S

S

S ⊆ A

A

An

n

nc

c

c(O

O

O

E

E

E

) | O

O

O

E

E

E

⊆ D

D

De

e

es

s

s(S

S

S)}. (15)

We must also consider the existence of an observed

effect whose explanation is beyond the scope of the

model. This could occur when O

O

O

E

E

E

contains noisy ob-

served effects that cannot be sufficiently explained by

the model.

A simple example of noisy observed effects in

Bayesian networks are root nodes: nodes with empty

ancestor sets. Since the ancestor sets of root nodes

are empty, there cannot exist any causes or hypothe-

ses that explain the root nodes. In such cases, the root

nodes in O

O

O

E

E

E

given by

O

O

O

R

R

R

= {O

E

i

∈ O

O

O

E

E

E

| P

P

Pa

a

ar

r

r(O

E

i

) =

/

0}, (16)

would be removed from O

O

O

E

E

E

and added to O

O

O

O

O

O

.

If a noisy observation is not a root node, it remains

in O

O

O

E

E

E

. To handle such cases, we include possible

causes that do not have a directed path from the pos-

sible cause to every observed effect.

Definition 4.4 (Multivariate Partial Explanations). A

partial explanation consists of multiple nodes whose

joint set of descendants contains a subset of O

O

O

E

. The

set of partial explanations is given by

{S

S

S ⊆ A

A

An

n

nc

c

c(O

O

O

E

) | ∃O

E

i

∈ O

O

O

E

E

E

,O

E

i

∈ D

D

De

e

es

s

s(S

S

S)}. (17)

Lastly, we account for observed effects that de-

scend from unfamiliar causes: causes of a given set of

observed effects that were not observed in the back-

ground information. In these cases, we develop a hy-

pothesis generation method to generate novel expla-

nations of the unique causes.

Definition 4.5 (Novel Explanations). A novel expla-

nation is an explanation found through hypothesis

generation.

Hypothesis generation refers to the introduction of

new edges in the Bayesian network for the purpose of

creating common causes of the observed effects. Gen-

erating an edge between two nodes entails the devel-

opment of a causal relationship between them. The

set of generated edges E

E

E

H

H

H

G

G

G

of a hypothesis H

H

H will

take the form

E

E

E

H

H

H

G

G

G

:= {(h

α

1

,h

β

1

),...,(h

α

g

,h

β

g

)}. (18)

However, the model is faced with a vast search

space of nodes to generate new edges between, neces-

sitating incorporation of bias in the search. Specif-

ically, in searching for a set of unobserved nodes to

generate edges between, we optimize for the number

of observed effects that are descendants of the given

set of unobserved nodes.

4.1.1 Implementation

Because there is uncertainty as to whether O

O

O

E

E

E

satis-

fies the CCP criteria, we must include multivariate

complete explanations, multivariate partial explana-

tions, and novel explanations in the set of possible

common causes.

Algorithm 1 identifies the sets of singleton com-

plete explanations, multivariate complete explana-

tions, and multivariate partial explanations, and it re-

turns their union, defined as C

C

C

P

P

P

(O

O

O

E

E

E

)

+

. Algorithm 2

then uses C

C

C

P

P

P

(O

O

O

E

E

E

)

+

to generate novel explanations,

where C

C

C

P

P

P

G

G

G

(O

O

O

E

E

E

) is the set of novel explanations.

Algorithm 1 is motivated by the Apriori algo-

rithm (Agrawal and Srikant, 1994) for inferring causal

relations between sales items from large transaction

datasets. The Apriori algorithm relies on the apriori

ICAART 2021 - 13th International Conference on Agents and Artificial Intelligence

566

property, a relational invariant between sets and sub-

sets, in order to improve efficiency. We leverage a

similar property in the following algorithm.

Algorithm 1: Computing Partial and Complete

Explanations, C

C

C

P

P

P

(O

O

O

E

E

E

)

+

.

Set C

C

C

P

P

P

(O

O

O

E

E

E

)

+

=

/

0;

Set R

R

R

Prev

= A

A

An

n

nc

c

c(O

O

O

E

E

E

);

Set R

R

R

Curr

=

/

0;

for k = k

T

,k

T

− 1,k

T

− 2,. . . , 1 do

if R

R

R

Prev

is empty then

Return C

C

C

P

P

P

(O

O

O

E

E

E

)

+

;

end

for S

S

S ⊆ R

R

R

Prev

such that |S

S

S| = k and

sim(R

R

R

Curr

,S

S

S) < S

T

do

if

|O

O

O

E

E

E

∩D

D

De

e

es

s

s(S

S

S)|

|O

O

O

E

E

E

|

≥ P

T

then

Set C

C

C

P

P

P

(O

O

O

E

E

E

)

+

= C

C

C

P

P

P

(O

O

O

E

E

E

)

+

∪ {S

S

S};

Set R

R

R

Curr

= R

R

R

Curr

∪ S

S

S;

end

end

Set R

R

R

Prev

= R

R

R

Curr

;

Set R

R

R

Curr

=

/

0;

end

Return C

C

C

P

P

P

(O

O

O

E

E

E

)

+

;

Let k represent the size of the candidate possible hy-

potheses set and k

T

be a hyperparameter specifying

the maximum size k to be considered. The set R

R

R

Curr

keeps track of variables for which the algorithm will

compute smaller subsets. Whether a set of variables

is added to R

R

R

Curr

is determined by measuring the sim-

ilarity of R

R

R

Curr

to the new set. If the new set is above

a certain threshold, specified by hyperparameter S

T

,

then the new set does not substantially add to the ex-

isting connection between the nodes in R

R

R

Curr

and the

observed effects, nor does the new set significantly in-

crease the ability of R

R

R

Curr

to explain the observed ef-

fects. In such a case, we ignore that set. Finally, P

T

is

a hyperparameter that specifies the percentage of the

observed effects that must be connected to a possible

hypothesis.

Next, we use C

C

C

P

P

P

(O

O

O

E

E

E

)

+

to compute novel expla-

nations. Specifically, Algorithm 2 computes N

N

N(O

O

O

E

E

E

),

a set of tuples that associates hypotheses with their

corresponding generated edges. More precisely, for

the members of N

N

N(O

O

O

E

E

E

), the first element in each tu-

ple is a hypothesis and the second element in the tu-

ple is the hypothesis’ corresponding set of generated

edges, E

E

E

H

H

H

G

G

G

. Consequently, the set of novel explana-

tions, C

C

C

P

P

P

G

G

G

(O

O

O

E

E

E

), is the set of the first elements in the

tuples in N

N

N(O

O

O

E

E

E

). Additionally, note that C

C

C

P

P

P

(O

O

O

E

E

E

)

+

=

C

C

C

P

P

P

G

G

G

(O

O

O

E

E

E

), but each hypothesis in C

C

C

P

P

P

G

G

G

(O

O

O

E

E

E

) contains a

corresponding set of generated edges that defines new

edges between nodes, resulting in a new explanation.

As a technical note, the implementation of Algorithm

2 only iterates over partial explanations in C

C

C

P

P

P

(O

O

O

E

E

E

)

+

,

since complete explanations contain nodes that are al-

ready connected to all observed effects.

Algorithm 2: Computing Novel Explanations.

Set N

N

N(O

O

O

E

E

E

) =

/

0;

for H

H

H ∈ C

C

C

P

P

P

(O

O

O

E

E

E

)

+

do

Set O

O

O

Exc

= O

O

O

E

E

E

− (O

O

O

E

E

E

∩ D

D

De

e

es

s

s(H

H

H));

Set E

E

E

H

H

H

G

G

G

=

/

0;

for O

i

∈ O

O

O

Exc

do

Set H

Max

= argmax

H

i

∈H

H

H

sim(O

i

,H

i

);

Set E

E

E

H

H

H

G

G

G

= E

E

E

H

H

H

G

G

G

∪ {(H

Max

,O

i

)};

end

Set N

N

N(O

O

O

E

E

E

) = N

N

N(O

O

O

E

E

E

) ∪ {(H

H

H, E

E

E

H

H

H

G

G

G

)};

end

Return N

N

N(O

O

O

E

E

E

);

4.2 Hypothesis Comparison

Once we have identified the set of potential com-

mon causes C

C

C

P

P

P

(O

O

O

E

E

E

)

+

, we refine it by optimizing for

P(H

H

H | O

O

O), the likelihood of a hypothesis, which serves

a justificational function in the model.

It is important to note that P(O

O

O | H

H

H) is not a ver-

ification measure of the hypothesis’ occurrence in a

given situation, but it is rather a justificational com-

ponent for the model’s output set C

C

C

P

P

P

(O

O

O

E

E

E

)

+

. Each

H

H

H ∈ C

C

C

P

P

P

(O

O

O

E

E

E

)

+

is theoretical and therefore unverifi-

able by an abductive model, but we can estimate a

hypothesis’ promise given the observed facts with the

measure P(O

O

O | H

H

H).

4.2.1 Comparing Identified and Generated

Hypotheses

In order to compare selected and generated hypothe-

ses, we defined a cost function that optimizes for

P(H

H

H | O

O

O), while creating a negative “cost” for gen-

erated edges.

The comparison function is

F(H

H

H, E

E

E

H

H

H

G

G

G

,O

O

O) := P(H

H

H | O

O

O) − αc(E

E

E

H

H

H

G

G

G

), (19)

where c(E

E

E

H

H

H

G

G

G

) is the cost of generating edges, defined

below, α is a weighting hyperparameter, and E

E

E

H

H

H

G

G

G

is

the set of generated edges of hypothesis H

H

H. The cost

of generating edges is defined by

c(E

E

E

H

H

H

G

G

G

) :=

∑

(X

i

,X

j

)∈E

E

E

H

H

H

G

G

G

(1 − sim(X

i

,X

j

)), (20)

where sim(X

i

,X

j

) is the similarity of X

i

compared to

X

j

, according to the similarity metrics defined in Sec-

A Probabilistic Theory of Abductive Reasoning

567

tion 5. Note that for each hypothesis under consid-

eration, the probability distribution for the particular

hypothesis is updated such that for all (O

i

,H

i

) ∈ E

E

E

H

H

H

G

G

G

,

P(O

i

| H

i

) = 1.

For all candidate hypotheses H

H

H ∈ C

C

C

P

P

P

(O

O

O

E

E

E

)

+

,

F(H

H

H, E

E

E

H

H

H

G

G

G

,O

O

O) is computed, and the hypotheses with

the highest P

Z

percent of scores are added to the set

of promising hypotheses, H

H

H

P

P

P

, where P

Z

is a hyperpa-

rameter used to control the selectivity of the process.

5 SIMILARITY METRICS

In hypothesis comparison, we incorporate bias to-

wards simple explanations. Simplicity in the graph-

ical model is indicated by structural dissimilarity,

as similar hypotheses can be deemed redundant. To

gauge structural similarity between hypotheses, we

introduce two metrics that determine node similarity:

1) graph edit distance, on the basis of edge weight,

and 2) the Jaccard Index of Variables, on the basis

of common descendants. In the search for plausible

explanations within a probabilistic Bayesian network,

disfavoring similarity reduces redundancy among hy-

potheses and produces simpler explanations. Intu-

itively, this bias reflects an innate understanding that

objects with similar properties and behaviors produce

similar outcomes, and vice versa.

5.1 Similarity Metric: Jaccard Index of

Variables

The Jaccard Index is defined as:

J(A,B) :=

|A ∩ B|

|A ∪ B|

, (21)

where A and B are sets.

The model uses what we define as the Jaccard In-

dex of Variables, a Jaccard Index-inspired metric of

similarity between variables in V

V

V . But rather than re-

lying solely on set cardinalities, we use the probabil-

ity distribution P. Thus, we adapt the Jaccard Index

to compute similarity between two variables A and B

in the Bayesian network based on their children. The

children of A and B are denoted as C

C

Ch

h

h(A) = A

A

A

C

C

C

and

C

C

Ch

h

h(B) = B

B

B

C

C

C

. The Jaccard Index of Variables is de-

fined as

sim

Jaccard

(A,B) :=

(C

C

Ch

h

h(A) ∩C

C

Ch

h

h(B))

(C

C

Ch

h

h(A) ∪C

C

Ch

h

h(B))

, (22)

=

(A

A

A

C

C

C

∩ B

B

B

C

C

C

)

(A

A

A

C

C

C

∪ B

B

B

C

C

C

)

, (23)

where

(A

A

A

C

C

C

∪ B

B

B

C

C

C

) := |A

A

A

C

C

C

− B

B

B

C

C

C

| + (A

A

A

C

C

C

) + (B

B

B

C

C

C

)

− (A

A

A

C

C

C

∩ B

B

B

C

C

C

), (24)

(A

A

A

C

C

C

∩ B

B

B

C

C

C

) :=

∑

C∈A

A

A

C

C

C

∩B

B

B

C

C

C

min(P(C|A),P(C|B)) (25)

and

(A

A

A

C

C

C

) :=

∑

C∈A

A

A

C

C

C

∩B

B

B

C

C

C

P(C | A), (26)

(B

B

B

C

C

C

) :=

∑

C∈A

A

A

C

C

C

∩B

B

B

C

C

C

P(C | B). (27)

5.2 Similarity Metric: Edit-distance

We refer to edit-distance (Bunke, 1997) as the cost of

operations required to transform one graphical struc-

ture into another. Edit-distance is the basis of our edit-

distance based similarity metric, which is defined as

sim

Edit

(A,B) :=

c(A,B)

|C

C

Ch

h

h(A)|

(28)

=

c(A,B)

|A

A

A

C

C

C

|

, (29)

where c(A,B) is a cost function to measure the cost of

graph changing operations such that

c(A,B) :=

∑

C∈A

A

A

C

C

C

∩B

B

B

C

C

C

kP(C | A)−P(C | B)k+|A

A

A

C

C

C

−B

B

B

C

C

C

|,

(30)

where kP(C | A) − P(C | B)k is the absolute value of

the difference P(C | A) − P(C | B).

5.3 Similarity of Sets of Variables

In addition to computing the similarity of variables,

we can compute the similarity of sets of variables.

Specifically, for some similarity metric sim(·,·), we

can compute

sim(A

A

A,B

B

B) :=

∑

X

i

∈A

A

A

sim(X

i

,X

∗

j

)

|A

A

A|

(31)

where X

∗

j

:= argmax

X

j

∈B

B

B

sim(X

i

,X

j

). The algorithm

for computing the similarity of sets of variables is

given below in Algorithm 3.

6 APPLICATIONS

6.1 Database Corruption

Using the hypothesis identification, generation, and

comparison methods previously described, we can

ICAART 2021 - 13th International Conference on Agents and Artificial Intelligence

568

Algorithm 3: Computing Similarity of Sets of

Variables, sim(A

A

A,B

B

B).

Set total = 0;

for X

i

∈ A

A

A do

Set X

∗

j

= argmax

X

j

∈B

B

B

sim(X

i

,X

∗

j

);

Set total = total + sim(X

i

,X

∗

j

);

end

Set sim(A

A

A,B

B

B) = total/|A

A

A|;

Return sim(A

A

A,B

B

B);

demonstrate hypothesis selection within the example

of a corrupted database, which we discussed in Sec-

tion 1.

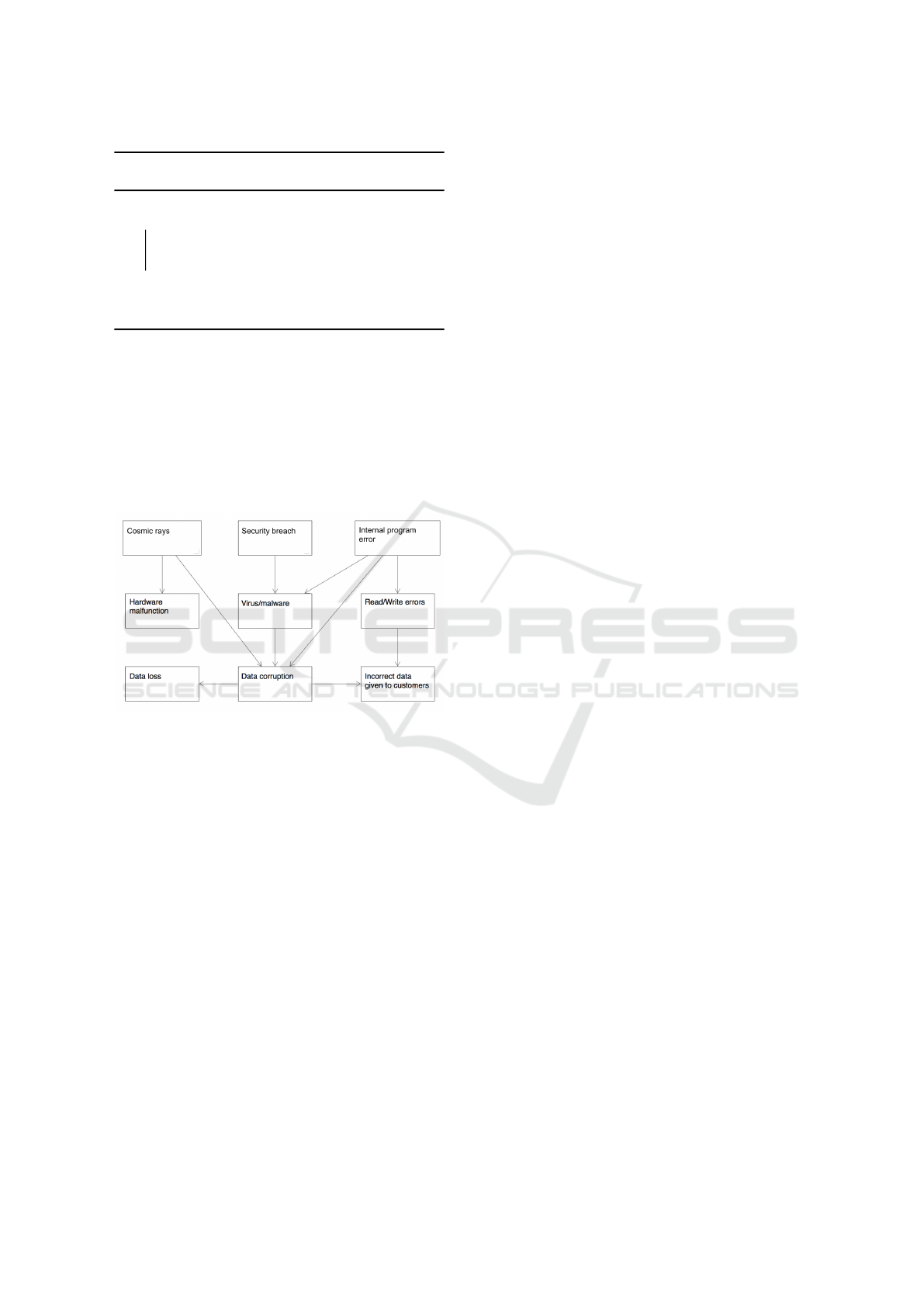

First, we will construct a graphical model to repre-

sent the situation. Figure 1 gives a hypothetical graph-

ical representation of the corrupted database example

to illustrate our process, with nodes that are related by

an arbitrary conditional probability table.

Figure 1: Data Corruption Example DAG.

After inputting background information and observed

effects/surprising phenomena to the current model,

we can identify every potential hypothesis—both

probable and improbable. For example, we could

have observed Hardware Malfunction in conjunction

with Data Corruption.

Some of these hypotheses could be overly-

complicated and redundant. For example, a possi-

ble explanation could be the simultaneous occurrence

of an Internal Program Error and a Virus/Malware.

Another possible explanation could that only a

Virus/Malware caused Data Corruption. These two

hypotheses share common components and effects,

but we can reduce the complexity of our hypothesis

by simply selecting Virus/Malware. In these cases of

redundant hypotheses, our model utilizes the similar-

ity metrics to identify the simple hypothesis.

Our first step, which we refer to as observation-

testing, is calculating P(O

O

O | H

H

H

i

i

i

) for some hypothesis

H

H

H

i

i

i

. Given a potential hypothesis, we will calculate

the probabilities of the observed effects. However,

due to the graph structure, we need to calculate the

marginal probability, which takes into account all rel-

evant nodes, regardless of whether they are in our hy-

pothesis set or not. We will then choose the top n%

of these hypotheses to move on to the second phase.

Having found hypotheses that best explain the ob-

served effects, the second step is hypothesis refine-

ment: calculating P(H

H

H

i

i

i

| O

O

O) for some hypothesis H

H

H

i

i

i

.

Given the set of hypotheses from observation-testing,

we will then identify and choose the hypothesis that is

most probable given our background information. As

a result of this step, we are given the most probable

hypothesis that also adequately explains our observed

effects.

Suppose that our first step selected two potential

hypotheses to move forward into stage two: Cos-

mic Rays, which yielded a probability of .93, and

Virus/Malware, which yielded a probability of .71.

However, during phase two, suppose Virus/Malware

yielded a probability of .67, while Cosmic Rays

yielded a probability of .002. Virus/Malware would

have a higher final probability and would therefore be

chosen as our final hypothesis.

We continue the data corruption example to

demonstrate hypothesis generation. Consider a new

situation where we are given Internal Program Error

and Security Breach as potential explanations for the

observed occurrences of both Hardware Malfunction

and Read/Write Errors. Note that while Internal Pro-

gram Error is a parent node to Read/Write Errors, it

is not an ancestor for Hardware Malfunction. Also,

Security Breach is not an ancestor for Hardware Mal-

function nor Read/Write Errors. This means that we

have no common cause hypothesis for the observed

effects. In this case, the model will generate a com-

mon cause hypothesis using the edge generation pro-

cess described in Section 4.1.

An edge will be introduced to connect a hypothe-

sis node with a new child node that is also an observed

effect. In the current example, neither of our potential

hypotheses would cause a Hardware Malfunction, yet

a Hardware Malfunction has been observed. So, two

edges are generated: one connecting Security Breach

to Hardware Malfunction, and one connecting Inter-

nal Program Error to Hardware Malfunction. Security

Breach and Internal Program are now novel common

cause hypotheses, and will be reevaluated using the

observation-testing and hypothesis refinement meth-

ods previously described.

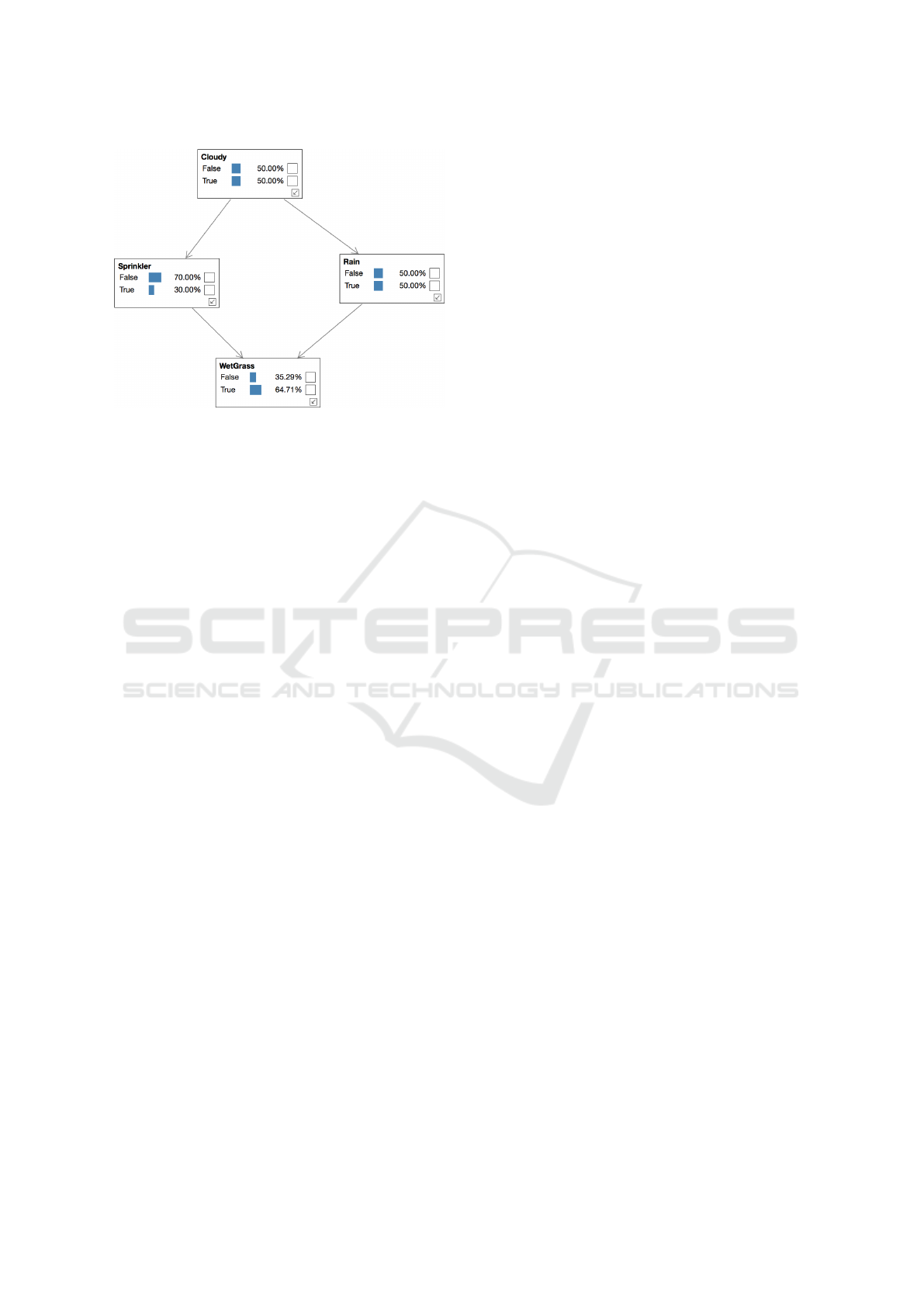

6.2 The Wet Grass Network

As an additional example, let us consider the Wet

Grass Bayesian network (Bayes Server, 2020), shown

A Probabilistic Theory of Abductive Reasoning

569

Figure 2: Wet Grass Bayes Net.

in Figure 2. Using our similarity methods, the algo-

rithm successfully avoids choosing both “S” (Sprin-

kler) and “R” (Rain) to increase the probability when

the two are not given. However, when either “S” or

“R” is an observation, the algorithm chooses the cor-

responding node to increase the probability of grass

being wet. However, it appears to strongly weight hy-

potheses that perform well in the first stage, especially

if the graph is small. As an example, when given

“W” (WetGrass) while observing “C” (Cloudy), the

algorithm chooses “S” as the cause, as it performs

better in the first phase and there are only two hy-

potheses, “S” and “R”, and the algorithm chooses the

top half for these testing purposes. Overall, this sys-

tem chooses simple hypotheses with no extraneous

information that aim to increase probability of the

observed effects, while also taking into account the

probability of the hypotheses.

6.3 Discussion

While our algorithms produce reasonable explana-

tions for small graphs, it remains to be seen how well

these methods scale to exponentially larger networks,

and how they adapt to non-Bayesian probability the-

ories or graphical models. As such, we view this

work as a preliminary investigation into using prob-

abilistic structures to develop abductive explanations,

in comparison to the large body of previous work

in abductive computation which has focused primar-

ily on symbolic methods and formal logic (Ng and

Mooney, 1992; Mooney, 2000; Juba, 2016; Ignatiev

et al., 2019).

7 CONCLUSION

The purpose of our abductive search model is to de-

velop plausible explanations for surprising phenom-

ena. Approaching this scenario as a search problem,

we are interested in finding our search target, which

is a set of the most promising potential hypothetical

causes for the unexplained observed events. The fit

of a hypothesis relative to known data is measured

by P(O

O

O | H

H

H), which is the probability of the observed

events occurring given that the hypothesis is also true.

So, we want a set of hypotheses C

C

C

P

P

P

= H

H

H

1

1

1

,...,H

H

H

m

m

m

that, for each H

H

H

i

i

i

∈ C

C

C

P

P

P

, 1) optimizes the measure of fit

P(O

O

O | H

H

H

i

i

i

), and 2) has a high P(H

H

H

i

i

i

| O

O

O) value relative

to other hypotheses.

Having established criteria for a search target,

we apply an abductive search strategy to find these

promising potential hypotheses. We deemed abduc-

tion as the most effective form of inference for ad-

dressing such problems, including the data corruption

one, where a) known information is incomplete and

b) a set of novel hypotheses are the search target.

We used graphical models—specifically, Bayesian

networks—to represent both the causal relationships

within a search space and the elements of abductive

search.

We present hypothesis selection, generation, and

comparison as the primary methods for finding

promising potential hypotheses. Our hypothesis se-

lection criteria is based upon Reichenbach’s Common

Cause Principle. In the case that no common cause

hypothesis exists, we rely on hypothesis generation

to produce novel potential common cause hypothe-

ses. These generated hypotheses can be a) multivari-

ate hypotheses, b) partial explanations, or c) gener-

ated edges. Then, having obtained the set of all po-

tential hypothetical causes, we subject the individual

elements to hypothesis comparison, selecting the hy-

pothesis that maximizes P(H

H

H | O

O

O).

Future research on probabilistic abduction’s ex-

planatory capabilities can be conducted with regards

to search algorithms in general, which are often

viewed as black-box methods. We also see Bayes’

Theorem as a tool for bridging theoretical abductive

search methods—such as those presented in this pa-

per, those in (Schurz, 2008), and those in (Cox et al.,

1992)—with machine learning classification from in-

complete or fuzzy data.

Other future work includes analyzing algorithmic

scaling and implementing methods such as dynamic

programming to reduce algorithm runtime, as well

as further exploring effective hypothesis generation

methods by examining the effects of edge genera-

tion on hidden variables in a Bayesian network and

ICAART 2021 - 13th International Conference on Agents and Artificial Intelligence

570

the implications of successive edge generation on our

model’s predictive accuracy.

ACKNOWLEDGEMENTS

This research was supported in part by Harvey Mudd

College and the National Science Foundation under

Grant No. 1950885. Any opinions, findings or con-

clusions expressed are the authors’ alone, and do not

necessarily reflect the views of Harvey Mudd College

or the National Science Foundation.

REFERENCES

Agrawal, R. and Srikant, R. (1994). Fast Algorithms for

Mining Association Rules. Proceeding of the 20th

VLDB Conference, pages 487–499.

Bayes Server (2020). Live Examples. https://www.

bayesserver.com/. Accessed: 2020/09/25.

Bunke, H. (1997). On a relation between graph edit distance

and maximum common subgraph. Pattern Recogni-

tion Letters, 18(8):689–694.

Chen, Y.-C., Wheeler, T. A., and Kochenderfer, M. J.

(2017). Learning discrete Bayesian networks from

continuous data. Journal of Artificial Intelligence Re-

search, 59:103–132.

Cox, P., Knill, E., and Pietrzykowski, T. (1992). Abduction

in logic programming with equality. In Proceedings

of the Eighth International Conference on Fifth Gen-

eration Computer Systems.

Feldbacher-Escamilla, C. J. and Gebharter, A. (2019). Mod-

eling creative abduction Bayesian style. European

Journal for Philosophy of Science, 9(1):9.

Friedman, N., Goldszmidt, M., et al. (1996). Discretiz-

ing continuous attributes while learning Bayesian net-

works. In Proceeding of the Thirteenth International

Conference on Machine Learning, pages 157–165.

Ignatiev, A., Narodytska, N., and Marques-Silva, J. (2019).

Abduction-based explanations for machine learning

models. In Proceeding of the Thirty-Third AAAI Con-

ference on Artificial Intelligence, volume 33, pages

1511–1519.

Juba, B. (2016). Learning abductive reasoning using

random examples. In Proceedings of the Thirti-

eth AAAI Conference on Artificial Intelligence, pages

999–1007. Citeseer.

Mooney, R. J. (2000). Integrating abduction and induction

in machine learning. Abduction and Induction, pages

181–191.

Ng, H. T. and Mooney, R. J. (1992). A First-Order Horn-

Clause Abductive System and Its Use in Plan Recog-

nition and Diagnosis. Technical report, Department

of Computer Sciences, The University of Texas at

Austin.

Pearl, J. (1998). Graphical models for probabilistic and

causal reasoning. Handbook of Defeasible Reasoning

and Uncertainty Management Systems, 1:367–389.

Prendinger, H. and Ishizuka, M. (2005). A creative abduc-

tion approach to scientific and knowledge discovery.

Knowledge-Based Systems, 18(7):321–326.

Reiter, R. (1987). A theory of diagnosis from first princi-

ples. Artificial intelligence, 32(1):57–95.

Schurz, G. (2008). Patterns of abduction. Synthese,

164(2):201–234.

A Probabilistic Theory of Abductive Reasoning

571