Combining Gesture and Voice Control for Mid-air Manipulation of CAD Models in VR Environments

Markus Friedrich, Stefan Langer and Fabian Frey

Institute of Informatics, LMU Munich, Oettingenstraße 67, 80538 Munich, Germany

Keywords:

CAD Modeling, Virtual Reality, Speech Recognition, Constructive Solid Geometry.

Abstract:

Modeling 3D objects in domains like Computer Aided Design (CAD) is time-consuming and comes with a steep learning curve, both for mastering the design process and for handling the complexity of the tools. To simplify the modeling process, we designed and implemented a prototypical system that combines the strengths of Virtual Reality (VR) hand gesture recognition with the expressiveness of a voice-based interface for the task of 3D modeling. Furthermore, we use the Constructive Solid Geometry (CSG) tree representation for 3D models within the VR environment to let the user manipulate objects from the ground up, giving an intuitive understanding of how the underlying basic shapes connect. The system uses standard mid-air 3D object manipulation techniques and adds a set of voice commands to help mitigate the deficiencies of current hand gesture recognition techniques. A user study was conducted to evaluate the proposed prototype. Our hybrid input paradigm appears to be a promising step towards easier-to-use CAD modeling.

1 INTRODUCTION

Current Computer Aided Design (CAD) modeling tools mainly use mouse-based object manipulation techniques in predefined views of the modeled objects. However, manipulating 3D objects with a 2D input device (mouse) on a 2D output device (monitor) always requires a complex mapping between 2D and 3D, making it difficult for beginners to grasp the required interaction concepts.

With the advent of affordable Virtual Reality (VR) devices and robust hand gesture recognition systems, more possibilities are at the fingertips of interaction designers and researchers. The goal is to leverage the potential of these immersive input methods to improve intuitiveness, flatten the learning curve, and increase efficiency in the 3D modeling task.

However, hand gesture recognition systems face many challenges in productive environments. Compared to expert devices like 3D mice, these systems lack robustness as well as precision. Moreover, constant arm movements can cause fatigue, known as the Gorilla Arm Syndrome (LaValle, 2017), making it hard to use such systems over an extended period of time. This is where we hypothesize that a hybrid approach, combining gesture recognition and voice control, is beneficial: while the hands are used for intuitive manipulation of model parts, voice commands replace gestures that are hard to recognize with word commands that are simple to say and easy to memorize.

In this work, we propose a novel interaction concept, implemented as a prototype, which combines hand gesture recognition and voice control for CAD model manipulation in a VR environment. The prototype uses gesture-based mid-air 3D object manipulation techniques where feasible and complements them with a set of voice commands. CAD models are represented as a combination of geometric primitives and Boolean set operations (so-called Constructive Solid Geometry (CSG) trees), enabling transformation operations that are more intuitive for beginners. The whole system is evaluated in a user study, showing its potential.

The paper makes the following contributions:

• A new interaction concept for intuitive, CSG tree-based CAD modeling in VR, leveraging the strengths of both gesture- and voice-based interactions.

• A prototypical implementation of the interaction concept with off-the-shelf hardware and software.

• A qualitative user study indicating the advantages of the proposed approach.

The paper is structured as follows: Essential terms are explained in Section 2. Related work, with a focus on mid-air manipulation techniques, is discussed in Section 3. This is followed by an explanation of the concept (Section 4), which is evaluated in Section 5. Since the proposed prototype opens up a multitude of new implementation and research directions, the paper concludes with a summary and a short description of possible future work (Section 6).

Friedrich, M., Langer, S. and Frey, F.

Combining Gesture and Voice Control for Mid-air Manipulation of CAD Models in VR Environments.

DOI: 10.5220/0010170501190127

In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021) - Volume 2: HUCAPP, pages 119-127

ISBN: 978-989-758-488-6

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 BACKGROUND

2.1 Construction Trees

Construction trees or CSG trees (Requicha, 1980) are a representation and modeling technique for geometric objects, mostly used in CAD. Complex 3D models are created by combining primitive geometric shapes (spheres, cylinders, convex polytopes) with Boolean operators (union, intersection, difference, complement) in a tree-like structure. The inner nodes describe the Boolean operators, while the leaf nodes represent primitives. The CSG tree representation has two major advantages over other 3D model representations: firstly, it is memory-efficient, and secondly, it is intuitive to use.
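To make the tree structure concrete, the following minimal Python sketch stores primitives as leaves and binary Boolean operators as inner nodes, and answers point-membership queries ("is this point inside the model?") by recursing through the tree. The class names, the operator strings `"union"`, `"inter"` and `"sub"`, and the restriction to spheres and axis-aligned boxes are illustrative assumptions; the unary complement operator is omitted for brevity, and this is not the data model of the prototype.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union

import numpy as np


@dataclass
class Sphere:
    center: np.ndarray
    radius: float

    def contains(self, p: np.ndarray) -> bool:
        return bool(np.linalg.norm(p - self.center) <= self.radius)


@dataclass
class Box:
    lo: np.ndarray  # minimum corner of an axis-aligned box
    hi: np.ndarray  # maximum corner

    def contains(self, p: np.ndarray) -> bool:
        return bool(np.all(p >= self.lo) and np.all(p <= self.hi))


@dataclass
class Op:
    """Inner node: a binary Boolean operator applied to two subtrees."""
    kind: str  # "union", "inter" or "sub" (difference)
    left: Node
    right: Node

    def contains(self, p: np.ndarray) -> bool:
        a, b = self.left.contains(p), self.right.contains(p)
        if self.kind == "union":
            return a or b
        if self.kind == "inter":
            return a and b
        if self.kind == "sub":
            return a and not b
        raise ValueError(f"unknown operator: {self.kind}")


Node = Union[Sphere, Box, Op]

# A unit cube with a sphere of radius 0.5 carved out around one corner.
model = Op("sub", Box(np.zeros(3), np.ones(3)), Sphere(np.ones(3), 0.5))
print(model.contains(np.array([0.2, 0.2, 0.2])))  # True: inside the cube, outside the sphere
print(model.contains(np.array([0.9, 0.9, 0.9])))  # False: carved away by the sphere
```

Only the leaves store geometry; changing a single inner node's `kind` changes the whole shape, which is what makes the representation compact and easy to edit.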

2.2 Mid-air Interactions for 3D Object Manipulation

The manipulation of 3D objects in a virtual scene is a fundamental interaction in immersive virtual environments. Manipulation is the task of changing characteristics of a selected object using spatial transformations (Bowman and Hodges, 1999). Translation, rotation, and scaling are referred to as basic manipulation tasks (Bowman et al., 2004). Each task can be performed along any axis. In general, a single transformation along one specific axis is defined as a degree of freedom (DOF). Thus, a system that provides a translation-only interface on all three axes supports three DOFs, whereas a system that offers all three manipulation tasks on all axes has 9 DOFs (Mendes et al., 2019).

In so-called mid-air interactions, input is provided through the user’s body, e.g., through posture or hand gestures (Mendes et al., 2019). This kind of interaction provides a particularly immersive and natural way to grab, move, or rotate objects in VR, which allows for more natural interfaces that can increase both usability and user performance (Caputo, 2019).

In this paper, the term mid-air manipulation refers specifically to the application of basic transformations to virtual objects in a 3D environment using hand gestures. Apart from the supported degrees of freedom, existing techniques can be classified by whether they separate translation, rotation, and scaling (Mendes et al., 2019), which also affects manipulation precision. Furthermore, techniques fall into two interaction categories: bimanual and unimanual. Bimanual interfaces require users to use both hands to perform the intended manipulation, whereas applications with unimanual interfaces can be controlled with a single hand (Mendes et al., 2019).

3 RELATED WORK

Object Manipulation. The so-called Handle Box approach (Houde, 1992) is essentially a bounding box around a selected object. The Handle Box consists of a lifting handle, which moves the object up and down, as well as four turning handles to rotate the object around its central axis. There is no dedicated handle for moving the object horizontally; instead, the user simply clicks and slides the object.

In (Conner et al., 1992), so-called Virtual Handles that allow full 9 DOF control are proposed. Each handle has a small sphere at its end, which is used to constrain geometric transformations to a single plane or axis (Mendes et al., 2019). The user selects the manipulation mode with the mouse button. During rotation, the initial user gesture is recognized to determine the rotation axis. Both techniques were originally designed for classic mouse/screen interactions but are readily transferable to hand gesture/VR environments.

Mid-air Interactions. Caputo et al. state that users often prefer mid-air interactions over other methods and find the manipulation accuracy sufficient for many of the proposed tasks (Caputo, 2019). Early systems used gloves or other manual input devices to manipulate or grab objects. In (Robinett and Holloway, 1992), a system for translating, rotating, and scaling objects in VR is proposed. The formalization of the object manipulation task into three subtasks, namely (object) selection, (object) transformation, and (object) release, was proposed in (Bowman and Hodges, 1999). One of the most influential techniques in this field is the Hand-centered Object Manipulation Extending Ray-casting (HOMER) method (Bowman and Hodges, 1997). After the user selects an object with a ray, the virtual hand moves to the object. Hand and object are linked, and the user can manipulate the object with the virtual hand until it is dropped. After that, the link is removed and the hand returns to its natural position.

HUCAPP 2021 - 5th International Conference on Human Computer Interaction Theory and Applications

Metaphors for Manipulation. A bimanual 7 DOF manipulation technique is the Handlebar introduced by Song et al. (Song et al., 2012). It has been adopted by multiple real-world applications. The Handlebar uses the relative position and movement of both hands to identify translation, rotation, and scaling and to map the transformation onto a virtual object. The main strength of this method is its intuitiveness, owing to the physical familiarity with real-world actions like rotating and stretching a bar. However, holding the arms in position may exhaust the user (Gorilla Arm Syndrome (LaValle, 2017)).
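The idea of deriving all three transformations from two hand positions can be sketched as follows. This is a simplified illustration under our own assumptions, not Song et al.'s implementation: translation follows the midpoint between the hands, uniform scale follows the ratio of hand distances, and rotation is the shortest-arc rotation (Rodrigues' formula) that turns the initial "bar" direction into the current one. A two-point bar cannot capture rotation around its own axis, so that component is ignored here, as is the degenerate anti-parallel case.

```python
import numpy as np


def handlebar_transform(l0, r0, l1, r1):
    """Translation, rotation matrix and uniform scale from two hand positions.

    l0/r0: left/right hand positions when the grab starts; l1/r1: current positions.
    """
    l0, r0, l1, r1 = (np.asarray(v, dtype=float) for v in (l0, r0, l1, r1))
    # Translation: displacement of the midpoint between both hands.
    translation = (l1 + r1) / 2 - (l0 + r0) / 2
    # Scale: ratio of the current to the initial distance between the hands.
    v0, v1 = r0 - l0, r1 - l1
    scale = np.linalg.norm(v1) / np.linalg.norm(v0)
    # Rotation: shortest arc from the initial to the current bar direction.
    a, b = v0 / np.linalg.norm(v0), v1 / np.linalg.norm(v1)
    axis = np.cross(a, b)
    s, c = np.linalg.norm(axis), float(np.dot(a, b))
    if s < 1e-9:
        # Bar direction unchanged (anti-parallel case not handled in this sketch).
        return translation, np.eye(3), scale
    k = axis / s
    K = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    angle = np.arctan2(s, c)
    rotation = np.eye(3) + np.sin(angle) * K + (1 - np.cos(angle)) * K @ K
    return translation, rotation, scale


# Hands move from (-1,0,0)/(1,0,0) to (0,-1,0)/(0,1,0): a 90 degree turn around z.
t, R, s = handlebar_transform([-1, 0, 0], [1, 0, 0], [0, -1, 0], [0, 1, 0])
```

The resulting translation, rotation and scale would then be applied to the grabbed object relative to its pose at grab time.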

In (Wang et al., 2011), manipulations (in particular translation and rotation) are separated. Translation is applied by moving the closed hand, and rotation by using two hands around the three main axes, mimicking the physical action of rotating a sheet of paper. The most direct unimanual method is the Simple Virtual Hand metaphor (Caputo, 2019), where objects are selected by grasping them. After the selection, movement and rotation of the user’s hand are mapped directly to the object. This method is characterized by its high intuitiveness. However, it requires accurate, reliable, and robust hand tracking. Kim et al. extended this idea (Kim and Park, 2014) by placing a sphere around the selected object and additionally enabling the user to control the reference frame during manipulation, which yields considerable improvements, in particular for rotation.
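A common way to realize the direct 1:1 mapping of the Simple Virtual Hand is to store the object's pose relative to the hand at grab time and re-apply that offset every frame. The sketch below assumes 4×4 homogeneous pose matrices; the class and function names are hypothetical and do not describe any specific system cited here.

```python
import numpy as np


def pose(rotation, position) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a position."""
    m = np.eye(4)
    m[:3, :3] = rotation
    m[:3, 3] = position
    return m


class SimpleVirtualHand:
    """Rigidly attaches a grabbed object to the tracked hand."""

    def __init__(self):
        self.offset = None  # object pose expressed in the hand frame at grab time

    def grab(self, hand: np.ndarray, obj: np.ndarray) -> None:
        self.offset = np.linalg.inv(hand) @ obj

    def update(self, hand: np.ndarray) -> np.ndarray:
        # Every hand translation and rotation is applied to the object 1:1.
        return hand @ self.offset

    def release(self) -> None:
        self.offset = None


hand0 = pose(np.eye(3), [0.0, 0.0, 0.0])
obj0 = pose(np.eye(3), [0.0, 0.0, 1.0])
svh = SimpleVirtualHand()
svh.grab(hand0, obj0)
# The hand turns 90 degrees around y and moves to (1, 0, 0); the object follows.
ry = np.array([[0.0, 0.0, 1.0], [0.0, 1.0, 0.0], [-1.0, 0.0, 0.0]])
obj1 = svh.update(pose(ry, [1.0, 0.0, 0.0]))
```

Because the object copies every hand movement exactly, any tracking jitter is transferred as well, which is why this metaphor depends on accurate, robust hand tracking.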

To demonstrate the advantages of DOF separation, Mendes et al. applied the Virtual Handles concept (Conner et al., 1992) to VR environments (Mendes et al., 2016). Since Virtual Handles allow users to choose a single axis, transformations along unwanted axes are unlikely. However, transformations along more than one axis take more time. We use this concept for basic transformations in our prototype (see Figure 4a).

Multimodal Object Manipulation. Chu et al. (Chu et al., 1997) presented a multimodal interface for CAD systems in VR as early as 1997, concluding that voice commands and hand gestures are superior to eye tracking. Zhong et al. (Zhong and Ma, 2004) propose an approach for constraint-based manipulation of CAD models in a VR environment. The authors implemented a prototype for a three-level model comprising a high-level model for object definition, a mid-level hybrid CSG and Boundary Representation model for object creation, and a polygon-level model for visualization and interaction. Zheng et al. (Zheng et al., 2000) also propose a VR-based CAD system for geometric modeling. To enhance human-machine interaction, they add an electronic data glove as an additional input device for constructing, destroying, and freeform-creating objects. The authors claim that these interaction techniques are more suitable and intuitive for humans than traditional manipulation devices. Lee et al. (Lee et al., 2013) conducted a usability study of multimodal input in Augmented Reality environments. The authors compare speech-only and gesture-only approaches to a hybrid solution and find that the multimodal approach is a more satisfying interaction concept for users. Xue et al. (Xue et al., 2009) improve the voice command-based input model for CAD software by making the interaction more natural through natural language processing. Kou et al. (Kou et al., 2010) also focus on natural language processing, utilizing a template matching approach that enables the system to process unknown expressions as well.

4 CONCEPT

To simplify the modeling process as much as possible, we use mid-air manipulation techniques without any controllers or gloves (hand tracking only). The main feature of our technique is that it relies on a single gesture, the grasp. This simple gesture allows the use of low-cost hand tracking sensors, more specifically the Leap Motion Controller sold by Ultraleap. More complex instructions are issued via voice control. To the best of our knowledge, this is the first approach that combines mid-air manipulation techniques with voice commands in order to create an intuitive and fast way of manipulating CSG-based 3D objects in VR.

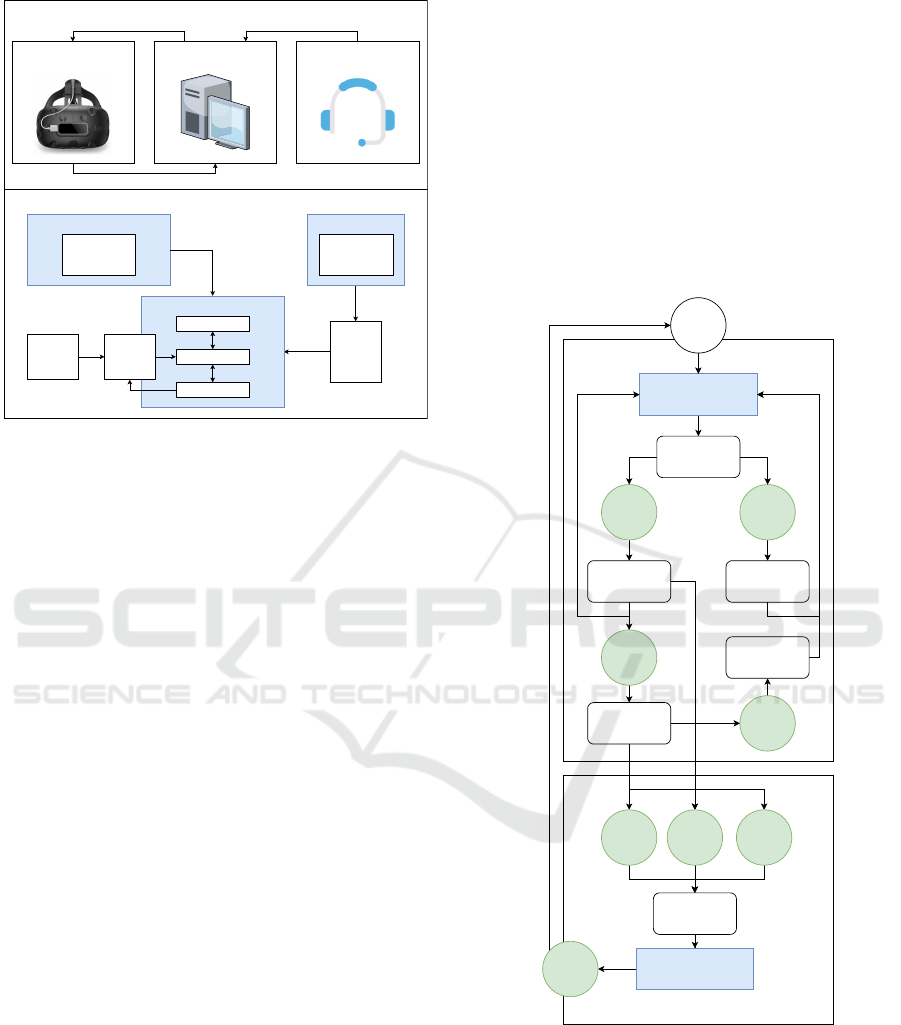

4.1 System Overview

The proposed system consists of hardware and software components and is detailed in Figure 1. The hand tracking controller (Leap Motion Controller) is mounted on the VR headset, an off-the-shelf HTC Vive that uses laser-based head tracking and comes with two 1080×1200 displays running at 90 Hz. The speech audio signal is recorded using a wearable microphone (Headset). A central computing device (Workstation) takes the input sensor data, processes it, applies the interaction logic, and renders the next frame, which is then displayed on the VR device’s screens.

The software architecture consists of several subcomponents. The Gesture Recognition module translates input signals from the Leap Motion sensor into precise hand poses and furthermore recognizes hand gestures by assembling temporal chains of poses. The Speech Recognition module takes the recorded speech audio signal and translates it into a textual representation. The CSG model, which is later used for editing, is parsed by the CSG Tree Parser from a JSON-based input format and transformed into a triangle mesh by the Triangle Mesh Transformer. This step is necessary since the rendering engine used (Unity3D) is restricted to this particular geometry representation. The main component (VR Engine & Interaction Logic) reacts to recognized gestures and voice commands, executes the corresponding actions (Interaction Logic), and handles VR scene rendering (Unity3D, SteamVR). For example, if the user applies a basic scaling transformation to a model part consisting of a sphere, the geometric parameters of the sphere (in this case its radius) are changed based on the hand pose. Then, the model is re-transformed into the triangle mesh representation and rendered, and the resulting image is sent to the VR device.

Figure 1: Architecture overview of the developed system. Hardware architecture: HTC Vive with mounted Leap Motion Controller, Headset, and Workstation; exchanged data: head and hand pose, audio signal (speech), and image of the rendered scene. Software architecture: Speech Recognition (Microsoft Windows Speech Recognition), Command Processor, Gesture Recognition (Leap Motion Unity API), VR Engine & Interaction Logic (Unity3D, SteamVR, Interaction Logic), Triangle Mesh Transformer, and CSG Tree Parser.
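The parsing and editing steps described above can be illustrated with a small sketch. The paper only states that the input format is JSON-based, so the schema used here (`"type"`, `"params"`, `"children"` keys, binary operators named `"union"`, `"inter"` and `"sub"`) is entirely hypothetical, as are the function names. The sketch parses such a document into a tree and then applies a scaling step to a sphere by changing its radius; the subsequent conversion to a triangle mesh is not shown.

```python
import json
from dataclasses import dataclass, field


@dataclass
class CsgNode:
    kind: str                                     # operator name or primitive type
    params: dict = field(default_factory=dict)    # primitive parameters, e.g. radius
    children: list = field(default_factory=list)  # empty for primitives


OPERATORS = {"union", "inter", "sub"}  # hypothetical operator names


def parse_csg(data: dict) -> CsgNode:
    """Recursively convert a (hypothetical) JSON CSG description into nodes."""
    kind = data["type"]
    if kind in OPERATORS:
        children = [parse_csg(child) for child in data["children"]]
        if len(children) != 2:
            raise ValueError(f"operator {kind!r} expects exactly two children")
        return CsgNode(kind, children=children)
    return CsgNode(kind, params=dict(data.get("params", {})))


def scale_sphere(node: CsgNode, factor: float) -> None:
    """Apply a scaling step to a sphere primitive by changing its radius."""
    if node.kind != "sphere":
        raise ValueError("only spheres are handled in this sketch")
    node.params["radius"] *= factor


doc = json.loads("""
{"type": "sub", "children": [
    {"type": "box", "params": {"size": [2, 2, 2]}},
    {"type": "sphere", "params": {"radius": 1.0}}
]}
""")
tree = parse_csg(doc)
scale_sphere(tree.children[1], 1.5)
print(tree.children[1].params)  # {'radius': 1.5}
```

Editing the parameters of the tree, rather than the mesh, keeps the model exact; the mesh is only a derived view that is regenerated for rendering.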

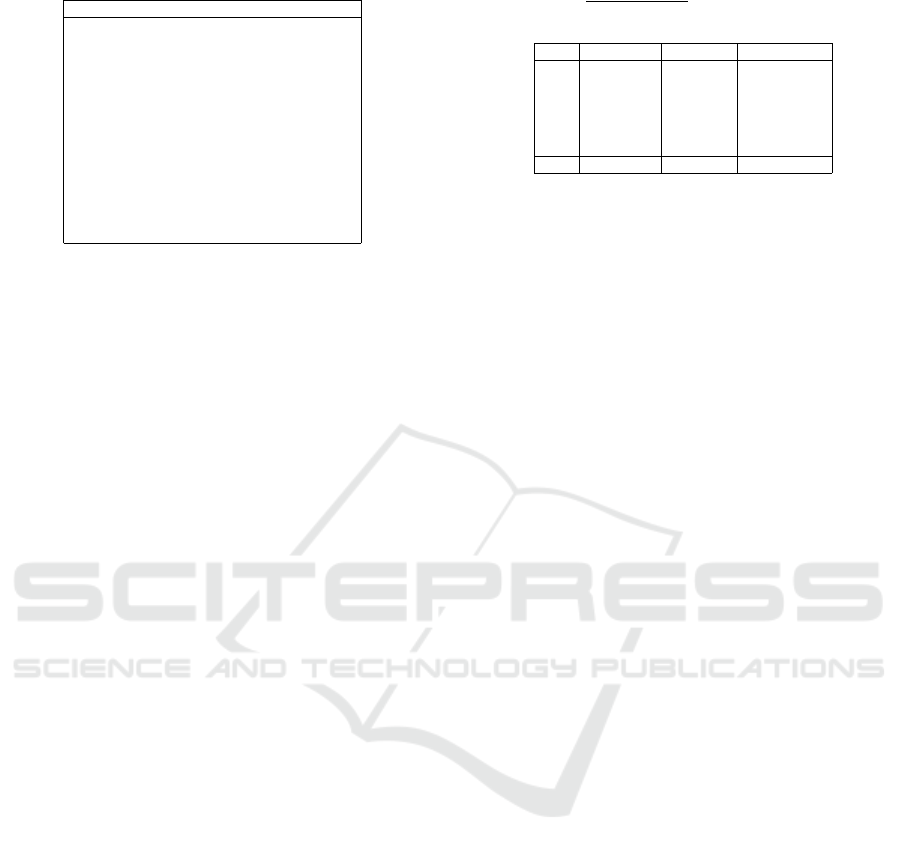

4.2 Interaction Concept

The interaction concept is based on hand gestures (visible as two virtual gloves that visualize the user’s hand and finger poses) and simple voice commands. It furthermore comprises two tools, the model tool and the tree tool, as well as the information board, which serves as a user guidance system.

4.2.1 Model Tool

The model tool is used for direct model manipulation and is active right after system startup. It can be divided into two input modes, the selection mode and the manipulation mode. Modes are switched using the voice command ‘select’ (to selection mode) and one of the manipulation commands ‘scale’, ‘translate’, and ‘rotate’ (to manipulation mode). Figure 2 details the interaction concept of the model tool.
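The mode switching of the model tool can be sketched as a small state machine driven by recognized command words. This is a hedged illustration: the state names, the normalization of the recognized text, and the decision to ignore unknown words are our assumptions and not taken from the prototype's code.

```python
from enum import Enum, auto


class Mode(Enum):
    INITIAL = auto()       # state right after startup
    SELECTION = auto()
    MANIPULATION = auto()


TRANSFORMS = {"translate", "rotate", "scale"}


class ModelTool:
    def __init__(self):
        self.mode = Mode.INITIAL
        self.transform = None  # active transformation while in manipulation mode

    def on_command(self, word: str) -> Mode:
        """Switch modes according to a recognized voice command."""
        word = word.strip().lower()
        if word == "select":
            self.mode, self.transform = Mode.SELECTION, None
        elif word in TRANSFORMS:
            # Enter manipulation mode, or switch the transformation within it.
            self.mode, self.transform = Mode.MANIPULATION, word
        # Any other word leaves the current mode unchanged.
        return self.mode


tool = ModelTool()
tool.on_command("select")
tool.on_command("rotate")
```

Keeping the mode explicit is what lets the same grasp gesture mean "select" in one mode and "drag a handle" in the other.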

The main reason for separating interactions this way is the ambiguity of hand gestures. In some cases, the recognition system was unable to distinguish between a model part selection and a translation or rotation transformation, since both use the same grasp gesture. The grasp gesture was chosen because initial tests, which involved basic recognition tasks for all supported gestures, revealed that it is the most robustly recognized gesture available.

Figure 2: Interaction concept of the model tool. (State diagram: from Start, "select" enters the Selection Mode, in which <<move hand into primitive>> highlights a primitive, "append" selects it, "remove" de-selects it, "group" groups the selected primitives, and "un-group" dissolves the group; "translate", "rotate", or "scale" enters the Manipulation Mode, in which the virtual handles are visible and the user can <<grab virtual handles and perform transformations>>; "select" returns to the Selection Mode.)

Selection Mode. The initial application state can be seen in Figure 3 (a): the triangle mesh of the loaded model is displayed in grey. The user can enter the selection mode by saying the word ‘select’. The mode change is additionally indicated by an on-screen message. Using the virtual hands, the user can reach into the volume of the model, which highlights the hovered primitives in green (Figure 3 (b)). This imitates the hovering gesture well known from desktop-based mouse interfaces. Once a primitive is highlighted, the user can append it to the list of selected primitives with the voice command ‘append’. Selected primitives are rendered in red (Figure 3 (c)). This way, multiple primitives can be selected. To remove a primitive from the selection, the user’s hand must enter the primitive’s volume and the user must say the voice command ‘remove’. Multiple selected primitives can be grouped together with the voice command ‘group’, which is useful in situations where the selected primitives should behave like a single primitive during manipulation, e.g., when a rotation is applied. A group is displayed in blue (see Figure 3 (d)) and can be dissolved by saying ‘un-group’.
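The selection behavior and the color coding of primitive states can be summarized in a short sketch. It follows the rules stated above (hover highlights in green, ‘append’ selects in red, ‘remove’ de-selects, ‘group’ turns the selection blue, ‘un-group’ dissolves the group). Two details are our own assumptions, since the text does not specify them: a de-selected primitive under the hand falls back to highlighted, and members of a dissolved group stay selected. Class and method names are hypothetical.

```python
from enum import Enum


class State(Enum):
    INITIAL = "grey"
    HIGHLIGHTED = "green"
    SELECTED = "red"
    GROUPED = "blue"


class Selection:
    def __init__(self, primitives):
        self.state = {p: State.INITIAL for p in primitives}
        self.hovered = None

    def hover(self, primitive):
        """Called when the hand enters a primitive's volume (None when it leaves)."""
        if self.hovered is not None and self.state[self.hovered] is State.HIGHLIGHTED:
            self.state[self.hovered] = State.INITIAL
        self.hovered = primitive
        if primitive is not None and self.state[primitive] is State.INITIAL:
            self.state[primitive] = State.HIGHLIGHTED

    def on_command(self, word):
        h = self.hovered
        if word == "append" and h is not None and self.state[h] is State.HIGHLIGHTED:
            self.state[h] = State.SELECTED
        elif word == "remove" and h is not None and self.state[h] is State.SELECTED:
            self.state[h] = State.HIGHLIGHTED  # assumption: the hand is still inside
        elif word == "group":
            selected = [p for p, s in self.state.items() if s is State.SELECTED]
            if len(selected) >= 2:
                for p in selected:
                    self.state[p] = State.GROUPED
        elif word == "un-group":
            for p, s in list(self.state.items()):
                if s is State.GROUPED:
                    self.state[p] = State.SELECTED  # assumption: members stay selected

    def color(self, primitive):
        return self.state[primitive].value


sel = Selection(["cube", "sphere", "cylinder"])
sel.hover("cube"); sel.on_command("append")
sel.hover("sphere"); sel.on_command("append")
sel.hover(None); sel.on_command("group")
```

After these calls, the cube and the sphere are grouped (blue) while the untouched cylinder remains grey.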

Figure 3: Illustration of all possible states of a primitive: (a) Initial State, (b) Highlighted, (c) Selected, (d) Grouped.

Manipulation Mode. For the manipulation of selected or grouped primitives (in the following: a model part), three basic transformations are available: translation, rotation, and scaling. The active transformation is selected via a voice command (‘translate’, ‘rotate’, and ‘scale’). The manipulation mode is entered automatically after one of these commands is invoked. The selected transformation is indicated by the displayed Virtual Handles (Conner et al., 1992): three coordinate axes with a small box at their ends, pointing in x-, y-, and z-direction, as shown in Figure 4a.

Rotation. Per-axis rotations are performed by grabbing one of the small boxes at the ends of the coordinate axes and rotating the wrist. The rotation is directly applied around the corresponding axis of the model part. Alternatively, the sphere displayed at the center of the coordinate system can be grabbed and rotated. The sphere’s rotations are directly applied to the model part. This bypasses the restrictions of per-axis rotations and allows for faster manipulation at the cost of precision.
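The paper does not state how a wrist rotation is reduced to a rotation around a single handle axis. One standard option, assumed here, is the swing-twist decomposition: of the relative wrist rotation since the grab, only the "twist" part around the handle axis is kept and all other wrist motion is discarded. The sketch uses unit quaternions in (w, x, y, z) order; all function names are hypothetical.

```python
import numpy as np


def quat_from_axis_angle(axis, angle):
    """Unit quaternion (w, x, y, z) for a rotation of `angle` radians around `axis`."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    return np.concatenate(([np.cos(angle / 2)], np.sin(angle / 2) * axis))


def quat_mul(a, b):
    """Hamilton product a * b of two quaternions (w, x, y, z)."""
    w1, v1 = a[0], np.asarray(a[1:], dtype=float)
    w2, v2 = b[0], np.asarray(b[1:], dtype=float)
    return np.concatenate(([w1 * w2 - v1 @ v2], w1 * v2 + w2 * v1 + np.cross(v1, v2)))


def quat_conj(q):
    """Conjugate, i.e. the inverse rotation for a unit quaternion."""
    return np.concatenate(([q[0]], -np.asarray(q[1:], dtype=float)))


def twist_angle(q, axis):
    """Signed angle (radians, in [-pi, pi]) of the rotation q around `axis`.

    Swing-twist decomposition: the twist part is (w, (v . axis) axis), so its
    angle is 2 * atan2(v . axis, w); all rotation around other axes is ignored.
    """
    q = np.asarray(q, dtype=float)
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    if q[0] < 0:  # q and -q encode the same rotation; use w >= 0
        q = -q
    return 2.0 * np.arctan2(np.dot(q[1:], axis), q[0])


# Wrist orientation at grab time and now; the handle points along y.
q_start = quat_from_axis_angle([0, 1, 0], 0.2)
q_now = quat_mul(quat_from_axis_angle([0, 1, 0], 0.5), q_start)
delta = twist_angle(quat_mul(q_now, quat_conj(q_start)), [0, 1, 0])  # about 0.5 rad
```

The resulting angle would be applied to the model part around the same axis, which keeps the rotation constrained even if the wrist also tilts sideways.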

Translation. Per-axis translations work like per-axis rotations. However, instead of rotating the wrist, the user moves the grabbed box along the corresponding axis, which results in a direct translation of the model part. The sphere in the center of the coordinate system can be grabbed and moved around without any restrictions. The resulting translations are directly applied to the model part.
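Constraining a drag to one handle axis amounts to projecting the hand displacement onto that axis, while the center sphere passes the full displacement through. The following sketch shows both cases; the function names and the use of grab-start and current hand positions as inputs are illustrative assumptions.

```python
import numpy as np


def constrained_translation(grab_start, hand_now, axis):
    """Translation of the model part while a per-axis handle box is dragged.

    Only the component of the hand displacement along the handle's axis is kept.
    """
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    delta = np.asarray(hand_now, dtype=float) - np.asarray(grab_start, dtype=float)
    return np.dot(delta, axis) * axis


def free_translation(grab_start, hand_now):
    """Translation while the center sphere is dragged: no axis restriction."""
    return np.asarray(hand_now, dtype=float) - np.asarray(grab_start, dtype=float)


# Dragging the x handle: sideways and vertical hand drift is discarded.
t_x = constrained_translation([0, 0, 0], [0.3, 0.5, -0.2], [1, 0, 0])
```

This projection is what makes unintended movement along other axes unlikely, at the price of needing several drags for a diagonal move.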

Scaling. Per-axis scaling works similarly to per-axis translation. Using the sphere at the center of the coordinate system is not supported for scaling, since it cannot be combined with a meaningful scaling gesture. The transformation to use can be switched in manipulation mode by invoking the aforementioned voice commands. If the primitive selection or group should be changed, a switch to the selection mode is necessary.

Figure 4: Virtual handles (a) and the tree tool (b): (a) Virtual Handles, (b) Highlighting.
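How a handle drag is converted into a per-axis scale factor is not specified in the text. A plausible mapping, assumed here, is the ratio between the handle box's current and initial offset from the model part's center along that axis, clamped so the part cannot collapse or be mirrored. For simplicity, the sketch also assumes the handles are aligned with the world axes; the function names and the `min_factor` value are hypothetical.

```python
import numpy as np


def axis_scale_factor(center, grab_start, hand_now, axis_index, min_factor=0.05):
    """Scale factor along one axis from dragging that axis' handle box.

    Assumed mapping: ratio of the box's current to its initial offset from the
    part's center along the axis (handles aligned with the world axes).
    """
    c = float(np.asarray(center, dtype=float)[axis_index])
    d0 = float(np.asarray(grab_start, dtype=float)[axis_index]) - c
    d1 = float(np.asarray(hand_now, dtype=float)[axis_index]) - c
    if abs(d0) < 1e-9:
        raise ValueError("handle box lies at the center; factor undefined")
    # Clamp so the part cannot collapse or be mirrored by dragging past the center.
    return max(d1 / d0, min_factor)


def apply_axis_scale(scale, axis_index, factor):
    """Return a new per-axis scale vector with one component multiplied by `factor`."""
    new_scale = np.array(scale, dtype=float)
    new_scale[axis_index] *= factor
    return new_scale


# Dragging the x handle from 1.0 to 1.5 units away from the center stretches x by 1.5.
f = axis_scale_factor([0, 0, 0], [1, 0, 0], [1.5, 0.3, 0], axis_index=0)
new_scale = apply_axis_scale([1, 1, 1], 0, f)
```

For a primitive such as a sphere, the factor would instead be mapped onto the primitive's own parameters (e.g. its radius), as described in the system overview.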

4.2.2 Tree Tool

The tree tool displays a representation of the model’s CSG tree using small spheres as nodes. It appears above the user’s left hand when the user holds that hand upwards. Each leaf node corresponds to a primitive; the inner nodes represent the Boolean operators. Operation nodes have textures that depict the operation type (∪, ∩, −). The user can change the operation type of a node by grabbing the corresponding sphere and invoking one of the following voice commands: ‘change to union’, ‘change to inter’, ‘change to sub’. The tree tool also allows highlighting multiple primitives at once by grabbing their parent node. Once primitives are highlighted, their corresponding nodes are displayed in green as well (see Figure 4b).
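The three behaviors of the tree tool can be sketched in a few functions: collecting all primitives below a grabbed node (the set that gets highlighted), changing the operator of a grabbed inner node via one of the three voice commands, and showing the tree only while the left palm faces upwards. The command strings are the ones listed above; the node class, the operator labels, the palm-normal input, and the 0.7 threshold are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class TreeNode:
    label: str                                    # operator name or primitive name
    children: list = field(default_factory=list)  # empty for primitives

    def is_leaf(self) -> bool:
        return not self.children


# Voice commands of the tree tool mapped to (hypothetical) operator labels.
CHANGE_COMMANDS = {
    "change to union": "union",
    "change to inter": "intersection",
    "change to sub": "subtraction",
}


def leaves(node: TreeNode) -> list:
    """All primitives below a node; grabbing the node highlights exactly these."""
    if node.is_leaf():
        return [node.label]
    return [name for child in node.children for name in leaves(child)]


def change_operator(grabbed: TreeNode, command: str) -> bool:
    """Change the grabbed inner node's operator; returns False if nothing changed."""
    new_label = CHANGE_COMMANDS.get(command.strip().lower())
    if new_label is None or grabbed.is_leaf():
        return False
    grabbed.label = new_label
    return True


def tree_visible(palm_normal, up=(0.0, 1.0, 0.0), threshold=0.7) -> bool:
    """Show the tree while the left palm faces upwards (hypothetical threshold)."""
    if palm_normal is None:  # palm not tracked, e.g. occluded by the other hand
        return False
    dot = sum(a * b for a, b in zip(palm_normal, up))
    norm = math.sqrt(sum(a * a for a in palm_normal)) * math.sqrt(sum(b * b for b in up))
    return norm > 0 and dot / norm >= threshold


tree = TreeNode("union", [TreeNode("subtraction", [TreeNode("cube"), TreeNode("sphere")]),
                          TreeNode("cylinder")])
```

The `None` case mirrors an effect observed in the study: when the selecting hand occludes the left palm, the sensor loses the palm and the tree disappears.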

4.2.3 Information Board

The Information Board depicts the current state of the application, the manipulation task, and all voice commands including their explanations. The board is always visible as a billboard and helps the user memorize voice commands and stay aware of the current interaction mode.


5 EVALUATION

A usability study was conducted to evaluate the proposed interaction concept. Its goal was to validate whether the concept is easy to understand, even for novices in the fields of VR and CAD, and whether the combination of hand gesture and voice control is perceived as a promising idea for intuitive CAD modeling.

5.1 Participants

Five student volunteers, three female and two male, participated in the study. Two of them had never used VR headsets before. Four participants have a background in Computer Science; one participant is a student of Molecular Life Science. Details about the participants are given in Table 1.

Table 1: Study participants. Abbreviations: background in Computer Science (CS), User Experience Design (UXD), and Molecular Life Science (MLS). The VR and CAD columns indicate prior experience in Virtual Reality (VR) or Computer Aided Design (CAD).

| Gender | Age | Background | VR | CAD |
|---|---|---|---|---|
| male | 30-40 | UXD | Yes | No |
| female | 20-30 | CS | Yes | Yes |
| female | 20-30 | MLS | No | No |
| female | 20-30 | CS | No | No |
| male | 20-30 | CS | No | No |

5.2 User Study Setup

Participants could move around freely within an area of two square meters. The overall study setting is shown in Figure 5. As an introduction, the participants were shown a simple CAD object (Object 1 in Figure 6) together with the information board to familiarize themselves with the VR environment. Questions that arose were answered directly by the study director.

Figure 5: Participants conducting the user study.

After the introduction, the participants were asked to perform selection and modification tasks (see Table 2 for the list of tasks), during which they were encouraged to share their thoughts and feelings following the Thinking-Aloud methodology (Boren and Ramey, 2000). The tasks were chosen such that all possible interactions with the prototype were covered. After completing these tasks, two more objects (Objects 2 and 3 in Figure 6) were shown to the participants, and they were asked to perform the same tasks as with Object 1. The whole process was recorded on video for further research and evaluation purposes.

The participants’ actions, comments, and difficulties experienced were logged in order to distill the advantages and disadvantages of the proposed interaction concept. In addition, problems that came up during the test could be directly addressed and discussed.

Table 2: Tasks for the participants.

• Try to select the circle and the cube together.
• Deselect the selected primitives.
• Select the same primitives using the object tree.
• Rotate a primitive by 90 degrees.
• Scale a primitive.
• Move a cylinder.
• Change an operator from union to subtraction.

Figure 6: User study objects (Friedrich et al., 2019): (a) Object 1, (b) Object 2, (c) Object 3.

5.3 Interviews

After finishing the aforementioned tasks, an interview was conducted to gain further insight into the participants’ user experience. Each interview started with general questions about pre-existing knowledge of VR and CAD software, which helped to classify the given answers (see Table 3). Statements were assembled based on the catalogue of questions in (Bossavit et al., 2014) (see Table 4) and served as openers for discussion. Interviews were digitally recorded and then transcribed.

Table 3: Selected questions for the interview.

• How much experience do you have in virtual reality applications?
• How much experience do you have in CAD/3D modeling software?
• How do you see our control method compared to a mouse-based interface?
• What is your opinion on pointer devices?

5.4 Results

All results discussed in the following were derived from the interview transcriptions and video recordings. In general, the interaction design was well received by the participants, even at this early stage of prototype development. All users stated that they understood the basic interaction idea and all features of the application.

Table 4: Statements given to initiate discussion.

• I found the technique easy to understand.
• I found the technique easy to learn.
• I found it easy to select an object.
• I found it easy to group multiple objects.
• I found translation easy to do.
• I found rotation easy to do.
• I found scaling easy to do.
• I found the tree tool easy to understand.
• The tree tool was useful to understand the structure of the object.
• The change of the Boolean operators was easy to understand.

However, it was observed that users had to get used to the separation between selection and manipulation mode, which was not entirely intuitive to them. For instance, some participants tried to highlight primitives in the manipulation mode. In this case, additional guidance was needed. All users stated that the colorization of objects is an important cue for determining the state of the application and which actions can be executed.

The information board was perceived as very helpful but was actively used by only three participants. Two users struggled with the information board not always being in the field of view, which required turning the head to see it. Only one user realized that the current state of the application is displayed there as well.

Hand gesture-based model manipulation was very well received by the users. The hover and grab gestures did not need any explanation. Four users had trouble using their hands due to sensor range issues. The voice control was evaluated positively. However, participants reported that command recognition did not always work. The average success rate across participants was 70.7% (see Table 5). Interestingly, both male participants had noticeably higher success rates than the three female participants, which might be due to the different pitches of their voices.

In addition, some command words like ‘append’ confused the users, because they expected a new primitive to be appended to the object. This indicates that an intuitive voice command design is essential but, at the same time, hard to achieve.

The manipulation technique including the Virtual Handles was also evaluated positively. All users highlighted the intuitiveness of translation and scaling in particular. Two participants missed the functionality of uniform scaling. One participant needed an extra explanation in order to understand the purpose of the sphere in the center (axis-free object manipulation).

Table 5: Overview of voice control success rates, with success rate = recognized / (recognized + not recognized). Success means that a command was successfully recognized by the system. The last row gives the totals and the mean (∅) of the per-participant success rates.

| User | Recognized | Not recognized | Success rate |
|---|---|---|---|
| P1 | 60 | 12 | 83.3% |
| P2 | 52 | 35 | 59.8% |
| P3 | 49 | 23 | 68.1% |
| P4 | 55 | 27 | 67.1% |
| P5 | 36 | 12 | 75.0% |
| Total | 252 | 109 | ∅ 70.7% |
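The success rates in Table 5 can be recomputed from the raw counts with the formula given in the caption. The short script below does this and also distinguishes two kinds of overall rate: the unweighted mean of the five per-participant rates (which is what the ∅ value corresponds to) and a pooled rate over all commands. The pooled rate is our addition for comparison and is not reported in the paper.

```python
# Per-participant counts from Table 5: (recognized, not recognized).
counts = {
    "P1": (60, 12),
    "P2": (52, 35),
    "P3": (49, 23),
    "P4": (55, 27),
    "P5": (36, 12),
}


def success_rate(recognized: int, not_recognized: int) -> float:
    return recognized / (recognized + not_recognized)


rates = {p: success_rate(r, n) for p, (r, n) in counts.items()}
mean_rate = sum(rates.values()) / len(rates)  # unweighted mean of the five rates
total_r = sum(r for r, _ in counts.values())
total_n = sum(n for _, n in counts.values())
pooled_rate = success_rate(total_r, total_n)  # all 361 commands counted together

for p, rate in rates.items():
    print(f"{p}: {rate:.1%}")
print(f"mean: {mean_rate:.1%}, pooled: {pooled_rate:.1%}")
```

The unrounded mean is about 70.6%; averaging the already-rounded per-participant percentages gives the reported 70.7%. Pooling all commands gives 252/361 ≈ 69.8%, because participants who issued more commands (such as P2) weigh more.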

The rotation was not fully transparent to the participants. All of them mentioned that they did not immediately recognize the selected rotation axis and, instead of rotating the cubes at the ends of the handles, tried to move the complete handle.

The tree tool was evaluated positively. All participants used it to understand and manipulate the structure of the object. One participant liked that it was easy to use and always accessible. Two participants rated it as an intuitive tool despite not having in-depth knowledge of what exactly a CSG tree is. However, one participant could not directly see the connection between the spheres in the tree and the corresponding primitives. Accordingly, we observed users hovering over random nodes to see the assigned primitive while trying to select specific primitives. Three users had difficulties understanding the Boolean set operations.

The participants showed different preferences for highlighting primitives. Two users preferred the tree tool, while the others favored the hover gesture-based approach. Both selection and de-selection were rated positively, mostly for being simple to use. Especially with more complex objects, selecting the desired primitive was perceived as more difficult. This was also due to the small size of the elements and the smaller distance to the neighboring primitive, which makes hovering more difficult. It was also observed that, in unfavorable cases, the selecting hand covered the left hand so that the sensor could no longer detect its palm. As a result, the tree disappeared, which made it more difficult for users to select a sphere in the tree.

5.5 Discussion

5.5.1 Limitations

The accuracy of hand tracking is very important. Even though only very simple gestures need to be detected, tracking problems occurred repeatedly for no specific or obvious reason. This led to problems like unwanted transformations, especially when the end of a gesture was not detected correctly. However, the study participants were still able to gain comprehensive insights into the application’s interaction flow.

Furthermore, the group of participants was rather small, and none of them had any experience with existing mid-air interaction techniques. Future versions of the prototype should be tested with more users, also taking different target user groups into account (e.g., CAD modeling experts and beginners). In addition, the tasks should also be performed using standard CAD modeling software for comparison.

5.5.2 Findings

The reported flat learning curve shows the potential of the proposed approach. After brief explanations and a few minutes of training, users were able to modify 3D objects, including participants without VR or CAD modeling experience.

The Virtual Handle approach is a suitable and intuitive three-DOF manipulation tool that can be used for translation, rotation, and scaling in virtual environments. However, our prototype needs improvement in this respect, since its implementation of the rotation transformation was not perceived well.
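A virtual handle can be sketched as constraining the hand's motion to a single axis, in the spirit of DOF separation (Mendes et al., 2016) and the handle metaphor of Kim and Park (2014). The Python sketch below is an assumption, not the prototype's code: it projects the hand displacement since the grab onto the handle's unit axis and maps that single value to a translation along the axis, a uniform scale factor, or a rotation angle about the axis. Transform, drag_handle, ref_length, and radians_per_meter are hypothetical names and tuning constants.

```python
import math
from dataclasses import dataclass, replace
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical sketch of a one-axis virtual handle.

@dataclass(frozen=True)
class Transform:
    position: Vec3 = (0.0, 0.0, 0.0)
    scale: float = 1.0  # uniform scale factor
    angle: float = 0.0  # rotation about the handle axis, in radians

def axis_displacement(grab: Vec3, current: Vec3, axis: Vec3) -> float:
    """Project the hand movement since the grab onto the handle's unit axis.
    Movement perpendicular to the axis is discarded."""
    return sum((c - g) * a for c, g, a in zip(current, grab, axis))

def drag_handle(start: Transform, mode: str, grab: Vec3, current: Vec3,
                axis: Vec3, ref_length: float = 0.1,
                radians_per_meter: float = math.pi) -> Transform:
    """Map a one-axis handle drag to a translation, scale or rotation."""
    d = axis_displacement(grab, current, axis)
    if mode == "translate":
        pos = tuple(p + d * a for p, a in zip(start.position, axis))
        return replace(start, position=pos)
    if mode == "scale":
        # Dragging by ref_length doubles the size; clamp to stay positive.
        return replace(start, scale=max(0.05, start.scale * (1.0 + d / ref_length)))
    if mode == "rotate":
        # Linear drag distance converted to an angle via a fixed gain.
        return replace(start, angle=start.angle + d * radians_per_meter)
    raise ValueError(f"unknown mode: {mode}")

t = drag_handle(Transform(), "translate", (0.0, 0.0, 0.0), (0.2, 0.05, 0.0),
                (1.0, 0.0, 0.0))
print(t.position)  # the 5 cm sideways drift is ignored
```

Mapping a linear drag to an angle is only one option, and the gain radians_per_meter largely determines how sensitive rotation feels.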

Additional voice commands are a good way to simplify the graphical user interface. However, voice interfaces must be designed carefully to be intuitive, and command recognition must work reliably.

Examining and manipulating objects via the CSG tree structure works well even for novices: the tree tool can be understood and used without any knowledge of CSG theory. However, the system quickly reaches its limits when an object consists of many small primitives. Furthermore, some users reported difficulties anticipating the result of intersection and subtraction operations. In addition, the more complex an object becomes, the more difficult it is to manipulate with the proposed method.

6 CONCLUSION

In this work, we presented a novel multimodal mid-air manipulation technique for CSG-based 3D objects. To make the interaction as intuitive as possible, the prototype is based on three control elements: unimanual gesture control via the demonstrated Virtual Handles, voice control, and a virtual widget we call the tree tool for direct CSG tree manipulation. A qualitative user study indicated that users learn and understand our manipulation method very quickly. In future work, we plan to add missing functionality (e.g., adding new primitives), to evaluate our approach in quantitative and comparative user studies, and to extend the voice command concept toward natural language dialogue systems.

REFERENCES

Boren, T. and Ramey, J. (2000). Thinking aloud: reconciling theory and practice. IEEE Transactions on Professional Communication, 43(3):261–278.

Bossavit, B., Marzo, A., Ardaiz, O., De Cerio, L. D., and Pina, A. (2014). Design choices and their implications for 3D mid-air manipulation techniques. Presence: Teleoperators and Virtual Environments, 23(4):377–392.

Bowman, D., Kruijff, E., LaViola Jr, J. J., and Poupyrev, I. P. (2004). 3D User Interfaces: Theory and Practice, CourseSmart eTextbook. Addison-Wesley.

Bowman, D. A. and Hodges, L. F. (1997). An evaluation of techniques for grabbing and manipulating remote objects in immersive virtual environments. In Proceedings of the 1997 Symposium on Interactive 3D Graphics, pages 35–ff.

Bowman, D. A. and Hodges, L. F. (1999). Formalizing the design, evaluation, and application of interaction techniques for immersive virtual environments. Journal of Visual Languages & Computing, 10(1):37–53.

Caputo, F. M. (2019). Gestural interaction in virtual environments: User studies and applications. PhD thesis, University of Verona.

Chu, C.-C., Dani, T. H., and Gadh, R. (1997). Multimodal interface for a virtual reality based computer aided design system. In Proceedings of International Conference on Robotics and Automation, volume 2, pages 1329–1334. IEEE.

Conner, B. D., Snibbe, S. S., Herndon, K. P., Robbins, D. C., Zeleznik, R. C., and Van Dam, A. (1992). Three-dimensional widgets. In Proceedings of the 1992 Symposium on Interactive 3D Graphics, pages 183–188.

Friedrich, M., Fayolle, P.-A., Gabor, T., and Linnhoff-Popien, C. (2019). Optimizing evolutionary CSG tree extraction. In Proceedings of the Genetic and Evolutionary Computation Conference, GECCO '19, pages 1183–1191, New York, NY, USA. Association for Computing Machinery.

Houde, S. (1992). Iterative design of an interface for easy 3-D direct manipulation. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 135–142.

Kim, T. and Park, J. (2014). 3D object manipulation using virtual handles with a grabbing metaphor. IEEE Computer Graphics and Applications, 34(3):30–38.

Kou, X., Xue, S., and Tan, S. (2010). Knowledge-guided inference for voice-enabled CAD. Computer-Aided Design, 42(6):545–557.

HUCAPP 2021 - 5th International Conference on Human Computer Interaction Theory and Applications


LaValle, S. (2017). Virtual Reality, volume 1. Cambridge University Press.

Lee, M., Billinghurst, M., Baek, W., Green, R., and Woo, W. (2013). A usability study of multimodal input in an augmented reality environment. Virtual Reality, 17(4):293–305.

Mendes, D., Caputo, F. M., Giachetti, A., Ferreira, A., and Jorge, J. (2019). A survey on 3D virtual object manipulation: From the desktop to immersive virtual environments. In Computer Graphics Forum, volume 38, pages 21–45. Wiley Online Library.

Mendes, D., Relvas, F., Ferreira, A., and Jorge, J. (2016). The benefits of DOF separation in mid-air 3D object manipulation. In Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, pages 261–268.

Requicha, A. G. (1980). Representations for rigid solids: Theory, methods, and systems. ACM Comput. Surv., 12(4):437–464.

Robinett, W. and Holloway, R. (1992). Implementation of flying, scaling and grabbing in virtual worlds. In Proceedings of the 1992 Symposium on Interactive 3D Graphics, pages 189–192.

Song, P., Goh, W. B., Hutama, W., Fu, C.-W., and Liu, X. (2012). A handle bar metaphor for virtual object manipulation with mid-air interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 1297–1306.

Wang, R., Paris, S., and Popović, J. (2011). 6D hands: markerless hand-tracking for computer aided design. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, pages 549–558.

Xue, S., Kou, X., and Tan, S. (2009). Natural voice-enabled CAD: modeling via natural discourse. Computer-Aided Design and Applications, 6(1):125–136.

Zheng, J., Chan, K., and Gibson, I. (2000). A VR-based CAD system. In International Working Conference on the Design of Information Infrastructure Systems for Manufacturing, pages 264–274. Springer.

Zhong, Y. and Ma, W. (2004). An approach for solid modelling in a virtual reality environment. In Virtual and Augmented Reality Applications in Manufacturing, pages 15–42. Springer.
