Multi-view Real-time 3D Occupancy Map for Machine-patient Collision

Avoidance

Timothy Callemein, Kristof Van Beeck and Toon Goedem

´

e

EAVISE, PSI, KU Leuven, Jan Pieter de Nayerlaan 5, Sint-Katelijne-Waver, Belgium

Keywords:

Cobots, Real-time, 3D Occupancy, Multi-view.

Abstract:

Nowadays - due to advancements in technology - cooperative robots (or cobots) find their way outside the more

traditional industrial context. They are used for example in medical scenarios during operations or scanning of

patients. Evidently, these scenarios require sufficient safety measures. In this work, we focus on the scenario

of an X-ray scanner room, equipped with several cobots (mobile scanner, adjustable tabletop and wall stand)

where both patients and medical staff members can walk around freely. We propose an approach to calculate a

3D safeguard zone around people that can be used to restrict the movement of the cobots to prevent collisions.

For this, we rely on four ceiling-mounted cameras. The goal of this work is to develop an accurate system

with minimal latency at limited hardware costs. To calculate the 3D safeguard zone we propose to use CNN

people detection or segmentation techniques to provide the silhouette input needed to calculate a 3D visual

hull. We evaluate several state-of-the-art techniques in the search of the optimal trade-off between speed and

accuracy. Our research shows that it is possible to achieve acceptable performance processing four cameras

with a latency of 125ms with a precision of 54% at a recall of 75%, using the YOLACT++ model.

1 INTRODUCTION

In industrial processes, steady growth in robotics has

led to faster and more precise manufacturing, decreas-

ing the requirement of heavy human labour. These in-

dustrial robots often execute a preprogrammed repet-

itive task. However, more recently such robots are

also employed outside of an industrial context, and

- instead of a fixed preprogrammed task - they work

together with a human operator in a cooperative man-

ner. Hence, they are often referred to as cobots (Ed-

ward et al., 1999; Peshkin and Colgate, 1999; Vil-

lani et al., 2018). Even though these cobots are su-

pervised and controlled by a human, important safety

precautions must be taken into account to e.g. avoid

collisions. In this work, we propose a vision-based

safety system, which automatically calculates a safe-

guard zone around people in real-time. This safeguard

zone can be used as an off-limits zone for the cobots,

restricting their movements so they are unable to col-

lide with a person present inside the robot’s move-

ment space. Our system is able to calculate this real-

time person 3D safeguard zone using several multiple

viewpoint cameras as input. Our method uses visual

data, which nowadays is cheap, easily expandable in

numbers, and capable of being processed both cen-

tralised and decentralised.

Figure 1: Use case example: Scanner room equipped with

the mobile scanner, a bucky and scanning table.

To develop our system, we focus on a specific real-

life clinical scenario: an X-ray scanner room with

several cobots installed in it. In this scanner room,

both patients and medical staff members are able to

walk around freely. By calculating an off-limits zone

automatically, we can prevent the robot from collid-

ing with all people present, ensuring their safety.

An example of a scanner room, with all the pre-

viously described equipment installed is illustrated in

figure 1.

Current safety measures only consist of a dead

man’s switch, operated by the medical staff. When-

ever a collision is imminent, the switch is released

freezing all motor functions in the room. This

Callemein, T., Van Beeck, K. and Goedemé, T.

Multi-view Real-time 3D Occupancy Map for Machine-patient Collision Avoidance.

DOI: 10.5220/0010151906270636

In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021) - Volume 4: VISAPP, pages

627-636

ISBN: 978-989-758-488-6

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

627

method, however, heavily relies on the presence and

awareness of the staff member. Our goal, therefore,

is to automatically calculate a 3D safeguard area that

can be used as an off-limits zone for the cobots, re-

sulting in a much safer environment.

Such a safeguard, however, should meet stringent

criteria to be usable in practice. Evidently, a high

accuracy should be achieved at a low-latency per-

formance. Due to the safety aspect, a higher recall

should be prioritised over a high precision: it is better

to unnecessarily stop the robot, than to stop the robot

too late or not at all. Furthermore, the room lighting

conditions can vary greatly, especially when the room

has windows.

In a nutshell, our approach calculates an occu-

pancy map containing the voxels of all people present

in the room, to be used as a reference of positions that

are inaccessible for any robotic component. For this,

we rely on multiple cameras installed in the scanner

room at strategical locations (e.g. four cameras placed

at each ceiling corner of the room). The cameras are

positioned in such a manner to have a visual overlap

of the safeguarded area, allowing us to calculate 3D

positions from multiple 2D detections. To generate

the 2D detections, we compared a number of state-of-

the-art object detectors, including both bounding box

and instance segmentation.

Note that our approach is easily generalisable to

other cobot applications. In this work, we employ the

X-ray scanner room as a challenging, real-life appli-

cation. Furthermore, the detector in our approach can

easily be extended to other objects than people.

To summarize, our main contributions are:

• We developed a flexible and fast multi-view

vision-based system capable of calculating a 3D

safeguard zone for person-cobot collision avoid-

ance.

• We compared both bounding box producing de-

tectors and instance segmentation techniques as

input for a visual hull calculation.

• We performed extensive experiments to determine

the optimal speed and accuracy trade-off, using

different state-of-the-art people detectors.

We tested the proposed approach in a real-live

lab setting, and for evaluation we used a public

dataset CMU (Joo et al., 2015), containing point

cloud ground truth of various scenarios taken from

many calibrated camera perspectives.

The remainder of this paper is structured as fol-

lows. Section 2 discusses various techniques pro-

posed in literature to calculate a 3D representation

of objects. Section 3 follows, describing our test

dataset, and specifying which sequences were used

during evaluation, alongside a description of the pre-

processing techniques we developed. Our proposed

approach is detailed in section 4, followed by section

5 discussing our results on the test datasets. We end

with a conclusion and future work in section 6.

2 RELATED WORK

One of the primary concerns involving cobots, is the

safety of the operator (Vicentini, 2020; Villani et al.,

2018). When working nearby robotic parts, an emer-

gency button must be available at all times. However,

during a manufacturing process when something goes

wrong, it might take some time before the operator

can use the emergency button. Automatically trigger-

ing an emergency stop reduces this delay, increasing

the safety of the operator. Several sensing techniques

exist today, e.g. a torque sensor that measures move-

ment resistance might trigger an error when too much

force is required (Phan et al., 2018). However, these

sensors only act when a collision has occurred which

is not ideal and might scare the patient. Other tech-

niques use capacitive or laser tactile proximity sen-

sors (Navarro et al., 2013; Safeea and Neto, 2019),

stopping an imminent collision between the operator

and the robot only nearly before it happens. In our use

case, the patients and medical staff are untrained and

therefore unaware of how close the robot comes be-

fore stopping. Furthermore, only stopping when near

something might still result in a crash depending on

the configured proximity distance of the sensors.

Instead of mounted sensors on each mobile

robotic component, Mohammed et al. (Mohammed

et al., 2017) installed two depth cameras nearby the

cobot and operator. By using two kinect sensors they

calculate a 3D occupancy grid, enabling a safeguard

zone of the people present. However, they rely on

prior background data to filter out the 3D noise and

known robot position to filter out the person points.

In our case, this technique is not possible since peo-

ple walk around in the room in addition to having no

static background to filter out 3D noise. Furthermore,

Mohammed et al. (Mohammed et al., 2017) currently

only uses two depth sensors placed nearby the oper-

ator and the small robotic arm offering little chances

of occlusions by other objects or people. Whereas

our application, a large-sized scanner room equipped

with large mobile equipment, has a higher chance

of occlusions on certain cameras. To overcome this,

more than two cameras can be installed capturing an

overview from multiple viewpoints which might be

combined to partially overcome camera occlusions.

However, increasing the number of depth cameras

VISAPP 2021 - 16th International Conference on Computer Vision Theory and Applications

628

like the kinect comes with an increase in complexity

and increase of the required computational power and

hardware cost. Instead, we chose cheaper RGB sen-

sors allowing an upscale with a feasible price, which

will result in a less complex setup because the possi-

bility of hardware sync triggering.

Most research requiring the 3D positions of peo-

ple, often use a 3D skeleton-based representation.

Whereas some techniques aim to calculate 3D pose

keypoints (Sarafianos et al., 2016; Nie et al., 2017)

from a single camera image, others use multi-view

(Slembrouck et al., 2020) combining 2D pose key-

points together. The current state-of-the-art in both

single view and multi-view 3D pose estimation tech-

niques achieve real-time speed results with accept-

able accuracies for their use cases (Slembrouck et al.,

2020; Sarafianos et al., 2016; Nie et al., 2017). How-

ever, these techniques only output pose keypoints,

whereas for our application we require a 3D bound-

ing volume. Furthermore, both state-of-the-art tech-

niques still have a joint position error of around 5cm,

which for our application is not feasible.

Techniques like (Shi et al., 2020; Yoo et al., 2020)

(evaluated using (Geiger et al., 2012)) show good per-

formance when trying to directly estimate a car and

pedestrian 3D bounding box. The best performing

technique (Shi et al., 2020) uses a 3D RCNN with

available LIDAR point clouds to calculate the 3D

bounding boxes around objects. Although this ad-

ditional sensory data is easily acquired from a vehi-

cle perspective, in our case where we capture from

a top-down perspective, occlusions might reduce the

performance greatly. Furthermore, we require a more

tight 3D enclosure around the person, whereas a 3D

bounding box might be overestimating the person, re-

stricting the movement of the cobots.

A classic method called visual hull (Laurentini,

1994) is capable of acquiring a 3D voxel grid of an

object, using the silhouette of the object taken from

multiple perspectives. (Abdelhak and Chaouki, 2016;

Matusik et al., 2000; Vlasic et al., 2008; Furukawa

and Ponce, 2006; Esteban and Schmitt, 2004). These

techniques, however, often rely on a fast background

subtractor whilst controlling the environment back-

ground and lighting to improve the quality of the ac-

quired foreground, i.e. the silhouette. The mobile na-

ture of the cobots might cause them to be mistaken

for people by the background subtractor. To over-

come the aforementioned challenges, we propose to

use object detection techniques as input for such a vi-

sual hull approach, ensuring that our system works

under various lighting changes and that the resulting

safeguard zone only includes people.

Object detectors in most cases output a bound-

ing box around the object, which for many use-cases

is enough. Multi-stage object detectors (e.g. (Ren

et al., 2015)) achieve very high accuracy by first cal-

culating box proposals, and then performing box clas-

sification. However, the use of multiple stages in-

creases computational complexity, rendering it diffi-

cult to achieve real-time performance. Single-stage

approaches (Liu et al., 2016; Lin et al., 2017; Redmon

and Farhadi, 2018) outperform the multi-stage tech-

niques in terms of speed, with only a minor decrease

in accuracy. Increasing the speed performance even

further with only minor decreases of the accuracy is

often achieved by changing the neural network back-

bone calculating the image features. For example, the

recently proposed MobileNetv3+SSD (Howard et al.,

2019), has a MobileNetV3 backbone optimised for

embedded platforms which minimises the number of

parameters and therefore the required computational

cost.

The bounding boxes produced by these object de-

tection approaches from multiple viewpoint cameras

already allow to calculate a coarse visual hull. How-

ever, exact segmentation of the persons in the image

evidently increases the overall accuracy of the system,

since bounding boxes often tend to give an overesti-

mation of the 3D space. Techniques like (He et al.,

2017; Cai and Vasconcelos, 2019) add an additional

stage after the multi-stage bounding box object detec-

tors to generate an instance mask. However, adding

an additional stage will decrease the network speed

even further. A recent technique called YOLACT++

(Bolya et al., 2019b; Bolya et al., 2019a) aims at sin-

gle shot instance segmentation by simultaneously de-

tecting the bounding box and proposing mask proto-

types of each object in parallel. This ensures real-time

performance at the cost of only a small drop in accu-

racy.

In this work, we will search for the optimal

trade-off between speed and accuracy by compar-

ing both the calculated 3D safeguard zones us-

ing the bounding box detections from the Mo-

bileNetv3+SSD (Howard et al., 2019) method (in its

large and small versions) against the instance seg-

mentations from YOLACT++ (Bolya et al., 2019a).

We compare the results also to more classical back-

ground subtraction techniques.

3 DATASET

To evaluate our system we require a public dataset

with people in various poses in additions to occlu-

sions, all taken from multiple calibrated top-down

camera perspectives. In our use case, we mainly fo-

Multi-view Real-time 3D Occupancy Map for Machine-patient Collision Avoidance

629

z

x

[0;0]

00_01

00_19

00_04

00_07

00_02

00_28

00_13

00_17

00_10

00_06

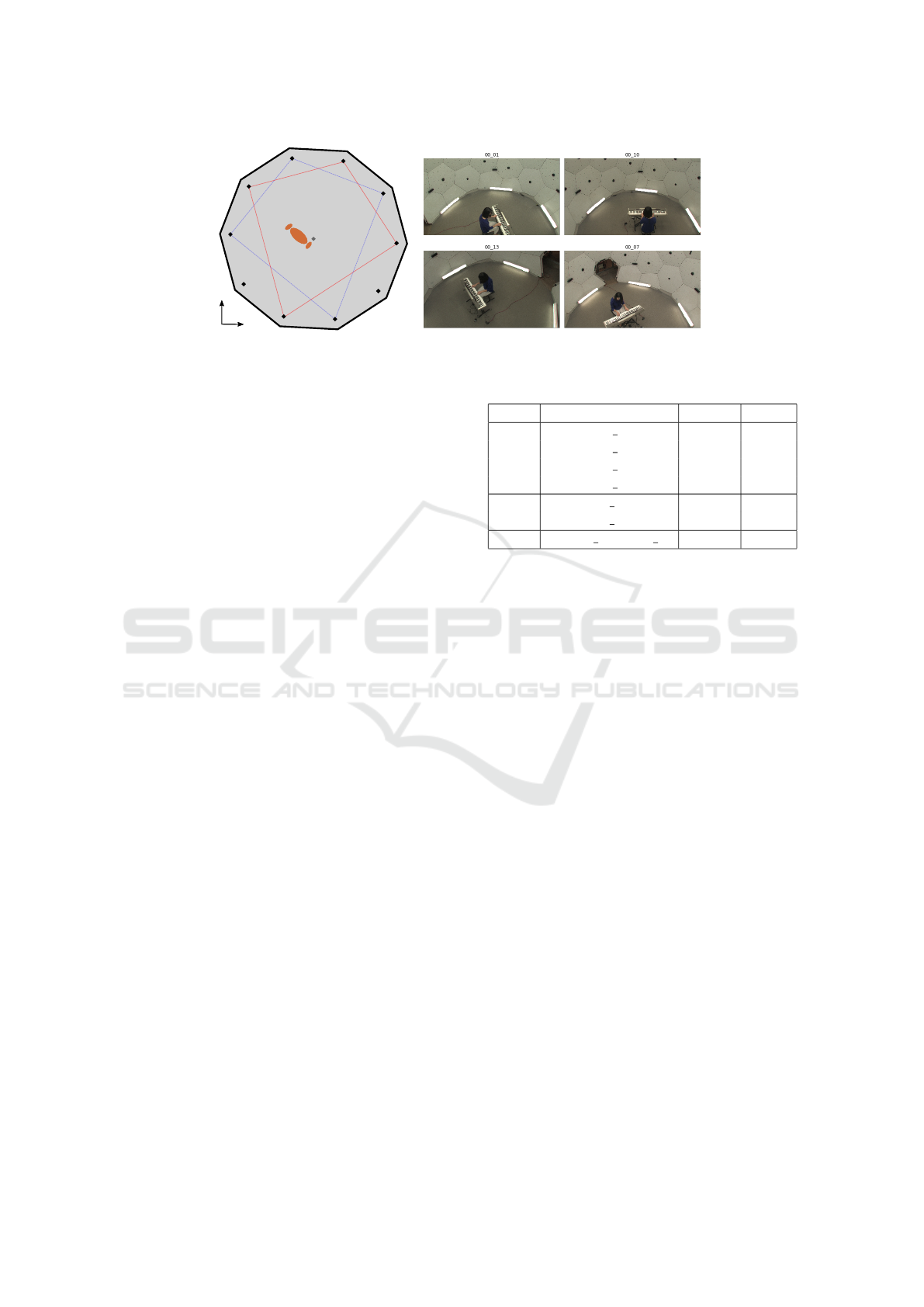

Figure 2: (left) Top-down scheme of the Panoptic dataset, showing the used cameras and two camera sets, (blue and red).

(right) Example frames from the piano sequence taken from the blue camera set.

cus on the 3D position of a single patient, walking

around the scanner room to take place on or in front

of the table or bucky. In this room, multiple top-down

wide-angle image sensors must be installed, captur-

ing the area accessible by the patient. In addition to

sensory data, person point cloud ground truth is re-

quired to measure the accuracy of our calculated pa-

tient occupancy map. We found two publicly avail-

able dataset resembling our use case best, the Panop-

tic Studio (Joo et al., 2015; Joo et al., 2017) and

Multi-View Operation Room (MVOR) dataset (Sri-

vastav et al., 2018). While the MVOR dataset fea-

tures an operation room with similar equipment as our

scanner room, too many people are present, with only

a limited amount of 3D poses and movement varia-

tions. Furthermore, the dataset has only images taken

from three cameras, with no person point cloud data.

The Panoptic dataset, however, contains many differ-

ent scenarios and pose variations of both single and

multiple people, taken from different viewpoints. Al-

though 3D point clouds acquired by the kinects are

available, they are automatically generated and in-

clude noise and other objects apart from people. Be-

low, we describe which sequences we used, followed

by our pre-processing techniques to filter out only the

person point clouds.

3.1 Sequences

The Panoptic dataset contains many different situa-

tions and sequences. As mentioned before, our ap-

plication mainly focuses on avoiding collision with

a single patient. To test various situations, we com-

posed three subsets composed using sequences taken

from the Panoptic studio dataset. Each of them will

test a different scenario and will for the remainder

of this paper be referred to as, the single, piano, and

multi set. Table1 shows which sequences were aggre-

gated from the Panoptic dataset.

The “single” set contains four sequences, each

containing a single person moving around with vari-

Table 1: Used sequences from the Panoptic dataset.

Set Sequences Frames People

Single

171026 pose1 1922 1

171026 pose2 1412 1

171204 pose1 2891 1

171204 pose2 1139 1

Piano

161029 piano1 278 2

161029 piano2 1295 1

Multi 170407 haggling a1 2489 3

ous poses. We subsampled the large sequences in time

(1 frame out of 10), since there is only little variation

between frames.

In our scanner room, the patients sometimes might

be partially occluded (e.g. by the measurement in-

struments or a wheelchair). Such exact situations are

not included in the Panoptic dataset sequences. How-

ever, some sequences show a pianist whose body is in-

deed partially occluded by her instrument, which we

used to simulate occluded patients (the “piano” set).

While other sequences with other interaction objects

are available, they are not stationary and therefore dif-

ficult to exclude from the ground truth point clouds,

explained in more detail in section 3.3.

The “multi” set shows multiple people walking

around in the small room, frequently going outside

the field-of-view of several cameras.

3.2 Camera Selection

To minimise occlusions and maximise the field-of-

view in the scanner room, the best option would be to

place the cameras in each corner of the scanner room,

providing a top-down overview.

The Panoptic dataset is recorded in a sphere-like

room with various types of cameras positioned in var-

ious locations (see figure 2). Ten wide-angle cameras

are installed at the top around the room providing a

top-down perspective. At the left of figure 2, a scheme

of the Panoptic setup is visible, with the approximated

locations and names of the cameras used in this work.

VISAPP 2021 - 16th International Conference on Computer Vision Theory and Applications

630

Pre-Processing

2D Dilation

None

Silhouettes

YOLACT++

MobileNetV3+SSD

Visual Hull

Thresh # Cams

Thresh Confidence

Post-Processing

None

3D Dilation

Output

Figure 3: Our proposed approach showing the four input cameras, each used component, the pipeline output (red) and the

pre-processed ground truth (green).

From these 10 cameras, we select a set of four cam-

eras (blue) in such a way that they mimic the positions

of the cameras in our scanner room, (example frames

at the right-hand side of Figure 2).

During the evaluation, all nine camera combina-

tions using these four relative camera positions are

used so no camera combination is arbitrarily chosen,

(e.g. next combination in red).

3.3 Pre-processing Ground Truth

A common problem when working with 3D data

points is that the sheer amount of data increases the re-

quired computation power very quickly. The ground

truth currently contains fine 3D positions with a high

resolution, which is not required for our safeguard

system. Therefore, we quantize the points to a reso-

lution of 5cm, reducing the number of points greatly,

which leads to a lower latency (due to the decrease in

computational power).

As we mentioned, we require the ground truth

point clouds of all people present in the room. How-

ever, since these point clouds were automatically gen-

erated using Kinect cameras solely based on captured

depth maps, other objects are present in these point

clouds. Therefore, in our second pre-processing step,

we filter out the people points using the available an-

notated 3D poses.

Since the point clouds were automatically gener-

ated based on Kinect depth maps only, the 3D per-

son point clouds are hollow inside. The lack of these

points poses no problem for a robot path planner since

the outer points will shield the inner points. However,

when comparing our generated 3D occupancy maps

to the ground truth, it will seem to have a decreased

accuracy due to these hollow regions. Therefore, we

fill the hollow upper body region, using the ground

truth 3D pose points of the neck and waist. These 3D

points are dilated once in 3D, creating a 3D volume

that we add to the ground truth point cloud to fill the

hollow upper body region.

4 APPROACH

In the previous section, we discussed the Panoptic

dataset, providing calibrated cameras images from

various positions and the pre-processed 3D point

cloud ground truth of each person. Our main goal

is acquiring a 3D safeguard zone that makes it pos-

sible to restrict the movements of robotic parts in the

scanner room, achieved by calculating a 3D people

occupancy map. Figure 3 shows a block diagram of

our complete approach. As input, we use four dif-

ferent viewpoints (i.e. cameras). In a first step, we

calculate the silhouettes of each person in the image.

These silhouettes are optionally pre-processed with a

2D dilation before the visual hull is calculated. Next,

this visual hull can be post-processed with a 3D di-

lation before being used as a 3D occupancy map. In

the next subsections, we describe each block in more

detail.

4.1 Silhouettes

Since our specific use-case involves person safety as-

pects, the latency should be minimal. Furthermore,

recall is more important than precision. Indeed, it is

much more costly to miss a person (which might get

hit by the robotic arm), then to generate a larger area

where the robot cannot be used. As a latency starting

point, we chose to use the MobileNetV3+SSD detec-

tor (both the small and large model) (Howard et al.,

2019). These models are heavily optimised for mo-

bile devices with low computational power and there-

fore have a small latency. However, this framework

outputs bounding boxes. Using a bounding box in-

stead of a silhouette to calculate the visual hull will

yield over-estimating the person’s 3D volume. There-

fore, we compare this with the single-shot instance

segmentation technique(Bolya et al., 2019a), trained

to output the masks of detected objects. Figure 4 illus-

trates an output example of these three different mod-

els on a single time frame from four viewpoints from

the Panoptic dataset. These visual results already re-

veal interesting observations. Visually comparing the

small MobileNetv3+SSD model output( fig. 4a), with

Multi-view Real-time 3D Occupancy Map for Machine-patient Collision Avoidance

631

(a) MobileNetV3+SSD Small

(b) MobileNetV3+SSD Large

(c) YOLACT++

Figure 4: Example output detections of the different frameworks.

the large model (fig. 4b), we notice that in almost all

cases all persons are found. The bounding box from

the small model is sometimes overestimated, and the

small person in the entry of the dome was not found.

Whereas the large model had a better detection rate,

the bounding boxes are more accurate and the small

person in the entry was found.

Because the overestimated detection only occurs

on a single frame, and the person in the entry is

actually not part of the ground truth. The output

of YOLACT++ (fig. 4c), is capable of detecting the

object bounding box along with the instance seg-

ments of each person with high confidence. How-

ever, YOLACT++ does not use pixel classification to

output these instance segmentation but uses prototype

masks to aggregate the single segmentation mask.

Each prototype contains both positive segmentation

pixel areas that are part of the object, and negative

pixels areas (background or a part of another object).

Together with the prototype mask, mask coefficients

are calculated that combine all the prototypes to either

agree or disagree together creating a full instance seg-

mentation mask. Therefore, the contours might have

a little offset from the actual person contour.

4.2 Visual Hull

We propose to construct the 3D occupancy map as fol-

lows. Firstly, Our case requires a minimum resolution

of 5 cm (see section evaluation 5.1 for more details on

the required specs), thus the resolution of the occu-

pancy map is reduced to 5cm. The total safeguard 3D

voxel grid contains 600.000 voxels, which are by de-

fault unoccupied. Next, we determine which of these

points are occupied (by persons) by combining the sil-

houette output from multiple top-down perspectives,

as determined above. For each camera viewpoint, we

calculate the projection cone of this camera. Where

it intersects with a silhouette, we increment the cor-

responding value for that voxel. This way, the final

voxel grid values represent the number of cameras

that contained a projected point of a silhouette. This

value, together with the minimal required cameras,

can be varied to output the 3D occupancy map (see

section 5.2).

4.3 Pre-and Post-processing

As explained before, the instance segmentation con-

tours often have a slight offset from the actual person

silhouette. This implies that some 3D projected points

(that should be part of the silhouette) fall outside of

the contour around the person. We quantized our grid

to a resolution of 5cm to decrease the number of 3D

points and to increase the processing speed. How-

ever, the quantization of the points can cause some

projected 3D points positioned near the contour to

either be shifted inside or outside of the person sil-

houette. We tested two different approaches to re-

duce the aforementioned effects. We can either dilate

the 3D occupancy map or perform a dilation of the

silhouettes output from YOLACT++ (i.e. in the 2D

domain). The latter is done by adding margins near

the contours, which allow for more projected points

to fall within the person detection silhouette. When

comparing both approaches, they both showed an in-

VISAPP 2021 - 16th International Conference on Computer Vision Theory and Applications

632

crease in recall, however, the 2D dilation is far less

computationally expensive. Furthermore, the time re-

quired to execute 2D dilation only slightly depends on

the number of detections, while the execution time of

the 3D dilation highly depends on the number of 3D

points. Therefore, we use the 2D dilation over the 3D

dilation.

5 EVALUATION

For our use case, a 3D safeguard system capable of

preventing collisions with people in an automated X-

ray scanner room, we search an optimal trade-off be-

tween speed and accuracy. This section first specifies

the minimum requirements for such a system devised

together with a manufacturer of X-ray scanner rooms,

followed by the qualitative results of our approach on

a single frame and video. Next, we will quantitatively

evaluate the accuracy, and discuss the accuracy-speed

trade-off. Finally, we will discuss the robustness of

our framework against occlusions.

5.1 Specifications

Experts in the field indicate a minimal speed of 5FPS,

in other words, the 3D safeguard output of the system

has a maximum allowed latency of 200ms. Further-

more, the 3D outputs must have a resolution of 5cm.

Such latency and resolution allow for optimal robot

control while assuring maximal safety. As explained

above, we prefer high accuracy and give priority to

high recall over a high precision.

5.2 3D Map

To compare the accuracy of our approach we com-

pare the calculated safeguard voxel grid with the pre-

processed ground truth. For each voxel in the ground

truth, we check whether it is found in the safeguard

voxel grid, producing a true positive. If this is not

the case this will produce a false negative. Finally, all

safeguard voxels that were not present in the ground

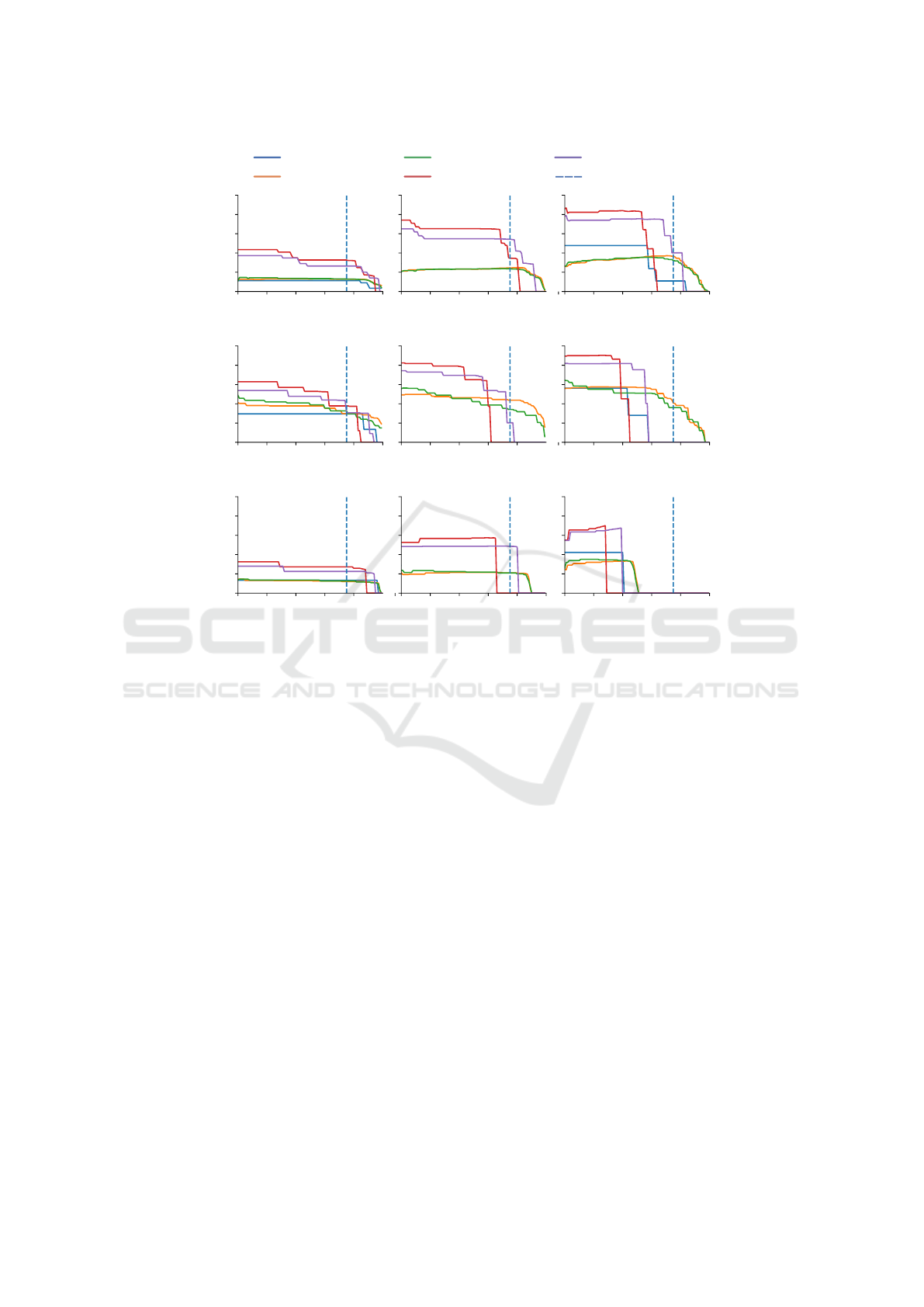

truth are counted as false positives. We sweep over the

threshold on the detection confidence of the bounding

boxes and silhouettes, using the previously mentioned

metrics to calculate precision-recall curves which al-

low us to define an optimal point, as shown in figure

6. Instead of determining an optimal point, we use

the precision at a minimum recall of 0.75 as a met-

ric to compare the different models and pre- or post-

processing techniques. We used the same method to

evaluate the influence of different minimum required

number of viewpoints from which a person must be

(a) Single set

(b) Piano set

(c) Multi set

Figure 5: Example output showing the output of Mo-

bileNetV3+SSD small, large and YOLACT++ with 2D di-

lation.

visible. With a minimum of 2 cameras producing a

higher recall with lower precision due to filtering out

fewer voxels. Whereas a min. of 4 cameras is more

strict with a lower recall and higher precision. From

the 10 different camera viewpoints, we consecutively

select a set of four relative camera positions (see fig. 2

for an example of two sets - red and blue). In total.

we thus evaluate 10 different sets of camera positions

for each frame. A single-precision result is calculated

by using the micro-average of all 10 sets. Figure 5

shows a qualitative evaluation for each test set (with

minimum 3 cameras), showing the detections on the

camera frames along with the 3D ground-truth and

output for each model

1

. Both figure 5a and 5b show

the output of a single person, clearly indicating that

1

Full video: https://youtu.be/n-HfHBgd-EI

Multi-view Real-time 3D Occupancy Map for Machine-patient Collision Avoidance

633

mobilenet_large

bgs

bgs

yolact++

mobilenet_small yolact++_2d

recall = 0.75

0.0 0.2 0.4 0.6 0.8 1.0

Recall

0.0

0.2

0.4

0.6

0.8

1.0

Precision

min cams = 2

0.0 0.2 0.4 0.6 0.8 1.0

Recall

min cams = 3

0.0 0.2 0.4 0.6 0.8 1.0

Recall

min cams = 4

(a) Single set

0.0 0.2 0.4 0.6 0.8 1.0

Recall

0.0

0.2

0.4

0.6

0.8

1.0

Precision

min cams = 2

0.0 0.2 0.4 0.6 0.8 1.0

Recall

min cams = 3

0.0 0.2 0.4 0.6 0.8 1.0

Recall

min cams = 4

(b) Piano set

0.0 0.2 0.4 0.6 0.8 1.0

Recall

0.0

0.2

0.4

0.6

0.8

1.0

Precision

min cams = 2

0.0 0.2 0.4 0.6 0.8 1.0

Recall

min cams = 3

0.0 0.2 0.4 0.6 0.8 1.0

Recall

min cams = 4

(c) Multi set

Figure 6: PR-curves of each model for the different subsets.

the 3D output of YOLACT++ based silhouettes (red)

is finer, compared to the bounding boxes approach

(yellow and blue). However, bounding boxes yield

a more coarse 3D estimation, which is to be expected

an overestimation of the volume of the person. For

the application at hand, this means that these meth-

ods will produce a wider safeguard zone around the

persons, hence a better recall (but worse precision) as

will be demonstrated below. Figure 5c shows a sim-

ilar behaviour, with less space between people near

each other on the bounding box method compared to

the instance segmentation approach.

5.3 Precision vs. Speed

Figure 7 display the measured performance of the sin-

gle, piano and multi test sets, showing the latency ver-

sus the precision (with a set minimum recall of 0.75)

for each model. Each configuration is represented

by a circle, with the colour representing the used de-

tection method. The size of the circle represents the

set required minimum number of viewpoints that con-

tributed to the voxels. All these experiments measur-

ing latency were executed on an i7-8750H with 32 GB

RAM with an RTX 2060 GPU.

As a baseline method to compare against, we

also used silhouettes procured by a Mixture of Gaus-

sians background subtraction background subtractor

(BGS) approach with an image resolution of 480 ×

270 (Zivkovic, 2004; Zivkovic and Van Der Heijden,

2006). Although we expected the MobileNetV3+SSD

models (using an image resolution of 224 × 224)

to be the best detection based approach, in terms

of latency, the large model seems to be almost 5%

slower than the YOLACT++ model with a larger in-

put resolution of 550 × 550. In terms of performance,

we show results as comparison in figure 6, showing

that YOLACT++ far outperforms both the BGS and

MobileNetV3+SSD. For MobileNetv3+SSD this is

mainly caused by the overestimating of the bounding

box silhouettes, causing many false positives. With

the BGS approach, we see that certain body parts are

missing, which required us to add sufficient dilation

to reach the minimum recall of 75%. Moreover, using

background subtraction it is unavoidable that people

disappear in the background when immobile, which is

in our application always the case as patients are lay-

ing on a table or standing still during scans. The BGS

results on this Panoptic dataset hence show a better

performance than what is expected in a real scanning

VISAPP 2021 - 16th International Conference on Computer Vision Theory and Applications

634

0.2

0.4

0.6

0.8

1.0

precision at recall=0.75

Single person

model

bgs

mobilenet_large

mobilenet_small

yolact++

yolact++_2d

min cams

2

3

out of 4

out of 4

100 105 110 115 120 125

latency

0.0

0.2

0.4

0.6

0.8

1.0

precision at recall=0.75

Single person

100 105 110 115 120 125

latency

0.0

0.2

0.4

0.6

0.8

1.0

precision at recall=0.75

Multiple people

100 105 110 115 120 125

latency

0.0

0.2

0.4

0.6

0.8

1.0

precision at recall=0.75

Partially occluded single

Figure 7: Latency vs. precision at a minimum recall of 0.75 for all sequences.

room. In the case of the Yolact++ approach, with no

missing body parts, adding the 2D dilation causes an

increase in both recall and precision.

5.4 Occlusions

In a second experiment, we evaluate the performance

of our framework with regard to occlusion. As seen

for the single test set results (top-left graph of fig. 7),

our framework achieves good performance in both

speed and accuracy. However, for sets with occlusion

(piano set and multi set, a drop in precision and recall

is seen. Figures 6c and 6b show that a decrease occurs

when the minimum number of required viewpoints is

set to 4 (i.e. all 4 cameras need to find the detections),

even causing some approaches to not reach the mini-

mum recall of 75%. This threshold is considered very

strict since any voxel not projected within a detection

on all four cameras is filtered out. Depending on the

level of occlusion, this is to be expected since missing

parts will not be compensated for by the other cam-

eras with no occlusion. Hence, our approach enables

us to create a safeguard zone, even around partially

occluded people, by setting this amount of required

views lower than the number of cameras installed.

6 CONCLUSIONS

In this work, we searched for a detection based ap-

proach capable of calculating a 3D safeguard region

to ensure person safety by restricting the movements

of cobots in e.g. medical scanning rooms. In this

paper, we proposed to extend the classic visual hull

3D estimation technique with CNN-based person de-

tection and segmentation methods, instead of the tra-

ditionally used background subtraction. We evalu-

ated several techniques on a public dataset comparing

their latency and precision at a guaranteed recall. Our

results show that the 2D dilated Yolact++ approach

reaches a precision of 54% with a recall of 75% with

a latency of 123ms. Even though the latency is higher

compared to a traditional BGS, it achieves higher pre-

cisions and still performs faster than the maximum la-

tency of 200 ms. In future work, a dataset featuring

the actual equipment could be gathered to evaluate

even further, adding the challenge of mobile cobots

causing more moving occlusions that are disastrous

for BGS.

ACKNOWLEDGEMENTS

This work is supported by VLAIO and AGFA NV via

the Start to Deep Learn TETRA project.

REFERENCES

Abdelhak, S. and Chaouki, B. M. (2016). High perfor-

mance volumetric modelling from silhouette: Gpu-

image-based visual hull. In IEEE/ACS 13th Interna-

tional Conference of Computer Systems and Applica-

tions (AICCSA). IEEE.

Bolya, D., Zhou, C., Xiao, F., and Lee, Y. J. (2019a).

Yolact++: Better real-time instance segmentation.

Bolya, D., Zhou, C., Xiao, F., and Lee, Y. J. (2019b). Yolact:

Real-time instance segmentation. In ICCV.

Multi-view Real-time 3D Occupancy Map for Machine-patient Collision Avoidance

635

Cai, Z. and Vasconcelos, N. (2019). Cascade r-cnn: High

quality object detection and instance segmentation.

arXiv preprint arXiv:1906.09756.

Edward, J., Wannasuphoprasit, W., and Peshkin, M. (1999).

Cobots: Robots for collaboration with human opera-

tors.

Esteban, C. H. and Schmitt, F. (2004). Silhouette and stereo

fusion for 3d object modeling. Computer Vision and

Image Understanding.

Furukawa, Y. and Ponce, J. (2006). Carved visual hulls for

image-based modeling. In European Conference on

Computer Vision. Springer.

Geiger, A., Lenz, P., and Urtasun, R. (2012). Are we ready

for autonomous driving? the kitti vision benchmark

suite. In CVPR. IEEE.

He, K., Gkioxari, G., Doll

´

ar, P., and Girshick, R. (2017).

Mask r-cnn. In Proceedings of the IEEE international

conference on computer vision.

Howard, A., Sandler, M., Chu, G., Chen, L.-C., Chen, B.,

Tan, M., Wang, W., Zhu, Y., Pang, R., Vasudevan, V.,

et al. (2019). Searching for mobilenetv3. In Proceed-

ings of the IEEE International Conference on Com-

puter Vision.

Joo, H., Liu, H., Tan, L., Gui, L., Nabbe, B., Matthews,

I., Kanade, T., Nobuhara, S., and Sheikh, Y. (2015).

Panoptic studio: A massively multiview system for so-

cial motion capture. In The IEEE International Con-

ference on Computer Vision (ICCV).

Joo, H., Simon, T., Li, X., Liu, H., Tan, L., Gui, L.,

Banerjee, S., Godisart, T. S., Nabbe, B., Matthews,

I., Kanade, T., Nobuhara, S., and Sheikh, Y. (2017).

Panoptic studio: A massively multiview system for so-

cial interaction capture. IEEE Transactions on Pattern

Analysis and Machine Intelligence.

Laurentini, A. (1994). The visual hull concept for

silhouette-based image understanding. IEEE Trans-

actions on pattern analysis and machine intelligence.

Lin, T.-Y., Goyal, P., Girshick, R., He, K., and Doll

´

ar, P.

(2017). Focal loss for dense object detection. In

Proceedings of the IEEE international conference on

computer vision.

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S.,

Fu, C.-Y., and Berg, A. C. (2016). Ssd: Single shot

multibox detector. In ECCV. Springer.

Matusik, W., Buehler, C., Raskar, R., Gortler, S. J., and

McMillan, L. (2000). Image-based visual hulls. In

Proceedings of the 27th annual conference on Com-

puter graphics and interactive techniques.

Mohammed, A., Schmidt, B., and Wang, L. (2017). Ac-

tive collision avoidance for human–robot collabora-

tion driven by vision sensors. International Journal

of Computer Integrated Manufacturing.

Navarro, S. E., Marufo, M., Ding, Y., Puls, S., G

¨

oger, D.,

Hein, B., and W

¨

orn, H. (2013). Methods for safe

human-robot-interaction using capacitive tactile prox-

imity sensors. In IEEE/RSJ International Conference

on Intelligent Robots and Systems. IEEE.

Nie, B. X., Wei, P., and Zhu, S.-C. (2017). Monocular 3d

human pose estimation by predicting depth on joints.

In 2017 IEEE International Conference on Computer

Vision (ICCV). IEEE.

Peshkin, M. and Colgate, J. E. (1999). Cobots. Industrial

Robot: An International Journal.

Phan, T.-P., Chao, P. C.-P., Cai, J.-J., Wang, Y.-J., Wang, S.-

C., and Wong, K. (2018). A novel 6-dof force/torque

sensor for cobots and its calibration method. In IEEE

International Conference on Applied System Invention

(ICASI). IEEE.

Redmon, J. and Farhadi, A. (2018). Yolov3: An incremental

improvement. arXiv preprint arXiv:1804.02767.

Ren, S., He, K., Girshick, R., and Sun, J. (2015). Faster

r-cnn: Towards real-time object detection with region

proposal networks. In Advances in neural information

processing systems.

Safeea, M. and Neto, P. (2019). Minimum distance calcu-

lation using laser scanner and imus for safe human-

robot interaction. Robotics and Computer-Integrated

Manufacturing.

Sarafianos, N., Boteanu, B., Ionescu, B., and Kakadiaris,

I. A. (2016). 3d human pose estimation: A review

of the literature and analysis of covariates. Computer

Vision and Image Understanding, 152.

Shi, S., Guo, C., Jiang, L., Wang, Z., Shi, J., Wang, X.,

and Li, H. (2020). Pv-rcnn: Point-voxel feature set

abstraction for 3d object detection. In CVPR.

Slembrouck, M., Luong, H., Gerlo, J., Sch

¨

utte, K.,

Van Cauwelaert, D., De Clercq, D., Vanwanseele,

B., Veelaert, P., and Philips, W. (2020). Multiview

3d markerless human pose estimation from openpose

skeletons. In International Conference on Advanced

Concepts for Intelligent Vision Systems. Springer.

Srivastav, V., Issenhuth, T., Kadkhodamohammadi, A.,

de Mathelin, M., Gangi, A., and Padoy, N. (2018).

Mvor: A multi-view rgb-d operating room dataset for

2d and 3d human pose estimation. arXiv preprint

arXiv:1808.08180.

Vicentini, F. (2020). Collaborative robotics: a survey. Jour-

nal of Mechanical Design.

Villani, V., Pini, F., Leali, F., and Secchi, C. (2018). Survey

on human–robot collaboration in industrial settings:

Safety, intuitive interfaces and applications. Mecha-

tronics.

Vlasic, D., Baran, I., Matusik, W., and Popovi

´

c, J. (2008).

Articulated mesh animation from multi-view silhou-

ettes. In ACM SIGGRAPH 2008 papers.

Yoo, J. H., Kim, Y., Kim, J. S., and Choi, J. W. (2020). 3d-

cvf: Generating joint camera and lidar features using

cross-view spatial feature fusion for 3d object detec-

tion. arXiv preprint arXiv:2004.12636.

Zivkovic, Z. (2004). Improved adaptive gaussian mixture

model for background subtraction. In Proceedings of

the 17th International Conference on Pattern Recog-

nition, 2004. ICPR 2004. IEEE.

Zivkovic, Z. and Van Der Heijden, F. (2006). Efficient adap-

tive density estimation per image pixel for the task of

background subtraction. Pattern recognition letters.

VISAPP 2021 - 16th International Conference on Computer Vision Theory and Applications

636