Admonita: A Recommendation-based Trust Model for Dynamic Data Integrity

Wassnaa Al-Mawee¹, Steve Carr¹ and Jean Mayo²

¹Department of Computer Science, Western Michigan University, 1903 W. Michigan Ave., Kalamazoo, MI 49008-5466, U.S.A.

²Department of Computer Science, Michigan Technological University, 1400 Townsend Dr., Houghton, MI 49931-1292, U.S.A.

Keywords:

Data Integrity, Biba Model, Trust, Subjective Logic, Tranquility.

Abstract:

Data integrity is critical to the secure operation of a computer system. Applications need to know that the data they access is trustworthy. Many current production-level integrity models are tightly coupled to a specific domain (e.g., databases) or apply only after the fact (e.g., backups). In this paper we propose a recommendation-based trust model for data integrity, called Admonita, that is applicable to any structured data in a system and provides a measure of trust to applications on the fly. The proposed model is based on the Biba integrity model and uses the concept of an Integrity Verification Procedure (IVP) from the Clark-Wilson model. Admonita incorporates subjective logic to maintain the trustworthiness of data and applications in a system. To prevent critical applications from losing trust, Admonita also incorporates the principle of weak tranquility to ensure that highly trusted applications can maintain their trust levels. We develop a simple algebra around these elements and describe how it can be used to calculate the trustworthiness of system entities. By applying subjective logic, we build a trust model for implementing data integrity that is grounded in an artificial reasoning framework.

1 INTRODUCTION

Computer security is at the forefront of modern society. The Ponemon Institute and IBM Security have published their 2019 Cost of a Data Breach Report, which shows that a data breach costs an organization almost $4 million on average. Given this cost, organizations must do everything they can to ensure the confidentiality, availability and integrity of the data in their systems. To do this, researchers must develop practical models that allow a system to provide assurance of data security.

Confidentiality and availability have justly received a significant amount of attention in the literature. However, these two properties are not enough. For instance, encryption and access control make unauthorized access to data difficult, but they do not verify the integrity of the data being protected. An authorized user or application may make changes to data that lower its integrity, and confidentiality measures cannot detect such an authorized, yet erroneous, change. Thus, an application may access data that has been altered in a way that lowers its integrity without being able to detect the problem.

Integrity is often maintained by restricting access to high-integrity items to subjects that have high integrity. However, as illustrated in the previous paragraph, integrity is also a property of the data itself, not just of who accesses or modifies it. How can an application know how trustworthy the data it accesses is? In addition, if an application tries to access data that is not very trustworthy, should that application be allowed to access that data and, if so, does the access affect the future trustworthiness of both the data and the subject?

This paper addresses these questions by improving upon the trust-enhanced data integrity model of Oleshchuk (Oleshchuk, 2012). We present a recommendation-based trust model for dynamic data integrity, called Admonita. Admonita is based upon subjective logic (Jøsang, 1999; Jøsang, 2001; Jøsang, 2002; Gao et al., 2009; Oleshchuk, 2012) and the Biba integrity model (Biba, 1977). In addition, it incorporates the idea of an Integrity Verification Procedure (IVP) from the Clark-Wilson model (Clark and Wilson, 1987), a bidirectional form of the principle of tranquility (Bell and Padula, 1973; Bishop, 2019) that allows integrity levels to increase or decrease, and the notion that the data itself has a measure of integrity apart from who modifies it.

Al-Mawee, W., Carr, S. and Mayo, J.

Admonita: A Recommendation-based Trust Model for Dynamic Data Integrity.

DOI: 10.5220/0010150402730282

In Proceedings of the 7th International Conference on Information Systems Security and Privacy (ICISSP 2021), pages 273-282

ISBN: 978-989-758-491-6

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In Admonita, the trust level for subjects and ob-

jects is set by a trusted authority. Admonita then

incorporates the opinion of an independent observer

via an IVP implemented in a language that de-

scribes what it means for structured data to have in-

tegrity (Bonamy, 2016; ?). Admonita maintains the

trustworthiness of both subjects and objects in a com-

puting system via the conjunctive, consensus and rec-

ommendation operators from subjective logic. Ad-

monita adjusts the trustworthiness of entities dynam-

ically based upon the trust levels of subjects and the

objects they access, and includes bidirectional weak

tranquility to allow the trust levels to increase or de-

crease.

The rest of the paper is organized as follows. In Section 2 we give background on the Biba and Clark-Wilson models and on a data integrity language. In Section 3 we give an overview of related work on trust models based on subjective logic. In Section 4 we formally introduce the needed notation, relations and notions of subjective logic. Then we present the tranquility principle for dynamic data protection in Section 5. A description of our proposed recommendation-based trust model is presented in Section 6. Section 7 provides a structure and an example for trust authentication in our trust model. Finally, Section 8 concludes this paper.

2 BACKGROUND

2.1 Security Models

There are several security models that address integrity for secure systems. The most directly useful and related to our work are Biba and Clark-Wilson. Each integrity model offers a definition of data integrity and introduces its own mechanisms for preserving integrity.

The first model that supported data integrity based on a subject’s static integrity level was the Biba model, developed by Kenneth J. Biba in 1977 (Balon and Thabet, ; Biba, 1977). The model describes a set of subjects, a set of objects, and a set of integrity levels. Subjects may be either users or processes. Each subject and object is assigned an integrity level, denoted as I(S) and I(O) for the subject S and the object O, respectively. The idea is that subjects with lower integrity levels are not permitted to modify objects that have higher integrity levels. Similarly, subjects with high integrity levels cannot be corrupted by objects with low integrity levels. Biba is a well-known general integrity model in computer systems. Its mandatory integrity property succeeds at enforcing integrity in a system, but it does not deal with the integrity of the data itself; authorized users can still make improper modifications. For example, if a trusted user account is compromised, an attacker can use the trusted user’s integrity level to modify high-integrity resources.
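The two Biba access rules just described can be made concrete with a short Python sketch. It assumes, purely for illustration, that integrity levels are plain integers where a larger value means higher integrity; the `Entity` type, the function names and the example entities are hypothetical and not part of Biba's formulation, which only requires an ordered set of levels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    """A subject or object with a static integrity level (illustrative integers)."""
    name: str
    level: int  # I(S) for a subject, I(O) for an object; higher means more trustworthy


def can_read(subject: Entity, obj: Entity) -> bool:
    """No read down: a subject may only read objects at or above its own level."""
    return subject.level <= obj.level


def can_modify(subject: Entity, obj: Entity) -> bool:
    """No write up: a subject may only modify objects at or below its own level."""
    return obj.level <= subject.level


if __name__ == "__main__":
    editor = Entity("editor", level=2)
    system_config = Entity("system-config", level=3)
    download = Entity("download", level=1)
    print(can_modify(editor, system_config))  # False: lower-integrity subject, higher-integrity object
    print(can_read(editor, download))         # False: reading would corrupt the subject
    print(can_read(editor, system_config))    # True
```

Note that these checks only look at who is acting; nothing in them inspects the data, which is the gap the rest of this section points out.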

One of the biggest threats to a company’s data is its own employees, including users and administrators. They are able to access data, make modifications and copies, use USB drives, etc. A popular adoption of the Biba model is in modern Microsoft Windows operating systems, where processes carry integrity labels and low-integrity subjects/processes cannot interact with high-integrity ones. Windows mandatory access control (MAC) ensures data integrity via an access control mechanism. Windows restricts access rights depending on whether the subject’s integrity level is equal to, higher than, or lower than the object’s integrity level. The integrity level of an object is stored as a mandatory label access control entry (ACE) that distinguishes it from the discretionary ACEs governing access to the object (Microsoft, ). The limitation of this technology is that the ACEs can be modified by an offline attack (modification by the system’s administrator). Admonita can address this problem. Admonita is a recommendation-based model that uses past behavior to determine whether to trust an entity. An independent trust opinion is produced by a declarative system, which states what it means for data to have integrity, by validating the actual user input against the resources that the user wants to access. This trust level is computed independently, without human intervention, and combined with the integrity levels of subjects and objects using subjective logic.

A second integrity model is that proposed by David Clark and David Wilson (CWM) (Clark and Wilson, 1987). CWM focuses on the prevention and detection of data integrity faults using transactions. The model is based on two concepts that are used to enforce commercial security policies or constraints:

1. Well-formed transactions: A user can manipulate

data using only constrained rules to ensure the in-

tegrity of data.

2. Separation of duty among users: A person who

has the permission to perform well-formed trans-

actions may not have the permission to access the

constrained data.

CWM enforces integrity controls on data by separat-

ing all the data items within a system into two groups:

1. Constrained Data Items (CDIs): Data items that

have associated integrity constraints.

ICISSP 2021 - 7th International Conference on Information Systems Security and Privacy


2. Unconstrained Data Items (UDIs): Data items that

do not have associated integrity constraints.

After classifying the data items, the integrity system

tests the data items through two types of procedures:

1. Transformation Procedures (TPs): Achieve data

transactions by changing the system’s CDIs from

one valid state to another.

2. Integrity Verification Procedures (IVPs): Ensure

that all the CDIs conform to the integrity con-

straints or specifications.
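To illustrate how TPs and IVPs relate to CDIs, here is a toy Python sketch. The banking example, the `transfer_tp` and `ivp` names, and the specific constraints (no negative balances, conserved total) are our own hypothetical choices; the Clark-Wilson model itself leaves the integrity constraints application-specific.

```python
from typing import Dict

Accounts = Dict[str, int]  # CDIs: account name -> balance in cents (hypothetical example)


def ivp(accounts: Accounts, expected_total: int) -> bool:
    """Integrity Verification Procedure: no negative balances and money is conserved."""
    return all(b >= 0 for b in accounts.values()) and sum(accounts.values()) == expected_total


def transfer_tp(accounts: Accounts, src: str, dst: str, amount: int) -> Accounts:
    """Transformation Procedure: move `amount` from src to dst, one valid state to another."""
    if amount <= 0 or accounts[src] < amount:
        raise ValueError("transfer would leave the CDIs in an invalid state")
    updated = dict(accounts)  # leave the caller's CDIs untouched
    updated[src] -= amount
    updated[dst] += amount
    return updated


if __name__ == "__main__":
    cdis = {"alice": 500, "bob": 300}
    total = sum(cdis.values())
    after = transfer_tp(cdis, "alice", "bob", 200)
    print(after, ivp(after, total))  # {'alice': 300, 'bob': 500} True
```

Only the TP is allowed to change the CDIs, and the IVP can be run at any time to confirm that they still satisfy the constraints.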

CWM provides data integrity but imposes a number of restrictions that make it impractical to implement. Transformation procedures become problematic when a single application is able to execute many different transformations. For example, a text editor can be used to produce HTML files or to edit the UNIX password file. To implement CWM, the text editor must be broken into an HTML editor and a password-file editor, certified to produce valid HTML files and a valid UNIX password file, respectively. Additionally, an administrator needs to manage and verify all of these editors, which is impractical.

With respect to integrity protection, all data in-

tegrity models deal with the preservation of trust.

There is a need for a more flexible definition of data

integrity that takes into account whether the data itself

can be trusted apart from who modifies the data.

2.2 A Data Integrity Language

We have incorporated a data integrity language, which we will call Maia (Bonamy, 2016; ?), to work as an IVP. A Maia specification is compiled into an authorized program that ensures that all the constrained data conform to the integrity constraints or specifications contained in the Maia specification file. Maia is a specification language that declares what it means for arbitrary structured file types (Bonamy, 2016; ?) to have integrity as a property of the data contained within the file itself. For example, in the context of Linux, Maia has been used to specify the integrity of system configuration files, PNG files, and others.

In Maia, file verification is accomplished in two phases that correspond to checking the file’s syntax and semantics. In the first phase, the user provides a grammar that is used to verify the file structure and extract its syntactic elements for processing. This syntax-checking component of Maia is designed to work like a normal parser generated by a parser generator, with the elements of the file placed into collections. The second phase of Maia checks these collections of syntactic elements against integrity constraints expressed using set theory and predicate calculus.
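The two phases can be illustrated with a Python sketch. This is not Maia syntax: the line format `name:uid:shell`, the regular expression standing in for a grammar, and the constraints checked in the second phase are all hypothetical, chosen only to show a syntax pass that fills collections followed by set-based predicates over those collections.

```python
import re
from typing import Dict, List, Set

# Hypothetical phase-1 "grammar": one record per line, name:uid:shell
RECORD = re.compile(r"^(?P<name>[a-z_][a-z0-9_-]*):(?P<uid>\d+):(?P<shell>/\S+)$")


def parse(text: str) -> Dict[str, List]:
    """Phase 1 (syntax): validate each line and collect its syntactic elements."""
    collections: Dict[str, List] = {"names": [], "uids": [], "shells": []}
    for lineno, line in enumerate(text.splitlines(), start=1):
        match = RECORD.match(line)
        if match is None:
            raise ValueError(f"line {lineno} does not match the grammar: {line!r}")
        collections["names"].append(match["name"])
        collections["uids"].append(int(match["uid"]))
        collections["shells"].append(match["shell"])
    return collections


def check(collections: Dict[str, List], valid_shells: Set[str]) -> bool:
    """Phase 2 (semantics): set-theoretic predicates over the collected elements."""
    names, uids, shells = collections["names"], collections["uids"], collections["shells"]
    unique_names = len(set(names)) == len(names)          # no duplicate user names
    unique_uids = len(set(uids)) == len(uids)             # no duplicate numeric ids
    shells_valid = set(shells) <= valid_shells            # shells is a subset of valid_shells
    root_is_zero = ("root", 0) in set(zip(names, uids))   # some record is root with uid 0
    return unique_names and unique_uids and shells_valid and root_is_zero


if __name__ == "__main__":
    data = "root:0:/bin/bash\nalice:1000:/bin/sh"
    print(check(parse(data), {"/bin/bash", "/bin/sh"}))  # True
```

A syntax error stops verification in the first phase, while a well-formed file can still fail in the second phase, mirroring the split between structure and meaning.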

3 RELATED WORK

Several trust models based on subjective logic have been proposed to support various organizational security policies. Oleshchuk proposes a trust-enhanced data integrity model that is based on the Biba integrity model and uses subjective logic (Oleshchuk, 2012). In his model, he reformulates the rules of the Biba integrity model in terms of trust and proposes how to combine Role-Based Access Control (RBAC) with the introduced integrity model. Gao et al. (Gao et al., 2009) propose a trust model by analyzing and improving subjective logic. By using subjective logic in their model (Jøsang, 2002), they can evaluate the trust relationship between peers and resolve security problems in practical computing environments. Jøsang proposes a trust management system based on subjective logic (Jøsang, 2001). He proposes an evidence space and an opinion space that are used to evaluate and measure trust relationships. These policy-based trust models use credentials to instantiate policy rules that determine whether to trust an entity, resource or information. The policies do not protect the system entities, since the credentials themselves are information that is not protected by the model. On the other hand, trust models preserve the initial evaluation of data integrity by providing information about the trustworthiness of data and entities. These models do not consider all of the side effects of dynamic data integrity. For instance, the trust opinions of the system’s subjects can keep dropping to lower trust levels when the subjects read less trusted data, but there is no mechanism in the model to raise the integrity levels, possibly resulting in isolation of the subject.

To deal with the problems mentioned above, we propose a new recommendation-based trust model that is based on subjective logic and bidirectional weak tranquility. The recommendation-based trust model uses past behavior during interactions and information from other sources to determine whether to trust an entity. Our model adopts the rules from the Biba integrity model and incorporates recommendation opinions from Maia. In our trust model, integrity levels of subjects and objects are expressed as trust opinions. Since the security of the system is a subjective measure that depends on individuals who are qualified to express trust opinions, we define such trust opinions in the framework of subjective logic. We compute the recommendation values and the trust opinions with a simple algebra based on the trust metrics of our model. We also add a flexible definition of data integrity by using bidirectional weak tranquility.

4 SUBJECTIVE LOGIC

In this section, we use an artificial reasoning framework called subjective logic to express the levels of trust. Due to the lack of certainty about the degree of trustworthiness of subjects and objects, we need opinions to measure the integrity of these subjects and objects. Subjective logic defines the term opinion, w, which expresses an opinion about the trust level of subjects/objects (Jøsang, 1999; Jøsang, 2002). The opinion translates into degrees of trust, distrust and uncertainty, where uncertainty represents the absence of both trust and distrust. Let t, d, and u be trust, distrust and uncertainty, respectively, such that:

$$t + d + u = 1, \quad t, d, u \in [0, 1] \tag{1}$$

The opinion w = {t,d,u} is a triplet satisfying (1). We use opinions to express trust levels. Expressing trust as a triple of trust, distrust and uncertainty, rather than as the single integrity level used in Biba, provides a better integrity model for real-world applications.
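As a minimal sketch of Equation (1), an opinion can be represented as an immutable Python value that rejects triples with a component outside [0, 1] or whose components do not sum to 1. The class name `Opinion` and the floating-point tolerance of 1e-9 are our own assumptions; the paper does not prescribe a representation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    """A subjective-logic opinion w = {t, d, u} that must satisfy Equation (1)."""
    t: float  # degree of trust
    d: float  # degree of distrust
    u: float  # degree of uncertainty

    def __post_init__(self) -> None:
        for label, value in (("t", self.t), ("d", self.d), ("u", self.u)):
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{label}={value} lies outside [0, 1]")
        # Allow a small tolerance because decimal inputs are not exact in binary floating point.
        if not math.isclose(self.t + self.d + self.u, 1.0, abs_tol=1e-9):
            raise ValueError("t + d + u must equal 1")


if __name__ == "__main__":
    print(Opinion(0.7, 0.1, 0.2))  # a fairly trusted entity
    print(Opinion(0.0, 0.0, 1.0))  # total uncertainty: no evidence either way
```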

Subjective logic defines a set of logical operators that correspond to traditional logical operators, such as conjunction (AND), disjunction (OR), and negation (NOT), as well as some non-traditional operators that are used for combining opinions, such as recommendation and consensus. Opinions are the input and output parameters of subjective logic operators. For the purpose of this paper, we define only the consensus, recommendation and conjunctive operators.

Let A and B be two entities that represent ob-

servers who maintain the trust opinions of system re-

sources, and let o be an object. When there are in-

dependent opinions about o, subjective logic defines

a consensus operator to combine these independent

opinions.

Let $w^A_o = \{t^A_o, d^A_o, u^A_o\}$ and $w^B_o = \{t^B_o, d^B_o, u^B_o\}$ be opinions held by the observers A and B, respectively, about o. According to subjective logic, the combined consensus opinion $w^{A,B}_o$ based on opinions $w^A_o$ and $w^B_o$ is defined as follows:

$$w^{A,B}_o = w^A_o \oplus w^B_o = \{t^{A,B}_o, d^{A,B}_o, u^{A,B}_o\}$$

where,

$$t^{A,B}_o = \frac{t^A_o u^B_o + t^B_o u^A_o}{u^A_o + u^B_o - u^A_o u^B_o}, \quad d^{A,B}_o = \frac{d^A_o u^B_o + d^B_o u^A_o}{u^A_o + u^B_o - u^A_o u^B_o}, \quad u^{A,B}_o = \frac{u^A_o u^B_o}{u^A_o + u^B_o - u^A_o u^B_o} \tag{2}$$
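Equation (2) translates directly into code. The following Python sketch uses a plain three-field `Opinion` type (a hypothetical helper, defined here so the block stands alone) and, as our own assumption, raises an error when both inputs have zero uncertainty, because the common denominator of (2) is then zero.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    t: float  # trust
    d: float  # distrust
    u: float  # uncertainty


def consensus(wa: Opinion, wb: Opinion) -> Opinion:
    """Consensus w^{A,B}_o = w^A_o (+) w^B_o of two independent opinions, Equation (2)."""
    kappa = wa.u + wb.u - wa.u * wb.u  # common denominator of Equation (2)
    if kappa == 0.0:
        # Both opinions are dogmatic (u = 0), so Equation (2) is undefined.
        raise ValueError("consensus is undefined when both opinions have u = 0")
    return Opinion(
        t=(wa.t * wb.u + wb.t * wa.u) / kappa,
        d=(wa.d * wb.u + wb.d * wa.u) / kappa,
        u=(wa.u * wb.u) / kappa,
    )


if __name__ == "__main__":
    w = consensus(Opinion(0.6, 0.2, 0.2), Opinion(0.4, 0.2, 0.4))
    print(w, math.isclose(w.t + w.d + w.u, 1.0))
```

In the example the combined uncertainty is 0.08/0.52, about 0.154, lower than either input: two independent observers together know more than either alone. Combining any opinion with the vacuous opinion {0, 0, 1} leaves it unchanged.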

Let A and B be two observers such that observer A invokes observer B to access an object o. Let $w^A_B = \{t^A_B, d^A_B, u^A_B\}$ be A’s opinion about B’s recommendation, and let $w^B_o = \{t^B_o, d^B_o, u^B_o\}$ be B’s opinion about the trustworthiness of the object o. Subjective logic defines a recommendation operator to compute the indirect opinion $w^{AB}_o$ based on opinions $w^A_B$ and $w^B_o$ as:

$$w^{AB}_o = w^A_B \otimes w^B_o = \{t^{AB}_o, d^{AB}_o, u^{AB}_o\}$$

where,

$$t^{AB}_o = t^A_B t^B_o, \quad d^{AB}_o = t^A_B d^B_o, \quad u^{AB}_o = d^A_B + u^A_B + t^A_B u^B_o \tag{3}$$
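The recommendation operator of Equation (3) can be sketched in Python as follows. The `Opinion` helper type and the `recommend` name are illustrative assumptions; the arithmetic follows (3) term by term.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    t: float  # trust
    d: float  # distrust
    u: float  # uncertainty


def recommend(w_ab: Opinion, w_bo: Opinion) -> Opinion:
    """Recommendation w^{AB}_o = w^A_B (x) w^B_o, Equation (3).

    w_ab is A's opinion about B's recommendations; w_bo is B's opinion about o.
    """
    return Opinion(
        t=w_ab.t * w_bo.t,
        d=w_ab.t * w_bo.d,
        # A's distrust of and uncertainty about B both become uncertainty about o.
        u=w_ab.d + w_ab.u + w_ab.t * w_bo.u,
    )


if __name__ == "__main__":
    print(recommend(Opinion(0.8, 0.1, 0.1), Opinion(0.9, 0.05, 0.05)))
```

The example yields approximately t = 0.72, d = 0.04 and u = 0.24. A's derived trust in o can never exceed A's trust in the recommender, and if A fully distrusts B the result is the vacuous opinion {0, 0, 1}.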

Furthermore, subjective logic defines the conjunctive operator that expresses an opinion held by observer A about the trustworthiness of two distinct objects $o_1$ and $o_2$. Let $w^A_{o_1} = \{t^A_{o_1}, d^A_{o_1}, u^A_{o_1}\}$ and $w^A_{o_2} = \{t^A_{o_2}, d^A_{o_2}, u^A_{o_2}\}$ be observer A’s opinions about $o_1$ and $o_2$. Then the conjunction opinion $w^A_{o_1\wedge o_2}$ of $w^A_{o_1}$ and $w^A_{o_2}$ is defined by:

$$w^A_{o_1\wedge o_2} = w^A_{o_1} \wedge w^A_{o_2} = \{t^A_{o_1\wedge o_2}, d^A_{o_1\wedge o_2}, u^A_{o_1\wedge o_2}\}$$

where,

$$t^A_{o_1\wedge o_2} = t^A_{o_1} t^A_{o_2}, \quad d^A_{o_1\wedge o_2} = d^A_{o_1} + d^A_{o_2} - d^A_{o_1} d^A_{o_2}, \quad u^A_{o_1\wedge o_2} = t^A_{o_1} u^A_{o_2} + u^A_{o_1} t^A_{o_2} + u^A_{o_1} u^A_{o_2} \tag{4}$$
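A minimal Python sketch of the conjunctive operator in Equation (4) follows; the `Opinion` helper type and the example values are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    t: float  # trust
    d: float  # distrust
    u: float  # uncertainty


def conjunction(w1: Opinion, w2: Opinion) -> Opinion:
    """Conjunction w^A_{o1 AND o2} = w^A_{o1} AND w^A_{o2}, Equation (4)."""
    return Opinion(
        t=w1.t * w2.t,                               # trusted only if both are trusted
        d=w1.d + w2.d - w1.d * w2.d,                 # distrusted if either is distrusted
        u=w1.t * w2.u + w1.u * w2.t + w1.u * w2.u,   # the remaining mass
    )


if __name__ == "__main__":
    w = conjunction(Opinion(0.8, 0.1, 0.1), Opinion(0.6, 0.2, 0.2))
    print(w, math.isclose(w.t + w.d + w.u, 1.0))
```

The example gives t = 0.48, d = 0.28 and u = 0.24 up to floating-point rounding. Because t1·t2 ≤ min(t1, t2), a conjunction is never more trusted than its less trusted operand.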

In our model, we consider the opinion $w_B$, where $d_B < t_B$, to be more trustworthy than the opinion $w_A$, where $d_A < t_A$, denoted $w_B \succ w_A$, if and only if $t_B > t_A$. When $t_B = t_A$, we consider higher uncertainty to be more trustworthy, that is, $t_B = t_A \implies (w_B \succ w_A \iff u_B > u_A)$.
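The ordering can be sketched as a comparison function. The name `more_trustworthy` is hypothetical, and treating trust values within `math.isclose` of each other as equal is our assumption for floating-point input; the function also checks the precondition d < t stated above.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    t: float  # trust
    d: float  # distrust
    u: float  # uncertainty


def more_trustworthy(wb: Opinion, wa: Opinion) -> bool:
    """Return True if w_B is more trustworthy than w_A (w_B ≻ w_A)."""
    if not (wb.d < wb.t and wa.d < wa.t):
        raise ValueError("the ordering is defined only for opinions with d < t")
    if not math.isclose(wb.t, wa.t):
        return wb.t > wa.t   # higher trust wins
    return wb.u > wa.u       # equal trust: higher uncertainty wins


if __name__ == "__main__":
    print(more_trustworthy(Opinion(0.6, 0.1, 0.3), Opinion(0.6, 0.2, 0.2)))  # True
```

Preferring higher uncertainty on a tie means that, at equal trust, the opinion with less committed distrust ranks higher.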

5 TRANQUILITY FOR DYNAMIC INTEGRITY POLICY

In this section, we outline a tranquility principle that

is trust-enhanced to protect trust levels of system en-

tities and resources.

When a model, such as that of Oleshchuk, allows the trustworthiness of subjects to decrease due to reading less trusted data, a subject may become isolated from system resources, since Biba’s model incorporates only unidirectional weak tranquility. Such isolation can cause a violation of the security policy. For example, the Linux ls command is a command-line utility for listing the contents of the directories named on its command line, and it writes to the standard output (William E. Shotts, ). When ls accesses a corrupted directory or file, the trust level of ls will decrease. If ls continues accessing objects with low integrity levels, ls may become isolated from system resources. To solve this issue, we apply the principle of weak tranquility such that the trust level may both increase and decrease, making it bidirectional.

The tranquility principle allows controlled copying from high security levels to low security levels via trusted subjects. There are two forms of the tranquility principle: strong tranquility and weak tranquility. In strong tranquility, security levels do not change during the normal operation of the system. In weak tranquility, security levels never change in a way that violates a defined security policy. Bidirectional weak tranquility is the more desirable form for our model. An entity may obtain a new, lower trust level due to accessing low-integrity data or invoking low-integrity entities. By applying bidirectional weak tranquility, the entity can progressively accumulate higher trust levels as actions require it. In other words, the integrity levels of subjects and objects are managed within an allowable range to make the process more flexible in application. Thus, our model not only incorporates weak tranquility in a bidirectional manner, but also defines minimum and maximum trust levels that represent boundaries across which a subject’s or object’s integrity level may not change.

6 RECOMMENDATION-BASED TRUST MODEL FOR DATA INTEGRITY

Trust models are divided into two types: policy-based models and recommendation-based models. Both types use a language to express relationships about trust. Each type provides a measure of the trust in an entity, and the result of the evaluation is complete trust, complete distrust, or something in between, held with a varying degree of certainty.

Policy-based models require a language in which to express and analyze system policies. For example, the KeyNote trust management system (Blaze et al., 1998), which builds on PolicyMaker (Blaze et al., 1996), supports applications that use public keys. Recommendation-based models use past behavior, including recommendations from other entities, to determine whether to trust an entity. For example, Abdul-Rahman and Hailes (Abdul-Rahman and Hailes, 1997) base trust on the recommendations of other entities. In their model, they consider direct trust relationships and recommender trust relationships. Trust is computed based on integer values. For direct trust, they use -1 to represent an untrusted entity, values from 1 to 4 to represent the lowest to highest trust values, and 0 to represent the inability to make a trust judgment. For recommender trust values, the integers -1 and 0 have the same meaning as with direct trust, while the values from 1 to 4 indicate how close the recommender’s judgment is to the entity that is being recommended.

Admonita is a recommendation-based trust model based on Biba and Maia. In our proposed model, the Biba integrity model defines the subject-object access properties, while Maia works as an Integrity Verification Procedure (IVP) that preserves data integrity. Basically, a Maia specification defines a set of constraints declaring what it means for data to have integrity. Maia verifies structured data when a subject writes to the file and generates a limited number of integrity levels to reflect the evaluation of the data’s integrity.

The Biba integrity model is concerned with unauthorized modification of data within a system and controls who may access it. It works as a prevention system for data integrity. The model deals with a set of subjects, a set of objects, and a set of integrity levels. Subjects may be either users or processes. Each subject and object is assigned an integrity level, denoted as I(s) and I(o) for the subject s and the object o, respectively. A higher integrity level indicates a more trustworthy subject or object, and a lower level a less trustworthy one.

Let $S = \{s_1, s_2, \ldots\}$ be a set of subjects, and $O = \{o_1, o_2, \ldots\}$ be a set of objects. According to subjective logic, the opinions about a subject and an object are expressed as $w_s = \{t_s, d_s, u_s\}$ and $w_o = \{t_o, d_o, u_o\}$, respectively, where $s \in S$ and $o \in O$. Therefore, the trust opinion $w_s$ about the subject represents the integrity of the subject, I(s). Similarly, the trust opinion $w_o$ about the object represents the integrity of the object, I(o).

According to Gambette (1988), the definition of trust is: “Anna trusts Bernard if Anna believes, with the level of subjective probability, that Bernard will perform a particular action, both before the action can be monitored (or independently of capacity of being able to monitor it) and in a context in which it affects Anna’s own action.” If Anna establishes trust in Bernard based on her own observations and interactions, the trust is direct. If it is established based on Anna’s acceptance of recommendations from other entities, then the trust is indirect.

Admonita combines direct and indirect opinions about the trustworthiness of subjects and objects. A security officer T expresses direct trust opinions about the subject s, denoted as $w^T_s$, and about the object o, denoted as $w^T_o$. T also maintains a list of minimum trust opinions for subjects, denoted as $w^T_{s-min}$, and a list of maximum trust opinions for objects, denoted as $w^T_{o-max}$. Maia expresses the indirect subject-object trust opinion, denoted as $w^{M_s}_o$.

We incorporate the Biba model operations for both

subjects and objects:

• Observe: Allows a subject s to read information

in an object o, denoted as read(s,o).

• Update: Allows a subject s to write or update in-

formation in an object o, denoted as update(s,o).

• Invoke: Allows a subject $s_1$ to execute another subject $s_2$, denoted as invoke($s_1$, $s_2$, o).

The Biba model can be divided into two types of

policies, mandatory and discretionary. Most literature

on the Biba model refers to the model as being manda-

tory as a part of the strict integrity policy (Balon and

Thabet, ). The Biba model defines a number of rules

as part of the strict integrity policy. We reformulate

each rule and compute the integrity level of subjects

I(s) and objects I(o) as follows:

6.1 Simple Integrity Property

The Simple Integrity Property enforces no-read-down. It allows a subject to read (observe) an object only if the integrity level of the subject is less than or equal to the integrity level of the object.

$$s \in S \text{ reads } o \in O \iff I(s) \le I(o)$$

This ensures that high-integrity subjects cannot be contaminated by reading low-integrity data. For example, if the simple integrity rule is enforced, a low-integrity process may read high-integrity data, but a high-integrity process cannot contaminate itself by reading low-integrity data.

Our trust model adds a dynamic property to the Simple Integrity Property that allows high-trust subjects to access low-trust objects; however, the integrity of the subject may then be lowered. The Simple Integrity Property in our model is reformulated as described below.

$$\forall s \in S, \forall o \in O : \mathit{read}(s, o) \iff \text{if } I(s) > I(o) \text{ then } I'(s) = I(s) \otimes I(o) \tag{5}$$

When s reads o, denoted read(s,o), the integrity of s may be changed by o, while the integrity of o will not be changed. If s reads less trusted data, then the integrity level of s after reading, denoted I'(s), will decrease. To compute the indirect opinion I'(s), denoted as $w^{TM_s}_o$, let T and Maia be two observers such that observer T invokes observer Maia to access an object o. Let $w^T_M$ be T’s opinion about Maia’s recommendation, denoted as $w^T_{s\wedge o}$, and let $w^{M_s}_o$ be Maia’s opinion about the trustworthiness of the object o that is accessed by the subject s. We adapt the reasoning from (3) and (4) and apply the conjunction and recommendation operators described in Section 4 as follows:

$$w^{TM_s}_o = w^T_{s\wedge o} \otimes w^{M_s}_o = (w^T_s \wedge w^T_o) \otimes w^{M_s}_o \tag{6}$$

T expresses the direct trust opinions $w^T_s$ and $w^T_o$. These two opinions are combined using the conjunctive operator since they are both assigned by the same observer. $w^T_{s\wedge o}$ represents T’s opinion about Maia’s recommendation. On the other hand, Maia expresses the indirect trust opinion $w^{M_s}_o$.

To compute the indirect opinion $w^{TM_s}_o$ based on the trustworthiness of Maia’s recommendation, the two opinions are combined using the recommendation operator. Equation (6) will decrease the integrity level of s when it reads a less trusted o.
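The read path of Equations (5) and (6) can be sketched in Python. The function names and example opinions are hypothetical, and evaluating the condition I(s) > I(o) in (5) with the opinion ordering of Section 4 is our assumption; the operators themselves follow Equations (3) and (4).

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    t: float  # trust
    d: float  # distrust
    u: float  # uncertainty


def conjunction(w1: Opinion, w2: Opinion) -> Opinion:
    """Equation (4)."""
    return Opinion(w1.t * w2.t,
                   w1.d + w2.d - w1.d * w2.d,
                   w1.t * w2.u + w1.u * w2.t + w1.u * w2.u)


def recommend(w_rec: Opinion, w_obj: Opinion) -> Opinion:
    """Equation (3)."""
    return Opinion(w_rec.t * w_obj.t,
                   w_rec.t * w_obj.d,
                   w_rec.d + w_rec.u + w_rec.t * w_obj.u)


def more_trustworthy(wb: Opinion, wa: Opinion) -> bool:
    """Section 4 ordering: higher trust wins, ties go to higher uncertainty."""
    if not math.isclose(wb.t, wa.t):
        return wb.t > wa.t
    return wb.u > wa.u


def opinion_after_read(w_s: Opinion, w_o: Opinion, w_maia: Opinion) -> Opinion:
    """New opinion of s after read(s, o), following Equations (5) and (6).

    w_s, w_o: T's direct opinions w^T_s and w^T_o; w_maia: Maia's opinion w^{M_s}_o.
    """
    if not more_trustworthy(w_s, w_o):
        return w_s  # I(s) <= I(o): reading o leaves the subject unchanged
    return recommend(conjunction(w_s, w_o), w_maia)  # (w^T_s AND w^T_o) (x) w^{M_s}_o


if __name__ == "__main__":
    w_s = Opinion(0.9, 0.05, 0.05)
    w_o = Opinion(0.5, 0.3, 0.2)
    w_maia = Opinion(0.4, 0.5, 0.1)
    print(opinion_after_read(w_s, w_o, w_maia))
```

With these example values the subject's trust falls from 0.9 to about 0.18. Since the new trust value is the product t_s·t_o·t^M of numbers in [0, 1], Equation (6) can never raise the subject's trust, which is consistent with the statement that it decreases I(s).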

To prevent subject isolation, bidirectional weak tranquility is applied to ensure that the newly obtained trust level is within an allowable range. This is accomplished by comparing the new trust value of I'(s), denoted as $t'_s$, against its minimum trust value, denoted as $t_{s-min}$, as follows:

1. If the new trust value of the subject is greater than its minimum value, such that $t'_s > t_{s-min}$, then read access will be granted.

2. If the trust values of a subject are equal, such that $t'_s = t_{s-min}$, we consider higher uncertainty to be more trustworthy. In the case when $t'_s = t_{s-min}$, then $w'_s \succ w_{s-min}$ if $u'_s > u_{s-min}$.

3. If the new trust value of the subject is less than its minimum value, such that $t'_s < t_{s-min}$, then the new integrity level of the subject violates the integrity policy. To solve this problem, we consider two cases:

• If the subject is not allowed to have an integrity level less than its minimum integrity level, then read access will be denied, which reflects the no-read-down rule of the Biba integrity model.

• If the subject is allowed to have an integrity level less than its minimum integrity level, then read access will be granted and the integrity level of the subject will be forced back to its previous integrity level to prevent subject isolation:

$$\text{if } t'_s < t_{s-min} \text{ then } I'(s) = I(s) \tag{7}$$
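The three cases above can be sketched as a single decision function. The names are hypothetical, the flag `may_drop_below_min` stands for the policy choice in case 3, and the handling of case 2 is our reading of the text: an opinion with equal trust and at least the minimum's uncertainty is treated as not violating the bound, and otherwise case 3 applies.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Opinion:
    t: float  # trust
    d: float  # distrust
    u: float  # uncertainty


def below_minimum(w_new: Opinion, w_min: Opinion) -> bool:
    """True if the new opinion falls below the subject's minimum trust opinion."""
    if not math.isclose(w_new.t, w_min.t):
        return w_new.t < w_min.t   # cases 1 and 3: compare trust values
    return w_new.u < w_min.u       # case 2: equal trust, compare uncertainty


def apply_subject_bound(w_prev: Opinion, w_new: Opinion, w_min: Opinion,
                        may_drop_below_min: bool) -> Tuple[bool, Opinion]:
    """Return (read_granted, opinion of s after the read)."""
    if not below_minimum(w_new, w_min):
        return True, w_new         # within the allowable range
    if not may_drop_below_min:
        return False, w_prev       # deny the read (Biba no-read-down)
    return True, w_prev            # grant the read and restore I(s), Equation (7)


if __name__ == "__main__":
    w_min = Opinion(0.5, 0.2, 0.3)
    w_prev = Opinion(0.9, 0.05, 0.05)
    print(apply_subject_bound(w_prev, Opinion(0.2, 0.5, 0.3), w_min, may_drop_below_min=True))
```

In every branch the subject either keeps a trust opinion at or above its minimum or returns to its previous opinion, so it is never isolated by a single read.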


6.2 Integrity Star Property

The second property of a Biba policy enforces no-

write-up. It allows a subject to write an object only

if the integrity level of the object is less than or equal

to the integrity level of the subject.

$$s \in S \text{ updates } o \in O \iff I(o) \le I(s)$$

The Integrity Star Property in our model can be

reformulated as follows:

$$\forall s \in S, \forall o \in O : \mathit{update}(s, o) \iff \text{if } I(o) \le I(s) \text{ then } I'(o) = I(o) \oplus I(s) \tag{8}$$

When s updates o, denoted update(s,o), the integrity level of o, denoted as I(o), with trust opinion $w_o$, will be changed by the integrity level of s, denoted as I(s), with trust opinion $w_s$. If $w_s$ is more trustworthy than $w_o$, then the integrity level of the object after the update, denoted as I'(o), will be increased and I(s) will not change. In contrast, if $w_s$ is less trustworthy than $w_o$, then s will not be allowed to update o. This corresponds to the no-write-up rule of the Biba integrity model.

We consider two scenarios to enforce the no-write-up rule. First, when a high-trust subject s updates a low-trust object o invalidly, either accidentally or intentionally, Maia generates a lower recommendation opinion with a higher distrust value for accessing the object o. That lowers the integrity level of s, since I'(s) is updated using (6). Then, I'(s) is compared against I(o) without updating I'(o) with (8). In this way, our model enforces no-write-up, and s is not allowed to update o. After that, I'(s) is set using (7) to avoid isolation from the system resources.

The second scenario occurs when a high-trust subject s updates a low-trust object o in a valid format. In this case, Maia generates a valid recommendation opinion for accessing the object o.

$$w^{T,M}_{s_o} = w^T_{o \wedge s} \oplus w^M_{s_o} = (w^T_o \wedge w^T_s) \oplus w^M_{s_o} \qquad (9)$$

T expresses the direct trust opinions $w^T_o$ and $w^T_s$. These two opinions are combined using the conjunctive operator since they are both assigned by the same observer. $w^T_{o \wedge s}$ represents T's opinion about Maia's recommendation. As with the Simple Integrity Property, Maia expresses the indirect recommendation trust opinion $w^M_{s_o}$. Since the Integrity Star Property in our model keeps s unchanged, we introduce a conjunctive consensus term $w^{T,M}_{s_o}$ that combines two independent opinions about accessing o. Equation (9) increases the integrity level of o when it is accessed by a highly trustworthy s.
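The operators in (9) are defined earlier in the paper and are not restated in this section. As a hedged sketch, assuming ∧ and ⊕ are Jøsang's standard conjunction and consensus operators (an assumption, though one that reproduces the update example in Section 7 exactly), (9) can be evaluated as below. Opinions are {belief, disbelief, uncertainty} triples; `conjunction`, `consensus` and `star_property_opinion` are illustrative names.

```python
from typing import NamedTuple


class Opinion(NamedTuple):
    b: float  # belief
    d: float  # disbelief
    u: float  # uncertainty


def conjunction(x: Opinion, y: Opinion) -> Opinion:
    """Jøsang's conjunction of two opinions held by the same observer (assumed)."""
    return Opinion(
        b=x.b * y.b,
        d=x.d + y.d - x.d * y.d,
        u=x.b * y.u + x.u * y.b + x.u * y.u,
    )


def consensus(x: Opinion, y: Opinion) -> Opinion:
    """Jøsang's consensus of two independent opinions (assumed)."""
    k = x.u + y.u - x.u * y.u
    if k == 0:
        # Both opinions are dogmatic (u = 0); this form of the operator is undefined.
        raise ValueError("consensus of two dogmatic opinions is undefined")
    return Opinion(
        b=(x.b * y.u + y.b * x.u) / k,
        d=(x.d * y.u + y.d * x.u) / k,
        u=(x.u * y.u) / k,
    )


def star_property_opinion(w_T_o: Opinion, w_T_s: Opinion, w_M_so: Opinion) -> Opinion:
    """Eq. (9): (w^T_o AND w^T_s) combined by consensus with w^M_{s_o}."""
    return consensus(conjunction(w_T_o, w_T_s), w_M_so)


# Values for o_1 and B from Tables 1-3 (the update(B, o_1) example in Section 7).
print(star_property_opinion(Opinion(0.90, 0.05, 0.05),
                            Opinion(0.98, 0.00, 0.02),
                            Opinion(1.00, 0.00, 0.00)))
# -> Opinion(b=1.0, d=0.0, u=0.0)
```

Because Maia's opinion in this example is dogmatic (u = 0), the consensus returns it unchanged, which is why $I'(o_1)$ becomes {1.00, 0.00, 0.00}.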

To prevent object isolation, bidirectional weak tranquility is applied to ensure that the newly obtained trust level $I'(o)$ is within an allowable range. This is accomplished by comparing the new trust value of $I'(o)$, denoted $t'_o$, against its maximum trust value, denoted $t_{o-\max}$, as follows:

1. If the new trust value of the object is less than its maximum value, i.e., $t'_o < t_{o-\max}$, then the update is granted.

2. If the two trust values are equal, i.e., $t'_o = t_{o-\max}$, we consider higher uncertainty to be more trustworthy: in that case, $w'_o > w_{o-\max}$ if $u'_o > u_{o-\max}$.

3. If the new trust value of the object is greater than its maximum value, i.e., $t'_o > t_{o-\max}$, then the new integrity level of the object violates the integrity policy. To solve this problem, we consider two cases:

• If the object is not allowed to have an integrity level greater than its maximum integrity level, then the update is denied.

• If the object is allowed to have an integrity level greater than its maximum integrity level, then the update is granted and the integrity level of the object is reset to its previous integrity level to prevent object isolation.

$$\text{if } t'_o > t_{o-\max} \text{ then } I'(o) = I(o) \qquad (10)$$
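The three cases above and (10) can be folded into one check. This is a minimal illustration, not the authors' implementation: it assumes the trust value t is the belief component of an opinion, reads case 2 as "the new opinion exceeds the maximum exactly when its uncertainty is higher" (so it then falls under case 3), and uses a hypothetical boolean `trusted` for the 0/1 flag $T_o$ from Section 7. Case 2 compares beliefs with exact float equality; a real implementation would likely need a tolerance.

```python
from typing import NamedTuple, Tuple


class Opinion(NamedTuple):
    b: float  # belief (assumed here to be the trust value t)
    d: float  # disbelief
    u: float  # uncertainty


def exceeds_maximum(new: Opinion, maximum: Opinion) -> bool:
    """True if w'_o ranks above w_{o-max} under cases 1-3."""
    if new.b < maximum.b:
        return False                 # case 1: t'_o < t_{o-max}
    if new.b == maximum.b:
        return new.u > maximum.u     # case 2: higher uncertainty ranks higher
    return True                      # case 3: t'_o > t_{o-max}


def apply_object_ceiling(new: Opinion, old: Opinion, maximum: Opinion,
                         trusted: bool) -> Tuple[bool, Opinion]:
    """Return (update_granted, integrity level of o after the update)."""
    if not exceeds_maximum(new, maximum):
        return True, new             # within range: keep I'(o)
    if not trusted:
        return False, old            # T_o = 0: deny the update, keep I(o)
    return True, old                 # T_o = 1: grant, then Eq. (10) restores I(o)


# Object o_1 from Table 2 after the update(B, o_1) example: 1.00 does not exceed 1.00.
print(apply_object_ceiling(Opinion(1.00, 0.00, 0.00), Opinion(0.90, 0.05, 0.05),
                           Opinion(1.00, 0.00, 0.00), trusted=False))
# -> (True, Opinion(b=1.0, d=0.0, u=0.0))
```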

6.3 Invocation Property

In Biba’s model, a subject may execute another subject at its own integrity level or below.

$$s_1 \in S \text{ invokes } s_2 \in S \iff I(s_2) \le I(s_1)$$

This last property states that a subject at one integrity level is prohibited from invoking (sending messages to, or requesting service from) a subject at a higher level of integrity. The Invocation Property in our model is reformulated as follows:

$$\forall s_1, s_2 \in S, \forall o \in O : \mathit{read}(s_1, s_2, o) \iff \text{if } I(s_1) < I(s_2) \text{ then } I'(s_1) = I(s_1) \otimes \big(I(s_2) \otimes I(o)\big) \qquad (11)$$

In our trust model, when $s_1$ invokes $s_2$ to access o, this is denoted $\mathit{invoke}(s_1, s_2, o)$. According to the strict integrity policy, our trust model requires $I(s_1) \ge I(s_2)$, which prevents a less trustworthy $s_1$ from using a more trustworthy $s_2$ to update the data. This condition keeps $I(s_1)$ unchanged when $s_1$ reads lower-integrity data o via $s_2$.

However, when $I(s_1) < I(s_2)$, the indirect opinion of $I'(s_1)$, denoted $w^{TM}_{s_1 {s_2}_o}$, can be calculated using the conjunctive recommendation term as follows:

$$w^{TM}_{s_1 {s_2}_o} = w^T_{s_1} \otimes \big(w^T_{s_2 \wedge o} \otimes w^M_{{s_2}_o}\big) = w^T_{s_1} \otimes \big((w^T_{s_2} \wedge w^T_o) \otimes w^M_{{s_2}_o}\big) \qquad (12)$$


In Equation (12), the security officer T expresses the direct trust opinions $w^T_{s_1}$, $w^T_{s_2}$ and $w^T_o$. The opinions $w^T_{s_2}$ and $w^T_o$ are combined using the conjunctive operator since they are both assigned by the same observer. Maia expresses the indirect trust opinion $w^M_{{s_2}_o}$. Then the indirect recommendation opinion $w^{TM}_{{s_2}_o}$ is combined with $w^T_{s_1}$ using the recommendation operator. As a result, the integrity level of $s_1$ decreases as a consequence of using highly trusted resources.
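Under the assumption that ∧ and ⊗ are Jøsang's conjunction and recommendation (discounting) operators, the invocation rule can be sketched as below. The operator choice is an assumption (the definitions appear earlier in the paper), and `discount` and `invoke` are hypothetical names; integrity levels are compared by their belief components, also an assumption. With the values from Tables 1 to 3, the sketch reproduces the invocation example in Section 7.

```python
from typing import NamedTuple


class Opinion(NamedTuple):
    b: float  # belief
    d: float  # disbelief
    u: float  # uncertainty


def conjunction(x: Opinion, y: Opinion) -> Opinion:
    """Jøsang's conjunction of two opinions held by the same observer (assumed)."""
    return Opinion(x.b * y.b,
                   x.d + y.d - x.d * y.d,
                   x.b * y.u + x.u * y.b + x.u * y.u)


def discount(x: Opinion, y: Opinion) -> Opinion:
    """Jøsang's recommendation (discounting) operator x (x) y (assumed)."""
    return Opinion(x.b * y.b, x.b * y.d, x.d + x.u + x.b * y.u)


def invoke(w_T_s1: Opinion, w_T_s2: Opinion, w_T_o: Opinion, w_M_s2o: Opinion) -> Opinion:
    """Opinion about s1 after s1 invokes s2 to access o."""
    if w_T_s1.b >= w_T_s2.b:
        return w_T_s1   # I(s1) >= I(s2): I(s1) is left unchanged
    # Eq. (12): w^T_{s1} (x) ((w^T_{s2} AND w^T_o) (x) w^M_{s2_o})
    return discount(w_T_s1, discount(conjunction(w_T_s2, w_T_o), w_M_s2o))


# B invokes A to access o_1 (values from Tables 1-3).
print(invoke(Opinion(0.98, 0.00, 0.02), Opinion(1.00, 0.00, 0.00),
             Opinion(0.90, 0.05, 0.05), Opinion(0.95, 0.01, 0.04)))
```

Running it prints an opinion equal to {0.8379, 0.00882, 0.15328} up to floating-point rounding. The sketch passes A's original opinion {1.00, 0.00, 0.00}, matching the example's step of restoring A via (6) and (7) before evaluating (12).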

The proposed trust opinion calculations associated with no-read-down and no-write-up operations, along with a dynamic range of integrity levels, give our model more flexibility and better control of integrity violations.

7 A STRUCTURE AND EXAMPLE FOR TRUSTWORTHINESS AUTHENTICATION

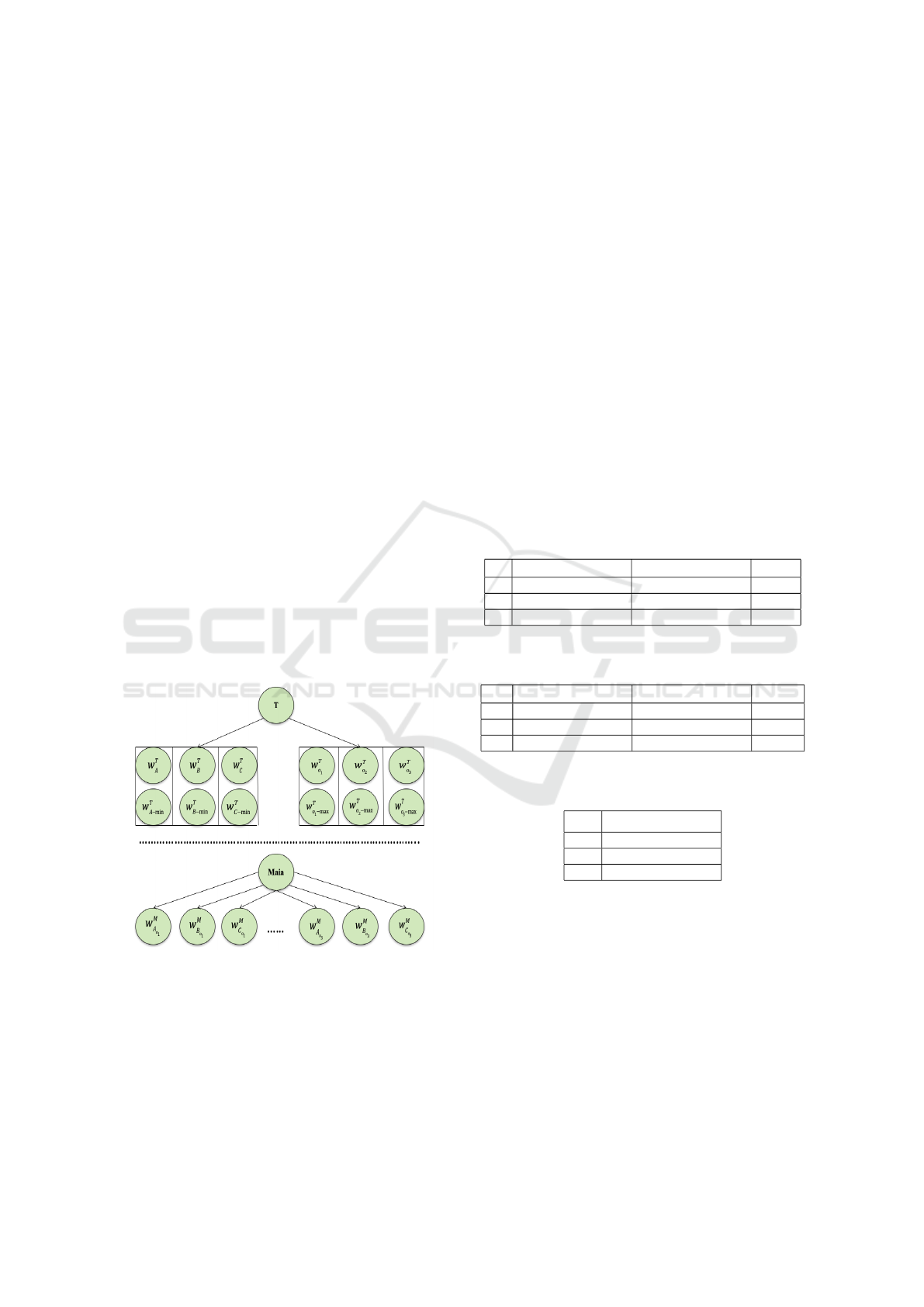

Fig. 1 illustrates a possible structure for computing the integrity level as trust opinions about subjects and objects. The structure above the dotted line represents the opinions of the security officer T about subjects and objects, as stored in T's private database. T also maintains a list of minimum trust opinions for each subject s, denoted $w^T_{s-\min}$, and a list of maximum trust opinions for each object o, denoted $w^T_{o-\max}$.

Figure 1: A Structure of Trustworthiness Authentication in Recommendation-Based Trust Model.

To ensure dynamic computation of trust opinions for the system's entities, T assigns each subject and object a trust flag with a value of 0 or 1, denoted $T_s$ and $T_o$, respectively. A value of 0 for a subject s does not allow s to obtain an integrity level less than its minimum integrity level. A value of 0 for an object o does not allow o to obtain an integrity level greater than its maximum integrity level. In contrast, a value of 1 for s allows s to access less trustworthy data and then return to its previous integrity level. Similarly, a value of 1 for o allows o to have an integrity level greater than its maximum during an update and then return to its previous integrity level.

The integrity level of a subject s reflects how much T trusts s when accessing an object. It is assumed that T knows the trust levels of s. On the other hand, the integrity level of an object reflects T's opinion about the trustworthiness of the data itself.

In our model, T must keep a list of her opinions, $w^T_s$ and $w^T_o$, about the trustworthiness of subjects and objects, respectively. T's opinion about a subject reflects the trust level of the subject. In addition, T's opinions $w^T_{s-\min}$ about s ensure dynamic data integrity, while T's opinion about an object reflects the trust level of the data itself. Table 1 gives an example of possible opinion values.

Table 1: Security Officer’s Opinions about Subjects’ Trustworthiness.

| S | $w^T_S$ | $w^T_{S-\min}$ | $T_S$ |
|---|---|---|---|
| A | {1.00, 0.00, 0.00} | {0.99, 0.01, 0.00} | 1 |
| B | {0.98, 0.00, 0.02} | {0.85, 0.10, 0.05} | 1 |
| C | {0.88, 0.10, 0.02} | {0.80, 0.10, 0.10} | 0 |

Table 2: Security Officer’s Opinions about Objects’ Trustworthiness.

| O | $w^T_O$ | $w^T_{O-\max}$ | $T_O$ |
|---|---|---|---|
| $o_1$ | {0.90, 0.05, 0.05} | {1.00, 0.00, 0.00} | 0 |
| $o_2$ | {0.96, 0.02, 0.02} | {0.96, 0.02, 0.02} | 0 |
| $o_3$ | {0.98, 0.00, 0.02} | {0.98, 0.00, 0.02} | 1 |

Table 3: Maia’s Opinions about (Subject-Object) Trustworthiness.

| $S_{o_1}$ | $w^M_{S_{o_1}}$ |
|---|---|
| $A_{o_1}$ | {0.95, 0.01, 0.04} |
| $B_{o_1}$ | {1.00, 0.00, 0.00} |
| $C_{o_1}$ | {0.89, 0.02, 0.09} |

In order to enforce weak tranquility, T must maintain a list of her maximum opinions about objects, $w^T_{o-\max}$. Table 2 gives an example of possible opinion values.

The structure below the dotted line represents a list of Maia trust recommendations $w^M_{S_o}$, based on the Maia specification for the object's file, for each subject that wants to access the object o. Table 3 gives an example of possible opinion values.
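To make the structure of Fig. 1 concrete, the values in Tables 1 to 3 can be held as plain records. The sketch below is illustrative only (the dictionary layout and the names `is_opinion` and `table_problems` are hypothetical). It checks two properties that the example data satisfy: every opinion is a well-formed {belief, disbelief, uncertainty} triple summing to 1, and each current level lies within its tranquility bound, assuming the trust value is the belief component.

```python
# Table 1: subject -> (w^T_S, w^T_{S-min}, T_S)
SUBJECTS = {
    "A": ((1.00, 0.00, 0.00), (0.99, 0.01, 0.00), 1),
    "B": ((0.98, 0.00, 0.02), (0.85, 0.10, 0.05), 1),
    "C": ((0.88, 0.10, 0.02), (0.80, 0.10, 0.10), 0),
}

# Table 2: object -> (w^T_O, w^T_{O-max}, T_O)
OBJECTS = {
    "o1": ((0.90, 0.05, 0.05), (1.00, 0.00, 0.00), 0),
    "o2": ((0.96, 0.02, 0.02), (0.96, 0.02, 0.02), 0),
    "o3": ((0.98, 0.00, 0.02), (0.98, 0.00, 0.02), 1),
}

# Table 3: (subject, object) -> Maia recommendation w^M_{S_o}
MAIA = {
    ("A", "o1"): (0.95, 0.01, 0.04),
    ("B", "o1"): (1.00, 0.00, 0.00),
    ("C", "o1"): (0.89, 0.02, 0.09),
}


def is_opinion(w, tol=1e-9):
    """Each component lies in [0, 1] and b + d + u = 1 (within tol)."""
    return all(0.0 <= x <= 1.0 for x in w) and abs(sum(w) - 1.0) <= tol


def table_problems():
    """Return human-readable problems found in the tables (empty if none)."""
    problems = []
    for s, (w, w_min, flag) in SUBJECTS.items():
        if not (is_opinion(w) and is_opinion(w_min)) or flag not in (0, 1):
            problems.append(f"subject {s}: malformed entry")
        elif w[0] < w_min[0]:  # belief below the subject's minimum
            problems.append(f"subject {s}: below its minimum")
    for o, (w, w_max, flag) in OBJECTS.items():
        if not (is_opinion(w) and is_opinion(w_max)) or flag not in (0, 1):
            problems.append(f"object {o}: malformed entry")
        elif w[0] > w_max[0]:  # belief above the object's maximum
            problems.append(f"object {o}: above its maximum")
    for key, w in MAIA.items():
        if not is_opinion(w):
            problems.append(f"Maia opinion {key}: malformed")
    return problems


print(table_problems())  # -> []
```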

Assume subject B wants to read object $o_1$, $\mathit{read}(B, o_1)$. Since $I(B)$ is greater than $I(o_1)$, the trust of B, denoted $I'(B)$, can now be calculated using (6):


$$w^{TM}_{B_{o_1}} = (w^T_B \wedge w^T_{o_1}) \otimes w^M_{B_{o_1}} = \{0.882, 0.00, 0.118\}$$

Notice that the new integrity level of B, 0.882, is less than the old value 0.98 because B read object $o_1$, whose trust level is lower than B's. Also, the new $I'(B)$ satisfies (7), since it does not fall below B's minimum trust value of 0.85. The integrity level of B in Table 1 is now replaced by the new value to prevent B, at its lower integrity level, from updating other objects in future interactions.
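The opinion {0.882, 0.00, 0.118} obtained for B can be reproduced step by step if ∧ and ⊗ are taken to be Jøsang's conjunction and recommendation (discounting) operators. That choice is an assumption here, since the operator definitions appear earlier in the paper; it does, however, match the reported value. Opinions are written as {belief, disbelief, uncertainty}:

$$
\begin{aligned}
w^T_B \wedge w^T_{o_1} &= \{0.98 \cdot 0.90,\ 0.00 + 0.05 - 0.00 \cdot 0.05,\ 0.98 \cdot 0.05 + 0.02 \cdot 0.90 + 0.02 \cdot 0.05\} = \{0.882,\ 0.05,\ 0.068\} \\
(w^T_B \wedge w^T_{o_1}) \otimes w^M_{B_{o_1}} &= \{0.882 \cdot 1.00,\ 0.882 \cdot 0.00,\ 0.05 + 0.068 + 0.882 \cdot 0.00\} = \{0.882,\ 0.00,\ 0.118\}
\end{aligned}
$$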

Suppose subject B wants to update object $o_1$, $\mathit{update}(B, o_1)$. Since $I(B)$ is greater than $I(o_1)$, the trust of $o_1$, denoted $I'(o_1)$, can now be calculated using (9):

$$w^{T,M}_{B_{o_1}} = (w^T_{o_1} \wedge w^T_B) \oplus w^M_{B_{o_1}} = \{1.00, 0.00, 0.00\}$$

Notice that the new integrity level of $o_1$, 1.00, is greater than the old value 0.90 since B has a higher trust value than $o_1$. Also, $I'(o_1)$ satisfies (10), since it does not exceed the maximum trust opinion $w^T_{o_1-\max} = \{1.00, 0.00, 0.00\}$. The integrity level of $o_1$ in Table 2 is now replaced by the new value.
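Assuming, as for the read example, that ∧ is Jøsang's conjunction and ⊕ is Jøsang's consensus operator (an assumption that matches the reported value), the update example expands as follows. Here $u_x$ and $u_y$ are the uncertainties of the two operands of ⊕, and conjunction is commutative, so the first line equals the result obtained for the read example:

$$
\begin{aligned}
w^T_{o_1} \wedge w^T_B &= \{0.882,\ 0.05,\ 0.068\} \\
\kappa &= u_x + u_y - u_x u_y = 0.068 + 0.00 - 0.068 \cdot 0.00 = 0.068 \\
(w^T_{o_1} \wedge w^T_B) \oplus w^M_{B_{o_1}} &= \left\{\tfrac{0.882 \cdot 0.00 + 1.00 \cdot 0.068}{0.068},\ \tfrac{0.05 \cdot 0.00 + 0.00 \cdot 0.068}{0.068},\ \tfrac{0.068 \cdot 0.00}{0.068}\right\} = \{1.00,\ 0.00,\ 0.00\}
\end{aligned}
$$

Because Maia's opinion $w^M_{B_{o_1}}$ is dogmatic ($u = 0$), the consensus returns it unchanged.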

Consider the case when a subject invokes another subject to access an object o. Assume B invokes A to access $o_1$. We need to modify the trust level of B for two reasons. First, the integrity level of B is lower than the integrity level of A, so the trust model will prevent B from using A. Second, subject A accesses less trusted data $o_1$, which decreases the integrity level of A.

To calculate the trustworthiness of B, $I'(B)$, first $I'(A)$ is calculated using (6) and (7), which lets A regain its trust opinion since it is a trusted subject. Then $I'(B)$ is calculated using (12):

$$w^{TM}_{B A_{o_1}} = w^T_B \otimes \big((w^T_A \wedge w^T_{o_1}) \otimes w^M_{A_{o_1}}\big) = \{0.8379, 0.00882, 0.15328\}$$

The new integrity level of B, 0.8379, is less than the old value 0.98 because of the Invocation Property. In addition, the new trust value of B violates the integrity policy, since it is less than B's minimum trust value of 0.85. However, B is a trusted subject. Therefore, our trust model allows B to read the less trusted object and regain its trust opinion. The trustworthiness of B, denoted $I'(B)$, can now be calculated using (7):

$$\text{if } t'(B) < t_{B-\min} \text{ then } I'(B) = I(B)$$

$$I'(B) = \{0.98, 0.00, 0.02\}$$

If B is not a trusted subject, then the read access will

be denied.
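For checking the invocation example, the value {0.8379, 0.00882, 0.15328} can be reproduced step by step under the same assumption as before, that ∧ and ⊗ are Jøsang's conjunction and recommendation (discounting) operators, with A's restored opinion $w^T_A = \{1.00, 0.00, 0.00\}$ from Table 1:

$$
\begin{aligned}
w^T_A \wedge w^T_{o_1} &= \{1.00 \cdot 0.90,\ 0.00 + 0.05 - 0.00 \cdot 0.05,\ 1.00 \cdot 0.05 + 0.00 \cdot 0.90 + 0.00 \cdot 0.05\} = \{0.90,\ 0.05,\ 0.05\} \\
(w^T_A \wedge w^T_{o_1}) \otimes w^M_{A_{o_1}} &= \{0.90 \cdot 0.95,\ 0.90 \cdot 0.01,\ 0.05 + 0.05 + 0.90 \cdot 0.04\} = \{0.855,\ 0.009,\ 0.136\} \\
w^T_B \otimes \{0.855,\ 0.009,\ 0.136\} &= \{0.98 \cdot 0.855,\ 0.98 \cdot 0.009,\ 0.00 + 0.02 + 0.98 \cdot 0.136\} = \{0.8379,\ 0.00882,\ 0.15328\}
\end{aligned}
$$

The middle line is also what (6) yields for A on its own: its trust value 0.855 lies below $t_{A-\min} = 0.99$, and because $T_A = 1$, (7) restores A to {1.00, 0.00, 0.00}. Likewise, $0.8379 < t_{B-\min} = 0.85$ triggers (7) for B, which has $T_B = 1$.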

8 CONCLUSIONS AND FUTURE WORK

In this work we propose a new recommendation-based trust model for data integrity called Admonita. Admonita incorporates subjective logic, the Biba integrity model, the Clark-Wilson integrity model and the principle of bidirectional weak tranquility. Compared to previous models, our model adds the opinion of a Clark-Wilson IVP about the integrity of the data, which is a property of the data itself rather than the opinion of a trusted user. In addition, our model uses bidirectional weak tranquility to allow opinions about the integrity of data to change dynamically within a restricted range. The result is a model that determines the integrity of subjects and objects in a system without relying solely on the integrity of the users in the system.

In the future, we plan to implement Admonita

in a real system and measure its performance. This

will involve creating a high-performance compiler for

Maia that utilizes its natural parallelism. The result

will be a system that measures and maintains the trust

levels for the applications and data contained within

it.

REFERENCES

Abdul-Rahman, A. and Hailes, S. (1997). A distributed trust model. In Proceedings of the 1997 Workshop on New Security Paradigms, pages 48–60.

Al-Mawee, W., Carr, S., Bonamy, P., and Mayo, J. (2019). Maia: A language for mandatory integrity controls of structured data. In Proceedings of the 5th International Conference on Information Systems Security and Privacy (ICISSP 2019).

Balon, N. and Thabet, I. The Biba security model. https://pdfs.semanticscholar.org/7360/c680906617622f27ef2596c7efcc902795db.pdf.

Bell, D. E. and LaPadula, L. J. (1973). Secure computer systems: Mathematical foundations. Technical Report MTR-2547, The MITRE Corporation, Bedford, MA.

Biba, K. J. (1977). Integrity considerations for secure computer systems. Technical report, The MITRE Corporation.

Bishop, M. (2019). Computer Security: Art and Science. Pearson Education Inc., second edition.

Blaze, M., Feigenbaum, J., and Keromytis, A. D. (1998). Trust management for public-key infrastructures. In Proceedings of the Ninth International Workshop on Services Computing (Lecture Notes in Computer Science 1550), pages 59–63.

Blaze, M., Feigenbaum, J., and Lacy, J. (1996). Decentralized trust management. In Proceedings of the 1996 IEEE Symposium on Security and Privacy, pages 164–173.


Bonamy, P. (2016). Maia and Mandos: Tools for Integrity Protection on Arbitrary Files. PhD thesis, Michigan Technological University.

Clark, D. D. and Wilson, D. R. (1987). A comparison of commercial and military computer security policies. In IEEE Symposium on Security and Privacy, pages 184–194.

Gambetta, D. (1988). Trust: Making and breaking cooperative relations. Basil Blackwell Ltd.

Gao, W., Zhang, G., Chen, W., and Li, Y. (2009). A trust model based on subjective logic. In Proceedings of the Fourth International Conference on Internet Computing for Science and Engineering, pages 272–276.

Jøsang, A. (1999). An algebra for assessing trust in certification chains. In Proceedings of the Network and Distributed System Security Symposium (NDSS'99).

Jøsang, A. (2001). A logic for uncertain probabilities. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, 9(3):279–311.

Jøsang, A. (2002). The consensus operator for combining beliefs. Artificial Intelligence Journal, 142(1-2):157–170.

Microsoft. Windows integrity mechanism. https://docs.microsoft.com/en-us/openspecs/windows_protocols/ms-azod/75e4ff94-ff5f-43d2-b2e4-4c1429c35261.

Oleshchuk, V. (2012). Trust-enhanced data integrity model. In 2012 IEEE 1st International Symposium on Wireless Systems (IDAACS-SWS), pages 109–112.

Shotts, W. E., Jr. The Linux command line. https://wiki.lib.sun.ac.za/images/c/ca/TLCL-13.07.pdf.
