Responsibility for Causing Harm as a Result of a Road Accident Involving a Highly Automated Vehicle

R. R. Magizov¹ᵃ, E. M. Mukhametdinov¹ᵇ and V. G. Mavrin¹ᶜ

Kazan Federal University, Suyumbike Avenue, 10A, Naberezhnye Chelny, Russia

Keywords: Highly Automated Vehicles, Road Accident, Responsibility for Causing Harm.

Abstract: The development and introduction of highly automated (unmanned) vehicles raise a number of problems, including liability for accidents and for harm caused with their involvement. This problem confronts legislators and the public in every country where highly automated transport is produced, tested and introduced. A number of countries have already attempted to regulate by law the production of highly automated and unmanned vehicles, their operation, and liability for damage. This article analyzes the current regulatory framework and the conditions for liability for damage caused by a highly automated vehicle in a traffic accident, and reviews the positions of other authors on the topic. Based on the study, the authors formulate proposals for amendments and additions to the current legislation of the Russian Federation that would fix the grounds and conditions for liability and define the range of subjects liable for damage caused by a highly automated vehicle.

1 INTRODUCTION

In recent decades, there has been growing interest in how intellectualization and automation will change the future of transport systems. Despite the assurances of experts that highly automated vehicles are the most intelligent and safe cars, immune to absent-mindedness, fatigue, poor visibility and the human factor on the roads, the development of artificial intelligence has not yet reached the level where errors are reduced to zero.

It should be noted that in a number of countries

that are actively introducing highly automated

transport into operation in one form or another,

regulatory documents have already been developed

that regulate the operation of unmanned vehicles,

while others are being actively developed.

The press publishes hundreds of reports covering the smallest details of unmanned vehicles, so news of road accidents (hereinafter, accidents) involving them cannot go unnoticed.

ᵃ https://orcid.org/0000-0001-7918-0371

ᵇ https://orcid.org/0000-0003-0824-0001

ᶜ https://orcid.org/0000-0001-6681-5489

In 2017, the international law firm Allen & Overy published Navigation on Legal Issues Related to Unmanned Vehicles (Allen & Overy, 2017). In October 2019, the World Congress ADAS & AV legal issues, implications and liabilities (ADAS & AV legal …, 2019) was held; it focused exclusively on the differing legal issues raised by different levels of vehicle autonomy.

In the Russian Federation, as in a number of other

countries, work is underway to create, test and put

into operation highly automated vehicles.

Unfortunately, Russia has progressed less than other

countries in developing legislation in the field of their

use. As early as March 2016, the Committee on

Science and High Technologies of the State Duma of

the Russian Federation organized a round table on the

topic "Normative and legal regulation of the use of

unmanned systems in the Russian Federation." Based

on the results of the discussion, proposals were

prepared, in particular, on amendments to the current

legislation addressed to the State Duma of the

Russian Federation (On the Experimental

Operation…, 2020), which were ignored by the


Magizov, R., Mukhametdinov, E. and Mavrin, V.

Responsibility for Causing Harm as a Result of a Road Accident Involving a Highly Automated Vehicle.

DOI: 10.5220/0009825506060613

In Proceedings of the 6th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS 2020), pages 606-613

ISBN: 978-989-758-419-0

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

legislator. In 2018, by an order of the Government of

the Russian Federation, the Strategy for Road Safety

in the Russian Federation for 2018-2024 was

approved (On Approval of the Road…, 2018), in

which there is no mention of unmanned vehicles.

The periodicals of recent years actively discuss

the legal aspects of the introduction of unmanned

vehicles on public roads. Among the most discussed

problems are the questions of terminology, the issues

of using an autonomous vehicle, the legal regime of

such vehicles, and questions of liability.

There are still many unresolved legal issues, and

one of them is who will be to blame and be liable in

the event of an accident.

2 SAFETY ISSUES OF HIGHLY AUTOMATED VEHICLES

Increased attention to the development and use of highly automated transport is understandable: unmanned vehicles are expected, first of all, to solve the problem of road safety, as well as a number of economic, organizational and social issues.

As practice and a number of studies in this area show, despite these advantages, the appearance of such vehicles on public roads raises a number of questions, primarily about their safety and, in particular, about who bears responsibility for traffic accidents involving such vehicles and for compensation for harm (Makarova et al., 2018).

An autonomous vehicle, like any other device with a network connection, can be subjected to hacker attacks. Anyone who hacks into a vehicle’s software can gain access to its security systems and personal data (Fafoutellis, P., Mantouka, E.G., 2018). For this reason, a malfunction of equipment or software should not lead to loss of control of the vehicle; that is, the vehicle should have protection against such situations (Yağdereli, E. et al., 2015).

Experts draw attention to the unreliability of

software, which is vulnerable to hacking and

snooping, and due to the latter circumstance, loss of

privacy (Korobeev, A.I., Chuchaev, A.I., 2019).

Unmanned technologies are in a special risk zone, as

a result of cyber-terrorist actions people will die.

Theoretically, it looks like this - a hacker breaks into

the network, turns off the brakes, or vice versa - stops

the car on a busy highway. Back in 2015, Uber

engineers discovered weaknesses in the software of a

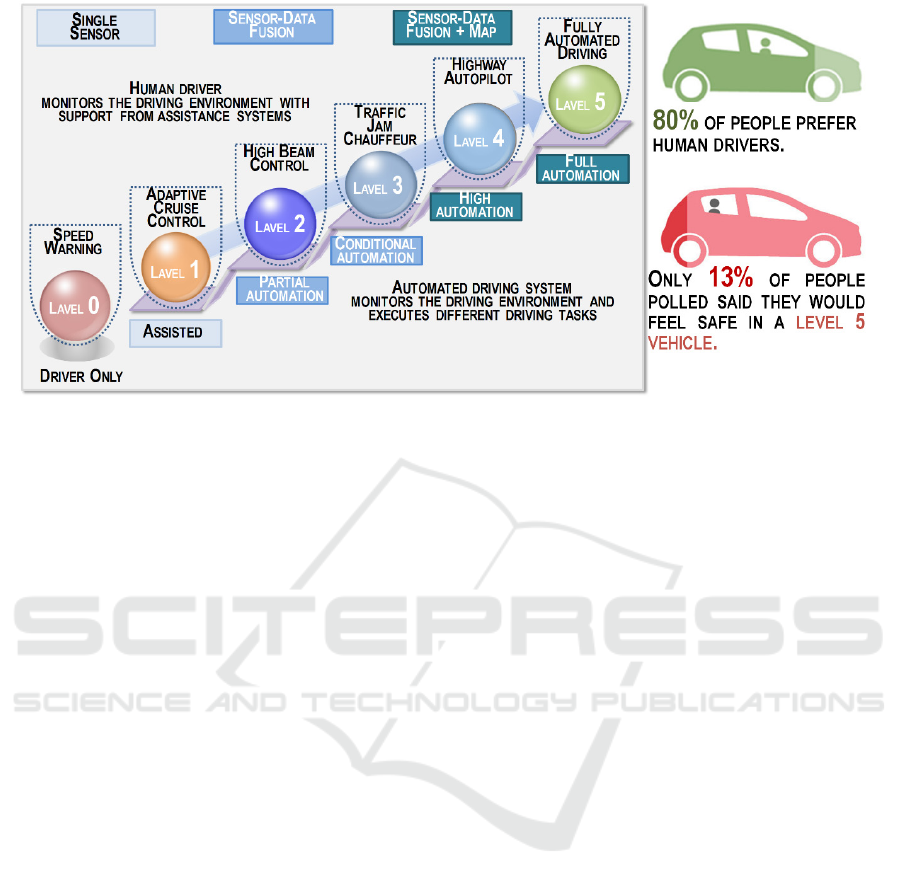

Figure 1: Autonomous vehicle market dynamics by

automation levels.

car with an autopilot system. As part of the

experiment, they managed to gain access to the brake

system.

Engine stopping, disabled brakes and locked

doors are some examples of possible cyberattacks on

car systems (Eiza, M.H., Ni, Q., 2017). Hackers can

infiltrate any targeted vehicle system, steal the

owner’s personal information, and endanger vehicle

safety (Some, E. et.al., 2019).

The CEO of General Motors calls cyber threats the main problem facing automakers and a matter of international security. Some companies, such as Tesla, Fiat Chrysler and GM, specifically reward individuals who find vulnerabilities in the security systems of automated vehicles. The number of startups that aim to create the latest cyber defense technologies for cars is growing.

Argus, a company specializing in the

development of cyber defense tools for automobiles,

believes that a single product cannot be suitable for

these purposes: different solutions designed for

different parts of an unmanned vehicle should be

integrated with each other in order to ensure full

protection of the latter (Korobeev, A.I., Chuchaev,

A.I., 2019).

McAllister et al. (McAllister, R. et al., 2017) state that improving security alone is not enough: passengers also need to feel safe and to trust autonomous vehicles. In the study (Kalra, N., Paddock, S.M., 2016), one of the proposed methods for assessing safety is to test autonomous vehicles in real traffic, monitor their performance and compare it statistically with that of human drivers. The study showed that fully autonomous vehicles would have to be driven hundreds of millions of miles to demonstrate their reliability in terms of fatalities and injuries.
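The order of magnitude of this estimate can be reproduced with a short calculation. The sketch below is illustrative only and is not taken from the cited study's text as quoted here: it assumes that failures occur independently at a constant rate per mile, so the probability of observing no failure over n miles is approximately exp(-R·n), and it assumes a benchmark of 1.09 fatalities per 100 million miles for human drivers. The function name and the constant are hypothetical.

```python
import math


def miles_to_demonstrate(failure_rate_per_mile: float, confidence: float) -> float:
    """Failure-free miles needed to claim, at the given confidence, that the
    true failure rate is below failure_rate_per_mile.

    Assumes independent failures at a constant rate, so that
    P(no failure in n miles) ~ exp(-rate * n).
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    if failure_rate_per_mile <= 0.0:
        raise ValueError("failure rate must be positive")
    # Solve exp(-rate * n) = 1 - confidence for n.
    return -math.log(1.0 - confidence) / failure_rate_per_mile


# Assumed human-driver benchmark (not stated in this article):
# 1.09 fatalities per 100 million miles.
HUMAN_FATALITY_RATE = 1.09 / 100_000_000

required = miles_to_demonstrate(HUMAN_FATALITY_RATE, confidence=0.95)
print(f"{required / 1e6:.0f} million failure-free miles")  # 275 million failure-free miles
```

Under these assumptions the required distance is on the order of hundreds of millions of miles, which agrees with the scale reported above; a different benchmark rate or confidence level changes the figure proportionally.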


Figure 2: Vehicle automation levels according to the SAE classification and the degree of citizens' trust in a robot driver.

Autonomous vehicles are not yet ready for wide deployment: their safety problems must first be addressed by building trust in these vehicles, regulating accident-related issues by law, and resolving software vulnerabilities.

3 RESPONSIBILITY ISSUES FOR THE RESULTS OF AN ACCIDENT WITH THE PARTICIPATION OF HIGHLY AUTOMATED VEHICLES

The public's lack of understanding of the difference between an autonomous vehicle and a car equipped with computer technologies that assist the driver is a serious problem. It leads not only to violations of traffic rules and property damage, but also to deaths on the roads. It is also worrying that some drivers, taken in by the promises associated with autonomous cars, use existing assistive technologies completely irresponsibly. An analysis of existing practice confirms this.

For example, in 2015 in California, an unmanned Google car was stopped by a policeman not for speeding but for driving too slowly. The car was traveling at 40 km/h, which obstructed other road users. When the officer stopped the car, there was no driver at the wheel: there was simply no steering wheel in the cabin. The policeman warned the passenger, a Google employee, that driving too slowly is also a violation of the rules of the road (Martynov, A.V., 2019). The officer did not issue a fine, so Google's record remained intact: in the entire testing period its unmanned cars had never been fined.

In the UK, the driver of a Tesla electric car was convicted after climbing into the back passenger seat while the car was traveling on a busy highway at about 64 km per hour. The driver relied on the Tesla autopilot system, which independently brakes, accelerates and keeps the car in its lane on major roads, without considering that this system is not designed to replace the driver completely. Similar technologies are used by other manufacturers, for example, Volvo, Mercedes-Benz and Cadillac, but none of these systems is completely autonomous. For this reason, some road safety experts consider it necessary to increase the responsibility of car manufacturers for the correct use of modern autopilot technologies by drivers, so that drivers do not make tragic mistakes.

Sports competition is the only place where unmanned vehicles will have to demonstrate their high-speed driving capabilities. The Roborace brand plans to create a series of unmanned vehicles that will race on the track. The company has already tested its race cars, and one of them even crashed. During a demonstration race between two DevBots, a minor incident occurred: as the racing drones maneuvered (at speeds of up to 185 km/h), one of them failed to take a turn and hit the barrier. The second car, however, completed the course flawlessly and even managed to avoid a dog that suddenly ran onto the track.

iMLTrans 2020 - Special Session on Intelligent Mobility, Logistics and Transport


The Up Great “Winter City” unmanned vehicle contest, whose final was held at the Dmitrovsky proving ground, ended with protests from three teams, 73 penalty minutes for traffic violations and one accident. The technological barrier was not overcome: not a single drone covered the entire distance in the allotted time. But there is reason for pride: the participants of “Winter City” staged the world's first traffic jam on the roads of the proving ground.

For several years, developers have been assuring the public that unmanned vehicles strictly abide by the rules of the road. Recently, however, the exclusively human privilege of violating traffic rules was challenged by an Uber drone that ran a red light. Six such cases were recorded during the testing of the drones.

In foreign traffic practice, accidents involving

unmanned vehicles, including accidents involving the

death of participants, have already been recorded.

In December 2017, a Chevrolet Bolt operating under an automated control system collided with a motorcycle while changing lanes. According to the incident report filed by General Motors with the California Department of Motor Vehicles, the car detected the motorcycle with its "side vision." The injured motorcyclist filed a lawsuit against the American corporation, the first lawsuit involving an unmanned vehicle. The incident itself was made public only recently.

The first fatal accident with an unmanned vehicle occurred in the US state of Florida in 2016. A Tesla Model S driven by its autopilot rammed a truck with a trailer that was crossing the intersection. According to Tesla's press service, the cause of the accident was a coincidence of circumstances. The car drove under the trailer, which tore off its roof, then broke through two fences and struck a pole, leaving the driver no chance of survival.

Experts put forward two versions of this case:

1. The computer could not identify the trailer because of its white color and confused it with the sky, and the driver did not manage to respond and take control in time. Excessively bright sunlight could also have blinded the driver.

2. The on-board computer was misled by the length of the truck and the high clearance under the trailer. This prevented the sensors from detecting and recognizing the dangerous obstacle in time.

It is worth noting that the company concealed information about the fatal accident for 8 weeks, during which it managed to sell shares worth $2 billion. According to the results of an investigation conducted by the U.S. National Transportation Safety Board, the autopilot system was cleared, and part of the blame was placed on the driver himself, who had not been careful enough on the road.

Another tragedy was recorded in Tempe (Arizona, USA) on March 18, 2018. The participants in the accident were an Uber sports utility vehicle and a cyclist. An operator was present in the cabin of the “autonomously controlled machine” precisely to handle emergency situations (Korobeev, A.I., Chuchaev, A.I., 2019). "... It would be very difficult to avoid this collision in any mode, standalone or with the driver, based on how she (the cyclist) jumped out of the shadows right onto the road ... Uber is hardly to blame for this incident," said S. Moir, chief of the Tempe Police Department. "Neither the camera nor the person sitting in the cab of the tested car noticed the bike before the collision. In particular, the driver became aware of the collision only on hearing its sound. The car, equipped with two cameras, also made no attempt to brake. It was traveling at a rate of 38 miles per hour (61 km/h) in a zone with a 35 miles per hour limit."

The U.S. National Transportation Safety Board has identified the probable causes of the 2018 accident. The company bears blame for the fatal accident, but not Uber alone. Federal investigators concluded that four parties were to blame: Uber, the driver (a safety engineer who was sitting in the driver’s seat during the accident), the victim of the accident, and the state of Arizona, where the accident occurred. The official federal report also placed responsibility on the US government for inadequate regulation of the drone industry. The Board also found that the company's unmanned vehicles were not properly programmed to respond to pedestrians crossing the street outside pedestrian crossings. In addition, Uber said that the company's drones had been involved in more than three dozen accidents before the fatal accident in Tempe.

Thus, in both cases, there were no defects in the

equipment that could provoke an emergency. The

issue of liability for harm caused, primarily due to

gaps in the law, has not been resolved (Zabrodina, E.,

2018).

Although these are far from the first accidents involving a car on autopilot, they have again sharpened a long-standing question: who is the responsible party, the driver or the company that designed the autonomous driving system?

It should be noted that requirements for the introduction of autonomous cars on public roads are only beginning to take shape; the main ones are the mandatory presence of a driver in the cabin and the ability to switch the vehicle to manual control (Chuchaev, A.I., Malikov S.V., 2019). Therefore, to ensure safety and minimize the risk of harm caused by a highly automated vehicle, most countries developing unmanned vehicles are moving towards a statutory requirement that a person be present in the cabin to take over the vehicle in an emergency. This approach is based on the 1968 Vienna Convention on Road Traffic, which established that every moving vehicle or combination of vehicles must have a driver (Art. 8) (The Vienna Convention…, 1999).

At the same time, foreign legislation, as a rule, does not regulate the procedure for obtaining the right to drive unmanned vehicles and contains no rules providing for liability for road traffic crimes involving them (Chuchaev, A.I., Malikov S.V., 2019). However, this is not the case in every state that is introducing highly automated cars on public roads.

For example, U.S. law contains the following statutory provisions for unmanned vehicles:

- testing an unmanned vehicle on the road requires the appropriate permit (license). For example, in California, where Google cars were first tested, such permits were issued to 7 companies;

- insurance is a prerequisite for operating an autonomous car. The required coverage in the United States is substantial: $5 million;

- by law, a driver who can take control in an emergency must be present in the driver's seat;

- drones must record and store telemetry for the 30 seconds before an accident. Moreover, all incidents involving such vehicles must be reported to the US Department of Transportation.
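The telemetry requirement in the last item can be illustrated with a rolling buffer that always holds the most recent 30 seconds of data and is frozen when an accident is detected. This is a minimal sketch under stated assumptions, not a description of any regulator's or manufacturer's actual recorder: the class names, the recorded fields and the 10 Hz sampling rate are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TelemetrySample:
    """Hypothetical telemetry record; real recorders log far more signals."""
    timestamp_s: float
    speed_kmh: float
    autopilot_engaged: bool


class TelemetryRecorder:
    """Keeps only the most recent `window_s` seconds of samples."""

    def __init__(self, window_s: float = 30.0, sample_rate_hz: int = 10):
        self.window_s = window_s
        # A bounded deque silently discards the oldest sample when full.
        self._buffer = deque(maxlen=int(window_s * sample_rate_hz))

    def record(self, sample: TelemetrySample) -> None:
        self._buffer.append(sample)

    def snapshot(self, event_time_s: float) -> List[TelemetrySample]:
        """Return the samples from the window that ends at the event time."""
        start = event_time_s - self.window_s
        return [s for s in self._buffer if start <= s.timestamp_s <= event_time_s]


# Simulate 60 seconds of driving at 10 Hz, then an accident at the last sample.
recorder = TelemetryRecorder()
for i in range(600):
    recorder.record(TelemetrySample(timestamp_s=i / 10, speed_kmh=60.0,
                                    autopilot_engaged=True))
frozen = recorder.snapshot(event_time_s=59.9)
print(len(frozen), "samples preserved, starting at t =", frozen[0].timestamp_s)
```

A fixed-size buffer keeps memory use constant regardless of trip length, which is one plausible reason a short pre-accident window is specified rather than full-trip logging.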

German experts have laid down ethical standards for unmanned vehicles that prohibit artificial intelligence from making decisions that save the lives of some people at the cost of harm to others. These standards echo Isaac Asimov's three laws of robotics (Korobeev, A.I., Chuchaev, A.I., 2019). Based on the established ethical standards, a law was developed and approved by the Bundesrat that defines the legal basis for the use of unmanned vehicles on public roads. The main condition set by this law is the mandatory presence at the wheel of a driver who is ready to take control of the vehicle at any time. In addition, a so-called black box must be installed in the unmanned vehicle; in the event of an accident, its data will show who was to blame, the driver or the autopilot (Nigmatullin, I., 2016).

In the UK, the topic of liability is being actively discussed, and the draft Vehicle Technology and Aviation Bill is under consideration. It defines the main principles of future legal standards for unmanned vehicles:

- if at the time of registration of the insurance

policy the insurance company was informed that the

vehicle will be used in autopilot mode, then it bears

full responsibility for the insured car;

- if the unmanned vehicle is not insured, then in

the event of an accident the car owner will be liable;

- in the event that an emergency occurred due to a

malfunction in software or equipment, the fault lies

with the manufacturer;

- if the accident was the result of a car owner’s

intervention in the software or the owner did not

follow the manufacturer’s instructions (for example,

did not update the software on time), then the insurer

can collect insurance payment from the car owner.

Based on the foregoing, the following conclusion can be drawn: insurance for self-driving vehicles with artificial intelligence will not differ much from ordinary compulsory motor third-party liability insurance (OSAGO). Car owners will, however, need to keep track of all software updates and prevent third-party interference in the vehicle's systems.

So far, only one plus is obvious - the cost of

insurance for an unmanned vehicle will be

significantly lower than the cost of an ordinary policy

for a classic car. A high level of safety and a low

probability of getting into an accident will have a key

impact on pricing in the field of unmanned vehicle

insurance.

In the Russian Federation, the first attempt to regulate the use of unmanned vehicles, including liability issues, is Decree of the Government of the Russian Federation of November 26, 2018 No. 1415 "On conducting an experiment on the pilot operation of highly automated vehicles on public roads" (On conducting an experiment…, 2018). This regulatory act defines the subjects of the emerging legal relations and distributes responsibility among them in the event of harm to the life and health of people or damage to property. These subjects are: the owner of a highly automated vehicle, a legal entity that owns the vehicle and participates in the experiment on a voluntary basis; and the driver of a highly automated vehicle, an individual who occupies the driver's seat during the experiment, activates the automated driving system, supervises the movement of the vehicle in automated control mode, and drives it in manual control mode. The driver of a highly automated vehicle is a driver within the meaning of the Rules of the Road of the Russian Federation, approved by Decree of the Government of the Russian Federation of October 23, 1993 No. 1090 (On the Rules of the Road…, 1993).

According to p. 18 of Decree of the Government of the Russian Federation No. 1415 (On conducting an experiment…, 2018), the vehicle owner bears responsibility for road traffic and other accidents on the roads of Russia that involve the highly automated vehicle. It is also necessary to establish who is liable for a traffic accident if the autonomous vehicle has been stolen.

Interpretation of this norm and of the other provisions of the Decree allows us to state that the driver is understood as a road user whose guilty actions can also lead to traffic accidents causing damage to the property, health and life of others, since he is obliged to activate the automated driving system of the highly automated vehicle, supervise its movement in automated control mode, and drive it in manual mode. At the same time, this approach leaves open the question of what is meant by the guilty actions of the driver. The difficulty stems from the lack of a legislative definition of “driving a vehicle,” which makes it hard to assess the driver’s “contribution” to the driving of autopilot vehicles, to determine the driver's guilt, and to establish how the driver's conduct influenced the consequences of the accident.

The approach laid down in Decree of the Government of the Russian Federation No. 1415 points to a greater responsibility of the driver than of the owner, since the driver is entrusted with the duty of monitoring compliance with traffic rules and preventing traffic accidents. Within the experiment, the owner of a highly automated vehicle is more likely to be responsible for software and hardware failures that the driver cannot eliminate. This conclusion is also supported by the fact that car manufacturers and organizations responsible for the proper condition of the road network cannot be prosecuted under current Russian criminal law, which does not provide for criminal liability of legal entities (Chuchaev, A.I., Malikov S.V., 2019).

This is indicated, in particular, by the fact that, before applying for a conclusion on the compliance of a highly automated vehicle, as modified in its design, with safety requirements, the applicant (owner) must insure, and keep insured throughout the pilot operation, the risk of liability for obligations arising from harm to the life, health or property of third parties, in the amount of RUB 10 million for each highly automated vehicle; that is, the liability is civil only.

Regarding criminal liability for harm caused by an unmanned vehicle, the following should be noted.

In Russia, when a driver is involved to one degree or another in driving a vehicle, the existing norms of the Criminal Code of the Russian Federation apply. Complete autonomy of the vehicle is a different matter. Resolving the question of criminal liability in this case is particularly difficult for a number of reasons. Firstly, the norm would by definition have to be a blanket (reference) norm; however, to date there are no rules to which the criminal law could refer. Secondly, it must be decided what lies at the root of a traffic accident involving a highly automated vehicle: disruption of the system or violation of traffic rules as such (a combination of these factors is possible). This is necessary to determine the legal nature of a possible crime, whether a transport crime or some other kind (for example, technological), and therefore to determine the object of the crime and its place in the system of the Special Part of the Criminal Code of the Russian Federation. Thirdly, there is no clarity regarding the subject of responsibility: should it be the operator, the system designer or the manufacturer?

In addition, it is necessary to resolve the issue of

liability for external interference in the operation of

an unmanned vehicle; it must be noted that none of

the norms of the Criminal Code of the Russian

Federation covers such actions.

It should be borne in mind that the development of unmanned vehicles will lead to their complete autonomy (Chuchaev, A.I., Malikov S.V., 2019). According to the classification of driver assistance systems, or ADAS (Advanced Driver Assistance Systems), by SAE International (formerly the Society of Automotive Engineers), there are six levels of autonomy (Standard SAE J3016, 2020).

According to this classification, in the case of

highly automated and fully automated cars,

manufacturers are at a higher risk of being held

accountable than in the case of cars driven by people.

In the case of partially automated vehicles, the

driver is responsible under the same conditions as in

traditional vehicles, which only the driver drives.
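The relationship between automation level and liability exposure described above can be summarized in a small lookup. The sketch below is an illustration, not a statement of law: the level names follow SAE J3016, while the function name, the returned labels, and the treatment of the lower levels and of conditional automation (which the text does not address directly) are assumptions.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """The six SAE J3016 levels of driving automation."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def primary_liability_exposure(level: SAELevel) -> str:
    """Who is most exposed to liability at a given level, per the text above."""
    if level >= SAELevel.HIGH_AUTOMATION:
        # Highly and fully automated cars: manufacturers are at higher risk.
        return "manufacturer"
    if level <= SAELevel.PARTIAL_AUTOMATION:
        # Up to partial automation: same conditions as a traditional vehicle
        # (levels 0 and 1 are grouped here by assumption).
        return "driver"
    # Conditional automation is not discussed in the text; left open here.
    return "unsettled"


for level in SAELevel:
    print(level.value, level.name, "->", primary_liability_exposure(level))
```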

It should also be noted that there is a problem with

the minimum standard of an unmanned vehicle: it is

difficult to determine whether a vehicle meets this

standard. This can only be verified through statistics

based on the widespread use of such vehicles.


Therefore, it cannot be ruled out that in legal

practice a standard will be put forward that is easier

to apply in the case of individual responsibility. Such

a standard may be that an automated car should be at

least as reliable as an average or good driver.

Regarding specific accidents, a simple question to ask

would be: could an average / good driver prevent this

accident? The problem with this non-statistical,

human factor-based standard is that an automated car

is different from a human. In this connection, it is very

difficult to meet such a standard for manufacturers of

automated cars.

4 CONCLUSIONS

The introduction of unmanned control systems in

vehicles in the near future will entail changes in the

regulation of civil law and criminal liability arising

from damage caused by a highly automated vehicle.

That is why it is now necessary to review the

mechanisms for compensation for harm caused by

unmanned vehicles. In addition, it is important to

conduct theoretical research in the field of the use of

unmanned systems controlled by artificial

intelligence, as well as add new provisions to the

legislation governing a fundamentally new circle of

public relations.

The technology of unmanned movement is still far from perfect, which means that accidents are inevitable. The purely human ability to learn from mistakes will make it possible to minimize such incidents in the future, but accidents with unmanned vehicles will still occur, simply because wherever cars are driven, accidents happen.

To date, to resolve the issue of liability for damage caused by a highly automated vehicle, the following steps are needed:

- introduce a single term, "highly automated vehicle";

- fix the procedure by which the competent authority issues a special permit for the experimental use of unmanned vehicles;

- introduce mandatory liability insurance for the production and use of highly automated vehicles;

- develop and approve a minimum standard for a highly automated vehicle;

- fix the mechanism and procedure for compensation for damage in an accident involving an unmanned vehicle, and determine the liable subjects and the conditions for liability in such situations;

- fix the mechanism and procedure for compensation for damage in an accident involving an unmanned vehicle, and determine the liable subjects and the conditions for liability, in the event of vehicle theft;

- oblige manufacturers of highly automated vehicles to install a "black box" recording the course of the trip.

As for the distribution of responsibility, then most

likely it will look like this:

- insurance companies will be liable for the

insured unmanned vehicles, but only if, at the time of

conclusion of the policy, the company was notified of

the fact of unmanned use of the vehicle;

- if the unmanned vehicle has not been insured,

the owner will be liable;

- if the accident was caused by a malfunction in

the software or equipment of the vehicle, the

responsibility is transferred to the manufacturer (the

owner or the insurance company has a right of

recourse);

- if the accident was caused by the owner

interfering with the software or equipment of the

insured vehicle, or by the owner's failure to follow the

manufacturer's instructions (for example, the

software was not updated), the insurance company

may recover the insurance compensation it paid

from the owner;

- if the accident occurred while the vehicle was

stolen, the person at fault for the accident will be

liable.
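The distribution listed above can be read as a small decision procedure. The following is only an illustrative sketch and not a statement of current law: the names Accident and allocate_liability are hypothetical, the order in which the conditions are checked is an assumption, and so is the treatment of an insured vehicle whose insurer was not notified of unmanned use as if it were uninsured.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Accident:
    # All field names are hypothetical and chosen for illustration only.
    insured: bool = True
    insurer_notified: bool = True  # insurer told of unmanned use when the policy was concluded
    stolen: bool = False
    malfunction: bool = False      # fault in the vehicle's software or equipment
    owner_at_fault: bool = False   # owner tampered or ignored the manufacturer's instructions


def allocate_liability(a: Accident) -> Tuple[str, Optional[str]]:
    """Return (party compensating the victim, party facing a recourse claim or None)."""
    # Assumption: theft is checked first and overrides the other rules.
    if a.stolen:
        return ("person at fault", None)
    # Assumption: an insurer that was not notified is treated like no insurance.
    payer = "insurer" if (a.insured and a.insurer_notified) else "owner"
    if a.malfunction:
        # The owner or insurer pays first and may then claim against the manufacturer.
        return (payer, "manufacturer")
    if a.owner_at_fault and payer == "insurer":
        # The insurer may recover the compensation it paid from the owner.
        return (payer, "owner")
    return (payer, None)


print(allocate_liability(Accident(malfunction=True)))  # ('insurer', 'manufacturer')
```

Returning the recourse target separately from the paying party keeps the two stages apart: who compensates the victim, and who may ultimately bear the cost.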

Thus, Compulsory Motor Third Party Liability

(CMTPL) insurance for the owner of an unmanned

vehicle will not differ much from the insurance of a

standard vehicle, but the owner will have to keep

the software updated and prevent tampering with it

and with the vehicle equipment. It is likely that, as

the technology develops, insurance for unmanned

vehicles will become significantly cheaper than

standard insurance, owing to the lower probability of

an accident.

When unmanned vehicles come off the assembly

lines and appear on the roads, the autopilot will have

to coexist "side by side" with manual control.

Accidents and conflicts cannot be avoided. With

manual control, the driver is more likely to make a

mistake. However, this does not mean that in an

accident the fault a priori falls on the

person, because artificial intelligence also suffers

from software failures.

There is another concern, however: as the

number of unmanned vehicles on the roads

grows, drivers who drive manually will have

little chance of proving that they were not at fault,

and these chances will shrink further as the number

of drones increases.



Unmanned technology will save millions of lives,

but however good the software, crashes

cannot be avoided entirely. The problem is that although the

driver of a highly automated vehicle does not need to

closely monitor the situation on the road, today the

driver still bears full responsibility for the vehicle.

Perhaps everything will change in the future, when

private car ownership fades away and drones

become available through ride-sharing

services. Then, in the event of an accident, the

corporations that own the smart robo-cars, such as

Google, Baidu and Uber, will be responsible.

ACKNOWLEDGEMENTS

This work was supported by the Russian Foundation

for Basic Research, grant No. 19-29-06008\19.

REFERENCES

ADAS & AV Legal Issues & Liabilities World Congress.

The legal issues, implications and liabilities arising

from ADAS and future autonomous vehicles. 2019.

URL: https://www.adaslegal-issuesandliabilities.com/

en/index.php [electronic resource] (accessed December

20, 2019).

Allen & Overy. 2017. Autonomous and connected vehicles:

navigating the legal issues. Allen & Overy LLP. URL:

http://www.allenovery.com/SiteCollectionDocuments/

Autonomous-and-connected-vehicles.pdf. [electronic

resource] (accessed January 20, 2020).

Chuchaev, A.I., Malikov S.V., 2019. Responsibility for

causing harm by a highly automated vehicle: state and

perspectives. Actual problems of Russian law. No 6

(103), pp. 117-124.

Eiza, M.H., Ni, Q., 2017. Driving with Sharks: Rethinking

Connected Vehicles with Vehicle Cybersecurity. IEEE

Vehicular Technology Magazine. Volume: 12, Issue: 2.

DOI: 10.1109/MVT.2017.2669348.

Fafoutellis, P., Mantouka, E.G., 2018. Major Limitations

and Concerns Regarding the Integration of Autonomous

Vehicles in Urban Transportation Systems. The 4th

Conference on Sustainable Urban Mobility. CSUM

2018: Data Analytics: Paving the Way to Sustainable

Urban Mobility, pp 739-747.

Kalra, N., Paddock, S.M., 2016. Driving to safety: How

many miles of driving would it take to demonstrate

autonomous vehicle reliability? Transportation

Research Part A: Policy and Practice. Volume 94, pp.

182-193

Korobeev, A.I., Chuchaev, A.I., 2019. Unmanned Vehicles:

New Challenges to Public Security. Lex Russica. No 2

(147), pp. 9 - 28.

Makarova, I., Shubenkova, K., Mukhametdinov, E., Mavrin,

V., Antov, D., Pashkevich, A., 2018. ITS Safety

Ensuring Through Situational Management Methods.

Lecture Notes of the Institute for Computer Sciences,

Social-Informatics and Telecommunications

Engineering, vol. 222. 2018. DOI: 10.1007/978-3-319-

93710-6_15.

Martynov, A.V., 2019. Prospects for Establishing

Administrative Responsibility in the Field of Operation

of Unmanned Vehicles. Laws of Russia: experience,

analysis, practice, No 11, pp. 42-55.

McAllister, R., Gal, Y., Kendall, A., van der Wilk, M., Shah,

A., Cipolla, R., & Weller, A. 2017. Concrete Problems

for Autonomous Vehicle Safety: Advantages of

Bayesian Deep Learning. Proceedings of the Twenty-

Sixth International Joint Conference on Artificial

Intelligence, pp. 4745-4753. URL: https://doi.org/

10.24963/ijcai.2017/661.

Nigmatullin, I., 2016. Germany came up with three ethical

rules for unmanned cars. URL: https://hightech.fm/

2016/09/12/3-rules. [electronic resource] (accessed

January 20, 2020).

On Approval of the Road Safety Strategy in the Russian

Federation for 2018 - 2024. Decree of the Government

of the Russian Federation of January 08, 2018 № 1-r.

Russian newspaper. No15. - 25.01.2018.

On conducting an experiment on the pilot operation of

highly automated vehicles on public roads. Decree of the

Government of the Russian Federation of November 26,

2018 No. 1415 (together with the "Regulations on the

experiment on the pilot operation of highly automated vehicles

on public roads") Legislation collection of the Russian

Federation. 03.12.2018. № 49 (part VI). art. 7619.

On the Experimental Operation of Innovative Vehicles and

Amending Certain Legislative Acts of the Russian

Federation. Draft Federal Law No. 710083-7. URL:

http://sozd.parlament.gov.ru. [electronic resource]

(accessed January 20, 2020).

On the Rules of the Road. Decree of the Government of the

Russian Federation of October 23, 1993 No 1090 (ed. by

21.12.2019) (together with the "Basic Provisions for the

Admission of Vehicles to Operation and the Obligations

of Officials to Ensure Road Safety"). Russian News. No.

227. 11/23/1993.

Some, E., Gondwe, G., Rowe, E.W., 2019. Cybersecurity

and Driverless Cars: In Search for a Normative Way of

Safety. Sixth International Conference on Internet of

Things: Systems, Management and Security (IOTSMS)

DOI: 10.1109/IOTSMS48152.2019.8939168

Standard SAE J3016, 2020. URL: https://www.sae.org/

binaries/content/assets/cm/content/news/press-

releases/pathway-to-autonomy/automated_driving.pdf

[electronic resource] (accessed January 20, 2020).

The Vienna Convention on Road Traffic (08.11.1968) (with

ed. on 23.09.2014), 1999. Treaty Series. Volume 1732.

New York: United Nations. pp. 522 – 587.

Yağdereli, E., Gemci, C., & Aktaş, A. Z. (2015). A study on

cyber-security of autonomous and unmanned vehicles.

The Journal of Defense Modeling and Simulation, 12(4),

pp. 369–381.

Zabrodina, E., 2018. Inhuman factor. Russian newspaper.

2018, March 21.
