Evasive Windows Malware: Impact on Antiviruses and Possible Countermeasures

Cédric Herzog, Valérie Viet Triem Tong, Pierre Wilke, Arnaud Van Straaten and Jean-Louis Lanet

a

Inria, CentraleSupélec, Univ. Rennes, CNRS, IRISA, Rennes, France

Keywords:

Antivirus, Evasion, Windows Malware, Windows API.

Abstract:

The perpetual opposition between antiviruses and malware leads both parties to evolve continuously. On the

one hand, antiviruses put in place solutions that are more and more sophisticated and propose more complex

detection techniques in addition to the classic signature analysis. This sophistication leads antiviruses to leave

more traces of their presence on the machine they protect. To remain undetected as long as possible, malware

can avoid executing within such environments by hunting down the modifications left by the antiviruses.

This paper aims at determining the possibilities for malware to detect the antiviruses and then evaluating the

efficiency of these techniques on a panel of antiviruses that are the most used nowadays. We then collect

samples showing this kind of behavior and propose to evaluate a countermeasure that creates false artifacts,

thus forcing malware to evade.

1 INTRODUCTION

There is a permanent confrontation between malware

and antiviruses (AVs).

1

On the one hand, malware

mainly intend to infect devices in a short amount of

time, while remaining undetected for as long as pos-

sible. On the other hand, of course, AVs aim at de-

tecting malware in the fastest way possible.

When a new malware is detected, AVs conduct further analysis to produce a signature and quickly bring their database up to date. Malware authors, wishing to create long-lasting malware at low cost, can then use simple methods to avoid being detected and analyzed in depth by these AVs. To this end, a malware can search for the presence of traces or artifacts left by an AV, and then decide whether or not to execute its malicious payload. We call such a malware an evasive malware.

This article focuses on the evaluation of the eva-

sion techniques used by Windows malware in the

wild. First, we evaluate how common AVs cope both

with unknown malware and well-known evasion tech-

niques. To this end, we develop and use Nuky, a ran-

somware targeting Windows, and implementing sev-

eral evasion techniques.

Second, we design and evaluate a countermeasure, mentioned by (Chen et al., 2008) and (Chen et al., 2016), that simply consists of reproducing the presence of the artifacts on computers by instrumenting the Windows API in order to force malware to evade. The purpose of this countermeasure is to limit the infection’s spread and not to replace malware detection. We study the impact of this approach on both malware and legitimate software before concluding on its limitations. To evaluate these experiments, we create a small dataset and discuss the problem of collecting evasive samples.

a https://orcid.org/0000-0002-4751-3941

1 https://www.av-test.org/en/statistics/malware/

We give an overview of AV abilities and evasion

techniques in Section 3 and Section 4. We evaluate

AVs’ abilities to detect new evasive malware in Section 5. Then, we present the countermeasure and the

construction of the dataset used for its evaluation in

Section 6 and Section 7. Finally, before concluding,

we discuss the limitations of this study in Section 8.

2 STATE OF THE ART

There are multiple definitions of evasive malware in

the literature. For instance, (Naval et al., 2015) define

environment-reactive malware as:

Detection-aware malware carrying multiple payloads to remain invisible to any protection system and to persist for a more extended period. The environment aware payloads determine the originality of the running environment. If the environment is not real, then the actual malicious payload is not delivered. After detecting the presence of a virtual or emulated environment, malware either terminates its execution or mimics a benign/unusual behavior.

Herzog, C., Tong, V., Wilke, P., Van Straaten, A. and Lanet, J.

Evasive Windows Malware: Impact on Antiviruses and Possible Countermeasures.

DOI: 10.5220/0009816703020309

In Proceedings of the 17th International Joint Conference on e-Business and Telecommunications (ICETE 2020) - SECRYPT, pages 302-309

ISBN: 978-989-758-446-6

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

However, this definition designates malware aim-

ing at detecting virtual or emulated environments

only. We complete this definition using part of the

proposition made by (Tan and Yap, 2016) that calls a

malware detecting “dynamic analysis environments,

security tools, VMs, etc., as anti-analysis malware”.

We choose to use this definition for the term

“evasive malware” instead of the proposed terms

“environment-reactive malware” or “anti-analysis

malware”, because we believe this is the term that is

the most used by both researchers and malware writ-

ers.

One possibility for malware to detect unwanted

environments is to search for specific artifacts present

only in these environments. For instance, (Blackthorne et al., 2016) detail an experiment that extracts fingerprints of AV emulators, and (Yokoyama et al., 2016) extract predefined features from a Windows computer. Once the harvest of artifacts is completed, malware can then decide whether they are running in an AV environment or a standard environment.

Malware then use these artifacts to create evasion

tests, as described by (Bulazel and Yener, 2017), (Afi-

anian et al., 2020) and (Miramirkhani et al., 2017). It

is also possible to artificially add artifacts by using

implants as detailed by (Tanabe et al., 2018).

To detect evasive malware, we can compare the malware’s behavior when launched in an analysis environment and in a bare-metal environment. To compare

behaviors, (Kirat and Vigna, 2015) search for differ-

ences in the system call traces. (Kirat et al., 2014)

extract raw data from the disk of each environment

and compare file and registry operations, among oth-

ers, to detect a deviation of behavior. Finally, (Lindor-

fer et al., 2011) compare the behavior of malware be-

tween multiple analysis sandboxes and discover mul-

tiple evasion techniques.

It is possible to create an analysis environment

that is indistinguishable from a real one, called a

transparent environment, as presented by (Dinaburg

et al., 2008). However, (Garfinkel et al., 2007) express reservations about the possibility of creating a fully transparent environment since, for them, “virtual and native hardware are likely to remain dissimilar”.

For this reason, we decide to do the opposite of a

transparent environment by creating an environment

containing artificial dissimilarities. To the best of our

knowledge, this idea was already discussed by (Chen

et al., 2008) but never tested on real malware.

3 ANTIVIRUS ABILITIES

AVs are tools that aim at detecting and stopping the

execution of new malware samples. They are an ag-

gregate of many features designed to detect the mal-

ware’s presence by different means, as described by

(Koret and Bachaalany, 2015). Using these features

incurs overhead that, if too significant, can hinder the

use of legitimate programs. In order to stay compet-

itive, AV editors have to limit this overhead. For this

reason, it is difficult for them to apply time-demanding techniques.

Most AVs use signature checking by comparing

the file against a database of specific strings, for in-

stance. The signature database needs to be updated

regularly to detect the latest discovered malware.

23

Fewer AVs use features that produce significant over-

heads, such as running the malware in an emulator.

45
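As a rough illustration of the signature checking described above, the following Python sketch compares a file's content against a tiny database of byte strings and file hashes. The signature names, the patterns, and the use of SHA-256 are all assumptions made for this example; they are not taken from any real AV product.

```python
import hashlib

# Hypothetical string signatures: signature name -> byte pattern (illustrative only).
STRING_SIGNATURES = {
    "Demo.TestString": b"HYPOTHETICAL-MALICIOUS-MARKER",
}

# Hypothetical hash signatures: SHA-256 hex digest -> signature name.
HASH_SIGNATURES = {
    hashlib.sha256(b"known bad sample").hexdigest(): "Demo.KnownSample",
}


def scan(data):
    """Return the names of all signatures that match the given file content."""
    matches = []
    # Whole-file hash lookup: only matches byte-for-byte identical samples.
    digest = hashlib.sha256(data).hexdigest()
    if digest in HASH_SIGNATURES:
        matches.append(HASH_SIGNATURES[digest])
    # Substring lookup: matches any file that embeds a known pattern.
    for name, pattern in STRING_SIGNATURES.items():
        if pattern in data:
            matches.append(name)
    return matches
```

Both lookups are cheap, which is consistent with the overhead constraint discussed above, but neither can match a sample that is absent from the database, which is why the database needs regular updates.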

During its installation, an AV adds files and mod-

ifies the guest OS. For instance, an AV can load its

features by manually allocating memory pages or by

using the LoadLibrary API to load a DLL file, as de-

scribed by (Koret and Bachaalany, 2015). As we de-

tail in the following section, evasive malware can de-

tect these modifications and then adapt their behavior.

Once a new malware is detected, AVs send the

sample to their server for further analysis. To analyze malware more deeply, an analyst can use multiple tools, such as a debugger to break at particular instructions, or Virtual Machines (VMs) and emulators to analyze it in a closed environment.

A malware that aims to last longer can then try to complicate the analyst’s work by using anti-debugger or anti-VM techniques to slow down the analysis.

4 AV EVASIONS

In the previous section, we gave details about the en-

vironment in which we are interested. We now de-

tail possible ways for evasive malware to detect the

presence of such environments. Evasive malware can

use many evasion techniques, and (Bulazel and Yener, 2017), (Lita et al., 2018) and (Afianian et al., 2020) report a vast majority of them. In this article, we separate these techniques into three main categories: detection of debugging techniques, detection of AV installation and execution artifacts, and detection of VMs. In this section, we briefly describe these categories, and we list the artifacts searched by Nuky for each of them in Table 1.

2 https://support.avast.com/en-us/article/22/

3 https://www.kaspersky.co.uk/blog/the-wonders-of-hashing/3629/

4 https://i.blackhat.com/us-18/Thu-August-9/us-18-Bulazel-Windows-Offender-Reverse-Engineering-Windows-Defenders-Antivirus-Emulator.pdf

5 https://eugene.kaspersky.com/2012/03/07/emulation-a-headache-to-develop-but-oh-so-worth-it/

Detection of Debuggers. To observe malware in

detail, an AV can use a debugger to add breakpoints

at a specific instruction. The usage of debuggers implies that a process has to take control of the debugged process. This takeover leaves marks that are visible from the debugged process and can thus be seen by the malware. For instance, these marks can be the initialization of specific registers, the presence of well-known debugger processes, or specific behaviors of some Windows API functions.

Detection of AVs’ Installation and Execution Ar-

tifacts. The installation of the AV makes some

changes to the operating system. For Windows, it

starts by creating a folder, often in the Program Files

folder. This folder contains the executable of the an-

tivirus and the other resources it requires. During the

installation of an AV, some registry keys are changed or added. The AV can also set up hooks on the Windows API at the user or the kernel level.

In the same way, the execution of the core pro-

cess and plugins of the AV leaves artifacts within the

operating system, and these alterations can be visible

from user mode. A simple technique to detect the presence of an antivirus is thus to search for standard AV process names in the list of running processes.
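The process-name search described above boils down to a string comparison against a predefined list. The sketch below shows that comparison in Python on a plain list of names; the product and process names are hypothetical placeholders, and how the list of running processes is obtained is deliberately left out.

```python
# Hypothetical artifact strings (placeholders, not real product names).
# A real list would have to be far more exhaustive to be useful.
KNOWN_PROCESS_NAMES = {
    "exampleav.exe": "ExampleAV",
    "exampleavsvc.exe": "ExampleAV",
    "otherav.exe": "OtherAV",
}


def identify_products(running_processes):
    """Map each recognised product to the number of its process names observed."""
    found = {}
    for name in running_processes:
        # Process names are compared case-insensitively.
        product = KNOWN_PROCESS_NAMES.get(name.lower())
        if product is not None:
            found[product] = found.get(product, 0) + 1
    return found
```

For instance, `identify_products(["explorer.exe", "ExampleAV.exe"])` would return `{"ExampleAV": 1}`. The approach only recognises names that are already in the list, which is the main weakness of any string-based artifact search.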

Detection of VMs, Emulators, Sandboxes. Fi-

nally, an AV can execute malware in a sandbox, i.e., a

controlled and contained testing environment, where

its behavior can be carefully analyzed. These sandboxes intend to reproduce the targeted environment but, in practice, diverge slightly from the real environment. An evasive malware can then search for precise files, directories, process names, or configurations that indicate the presence of a VM or emulator.

5 EXPERIMENTS

5.1 Nuky, A Configurable Malware

At this point, we want to measure the malware’s abil-

ity to evade the most commonly used AVs. To carry

Table 1: Artifacts searched by Nuky for each category.

Artifacts Debugger AV VM

Process Names × × ×

GUI Windows Names ×

Debugger registers values ×

Imported functions × ×

Registries Names & Values ×

Folder Names × ×

.DLL Names ×

Usernames ×

MAC addresses ×

Table 2: Nuky’s payloads.

Type Method

Encryption AES in ECB mode

6

Compression Huffman

7

Stash Sets hidden attribute to true

Test Drops Eicar’s file on Desktop

8

out our experimentations, we develop Nuky, a config-

urable malware. Nuky has 4 different payloads and

multiple evasion capabilities divided into 2 sections,

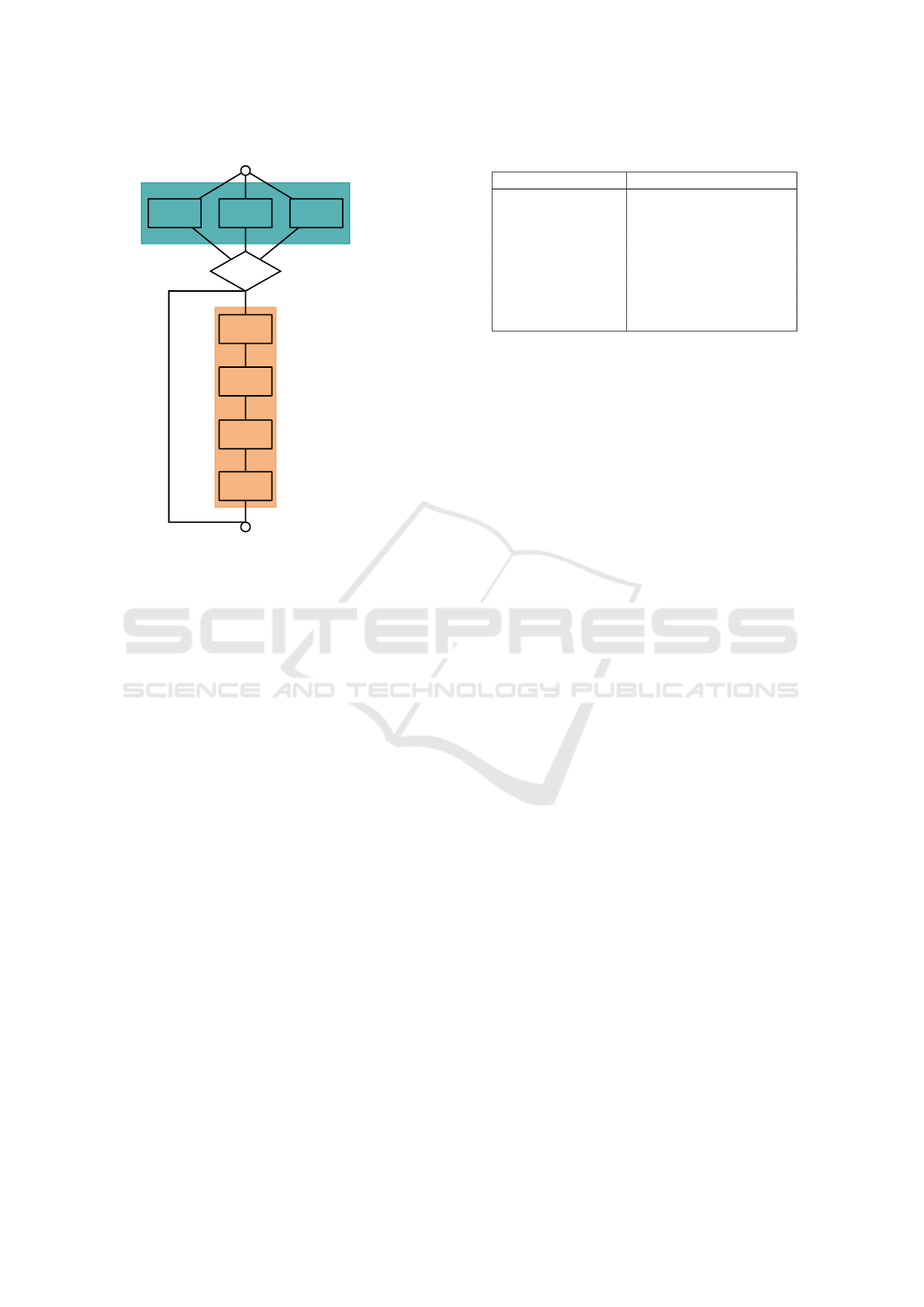

as depicted in Figure 1:

• The Evasion Tests section is composed of 3 blocks

containing numerous evasion techniques designed

to detect the artifacts listed in Table 1. Nuky is

configurable to launch zero or multiple evasion

blocks.

• The Payload section contains 4 payloads detailed

in Table 2. They implement methods to modify

files in the Documents folder on a Windows work-

station.

In the following, we use 2 different configurations

of Nuky, first to test the detection capability of the

available AVs and then to test the evasive capability of

malware using the theoretical techniques enumerated

in Section 4.

Set-up. All of our experiments use the same archi-

tecture. We prepare Windows images equipped with

some of the most popular AVs, according to a study

from AVTest.

9

These images are all derived from the

same Windows 10 base image, populated with files

taken from the Govdocs1 dataset.

10

Every AV is set

on a high rate detection mode to give more chances

for AVs to trigger alerts. Some AVs propose a specific feature designed to put a specific folder (specified by the user) under surveillance. For these AVs, we build an additional Windows image with the AV parametrized to watch the Documents folder. For all our experiments, we update the AVs before disconnecting the test machine from the network (offline mode), to ensure that the Nuky samples do not leak to the AVs.

6 https://github.com/SergeyBel/AES/

7 https://github.com/nayuki/Reference-Huffman-coding

8 https://www.eicar.org/?page_id=3950

9 https://www.av-test.org/en/antivirus/home-windows/

10 http://downloads.digitalcorpora.org/corpora/files/govdocs1/

SECRYPT 2020 - 17th International Conference on Security and Cryptography

[Flowchart elements: Start; Evasion Tests section (Debugger Evasion, Antivirus Evasion, VM Evasion); decision "AV Found?" (Yes/No); Payload section (AES, Huffman, Hide file, Eicar); End.]

Figure 1: Operation of Nuky.

5.2 AVs’ Detection Abilities

This first experiment aims at finding which AVs can

catch Nuky depending on the payload used, and to

verify that they are all able to detect the Eicar’s file.

In this experiment, Nuky does not use any evasion

technique and executes each of its 4 payloads. Nuky

is a new malware and consequently not present in

AVs records. Nevertheless, its first 3 payloads (Com-

pression, Encryption, and Stash) are characteristic of

common malware. An AV should, therefore, be able

to identify them. Before launching Nuky, we run targeted scans on Nuky’s executable file, but no AV succeeds in detecting it as malicious. We then execute Nuky once for each payload on every AV with an image restoration step before each run.

As depicted in Table 3, only AVs equipped with a specific ransomware detection feature succeed in detecting Nuky’s payloads. K7 Computing’s product also detects Nuky after some time. Nevertheless, Nuky succeeds in ciphering part of the computer’s files before being stopped. We expect K7 Computing to generate false positives, as a legitimate image rotation made by the Photos program is detected as malicious by this AV. We make the same observation for Windows Defender, which blocks a file saved using LibreOffice Draw. However, they still detect Nuky as malicious and are the AVs we want to precisely identify in the second experiment, in order to abort Nuky’s execution and avoid detection. Finally, all AVs manage to detect the test payload with Eicar’s file.

Table 3: Payloads detected by AVs.

| Antivirus | AES | Huffman | Hide | Eicar |
|---|---|---|---|---|
| Windows Defender | | | | × |
| Windows Defender+ | × | × | × | × |
| Immunet (ClamAV) | | | | × |
| Kaspersky | | | | × |
| Avast | | | | × |
| Avast+ | × | × | × | × |
| AVG | | | | × |
| Avira | | | | × |
| K7 Computing | ×* | ×* | ×* | × |

*Part of the files modified before stopping Nuky.

The fact that a majority of AVs do not detect the

compression, encryption, and stash payloads could be

a sign that they still heavily rely on signature analysis.

5.3 Nuky’s Evasion Abilities

In the second experiment, we enable the three evasion

blocks one-by-one, to check whether Nuky using the

test payload (Eicar’s file) is still detected or not, com-

pared to the first experiment. If Nuky is no longer

detected, it means that the evasion block successfully

identifies an AV and does not launch its payload. Oth-

erwise, if the evasion block fails, this means that Nuky

does not detect the presence of any AV and runs its

malicious payload.

We consider an evasion as successful if at least

one of the 2 following conditions is satisfied:

• An AV detects Nuky in the first experiment, but

no longer in the second experiment.

• Nuky prints the AV’s name identified in a console.

It can also send the name through an IRC channel.

However, as Nuky can be executed in closed environments without a console or disconnected from the network, we can lose some evidence of evasion.

This may be the case here, as only the AV evasion block provides detections for which we can see Nuky’s results printed in the console. We do not observe a difference when using the debugger evasion block or the VM evasion block. The reason could be that the AVs we tested do not use the debuggers and VMs targeted by Nuky. It is also possible that Nuky prints the results on an unreachable console.

Table 4 shows the number of artifacts found in each AV by the 2 techniques composing the AV evasion block.

Table 4: Number of artifacts detected by Nuky’s AV evasion block.

| Antivirus | Folder Names | Process Names |
|---|---|---|
| Windows Defender | 3 | 1 |
| Immunet (ClamAV) | 1 | 1 |
| Kaspersky | 4 | 4 |
| Avast | 2 | 2 |
| AVG | 2 | 2 |
| Avira | 1 | 9 |
| K7 Computing | 2 | 9 |

As our evasions heavily rely on string compar-

isons, our string set has to be exhaustive to detect a

maximum of AVs’ artifacts.

Using these evasion methods, we can successfully

identify the AVs on which Nuky can launch its cipher-

ing payload without being detected and, thus, increase

its life expectancy.

In the first experiment, we find that AVs have trouble detecting Nuky’s payloads unless they propose specific features to detect ransomware. In the second experiment, we show that it is possible to precisely identify AVs in order to adapt the malware’s behavior.

6 DATASET CREATION

In the previous section, we conclude that the AVs’

evasion methods are easy to set up and very efficient

against modern AVs. We now detail the way we col-

lected evasive samples in order to build a dataset to

test our countermeasure. First, we describe the way

we build a filter to keep only evasive samples, and

second, how we use it to collect samples.

6.1 Filtering using Yara Rules

To create our filter, we use a program called Yara¹¹, which allows matching samples according to a set of rules. Yara can match samples containing a specific string or loading a specific Windows library or function and, thus, does not work on ciphered or packed malware. However, we believe that the evasion tests could occur before the unpacking or deciphering and would thus remain readable and matchable.

We create a rule containing five subrules, each composed of several conditions and designed to find malware that respectively try to detect a debugger, detect a running AV or sandbox, manipulate and iterate over folders, find VM MAC addresses, and find a debugger window.

11 http://virustotal.github.io/yara/

Table 5: Number of samples matched by each rule.

| Rules | All samples | Final samples |
|---|---|---|
| Debugger | 20 | 16 |
| AV and sandbox | 0 | 0 |
| Folder manipulation | 5 | 0 |
| MAC addresses | 37 | 2 |
| Find window | 0 | 0 |

A set of rules designed to match evasive samples already exists¹². However, we create a new one, as these rules match too easily and return too many non-evasive malware samples.
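To make the idea of a rule built from several subrules more concrete, here is a minimal Python sketch of the same kind of filter, written with plain byte-string matching rather than Yara itself. The subrule labels and the strings they look for are assumptions chosen for illustration; they are not the conditions of the rule used in this study.

```python
# Hypothetical subrules: each label maps to byte strings that must ALL appear
# in the sample for the subrule to match. These strings are illustrative only.
SUBRULES = {
    "debugger": [b"IsDebuggerPresent"],
    "find_window": [b"FindWindow", b"OLLYDBG"],
    "folder_manipulation": [b"FindFirstFile", b"FindNextFile"],
}


def match_subrules(sample):
    """Return the sorted labels of all subrules whose strings are all present."""
    return sorted(
        label
        for label, needles in SUBRULES.items()
        if all(needle in sample for needle in needles)
    )


def is_candidate(sample):
    """A sample is kept as a candidate if at least one subrule matches."""
    return bool(match_subrules(sample))
```

As with the Yara filter, this only works when the searched strings are readable in the file: a sample whose evasion code is itself packed or ciphered would not match any subrule.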

6.2 Samples Collection

The difficulty of collecting samples of evasive malware lies in the fact that, by definition, these malware avoid being caught. We manually crawl the Web in public datasets such as hybrid-analysis¹³ and public repositories like theZoo¹⁴, for instance. The data collection covers 3 months between November 18th, 2019 and January 21st, 2019.

Over this period, we found 62 samples matching the Yara rule among the few thousand samples crawled. To avoid false positives and to be sure to keep PE files that are more likely to be actual malware, we selected only samples that are not signed and are marked as malicious by VirusTotal¹⁵. Table 6 lists the MD5 hashes of the 18 selected malware, and Table 5 the number of samples matched by each rule.

The fact that some rules do not give any result might be because we crawled the Web by hand and, therefore, did not have many samples to match.

The Yara rule we use is very restrictive and matches only a few samples for the duration of the malware harvest. However, we get only a few false positives and now intend to automate the Web crawling process to continue the acquisition of malware.

6.3 Selected Samples Description

We create groups of samples sharing most of their as-

sembly code by comparing them using Ghidra’s diff

tool. All samples within a group contain the same

code in the text section that seems to carry the eva-

sion part of the malware.

12 https://github.com/YaraRules/rules/blob/master/antidebug_antivm/antidebug_antivm.yar

13 https://www.hybrid-analysis.com/

14 https://github.com/ytisf/theZoo

15 https://www.virustotal.com


Table 6: Selected samples and countermeasure results.

| Group | MD5 | Results |
|---|---|---|
| A | de3d1414d45e762ca766d88c1384e5f6 | OK |
| A | 2d57683027a49d020db648c633aa430a | OK |
| A | d3e89fa1273f61ef4dce4c43148d5488 | OK |
| A | bd5934f9e13ac128b90e5f81916eebd8 | OK |
| A | 512d425d279c1d32b88fe49013812167 | OK |
| A | 2ccc21611d5afc0ab67ccea2cf3bb38b | OK |
| A | 6b43ec6a58836fd41d40e0413eae9b4d | OK |
| B | ee12bbee4c76237f8303c209cc92749d | OK |
| B | 5253a537b2558719a4d7b9a1fd7a58cf | OK |
| B | 8fdab468bc10dc98e5dc91fde12080e9 | OK |
| B | e5b2189b8450f5c8fe8af72f9b8b0ad9 | OK |
| B | ee88a6abb2548063f1b551a06105f425 | OK |
| B | 4d5ac38437f8eb4c017bc648bb547fac | OK |
| C | f4d68add66607647bf9cf68cd17ea06a | OK |
| D | 862c2a3f48f981470dcb77f8295a4fcc | CRASH |
| E | e51fc4cdd3a950444f9491e15edc5e22 | NOK |
| F | 812d2536c250cb1e8a972bdac3dbb123 | NOK |
| G | 5c8c9d0c144a35d2e56281d00bb738a4 | CRASH |

Groups A, B, and C share a significant part of their

text section with small additions of code or function’s

signature modifications. The other groups are very

different and contain their unique code.

We compute the entropy for each sample and find

a minimum entropy of 6.58 and a maximum entropy of 7.11.

Based on the experiment described by (Lyda and

Hamrock, 2007), these values could suggest that the

samples are packed or encrypted. We found in ev-

ery sample different resources that seem to be PE files

that could be unpacked or decrypted after the evasion

tests.
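For reference, the byte-level Shannon entropy used in this kind of analysis can be computed as in the following Python sketch. It assumes that the entropy is taken over the byte values of the whole file, which the text above does not specify; the result lies between 0 (a single repeated byte) and 8 bits per byte (all byte values equally frequent).

```python
import math
from collections import Counter


def shannon_entropy(data):
    """Return the Shannon entropy of a byte string, in bits per byte."""
    if not data:
        return 0.0
    total = len(data)
    # Count how often each byte value occurs, then apply -sum(p * log2(p)).
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```

Values such as 6.58 and 7.11 are relatively close to the upper bound of 8, which is why they are read above as a hint that the samples carry packed or encrypted content.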

All these similarities could be due to the sharing of code on public projects such as Al-Khaser¹⁶ or smaller ones¹⁷. Some packers, such as the Trojka Crypter¹⁸, also propose the addition of an evasion phase before unpacking.

7 AV EVASION COUNTERMEASURE

It is possible to thwart such evasion techniques by instrumenting the Windows API to force malware to evade, as discussed by (Chen et al., 2008). To implement this instrumentation, we used Microsoft Detours, a framework that facilitates the hooking of Windows API functions.¹⁹

Microsoft Detours produces a new DLL file that is loaded into the malware at execution time using the AppInit registry²⁰. This method is efficient against software such as Al-Khaser but needs tests on real malware. We now describe the Windows API functions that we modify, discuss the countermeasure’s efficiency on real malware, and measure the overhead it induces.

16 https://github.com/LordNoteworthy/al-khaser

17 https://github.com/maikel233/X-HOOK-For-CSGO

18 MD5: c74b6ad8ca7b1dd810e9704c34d3e217

19 https://github.com/microsoft/Detours/wiki/OverviewInterception/

7.1 Windows API Instrumentations

We implemented 3 types of instrumentations on 8

functions. First, for IsDebuggerPresent and GetCur-

sorPos, we always return the same fixed value, re-

spectively True and a pointer to a cursor structure with

the coordinates set to 0.

Second, GetModuleHandle, RegOpenKeyEx, RegQueryValueEx, CreateFile, and GetFileAttributes access handles, registry keys, and values through names passed as parameters. We instrument these functions to return fake handles, values, and system error codes if the requested name is related to an analysis tool.

Finally, CreateToolhelp32Snapshot is modified to

create fake processes of popular analysis tools if they

are not already running on the machine.
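The three types of instrumentation can be illustrated with a small Python simulation. This is only a sketch of the logic: it wraps ordinary Python callables standing in for the Windows API functions and does not use Microsoft Detours. The names in `ANALYSIS_NAMES`, the `FAKE_HANDLE` value, and the helper functions are hypothetical and chosen for the example.

```python
# Hypothetical substrings treated as "related to an analysis tool".
ANALYSIS_NAMES = ("vbox", "vmware", "ollydbg")
FAKE_HANDLE = 0x1234  # arbitrary placeholder value for a fake handle


def is_debugger_present_hook():
    """Type 1: always return the same fixed value (a debugger is 'present')."""
    return True


def get_cursor_pos_hook():
    """Type 1: always return a cursor position with both coordinates set to 0."""
    return (0, 0)


def make_name_hook(original):
    """Type 2: return a fake handle when the requested name looks analysis-related."""
    def hooked(name):
        if any(tag in name.lower() for tag in ANALYSIS_NAMES):
            return FAKE_HANDLE
        # Any other name is forwarded unchanged to the original function.
        return original(name)
    return hooked


def make_snapshot_hook(original, fake_processes):
    """Type 3: add fake analysis-tool processes that are not already running."""
    def hooked():
        processes = list(original())
        running = {p.lower() for p in processes}
        processes += [p for p in fake_processes if p.lower() not in running]
        return processes
    return hooked
```

The second and third types forward every unrelated request to the original function, so that only queries about analysis tools see a modified answer.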

7.2 Countermeasure Efficiency

We test our countermeasure by comparing the behav-

ior of the malware we collected on images with and

without the countermeasure enabled. We use the same

Windows image created for the AVs experiments and

create a second image, similar but with the counter-

measure installed. We consider that we successfully

forced a malware to evade if it behaves differently on

the 2 images. All 18 malware are tested by hand using

Process Monitor from the SysInternals library.

21

For 14 malware, we observe on the image with-

out the countermeasure that they launch a subprocess

in the background. Besides, these malware start a

WerFault.exe process. With the countermeasure en-

abled, the parent process of these malware immedi-

ately stops, which could mean they evade. The results

detailed in Table 6 show that 2 malware crash in both

environments and 2 others keep the same behavior.

7.3 Countermeasure Overhead

We evaluate the overhead induced by the countermeasure on 4 programs typically found on Windows computers: VLC, Notepad, Firefox, and Microsoft Paint. We also measure Al-Khaser, a program performing a set of evasion techniques and generating a report on their results. We removed from Al-Khaser all the techniques relying on time observation.

We run each program 100 times, interact with it, and compute the mean of all the execution times with and without the countermeasure enabled, as shown in Table 7. The only significant overhead that we observe is on Al-Khaser with +14.62%, as this software heavily calls our instrumented functions, which we consider the worst-case scenario.

20 https://attack.mitre.org/techniques/T1103/

21 https://docs.microsoft.com/en-us/sysinternals/downloads/procmon

Table 7: Execution times (in seconds) with and without the countermeasure enabled.

| Software | Countermeasure | Mean | Standard Deviation |
|---|---|---|---|
| Al-Khaser | enabled | 15.24 | 1.38 |
| Al-Khaser | disabled | 13.29 | 1.36 |
| VLC | enabled | 0.8772 | 0.0609 |
| VLC | disabled | 0.8593 | 0.0677 |
| Notepad | enabled | 0.0615 | 0.0033 |
| Notepad | disabled | 0.0607 | 0.0028 |
| Firefox | enabled | 0.8005 | 0.0270 |
| Firefox | disabled | 0.8026 | 0.0259 |
| MS-Paint | enabled | 0.0581 | 0.0096 |
| MS-Paint | disabled | 0.0583 | 0.0106 |
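The relative overhead can be recomputed from the mean execution times of Table 7, as in the short Python sketch below. It assumes that the overhead is defined as the difference between the two means divided by the mean without the countermeasure. Because the table values are rounded, the figure recomputed for Al-Khaser (about 14.7%) differs slightly from the 14.62% reported above.

```python
# Mean execution times in seconds from Table 7:
# (with the countermeasure enabled, without the countermeasure).
MEANS = {
    "Al-Khaser": (15.24, 13.29),
    "VLC": (0.8772, 0.8593),
    "Notepad": (0.0615, 0.0607),
    "Firefox": (0.8005, 0.8026),
    "MS-Paint": (0.0581, 0.0583),
}


def relative_overhead(with_cm, without_cm):
    """Return the overhead of the countermeasure as a percentage of the baseline."""
    return (with_cm - without_cm) / without_cm * 100.0


OVERHEADS = {name: relative_overhead(*pair) for name, pair in MEANS.items()}

for name, value in OVERHEADS.items():
    print(f"{name}: {value:+.2f}%")
```

For the four legitimate programs, the differences between the means are smaller than the reported standard deviations, which is consistent with the statement that only Al-Khaser shows a significant overhead.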

8 LIMITATIONS AND FUTURE WORK

8.1 Nuky

When testing AVs against evasion techniques, we use a minimal number of basic evasion techniques, well known to attackers, and easily reproducible. Almost all the techniques we test are based on string comparisons and are not representative of all the possible ways to detect an AV. For now, Nuky represents the malware that any attacker could write by looking at tutorials, and not the elaborate malware created by large groups or states. We intend to extend Nuky’s functionalities to make it harder to detect.

We did not manage to test the evasion techniques targeting debuggers and VMs with our simple experiment. We can therefore only draw conclusions on the efficiency of the category of evasions targeting AVs, which contains for now 2 techniques. However, we believe that it is possible to test the other categories of evasion with more elaborate black-box testing.

8.2 Countermeasure

We chose to instrument the Windows API functions to block the same basic techniques used by Nuky and not more elaborate ones. Like a signature database, this countermeasure needs continuous updates to be able to thwart new evasive techniques. Of course, with this method, we can only block malware evading using the Windows API and not those using different means.

To avoid testing the countermeasure on malware it was designed for, we used a different set of strings to construct the dataset and to implement the countermeasure. However, this bias is still present and could be removed by using different techniques to collect malware and to evaluate the countermeasure.

We also need to measure the side-effects of this

countermeasure on malware, legitimate software, and

the operating system.

8.3 Dataset

There is no public repository properly providing malware with the details of the evasion techniques performed. For this reason, we use a small dataset that we created and analyzed by hand, but from which we cannot yet generalize our conclusions.

We aim at automatically crawling and flagging

samples to create a larger dataset and observe the evo-

lution of such evasive malware through time by letting

this experiment run in the long term.

Finally, after obtaining a fully formed dataset of

evasive malware, we intend to study how they evade.

Most of the tested malware just stop their execution,

but others could choose to behave differently while

still executing.

9 CONCLUSION

We found that AVs are still vulnerable to unknown

malware using basic encryption, compression, and attribute modification. Only 3 out of the 9 AVs we tested caught Nuky, and we suspect them of generating a lot of false positives. Other AVs may also still rely on simple signature detection.

Moreover, when an AV succeeds in catching our malware, we show that basic evasive techniques enable us to identify it precisely and change the malware’s behavior to avoid detection. We showed that Nuky could escape AVs by detecting AV artifacts, but more tests are needed for the debugger and VM artifacts.

A few samples of real malware implementing evasion techniques are collected using Yara rules. We found that a lot of these samples share evasive code that we also found in public code repositories.

Finally, we implemented a countermeasure that

seems to be able to thwart evasive malware by instru-

menting the Windows API. In the end, 14 out of the

18 malware tested showed a different behavior with

and without the countermeasure enabled. The highest

overhead measured is on Al-Khaser with an addition

of 14.62% to the execution time.

The code for Nuky’s evasion part and the Yara rule are available on request.

REFERENCES

Afianian, A., Niksefat, S., Sadeghiyan, B., and Baptiste,

D. (2020). Malware dynamic analysis evasion tech-

niques: A survey. CSUR Computing Surveys - ACM,

52(6).

Blackthorne, J., Bulazel, A., Fasano, A., Biernat, P., and

Yener, B. (2016). Avleak: Fingerprinting antivirus

emulators through black-box testing. In WOOT Work-

shop on Offensive Technologies, number 10, pages

91–105, Austin, TX, USA. USENIX Association.

Bulazel, A. and Yener, B. (2017). A survey on automated dynamic malware analysis evasion and counter-evasion: PC, mobile, and web. In ROOTS Reversing and Offensive-Oriented Trends Symposium, number 1, pages 1–21, Vienna, Austria. ACM.

Chen, P., Huygens, C., Desmet, L., and Joosen, W. (2016).

Advanced or not? A comparative study of the use of

anti-debugging and anti-vm techniques in generic and

targeted malware. In IFIP SEC International Infor-

mation Security and Privacy Conference, number 31,

pages 323–336, Ghent, Belgium. Springer.

Chen, X., Andersen, J., Mao, Z. M., Bailey, M., and

Nazario, J. (2008). Towards an understanding of anti-

virtualization and anti-debugging behavior in modern

malware. In DSN Dependable Systems and Networks,

number 38, pages 177–186, Anchorage, Alaska, USA.

IEEE Computer Society.

Dinaburg, A., Royal, P., Sharif, M. I., and Lee, W. (2008).

Ether: malware analysis via hardware virtualization

extensions. In CCS Conference on Computer and

Communications Security, number 15, pages 51–62,

Alexandria, Virginia, USA. ACM.

Garfinkel, T., Adams, K., Warfield, A., and Franklin, J.

(2007). Compatibility is not transparency: VMM

detection myths and realities. In HotOS Hot Topics

in Operating Systems, number 11, pages 30–36, San

Diego, California, USA. USENIX Association.

Kirat, D. and Vigna, G. (2015). Malgene: Automatic extrac-

tion of malware analysis evasion signature. In SIGSAC

Conference on Computer and Communications Secu-

rity, number 22, pages 769–780, Denver, Colorado,

USA. ACM.

Kirat, D., Vigna, G., and Kruegel, C. (2014). Barecloud:

Bare-metal analysis-based evasive malware detection.

In USENIX Security Symposium, number 23, pages

287–301, San Diego, California, USA. USENIX As-

sociation.

Koret, J. and Bachaalany, E. (2015). The Antivirus Hacker’s

Handbook. Number 1. Wiley Publishing.

Lindorfer, M., Kolbitsch, C., and Comparetti, P. M. (2011). Detecting environment-sensitive malware. In RAID Recent Advances in Intrusion Detection, number 14, pages 338–357, Menlo Park, California, USA. Springer.

Lita, C., Cosovan, D., and Gavrilut, D. (2018). Anti-

emulation trends in modern packers: a survey on the

evolution of anti-emulation techniques in UPA pack-

ers. Computer Virology and Hacking Techniques,

12(2).

Lyda, R. and Hamrock, J. (2007). Using entropy analysis

to find encrypted and packed malware. SP Security &

Privacy - IEEE, 5(2).

Miramirkhani, N., Appini, M. P., Nikiforakis, N., and Poly-

chronakis, M. (2017). Spotless sandboxes: Evading

malware analysis systems using wear-and-tear arti-

facts. In SP Symposium on Security and Privacy, num-

ber 38, pages 1009–1024, San Jose, California, USA.

IEEE Computer Society.

Naval, S., Laxmi, V., Gaur, M. S., Raja, S., Rajarajan,

M., and Conti, M. (2015). Environment–reactive

malware behavior: Detection and categorization. In

DPM/QASA/SETOP Data Privacy Management, Au-

tonomous Spontaneous Security, and Security Assur-

ance, number 3, pages 167–182, Wroclaw, Poland.

Springer.

Tan, J. W. J. and Yap, R. H. C. (2016). Detecting malware

through anti-analysis signals - A preliminary study. In

CANS Cryptology and Network Security, number 15,

pages 542–551, Milan, Italy. Springer.

Tanabe, R., Ueno, W., Ishii, K., Yoshioka, K., Matsumoto,

T., Kasama, T., Inoue, D., and Rossow, C. (2018).

Evasive malware via identifier implanting. In DIMVA

Detection of Intrusions and Malware, and Vulnerabil-

ity Assessment, number 15, pages 162–184, Saclay,

France. Springer.

Yokoyama, A., Ishii, K., Tanabe, R., Papa, Y., Yoshioka, K.,

Matsumoto, T., Kasama, T., Inoue, D., Brengel, M.,

Backes, M., and Rossow, C. (2016). Sandprint: Fin-

gerprinting malware sandboxes to provide intelligence

for sandbox evasion. In RAID Research in Attacks,

Intrusions, and Defenses, number 19, pages 165–187,

Evry, France. Springer.
