A Method for Learning Netytar: An Accessible Digital Musical Instrument

Nicola Davanzo and Federico Avanzini

University of Milan, Dept. of Computer Science, Via Celoria 18, 20133 Milano, Italy

Keywords:

ADMI, Accessibility, Musical Instrument, Learning.

Abstract:

Accessible Digital Musical Instruments (ADMIs) are attracting increasing interest within the scientific community, especially in the contexts of Sound and Music Computing and Human-Computer Interaction. Netytar, proposed in the past among these, is a software ADMI operated through the eyes using an eye tracker and an additional switch or sensor (e.g., a breath sensor). The instrument is dedicated to quadriplegic users: it belongs to the niche of gaze-operated musical instruments, and testing has shown it to be effective and functional. Although several other gaze-operated ADMIs are available on the market and in the literature, no formal method for studying music with them has yet been proposed. The present work introduces a simple study method based on a set of exercises. It can be useful for approaching musical performance with Netytar, and it is potentially generalizable to learning other similar instruments. The exercises are illustrated, discussed, and explained in terms of the improvement each is expected to bring. A simple musical notation is also introduced. At the end of a learning cycle, a user is expected to be able to perform simple melodies and to have a basis for learning new ones. In the future, the method will be tested with the target users.

1 INTRODUCTION

As demonstrated by the recently published works dedicated to the topic, Accessible Digital Musical Instruments (ADMIs) occupy a growing share of the literature. Several works (Larsen et al., 2016; Hornof, 2014; Frid, 2019) review the state-of-the-art instruments dedicated to users with various types of disabilities: physical, cognitive, and sensory. Among them, a significant portion (both in the literature and on the market) is dedicated to various types of physical impairment and related applications. Available interfaces range from rehabilitation purposes (Correa et al., 2009) to hemiplegic paralysis (Harrison and McPherson, 2017), quadriplegia (Jamboxx, nd), and extreme conditions such as locked-in syndrome (Vamvakousis and Ramirez, 2014, 2016), where the user is unable to control any muscle other than those that move the eyes. The recent work by Frid (2019) reports and categorizes a total of 83 musical interfaces, showing that 39.8% of them are dedicated to people with physical impairments. Within this portion are the so-called gaze-controlled musical instruments (Bailey et al., 2010; Refsgaard, nd; Morimoto et al., 2015; Vamvakousis and Ramirez, 2016), i.e., instruments operated by the eyes using an eye tracker. These exploit interaction channels other than the limbs and hands, which are used to play the vast majority of traditional musical instruments. They are therefore generally dedicated to users with conditions such as quadriplegic paralysis.

In 2018, Netytar (Davanzo et al., 2018) was proposed: it is a monophonic musical instrument operated through gaze, blinking and an additional switch or sensor (a breath sensor in its current version). In that work, Netytar was compared to a state-of-the-art instrument of the time, the EyeHarp (Vamvakousis and Ramirez, 2016). Preliminary tests showed that in some respects Netytar was slightly more precise and effective. Besides the differences in layout, this difference in performance can be explained by specific design choices, in particular the absence of smoothing filters on the gaze data, which avoids delays (more on this in Sec. 2).

Despite the abundance of ADMIs, and gaze oper-

ated instruments in particular, there is a general lack

of teaching methods for them in literature.

1

This

and numerous other factors may discourage their use

1

One notable exception is the MUSA project, which in-

volved teaching music to users with disabilities using the

EyeHarp. See https://www.upf.edu/web/musa (Accessed

on: 29/02/2020).

620

Davanzo, N. and Avanzini, F.

A Method for Lear ning Netytar: An Accessible Digital Musical Instrument.

DOI: 10.5220/0009816106200628

In Proceedings of the 12th Inter national Conference on Computer Supported Education (CSEDU 2020), pages 620-628

ISBN: 978-989-758-417-6

Copyright

c

2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

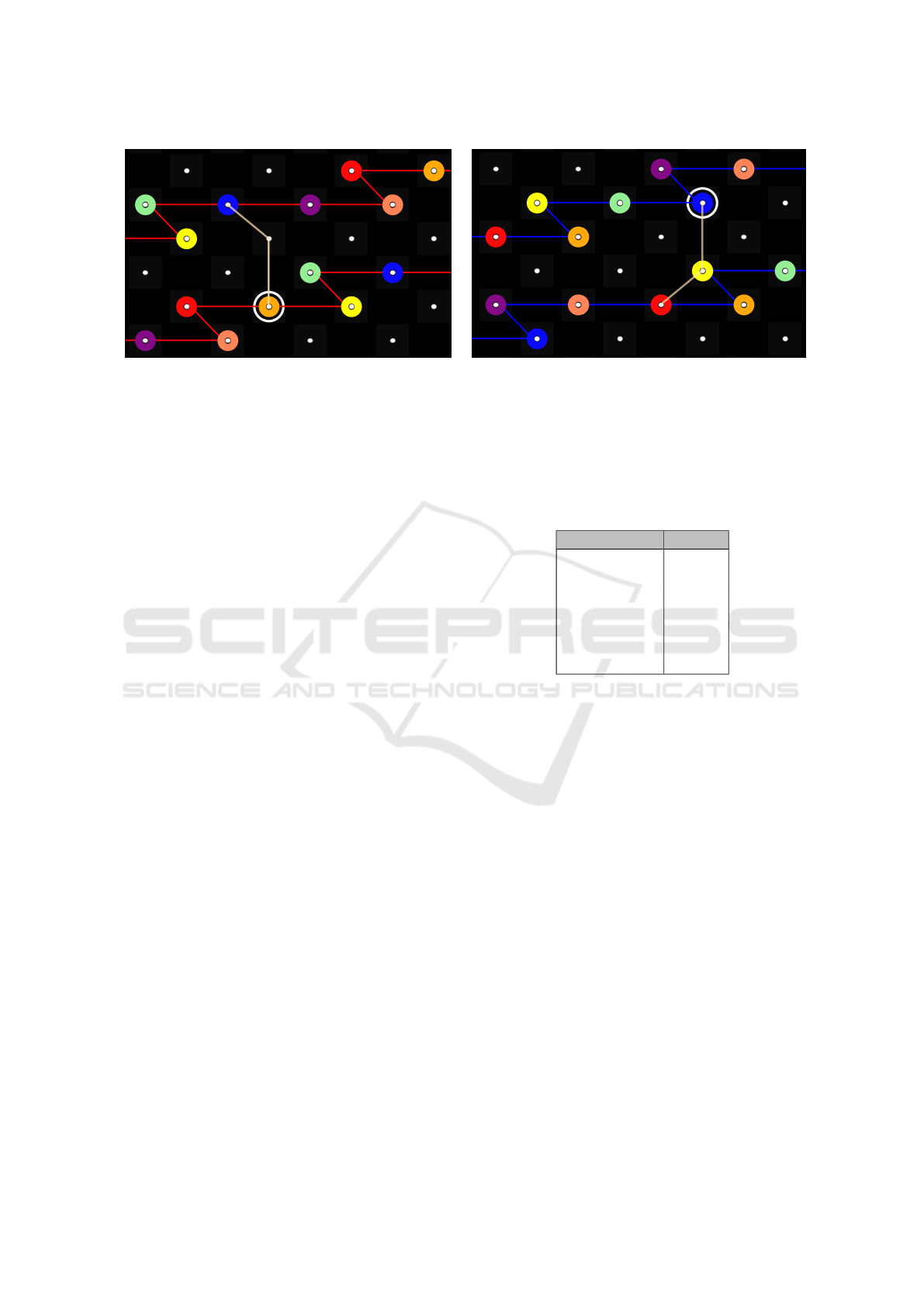

(a) (b)

Figure 1: Two examples of Netytar’s keyboard surface as it appears while running the software instrument. Keys appear as

circles. A white trace flashes and slowly disappears showing the notes played in the immediate past, while a white circle

surrounds the currently selected note. In Fig. 1a, a major scale is highlighted with red connectors, while in Fig. 1b a minor

scale is highlighted with blue connectors. Color code for keys is as indicated in Tab. 1.

both by private users and by centers for rehabilita-

tion or hospitalization with music teaching or music

therapy departments. As an example, as highlighted

by Marquez-Borbon and Martinez Avila (2018), the

lack of repertoires and communities dedicated to a

specific Digital Musical Instrument (DMI) in gen-

eral could negatively affect its diffusion. Ward et

al. (Ward et al., 2017) outline a group of guidelines

for the development of musical instruments dedicated

to Special Educational Needs (SEN) contexts, high-

lighting that technology is often overlooked, being

seen as too complex, useless, or “geeky”.

The purpose of this paper is to address the lack of training methods by introducing one for learning Netytar (described in Sec. 4), conceived and designed to cover some aspects of gaze-based musical interaction. It consists of a series of exercises dedicated to non-musicians who approach music for the first time using the instrument. The exercises are aimed at covering different aspects of a first experience with a musical instrument, both gaze-based and in a general sense. The method still needs to be validated through experimental observations, which will be the subject of future publications. Although the method is focused on Netytar, some sections (particularly Musical Calisthenics, Sec. 4.1) could easily be adapted to other gaze-based musical interfaces. The remainder of the paper is organized as follows: Sec. 2 reviews the main features of the instrument, Sec. 3 summarizes the aspects of gaze interaction relevant to musical performance, Sec. 4 describes the learning method, and Sec. 5 describes further possible developments to improve it.

2 NETYTAR

The original implementation of Netytar has been described elsewhere (Davanzo et al., 2018). Here we summarize its main features, as well as some improvements introduced in later implementations.

Table 1: Color code for keys on Netytar’s keyboard. Colors represent degrees of the selected scale (major or minor), not absolute note values.

| Scale degree | Color |
|---|---|
| 1st | Red |
| 2nd | Orange |
| 3rd | Yellow |
| 4th | Green |
| 5th | Blue |
| 6th | Purple |
| 7th | Peach |

As already mentioned, the musician interacts with Netytar through the eyes and a dynamic switch or controller (e.g., a breath sensor). Using the jargon of the DMI literature, the mapping between the physical actions performed by the user and the musical performance parameters can be described as follows: the gaze point controls note selection, moving over a virtual keyboard surface displayed on screen; blinks are used to play repeated notes (the same note played two or more times in a row) and to interact with some properties of the surface (e.g., to highlight different scales); breath controls note dynamics (e.g., intensity), in the same way as in an acoustic flute.
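The mapping above can be illustrated as a small event handler. This is a minimal sketch under stated assumptions, not Netytar's implementation: the class `GazeBreathMapper`, the `key_at` lookup callback, the breath range [0, 1], the activation threshold and the MIDI-style note events are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# (kind, MIDI note, velocity); "on" starts a note, "off" stops it.
Event = Tuple[str, int, int]


@dataclass
class GazeBreathMapper:
    """Hypothetical mapping of gaze, blinks and breath to note events.

    Assumptions (not taken from Netytar's code): breath arrives as a value
    in [0, 1]; a note sounds only while breath is at or above `breath_on`;
    `key_at(x, y)` returns the MIDI note of the key under the gaze point,
    or None when the gaze is between keys.
    """
    key_at: Callable[[float, float], Optional[int]]
    breath_on: float = 0.1  # hypothetical activation threshold
    selected: Optional[int] = None
    breath: float = 0.0
    events: List[Event] = field(default_factory=list)

    def _sounding(self) -> bool:
        return self.breath >= self.breath_on

    def _velocity(self) -> int:
        # Breath intensity sets the dynamics, scaled to MIDI velocity 1..127.
        return max(1, min(127, round(self.breath * 127)))

    def on_gaze(self, x: float, y: float) -> None:
        # Gaze selects the note; a new key takes effect immediately (no filtering).
        note = self.key_at(x, y)
        if note is None or note == self.selected:
            return
        if self._sounding() and self.selected is not None:
            self.events.append(("off", self.selected, 0))
        self.selected = note
        if self._sounding():
            self.events.append(("on", note, self._velocity()))

    def on_blink(self) -> None:
        # A blink re-attacks the current note, producing a repeated note.
        if self._sounding() and self.selected is not None:
            self.events.append(("off", self.selected, 0))
            self.events.append(("on", self.selected, self._velocity()))

    def on_breath(self, value: float) -> None:
        # Breath starts and stops the selected note; continuous intensity
        # updates while a note sounds are omitted from this sketch.
        was_sounding = self._sounding()
        self.breath = value
        if self.selected is None:
            return
        if self._sounding() and not was_sounding:
            self.events.append(("on", self.selected, self._velocity()))
        elif was_sounding and not self._sounding():
            self.events.append(("off", self.selected, 0))


# Usage: two hypothetical keys, C5 (72) left of x = 1 and D5 (74) to its right.
mapper = GazeBreathMapper(key_at=lambda x, y: 72 if x < 1 else 74)
mapper.on_gaze(0.5, 0.0)  # select C5 (silent: no breath yet)
mapper.on_breath(1.0)     # start C5
mapper.on_gaze(1.5, 0.0)  # move to D5
mapper.on_blink()         # repeat D5
mapper.on_breath(0.0)     # stop
print(mapper.events)
```

Keeping note selection (gaze), re-attack (blink) and dynamics (breath) in separate handlers mirrors the separation of channels described in the text, which is also what the later exercises train independently.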

Netytar’s layout was conceived to avoid interaction problems common to other interfaces based on eye tracking. A major one is a consequence of the so-called “Midas touch” issue, first described in (Jacob, 1995): even though saccadic movements are very fast, keys crossed by the line traced by such movements may be activated involuntarily. Example screenshots of Netytar’s surface are provided in Fig. 1.

Figure 2: Vectors indicating possible ways to trace intervals on Netytar’s keyboard. Numbers indicate semitones from the starting note (indicated with an R). Colors follow the categories defined in Sec. 4.2, exercise T1: the adjacent group is colored red, distant easy green, distant hard blue, and obstructed purple.

As a consequence of this layout, the following characteristics emerge, which are specifically addressed by the study method proposed in Sec. 4:

• Isomorphism of the Keyboard Layout. Netytar’s layout has been discussed extensively in (Davanzo et al., 2018). Its virtual keyboard is characterized by keys of equal shape and size, which obey the following property: a vector connecting two keys corresponds to a precise and constant musical interval, regardless of where the vector is placed on the interface (i.e., regardless of transposition). This should make it easy to transpose musical sequences to different keys. Moreover, it could make the relationships between notes clearer and more immediate, thanks to geometrical consistency. Among the possible isomorphic layouts, this one was chosen to avoid, as much as possible, crossing intermediate keys for the most common musical intervals (see Fig. 2), offering a partial solution to the aforementioned “Midas touch” problem. Furthermore, sequences of notes can be described as paths or geometric shapes made of broken lines, potentially making memorization easier. Some studies investigate in greater detail the learnability of various isomorphic layouts (Maupin et al., 2011), as well as the differences between isomorphic and non-isomorphic layouts (Stanford et al., 2018). There is evidence that isomorphic layouts benefit musicians, but results among non-musicians are mixed, leaving room for further experimentation. In the latest version of Netytar², keys are arranged in accordance with the so-called SMARC (Spatial-Musical Association of Response Codes) effect: a layout in which the highest notes are found at the top right and the lowest notes at the bottom left should be more natural and immediate (Rusconi et al., 2006). Figures 2 and 3 provide a graphical explanation in terms of intervals and absolute notes.

² https://github.com/Neeqstock/Netytar. Accessed on: 29/02/2020.

• Immediate Reaction. Some gaze-based interfaces (e.g., EyeHarp³) employ fixation-discrimination algorithms and other kinds of spatial filters to alleviate problems such as movement inaccuracy, the aforementioned Midas touch issue, noise introduced by the eye tracker sensor, and others. Netytar does not employ filters. As a consequence, feedback latency is reduced, which can make the instrument more reactive but also more challenging to learn.

• Colored Keys. While many musical interfaces use differently shaped keys (e.g., the piano) or spatialization (e.g., EyeHarp) to help note localization, Netytar explicitly relies on highly contrasting colors to indicate notes on the keyboard. Consequently, it is possible to look for the next note “out of the corner of the eye”, without having to reach it with the gaze (taking advantage of color sensitivity in the areas outside the fovea (Lou et al., 2012)). The color code employed by Netytar is provided in Table 1.

• Auto-scrolling Capability. Netytar features an auto-scrolling algorithm which smoothly moves the surface along both the vertical and horizontal axes, so that the point under the gaze is continuously scrolled toward the center of the screen. The purpose of this feature is to extend the keyboard beyond the size of the screen (allowing for a potentially infinite surface). It exploits the smooth pursuit movements that the eyes can perform, described in Sec. 3; such a feature would be difficult or impossible to introduce in a finger-operated, touch screen based instrument.
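The isomorphism property described in the first item above can be sketched with a linear mapping from key coordinates to pitch. The step sizes below (2 semitones to the right, 5 semitones upward), the rectangular grid of (column, row) coordinates and the function names are hypothetical values chosen for illustration, not Netytar's actual layout; any mapping of this linear form is isomorphic.

```python
from itertools import product
from typing import List, Tuple

# Hypothetical step sizes, for illustration only: they are NOT claimed to be
# Netytar's real layout (see Fig. 3 for the actual arrangement of notes).
RIGHT_STEP = 2  # semitones gained by moving one key to the right (assumed)
UP_STEP = 5     # semitones gained by moving one key up (assumed)
BASE_NOTE = 72  # MIDI note of the key at (0, 0); 72 is C5 when middle C is C4

Key = Tuple[int, int]  # (column, row) on a hypothetical rectangular key grid


def pitch_at(key: Key) -> int:
    """MIDI note of a key: a linear function of its grid position."""
    col, row = key
    return BASE_NOTE + RIGHT_STEP * col + UP_STEP * row


def interval_of(start: Key, vector: Key) -> int:
    """Semitones spanned by moving along `vector` from the key `start`."""
    end = (start[0] + vector[0], start[1] + vector[1])
    return pitch_at(end) - pitch_at(start)


def transpose(shape: List[Key], offset: Key) -> List[Key]:
    """Transposing a melodic shape is a pure translation of its keys."""
    return [(c + offset[0], r + offset[1]) for c, r in shape]


# The same vector spans the same interval from every starting key.
area = range(-3, 4)
print({interval_of(s, (1, 1)) for s in product(area, area)})  # {7}

# A shape keeps its intervals after translation, so only the key changes.
shape = [(0, 0), (1, 1), (2, 0)]
moved = transpose(shape, (1, 0))
print([pitch_at(k) for k in shape], [pitch_at(k) for k in moved])
```

With both step sizes positive, pitch rises toward the top right, in the direction prescribed by the SMARC-based arrangement; a real layout would plug in its own step sizes and key geometry.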

3 GAZE INTERACTION IN MUSIC

This section focuses on the most important aspect of

Netytar: gaze-based interaction.

While breath is a widely explored interaction channel in aerophone instruments (but also in other non-musical applications related to accessibility (Jones et al., 2008; Mougharbel et al., 2013)), gaze-based interaction is still rather young. In everyday life the eyes are a passive organ. Playing music or, more generally, interacting with a computer through the eyes implies using them as an active input system: something we are not used to (Zhang and MacKenzie, 2007). Eyes move in a very peculiar way, which differs from finger movements. A basic understanding of these movements is needed to justify the exercises developed in Sec. 4. For the purposes of this work, a summary of the classification proposed by Hornof (2014) may suffice (more detailed discussions can be found in dedicated publications).

³ http://theeyeharp.org/eyeharp-download/, “Complete Manual (PDF)”. Accessed on: 29/02/2020.

CSME 2020 - Special Session on Computer Supported Music Education

Figure 3: Netytar’s keyboard layout, indicating the position of absolute notes in a section ranging from C5 to E6. Keys assigned to notes of the C major scale are indicated in red, while black keys denote accidentals.

The gaze point is the point in space (or, in software applications, the point on the screen) at which a person is looking. Eyes generally move through saccades, which are jerky movements lasting about 30 ms, during which the gaze point jumps from one discrete point to another. Saccades are interspersed with fixations, during which the gaze point remains almost fixed on one position. A fixation usually lasts from 100 ms to 400 ms. Moreover, the eye is unable to perform fluid movements unless it has a target to lock on to: this is called smooth pursuit, a fluid movement that follows a moving target. Blinks are sometimes not recommended as an interaction channel because of their potentially involuntary nature (Jacob, 1995), but they are employed in some applications, such as Netytar. Finally, even during a fixation the eyes are not perfectly still, but make small random movements within 0.1° of visual angle, called jitter. Among these ocular movements, Netytar mostly exploits saccades, fixations and smooth pursuits (the latter especially in relation to the auto-scrolling capability mentioned in Sec. 2). These movements can be performed voluntarily, but many can also occur involuntarily and unconsciously. Involuntary saccades, for example, occur regularly even during fixations (Purves et al., 2001). Such movements can interfere with musical performance, which requires very precise control.
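The auto-scrolling capability mentioned above relies on the eye following a moving target smoothly. One possible way to obtain such scrolling is sketched below, assuming a per-frame update in which the surface offset moves a fixed fraction (`gain`) of the distance between the gaze point and the screen center; the gain value, the screen size and the function names are hypothetical, not taken from Netytar.

```python
from typing import Tuple

Vec = Tuple[float, float]


def scroll_step(offset: Vec, gaze: Vec, center: Vec, gain: float = 0.05) -> Vec:
    """One frame of a hypothetical auto-scroll update.

    The surface is shifted by a fraction `gain` of the vector from the gaze
    point to the screen center, so whatever lies under the gaze drifts
    smoothly toward the center.
    """
    return (offset[0] + gain * (center[0] - gaze[0]),
            offset[1] + gain * (center[1] - gaze[1]))


def simulate(frames: int, surface_point: Vec, center: Vec, gain: float = 0.05) -> Vec:
    """Screen position of one surface point after `frames` updates,
    assuming ideal smooth pursuit (the gaze stays locked on that point)."""
    offset = (0.0, 0.0)
    for _ in range(frames):
        gaze = (surface_point[0] + offset[0], surface_point[1] + offset[1])
        offset = scroll_step(offset, gaze, center, gain)
    return (surface_point[0] + offset[0], surface_point[1] + offset[1])


# A key near the top-right corner of a hypothetical 1920x1080 screen converges
# to the center (960, 540); its distance shrinks by (1 - gain) every frame.
print(simulate(200, (1800.0, 200.0), (960.0, 540.0)))
```

Because the displacement per frame is proportional to the remaining distance, the gazed key decelerates as it approaches the center, which gives the eye a smoothly moving target to pursue.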

There is evidence of gaze anticipating physical movements (Gesierich et al., 2008) and interactions in virtual environments (Badler and Canossa, 2015): a behavior that the performer must learn to avoid during gaze-controlled musical performance. Such anticipations cause a note to be played ahead of the prescribed tempo, unless filters are introduced that compensate for them at the cost of added latency. This behavior was also noticed in (Vamvakousis and Ramirez, 2016, Sec. 2.2.2, ‘Melody layer evaluation’). Netytar does not use filters, in order to improve precision at higher tempos (Davanzo et al., 2018), and therefore provides no aid against anticipations.
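The latency cost of filtering can be made concrete with a toy example. The sketch below uses a causal moving average purely as a generic example of a smoothing filter (it is not the EyeHarp's actual filter), applied to an idealized saccade modelled as a step in the horizontal gaze coordinate; the 60 Hz sampling rate, the 10-sample window and the midpoint rule for registering a key change are assumptions.

```python
from typing import List


def moving_average(samples: List[float], window: int) -> List[float]:
    """Causal moving average: each output averages the last `window` inputs."""
    out = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def crossing_delay_ms(signal: List[float], threshold: float, rate_hz: float) -> float:
    """Time (ms) from the first sample until `signal` first reaches `threshold`."""
    for i, value in enumerate(signal):
        if value >= threshold:
            return 1000.0 * i / rate_hz
    raise ValueError("threshold never reached")


RATE_HZ = 60.0  # assumed eye-tracker sampling rate
# Idealized saccade: gaze jumps from key A (x = 0) to key B (x = 100) at sample 10.
gaze_x = [0.0] * 10 + [100.0] * 30
# A key change is assumed to register when x passes the midpoint between keys.
raw = crossing_delay_ms(gaze_x, 50.0, RATE_HZ)
smoothed = crossing_delay_ms(moving_average(gaze_x, 10), 50.0, RATE_HZ)
print(f"raw: {raw:.1f} ms, smoothed: {smoothed:.1f} ms, added: {smoothed - raw:.1f} ms")
```

Under these assumptions the filtered signal reaches the midpoint four samples later, i.e., about 67 ms after the unfiltered one: a delay that a note-selection system would add to every note change.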

The rhythmic capabilities of the eye are limited. Hornof (2014) reports an eye-tapping experiment showing that the eyes are unable to deliberately perform more than 4 saccades per second (i.e., one saccade every 250 ms on average). According to the author, this seems to be an upper limit that cannot be overcome, not even through training. In systems like Netytar, where notes are selected by gaze pointing, this translates into a maximum note-changing speed. However, we hypothesize, based on direct observations, that trained performers could maintain rhythms with greater precision. The next section discusses the proposed study method for reaching this and other goals while learning music with Netytar.
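The saccade-rate limit translates directly into a tempo ceiling. A back-of-the-envelope sketch, assuming that every note change requires exactly one saccade, that the beat is a quarter note, and taking the limit of 4 saccades per second reported above (the function name is ours):

```python
MAX_SACCADES_PER_S = 4.0  # upper limit reported by Hornof (2014)


def max_bpm(note_changes_per_beat: float, max_rate: float = MAX_SACCADES_PER_S) -> float:
    """Highest metronome tempo (beats per minute) at which every note change
    can still be made with one saccade, assuming one saccade per note change."""
    return 60.0 * max_rate / note_changes_per_beat


for label, per_beat in [("quarter notes", 1), ("eighth notes", 2), ("sixteenth notes", 4)]:
    print(f"{label}: at most {max_bpm(per_beat):.0f} BPM")
```

So a melody of continuous eighth-note changes would be capped at about 120 BPM under these assumptions. Repeated notes are played with blinks rather than saccades, so only actual note changes count toward this limit.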

4 LEARNING METHOD

The main objective of the proposed method is to provide users with a basic set of exercises that should enable them to explore the instrument by themselves and deepen their practice, having gained a certain familiarity with the required movements and an understanding of the rationale behind the interface. The exercises are designed for simplicity and are given in order of difficulty: some are preparatory to others and should be performed in the prescribed order, at most mixing exercises from different categories and going back to previous ones from session to session. It is assumed that at the end of a certain number of iterations, the user will be able to perform simple melodies, as well as to learn new ones independently. Broader aspects of music theory are not addressed in this paper, since adequate literature already exists. The focus is instead on performance aspects and on the use of the instrument. Nonetheless, it may be useful to combine the proposed exercises with music theory drawn from other sources.

The method consists of three categories of exercises, which correspond to three subsections:

• Musical Calisthenics: exercises designed to train the motor skills of the eyes and breath in view of the required performance;

• Musical Techniques: exercises performed directly on the instrument;

• Musical Practice: where the performer applies the acquired skills for musical purposes. This part is particularly important to keep the student motivated.

These three categories are discussed in detail in the next subsections. For each exercise, a sentence is provided describing its aim and the expected improvement.

4.1 Musical Calisthenics

Eyes are governed by muscles. Constant training could improve or stabilize their rhythmic performance, as well as reduce fatigue.

People with physical disabilities may have reduced coordination in the residual movement channels, as well as a lack of rhythmic ability. This part of the training therefore consists of a series of exercises aimed at: increasing awareness of eye movements, noticing and bringing to consciousness involuntary movements, jitter and other peculiarities; stretching the eye muscles, so as to accustom the eyes to performing large movements while keeping the head still, without suffering fatigue or pain; and accustoming the eyes to making saccadic movements rhythmically, while perceiving muscle tension. Similar exercises are given for breath as well, which is the second interaction channel employed by Netytar. The exercises are listed below, divided into those dedicated to the eyes (prefix CE-) and those dedicated to the breath (prefix CB-):

(CE1) Arrhythmic Stretching and Smooth Pursuit. An assistant positions themselves in front of the student, holding two colored objects. The student is instructed to move the gaze from one object to the other with saccadic movements, at a moderate pace, while keeping the head still. The objects are initially placed close to each other, and their distance is slowly increased (horizontally, then vertically in the subsequent iteration) until the limits of the visual field are reached. Then, the distance is gradually decreased again. The exercise must be interrupted if the user experiences excessive discomfort or pain, especially near the limits of the visual field. A short session may be added in which the student is asked to concentrate on following, as precisely as possible, an object moving smoothly in front of them (bringing the smooth pursuit movement to consciousness). Aim: an improvement in eye mobility is expected after this exercise, as well as reduced fatigue when making long saccades.

(CE2) Rhythmic Blinking. Using a metronome, starting from slow tempos and then repeating the exercise at faster ones, the student is asked to blink both eyes in time, at every tick. Rhythmic dictation exercises can be added to present more complex rhythms, possibly in combination with simple notions of rhythm theory. Aim: this exercise is designed to familiarize the student with the concept of rhythm, before moving on to the more difficult eye-tapping exercises.

(CE3) Rhythmic Eye-tapping. An assistant positions themselves in front of the student as in CE1. The student performs CE1 in time with a metronome (one saccade per tick). The assistant can also give feedback on timing accuracy through direct observation. This exercise is potentially more difficult than CE2, given the anticipatory nature of gaze movements discussed in Sec. 3. Since, when playing Netytar, the new note sounds exactly at the end of the saccadic movement, the student must become accustomed to this characteristic, as well as to perceiving objects outside the fovea before even performing the movement. Aim: this exercise is expected to reduce anticipatory movements.
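Where an eye tracker is available, the timing feedback given by the assistant in CE3 could also be computed. A minimal sketch, assuming saccade-end timestamps (in seconds) have already been extracted from the gaze data and that each one belongs to the nearest metronome tick; the function name and this pairing rule are hypothetical:

```python
from statistics import mean
from typing import List


def timing_offsets(onsets_s: List[float], bpm: float, first_tick_s: float = 0.0) -> List[float]:
    """Signed offset in ms of each onset from its nearest metronome tick.

    Negative values mean the saccade ended before the tick (anticipation);
    positive values mean it ended late.
    """
    period = 60.0 / bpm
    offsets = []
    for t in onsets_s:
        k = round((t - first_tick_s) / period)  # index of the nearest tick
        offsets.append(1000.0 * (t - (first_tick_s + k * period)))
    return offsets


# Hypothetical onsets at 60 BPM (one tick per second).
offs = timing_offsets([0.95, 2.02, 2.90, 3.97], bpm=60)
print([round(o) for o in offs], round(mean(offs), 1))
```

A consistently negative mean offset would indicate the anticipatory tendency discussed in Sec. 3. The nearest-tick rule only works while errors stay below half a beat, which is reasonable at the slow tempos used in this exercise.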

(CE4) Rhythmic Fixation. The student is asked to perform CE3, but instead of performing a saccade at each tick, they perform one saccade every four ticks, concentrating on keeping the fixation on the object as stable as possible between saccades. Aim: this exercise aims to bring to a conscious level the involuntary movements of the eye, which can prevent a stable voluntary fixation. An improvement in precision is hence expected.

(CE5) Rhythmic Color Tapping. Several objects of different (possibly highly contrasting) colors are placed in front of the student. A sequence is established a priori (e.g., “red, yellow, blue, green”). The student is then asked to perform timed eye-tapping (as in CE3) by fixating on the objects in turn, cycling through the predetermined sequence. After a few cycles, the student is asked to close their eyes: the objects are repositioned randomly, then the student repeats the exercise. Aim: this exercise could be useful to strengthen the ability to find objects outside the fovea, taking advantage of the peripheral color sensitivity discussed in Sec. 2.


(CE6) Rhythmic Mixture. This is a variant of CE5 (i.e., with two or more objects) in which the student performs a mixed sequence of eye-taps (corresponding to note changes), blinks (corresponding to repeated notes) and fixations (corresponding to held notes), timed by metronome ticks. Possible repeated sequences could be, for example: tap, blink, tap, blink... or tap, fix, blink, fix, tap, fix, blink, fix... By introducing a simple symbolic notation, more complex sequences can be written down, to be read and played in real time with increasing difficulty (as happens with solfeggio in traditional music education contexts). Aim: this exercise is aimed at strengthening the independence between saccadic movements (used to select a new note) and blinking (used to play a repeated note).
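The symbolic notation suggested for CE6 is not specified further. Purely as an illustration, one hypothetical encoding uses one letter per metronome tick (T for a tap, B for a blink, F for holding the fixation) and expands a repeating pattern into a per-tick schedule that an assistant or a program could read out:

```python
from typing import List

# Hypothetical one-letter symbols, one per metronome tick.
ACTIONS = {
    "T": "tap (saccade: new note)",
    "B": "blink (repeated note)",
    "F": "fix (hold note)",
}


def expand(pattern: str, ticks: int) -> List[str]:
    """Repeat a space-separated pattern such as 'T F B F' over `ticks` ticks."""
    symbols = pattern.split()
    if not symbols or any(s not in ACTIONS for s in symbols):
        raise ValueError(f"invalid pattern: {pattern!r}")
    return [ACTIONS[symbols[i % len(symbols)]] for i in range(ticks)]


# The second example sequence from CE6, read out over six ticks.
for tick, action in enumerate(expand("T F B F", 6), start=1):
    print(tick, action)
```

A single character per tick keeps the notation readable at a glance, in the spirit of solfeggio reading exercises; richer rhythms would need additional symbols.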

(CB1) Stabilizing Breath. The student is asked to blow into the breath sensor’s mouthpiece with an intensity as constant and stable as possible for a few seconds. In subsequent iterations, the target breath intensity is varied. Aim: this exercise should improve breath stabilization.
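If the breath sensor readings are logged, the stability targeted by CB1 can be quantified. A minimal sketch, assuming readings normalized to [0, 1] and sampled at a fixed rate; using the coefficient of variation (standard deviation divided by mean) as the stability measure is an illustrative choice, not part of the original method:

```python
from statistics import mean, pstdev
from typing import Dict, List


def breath_stability(readings: List[float]) -> Dict[str, float]:
    """Summarize one CB1 trial: mean intensity and relative fluctuation (cv)."""
    level = mean(readings)
    if level <= 0:
        raise ValueError("no breath detected")
    return {"mean": level, "cv": pstdev(readings) / level}


# Two hypothetical trials at the same average intensity.
steady = [0.50, 0.52, 0.49, 0.51, 0.50, 0.48]
shaky = [0.30, 0.65, 0.42, 0.70, 0.35, 0.58]
print(breath_stability(steady)["cv"] < breath_stability(shaky)["cv"])
```

Dividing by the mean makes trials at different target intensities comparable, which matters because the target level is varied across iterations.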

(CB2) Breath Crescendo. The student is asked to perform a “crescendo”, i.e., a continuous increase of intensity up to a peak, followed by a gradual decrease to a resting position, all in the smoothest possible way. This should be performed at a different speed at each iteration. Aim: this exercise aims to strengthen control over changes in intensity.

(CB3) Breath Tapping. Once the metronome is set, the student blows with constant intensity for a predetermined number of ticks, then stops for the same number of ticks, repeating the exercise in a continuous cycle. An example would be: two ticks blowing, two ticks pause, two ticks blowing, two ticks pause, etc. Aim: this exercise should improve rhythmic breath control.

(CB4) Breath Tapping with Short Bursts. Again with a metronome, the student emits short breath impulses at each tick (at slow tempos) or separated by a variable number of ticks established a priori (at faster tempos). Aim: this exercise could be useful to gain confidence with the timed release of breath, as well as to strengthen the required muscles (e.g., the diaphragm).

4.2 Musical Techniques

Once the rhythmic control of the eyes has been strengthened with the exercises in the previous section, the next set of exercises should be performed directly on Netytar’s interface. These aim to transfer the acquired motor skills to simple technical musical sequences, which are preparatory to melody performance. Exercises in this category are denoted by the prefix T-.

(T1) Interval Tracing. When playing Netytar, the difficulty of performing different musical intervals while avoiding the activation of intermediate keys is uneven: for some intervals, distances between keys are large and paths narrow (sometimes obstructed). With reference to the chosen isomorphic layout, intervals can be roughly divided into 4 difficulty groups: adjacent, i.e., in the immediate vicinity of the key (1, 2, 3 and 4 semitones); distant easy, i.e., not adjacent but not obstructed by other keys, and therefore rather simple to perform (5 and 7 semitones, corresponding to the perfect 4th and 5th); distant hard, i.e., described by unobstructed but narrow, distant or difficult paths (6, 10 and 11 semitones); obstructed, i.e., described by paths obstructed by other keys (8, 9 and 12 semitones), which are nonetheless playable through a rapid saccadic movement or a breath interruption. These 4 groups are shown in Fig. 2. Having established this classification, the proposed exercise consists of performing intervals from the adjacent and distant easy groups, in both directions, in turn and repeatedly. Notes can be played as quarter notes with a metronome. A variant can be introduced by performing a repeated note, with a blink, between each note change. Aim: this exercise should strengthen the association between geometric movements on the keyboard and the corresponding musical intervals, in addition to improving confidence with the key layout.
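The four difficulty groups above can be encoded directly as a lookup table, for instance to generate T1 drills or to screen melodies. A minimal sketch: the groups are copied from the text, while the function name and the MIDI note numbering are our assumptions.

```python
# Difficulty groups from exercise T1 (interval sizes in semitones).
DIFFICULTY = {
    "adjacent": {1, 2, 3, 4},
    "distant easy": {5, 7},
    "distant hard": {6, 10, 11},
    "obstructed": {8, 9, 12},
}


def interval_group(a: int, b: int) -> str:
    """Difficulty group of the interval between MIDI notes a and b (either direction)."""
    size = abs(b - a)
    for group, sizes in DIFFICULTY.items():
        if size in sizes:
            return group
    # Unisons (played by blinking) and intervals wider than an octave fall
    # outside the T1 classification.
    return "not classified"


# T1 practises only the first two groups: list them, starting from C5 (MIDI 72).
for semitones in range(1, 13):
    group = interval_group(72, 72 + semitones)
    if group in ("adjacent", "distant easy"):
        print(semitones, group)
```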

(T2) Scales Tracing. Major and minor diatonic scales are performed using a subset of the adjacent group described in T1. Once T1 has been practiced, it should therefore not be difficult for the student to perform this exercise: the major and minor scales are played in ascending and descending directions with a metronome, one note per tick. Repeated notes can be introduced as indicated for T1. As a variant, it could also be useful to introduce major and minor pentatonic scales. Aim: this exercise should increase the performer’s knowledge of the keyboard and its melodic capabilities, and improve playing precision.
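The statement that these scales use only adjacent intervals can be checked from their step patterns in semitones (standard music theory; the minor scale is assumed here to be the natural minor). A short sketch, with MIDI note numbers and function names chosen for illustration:

```python
from typing import Dict, List

# Step patterns in semitones (natural minor assumed for "minor").
SCALE_STEPS: Dict[str, List[int]] = {
    "major": [2, 2, 1, 2, 2, 2, 1],
    "natural minor": [2, 1, 2, 2, 1, 2, 2],
    "major pentatonic": [2, 2, 3, 2, 3],
    "minor pentatonic": [3, 2, 2, 3, 2],
}
ADJACENT = {1, 2, 3, 4}  # adjacent group from exercise T1


def scale_notes(root: int, steps: List[int]) -> List[int]:
    """Ascending one-octave scale as MIDI notes, with the root repeated on top."""
    notes = [root]
    for step in steps:
        notes.append(notes[-1] + step)
    return notes


for name, steps in SCALE_STEPS.items():
    assert sum(steps) == 12, name  # every pattern spans exactly one octave
    print(name, scale_notes(72, steps), all(s in ADJACENT for s in steps))
```

Every step falls in the adjacent group, including the 3-semitone steps of the pentatonic variants, which is why T2 can follow directly from T1.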

(T3) Arpeggio Tracing. In this exercise, the stu-

dent plays various arpeggios using a metronome, one

note per tick. Although it is advisable to start from

A Method for Learning Netytar: An Accessible Digital Musical Instrument

625

1

2

4

7

5

3

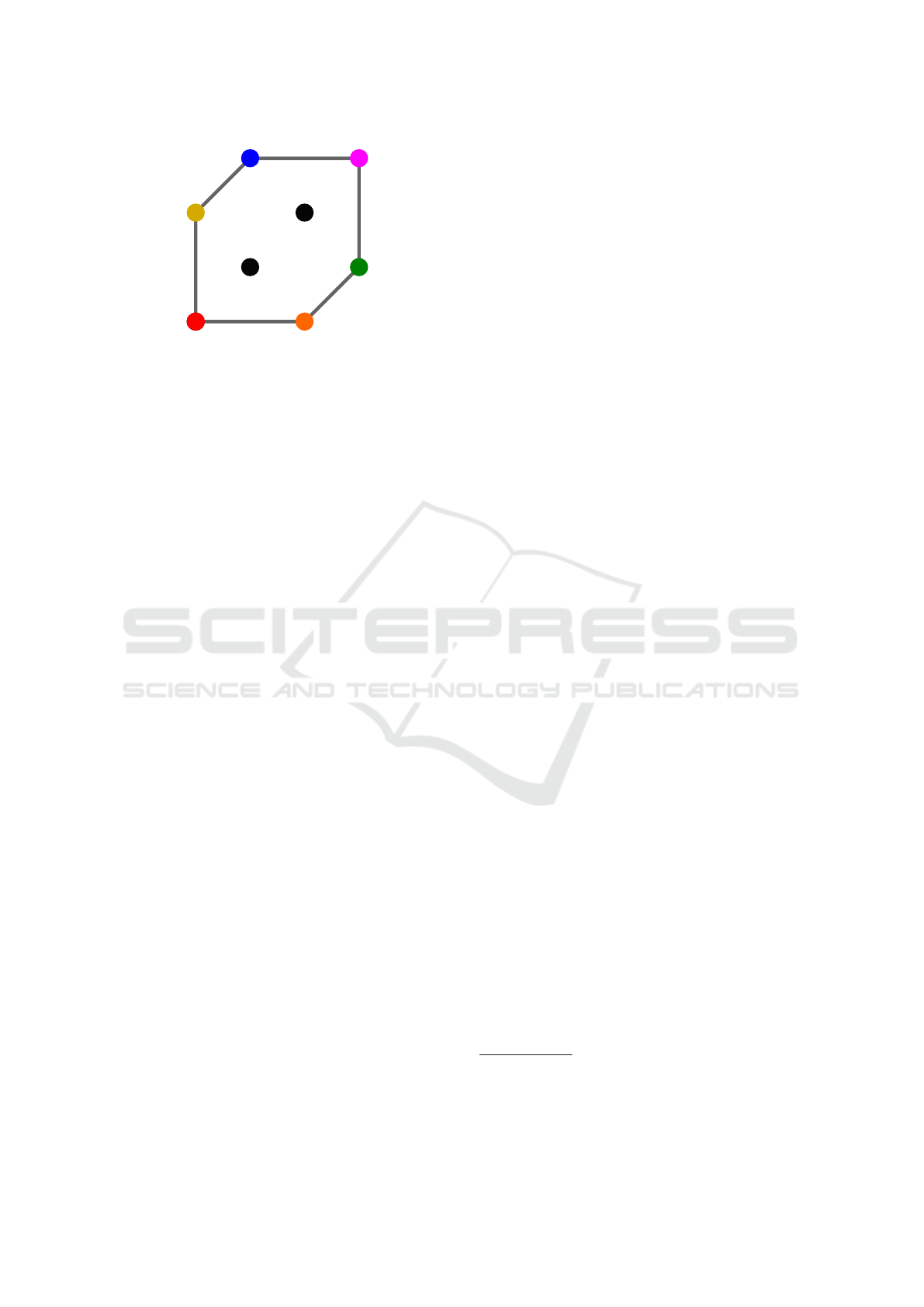

Figure 4: A possible closed shape for exercise T4 in

Sec. 4.2. Color code for keys is as in Tab. 1.

simple major and minor arpeggios with single tri-

ads, major or dominant 7th arpeggios could be intro-

duced. These arpeggios trace very short and simple

paths on Netytar’s virtual keyboard, and should be re-

peated transposing them to other keys, so that the user

becomes familiar with its isomorphic properties (de-

scribed in Sec. 2) and the concept of transposition.

Aim: the expected improvement given by this exer-

cise is comparable to the previous, with respect to

arpeggios.

(T4) Complex Shape Tracing. This exercise consists of defining arbitrary shapes and tracing them with the gaze on Netytar’s keyboard, playing the notes along with the metronome. Shapes can be open (to be performed in an ascending or descending direction) or closed (to be performed both clockwise and counter-clockwise). Examples of such shapes are a complex, multiple-octave chord arpeggio, or the closed shape formed by the 1st, 3rd, 5th, 6th, 4th and 2nd degrees of the major scale (as illustrated in Fig. 4). The student could be encouraged to invent new shapes, perform them and “test” them. Aim: in addition to improving precision, this exercise should introduce the performer to keyboard exploration.

(T5) Shapes with Returns. Many study methods for traditional musical instruments involve “repeated pattern” exercises. An example is the following sequence built on the major scale: C D E, D E F, E F G, F G A, G A B, A B C (to be played in both ascending and descending directions). Aim: this exercise could be preparatory to performing less linear and more complex phrases.
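Such repeated patterns are easy to generate for any scale, which may help a teacher prepare variants. A minimal sketch; the function name is ours, and reading “descending” as the same procedure applied to the reversed scale is an assumption, since the text does not specify the descending form:

```python
from typing import List


def repeated_pattern(scale: List[str], group: int = 3) -> List[List[str]]:
    """Overlapping groups of `group` consecutive scale notes, shifted by one step."""
    return [scale[i:i + group] for i in range(len(scale) - group + 1)]


C_MAJOR = ["C", "D", "E", "F", "G", "A", "B", "C"]
ascending = repeated_pattern(C_MAJOR)
# Assumed descending version: the same procedure on the reversed scale.
descending = repeated_pattern(C_MAJOR[::-1])
print(", ".join(" ".join(g) for g in ascending))
print(", ".join(" ".join(g) for g in descending))
```

The first line reproduces the sequence given above; changing `group` yields longer returns, e.g. four-note groups.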

(T6) Complex Rhythms. All the previous exercises are revisited, replacing the “one note per tick” pattern with more complex rhythms. An example could be playing half notes followed by quarter notes, with or without repeated notes. Sequences should be established and given a priori. Aim: this should be the final introduction to melodic phrasing. Indeed, the following section consists of playing actual pieces of music.

4.3 Musical Practice

This section of the training consists of giving the student simple tunes to play with Netytar, putting into practice the improvements gained from the previous exercises. This work does not provide a list of melodies, since several texts and resources on the subject already exist which, although dedicated to other instruments, are still suitable. For example, the My Breath My Music foundation is active in music education within SEN contexts (teaching people with upper-limb disabilities how to play the Magic Flute⁴ instrument), and offers a training program of simple melodies freely available on its website⁵. It should also be noted that it is probably simpler for the student to play an already known melody than to learn a new one. However, musical traditions vary from culture to culture, so different pieces may be suitable in different contexts. The following guidelines may be useful for identifying simple tunes for Netytar:

• Identifying the types of musical intervals a piece requires helps to determine its difficulty. A difficulty classification is given in Sec. 4.2 for exercise T1.

• Tracing and transcribing passages using the notation described in Sec. 5.1 can also help, as it highlights the amount and location of the required eye movements.

• The upper bound on speed imposed by the nature of saccadic movements, discussed in Sec. 3, should be taken into account, since it constrains the tempo.

• A good difficulty progression should also take into account the rhythmic complexity of a piece: a homogeneous rhythm should be simpler to play.
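Some of these guidelines can be partly automated. The following Python sketch, a hypothetical helper that is not part of Netytar, profiles a melody given as MIDI note numbers and durations in beats: it counts interval sizes in semitones (0 for a repeated note), reports the largest leap and whether the rhythm is homogeneous, and derives a tempo ceiling from a minimum time per gaze movement. The names `profile_tune`, `TuneProfile` and `MIN_GAZE_MOVE_S` are illustrative, and the 0.5 s value is a placeholder assumption rather than a figure from this paper; the difficulty classes of exercise T1 are not reproduced.

```python
from collections import Counter
from dataclasses import dataclass

# Placeholder assumption, NOT a value from the paper: the minimum time (s) a
# player needs to move the gaze to a new key and settle on it. Calibrate per
# user, in light of the saccade-related limits discussed in Sec. 3.
MIN_GAZE_MOVE_S = 0.5


@dataclass
class TuneProfile:
    interval_counts: Counter  # |interval| in semitones -> count (0 = repeat)
    largest_leap: int         # largest interval in semitones
    homogeneous_rhythm: bool  # True if all notes have the same duration
    max_bpm: float            # tempo ceiling imposed by gaze movements


def profile_tune(pitches, beats, min_move_s=MIN_GAZE_MOVE_S):
    """pitches: MIDI note numbers; beats: duration of each note in beats."""
    if len(pitches) != len(beats) or len(pitches) < 2:
        raise ValueError("need at least two notes, each with a duration")
    intervals = [abs(b - a) for a, b in zip(pitches, pitches[1:])]
    # Only a note followed by a different pitch requires an eye movement;
    # a repeated note stays on the same key.
    moving = [d for d, step in zip(beats, intervals) if step > 0]
    # A note of d beats at T bpm lasts d * 60 / T seconds; requiring this to
    # be >= min_move_s gives T <= 60 * d / min_move_s for every moving note.
    max_bpm = 60 * min(moving) / min_move_s if moving else float("inf")
    return TuneProfile(
        interval_counts=Counter(intervals),
        largest_leap=max(intervals),
        homogeneous_rhythm=len(set(beats)) == 1,
        max_bpm=max_bpm,
    )


# Opening of "Twinkle, Twinkle, Little Star" (C4 = MIDI 60), one beat per
# note except the held G and the final C.
twinkle = [60, 60, 67, 67, 69, 69, 67, 65, 65, 64, 64, 62, 62, 60]
durations = [1] * 6 + [2] + [1] * 6 + [2]
print(profile_tune(twinkle, durations))
```

With the placeholder value, this opening yields six repeated notes, a largest leap of 7 semitones (C to G), a non-homogeneous rhythm, and a ceiling of 120 bpm. The model is deliberately simple: it ignores the distance travelled on the keyboard, which depends on Netytar's layout.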

5 FURTHER DEVELOPMENTS

This section discusses further possible developments to enhance the proposed methodology. These include a possible notation for exercises and melodies, and ideas for replacing the assistant in the exercises of Sec. 4 with software.

⁴ https://mybreathmymusic.com/en/magic-flute. Accessed on: 29/02/2020.

⁵ http://mybreathmymusic.com/en/liedjes-spelen-voor-beginners. Accessed on: 29/02/2020.

CSME 2020 - Special Session on Computer Supported Music Education

5.1 Notation

In order to provide a simple way of writing down new exercises, which could be developed by a teacher or assistant, a simple notation is introduced. It does not aim to be as complete as traditional staff notation; instead, it relies on the idea of indicating the “geometric shape” described by a short musical sequence, and therefore provides simple mnemonic support that does not require prior knowledge of reading notes on the staff. It can be described by the following rules:

• The notes that make up the sequence are connected by a broken line, following Netytar’s virtual keyboard layout and colors. The line can also be “folded back on itself” to indicate that the same interval is traversed several times.

• Only a small number of bars should be drawn in a single image (one bar or a few more, depending on the complexity).

• The temporal progression of the sequence is indicated by a color gradient along the line: one color (e.g. green) indicates the beginning of the sequence, another (e.g. red) its end. If a gradient is not possible, only the two endpoints can be marked with color.

• A repeated note is indicated by one or more symbols (e.g. an O) placed next to the key. Alternatively, this information can be omitted for visual simplicity.

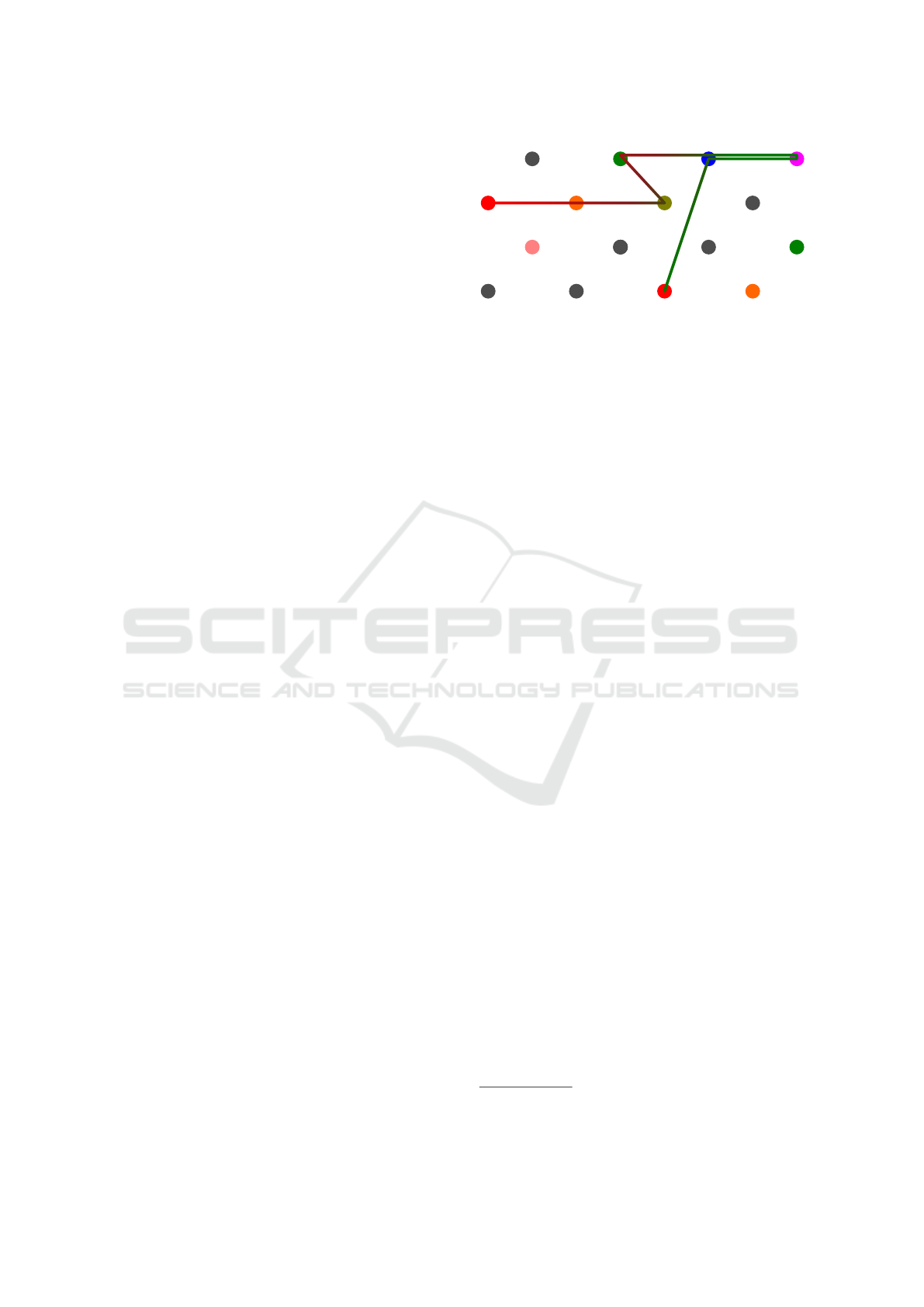

An example is given in Fig. 5, which represents the first bars of the song “Twinkle, Twinkle, Little Star” (C, C, G, G, A, A, G, F, F, E, E, D, D, C).
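The rules above can also be read as a small data transformation, which could be useful if the notation were generated by software. The Python sketch below (with hypothetical names `PathNode` and `to_path`) turns a note sequence into the nodes of the broken line: each run of the same note collapses into one node carrying its number of repeat markers, and each node receives a color interpolated from a start color to an end color. Mapping nodes to key positions depends on Netytar's layout and is left out; the RGB values are only examples of “green” and “red”.

```python
from dataclasses import dataclass

# Example colors only: green marks the beginning, red the end (Sec. 5.1).
START_RGB = (0, 200, 0)
END_RGB = (220, 0, 0)


@dataclass
class PathNode:
    note: str
    repeats: int  # number of "O" markers drawn next to the key
    color: tuple  # (r, g, b) sampled from the start-to-end gradient


def lerp_color(a, b, t):
    """Linear interpolation between two RGB colors, t in [0, 1]."""
    return tuple(round(x + (y - x) * t) for x, y in zip(a, b))


def to_path(notes):
    """Collapse runs of the same note into one node with repeat markers."""
    runs = []  # each entry: [note, extra repetitions]
    for n in notes:
        if runs and runs[-1][0] == n:
            runs[-1][1] += 1
        else:
            runs.append([n, 0])
    last = max(len(runs) - 1, 1)  # avoid dividing by zero for a single node
    return [PathNode(n, r, lerp_color(START_RGB, END_RGB, i / last))
            for i, (n, r) in enumerate(runs)]


twinkle = ["C", "C", "G", "G", "A", "A", "G", "F", "F", "E", "E", "D", "D", "C"]
for node in to_path(twinkle):
    print(node)
```

For this tune the path has eight nodes (C, G, A, G, F, E, D, C) and six repeat markers in total, one each on C, G, A, F, E and D; G appears twice because the line passes over that key twice.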

It should be noted that when playing an instrument that requires the performer to use their gaze as an interaction channel, music cannot be read at the same time using traditional staff notation. A future development of Netytar could implement the simple notation described above so that the score is displayed directly on the keyboard while playing, providing a significant aid to the performance. An interactive version of the notation (similar to a score follower) could include a cursor (e.g. a circle) that moves in time to the next note to be played along the path, effectively “gamifying” the musical performance.
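A timed cursor of this kind essentially maps elapsed time to a position in the note sequence. The following Python sketch assumes a fixed tempo and note durations given in beats; `TimedCursor` is a hypothetical name, and unlike a real score follower it only follows the clock and does not react to what the player actually plays.

```python
from bisect import bisect_right
from itertools import accumulate


class TimedCursor:
    """Hypothetical helper: moves a cursor along the notated path in time."""

    def __init__(self, beats, bpm):
        sec_per_beat = 60.0 / bpm
        # End time (s) of each note, from the cumulative sum of durations.
        ends = [b * sec_per_beat for b in accumulate(beats)]
        self.onsets = [0.0] + ends[:-1]  # start time (s) of each note
        self.total_s = ends[-1] if ends else 0.0

    def index_at(self, t):
        """Index of the note to highlight at time t (s), None outside."""
        if t < 0 or t >= self.total_s:
            return None
        # Last onset that is <= t identifies the note currently sounding.
        return bisect_right(self.onsets, t) - 1


# "Twinkle, Twinkle, Little Star": quarter notes, with a half note
# closing each phrase, at 60 bpm.
twinkle_beats = [1] * 6 + [2] + [1] * 6 + [2]
cursor = TimedCursor(twinkle_beats, bpm=60)
print(cursor.index_at(6.5))  # 6: the cursor still rests on the held G
```

A display loop would call `index_at` with the elapsed playback time on every frame and move the circle to the key of the returned note.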


Figure 5: The first bars of the popular tune Twinkle, Twinkle, Little Star, drawn using the notation described in Sec. 5.1.

5.2 Automating Exercises using Software

Most of the exercises described in Sec. 4 should be performed with a human assistant, who provides the visual elements and gives feedback. However, a simple additional software interface could replace the assistant, allowing the user to practise autonomously and also providing more precise and objective feedback. The visual objects indicated in Sec. 4.1 (CE1, CE3, CE4, CE5, and CE6) can easily be replaced by virtual objects on screen, which can also provide auditory or vibrotactile feedback (using an actuator) upon each successful gaze selection. The breath-related exercises in Sec. 4.1 (CB1, CB2, CB3 and CB4) could be more effective if supported by an on-screen intensity indicator. Using other gaze-controlled applications unrelated to music could also strengthen eye-control abilities and confidence with gaze interaction (e.g. eye-controlled text-writing software such as the freely available GazeSpeaker⁶).
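As an illustration of how on-screen targets could give automatic feedback, the Python sketch below checks a stream of gaze samples against a sequence of circular targets. It assumes that a “selection” means keeping the gaze inside the current target for a dwell time, a common gaze-interaction technique that is not necessarily what the exercises of Sec. 4.1 prescribe. `Target`, `DwellSelector`, `feed` and the 0.6 s default are hypothetical; in a real tool, `on_select` could play a sound or drive a vibrotactile actuator.

```python
import math
from dataclasses import dataclass


@dataclass
class Target:
    x: float
    y: float
    radius: float

    def contains(self, gx, gy):
        return math.hypot(gx - self.x, gy - self.y) <= self.radius


class DwellSelector:
    """Reports a selection once the gaze has stayed on the current target
    for dwell_s seconds, then advances to the next target."""

    def __init__(self, targets, dwell_s=0.6, on_select=None):
        self.targets = list(targets)
        self.dwell_s = dwell_s  # hypothetical default, tune per user
        self.on_select = on_select or (lambda index: None)
        self.current = 0
        self.enter_t = None  # time the gaze entered the current target

    @property
    def done(self):
        return self.current >= len(self.targets)

    def feed(self, t, gx, gy):
        """Process one gaze sample (time in s, screen coordinates)."""
        if self.done:
            return
        if self.targets[self.current].contains(gx, gy):
            if self.enter_t is None:
                self.enter_t = t
            elif t - self.enter_t >= self.dwell_s:
                self.on_select(self.current)  # e.g. sound or vibration
                self.current += 1
                self.enter_t = None
        else:
            self.enter_t = None  # gaze left the target: restart the dwell


selected = []
selector = DwellSelector([Target(100, 100, 50), Target(400, 100, 50)],
                         dwell_s=0.6, on_select=selected.append)
for t, x, y in [(0.0, 100, 100), (0.7, 102, 98), (0.8, 400, 100), (1.5, 401, 99)]:
    selector.feed(t, x, y)
print(selected, selector.done)
```

Because the feedback depends only on recorded samples and thresholds, such a tool could also log dwell times per target, giving the more objective feedback mentioned above.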

6 CONCLUSIONS

A study method has been presented that allows people with no prior musical experience to approach the accessible digital musical instrument Netytar. The design choices are motivated by an analysis of the relevant properties of the instrument and of eye interaction in general, and a review of the relevant characteristics of Netytar has been provided. Possible future developments to automate the training have been outlined in Sec. 5.2, along with a simple musical notation suitable for Netytar in Sec. 5.1. Future work will include testing the proposed methodology with the target users through case studies and user experience assessments, and measuring possible improvements in users' musical performance with objective methods, as done in previous Netytar evaluations (Davanzo et al., 2018).

⁶ https://www.gazespeaker.org/. Accessed on: 29/02/2020.

REFERENCES

Badler, J. B. and Canossa, A. (2015). Anticipatory Gaze Shifts during Navigation in a Naturalistic Virtual Environment. In Proc. of the 2015 Annual Symposium on Computer-Human Interaction in Play (CHI PLAY ’15), pages 277–283, London, United Kingdom. Association for Computing Machinery.

Bailey, S., Scott, A., Wright, H., Symonds, I. M., and Ng, K. (2010). Eye.Breathe.Music: Creating music through minimal movement. In Proc. Conf. Electronic Visualisation and the Arts (EVA 2010), pages 254–258, London, UK.

Correa, A. G. D., Ficheman, I. K., do Nascimento, M., and Lopes, R. d. D. (2009). Computer Assisted Music Therapy: A Case Study of an Augmented Reality Musical System for Children with Cerebral Palsy Rehabilitation. In Proc. of the 2009 Ninth IEEE International Conference on Advanced Learning Technologies, pages 218–220, Riga, Latvia. IEEE.

Davanzo, N., Dondi, P., Mosconi, M., and Porta, M. (2018). Playing music with the eyes through an isomorphic interface. In Proc. of the Workshop on Communication by Gaze Interaction - COGAIN ’18, pages 1–5, Warsaw, Poland. ACM Press.

Frid, E. (2019). Accessible Digital Musical Instruments—A Review of Musical Interfaces in Inclusive Music Practice. Multimodal Technologies and Interaction, 3(3):57.

Gesierich, B., Bruzzo, A., Ottoboni, G., and Finos, L. (2008). Human gaze behaviour during action execution and observation. Acta Psychologica, 128(2):324–330.

Harrison, J. and McPherson, A. (2017). An Adapted Bass Guitar for One-Handed Playing. In Proc. of the 17th Int. Conf. on New Interfaces for Musical Expression (NIME’17), NIME 2017, Copenhagen, Denmark.

Hornof, A. J. (2014). The Prospects For Eye-Controlled Musical Performance. In Proc. of the 14th Int. Conf. on New Interfaces for Musical Expression (NIME’14), NIME 2014, Goldsmiths, University of London, UK.

Jacob, R. J. K. (1995). Eye tracking in advanced interface design. In Virtual Environments and Advanced Interface Design, pages 258–288. Oxford University Press, Inc., USA.

Jamboxx (n.d.). Jamboxx. https://www.jamboxx.com/. Accessed 8 June 2019.

Jones, M., Grogg, K., Anschutz, J., and Fierman, R. (2008). A Sip-and-Puff Wireless Remote Control for the Apple iPod. Assistive Technology, 20(2):107–110.

Larsen, J. V., Overholt, D., and Moeslund, T. B. (2016). The Prospects of Musical Instruments For People with Physical Disabilities. In Proc. of the 16th Int. Conf. on New Interfaces for Musical Expression (NIME’16), NIME 2016, pages 327–331, Griffith University, Brisbane, Australia.

Lou, C. I., Migotina, D., Rodrigues, J. P., Semedo, J., Wan, F., Mak, P. U., Mak, P. I., Vai, M. I., Melicio, F., Pereira, J. G., and Rosa, A. (2012). Object Recognition Test in Peripheral Vision: A Study on the Influence of Object Color, Pattern and Shape. In Zanzotto, F. M., Tsumoto, S., Taatgen, N., and Yao, Y., editors, Proc. Int. Conf. on Brain Informatics, Lecture Notes in Computer Science, pages 18–26, Berlin, Heidelberg. Springer.

Marquez-Borbon, A. and Martinez Avila, J. P. (2018). The problem of DMI adoption and longevity: Envisioning a NIME performance pedagogy. In Proc. of the 18th Int. Conf. on New Interfaces for Musical Expression (NIME’18), Blacksburg, Virginia, USA. Virginia Tech Libraries.

Maupin, S., Gerhard, D., and Park, B. (2011). Isomorphic Tessellations for Musical Keyboards. In Proc. of 2011 Sound and Music Computing Conf., pages 471–478, Conservatorio Cesare Pollini, Padova, Italy.

Morimoto, C. H., Diaz-Tula, A., Leyva, J. A. T., and Elmadjian, C. E. L. (2015). Eyejam: A Gaze-controlled Musical Interface. In Proceedings of the 14th Brazilian Symposium on Human Factors in Computing Systems, IHC ’15, pages 37:1–37:9, Salvador, Brazil. ACM.

Mougharbel, I., El-Hajj, R., Ghamlouch, H., and Monacelli, E. (2013). Comparative study on different adaptation approaches concerning a sip and puff controller for a powered wheelchair. In Proc. of the 2013 Science and Information Conf., pages 597–603, London, UK.

Purves, D., Augustine, G. J., Fitzpatrick, D., Katz, L. C., LaMantia, A.-S., McNamara, J. O., and Williams, S. M. (2001). Types of Eye Movements and Their Functions. Neuroscience. 2nd edition, pages 361–390.

Refsgaard, A. (n.d.). Eye Conductor. https://andreasrefsgaard.dk/project/eye-conductor/. Accessed 8 June 2019.

Rusconi, E., Kwan, B., Giordano, B. L., Umiltà, C., and Butterworth, B. (2006). Spatial representation of pitch height: The SMARC effect. Cognition, 99(2):113–129.

Stanford, S., Milne, A. J., and MacRitchie, J. (2018). The Effect of Isomorphic Pitch Layouts on the Transfer of Musical Learning. Applied Sciences, 8(12):2514.

Vamvakousis, Z. and Ramirez, R. (2014). P300 Harmonies: A Brain-Computer Musical Interface. In Proc. of 2014 Int. Computer Music Conf./Sound and Music Computing Conf., pages 725–729, Athens, Greece.

Vamvakousis, Z. and Ramirez, R. (2016). The EyeHarp: A Gaze-Controlled Digital Musical Instrument. Frontiers in Psychology, 7:906.

Ward, A., Woodbury, L., and Davis, T. (2017). Design Considerations for Instruments for Users with Complex Needs in SEN Settings. In Proc. of the 17th Int. Conf. on New Interfaces for Musical Expression (NIME’17), Copenhagen, Denmark.

Zhang, X. and MacKenzie, I. S. (2007). Evaluating Eye Tracking with ISO 9241 - Part 9. In Jacko, J. A., editor, Human-Computer Interaction. HCI Intelligent Multimodal Interaction Environments, Lecture Notes in Computer Science, pages 779–788, Berlin, Heidelberg. Springer.
