An Excited Binary Grey Wolf Optimizer for Feature Selection in

Highly Dimensional Datasets

Davies Segera, Mwangi Mbuthia and Abraham Nyete

Department of Electrical and Information Engineering, University of Nairobi, Harry Thuku Road, Nairobi, Kenya

Keywords: Meta-Heuristic, Feature Selection, Evolutionary Algorithm, Binary Grey Wolf Optimizer.

Abstract: Currently, feature selection is an important but challenging task in both data mining and machine learning,

especially when handling highly dimensioned datasets with noisy, redundant and irrelevant attributes. These

datasets are characterized by many attributes with limited sample-sizes, making classification models over-

fit. Thus, there is a dire need to develop efficient feature selection techniques to aid in deriving an optimal

informative subset of features from these datasets prior to classification. Although grey wolf optimizer

(GWO) has been widely utilized in feature selection with promising results, it is normally trapped in the local

optimum resulting into semi-optimal solutions. This is because its position-updated equation is good at

exploitation but poor at exploration. In this paper, we propose an improved algorithm called excited binary

grey wolf optimizer (EBGWO). In order to improve on exploration, a new position-updating criterion is

adopted by utilizing the fitness values of vectors 𝑋

⃗

, 𝑋

⃗

and 𝑋

⃗

to determine new candidate individuals.

Moreover, in order to make full use of and balance the exploration and exploitation of the existing BGWO, a

novel nonlinear control parameter strategy is introduced, i.e. the control parameter of 𝑎⃗ is innovatively

decreased via the concept of the complete current response of a direct current (DC) excited resistor-capacitor

(RC) circuit. The experimental results on seven standard gene expression datasets demonstrate the

appropriateness and efficiency of the fitness value based position-updating criterion and the novel nonlinear

control strategy in feature selection. Moreover, EBGWO achieved a more compact set of features along with

the highest accuracy among all the contenders considered in this paper.

1 INTRODUCTION

The major challenge in analysing big data is the vast

number of features. Out of the many available

features, only a few of them will be useful in

distinguishing samples that belong to different classes

while majority of the of the features will be irrelevant,

noise, or redundant. Foremost, these irrelevant

features lead to noise generation in big data analysis.

In addition, they result in increased dataset

dimensions and a further computational complexity

in both clustering and classification operations. All

these consequently decreases the rate of classification

accuracy. Thus, superior approaches are needed to

identify diverse features, compute the relationship

between the features and optimally select informative

attributes from these highly dimensioned datasets

(Almugren & Alshamlan, 2019).

For a dataset containing 𝑁 number of features,

there exists 2

number of candidate subsets. The

main objective of designing different feature

selection techniques has always been to determine a

compressed and optimal subset with the highest

precision among the possible candidate subsets.

Since the scope of possible solutions is wide and

the size of this set of responses is on the rise due to

the ever-increasing number of features, determining

the best subset of 𝑁 features is extremely difficult

and costly(Pirgazi et al., 2019), (Liang et al., 2018).

Feature selection techniques can be broadly

categorized into two i.e. filters and wrappers. Filter

approaches utilizes the dependency, mutual

information, distance, and information theory in

carrying out feature selection(Shunmugapriya &

Kanmani, 2017). Unlike filters, wrappers utilize

classifiers as the learning algorithm in optimizing the

classification performance by selecting the

informative features. Commonly, filter techniques are

often faster compared to wrappers, which is largely

attributed to their reduced computational complexity

(Sun et al., 2018). Nevertheless, wrapper techniques

can usually offer better performances compared to

filters(Zorarpacı & Özel, 2016). Wrappers apply

metaheuristic optimization approaches, such as

Segera, D., Mbuthia, M. and Nyete, A.

An Excited Binary Grey Wolf Optimizer for Feature Selection in Highly Dimensional Datasets.

DOI: 10.5220/0009805101250133

In Proceedings of the 17th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2020), pages 125-133

ISBN: 978-989-758-442-8

Copyright

c

2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

125

binary genetic algorithm (BGA)(De Stefano et al.,

2014), binary grey wolf optimization

(BGWO)(Emary et al., 2016), binary ant colony

optimization (BACO) (Aghdam et al., 2009), binary

particle swarm optimization (BPSO) (He et al., 2009),

to select the optimal informative feature subsets.

BGWO is a recent feature selection approach,

which usually offers better performance compared to

other conventional methods(Emary et al., 2016) .

However, the wolves’ new positions are based on the

experience of leaders i.e. alpha, beta and delta, which

normally leads to premature convergence. In

addition, a proper balance between the diversification

(global search) and intensification (local search) is

still the challenging issue in BGWO (Too et al.,

2018).

In this paper, we propose a new excited binary

grey wolf optimizer (EBGWO) that aims to improve

the performance of BGWO(Emary et al., 2016) in

selecting informative features in highly dimensioned

microarray datasets. Foremost, to overcome the

insufficiency of the existing BGWO regarding its

position-updated equation, which is good at

exploitation but poor at exploration, a new position-

updated equation utilizing the fitness values of

vectors 𝑋

⃗

, 𝑋

⃗

and 𝑋

⃗

is proposed to determine new

candidate individuals. Moreover, inspired by the

concept of the complete current response of a direct

current (DC) excited resistor-capacitor (RC) circuit, a

new nonlinear control parameter strategy for

parameter 𝑎⃗ is introduced in order to make full use

of and balance the diversification and intensification

of the existing BGWO algorithm.

The performance of EBGWO is tested using

seven standard gene expression datasets. To evaluate

the effectiveness of proposed method, EBGWO is

compared with five existing binary metaheuristic

algorithms i.e. BGWO1, BGWO2, BPSO, BDE and

BGA. The experimental results indicate EBGWO has

a very efficient computational complexity, while

keeping a comparative performance in feature

selection.

The rest of paper is organized as follows.

Preliminaries for the work, GWO is presented in

section 2. The proposed excited grey wolf optimizer

(EGWO) is presented in section 3. The binary version

of EGWO i.e. Excited binary grey wolf optimizer

(EBGWO) is presented in section 4. Section 5 reports

the experimental setting and a discussion of the

obtained results. Finally, a conclusion and future

works are given in section 6.

2 GREY WOLF OPTIMIZER

Grey wolf optimizer (GWO) is a recently proposed

metaheuristic optimization technique(Mirjalili et al.,

2014). In nature, grey wolves live in groups ranging

between 5 to 12. GWO mimics the behaviour

portrayed by these grey wolves while hunting and

searching of a prey. In GWO, to simulate the

leadership hierarchy in a pack, the population is

divided into four types of wolves i.e. Alpha (𝛼), beta

(𝛽), delta (𝛿), and omega (𝜔). The alpha wolf is the

overall leader responsible for decision-making. The

beta wolf is the second in command is aids the alpha

in making the decision or other activities. Delta wolf

is referred as the third leader in the group, which

dominates the omega wolves. The three leaders i.e.

𝛼,𝛽 and 𝛿 guide the hunting (optimization) while the

remaining omega wolves ( 𝜔) follow them(El-

Gaafary et al., 2015).

Equation 1 depicts the encircling behaviour of the

pack while hunting a prey.

𝑋𝑡 1 = 𝑋

𝑡

𝐴

.𝐷

(1

)

Where 𝑋

is the position of prey, 𝐴 is the coefficient

vector, and 𝐷 is defined by equation 2.

𝐷 =𝐶.𝑋

𝑡

𝑋𝑡

(2

)

Where 𝐶 is the coefficient vector, 𝑋 is the position of

grey wolf, and 𝑡 is the number of iterations.

The coefficient vectors, 𝐴 and 𝐶, are determined

by equations 3 and 4 respectively.

𝐴

2.𝑎.𝑟

𝑎

(3

)

𝐶2.𝑟

(4

)

Where 𝑟

and 𝑟

are two independent random

numbers uniformly distributed between 0,1, and 𝑎

is the encircling coefficient that is used to balance the

trade-off between exploration and exploitation.

In GWO, 𝑎 is linearly decreased, from 2 to 0,

according to Equation (5).

𝑎22.

(5

)

Where 𝑡 and 𝑇 represent the number of iterations and

maximum number of iterations respectively.

In GWO, the three leaders 𝛼,𝛽 and 𝛿 leaders are

deemed knowledgeable of the potential position of

the prey. Thus, they guide the remaining omega

wolves to move toward the optimal position.

ICINCO 2020 - 17th International Conference on Informatics in Control, Automation and Robotics

126

Mathematically, the new position of wolf is updated

as per Equation (6).

𝑋

𝑡1

(6)

Where 𝑋

,𝑋

and 𝑋

are determined according to

Equations (7)-(9)

𝑋

|

𝑋

𝐴

.𝐷

|

(7)

𝑋

𝑋

𝐴

.𝐷

(8)

𝑋

|

𝑋

𝐴

.𝐷

|

(9)

Where 𝑋

,𝑋

and 𝑋

are the position of 𝛼,𝛽 and 𝛿

respectively during iteration 𝑡.

𝐴

,𝐴

and 𝐴

are determined using Equation 3;

and 𝐷

,𝐷

and 𝐷

are defined in Equations (10)-(12)

respectively.

𝐷

|

𝐶

.𝑋

𝑋

|

(10)

𝐷

𝐶

.𝑋

𝑋

(11)

𝐷

|

𝐶

.𝑋

𝑋

|

(12)

Where 𝐶

,𝐶

and 𝐶

are determined using Equation

(4).

3 EXCITED GREY WOLF

OPTIMIZER (EGWO)

3.1 Nonlinearly Controlling Parameter

𝒂 via the Complete Current

Response of the DC Excited RC

Circuit

It is a well-established fact that for population-based

metaheuristics, both exploration (diversification) and

exploitation (intensification) are conducted

concurrently.

Exploration is termed as the ability of a

population-based metaheuristic to examine new areas

within the defined search space with the aim of

determining the global optima. On the hand,

exploitation is the ability to utilize the information of

already identified individuals in deriving better

individuals(Long et al., 2018; Luo et al., 2013).

In every population-based metaheuristic, both

exploration (diversification) and exploitation

(intensification) abilities are attained by applying

specific operators.

In the conventional GWO algorithm, the control

parameter 𝑎 plays a critical role in balancing between

diversification and intensification of an individual

candidate search (Long et al., 2018). A larger value

of 𝑎 enhances global exploration, while its smaller

value promotes local exploitation. Thus, selection of

a suitable control strategy for parameter 𝑎 is critical

in attaining an effective balance between local

exploitation and global exploration. From literature,

one proved way to achieve the required balance is

critically studying the control of parameter 𝑎. To

date, various approaches have been proposed to

control the conventional GWO’s parameter 𝑎 (Long,

2016; Long et al., 2018; Mittal et al., 2016).

However, in the conventional GWO, 𝑎 linearly

decreases from 2 to 0 using Equation (5). Since

GWO incorporates a highly complicated nonlinear

search process, the utilized linear control of

parameter 𝑎 doesn’t reflect the actual search process

(Long et al., 2018). Moreover, (Mittal et al., 2016)

suggested that the performance of GWO would

improve if parameter 𝑎 is nonlinearly controlled.

Motivated by both the above consideration and

the complete current response of a direct current (DC)

excited resistor-capacitor (RC) circuit(Alexander &

Sadiku, 2016), a novel nonlinear adaptation of

parameter 𝑎 is proposed in this paper.

The complete current response of the RC circuit

to a sudden application of a dc voltage source, with

the assumption that the capacitor is initially not

charged is given in Equation 13.

𝑖𝑡

(13

)

Where 𝜏𝑅𝐶 is the time constant that expresses

the rapidity with which the value if 𝑖 decreases from

the initial value

to zero over time . 𝑉

is value of a

constant DC voltage while 𝑅 and 𝐶 are the resistor

and capacitor values of the circuit.

We adopt this concept i.e. the exponential decay

of 𝑖 over time to develop a new nonlinear control

strategy of parameter 𝑎 as presented in Equation 14.

𝑎

,

𝑎

𝑀𝑎𝑥𝐼𝑡𝑒𝑟

𝑀𝑎𝑥𝐼𝑡𝑒𝑟

,

(14

)

Where 𝑎

,

is the value of the control parameter 𝑎

assigned to grey wolf 𝑖 during iteration 𝑡.

𝑀𝑎𝑥𝐼𝑡𝑒𝑟

indicates the total number of iterations (generations)

and 𝑎

is the initial value of the control

parameter 𝑎. 𝜏

,

is a nonlinear modulation index

assigned to the grey wolf 𝑖 during iteration 𝑡.

An Excited Binary Grey Wolf Optimizer for Feature Selection in Highly Dimensional Datasets

127

To ensure that the value of the control parameter 𝑎

,

is proportional to the fitness value of grey wolf 𝑖

during iteration 𝑡, a new formulation of the value of

the nonlinear modulation index 𝜏

,

is given in

Equation (15).

𝜏

,

(15)

Where 𝐹𝛼

, 𝐹𝛽

and 𝐹𝛿

are the fitness values of

𝛼,𝛽 and 𝛿 wolves (the 3 best wolves) respectively

during the current iteration 𝑡. 𝐹𝑋

is the fitness value

of grey wolf 𝑖 during iteration 𝑡 and finally 𝐹𝑤

is

the worst fitness value among the omega (𝜔) wolves

during iteration 𝑡 .

Consequently, 𝐴

,𝐴

and 𝐴

are determined using

Equation (16) which is a variant of Equation 3.

𝐴

2.𝑎

,

.𝑟

𝑎

,

(16)

From the literature of conventional GWO

(Mirjalili et al., 2014), when 𝐴1 the grey wolves

are compelled to attack the prey (exploitation) and

when 𝐴1 the grey wolves are compelled to move

away from the current prey with the hope of finding

another fitter prey. This implies that smaller values of

the control parameter 𝑎 promotes local exploitation

while larger values facilitates global exploration.

According to Equation (5) of the conventional

GWO algorithm, it is evident that half of the iterations

are committed to exploration and the remaining half

to exploitation. This strategy fails to consider the

impact of effective balancing between these two

conflicting milestones to guarantee accurate

approximation of the global optimum.

The nonlinear control strategy of parameter 𝑎

proposed in Equation (14), tries to overcome this

challenge by adopting a variant of decay function to

strike a proper balance between exploration and

exploitation. Since this strategy promotes allocates a

large proportion of the iterations to global exploration

compared to local exploitation, the convergence

speed of the proposed EGWO algorithm is enhanced

while minimizing the local minima trapping effect.

Moreover, since the proposed scheme is

proportional to the fitness values of the individual

grey wolves in the search space and the current

iteration (generation), diversity and the quality of the

solutions is enhanced.

3.2 Socially Strengthened Hierarchy

via a Fitness-value based

Position-updating Criterion

In the conventional GWO, social hierarchy is the

cornerstone in both the internal management and the

hunting patterns of the pack(Tu et al., 2019) . All the

wolves in the pack conduct hunting under the

guidance of the 𝛼,𝛽 and 𝛿 wolves. An assumption

that these three dominant wolves have a better

knowledge of the prey’s location. Consequently, the

omega (𝜔) wolves update their positions with the aid

of these three leaders during the hunting process. This

implies that the conditions of the 𝛼,𝛽 and 𝛿 wolves

are key in updating the whole pack. Meanwhile, the

higher the rank a wolf attains during the search, the

closer it gets to the global optimum.

In addition, all the wolves including the three

leaders utilize Equation (6) to update their positions.

That is to say the 𝛼 wolf will utilize the lowly ranked

𝛽 and 𝛿 wolves to update its position. Likewise, 𝛽

wolf will utilize the lowly ranked 𝛿 wolf to update

itself. Since the conditions of the 𝛽 and 𝛿 wolves are

worse compared to that of the 𝛼 wolf, there are higher

chances that the two wolves will compel the 𝛼 wolf

to move away from the global optimum. Likewise, 𝛽

wolf may also be misled by the 𝛿 wolf. Ultimately,

the accumulative error will have an adverse effect on

updating the positions of the all the wolves in the pack

and the convergence efficiency of the GWO will

drastically reduce(Tu et al., 2019).

On the other hand, since all the omega (𝜔) wolves

are attracted towards the 𝛼,𝛽 and 𝛿 wolves, they may

prematurely converge due to limited exploration

within the search space. Thus, the conventional GWO

is good at exploitation but poor at exploration.

Thus, to overcome the GWO’s premature converge

and still maintain the social hierarchy of the pack, a

different scheme for updating both the dominant (𝛼,𝛽

and 𝛿) and the omega (𝜔) wolves is needed. To attain

this, a new position-updated equation utilizing the

fitness values of vectors 𝑋

⃗

, 𝑋

⃗

and 𝑋

⃗

is utilized in

determining new candidate individuals.

Foremost, for each wolf in the pack,

vectors 𝑋

, 𝑋

and 𝑋

are computed using

Equations (17)-(19).

𝑋

𝑋

𝑗

(17

)

𝑋

𝑋

𝑗

(18

)

ICINCO 2020 - 17th International Conference on Informatics in Control, Automation and Robotics

128

𝑋

𝑋

𝑗

(19)

Where 𝑑 is the dimension of the search space and

𝑋

𝑗

,𝑋

𝑗 and 𝑋

𝑗 are determined using

Equations (7)-(9) respectively.

Next, the fitness values 𝐹𝑋

,𝐹𝑋

and

𝐹𝑋

for vectors 𝑋

, 𝑋

and 𝑋

respectively are determined and the one with the best

fitness forms the new position as depicted by

Equations (20)-(21).

𝑓

𝑖𝑡𝑡𝑒𝑠𝑡,𝑃𝑜𝑠𝑚𝑖𝑛

𝐹𝑋

(20)

𝑋

𝑡1

𝑋

(21)

4 EXCITED BINARY GREY

WOLF OPTIMIZER (EBGWO)

Feature selection (FS) is a significant problem in

pattern recognition and machine learning areas. The

aim of FS is to select the most informative feature

subset guided by a given evaluation criterion(Salesi

& Cosma, 2017; Tu et al., 2019).

In essence, FS is a broad-based optimization problem

that is characterized by huge computations.

Since the FS problem utilizes a binary search space,

it is important to convert the proposed continuous

EGWO to binary i.e. EBGWO. One of the commonly

adopted approach for this transformation is the

utilization of transfer functions(Salesi & Cosma,

2017; Tu et al., 2019).

In our experiments, the transfer function we utilized

in converting the real values of each solution to binary

is depicted by Equation (22).

𝑋

𝑡1

1,𝑖𝑓 𝑆𝑋

𝑡1

𝜌,

0, 𝑜𝑡ℎ𝑒𝑟𝑤𝑖𝑠𝑒

(22)

Where 𝜌∈0,1 depict a random threshold and 𝑆 is

the considered sigmoid function as expressed by

Equation (23).

𝑆

𝑥

1

1 exp 10𝑥 0.5

(23)

𝑋

𝑡1

=1 imply that the 𝑗

element of 𝑋𝑡1

is selected as an informative attribute while 𝑋

𝑡

1

=0 imply that the corresponding 𝑗

element is

ignored.

For instance, if 𝑋

𝑡1

0.55,0.21,0.35,0.8

and 𝜌0.5, the output of Equation (21) becomes

𝑋

𝑡1

1, 0,0,1 which imply that the 1

and

4

features be selected while the 2

and 3

features will be ignored.

By doing so, the number of features is reduced

without affecting the classification performance.

Since the FS task aims at attaining better

classification accuracy with the utilization of fewer

attributes, the objective function 𝐹𝑖𝑡 utilized in this

paper is given by Equation (24)(Salesi & Cosma,

2017).

𝐹𝑖𝑡𝜀 ∗

|

𝑆

|

|

𝑁

|

1𝜀

∗

𝐴

𝑐𝑐

(24)

Where 𝐴𝑐𝑐 is indicates the accuracy of a given

classifier,

|

𝑆

|

is the size of the selected feature subset

and

|

𝑁

|

is the number of the total features of a

dataset. Thus, FS is turned into a problem of

determining the minimum value of Equation (24).

Herein, 𝜀 and

1𝜀

are weights corresponding to

the feature subset size and average accuracy

respectively. The parameter of 𝜀 in Equation (24) is

set 0.2 (Salesi & Cosma, 2017).

The pseudocode of the proposed excited binary

grey wolf optimizer (EBGWO) algorithm is

presented in Algorithm 1.

Algorithm 1: Pseudo-code for the EBGWO.

Input: labelled gene dataset D, Total number of

iterations 𝑀𝑎𝑥𝐼𝑡𝑒𝑟, Population size N, Initial value of

the control parameter

𝑎

Output: Optimal Individual’s position

𝑋

, Best

fitness value Fit (

𝑋

)

1. Randomly initialize N individuals’ positions to

establish a population

2. Using Equation (23), evaluate the fitness of all

wolves, Fit (

𝑋)

3.

[~, Index] =Sort (𝐹𝑖𝑡

𝑋,′𝐴𝑠𝑐𝑒𝑛𝑑′)

4.

𝐹𝛼 =𝐹𝑖𝑡 𝑋

5.

𝐹β =𝐹𝑖𝑡 𝑋

6.

𝐹δ =𝐹𝑖𝑡 𝑋

7.

𝐹w =𝐹𝑖𝑡 𝑋

8.

𝑋

=𝑋𝐼𝑛𝑑𝑒𝑥1

9.

𝑋

=𝑋𝐼𝑛𝑑𝑒𝑥2

10.

𝑋

=𝑋𝐼𝑛𝑑𝑒𝑥3

11.

For t=1 To 𝑀𝑎𝑥𝐼𝑡𝑒𝑟

12. For i=1 To N

13.

Determine

𝑎

,

using Equation (14)

14.

Compute 𝑋

,𝑋

and 𝑋

using

Equations (17)-(19)

15.

Generate

𝑋

𝑣𝑒𝑐1

, 𝑋

𝑣𝑒𝑐2

and

An Excited Binary Grey Wolf Optimizer for Feature Selection in Highly Dimensional Datasets

129

𝑋

𝑣𝑒𝑐3

using Equation (21)

16. Evaluate the fitness values

𝐹𝑋

, 𝐹𝑋

and 𝐹𝑋

of the binary

vectors

𝑋

𝑣𝑒𝑐1

𝑋

𝑣𝑒𝑐2

and

𝑋

𝑣𝑒𝑐3

respectively using Equation (24)

17. Determine the minimum value(fittest) of the

three evaluated fitness values and its Index

using Equations (20)

18.

If (fittest<𝐹𝑖𝑡

𝑋

) Then

19.

𝐹𝑖𝑡

𝑋

= fittest

20.

Update

𝑋

using Equation (21)

End If

21. Next i

22. Repeat steps 3 to 10

23. Next t

5 EXPERIMENTAL RESULTS

AND DISCUSSION

All the experiments were conducted in Windows

Windows 10 Home Single Language 64-bit operating

system; processor Intel(R) Core (TM) i7-3770CPU ,

processor speed of 3.4GHZ; 12GB of RAM. All the

considered algorithms were implemented using

MATLAB 2017 environment.

5.1 Dataset Description

In order to evaluate the performance of the proposed

algorithm, seven gene expression datasets were

utilized. The datasets were selected to have a variety

of instances (sample-size), genes and classes as a

representative of various issues. Table 1 outlines the

detailed distribution of instances, genes and classes

for each considered dataset.

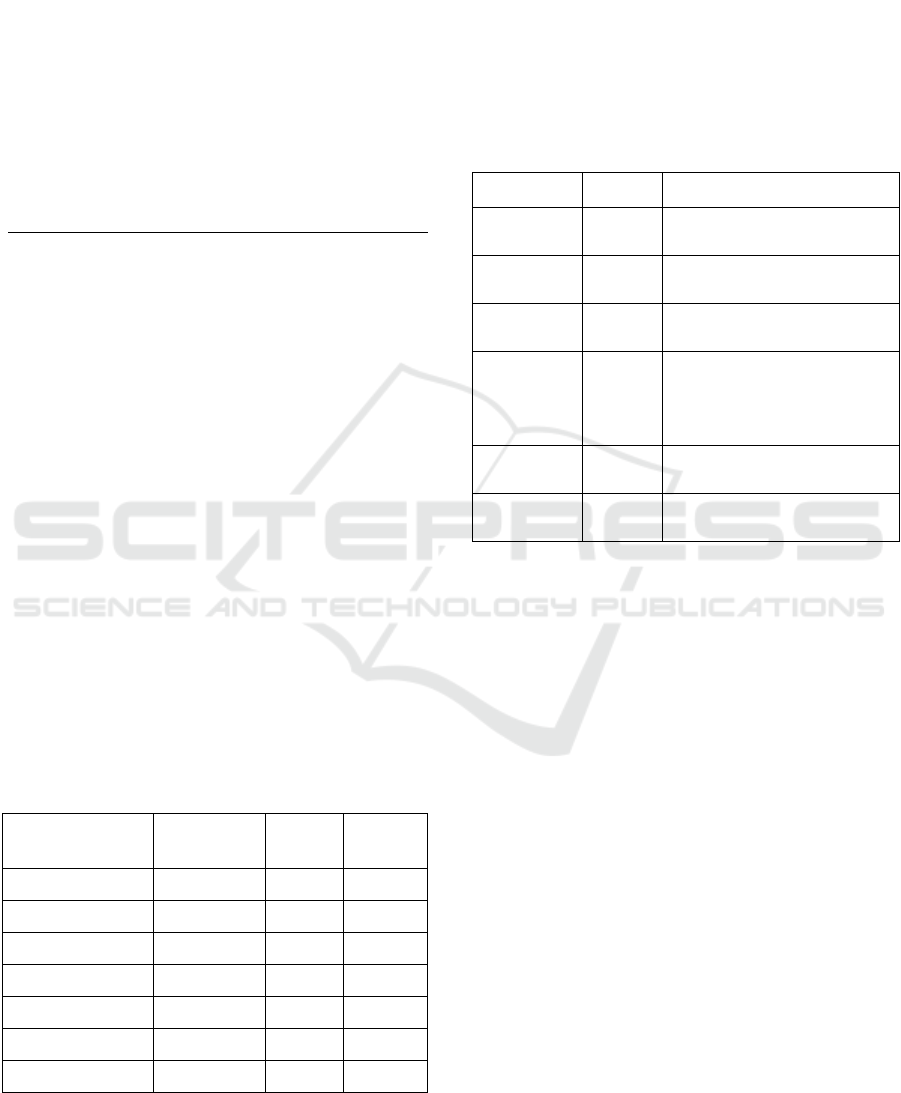

Table 1: Microarray datasets used in the experiments.

Dataset

No. of

Instances

No. of

Genes

No. of

Classes

Brain_Tumour1 90 5920 5

Brain_Tumour2 50 10367 4

CNS 60 7129 2

DLBCL 77 5469 2

Leukemia 72 7129 2

Colon 62 2000 2

Lung Cancer 203 12600 4

5.2 Parameter Setting

The proposed EBGWO is benchmarked with two

novel binary grey wolf optimizations (i.e. BGWO1

and BGWO2) (Emary et al 2016), binary particle

swarm optimization (BPSO), binary differential

evolution (BDE) and binary genetic algorithm(Too et

al., 2019). The optimizer- specific settings of the

considered algorithms are presented in Table 2.

Table 2: Parameter settings for each considered algorithm.

Algorithm Year Parameter settings

EBGWO

N=10,𝑀𝑎𝑥𝐼𝑡𝑒𝑟

100, 𝑎

2

BGWO1 2016

N=10,𝑀𝑎𝑥𝐼𝑡𝑒𝑟

100, 𝑎

2

BGWO2 2016

N=10,𝑀𝑎𝑥𝐼𝑡𝑒𝑟

100, 𝑎

2

BPSO 2019

N=10,𝑀𝑎𝑥𝐼𝑡𝑒𝑟

100, 𝐶

𝐶

2, 𝑉

6, 𝑊

0.9, , 𝑊

0.4

BDE 2019

N=10 ,𝑀𝑎𝑥𝐼𝑡𝑒𝑟

100, 𝐶𝑅0.9

BGA 2019

N=10,𝑀𝑎𝑥𝐼𝑡𝑒𝑟

100, 𝐶𝑅0.8, MR=0.01

Additionally, all the considered algorithms are

repeated over 10 independent runs to ensure both

stability and statistical significance of the obtained

results. Furthermore, the commonly utilized 10-fold

cross validation scheme is used to divide the

considered microarray datasets into training and

testing (Arlot & Celisse, 2010).

A wrapper approach based on the K-Nearest

Neighbour (K-NN) classifier(Emary et al., 2016;

Pirgazi et al., 2019) is used for feature selection in this

paper. The K-NN classifier (where k=5) is utilized to

obtain the classification accuracy of the solutions.

Table 3 presents the performance of all the

algorithms considered for the feature selection task

using the gene expression datasets whose details are

presented in Table 1.

The following information is presented in each

column of Table 3:

i) Algorithm: Provides the abbreviations of the

considered algorithms i.e. Excited Binary

Grey Wolf Optimizer (EBGWO), Binary Grey

Wolf Optimizer 1(BGWO1), and Binary Grey

Wolf Optimizer 2 (BGWO2)

ii) 𝑀𝑎𝑥_𝐴𝑐𝑐 Maximum Accuracy value obtained

when a given algorithm is repeated for 10

independent runs.

ICINCO 2020 - 17th International Conference on Informatics in Control, Automation and Robotics

130

Table 3: Experimental Results.

Algorithm Accuracy Number of Genes Dataset

𝑀𝑎𝑥_𝐴𝑐𝑐 𝑀𝑖𝑛_𝐴𝑐𝑐 𝐴𝑣𝑔

_

𝐴𝑐𝑐 𝑀𝑎𝑥

_

𝑁𝑓𝑒𝑎𝑡 𝑀𝑖𝑛

_

𝑁𝑓𝑒𝑎𝑡 𝐴𝑣𝑔

_

𝑁𝑓𝑒𝑎𝑡

EBGWO (Ours)

0.933 0.911 0.919 673 440 501.9

Brain_Tumour1

BGWO1 0.889 0.856 0.871 3831 2952 3356.9

BGWO2 0.911 0.878 0.894 1656 1094 1343.3

BPSO 0.854 0.823 0.843 2972 2763 2863.9

BDE 0.864 0.834 0.854 3017 2737 2937.6

BGA 0.869 0.844 0.859 2950 2840 2889.4

EBGWO (Ours)

0.920 0.84 0.884 2811 712 1151.5

Brain_Tumour2

BGWO1 0.840 0.820 0.838 7415 6103 6813.4

BGWO2 0.880 0.820 0.846 4019 2528 3083.8

BPSO 0.800 0.780 0.798 5126 5090 5122.4

BDE 0.728 0.713 0.714 5198 5076 5172.3

BGA 0.767 0.753 0.752 5139 5039 5089.5

EBGWO (Ours)

0.85 0.8 0.827 1020 564 710.3

CNS

BGWO1

0.783 0.750 0.760 4942 4217 4606.4

BGWO2

0.800 0.750 0.780 2502 1842 2175.8

BPSO 0.767 0.733 0.737 3502 3486 3487.6

BDE 0.693 0.663 0.683 3530 3478 3521.9

BGA 0.727 0.707 0.717 3528 3428 3501.7

EBGWO (Ours)

1.000 0.987 0.997 534 333 426.7

DLBCL

BGWO1

0.987 0.961 0.971 3706 2826 3343.4

BGWO2

1.000

0.948 0.986 1700 1002 1408.3

BPSO 0.919 0.891 0.901 2703 2672 2675.1

BDE 0.885 0.869 0.882 2732 2687 2721.4

BGA 0.906 0.883 0.896 2709 2699 2685.1

EBGWO (Ours)

0.931 0.889 0.903 913 524 649.8

Leukemia

BGWO1

0.861 0.833 0.849 5065 3897 4428.5

BGWO2

0.889 0.847 0.874 2141 1618 1805.5

BPSO 0.828 0.809 0.814 3516 3505 3514.9

BDE 0.782 0.751 0.784 3537 3527 3531.2

BGA 0.801 0.782 0.792 3501 3461 3481.8

EBGWO (Ours)

0.935 0.903 0.919 220 103 143.4

Colon

BGWO1

0.887 0.855 0.865 1316 1096 1189.4

BGWO2

0.919 0.871 0.900 622 351 455.2

BPSO 0.849 0.829 0.839 986 931 936.5

BDE 0.810 0.780 0.794 995 955 965.3

BGA 0.881 0.875 0.878 990 984 987.3

EBGWO (Ours)

0.985 0.970 0.977 1148 781 1005.5

Lung Cancer

BGWO1

0.966 0.941 0.951 7598 6621 7211

BGWO2

0.975 0.956 0.966 2672 2167 2413.2

BPSO 0.936 0.931 0.935 6196 6179 6180.7

BDE 0.931 0.921 0.924 6256 6218 6226.8

BGA 0.952 0.939 0.945 6235 6214 6218.2

Values in bold represent the best result and values in italic denote the worst in each column, respectively.

An Excited Binary Grey Wolf Optimizer for Feature Selection in Highly Dimensional Datasets

131

iii) 𝑀𝑖𝑛_𝐴𝑐𝑐 Minimum Accuracy value

obtained when a given algorithm is repeated

for 10 independent runs.

iv) 𝐴𝑣𝑔_𝐴𝑐𝑐: Is the average of all the accuracy

values obtained when a given algorithm is

repeated for 10 independent runs.

v) 𝑀𝑎𝑥_𝑁𝑓𝑒𝑎𝑡: Is the Maximum number of

features reported by a given algorithm

during the 10 independent runs.

vi) 𝑀𝑖𝑛_𝑁𝑓𝑒𝑎𝑡: Is the Minimum number of

features reported by a given algorithm

during the 10 independent runs.

vii) 𝐴𝑣𝑔_𝑁𝑓𝑒𝑎𝑡 Is the average of all the

number of features reported by a given

algorithm during the 10 independent runs.

viii) Dataset: Captures the datasets utilized for

experimentation as articulated in Table 1.

It is important to point out that the best result

achieved in each column for all the considered gene

expression datasets is highlighted in bold while the

worst is italicized.

Concerning the classification accuracy, as

presented in Table 3, the proposed EBGWO

algorithm outperformed all the competing when the

fitness function (refer to Equation 24) was adopted.

Concerning the selection of the informative subset

of genes, again the proposed EBGWO identified

subsets with the least number of features to achieve

the highest classification accuracy for all the seven

highly dimensional microarray datasets considered in

this paper.

6 CONCLUSION AND FUTURE

WORKS

An excited grey wolf optimizer (EGWO) is proposed

in this paper. In the proposed algorithm, the concept

of the complete current response of a direct current

(DC) excited resistor capacitor (RC) circuit are

innovatively utilized to make the non-linear control

strategy of parameter 𝑎 of the GWO adaptive. Since

this scheme allocates a large proportion of the number

of iterations to global exploration compared to local

exploitation, the convergence speed of the proposed

EGWO algorithm is enhanced while minimizing the

local minima trapping effect. Moreover, since the

proposed scheme assigns each wolf a value of

parameter 𝑎 that is proportional its fitness values in

both the search space and the current iteration

(generation), diversity and the quality of the solutions

is improved as well.

To overcome premature converge (a limitation of

existing versions of GWO algorithms) and still

maintain the social hierarchy of the pack, a new

position-updated equation utilizing the fitness values

of vectors 𝑋

⃗

, 𝑋

⃗

and 𝑋

⃗

is proposed in determining

new candidate individuals.

As a feature selector, EBGWO is compared with

five metaheuristic algorithms i.e. BGWO1, BGWO2,

BPSO, BDE and BGA that are in existence. The

obtained experimental results revealed that EBGWO

yielded the best performance and overtook the other

algorithms. EBGWO not only attained the highest

classification accuracy, but also selected subsets with

the least number of informative features (genes). In

conclusion, the proposed EBGWO is successful, and

more appropriate to be used as a feature selector in

highly dimensional datasets. For further works, a

chaotic map can be adopted to fine-tune the

parameters of the EBGWO. Utilizing EBGWO as a

hybrid filter-wrapper for feature selection seeking to

evaluate the generality of the selected attributes will

be another valuable contribution. Moreover, EGWO

will be applied to other optimization areas, such as

training neural network, knapsack, and numerical

problems.

REFERENCES

Aghdam, M. H., Ghasem-Aghaee, N., & Basiri, M. E.

(2009). Text feature selection using ant colony

optimization. Expert Systems with Applications, 36(3,

Part 2), 6843–6853. https://doi.org/10.1016/j.eswa.

2008.08.022

Alexander, C., & Sadiku, M. (2016). Fundamentals of

Electric Circuits. https://www.mheducation.com/

highered/product/fundamentals-electric-circuits-

alexander-sadiku/M9780078028229.html

Almugren, N., & Alshamlan, H. (2019). A Survey on

Hybrid Feature Selection Methods in Microarray Gene

Expression Data for Cancer Classification. IEEE

Access, 7, 78533–78548. https://doi.org/10.1109/

ACCESS.2019.2922987

Arlot, S., & Celisse, A. (2010). A survey of cross-validation

procedures for model selection. Statistics Surveys, 4,

40–79. https://doi.org/10.1214/09-SS054

De Stefano, C., Fontanella, F., Marrocco, C., & Scotto di

Freca, A. (2014). A GA-based feature selection

approach with an application to handwritten character

recognition. Pattern Recognition Letters, 35, 130–141.

https://doi.org/10.1016/j.patrec.2013.01.026

El-Gaafary, A. A. M., Mohamed, Y. S., Hemeida, A. M., &

Mohamed, A.-A. A. (2015). Grey Wolf Optimization

for Multi Input Multi Output System. Universal

Journal of Communications and Network, 3(1), 1–6.

https://doi.org/10.13189/ujcn.2015.030101

ICINCO 2020 - 17th International Conference on Informatics in Control, Automation and Robotics

132

Emary, E., Zawbaa, H. M., & Hassanien, A. E. (2016).

Binary grey wolf optimization approaches for feature

selection. Neurocomputing, 172, 371–381.

https://doi.org/10.1016/j.neucom.2015.06.083

Long, W. (2016, December). Grey Wolf Optimizer based

on Nonlinear Adjustment Control Parameter. 2016 4th

International Conference on Sensors, Mechatronics

and Automation (ICSMA 2016). https://doi.org/10.

2991/icsma-16.2016.111

Long, W., Jiao, J., Liang, X., & Tang, M. (2018). An

exploration-enhanced grey wolf optimizer to solve

high-dimensional numerical optimization. Engineering

Applications of Artificial Intelligence, 68, 63–80.

https://doi.org/10.1016/j.engappai.2017.10.024

Luo, J., Wang, Q., & Xiao, X. (2013). A modified artificial

bee colony algorithm based on converge-onlookers

approach for global optimization. Applied Mathematics

and Computation, 219(20), 10253–10262. https://

doi.org/10.1016/j.amc.2013.04.001

Mirjalili, S., Mirjalili, S. M., & Lewis, A. (2014). Grey

Wolf Optimizer. Advances in Engineering Software,

69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.

12.007

Mittal, N., Singh, U., & Sohi, B. S. (2016). Modified Grey

Wolf Optimizer for Global Engineering Optimization

[Research Article]. Applied Computational Intelligence

and Soft Computing. https://doi.org/10.1155/2016/

7950348

Pirgazi, J., Alimoradi, M., Esmaeili Abharian, T., &

Olyaee, M. H. (2019). An Efficient hybrid filter-

wrapper metaheuristic-based gene selection method for

high dimensional datasets. Scientific Reports, 9(1),

18580. https://doi.org/10.1038/s41598-019-54987-1

Salesi, S., & Cosma, G. (2017). A novel extended binary

cuckoo search algorithm for feature selection. 2017 2nd

International Conference on Knowledge Engineering

and Applications (ICKEA), 6–12. https://doi.org/

10.1109/ICKEA.2017.8169893

Shunmugapriya, P., & Kanmani, S. (2017). A hybrid

algorithm using ant and bee colony optimization for

feature selection and classification (AC-ABC Hybrid).

Swarm Evol. Comput., 36. https://doi.org/10.1016/

j.swevo.2017.04.002

Sun, Y., Lu, C., & Li, X. (2018). The Cross-Entropy Based

Multi-Filter Ensemble Method for Gene Selection.

Genes, 9(5). https://doi.org/10.3390/genes9050258

Too, J., Abdullah, A. R., Mohd Saad, N., Mohd Ali, N., &

Tee, W. (2018). A New Competitive Binary Grey Wolf

Optimizer to Solve the Feature Selection Problem in

EMG Signals Classification. Computers, 7(4), 58.

https://doi.org/10.3390/computers7040058

Too, J., Abdullah, A. R., Mohd Saad, N., & Tee, W. (2019).

EMG Feature Selection and Classification Using a

Pbest-Guide Binary Particle Swarm Optimization.

Computation, 7(1), 12. https://doi.org/10.3390/

computation7010012

Tu, Q., Chen, X., & Liu, X. (2019). Hierarchy Strengthened

Grey Wolf Optimizer for Numerical Optimization and

Feature Selection. IEEE Access, 7, 78012–78028.

https://doi.org/10.1109/ACCESS.2019.2921793.

Zorarpacı, E., & Özel, S. A. (2016). A hybrid approach of

differential evolution and artificial bee colony for

feature selection. Expert Systems with Applications, 62,

91–103. https://doi.org/10.1016/j.eswa.2016.06.004.

An Excited Binary Grey Wolf Optimizer for Feature Selection in Highly Dimensional Datasets

133