Using Mixed Reality as a Tool to Assist Collaborative Activities in Industrial Context

Breno Kellerᵃ, Andrea G. Campos Bianchiᵇ and Saul Delabridaᶜ

Department of Computing, Federal University of Ouro Preto, Brazil

Keywords:

Industrial Inspection, User Study, Mixed Reality, Collaborative Activities, Industry 4.0.

Abstract:

The transition from Industry 3.0 to 4.0 creates the need to develop interconnected systems, as well as new interfaces for human-computer interaction with these systems, since it is not yet possible or allowed to fully automate these processes in all industrial contexts. Therefore, new technologies should be designed for workers’ interaction with the new systems of Industry 4.0. Mixed Reality (MR) is an alternative for the inclusion of workers, as it allows their perception to be augmented with information from the industrial environment. Consequently, the use of MR resources as a tool for performing collaborative activities in an industrial context is promising. This work aims to analyze how this strategy has been applied in the industrial context and to discuss its advantages, disadvantages, and the characteristics that impact the performance of workers in Industry 4.0.

1 INTRODUCTION

The Fourth Industrial Revolution, or Industry 4.0, defines a new level of organization and control over the production chain aimed at meeting increasingly specific customer demands (Rüßmann et al., 2015). This process occurs through the integration of equipment and systems using technologies such as the Internet of Things (IoT) and machine learning. Visualization technologies like Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR) are promising for making information about the industrial park available to industry operators and managers, in particular as an interface that provides information and supports decision making.

The availability of wearable mixed reality devices allows the development of advanced interfaces for interaction with industrial equipment, as well as for collaboration with other human operators. This supports the construction of new computational interfaces to perform collaborative activities in the industrial context. Mixed Reality can be defined as the combination of virtual elements with the real world at different levels, encompassing technologies such as Augmented Reality and Augmented Virtuality (Milgram and Colquhoun, 1999). The Google Glass¹, the Microsoft Hololens², the Gear VR³ and the HTC Vive⁴ are examples of well-known MR devices.

ᵃ https://orcid.org/0000-0001-5414-6716
ᵇ https://orcid.org/0000-0001-7949-1188
ᶜ https://orcid.org/0000-0002-8961-5313

This work focuses on collaborative activities in an industrial context. Collaborative activities can be defined as joint work between two or more actors to perform an activity (Graham and Barter, 1999), where the actors can be any combination of humans and machines involved in activities along the production chain. However, at the current stage of industry, there are still contexts where complete automation, that is, machines alone operating in an integrated manner to perform an activity, is not possible. In these contexts, human intervention as part of the collaboration can be essential for the proper execution of activities.

The application of MR devices in industry requires evaluation from the perspective of HCI (Human-Computer Interaction) due to the novelty of these technologies, their interaction model, and their applicability.

¹ More information about the device at: bit.ly/2uiMf8L, accessed on 02/20/2020.
² More information about the device at: bit.ly/2T2EsEB, accessed on 02/20/2020.
³ More information about the device at: bit.ly/2SN1qjW, accessed on 02/20/2020.
⁴ More information about the device at: bit.ly/32gFalG, accessed on 02/20/2020.

Keller, B., Bianchi, A. and Delabrida, S.
Using Mixed Reality as a Tool to Assist Collaborative Activities in Industrial Context.
DOI: 10.5220/0009792405990605
In Proceedings of the 22nd International Conference on Enterprise Information Systems (ICEIS 2020) - Volume 2, pages 599-605
ISBN: 978-989-758-423-7
Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This paper discusses how the use of Mixed Reality can enhance collaborative activities in Industry 4.0. Our methodology combines theoretical reasoning, prototype development, and an analysis of mixed reality technologies for collaboration. We hope that the discussions and contributions presented in this paper will serve as a basis for further research and for practitioners working towards the integration of humans into the processes of Industry 4.0.

This document is structured as follows: Section 2 presents the concepts related to this work and some tools that apply them. Section 3 discusses aspects of the use of handheld and hands-free devices. Section 4 presents a proof of concept of the proposed solution for collaborative activities in the industrial context. Section 5 discusses the positive and negative points of the proposed approach. Finally, Section 6 presents the conclusions of this work.

2 BACKGROUND

This section presents the concepts of mixed reality and collaboration. It then gives an overview of mixed reality technologies that can be used as tools to perform collaborative activities in the industrial context.

2.1 Mixed Reality

There is no consensus in the research community on the concept of Mixed Reality (MR). Some researchers understand MR as a broad classification that contains different technologies, such as Augmented Reality (AR), Virtual Reality (VR), and Augmented Virtuality (AV). In our work, we adopt the definition of Milgram and Colquhoun (Milgram and Colquhoun, 1999), who characterize MR by features such as the degree of virtuality and the level of modeling used. The authors also define a spectrum in which the Real Environment (RE) and the Virtual Environment (VE) are not two distinct entities, but two opposite poles of the Reality-Virtuality Spectrum. This spectrum is similar to the Extent of World Knowledge (EWK) Continuum, which describes the degree of modeling that a system has of a given world, in other words, how much the system knows about the rules that describe that world. At one end of the EWK continuum, the system knows nothing about how the world is modeled; at the other end, it knows the complete model that describes the world. Figure 1 shows the relationship between these two spectra, where the real world is a world with no known modeling and the virtual world is a world with fully known modeling.

[Diagram: the Reality-Virtuality Spectrum runs from the Real Environment (RE) to the Virtual Environment (VE); aligned with it, the Extent of World Knowledge Continuum runs from the Non Modeled World through the Partially Modeled World to the Modeled World.]

Figure 1: Reality-Virtuality Spectrum and its relationship with the Extent of World Knowledge Continuum, adapted from (Milgram and Colquhoun, 1999).

Between the RE and VE extremes of the Reality-Virtuality Spectrum lies the partially modeled world, where technologies such as Augmented Reality (AR) and Augmented Virtuality (AV) are located. The difference between these two technologies is the degree of virtuality of the world they work with. Figure 2 shows how these technologies are distributed along the spectrum, whose Mixed Reality region contains the technologies that have some degree of virtuality, such as AR and AV.

[Diagram: along the spectrum from the Real Environment (RE) to the Virtual Environment (VE) lie Augmented Reality (AR) and Augmented Virtuality (AV), which together make up Mixed Reality (MR).]

Figure 2: Reality-Virtuality Spectrum and its technologies, adapted from (Milgram and Colquhoun, 1999).

Although MR is composed of AR and AV technologies, the boundary between them is blurred, because both combine the virtual and the real at different levels. However, we can say that AR extends the real world with virtual resources, while AV extends the virtual world with real-world resources (Milgram and Colquhoun, 1999). In other words, the closer a technology is to one extreme of the spectrum, the greater the difference between an AR and an AV technology.
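To make the continuum concrete, the following Python sketch places a display on the Reality-Virtuality Spectrum. It assumes a hypothetical scalar `virtual_fraction` in [0, 1] describing how much of the presented world is virtual; Milgram and Colquhoun do not define such a number, and the 0.5 split between AR and AV is an arbitrary illustrative threshold, which is precisely where the blurred boundary shows.

```python
from enum import Enum


class Region(Enum):
    """Regions of the Reality-Virtuality Spectrum (Milgram and Colquhoun, 1999)."""
    REAL_ENVIRONMENT = "RE"
    AUGMENTED_REALITY = "AR"
    AUGMENTED_VIRTUALITY = "AV"
    VIRTUAL_ENVIRONMENT = "VE"


# Hypothetical threshold: the AR/AV boundary is blurred, so any cut-off is arbitrary.
AR_AV_BOUNDARY = 0.5


def classify(virtual_fraction: float) -> Region:
    """Place a display on the spectrum from a hypothetical degree of virtuality in [0, 1]."""
    if not 0.0 <= virtual_fraction <= 1.0:
        raise ValueError("virtual_fraction must lie in [0, 1]")
    if virtual_fraction == 0.0:
        return Region.REAL_ENVIRONMENT      # nothing virtual: the real pole
    if virtual_fraction == 1.0:
        return Region.VIRTUAL_ENVIRONMENT   # fully modeled world: the virtual pole
    if virtual_fraction < AR_AV_BOUNDARY:
        return Region.AUGMENTED_REALITY     # real world extended with virtual resources
    return Region.AUGMENTED_VIRTUALITY      # virtual world extended with real resources


def is_mixed_reality(virtual_fraction: float) -> bool:
    """MR covers everything strictly between the two poles."""
    return classify(virtual_fraction) in (Region.AUGMENTED_REALITY,
                                          Region.AUGMENTED_VIRTUALITY)


print(classify(0.2).value, classify(0.8).value, is_mixed_reality(1.0))  # AR AV False
```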

2.2 Collaboration

Graham and Barter (Graham and Barter, 1999) define collaboration as a relationship in which two or more stakeholders pool resources to achieve goals that neither party could achieve alone. Some terms have a similar meaning, such as (i) partnership, which Rodal and Mulder (Rodal and Mulder, 1993) define as an agreement between two or more parties that have agreed to work cooperatively to achieve shared and/or compatible objectives; and (ii) cooperation, which Hord (Hord, 1986) defines as aid towards the achievement of the specific goals of each party involved. These concepts differ from collaboration in the nature of the task objectives: in collaboration, the objectives are shared and common to the stakeholders, whereas in cooperation and partnership they may be individual or not common to the stakeholders (Graham and Barter, 1999).

In the industrial context, a collaborative activity is

a partnership between a Actuator and a Consultant

to achieve a common goal, which can be an inspec-

tion, the maintenance of equipment or any other task

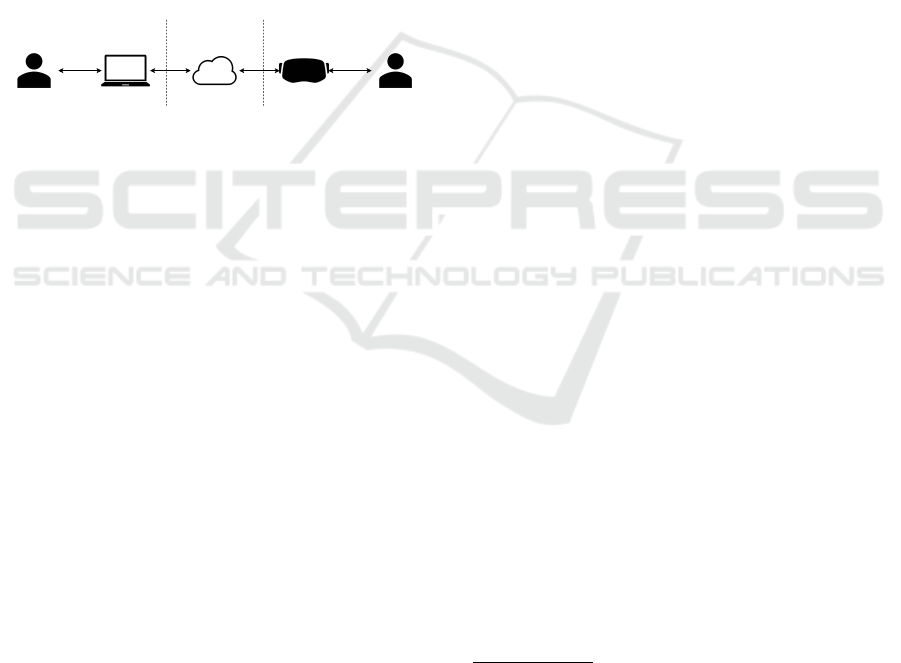

in that context. Figure 3 exemplifies how the interac-

tion between the parties can be assisted by technol-

ogy in this context. In this case, Actuator interacts

with the process location and interacts with Consul-

tant via a MR device while Consultant interacts with

Actuator through a desktop.

[Diagram: the Consultant in the Office (VA) and the Actuator in the Task Environment (AR) communicate through a Communication Layer.]

Figure 3: General Structure of Technology-Assisted Collaboration.

As defined by Graham and Barter (Graham and Barter, 1999), collaboration is a relationship between parties with a common goal. In the industrial context, these parties can be people or machines, which yields three possible collaboration scenarios:

• Human - Human Collaboration: two or more people interact to perform an activity through communication mechanisms;

• Human - Machine Collaboration: one or more people interact with one or more pieces of electronic equipment to perform an activity through communication and interaction mechanisms between them;

• Machine - Machine Collaboration: two or more machines interact with each other to perform an activity using machine-to-machine communication protocols.

Machine-machine collaboration describes a fully automated environment, where equipment operates in an integrated manner, independently of human interaction, to perform its activities. However, some processes in the industrial context still lack known mechanisms for complete automation. One example is the piloting of UAVs (Unmanned Aerial Vehicles): in some countries, current legislation does not allow fully autonomous flights (Nascimento et al., 2017). In these contexts, activities must therefore be carried out through collaboration between people or between people and machines.

In the scenario shown in Figure 3, the roles of Consultant and Actuator can be performed by either a person or a machine.
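Because the three scenarios depend only on which kinds of party take part, they can be expressed as a small classification. The Python sketch below is illustrative only; the `Party` type, its `kind` field, and the party names are hypothetical and do not come from any system described here. As in Figure 3, either role may be filled by a person or a machine.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Kind(Enum):
    HUMAN = "human"
    MACHINE = "machine"


@dataclass(frozen=True)
class Party:
    """A hypothetical participant in a collaborative activity."""
    name: str
    kind: Kind


def scenario(parties: List[Party]) -> str:
    """Return the collaboration scenario formed by the given parties."""
    if len(parties) < 2:
        raise ValueError("collaboration requires at least two parties")
    kinds = {p.kind for p in parties}
    if kinds == {Kind.HUMAN}:
        return "human-human"
    if kinds == {Kind.MACHINE}:
        return "machine-machine"  # complete automation, no human in the loop
    return "human-machine"        # at least one person and one machine


consultant = Party("Consultant", Kind.HUMAN)
print(scenario([consultant, Party("Actuator", Kind.HUMAN)]))  # human-human
print(scenario([consultant, Party("UAV", Kind.MACHINE)]))     # human-machine
```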

2.3 Available MR Tools for Collaboration

To achieve the interaction shown in Figure 3, tools with MR capabilities are necessary. This section describes examples of technologies available for collaborating through mixed reality.

Some tools are currently available on the market, such as Vuforia Chalk⁵, an application for remote assistance that allows a remote user to interact with a local user through mobile devices. The application allows users to make a video call and insert 3D elements into the scene, which are tracked by mapping the environment. In this mapping, markings drawn on the screen canvas are mapped to three-dimensional positions in the real world, so that if the local user moves, the markings remain in the correct position. On smartphones, the accelerometer and gyroscope are used to estimate this mapping.
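As a rough illustration of why gyroscope data helps keep a marking in place, the Python sketch below integrates gyroscope yaw-rate samples to estimate the phone’s heading, then uses a pinhole camera model to compute where a marking anchored at a fixed world bearing should appear horizontally on screen. This is not how Vuforia Chalk is implemented; it assumes rotation about the vertical axis only, ignores translation and gyroscope drift (which practical trackers typically correct by fusing other sensors), and the focal length and screen center are hypothetical values.

```python
import math
from typing import Iterable, Optional


def integrate_yaw(yaw_rates: Iterable[float], dt: float, yaw0: float = 0.0) -> float:
    """Dead-reckon the heading (rad) by summing gyroscope yaw-rate samples (rad/s)."""
    yaw = yaw0
    for rate in yaw_rates:
        yaw += rate * dt
    return yaw


def marking_screen_x(anchor_bearing: float, device_yaw: float,
                     focal_px: float, center_x: float) -> Optional[float]:
    """Horizontal pixel position of a world-anchored marking, or None if behind the camera.

    Assumed convention: yaw increases when the phone turns right,
    and screen x increases to the right.
    """
    delta = anchor_bearing - device_yaw
    rel = math.atan2(math.sin(delta), math.cos(delta))  # wrap to (-pi, pi]
    if abs(rel) >= math.pi / 2:
        return None  # marking is behind the camera
    return center_x + focal_px * math.tan(rel)


# The marking was drawn at the screen center while the heading was 0 rad.
anchor = 0.0
# The user then turns right at 0.1 rad/s for one second (10 samples at 10 Hz).
yaw = integrate_yaw([0.1] * 10, dt=0.1)
x = marking_screen_x(anchor, yaw, focal_px=1000.0, center_x=640.0)
print(round(x))  # 540: the marking shifts left on screen, staying fixed in the world
```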

TeamViewer Pilot⁶ is an AR solution similar to Vuforia Chalk, but developed by TeamViewer⁷. Unlike Vuforia Chalk, it does not require two mobile devices: only the local user needs a mobile device, since the remote user can interact through the TeamViewer tool on a computer.

Remote Assist⁸ for Microsoft Hololens demonstrates another interaction model. It offers an interaction similar to the applications already described, that is, it provides three-dimensional visual resources for the interaction. However, the local user uses an HMD (Head Mounted Display) to capture, transmit, and display data while using the equipment.

⁵ More information at: bit.ly/2VbLARC, accessed on 02/19/2020.
⁶ More information at: bit.ly/2SLTAai, accessed on 02/19/2020.
⁷ More information at: bit.ly/2SPqaIq, accessed on 02/21/2020.
⁸ More information at: bit.ly/3bXifAd, accessed on 02/19/2020.


3 HANDHELD AND HANDS-FREE TECHNOLOGIES

As described in Section 2, MR is a concept, and there are several ways to implement it. In general, two types of equipment offer MR features: handheld devices, such as smartphones, and hands-free devices, such as HMDs. Handheld devices are those that users must hold in their hands to interact with. The most common handheld devices are smartphones and tablets with a built-in camera that captures data from the real environment. Hands-free devices are those that can be attached to the human body, leaving the operator’s hands free. In general, HMDs are wearable equipment such as helmets and glasses that offer a view of the real world through screens or optical lenses.

Each type of device has its own pros and cons. In a general assessment, hands-free devices give the user greater freedom of movement, since the user’s hands are free to interact with the environment. However, this type of equipment is usually uncomfortable and presents a complex interaction model, since it does not use any widely known standard (keyboard or touchscreen). An example is the interaction model of Microsoft Hololens, which uses a limited set of gestures. Other devices, like Google Glass, use trackpads that can be built into their frame or provided as an extension of the equipment, as in the case of Epson Moverio⁹. In this case, however, at least one of the user’s hands is occupied.

Handheld devices do not offer this freedom to the user’s hands. However, since they are generally devices such as smartphones and tablets, users are already accustomed to this equipment, so interacting with it and with the system is more straightforward than with hands-free devices. Thus, it is possible to build more complex interaction models for these devices, since users already have some proficiency with this interaction model.

4 PROOF OF CONCEPT

Based on the technologies and concepts presented in Sections 2 and 3, this work presents a proof of concept of a tool to aid collaboration in MR. The development of this proof of concept has two objectives: (i) to enable discussion of collaboration aspects using hands-free mixed reality and (ii) to use the developed tool for experiments from the HCI perspective. Although the latter is not the focus of this paper, we consider it future work.

⁹ More information about the device at: bit.ly/2v5Zave, accessed on 02/21/2020.

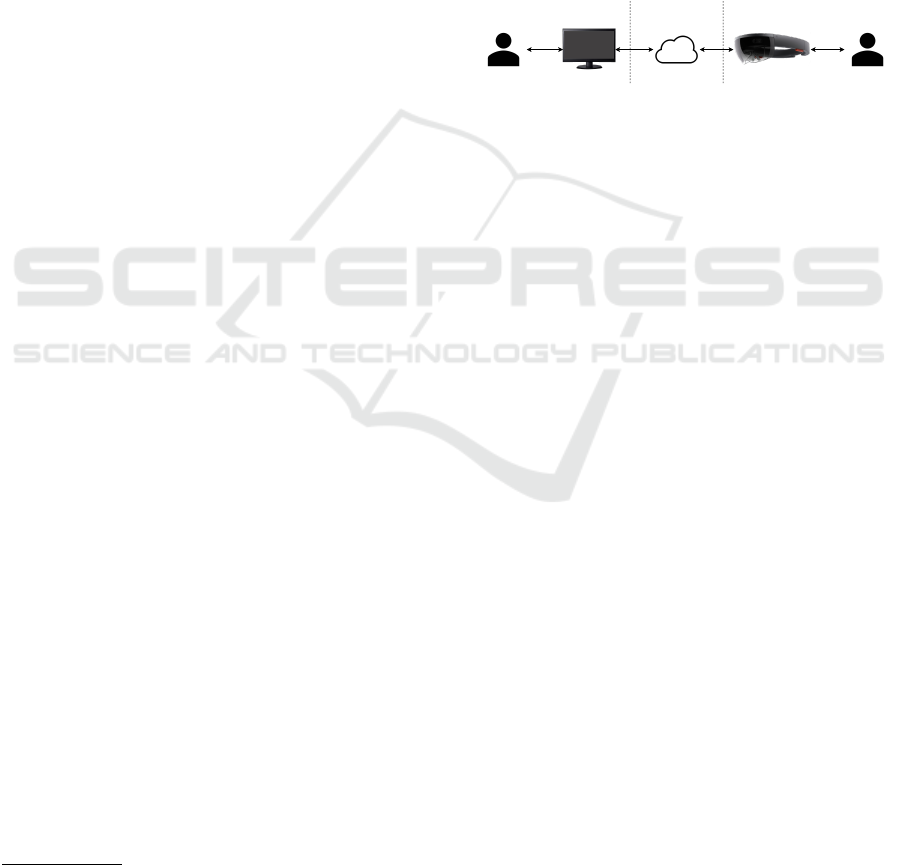

Figure 4 shows the architecture of this tool. It consists of two applications. The first runs on Microsoft Hololens, and its primary function is to provide an interface for drawing and communication in AR; we refer to it as HoloDraw in the rest of this text. The second application receives the stream from the user in the field; it acts as an AV application that displays the HoloDraw camera capture and allows interaction through this stream. Each application is described in the sections below.

[Diagram: the Actuator uses HoloDraw in the Task Environment and the Consultant uses HoloDraw Device in the Office; both communicate through a Communication Layer.]

Figure 4: Apps Communication Structure.

4.1 HoloDraw

The application developed to run on Microsoft Hololens was named HoloDraw and was developed using the Unity environment configured for UWP (Universal Windows Platform) applications. The application has a simple HUD interface that presents all the necessary information in the user’s view. Moreover, this interface does not overburden the user with complex interactions, since the equipment relies on gesture recognition, to which users are generally not accustomed. A simple HUD interface is therefore needed, because complex interactions would make the equipment harder for the user to manipulate.

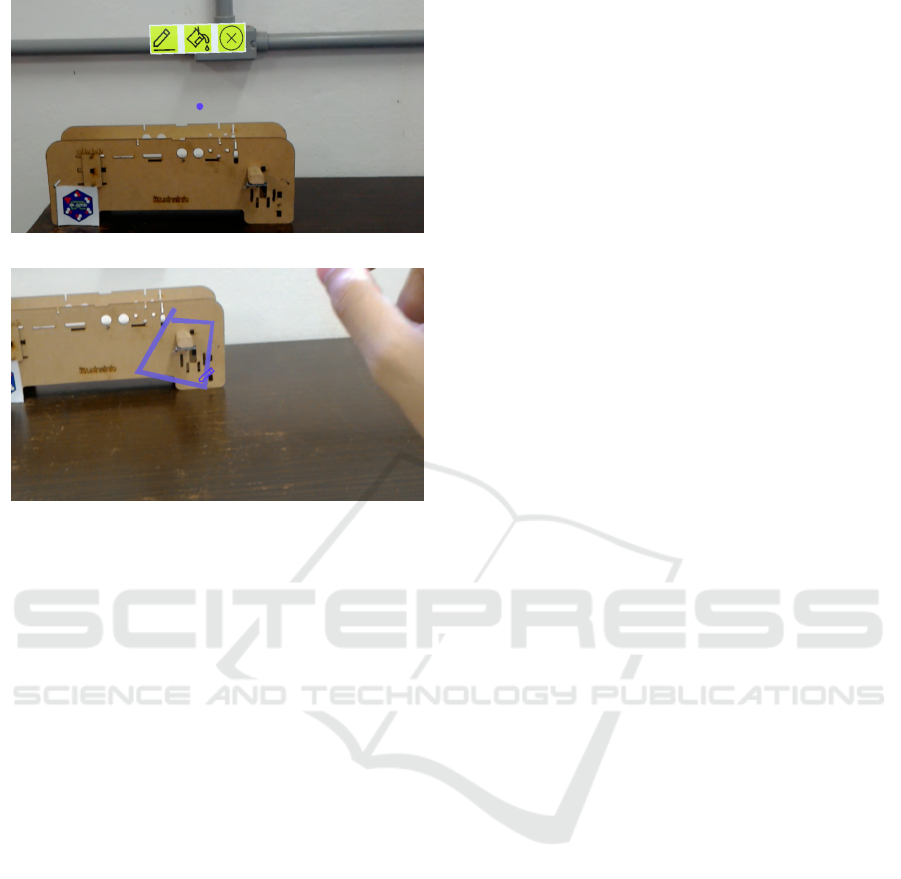

Figure 5a shows the application’s base interface. These images were captured with the Microsoft Hololens camera, so they may show some distortion relative to the actual view: the virtual elements are treated as fixed elements in 3D space, so a change in the user’s position results in a different view of them.

The tool has an action bar with three options (from left to right): draw, recolor, and end line. A focus indicator is also placed at the center of the interface. This indicator marks the center of the application camera and serves as a guide to where the point will be drawn.
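The interplay between the three action-bar options and the focus indicator can be sketched as a small state machine. The Python below is a hypothetical model, not HoloDraw’s Unity code: it assumes that "draw" places a point at a fixed distance (2 m, an arbitrary value) along the gaze ray through the focus indicator, that "recolor" cycles through a hypothetical palette and applies to the line being drawn, and that "end line" closes the current line so the next "draw" starts a new one.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Line:
    color: str
    points: List[Vec3] = field(default_factory=list)


@dataclass
class DrawingSession:
    """Hypothetical model of the draw / recolor / end-line action bar."""
    palette: Tuple[str, ...] = ("red", "green", "blue")  # assumed colors
    draw_distance: float = 2.0  # assumed distance (m) along the gaze ray
    color_index: int = 0
    current: Optional[Line] = None
    finished: List[Line] = field(default_factory=list)

    def draw(self, head: Vec3, gaze: Vec3) -> Vec3:
        """Add a point where the focus indicator (center of view) is aimed."""
        norm = sum(c * c for c in gaze) ** 0.5
        point = tuple(h + self.draw_distance * g / norm for h, g in zip(head, gaze))
        if self.current is None:  # first point after "end line" starts a new line
            self.current = Line(color=self.palette[self.color_index])
        self.current.points.append(point)
        return point

    def recolor(self) -> str:
        """Switch to the next palette color, applying it to the line in progress."""
        self.color_index = (self.color_index + 1) % len(self.palette)
        if self.current is not None:
            self.current.color = self.palette[self.color_index]
        return self.palette[self.color_index]

    def end_line(self) -> None:
        """Close the current line so the next draw starts a new one."""
        if self.current is not None:
            self.finished.append(self.current)
        self.current = None


session = DrawingSession()
session.draw(head=(0.0, 1.6, 0.0), gaze=(0.0, 0.0, 1.0))
session.recolor()
session.draw(head=(0.0, 1.6, 0.0), gaze=(1.0, 0.0, 1.0))
session.end_line()
print(len(session.finished), session.finished[0].color)  # 1 green
```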

Figure 5b shows an example of lines drawn by the user on a belt conveyor using the functions available in the application (draw, recolor, end). Finally, the video available at bit.ly/2QL65BG presents a complete example of using HoloDraw, demonstrating how the application works.

Figure 5: HoloDraw. (a) Base App Interface; (b) Highlighting a Region.

4.2 HoloDraw Device

The HoloDraw Device software was developed using the Unity environment configured for Windows OS applications. Like HoloDraw, this application was designed to have few elements in the user’s field of view, since all the necessary information is generated in the local user’s view and shown through the generated stream. In this way, the remote user can see what the local user is doing and assist them. Thus, the view of the remote user is similar to that of the local user, as shown in Figure 5.

5 DISCUSSION

This section discusses some topics related to the use of MR in collaborative activities in an industrial context, based on the established concepts and on the development of the proof of concept presented in Section 4.

5.1 MR Devices for Work Safety

The use of handheld or hands-free devices for MR interaction can affect workplace safety, since introducing a new element into the process requires that this element not pose any new risk to the user. However, it is not clear whether the devices used for this purpose comply with international and local safety standards. Thus, it is necessary to develop or adapt these devices so that they can be classified as Personal Protective Equipment (PPE) without creating new risks for the user.

Another factor to be observed in the use of hands-free devices is the type of see-through display (Azuma, 1997) the equipment presents. With a video see-through display, an equipment failure leaves the user completely blind in a hazardous environment. Therefore, for the Actuator context (as shown in Figure 3), it is safer to use an optical see-through device to avoid this type of problem.

5.2 Interaction Mediated by MR Devices

The implementation of these solutions also affects the user’s ability to solve problems. These devices can make users more efficient, because they reduce the ambiguity of oral communication and offer better resources for interaction. Reddy et al. (Reddy et al., 2015) demonstrate that using a shared 3D model of a car reduces the problems caused by the ambiguity of verbal communication when carrying out an inspection task for a car insurance claim.

Furthermore, these solutions must be analyzed beyond their quantitative gains, since, besides bringing benefits, they must also be well accepted by their users. Works such as Datcu et al., Orts-Escolano et al., and Kim et al. (Datcu et al., 2013; Orts-Escolano et al., 2016; Kim et al., 2019) use different forms of interaction between their users. However, most of these interaction models differ from the usual ones (keyboards or touchscreens). Therefore, these models need to be evaluated from the user’s perspective, to ensure that the user has a good experience and to reduce the impact of introducing these tools into the activity being performed.

Different evaluation methods can be used for this purpose, as demonstrated in the works of Datcu et al., Orts-Escolano et al., and Kim et al. (Datcu et al., 2013; Orts-Escolano et al., 2016; Kim et al., 2019), which use techniques such as the System Usability Scale (SUS), the Subjective Mental Effort Questionnaire (SMEQ), Co-presence, the NASA Task Load Index (NASA-TLX), and the AR Questionnaire.
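Two of the instruments named above have simple, standard scoring rules. The Python sketch below implements the usual SUS scoring, in which each of the ten items is answered on a 1 to 5 scale, odd items contribute (response - 1), even items contribute (5 - response), and the sum is multiplied by 2.5 to give a score from 0 to 100. It also computes the unweighted "Raw TLX" variant of NASA-TLX, the mean of the six subscale ratings on a 0 to 100 scale; the weighted NASA-TLX, which uses pairwise-comparison weights, is not shown. The function names and subscale keys are our own choices.

```python
from typing import Dict, Sequence

TLX_SUBSCALES = ("mental", "physical", "temporal",
                 "performance", "effort", "frustration")


def sus_score(responses: Sequence[int]) -> float:
    """System Usability Scale score (0-100) from ten 1-5 Likert responses."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS responses must be integers from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


def raw_tlx(ratings: Dict[str, float]) -> float:
    """Unweighted (Raw) NASA-TLX: mean of the six subscale ratings (0-100)."""
    if set(ratings) != set(TLX_SUBSCALES):
        raise ValueError(f"expected ratings for {TLX_SUBSCALES}")
    return sum(ratings[s] for s in TLX_SUBSCALES) / len(TLX_SUBSCALES)


print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))  # 85.0
print(raw_tlx({"mental": 60, "physical": 20, "temporal": 40,
               "performance": 30, "effort": 50, "frustration": 40}))  # 40.0
```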


5.3 MR System Development

The popularization and diversification of MR devices and technologies in recent years have led to the development of several AR SDKs (Software Development Kits) and development environments to meet this demand. These technologies can be divided into three groups: (i) Android-based systems, such as applications for Epson Moverio; (ii) systems based on AR SDKs, such as applications developed with Vuforia or ARToolKit; and (iii) other system architectures, such as applications for Microsoft Hololens.

However, even with this variety of technologies, development environments such as Unity can be adjusted to work with them, for example by importing into the Unity framework an SDK or an application parser for the target architecture. Some works in the literature (Piumsomboon et al., 2019; Kim et al., 2019; De Pace et al., 2019; Choi et al., 2018) use Unity to generate applications for Microsoft Hololens, Oculus Rift, smartphones, notebooks, and other types of HMDs.

Although Unity’s cross-platform behavior brings some advantages, unifying these technologies in a single environment also brings problems. At the development level, a collaborative application that needs to run on two different platforms can present very different levels of difficulty on each of them. For example, the proof of concept presented in Section 4 comprises two applications, one for Microsoft Hololens, built with Unity for the UWP platform, and one for the Windows platform. Even though both devices run on similar Windows architectures, the applications are different and raise several compatibility problems, caused either by specific equipment characteristics or by the adaptation that Unity performs to export these applications.

It is also necessary to consider that Unity was originally designed for game development, which makes the platform well adapted to the development of VR applications. In the MR context, however, this origin causes problems related to its APIs and to interactions with the development environment itself.

5.4 Why Use or Not Use 3D Mapping

One of the main expectations when imagining the use of MR systems is that virtual elements can behave as real elements through 3D mapping. In general, 3D mapping is used when virtual objects need to behave in and interact with the environment. However, if the interface is similar to a fixed HUD, mapping is optional.
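The difference between world-anchored content, which needs mapping, and a fixed HUD, which does not, shows up in how each element’s position in the user’s view is computed. In the Python sketch below, which assumes a simplified top-down 2D view with rotation about the vertical axis only, a head-locked (HUD) element keeps a constant view position, whereas a world-locked element must be transformed by the device pose, an estimate that tracking and 3D mapping supply. The `Pose2D` and `Element` types are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec2 = Tuple[float, float]  # (x, z) on the ground plane


@dataclass(frozen=True)
class Pose2D:
    """Device pose in the world frame: position (x, z) and heading yaw (rad)."""
    x: float
    z: float
    yaw: float


@dataclass(frozen=True)
class Element:
    position: Vec2
    anchor: str  # "head" for a fixed HUD element, "world" for mapped content


def world_to_view(p: Vec2, pose: Pose2D) -> Vec2:
    """Express a world point in the device frame: translate, then rotate by -yaw."""
    dx, dz = p[0] - pose.x, p[1] - pose.z
    c, s = math.cos(-pose.yaw), math.sin(-pose.yaw)
    return (c * dx - s * dz, s * dx + c * dz)


def view_position(e: Element, pose: Pose2D) -> Vec2:
    if e.anchor == "head":
        return e.position  # HUD: already in view coordinates, pose not needed
    return world_to_view(e.position, pose)  # needs a pose from tracking/mapping


hud = Element((0.3, 1.0), "head")
label = Element((0.0, 2.0), "world")
for pose in (Pose2D(0.0, 0.0, 0.0), Pose2D(0.0, 1.0, 0.0)):
    print(view_position(hud, pose), view_position(label, pose))
# (0.3, 1.0) (0.0, 2.0)
# (0.3, 1.0) (0.0, 1.0)
```

When the user steps forward, the HUD element stays put while the world-locked label gets closer, which is why only the latter depends on mapping quality.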

Nevertheless, obtaining good results with 3D mapping depends directly on the context of use and on the equipment, since mapping quality depends both on the quality of the device sensors used to capture and update the map and on the characteristics of the environment. Consider, for example, a chemistry teaching laboratory with several benches, piping for transporting liquids and gases, and other characteristic structures. If this environment is mapped, the noise generated by these structures can limit or even prevent the use of MR devices. In an industrial environment, where noise is much higher than in a teaching laboratory, great care must be taken so that noise does not prevent the use of the application.

Moreover, not all types of MR devices cope well with 3D mapping. Devices like Epson Moverio and Google Glass are built like glasses but, unlike Microsoft Hololens, they do not have a fully immersive screen; although it is possible to run applications such as 3D mapping on them, this limitation prevents the technology from being used well. Thus, this type of equipment tends to use game-style HUD interfaces, presenting information in the user’s peripheral field of vision without directly interfering with the view of the world, thus preserving the user’s safety.

6 CONCLUSION

This work presented a proof of concept and a discussion of the pros and cons of using MR as a tool to assist collaborative tasks in the context of Industry 4.0. Several possible solutions have been developed by both the market and academia. However, these solutions still need to be developed further and evaluated from the users’ point of view before they can be introduced into the industrial environment, thus bringing benefits to both the worker and the production process.

ACKNOWLEDGEMENTS

The authors thank Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES), Fundação de Amparo à Pesquisa de Minas Gerais (FAPEMIG-APQ-01331-18), Instituto Tecnológico Vale (ITV) and Vale S.A. (No. 23109.005575/2016-81 and No. 23109.005909/2018-89) and the Federal University of Ouro Preto for supporting this research.


REFERENCES

Azuma, R. T. (1997). A survey of augmented reality. Presence: Teleoperators and virtual environments, 6(4):355–385.

Choi, S. H., Kim, M., and Lee, J. Y. (2018). Situation-dependent remote AR collaborations: Image-based collaboration using a 3D perspective map and live video-based collaboration with a synchronized VR mode. Computers in Industry, 101:51–66.

Datcu, D., Lukosch, S., and Lukosch, H. (2013). Comparing Presence, Workload and Situational Awareness in a Collaborative Real World and Augmented Reality Scenario. In IEEE ISMAR Workshop on Collaboration in Merging Realities (CiMeR), pages 1–6. IEEE.

De Pace, F., Manuri, F., Sanna, A., and Zappia, D. (2019). A Comparison Between Two Different Approaches for a Collaborative Mixed-Virtual Environment in Industrial Maintenance. Frontiers in Robotics and AI, 6:18.

Graham, J. R. and Barter, K. (1999). Collaboration: A Social Work Practice Method. Families in Society, 80(1):6–13.

Hord, S. M. (1986). A synthesis of research on organizational collaboration. Educational leadership, 43(5):22–26.

Kim, S., Lee, G., Huang, W., Kim, H., Woo, W., and Billinghurst, M. (2019). Evaluating the Combination of Visual Communication Cues for HMD-based Mixed Reality Remote Collaboration. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems - CHI ’19, pages 1–13. ACM.

Milgram, P. and Colquhoun, H. (1999). A taxonomy of real and virtual world display integration. Mixed reality: Merging real and virtual worlds, 1:1–26.

Nascimento, R., Carvalho, R., Delabrida, S., Bianchi, A. G., Oliveira, R. A. R., and Garcia, L. G. (2017). An integrated inspection system for belt conveyor rollers advancing in an enterprise architecture. In ICEIS 2017 - Proceedings of the 19th International Conference on Enterprise Information Systems, pages 190–200.

Orts-Escolano, S., Dou, M., Tankovich, V., Loop, C., Cai, Q., Chou, P. A., Mennicken, S., Valentin, J., Pradeep, V., Wang, S., Kang, S. B., Rhemann, C., Kohli, P., Lutchyn, Y., Keskin, C., Izadi, S., Fanello, S., Chang, W., Kowdle, A., Degtyarev, Y., Kim, D., Davidson, P. L., and Khamis, S. (2016). Holoportation: Virtual 3D Teleportation in Real-Time. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology - UIST ’16, pages 741–754. ACM.

Piumsomboon, T., Dey, A., Ens, B., Lee, G., and Billinghurst, M. (2019). The Effects of Sharing Awareness Cues in Collaborative Mixed Reality. Frontiers in Robotics and AI, 6:1–18.

Reddy, K. P. K., Venkitesh, B., Varghese, A., Narendra, N., Chandra, G., and Balamuralidhar, P. (2015). Deformable 3D CAD models in mobile augmented reality for tele-assistance. In 2015 Asia Pacific Conference on Multimedia and Broadcasting, pages 1–5. IEEE.

Rodal, A. and Mulder, N. (1993). Partnerships, devolution and power-sharing: issues and implications for management. Optimum, 24:27–27.

Rüßmann, M., Lorenz, M., Gerbert, P., Waldner, M., Justus, J., Engel, P., and Harnisch, M. (2015). Industry 4.0: The Future of Productivity and Growth in Manufacturing Industries. Boston Consulting Group, 9(1):54–89.
