A Literature Review on Learner Models for MOOC to Support Lifelong Learning

Sergio Iván Ramírez Luelmoᵃ, Nour El Mawasᵇ and Jean Heutteᶜ

CIREL - Centre Interuniversitaire de Recherche en Éducation de Lille, Université de Lille, Campus Cité Scientifique,

Bâtiments B5 – B6, Villeneuve d’Ascq, France

Keywords: Learning Analytics, Knowledge Representation, Technology Enhanced Learning, Lifelong Learning, Learner

Model, Learning Environment, Literature Review, MOOC.

Abstract: Learning Analytics is an emerging topic in the Technology Enhanced Learning and Lifelong Learning fields. Learner Models play an essential role in the use and exploitation of learner-generated data in a variety of Learning Environments. Many research studies focus on the added value of Learner Models and their importance in facilitating the learner’s follow-up, course content personalization and trainers’/teachers’ practices in different Learning Environments. Among these environments, we choose Massive Open Online Courses (MOOCs) because they represent a reliable and considerable amount of data generated by Lifelong Learners. In this paper we focus on Learner Modelling in Massive Open Online Courses in a Lifelong Learning context. To our knowledge, no literature review currently covers the Learner Models proposed for Massive Open Online Courses in this context over the last five years. This study allows us to compare and highlight features of existing Learner Models for a Massive Open Online Course from a Lifelong Learning perspective. This work is intended for MOOC designers/providers, pedagogical engineers and researchers who have difficulty modelling and evaluating MOOC learners based on Learning Analytics.

1 INTRODUCTION

Massive Open Online Courses (MOOCs) have proliferated all around the world in the last decade. Their global reach and popularity stem from their original concept: offering free and open-access courses to a massive number of learners anywhere in the world (Yousef et al., 2014). However, despite their global reach, popularity and often low-to-no cost, they have very low completion rates (Yuan & Powell, 2013; Jordan, 2014), with research metrics agreeing on a median of about 6.5%. This percentage rises to about 60%, a roughly ten-fold difference, for fee-based certificates, and studies of both cases show that engagement, intention and motivation (Jung & Lee, 2018; Wang & Baker, 2018; Watted & Barak, 2018) are among the top factors affecting performance in MOOCs. DeBoer, Ho, Stump, & Breslow (2014) confirm the multifactor complexity of this phenomenon by concluding that MOOC participants have reasons to enrol other than course completion¹. We extend this affirmation by attributing part of this phenomenon to the obviously heterogeneous nature of these new global learners and their heterogeneous learning needs, a situation also highlighted by M. L. Sein-Echaluce et al. (2016). Thus, improving academic success in MOOCs by increasing the learning outcome and the average completion rate of learners creates the need to personalize content and learning paths by modelling the learner (El Mawas et al., 2019). Research studies (Bodily et al., 2018; Corbett & Anderson, 1995) focus on the added value of Learner Models (LM) and their importance in facilitating the learner’s follow-up, course content personalization and trainers’/teachers’ practices in different Learning Environments (LE) through Learning Analytics (LA). Moreover, Sloep et al. (2011) consider that learner personalization is one of the essential concepts in Lifelong Learning (LLL) and Lifewide Learning contexts.

ᵃ https://orcid.org/0000-0002-7885-0123
ᵇ https://orcid.org/0000-0002-0214-9840
ᶜ https://orcid.org/0000-0002-2646-3658
¹ e.g. course-shopping, dabbling in topic courses, auditing knowledge on the material and on its difficulty level, etc.

Luelmo, S., El Mawas, N. and Heutte, J.
A Literature Review on Learner Models for MOOC to Support Lifelong Learning.
DOI: 10.5220/0009782005270539
In Proceedings of the 12th International Conference on Computer Supported Education (CSEDU 2020) - Volume 1, pages 527-539
ISBN: 978-989-758-417-6
Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

However, in the current context of Big Data, it is very difficult to get a clear view of the research landscape on Learner Models for MOOCs in an LLL context. For instance, at the time of writing (early 2020), a simple, unrestricted Google Scholar query returns about 2 million results for the term “learner model”, about 1 million for “lifelong learning” and about 200 thousand for “mooc”. The goal of this literature review is to analyse the most recent works in the field of “LM for MOOC in an LLL context”, that is, in general terms, how a given LM coupled with(in) a MOOC can support LLL. More specifically, we aim to differentiate and highlight LM features and their relevance to MOOC usage in an LLL experience. To our knowledge, no literature review currently covers existing Learner Models for Massive Open Online Courses in this context.

So, in order to offer a more adequate and accurate panorama of LM for MOOC in an LLL context to the target public of this paper (MOOC designers/providers, pedagogical engineers and researchers who have difficulty evaluating MOOC learners based on LA), we decided to limit² our literature review to the terms “learner model” and “mooc”. We performed this search in the Google Scholar, Web of Science and Scopus databases, within the last five years (2015-2020). The thought behind these choices is to obtain the most recent and high-quality corpus on the topic.

² We note that adding the LLL (“lifelong learning”) term to the search query would have had the undesirable effect of excluding LM which did not explicitly contemplate this context but could still have characteristics that would eventually accommodate it.

This work differs from previous literature reviews (Sergis & Sampson, 2019; Bodily et al., 2018; Abyaa et al., 2019; Liang-Zhong et al., 2018; Afini Normadhi et al., 2018) in that it not only covers the most recent proposals, extensions and implementations of Learner Models (last five years) but also discerns features in Learner Models that may play an important role in the case of Lifelong Learning in MOOC, such as their openness, independence and dynamism. This specific context led us to leave aside general models (such as User Models) as well as more specific models (Student Models, Professional Models or even explicit Lifelong Learner Models).

We also focus on (1) which dimensions are modelled, more precisely the way Knowledge is represented (both the Domain’s and the Learner’s), and (2) what strategies the Model implements to ensure its own accuracy, that is, its updating methods and/or techniques. We believe that this approach may help our target public (MOOC designers/providers, pedagogical engineers and researchers who have difficulty evaluating MOOC learners based on LA) to make better-informed decisions when choosing a MOOC and its accompanying LM, namely within the scope of LLL.

The remainder of this article is structured as follows. Section 2 reviews the theoretical works underlying this paper, namely the concept of Learner Models and their importance in MOOCs, and presents the Lifelong Learning dimension as the surrounding context. Section 3 details the methodology steps and discusses the results of this review of literature. Finally, Section 4 concludes this paper and presents its perspectives.

2 THEORETICAL BACKGROUND

In this section we present the theoretical background behind this research, namely the Learner Model and the considered features, its importance on MOOC platforms and the Lifelong Learning dimension as the surrounding context.

2.1 Learner Models

Learner Models represent the system’s beliefs about the learner’s specific characteristics relevant to the educational practice (Giannandrea & Sansoni, 2013); they are usually enriched by data collection techniques (Nguyen & Do, 2009) and they aim to encode individual learners using a specific set of dimensions (Nakic, Granic & Glavinic, 2015). These dimensions may include personal preferences, cognitive states, as well as learning and behavioural preferences. Modelling the learner has the ultimate goal of allowing the adaptation and personalization of environments and learning activities (El Mawas et al., 2019; Chatti et al., 2014) while considering the unique and heterogeneous

CSEDU 2020 - 12th International Conference on Computer Supported Education


needs of learners, which in turn improves learning

metrics. Evidence from a number of studies has long

shown that having a learner model can make a system

more effective in helping students learn, by using the

model to adapt to learner’s differences (Corbett &

Anderson, 1995; Bodily et al., 2018). Learner

modelling relies on three scientific fields (educational

science, psychology and information technology) and

it involves (1) the identification and selection of

learner’s characteristics that influence learning, and

(2) taking into account the learner’s psychological

states during the learning process, in order to choose

the most adapted technologies to model precisely

each characteristic (Abyaa et al., 2019). One of the

most important characteristics of LM is Knowledge

representation. According to the family of techniques

used to represent knowledge, LM can be classified

into stereotype models, overlay models, differential

models, perturbation models or plan models, each

with its own set of techniques to model them (Assami

et al., 2018; Herder, 2016; Nguyen & Do, 2009).

Moreover, depending on each technique, the

knowledge representation of the learner (learner’s

knowledge) can take the form of an instantiation or a

differential or a relationship or a subset of the

knowledge representation of the topic (domain’s

knowledge), while depending heavily for this choice

on the context of utilisation (Abyaa et al., 2019).
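As an illustration of the "subset" form of knowledge representation, the following minimal Python sketch treats the learner's knowledge as an overlay on the domain's knowledge. The concept names, the 0-to-1 mastery scale and the 0.7 threshold are hypothetical choices made for this example; they are not taken from the reviewed works.

```python
from dataclasses import dataclass, field

# Hypothetical domain knowledge: the set of concepts a course covers.
DOMAIN = {"variables", "loops", "functions", "recursion"}


@dataclass
class OverlayLearnerModel:
    """Learner knowledge stored as a subset (overlay) of the domain knowledge."""
    mastery: dict = field(default_factory=dict)  # concept -> estimate in [0, 1]

    def update(self, concept: str, estimate: float) -> None:
        if concept not in DOMAIN:
            raise KeyError(f"{concept!r} is not part of the domain model")
        # Clamp the estimate to the assumed [0, 1] scale.
        self.mastery[concept] = max(0.0, min(1.0, estimate))

    def known(self, threshold: float = 0.7) -> set:
        """Concepts whose mastery estimate reaches the (assumed) threshold."""
        return {c for c, m in self.mastery.items() if m >= threshold}

    def gaps(self, threshold: float = 0.7) -> set:
        """Domain concepts not yet mastered by the learner."""
        return DOMAIN - self.known(threshold)


lm = OverlayLearnerModel()
lm.update("variables", 0.9)
lm.update("loops", 0.4)
```

Here `lm.gaps()` lists what remains to be learned, which is the kind of information a personalization mechanism could act upon.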

Many studies (Somyürek, 2009; Vagale &

Niedrite, 2012; Abyaa et al., 2019) hold that learner

modelling is a process that follows these stages: (1)

gathering initial data related to the learner’s

characteristics (or initialization), (2) model

construction, and (3) keeping the LM updated by

analysing the learner’s activities. During the

initialization process (1) the LM may encounter what

is known as a ‘cold start’ problem, where insufficient

data on the learner is made available to properly

instantiate the model. A similar situation (‘data

sparsity’) may also arise during the updating phase

(3), preventing the proper update of the LM or, worse,

leading to data corruption.
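The three stages above can be sketched as follows. This is only an illustrative outline: the `LearnerModel` class, the `MIN_EVENTS` threshold and the event format are assumptions introduced for this example, not a method described by the cited studies.

```python
from dataclasses import dataclass, field

MIN_EVENTS = 3  # assumed minimum evidence before the model is considered usable


@dataclass
class LearnerModel:
    learner_id: str
    traits: dict = field(default_factory=dict)  # initial data, stage (1)
    events: list = field(default_factory=list)  # activity evidence, stage (3)

    @property
    def cold_start(self) -> bool:
        """True while too little data is available to instantiate the model."""
        return not self.traits and len(self.events) < MIN_EVENTS


def initialize(learner_id, survey=None):
    # Stages (1) and (2): gather whatever initial data exists and build the model.
    return LearnerModel(learner_id, traits=dict(survey or {}))


def update(model, event):
    # Stage (3): keep the model current. Malformed events are skipped rather
    # than stored, so that broken data does not corrupt the model.
    if isinstance(event, dict) and "activity" in event:
        model.events.append(event)
    return model


lm = initialize("learner-42")
was_cold = lm.cold_start
for e in [{"activity": "quiz"}, {"bad": 1}, {"activity": "video"}, {"activity": "forum"}]:
    update(lm, e)
```

A model initialized without any survey data starts in the cold-start state and leaves it only once enough valid activity has been recorded.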

We acknowledge the difference between Learner Profile and Learner Model in that the former can be considered either an instantiation of the latter at a given moment in time, using educational data (Martins, Faria, De Carvalho & Carrapatoso, 2008), or, put another way, simply static uninterpreted information about the learner (Vagale & Niedrite, 2012). For example, a Learner Profile can hold data that may include personal details, scores or grades, educational resources usage(s), learning activity records, etc., all of which emerge during the delivery of the learning process (Sergis & Sampson, 2019).

³ Note that an LM does not need to be specific to a defined system or platform.

An additional class of LM is that of Open

Learner Models (OLM). They are a type of LM where

the model is explicitly communicated to the learner

(or to any other actors) by allowing visualization and

/ or editing of the relevant profiles (Bull & Kay, 2010;

Sergis & Sampson, 2019). This contrasts with the view

of a Closed Model, in which the student has no direct

view of the Model’s contents (Tanimoto, 2005). OLM

can be classified into three categories (Bull & Kay,

2016), based on the model’s editing and

communication modes: inspectable, negotiable or

editable. On the one hand, a negotiable OLM will ask and

check for factual evidence from the learner to accept

any given modification, whereas an editable OLM

will not rely on proof, requiring instead a set of

permissions and access controls to avoid data

corruption. An inspectable OLM, on the other hand,

simply does not allow editing of any kind, leaving

solely its updating mechanisms to the hosting system.

Concerning the hosting system itself, Tanimoto

(2005) brings up the notion that Transparency is a

desirable trait for LM to feature because, by revealing

the inner workings of a system, it helps to “engender

trust, permit error detection and foster learning” about

how the system works.
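The difference between the three OLM categories can be illustrated with a small policy function. This is a hedged sketch: the `Openness` enumeration, the `apply_learner_edit` function and its `evidence` and `permitted` parameters are hypothetical names chosen for this example.

```python
from enum import Enum


class Openness(Enum):
    INSPECTABLE = "inspectable"
    NEGOTIABLE = "negotiable"
    EDITABLE = "editable"


def apply_learner_edit(model, openness, key, value, evidence=None, permitted=False):
    """Return an updated copy of `model`, or the original if the edit is refused."""
    if openness is Openness.INSPECTABLE:
        return model  # view only: updating is left to the hosting system
    if openness is Openness.NEGOTIABLE and not evidence:
        return model  # a change must be backed by factual evidence
    if openness is Openness.EDITABLE and not permitted:
        return model  # a change requires access rights rather than proof
    return {**model, key: value}


model = {"python": "beginner"}
```

For instance, `apply_learner_edit(model, Openness.NEGOTIABLE, "python", "expert")` leaves the model unchanged, whereas the same call with `evidence="certificate"` returns an updated copy.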

Many research studies (Bull, Jackson, &

Lancaster, 2010; Sergis & Sampson, 2019) show the

importance of Models that are independent of any

system³ by being able to accept multi-sourced data.

Hence, we consider an LM as Independent if it is not

“[…] part of a specific system and may collect or

exploit educational data from diverse sources”.

Regarding the communication with the hosting

system, an independent LM requires specific

technical connectors (API) to different CMS or LMS

platforms.
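One possible way to picture such connectors is an adapter per platform feeding a single, platform-agnostic LM. The sketch below is purely illustrative: `PlatformConnector`, `MoocConnector`, `LmsConnector` and the event format are hypothetical, and the connectors return canned data instead of calling any real platform API.

```python
from abc import ABC, abstractmethod


class PlatformConnector(ABC):
    """Adapter between one platform and the independent LM (hypothetical interface)."""

    @abstractmethod
    def fetch_events(self, learner_id: str) -> list:
        ...


class MoocConnector(PlatformConnector):
    def fetch_events(self, learner_id):
        # Stand-in for a real call to a MOOC platform.
        return [{"source": "mooc", "learner": learner_id, "activity": "quiz"}]


class LmsConnector(PlatformConnector):
    def fetch_events(self, learner_id):
        # Stand-in for a real call to an LMS.
        return [{"source": "lms", "learner": learner_id, "activity": "forum"}]


class IndependentLearnerModel:
    def __init__(self, learner_id, connectors):
        self.learner_id = learner_id
        self.connectors = list(connectors)
        self.events = []

    def sync(self):
        """Collect educational data from every connected source."""
        for connector in self.connectors:
            self.events.extend(connector.fetch_events(self.learner_id))
        return self.events


lm = IndependentLearnerModel("learner-42", [MoocConnector(), LmsConnector()])
events = lm.sync()
```

The LM itself holds no platform-specific logic, so supporting a further platform would only require one more connector class.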

We consider paramount the independence of an

LM, as a way for learners to take possession and

control of their own data. This cannot be accomplished

without a sound support for technical connectors and

interoperability. However, even an independent LM

makes no difference if the data is locked within: we

posit that OLM are a way to empower educators and

learners by allowing them to peek inside the LM and

keep it up-to-date through evidence.

In this part we discussed the notion of LM and

some of its features. In the following section we treat

the importance of LM for MOOC.


2.2 Importance of Learner Models in MOOC

We highlight the importance of MOOCs as means

and tools for people from different countries and

backgrounds to interact, collaborate, share and learn

without the usual geographical or temporal

constraints (Brahimi et al., 2015). As a quantitative

and dynamic example, Shah from Class Central

(2015; 2016; 2017; 2018; 2019) has been reporting a

steady increase in people signing up for courses as

well as in the number of courses being opened

worldwide. That is, from over 500 Universities, 4200

courses and 35 Million Students in 2015 to over 900

Universities, 13500 courses and 110 Million Students

in 2019. Yet, these numbers exclude China,

whose metrics are “difficult to validate”, according to

Shah. Furthermore, by 2019, MOOCs had come a

long way, including not only microcredentials but

also MOOC-based degrees, showing a diversification

in their offer and an adaptation to the learning needs of

their massive public. Thus, LM also play an important role

in MOOC, as they allow for individualisation

(Assami et al., 2018), personalization (Kay, 2012;

2019; Woolf, 2010) and recommendation (Morales et

al., 2009; Sunar et al., 2015), which improve learning

metrics by providing learners with an individual,

tailored learning experience suited to their own

uniqueness (Chatti et al., 2012).

These usage figures are living testimony that MOOCs are a platform of choice for knowledge-eager lifelong learners worldwide. Such a large number of platforms from so many universities poses the challenge of adapting, first, the platform itself and, second, the course contents to an equally large diversity of learners. This is a challenge where the LM, coupled to a MOOC, can play a substantial role by allowing content and activities to be tailored to the learner’s needs anywhere and anytime.

After discussing the importance of LM for

MOOC, we introduce in the next section the context

surrounding the mixed notion of LM for MOOC.

2.3 Lifelong Learning in Learner Models

Knapper & Cropley (2000) consider that the term

Lifelong Learning (LLL) holds the idea that learning

should occur throughout a person’s lifetime and that it

involves formal and informal domains (Cropley,

1978). This is also supported by the European

Lifelong Learning Initiative, which defines this term

as a “continuously supported process which

stimulates and empowers individuals to acquire all

the knowledge, values, skills and understanding they

will require throughout their lifetimes and to apply

them with confidence, creativity and enjoyment in all

roles, circumstances and environments” (Watson,

2003). In addition, Kay & Kummerfeld (2011)

underline not only the need for a lifelong LM as “a

store for the collection of learning data about an

individual learner” but they also cite its multi-sourced

and availability capabilities for it to be a useful

lifelong LM. This definition is later taken up by Chatti

et al. (2014), who define the Lifelong Learner Model

(LLM) as a “store” where the learner can archive all

learning activities throughout her / his life (Abyaa et

al., 2019).

Thus, lifelong learner modelling (Chatti et al.,

2014) is the process of “creating and modifying a

model of a learner, who tends to acquire new or

modify his existing knowledge skills, or preferences

continuously over a longer time span.” However, this

process is not devoid of difficulties of

implementation: Abyaa et al. (2019) and Chatti et al.

(2014) mention data collection, activity tracking,

regular updating, privacy, reusability, forgetting

modelling, data interconnection, autonomy and self-

directed learning instigation as some of the challenges

and difficulties faced by LLM. Nevertheless, some

efforts (Chatti et al., 2014; Ishola & McCalla, 2016;

Swartout et al., 2016; Thüs et al., 2015) have been

undertaken to address some of these challenges and

difficulties, with varied results in their own domains.

2.4 Stakes in Learner Model Comparison

As we have shown in this section, learner modelling

for MOOC in a lifelong learning context is a complex

task facing many challenges. In this section we

outline the stakes to consider when reviewing LM.

First, in an LLL context, learning evolves in a continuum, so the LM must also evolve continuously. This evolution must be ensured and reflected by a close follow-up by the LM itself, which must establish the mechanisms to accept, hold and analyse the data precisely. On the one hand, we consider that data can be multi-format and multi-sourced, so the LM must be capable of accepting, understanding and holding an ample variety of it over time. On the other hand, the mechanisms to process data are closely linked to the way data is represented in the LM, namely the Knowledge representation and the Recommender / Predictive system in charge of handling the learner’s data.

Second, interoperability and dynamism play a crucial role in allowing the LM to transcend its hosting platform and be used as a long-term, dynamic portfolio of knowledge, competences, skills, preferences, credentials, certifications or badges, among many others, as demanded by the LLL context. An isolated, locked LM cannot ensure the portability needed by a heterogeneous learner public, with unique learning needs, in a multitude of heterogeneous environments, at different moments of a lifetime. Such a learner public thus requires an independent, personalizable and unlocked LM, with solid communication flexibility.

Third, within this LLL context, it is also desirable that the LM allows its inspection and visualization so that learners are actively made aware of their model, eventually permitting its editing or negotiation, through institutional policies or other similar instruments. Making learners aware of their learning activities, that is, of their LM and of its maintenance mechanisms, would foster trust, engagement and learning.

In the following section we present the methodology followed for the literature review whilst considering the previously presented stakes in LM comparison. It details the paper selection process and briefly introduces the tool we developed, which allows automatic metadata detection and organization from academic sources.

3 REVIEW METHODOLOGY

This review of literature follows the methodology

described by Kitchenham & Charters (2007), which

enumerates the following steps: [A] Identifying the

need for a literature review, [B] Development of the

review protocol, [C] Identifying the research

questions, [D] Identifying research databases, [E]

Launching the research and saving citations, [F]

Screening the papers, [G] Summarizing the selected

papers and, [H] Interpreting the results.

3.1 Identification of the Need for a Literature Review [A] and Development of the Review Protocol [B]

This work is intended for MOOC designers/providers, pedagogical engineers and researchers who have difficulty evaluating MOOC learners based on Learning Analytics. The goal of this review of literature is to analyse the most recent works in the field of “LM for MOOCs in an LLL context”, that is, in general terms, how a given LM coupled with(in) a MOOC can support LLL. More specifically, we aim to differentiate and highlight Learner Models’ features and their relevance to MOOC usage in a Lifelong Learning experience. To our knowledge, no literature review currently covers existing Learner Models for Massive Open Online Courses in this context. As a side note, according to Kitchenham & Charters (2007), the development of the protocol includes “[…] all of the elements of the review plus some additional planning information […]”, which we have detailed in each of the subsequent steps of their methodology, such as the rationale of the review, the selection criteria, the procedures and the data extraction strategies.

3.2 Identification of Research Questions [C]

Therefore, this article aims to answer the following

research questions (RQ):

• RQ1: What Learner Model features are most

relevant for a MOOC in an LLL context?

• RQ2: What are the most suitable LM for MOOC

in an LLL context?

3.3 Selection Criteria and Research Databases Identification [D]

In this section we describe the inclusion and

exclusion criteria used to constitute the corpus of

publications for our analysis. We also detail and

justify our choice of the search terms, the identified

databases as well as the software tool used.

On the one hand, our Inclusion criteria are: works that present a Learner Model in the context of a MOOC, or that present a new Learner Model and compare it to an existing Learner Model. On the other hand, our Exclusion criteria are: works not written in English, under embargo, not published or in the works; works that do not treat Learner Models directly or that treat them only peripherally, that is, where LM are not the main topic of the publication; and multiple works by the same author in the same year (we keep only the last published contribution on the same subject). Finally, works published in journals take precedence over those published in conferences.

Our Search terms were “learner model” and “mooc”, which in most search engines conveniently translates into a Boolean query of the form { (learner* AND model*) AND (mooc*) }. This translation allows us to include plural, gerund and agent-noun results. It is important to note that the term LLL, while very important as the context of our research, constitutes neither an inclusion nor an exclusion criterion but a characteristic of the LM, which is why it does not figure in the search terms.

We chose to perform this search over the last five years (2015-2020), at the beginning of January 2020, in the following scientific databases: Web of Science, Scopus and Google Scholar. Please note that within the Google Scholar results we are primarily interested in the results from Taylor & Francis Online, Science Direct, Sage Publications, Springer and IEEE Xplore. The thought behind this choice is to have the most current and quality-proven scientific works in the domain. We chose the search software tool ‘Publish or Perish’⁴ not only to search these databases at once (except Web of Science and Scopus) but also to benefit from the software’s ability to filter, regroup repeated publications and calculate different indexes, such as Hirsch’s h-index, Egghe’s g-index and Zhang’s e-index, commonly known to the scientific community.
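For readers less familiar with these three indexes, the sketch below computes them from a list of citation counts following their usual definitions. It is our own illustration, not the implementation used by ‘Publish or Perish’; the sample counts are made up, and the g-index is capped here at the number of papers.

```python
from math import sqrt


def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)


def g_index(citations):
    """Largest g such that the top g papers total at least g**2 citations."""
    ranked = sorted(citations, reverse=True)
    total, g = 0, 0
    for rank, c in enumerate(ranked, start=1):
        total += c
        if total >= rank * rank:
            g = rank
    return g


def e_index(citations):
    """Square root of the citations in the h-core beyond the h**2 minimum."""
    ranked = sorted(citations, reverse=True)
    h = h_index(ranked)
    return sqrt(sum(ranked[:h]) - h * h)


sample = [10, 8, 5, 4, 3]  # made-up citation counts
```

The e-index complements the h-index by accounting for the excess citations that the h-index ignores within the h-core.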

3.4 Launch of the Research [E]

For a more streamlined paper selection process, we

designed and developed an external tool (publication

under way) that, coupled with the software ‘Publish

or Perish’, recovers and organizes metadata from a

list of academic sources and presents it to the

reviewer in a bias-free context (Step: Automatic

Metadata Collection). This external tool allowed us to

refine the results in terms of publication abstracts

instead of publication titles only. Also, it spared us

from manually loading, saving and reading all of the

articles’ abstracts one by one. Its main

advantage resides in facilitating a bias-free dismissal

process by presenting only the publication’s abstract

text.

The paper selection process is pictured in Figure

1 and it happened as follows: First, we used a CSV

file as a data concentration hub to hold the search

query results issued from:

1. The Google Scholar search engine, using the

software Publish or Perish.

2. The Scopus database, using the software Publish

or Perish.

3. The Web of Science database.
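The role of the CSV file as a concentration hub can be sketched as follows. This is an assumption-laden illustration rather than the authors' tool: the column names, the title-normalisation rule and the sample titles are invented for the example.

```python
import csv
import io


def normalise(title: str) -> str:
    """Case- and punctuation-insensitive key used to spot repeated publications."""
    return "".join(ch for ch in title.lower() if ch.isalnum())


def merge_results(*sources):
    """Merge (source_name, rows) pairs, keeping the first copy of each title."""
    hub, seen = [], set()
    for name, rows in sources:
        for row in rows:
            key = normalise(row["title"])
            if key not in seen:
                seen.add(key)
                hub.append({"source": name, "title": row["title"]})
    return hub


def to_csv(hub) -> str:
    """Serialise the merged results as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["source", "title"])
    writer.writeheader()
    writer.writerows(hub)
    return buffer.getvalue()


# Made-up sample results from two sources.
scholar = [{"title": "A Learner Model for MOOCs"}, {"title": "Open Learner Models"}]
scopus = [{"title": "A learner model for MOOCs."}, {"title": "Lifelong Learner Modelling"}]
hub = merge_results(("Google Scholar", scholar), ("Scopus", scopus))
```

Because the sources are merged in order, a publication found in several databases is attributed to the first one that returned it.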

Second, we automatically extracted relevant metadata related to the previous results (abstracts and keywords) from the corresponding articles’ Web Pages or PDF files. This process aims to present this metadata in a bias-free context.

⁴ Link: https://harzing.com
⁵ https://www.lucidchart.com/pages/flowchart-symbols-meaning-explained

Figure 1: Overview of the publication selection and categorization process. A flowchart⁵ is used to represent this process.

3.5 Paper Screening [F]

Then, we read all of the automatically extracted abstracts and filter-categorized them. We dismissed publications whose abstract was out of the scope of this paper while registering the main subject⁶ of the dismissed paper. As mentioned, we focused primarily on the abstract to determine each article’s subject or topic. We intentionally avoided relying on the ‘keywords’, ‘authors’ or ‘title’ fields to avoid a possible bias, although when in doubt we resorted to the ‘keywords’ and the ‘title’ fields, in that order. The Dismissed Papers fell into one of the following categories:

1. ‘Another kind of Model’: it describes pedagogical models, relationship models, system models, etc.

2. ‘Profile’: it explicitly treats the Learner Profile instead of the Learner Model.

3. ‘Not on topic’: results contain the search terms in the text, title or bibliography but in a disconnected manner⁷.

4. ‘Citation’: results were usually, but not always, removed automatically by the ‘Publish or Perish’ tool.
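The four categories above lend themselves to a simple screening log. The following sketch is hypothetical: the identifiers and decisions are invented, and only the category labels come from the list.

```python
from collections import Counter
from enum import Enum


class Dismissal(Enum):
    ANOTHER_KIND_OF_MODEL = "Another kind of Model"
    PROFILE = "Profile"
    NOT_ON_TOPIC = "Not on topic"
    CITATION = "Citation"


# Hypothetical screening log: (abstract identifier, decision); None means 'Passing'.
screening_log = [
    ("abs-001", Dismissal.NOT_ON_TOPIC),
    ("abs-002", None),
    ("abs-003", Dismissal.PROFILE),
    ("abs-004", Dismissal.NOT_ON_TOPIC),
]


def tally(log):
    """Count dismissed abstracts per category and list the passing ones."""
    counts = Counter(d.value for _, d in log if d is not None)
    passing = [ident for ident, d in log if d is None]
    return counts, passing


counts, passing = tally(screening_log)
```

Keeping the decision next to each abstract identifier makes it possible to justify every dismissal afterwards.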

⁶ e.g.: “ethical concerns of AI in education”, “panorama on open source LMS” or “evolution of higher education”.

⁷ e.g. “Solar Models in a Geography Class: a Learner’s first experience with MOOCs”


We also dismissed articles whose content was not in English, Duplicates and Previous Work (from the same author). Publications on the same topic from the same year and the same author were detected and only the most recent item was kept. Finally, we accessed and read the full text of the Passing papers through our institutional subscription or Open Access. We kept only Book Chapters, Journal articles and Conference Proceedings, and we dismissed Unpublished (or in the works) papers, White Papers, and PhD and Master’s theses.

Whereas this section addressed dismissal, in the following part we take a closer look at the paper screening phase by detailing the selection process.

3.5.1 Detailing the Selection Process

In this section we detail how we pass from the full set of search database results to our research pool of selected articles. From the entire set of results given by the three search databases (442), that is, 419 results from Google Scholar, 17 from Scopus and six from Web of Science, we constituted a final pool of 17 publications mentioning in their abstract their intention to propose a Learner Model. Unexpectedly, the other databases did not provide results fitting either our inclusion criteria or our search query. Out of the 419 results from Google Scholar, 342 publications were quickly dismissed thanks to our bias-free method, as it made very clear that they did not fit the inclusion criteria. That is, 77 were ‘Passing’ papers that required a more in-depth review.

During this initial search phase, the other engines (Scopus and Web of Science) provided relatively few results compared to Google Scholar. Surprisingly, it turned out that all of their results, except for one, were already within the Google Scholar results. That is, for Web of Science, all of the six results were ‘Passing’ but repeated, and for Scopus, out of 17 results, 12 were repeated and mixed with eight out-of-scope. This mix left us with only one ‘Passing’ result from Scopus, none from Web of Science and 77 from Google Scholar, after reading all of the extracted Abstracts.
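The figures reported so far can be cross-checked with a few lines of arithmetic. The numbers below are those given in the text; the variable names are ours.

```python
# Figures reported in the text for the search and abstract-screening phases.
results = {"Google Scholar": 419, "Scopus": 17, "Web of Science": 6}
dismissed_on_abstract = {"Google Scholar": 342}
passing = {"Google Scholar": 77, "Scopus": 1, "Web of Science": 0}

total_results = sum(results.values())
scholar_passing = results["Google Scholar"] - dismissed_on_abstract["Google Scholar"]
total_passing = sum(passing.values())

print(f"{total_results} results -> {total_passing} 'Passing' papers for full-text reading")
```

The totals agree with the 442 results stated above and with the 77 Google Scholar papers retained after abstract screening.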

Then, we proceeded to fully read the ‘Passing’

papers. From this initial 78 (77 + 1 + 0) papers only

17 became ‘Proposals’ (16 Google, 1 Scopus, 0 Web

of Science). The dismissed papers group in this phase

consisted of a mix of PhD and Master publications,

one missed Not-in-English publication, a few most

8

To identify an LM, we kept the LM name given by its

authors, if any, otherwise we prepositioned ‘None’ to the

recent publications but mostly papers either out-of-

scope or not fulfilling the inclusion criteria correctly.

As a side note, we were able to pinpoint (and dismiss)

19 publications that either used the terms LM and

Learner Profile interchangeably, or omitted LM.

For the sake of exhaustivity and according to our

exclusion criteria, we registered not only the topic the

described in the abstract but also the reason any result

should be removed. The possible values are described

in the following list:

• Language – The main text of the publication is not

in the English language.

• Unrelated – Neither the title, nor the abstract, nor

the keywords treat the terms “Learner Model” and

“MOOC” in a connected manner. This includes

works in the categories Citation, Another Kind of

Model, Learning Modelling.

• Peripheral – The field “Learner Modelling for

MOOC” do not constitute the core of the

publication. This includes Learner Profile and

Analysis on a Learner Model.

• Substitute – Discerning metadata given by the

search engine was malformed (e.g. wrong title,

wrong source). A correction was done after we

could determine its pertinence by a reading

review.

• Repeated – It was already within the results,

usually from another search engine, but

sometimes as a miss from the ‘Publish or Perish’

tool.

• None – The articles that did not get discarded.

This allows us to justify the classification and its

dismissal.
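As an illustration only, the bookkeeping described by the list above can be sketched in Python. The enumeration mirrors the six possible values; the sample log is hypothetical and does not reproduce our actual screening data, and the helper names `tally` and `kept` are ours.

```python
from collections import Counter
from enum import Enum


class ExclusionReason(Enum):
    """Possible values registered for each search result."""
    LANGUAGE = "Language"
    UNRELATED = "Unrelated"
    PERIPHERAL = "Peripheral"
    SUBSTITUTE = "Substitute"
    REPEATED = "Repeated"
    NONE = "None"  # the result was not discarded


def tally(reasons):
    """Count how many results fall under each registered value."""
    return Counter(reasons)


def kept(reasons):
    """Number of results that survive the screening (value 'None')."""
    return tally(reasons)[ExclusionReason.NONE]


# Hypothetical screening log for six results (illustrative values only).
sample_log = [
    ExclusionReason.REPEATED,
    ExclusionReason.UNRELATED,
    ExclusionReason.NONE,
    ExclusionReason.PERIPHERAL,
    ExclusionReason.REPEATED,
    ExclusionReason.NONE,
]

if __name__ == "__main__":
    for reason, count in tally(sample_log).items():
        print(f"{reason.value}: {count}")
    print("kept:", kept(sample_log))
```

Recording one value per result in this way is what makes each dismissal traceable afterwards.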

The selection process concluded with 17 LM ‘Proposals’, which are shown in the Appendix⁸ section, along with our considered features, which are in turn addressed in the following section, namely why and how they group into dimensions.

3.6 Summary [G] and Learner Models

in LLL Criteria Comparison

A summary of the 17 LM found and their describing features is presented in the Appendix. In this section we address why and how these considered features weigh in on the composition of meaningful dimensions that allow comparison between LM.

As mentioned earlier, the purpose of this paper is to detail the LM for MOOC features that could play an important role in LLL, while considering the stakes mentioned in section 2.4, e.g., their openness or interoperability with other platforms. We consider the mechanisms, if any, mentioned by the authors to achieve these and the other features.

Thus, we begin by introducing our considered features: (1) the platform connection approach, (2) the cold start handling, (3) the data sparsity handling, (4) the learner knowledge representation, (5) the recommender / predictive method, (6) the openness of the LM, (7) its dynamism, and (8) its LLL consideration. This review of literature led us to consider these features to be key points when choosing an LM for a MOOC in an LLL context.

We synthesized these eight features into four dimensions, namely Interoperability (I), sparse Data handling (D), Knowledge representation (K) and LLL (LLL). The Interoperability (I) dimension illustrates whether the LM allows for standard connectors to external hosting systems. The sparse Data handling (D) dimension reflects whether any given approach is considered in the event of missing data. The Knowledge representation (K) dimension pertains to the level of detail considered in the representation of the Learner’s and / or Domain’s Knowledge, as well as the mechanisms used to update the LM or to recommend / personalize content. Knowledge representation is an important feature since it is closely linked to the way the LM keeps its integrity and/or predicts or suggests LM states. Finally, the LLL dimension illustrates how well the LM is prepared to cope with the exigencies that an LLL context demands.

All of these dimensions are important components of an LM in an LLL context, and their creation takes into consideration the presence, partial presence or absence of evidence from the corresponding integrating features found in the LM. We chose to assess neither the number nor the authors’ chosen characteristics of their proposed LM, as they depend greatly on the purpose of each of their systems. This makes a straight and direct comparison between LM unfeasible and meaningless.

We then represent the authors’ explicit consideration and description⁹ of the method(s) used to enforce any dimension by a Tick symbol [✓]. The absence of evidence is represented by a Cross symbol [x]. Evidence of regard to any of our considered dimensions without an explicit description of the mechanisms to achieve it was marked with a Question mark symbol [?].

⁹ If a paper did not explicitly have a quotable line as evidence to justify its inclusion / exclusion in the corresponding feature, we handled it as if it did not consider it at all.

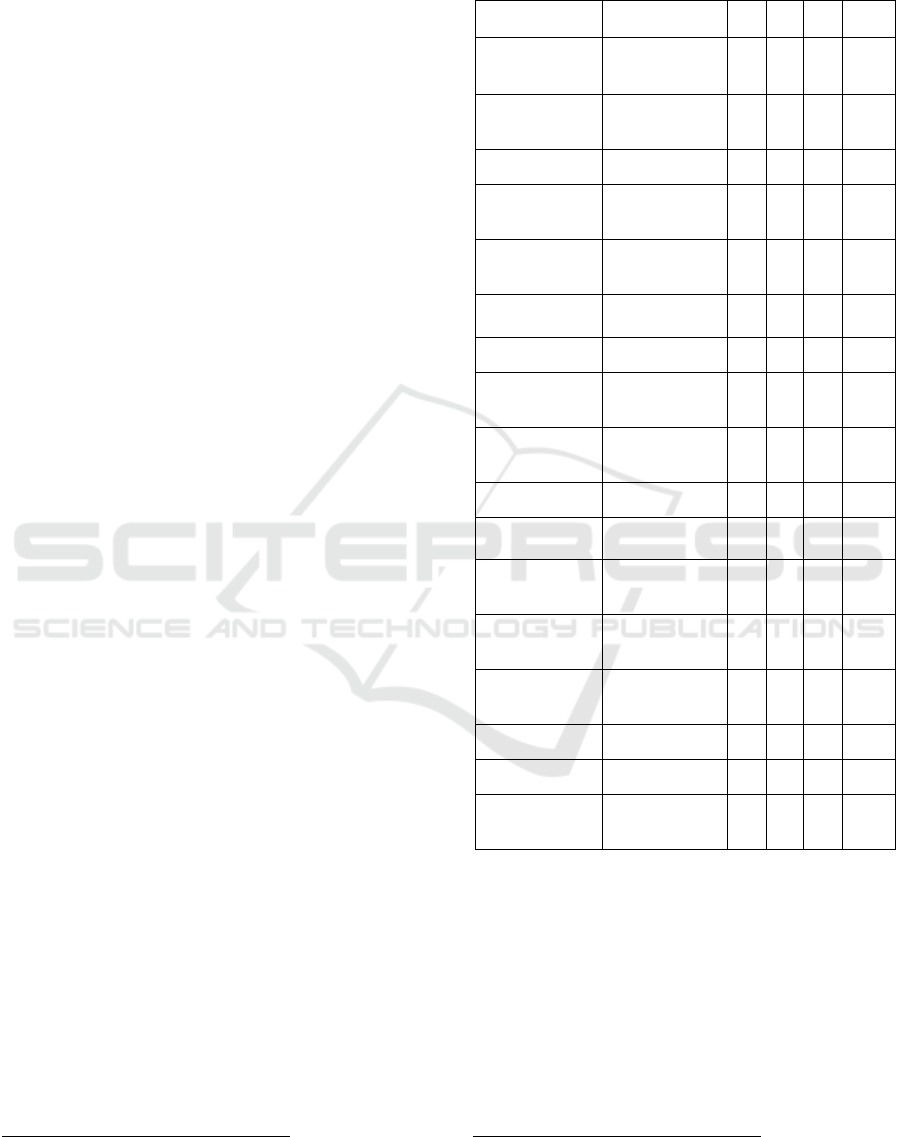

Table 1: LM found, with Interoperability (I), sparse Data handling (D), Knowledge representation (K) and LLL (LLL) dimensions analysis.

| LM | Reference | I | D | K | LLL |
|---|---|---|---|---|---|
| TrueLearn | Bulathwela et al., 2019 | ? | ? | ✓ | ✓ |
| SBGF | Calle-Archila et al., 2017 | ✓ | ? | x | x |
| MOOClm | Cook et al., 2015 | ✓ | x | x | ✓ |
| STyLE-OLM | Dimitrova et al., 2015 | ✓ | ? | ✓ | ✓ |
| None-MOOCTAB | El Mawas et al., 2019 | ✓ | ? | ✓ | ✓ |
| None-Tunis | Harrathi et al., 2017 | x | x | ✓ | x |
| None-China | He et al., 2017 | ? | ? | x | x |
| EDUC8 | Iatrellis et al., 2019 | ? | ? | ✓ | x |
| DiaCog | Karahoca et al., 2018 | x | x | ✓ | x |
| None-China | Li et al., 2016 | x | x | x | x |
| None-ODALA | Lynda et al., 2019 | ✓ | ? | ✓ | ✓ |
| None-Tunis-France | Maalej et al., 2016 | ? | x | ✓ | x |
| GAF | Maravanyika et al., 2017 | x | ? | x | x |
| AUM (AeLF User Model) | Qazdar et al., 2015 | ✓ | ? | ✓ | ✓ |
| MLaaS | Sun et al., 2015 | x | x | x | x |
| Logic-Muse | Tato et al., 2017 | ? | ? | ✓ | ✓ |
| None-Adaptive Hypermedia | Tmimi et al., 2017 | x | x | x | x |

The Interoperability (I) dimension was granted a Tick [✓] if a platform connector was specified, and a Question mark [?] if only an implementing platform had been mentioned, hinting at a successful implementation. In terms of operability, a working connector [✓] allows for portability of the LM, an important characteristic in LLL. In contrast, the confirmation of an existing implementation of the proposed LM assures that some form of communication exists with a host system but gives no assurance of its portability [?].

CSEDU 2020 - 12th International Conference on Computer Supported Education


The sparse Data handling (D) dimension was granted [✓] if both of its composing features (cold start and data sparsity problems) were addressed. A Question mark [?] was given if only one of them was explicitly detailed. Data sparsity represents a challenge for LM in an LLL context: a serious problem arises if a model does not implement solutions to ensure proper instantiation or updating when data are missing.

The Knowledge representation (K) dimension was granted [✓] if both features (knowledge representation and recommender’s method) were elaborated beyond a mere mention, a Question mark [?] if at least the knowledge representation was explained, and a Cross [x] in all other cases. Knowledge representation is one of the most important characteristics of an LM, almost universal in all the LM reviewed. Its representation lies very close to the updating or suggesting mechanism of the LM.

The LLL dimension (LLL) is composed of our Openness, Dynamism and LLL features. Having an OLM (represented with [?]) is a desired but insufficient condition for LLL. However, explicitly describing a mechanism to assure it grants it a Tick [✓]. If the presence of an OLM and a consideration of Dynamism is found, a [✓] is also granted. The Dynamism feature on its own is insufficient [x] to

grant a [?] or a [✓].
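The marking rules stated above for the four dimensions can be sketched as a small set of functions. This is only our reading of the rules expressed in Python; the `Evidence` field names are hypothetical labels for the eight features and are not taken from any reviewed publication.

```python
from dataclasses import dataclass

TICK, PARTIAL, CROSS = "✓", "?", "x"


@dataclass
class Evidence:
    """Evidence found in one publication (field names are hypothetical)."""
    connector: bool = False        # a platform connector is specified
    platform: bool = False         # only an implementing platform is mentioned
    cold_start: bool = False       # cold start problem addressed
    data_sparsity: bool = False    # data sparsity problem addressed
    knowledge_repr: bool = False   # elaborated beyond a mere mention
    recommender: bool = False      # elaborated beyond a mere mention
    open_lm: bool = False          # the LM is an OLM
    dynamism: bool = False         # Dynamism is considered
    lll_mechanism: bool = False    # explicit mechanism to assure LLL


def mark_interoperability(e: Evidence) -> str:
    if e.connector:
        return TICK
    return PARTIAL if e.platform else CROSS


def mark_sparse_data(e: Evidence) -> str:
    addressed = int(e.cold_start) + int(e.data_sparsity)
    return {2: TICK, 1: PARTIAL, 0: CROSS}[addressed]


def mark_knowledge(e: Evidence) -> str:
    if e.knowledge_repr and e.recommender:
        return TICK
    return PARTIAL if e.knowledge_repr else CROSS


def mark_lll(e: Evidence) -> str:
    if e.lll_mechanism or (e.open_lm and e.dynamism):
        return TICK
    # An OLM alone is desired but insufficient; Dynamism alone earns nothing.
    return PARTIAL if e.open_lm else CROSS


def dimensions(e: Evidence) -> dict:
    """Return the four marks for one publication."""
    return {
        "I": mark_interoperability(e),
        "D": mark_sparse_data(e),
        "K": mark_knowledge(e),
        "LLL": mark_lll(e),
    }


if __name__ == "__main__":
    # Hypothetical publication: connector, cold start only, full K, OLM + Dynamism.
    example = Evidence(connector=True, cold_start=True, knowledge_repr=True,
                       recommender=True, open_lm=True, dynamism=True)
    print(dimensions(example))
```

Keeping one function per dimension makes each rule easy to compare against its paragraph in this section.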

Thus, the composited dimensions, based on

features we consider key points when choosing an

LM for MOOC in an LLL context, answer RQ1,

namely “What Learner Model features are most

relevant for a MOOC in an LLL context?”.

A summary of the dimensioning of the

publications is presented in Table 1. The LM presented in Table 1 are listed in alphabetical order by author. We represent with a Tick [✓] a desirable

characteristic for LM in MOOC in LLL context as

fulfilled. We used a Question mark [?] to express a

characteristic as partially fulfilled or requiring

additional steps to be fulfilled. Lastly, a Cross [x]

shows that not enough evidence is found in the

publication to give any other mark.

In this section we have presented our considered

features and explained the dimensioning and the train

of thought behind it. The following section presents

the interpretation of our work results.

3.7 Interpretation [H]

The selected papers (17 LM ‘Proposals’) and the

considered features are shown in full in the Appendix.

The features we considered for our study and detailed

in the previous section were (1) the platform

connection approach, (2) the cold start handling, (3)

the data sparsity handling, (4) the learner knowledge

representation, (5) the recommender / predictive

method, (6) the openness of the LM, (7) its dynamism

and, (8) its LLL consideration. These features

translate into four dimensions that we believe to be

paramount points to consider when choosing an LM

for a MOOC in an LLL context. A summary of this

work is presented in Table 1 in the previous section.

Furthermore, our proposed dimensioning, based on features we consider key points for LLL, also makes it possible to discern the most appropriate LM for MOOC in this context. That is, an LM ready to cope with the exigencies of an LLL context, capable of communicating with other systems while retaining its independence, with a comprehensive theoretical background in knowledge representation and/or suggesting engine, whilst preferably being able to handle the problems of missing or incomplete learner data.

Out of an initial pool of 442 results, our review of literature led us to analyse 17 LM proposals. First, seven of these 17 papers (Bulathwela et al., 2019; Cook et al., 2015; Dimitrova et al., 2015; El Mawas et al., 2019; Lynda et al., 2019; Qazdar et al., 2015; Tato et al., 2017) fulfil the LLL dimension, comprised of Openness, Dynamism and explicit LLL consideration, features that are paramount and an explicit requisite for LLL. Five out of these seven publications have fully considered the Interoperability dimension as well. Nevertheless, only four of the remaining LM proposals (Dimitrova et al., 2015; El Mawas et al., 2019; Lynda et al., 2019; Qazdar et al., 2015) also provide the explicit methods for Knowledge representation and LM updating necessary in an LLL context. We can affirm that the answer to RQ2 is represented in these four remaining selected LM publications (highlighted rows in the Appendix): they provide sufficient evidence (I, D, K and LLL dimensions) to conclude that their LM proposals are the most suitable candidates when choosing an LM for MOOC in an LLL context.

We strongly believe that this LM result set is of utmost interest to actors other than our target

public.
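The successive filtering described in this section (seven, then five, then four LM) can be retraced from the marks in Table 1. The sketch below transcribes the table as Python tuples; the helper name `fulfilling` is ours and the code is an illustration of the reasoning, not a tool used in the review.

```python
TICK = "✓"

# (LM, reference, I, D, K, LLL), transcribed from Table 1.
TABLE_1 = [
    ("TrueLearn", "Bulathwela et al., 2019", "?", "?", "✓", "✓"),
    ("SBGF", "Calle-Archila et al., 2017", "✓", "?", "x", "x"),
    ("MOOClm", "Cook et al., 2015", "✓", "x", "x", "✓"),
    ("STyLE-OLM", "Dimitrova et al., 2015", "✓", "?", "✓", "✓"),
    ("None-MOOCTAB", "El Mawas et al., 2019", "✓", "?", "✓", "✓"),
    ("None-Tunis", "Harrathi et al., 2017", "x", "x", "✓", "x"),
    ("None-China", "He et al., 2017", "?", "?", "x", "x"),
    ("EDUC8", "Iatrellis et al., 2019", "?", "?", "✓", "x"),
    ("DiaCog", "Karahoca et al., 2018", "x", "x", "✓", "x"),
    ("None-China", "Li et al., 2016", "x", "x", "x", "x"),
    ("None-ODALA", "Lynda et al., 2019", "✓", "?", "✓", "✓"),
    ("None-Tunis-France", "Maalej et al., 2016", "?", "x", "✓", "x"),
    ("GAF", "Maravanyika et al., 2017", "x", "?", "x", "x"),
    ("AUM (AeLF User Model)", "Qazdar et al., 2015", "✓", "?", "✓", "✓"),
    ("MLaaS", "Sun et al., 2015", "x", "x", "x", "x"),
    ("Logic-Muse", "Tato et al., 2017", "?", "?", "✓", "✓"),
    ("None-Adaptive Hypermedia", "Tmimi et al., 2017", "x", "x", "x", "x"),
]

COLUMN = {"I": 2, "D": 3, "K": 4, "LLL": 5}


def fulfilling(rows, *dims):
    """Keep the rows that carry a Tick in every requested dimension."""
    return [r for r in rows if all(r[COLUMN[d]] == TICK for d in dims)]


with_lll = fulfilling(TABLE_1, "LLL")      # LLL dimension fulfilled
with_lll_i = fulfilling(with_lll, "I")     # ... and Interoperability
finalists = fulfilling(with_lll_i, "K")    # ... and Knowledge representation

if __name__ == "__main__":
    print(len(with_lll), len(with_lll_i), len(finalists))
    for row in finalists:
        print(row[0], "-", row[1])
```

No row carries a Tick in the D column, which is why the four finalists fulfil most, though not all, of the dimensions.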

4 DISCUSSION

In this section we discuss the feature analysis of the 17 reviewed LM publications addressed in the preceding section.

When we look at the techniques implemented by the authors to represent Knowledge, we cannot help but notice that Rules (or another similar hard-coded method) are the preferred approach for the recommender system (and for knowledge representation, for that matter). Out of the 17 publications, eight papers (Calle-Archila et al., 2017; Cook et al., 2015; Harrathi et al., 2017; Karahoca et al., 2018; Li et al., 2016; Iatrellis et al., 2019; Lynda et al., 2019; Qazdar et al., 2015) based their LM proposal on Rules.

Bayesian strategies are a second popular choice.

Four papers (Bulathwela et al., 2019; El Mawas et al.,

2019; Maravanyika et al., 2017; Tato et al., 2017) rely

heavily on some form of Bayesian technique to

represent knowledge and to suggest or update the LM,

usually coupled to other probabilistic models.

Ontologies follow closely, with three articles

(Harrathi et al., 2017; Iatrellis et al., 2019; Lynda et

al., 2019) employing them and some formalizing their

use of the Web Ontology Language (OWL).

Conceptual Graphs (Dimitrova et al., 2015),

Machine Learning (Sun et al., 2015), Pearson

correlations (He et al., 2017) and k-means clustering

methods (Li et al., 2016) are sparsely used, with only

one paper featuring each one of these techniques.

Please note that some proposals use a combination of

these and other ad-hoc techniques, detailed in the

Appendix. Finally, only one paper was too ambiguous for us to discern its approach to represent

and/or predict Knowledge.
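The counts quoted above can be cross-checked with a short tally. The mapping below only lists the first authors named in the preceding paragraphs (it is not a substitute for the Appendix), and the function names are ours.

```python
from collections import Counter

# Technique -> first authors of the papers named in the text above.
TECHNIQUES = {
    "Rules": ["Calle-Archila", "Cook", "Harrathi", "Karahoca", "Li",
              "Iatrellis", "Lynda", "Qazdar"],
    "Bayesian": ["Bulathwela", "El Mawas", "Maravanyika", "Tato"],
    "Ontologies": ["Harrathi", "Iatrellis", "Lynda"],
    "Conceptual Graphs": ["Dimitrova"],
    "Machine Learning": ["Sun"],
    "Pearson correlations": ["He"],
    "k-means clustering": ["Li"],
}


def papers_per_technique(techniques):
    """Number of papers cited for each technique."""
    return {name: len(papers) for name, papers in techniques.items()}


def techniques_per_paper(techniques):
    """Number of techniques under which each paper is cited."""
    counts = Counter()
    for papers in techniques.values():
        counts.update(papers)
    return counts


def combining_papers(techniques):
    """Papers cited under more than one technique, in alphabetical order."""
    per_paper = techniques_per_paper(techniques)
    return sorted(p for p, n in per_paper.items() if n > 1)


if __name__ == "__main__":
    print(papers_per_technique(TECHNIQUES))
    print(combining_papers(TECHNIQUES))
```

The tally makes visible that several proposals appear under two techniques, which is consistent with the remark that some of them combine approaches.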

Concerning their Interoperability, use of

standards by the reviewed LM is limited. Most of the

LM do not mention their communication method or

platform connector. This was the case of LM used in

an ad-hoc learning platform (five cases), where a

monolithic design is common. Nonetheless, a few

standards were mentioned. For instance, the use of

Ontologies for Knowledge representation (Harrathi et

al., 2017; Lynda et al., 2019) allowed LM designers

to benefit from the OWL ease of communication.

Furthermore, two papers (Lynda et al., 2019; Qazdar

et al., 2015) proposed the use of the xAPI

specification as a communication protocol and one

proposal envisaged the use of the LTI standard, a

more recent communication method. When the reviewed LM was evaluated in a learning platform (not in an ad-hoc solution), edX was used twice (Cook et al., 2015; El Mawas et al., 2019), with Moodle, Coursera and Claroline being mentioned once each. We assume this is due to the more recent design of edX, comprising support for communicating technologies and other standards. In any case, the interoperability dimension constitutes a challenge that most LM avoid or circumvent by implementing their LM

in an ad-hoc solution.
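For readers unfamiliar with xAPI, a statement is essentially an actor-verb-object record serialised as JSON. The following minimal sketch is not the format used by any of the reviewed LM: the learner identity and the activity URL are placeholders, and the helper name `make_statement` is our own.

```python
import json


def make_statement(learner_email, learner_name, verb_id, verb_label,
                   activity_id, scaled_score=None):
    """Build a minimal xAPI-style statement as a plain dictionary."""
    statement = {
        "actor": {
            "objectType": "Agent",
            "name": learner_name,
            "mbox": f"mailto:{learner_email}",
        },
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        "object": {"objectType": "Activity", "id": activity_id},
    }
    if scaled_score is not None:
        # Optional result block carrying a score scaled between -1 and 1.
        statement["result"] = {"score": {"scaled": scaled_score}}
    return statement


if __name__ == "__main__":
    example = make_statement(
        learner_email="learner@example.org",          # placeholder identity
        learner_name="Example Learner",
        verb_id="http://adlnet.gov/expapi/verbs/completed",
        verb_label="completed",
        activity_id="https://example.org/mooc/module-1",  # placeholder URL
        scaled_score=0.85,
    )
    print(json.dumps(example, indent=2))
```

Because such a record is independent of the hosting platform, an LM that consumes it does not need to know which MOOC platform produced it, which is the portability argument made above.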

Besides, the approach to missing data situations

(sparse Data handling) considered by our reviewed

LM was ill-defined: the cold start problem was

scarcely addressed, usually with a starting

questionnaire but often with a vague reference to

some ‘registration’ or ‘external’ data input, whilst

none of the papers took into consideration the Data

Sparsity problem.

We regret to acknowledge that our studied LLL context is not yet an explicit consideration for most LM designers, with a clear minority of five publications addressing the issue at a minimum. However, among these, one paper (Qazdar et al., 2015) stood out from the rest by providing details on the technical implementation to fulfil this dimension (OpenID). OLM are yet to be universally recognized as part of an LLL solution and, for the few proposals in our sample that do (Bulathwela et al., 2019; Cook et al., 2015; Dimitrova et al., 2015; El Mawas et al., 2019; Qazdar et al., 2015), Negotiable and fully Open are the preferred choices over Visualisation in OLM. Thus, regrettably, LLL is not a priority for many LM designers, whose proposals mostly highlight the application of a novel technique (e.g. machine learning) or focus on a specific delivery content (e.g. video for mobile learning).

5 CONCLUSION AND

PERSPECTIVES

This review of literature addresses the question of LM for MOOC in an LLL context, namely the most relevant features in an LM for a MOOC in an LLL context. This study aims to differentiate and highlight LM features and their relevance to MOOC usage in an LLL experience. To our knowledge, currently there is no research work that addresses the literature review of such a topic. This study intends to fill that gap by reviewing the most recent LM for MOOC proposals that can handle the exigencies of an LLL context. Thus, it covers only the works published in the last five years (2015-2020) that explicitly mentioned in their abstract an LM proposal central to their article.

Out of an academic database search result pool of 442 publications, 17 papers were reviewed, and their features highlighted and compared. This study led us to consider the following features to be key points when choosing an LM for a MOOC in an LLL context: (1) the platform connection approach, (2) the cold start handling, (3) the data sparsity handling, (4) the learner knowledge representation, (5) the recommender / predictive method, (6) the openness of the LM, (7) its dynamism, and (8) its LLL consideration. We synthesized these eight features into four dimensions, namely Interoperability (I), sparse Data handling (D), Knowledge representation (K) and LLL (LLL). Four LM finalists, highlighted rows in the Appendix (Dimitrova et al., 2015; El Mawas et al., 2019; Lynda et al., 2019; Qazdar et al., 2015), fulfilled most, though not all, of our comparison criteria. We concluded that their LM proposals were the most suitable candidates for an LM for MOOC in LLL.

Currently, our next research step is to propose an LM that considers the features and dimensions we have reviewed in this study. Given that none of the reviewed LM completely fulfil our presented comparison criteria, we envisage either to propose a composite comprising characteristics of the final four LM or to select and extend one of them. Such an LM would be the object of a first use and evaluation for the MOOC¹⁰ “Gestion de Projet”: the largest French-speaking MOOC, addressed primarily to engineers worldwide, operating continuously since 2013 and counting close to 265,000 students enrolled since its creation, with a total of about 40,000 laureates.

¹⁰ https://moocgdp.gestiondeprojet.pm

We feel confident that actors other than MOOC

and LM designers/providers, pedagogical engineers

and researchers can benefit from this study to help them assess features in LM for MOOC in an LLL context that

are of vital importance.

ACKNOWLEDGEMENTS

This project was supported by the French government

through the Programme Investissement d’Avenir

(I–SITE ULNE / ANR–16–IDEX–0004 ULNE)

managed by the Agence Nationale de la Recherche.

REFERENCES

Abyaa, A., Khalidi Idrissi, M. & Bennani, S. 2019. Learner

modelling: systematic review of the literature from the

last 5 years. Education Tech Research Dev 67, 1105–

1143 (2019). https://doi.org/10.1007/s11423-018-

09644-1

Afini Normadhi, N. B., Shuib, L., Md Nasir, H. N., Bimba, A., Idris, N., & Balakrishnan, V. 2019. Identification of personal traits in adaptive learning environment: Systematic literature review. Computers and Education, 130, 168–190. https://doi.org/10.1016/j.compedu.2018.11.005

Anderson, J. R., Corbett, A. T., Koedinger, K. R., and

Pelletier, R. 1995. Cognitive tutors: Lessons learned.

The Journal of the Learning Sciences. 4, 2 (1995), 167-

207.

Assami S., Daoudi N., Ajhoun R. 2019 Ontology-Based

Modeling for a Personalized MOOC Recommender

System. In: Rocha Á., Serrhini M. (eds) Information

Systems and Technologies to Support Learning.

EMENA-ISTL 2018. Smart Innovation, Systems and

Technologies, vol 111. Springer, Cham

Bodily, R., Kay, J. Aleven, V., Jivet, I., Davis, D., Xhakaj,

F. and Verbert, K. 2018. Open learner models and

learning analytics dashboards: a systematic review. In

Proceedings of the 8th International Conference on

Learning Analytics and Knowledge (LAK ’18).

Association for Computing Machinery, New York, NY,

USA, 41–50. DOI: https://doi.org/10.1145/

3170358.3170409

Brahimi, T., & Sarirete, A. 2015. Learning outside the

classroom through MOOCs. Computers in Human

Behavior, 51, 604-609.

Breslow, L., Pritchard, D. E., DeBoer, J., Stump, G. S., Ho,

A. D., & Seaton, D. T. 2013. Studying learning in the

worldwide classroom: Research into edX’s first

MOOC. Research & Practice in Assessment, 8(1), 13–

25. Retrieved from http://www.rpajournal.com/dev/

wpcontent/uploads/2013/05/SF2.pdf

Bulathwela, S., Perez-Ortiz, M., Yilmaz, E., & Shawe-

Taylor, J. (2019). TrueLearn: A Family of Bayesian

Algorithms to Match Lifelong Learners to Open

Educational Resources. (i). Retrieved from

http://arxiv.org/abs/1911.09471

Bull, S. 2016. Negotiated learner modelling to maintain today’s learner models. RPTEL 11, 10. https://doi.org/10.1186/s41039-016-0035-3

Bull, S., Jackson, T., Lancaster, M. 2010. Students’ Interest

in their Misconceptions in First Year Electrical Circuits

and Mathematics Courses. International Journal of

Electrical Engineering Education 47(3), 307–318

(2010)

Bull, S., & Kay, J. 2010. Open learner models. In R.

Nkambou, R. Mizoguchi, & J. Bourdeau (Eds.),

Advances in intelligent tutoring systems (pp. 301–322).

Berlin: Springer.

Calle-Archila, C. R., & Drews, O. M. (2017). Student-

Based Gamification Framework for Online Courses.

https://doi.org/10.1007/978-3-319-66562-7_29

Chatti, M. A., Dyckhoff, A. L., Schroeder, U., & Thüs, H.

2013. A reference model for learning analytics.

International Journal of Technology Enhanced

Learning, 4(5-6), 318-331.

Chatti, M. A., Dugoija, D., Thüs, H. and Schroeder, U. 2014. Learner Modeling in Academic Networks, 2014 IEEE 14th International Conference on Advanced Learning Technologies, Athens, 2014, pp. 117-121. doi: 10.1109/ICALT.2014.42 URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=6901413&isnumber=6901368

Cook, R., Kay, J., & Kummerfeld, B. (2015). MOOClm:

User Modelling for MOOCs. 9146, 80–91.

https://doi.org/10.1007/978-3-319-20267-9

Cropley, A.J. 1978. Some Guidelines for the Reform of

School Curricula in the Perspective of Lifelong

Education. International Review of Education, 24

(1):21–33.

Dimitrova, V., & Brna, P. (2016). From Interactive Open

Learner Modelling to Intelligent Mentoring: STyLE-

OLM and beyond. International Journal of Artificial

Intelligence in Education, 26(1), 332–349.

https://doi.org/10.1007/s40593-015-0087-3

El Mawas, N., Gilliot, J. M., Garlatti, S., Euler, R., &

Pascual, S. (2018). Towards personalized content in

massive open online courses. CSEDU 2018 -

Proceedings of the 10th International Conference on

Computer Supported Education, 2, 331–339.

https://doi.org/10.5220/0006816703310339

El Mawas N., Gilliot JM., Garlatti S., Euler R., Pascual S.

2019. As One Size Doesn’t Fit All, Personalized

Massive Open Online Courses Are Required. In:

McLaren B., Reilly R., Zvacek S., Uhomoibhi J. (eds)

Computer Supported Education. CSEDU 2018.

Communications in Computer and Information

Science, vol 1022. Springer, Cham

Giannandrea, L., & Sansoni, M. 2013. A literature review

on intelligent tutoring systems and on student profiling.

Learning & Teaching with Media & Technology, 287.

Harrathi, M., Touzani, N., & Braham, R. (2018). A hybrid

knowlegde-based approach for recommending massive

learning activities. Proceedings of IEEE/ACS

International Conference on Computer Systems and

Applications, AICCSA, 2017-Octob, 49–54. https://

doi.org/10.1109/AICCSA.2017.150

He, X., Liu, P., & Zhang, W. (2017). Design and

Implementation of a Unified Mooc Recommendation

System for Social Work Major: Experiences and

Lessons. Proceedings - 2017 IEEE International

Conference on Computational Science and Engineering

and IEEE/IFIP International Conference on Embedded

and Ubiquitous Computing, CSE and EUC 2017, 1,

219–223. https://doi.org/10.1109/CSE-EUC.2017.46

Herder, E. 2016. User Modeling and Personalization 3:

User Modeling – Techniques. https://

www.eelcoherder.com/images/teaching/usermodeling/

03_user_modeling_techniques.pdf

Iatrellis, O., Kameas, A., & Fitsilis, P. (2019). EDUC8

ontology: semantic modeling of multi-facet learning

pathways. Education and Information Technologies,

24(4), 2371–2390. https://doi.org/10.1007/s10639-019-

09877-4

Ishola, O. M., & McCalla, G. 2016. Tracking and Reacting

to the Evolving Knowledge Needs of Lifelong

Professional Learners. In UMAP (Extended

Proceedings).

Jordan, K. 2014. Initial Trends in Enrolment and

Completion of Massive Open Online Courses. The

International Review of Research in Open and

Distributed Learning 15 (1)

Jung, Y., & Lee, J. (2018). Learning engagement and

persistence in massive open online courses (MOOCs).

Computers & Education, 122, 9–22. https://

doi.org/10.1016/j.compedu.2018.02.013

Karahoca, A., Yengin, I., & Karahoca, D. (2018). Cognitive

dialog games as cognitive assistants: Tracking and

adapting knowledge and interactions in student’s

dialogs. International Journal of Cognitive Research in

Science, Engineering and Education, 6(1), 45–52.

https://doi.org/10.5937/ijcrsee1801045K

Kay, J. 2012. AI and education: Grand challenges. IEEE

Intelligent Systems, 27(5), 66–69.

Kay, J. & Kummerfeld, B. 2011. Lifelong Learner

Modeling. In P. J. Durlach and A. Lesgold (Eds.),

Adaptive Technologies for Training and Education.

Cambridge University Press.

Kay, J., & Kummerfeld, B. 2019. From data to personal

user models for life‐long, life‐wide learners. British

Journal of Educational Technology, 50(6), 2871-2884.

Knapper, C., & Cropley, A. J. 2000. Lifelong learning in

higher education. Psychology Press.

Li, Y., Zheng, Y., Kang, J., & Bao, H. (2016). Designing a

Learning Recommender System by Incorporating

Resource Association Analysis and Social Interaction

Computing. https://doi.org/10.1007/978-981-287-868-

7_16

Liang-Zhong, C., Fu-Liang, G., and Ying-ji, L. 2018.

Research Overview of Educational Recommender

Systems. In Proceedings of the 2nd International

Conference on Computer Science and Application

Engineering (CSAE ’18). Association for Computing

Machinery, New York, NY, USA, Article 155, 1–7.

DOI: https://doi.org/10.1145/3207677.3278071

Lynda, H., & Bouarab-Dahmani, F. (2019). Gradual

learners’ assessment in massive open online courses

based on ODALA approach. Journal of Information

Technology Research, 12(3), 21–43. https://doi.org/

10.4018/JITR.2019070102

Maalej, W., Pernelle, P., Ben Amar, C., Carron, T., &

Kredens, E. (2016). Modeling Skills in a Learner-

Centred Approach Within MOOCs (D. K. W. Chiu, I.

Marenzi, U. Nanni, M. Spaniol, & M. Temperini, eds.).

In (pp. 102–111). https://doi.org/10.1007/978-3-319-

47440-3_11

Maravanyika, M., Dlodlo, N., & Jere, N. (2017). An adaptive recommender-system based framework for personalised teaching and learning on e-learning platforms. 2017 IST-Africa Week Conference, IST-Africa 2017, 1–9. https://doi.org/10.23919/ISTAFRICA.2017.8102297

Martins, C., Faria, L., De Carvalho, C. V., & Carrapatoso, E. 2008. User modeling in adaptive hypermedia educational systems. In Educational Technology & Society, 11(1), 194–207.

Morales, R., Van Labeke, N., Brna, P., & Chan, M. E. 2009. Open Learner Modelling as the Keystone of the Next Generation of Adaptive Learning Environments. In C. Mourlas, & P. Germanakos (Eds.), Intelligent User Interfaces: Adaptation and Personalization Systems and Technologies (pp. 288-312). Hershey, PA: IGI Global. doi:10.4018/978-1-60566-032-5.ch014

Kitchenham, B., & Charters, S. 2007. Guidelines for

performing systematic literature reviews in software

engineering Version 2.3. Engineering, 45(4), 1051.

Nakic, J., Granic, A., & Glavinic, V. 2015. Anatomy of

student models in adaptive learning systems: A

systematic literature review of individual differences

from 2001 to 2013. Journal of Educational Computing

Research, 51(4), 459–489.

Nguyen L., Do P. 2009 Combination of Bayesian Network

and Overlay Model in User Modeling. In: Allen G.,

Nabrzyski J., Seidel E., van Albada G.D., Dongarra J.,

Sloot P.M.A. (eds) Computational Science – ICCS

2009. ICCS 2009. Lecture Notes in Computer Science,

vol 5545. Springer, Berlin, Heidelberg

Qazdar, A., Cherkaoui, C., Er-Raha, B., & Mammass, D.

(2015). AeLF: Mixing Adaptive Learning System with

Learning Management System. International Journal of

Computer Applications, 119(15), 1–8. https://doi.org/

10.5120/21140-4171

Sein-Echaluce, M. L., Fidalgo-Blanco, Á., García-Peñalvo,

F. J., & Conde, M. Á. (2016, July). iMOOC Platform:

Adaptive MOOCs. In International Conference on

Learning and Collaboration Technologies (pp. 380-

390). Springer, Cham.

Sergis S., Sampson D. 2019 An Analysis of Open Learner

Models for Supporting Learning Analytics. In:

Sampson D., Spector J., Ifenthaler D., Isaías P., Sergis

S. (eds) Learning Technologies for Transforming

Large-Scale Teaching, Learning, and Assessment.

Springer, Cham

Shah, D. 2015. By the numbers: MOOCS in 2015.

https://www.classcentral.com/report/moocs-2015-

stats/

Shah, D. 2016. By the numbers: MOOCS in 2016.

https://www.classcentral.com/report/mooc-stats-2016/

Shah, D. 2017. By the numbers: MOOCS in 2017.

https://www.classcentral.com/report/mooc-stats-2017/

Shah, D. 2018. By the numbers: MOOCS in 2018.

https://www.classcentral.com/report/mooc-stats-2018/

Shah, D. 2019. By the numbers: MOOCS in 2019.

https://www.classcentral.com/report/mooc-stats-2019/

Sloep, P., Boon, J., Cornu, B., Kleb, M., Lefrere, P., Naeve,

A., Scott, P. and Tinoca, L., 2008. A European research

agenda for lifelong learning.

Somyürek, S. 2009. Student modeling: Recognizing the

individual needs of users in e-learning environments. In

Journal of Human Sciences, 6(2), 429–450.

Sun, G., Cui, T., Guo, W., Beydoun, G., Xu, D., & Shen, J.

(2015). Micro Learning Adaptation in MOOC: A

Software as a Service and a Personalized Learner

Model. In Lecture Notes in Computer Science

(including subseries Lecture Notes in Artificial

Intelligence and Lecture Notes in Bioinformatics) (Vol.

9412, pp. 174–184). https://doi.org/10.1007/978-3-

319-25515-6_16

Sunar, A. S., Abdullah, N. A., White, S. and Davis, H. C.

2015. Personalisation of moocs: The state of the art

Swartout, W., Nye, B. D., Hartholt, A., Reilly, A., Graesser,

A. C., Vanlehn, K., et al. 2016. Designing a Personal

Assistant for Life-Long Learning (PAL3). In

Proceedings of the 29th International Florida Artificial

Intelligence Research Society Conference, FLAIRS

2016 (pp. 491–496).

Tanimoto, S., 2005. Dimensions of transparency in open

learner models. In 12th International Conference on

Artificial Intelligence in Education (pp. 100-106).

Tato, A., Nkambou, R., Brisson, J., & Robert, S. (2017).

Predicting Learner’s Deductive reasoning skills using a

Bayesian Network. Lecture Notes in Computer

Science, 1(June), 650–655.

https://doi.org/10.1007/978-3-319-61425-0

Thüs, H., Chatti, M. A., Brandt, R., & Schroeder, U. 2015.

Evolution of interests in the learning context data

model. In: Design for Teaching and Learning in a

Networked World (pp. 479–484). Cham: Springer.

Tmimi, M., Benslimane, M., Berrada, M., & Ouazzani, K.

(2017). A proposed conception of the learner model for

adaptive hypermedia. International Journal of Applied

Engineering Research, 12(24), 16008–16016.

Vagale, V., & Niedrite, L. 2012. Learner model’s utilization

in the E-learning environments. In: DB & Local

Proceedings (pp. 162–174).

Wang, Y., & Baker, R. 2018. Grit and intention: Why do

learners complete MOOCs? In: International Review of

Research in Open and Distributed Learning, 19(3), 21–

42. http://dx.doi.org/10.19173/irrodl.v19i3.3393

Watson, L. 2003. Lifelong Learning in Australia, Canberra,

Department of Education, Science and Training.

Watted, A., & Barak, M. 2018. Motivating factors of

MOOC completers: Comparing between university

affiliated students and general participants. Internet and

Higher Education, 37, 11–20. https://doi.org/10.1016/

j.iheduc.2017.12.001

Woolf, B. P. 2010. Student modeling. In R. Nkambou, R.

Mizoguchi, & J. Bourdeau (Eds.), Advances in

intelligent tutoring systems (pp. 267–279). Berlin:

Springer.

Yuan, L., Powell, S., Cetis, J. 2013. MOOCs and open

education: implications for higher education. Centre for

educational technology and interoperability standards

Yousef, A.M.F., Chatti, M.A., Schroeder, U., Wosnitza, M.,

Jakobs, H. 2014. A review of the state-of-the-art. In:

Proceedings of CSEDU, pp. 9–20

APPENDIX

The summary table of LM for MOOC that support

LLL can be found at the following address:

https://nextcloud.univ-lille.fr/index.php/s/wjsFrYP5P5BFPKo
